GDDR7 Error Resilience: FEC Overheads, BER Targets And Reliability

SEP 17, 2025

GDDR7 Error Resilience Background and Objectives

Graphics Double Data Rate (GDDR) memory technology has evolved significantly over the past two decades, with each generation bringing substantial improvements in bandwidth, power efficiency, and reliability. The evolution from GDDR5 to GDDR6 marked significant advancements in data rates, and now GDDR7 represents the next frontier in high-performance graphics memory technology. As data rates continue to increase beyond 30 Gbps per pin, signal integrity challenges and bit error rates (BER) have become critical concerns that demand innovative solutions.

The development of error resilience mechanisms in memory systems has historically followed the increasing demands of data-intensive applications. Early GDDR implementations relied primarily on basic error detection capabilities, while modern implementations require sophisticated Forward Error Correction (FEC) schemes to maintain system reliability at higher speeds. This technological progression reflects the industry's response to the growing complexity of graphics processing, artificial intelligence, and high-performance computing workloads.

GDDR7 operates in an increasingly challenging signal integrity environment where traditional design margins are no longer sufficient to guarantee error-free operation. The primary objective of error resilience in GDDR7 is to achieve a delicate balance between performance, power consumption, and reliability. This balance must accommodate the needs of diverse applications ranging from consumer gaming to data center acceleration and automotive systems, each with distinct reliability requirements.

The technical goals for GDDR7 error resilience include establishing appropriate Bit Error Rate (BER) targets that align with application requirements while minimizing the overhead associated with Forward Error Correction (FEC) mechanisms. These targets must consider both random and systematic errors that occur at high data rates, as well as the increasing importance of soft errors in advanced process nodes.
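
The link between a raw BER target and post-correction reliability can be made concrete with a small calculation. The sketch below is illustrative only: it assumes independent random bit errors (so it ignores the systematic and bursty errors mentioned above), and the codeword size and correction strength are hypothetical parameters, not values taken from the GDDR7 specification.

```python
import math


def codeword_failure_prob(n_bits: int, t_correctable: int, raw_ber: float) -> float:
    """Probability that an n-bit codeword contains more than t bit errors.

    Assumes each bit fails independently with probability raw_ber
    (a binomial model). Terms are summed in the log domain so that
    very small probabilities do not cancel to zero.
    """
    if not 0.0 < raw_ber < 1.0:
        raise ValueError("raw_ber must be strictly between 0 and 1")
    log_p = math.log(raw_ber)
    log_q = math.log1p(-raw_ber)
    total = 0.0
    for k in range(t_correctable + 1, n_bits + 1):
        log_comb = (
            math.lgamma(n_bits + 1)
            - math.lgamma(k + 1)
            - math.lgamma(n_bits - k + 1)
        )
        total += math.exp(log_comb + k * log_p + (n_bits - k) * log_q)
    return total


if __name__ == "__main__":
    # Hypothetical 136-bit codeword (128 data bits + 8 check bits).
    for t in (0, 1, 2):
        p_fail = codeword_failure_prob(136, t, 1e-6)
        print(f"t={t}: P(uncorrectable codeword) = {p_fail:.3e}")
```

Under this model each additional correctable bit lowers the failure probability by several orders of magnitude at low raw BER, which is why the choice of BER target and the choice of FEC strength cannot be made separately.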

Another critical objective is determining the optimal FEC scheme that provides sufficient error correction capability without imposing excessive latency or power penalties. This involves evaluating various coding techniques, from simple parity-based approaches to more complex schemes like BCH (Bose-Chaudhuri-Hocquenghem) codes or LDPC (Low-Density Parity-Check) codes, and assessing their implementation complexity and correction capabilities.
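
To give a feel for how redundancy scales across these code families, the following sketch compares check-bit counts for a single parity bit, a Hamming-based SEC-DED code, and the standard upper bound for a binary BCH code. The payload sizes are hypothetical examples, and the functions estimate redundancy only; they say nothing about decoder latency or power.

```python
def secded_check_bits(data_bits: int) -> int:
    """Check bits for a Hamming SEC-DED code over data_bits of payload.

    Uses the Hamming condition 2**r >= data_bits + r + 1, plus one
    overall parity bit for double-error detection.
    """
    r = 1
    while 2**r < data_bits + r + 1:
        r += 1
    return r + 1


def bch_check_bits_upper(data_bits: int, t: int) -> int:
    """Upper bound (m * t) on check bits for a binary BCH code correcting t errors.

    Picks the smallest m such that a (possibly shortened) code of natural
    length 2**m - 1 can hold the payload plus m * t check bits.
    """
    m = 2
    while 2**m - 1 < data_bits + m * t:
        m += 1
    return m * t


def overhead_percent(check_bits: int, data_bits: int) -> float:
    """Redundancy expressed as a percentage of the payload size."""
    return 100.0 * check_bits / data_bits


if __name__ == "__main__":
    for k in (64, 128, 256):  # hypothetical payload sizes in bits
        print(
            f"k={k}: parity {overhead_percent(1, k):.2f}%, "
            f"SEC-DED {overhead_percent(secded_check_bits(k), k):.2f}%, "
            f"BCH t=2 <= {overhead_percent(bch_check_bits_upper(k, 2), k):.2f}%"
        )
```

Larger payloads amortize the check bits better, but they also lengthen the codeword that must be received before decoding can finish, which is one source of the latency penalty mentioned above.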

Furthermore, GDDR7 error resilience aims to establish reliability metrics and testing methodologies that accurately predict real-world performance across diverse operating conditions. This includes developing comprehensive models for error mechanisms at high data rates and creating standardized approaches for characterizing memory subsystem reliability that can be adopted across the industry.

Market Demand Analysis for High-Reliability Memory

The demand for high-reliability memory solutions has witnessed unprecedented growth across multiple sectors, primarily driven by the expansion of data-intensive applications. The global high-performance memory market, which encompasses GDDR technologies, reached approximately $12.5 billion in 2022 and is projected to grow at a CAGR of 23.5% through 2028, with error-resilient memory solutions representing a significant growth segment.

AI and machine learning applications have emerged as primary drivers for enhanced memory reliability. Training large language models and complex neural networks requires not only vast amounts of high-speed memory but also exceptional data integrity. Even minor bit errors can propagate through neural networks, causing significant accuracy degradation in AI systems. This has created a substantial market pull for memory technologies with advanced error correction capabilities.

The automotive sector, particularly with the advancement of autonomous driving systems, represents another critical market for high-reliability memory. Level 3+ autonomous vehicles incorporate numerous sensors generating terabytes of data that must be processed with zero tolerance for errors. Industry analysts estimate that the automotive memory market will grow by 27% annually through 2027, with reliability features becoming non-negotiable requirements.

Data center operators face mounting pressure to ensure data integrity while simultaneously improving energy efficiency. A 2023 industry survey revealed that 78% of enterprise data center managers ranked memory reliability as a "critical" factor in their procurement decisions, surpassing even performance considerations. The financial implications of memory errors in data centers can be substantial, with the average cost of downtime estimated at $9,000 per minute for large enterprises.

Edge computing applications present another expanding market segment demanding reliable memory solutions. As computational workloads move closer to data sources, these systems often operate in harsh environmental conditions that increase error rates. The industrial IoT sector alone is expected to deploy over 45 billion connected devices by 2025, all requiring varying degrees of memory reliability.

Telecommunications infrastructure, particularly with 5G and upcoming 6G deployments, constitutes a significant market for error-resilient memory. Base stations and network equipment operating continuously in diverse environmental conditions require memory solutions that can maintain data integrity despite potential interference sources. The telecom equipment market is projected to reach $653 billion by 2026, with memory components representing an essential reliability factor.

The scientific and research community, including organizations working on quantum computing, weather modeling, and genomic sequencing, requires memory solutions with exceptional error resilience. These applications cannot tolerate data corruption, as even minor errors can invalidate research outcomes representing thousands of computation hours.

Current Error Correction Challenges in GDDR7

GDDR7 memory technology faces significant error correction challenges as per-pin data rates climb beyond 30 Gbps. The primary challenge stems from signal integrity issues at these speeds, where even minor electrical noise can cause bit errors. Traditional Error Correction Code (ECC) mechanisms are becoming increasingly inadequate as raw Bit Error Rates (BER) worsen with each generation of memory technology.

The current Forward Error Correction (FEC) implementations in GDDR6X and early GDDR7 designs struggle with balancing correction capability against performance overhead. As GDDR7 pushes toward 32 Gbps per pin, the error correction mechanisms must handle significantly higher raw BER while maintaining acceptable latency profiles. This creates a fundamental tension between reliability and performance that memory designers must navigate.
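
A quick piece of arithmetic shows why raw BER matters so much at these speeds: the expected interval between bit errors is the reciprocal of the data rate multiplied by the BER. The sketch below uses the 32 Gbps per-pin figure from the text; the BER values and the pin count are illustrative assumptions.

```python
def mean_seconds_between_errors(
    bit_rate_bps: float, ber: float, pins: int = 1
) -> float:
    """Expected time between raw bit errors across `pins` identical lanes.

    Treats errors as a steady average rate: errors/s = rate * BER * pins.
    """
    errors_per_second = bit_rate_bps * ber * pins
    return 1.0 / errors_per_second


if __name__ == "__main__":
    rate = 32e9  # 32 Gbps per pin, as discussed in the text
    for ber in (1e-9, 1e-12, 1e-15):  # illustrative BER values
        one_pin = mean_seconds_between_errors(rate, ber)
        # 32 data pins is an assumed interface width for illustration.
        many_pins = mean_seconds_between_errors(rate, ber, pins=32)
        print(f"BER={ber:.0e}: {one_pin:.3g} s per pin, {many_pins:.3g} s over 32 pins")
```

Even a BER that sounds negligible translates into regular error events once it is multiplied by tens of gigabits per second and by the number of pins, which is the pressure the correction logic has to absorb.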

Power consumption presents another critical challenge. More sophisticated error correction algorithms require additional logic circuitry, which increases both static and dynamic power consumption. In mobile and power-constrained applications, this additional power overhead can be prohibitive, forcing compromises in error resilience capabilities.

The architectural integration of error correction mechanisms also poses significant challenges. GDDR7 memory controllers must implement increasingly complex FEC schemes while maintaining backward compatibility with existing memory hierarchies. This complexity extends to both hardware implementation and software interfaces, requiring careful coordination across the memory subsystem.

Manufacturing variability compounds these challenges. As process nodes shrink to accommodate higher densities, the variance in electrical characteristics between memory chips increases. Error correction mechanisms must be robust enough to handle this manufacturing variability while still providing consistent performance across all memory modules.

Thermal considerations further complicate error correction implementation. Higher data rates generate more heat, which can increase error rates in a feedback loop. Error correction mechanisms must be designed to remain effective even as operating temperatures fluctuate under varying workloads.

The industry currently lacks standardized benchmarks for evaluating error correction efficiency in GDDR7 implementations. Without clear metrics for comparing different approaches, memory manufacturers and system integrators struggle to make informed decisions about appropriate error correction strategies for specific applications.

Finally, there is the challenge of predicting future error patterns. As GDDR7 technology matures and applications evolve, the nature and distribution of errors may change, potentially rendering current correction strategies less effective. Adaptive error correction mechanisms that can evolve with changing error profiles represent a promising but technically challenging direction for future research.

Current FEC Implementation Strategies for GDDR7

01 Error correction code (ECC) implementations in GDDR7 memory

GDDR7 memory systems implement advanced error correction code (ECC) mechanisms to enhance data reliability. These implementations include forward error correction (FEC) techniques that can detect and correct bit errors without requiring data retransmission. The ECC implementations are designed to handle specific bit error rate (BER) targets while minimizing overhead on memory performance. These systems often employ multi-bit error detection and single-bit error correction capabilities to maintain data integrity in high-speed memory operations.

- Error correction code (ECC) implementations for GDDR7 memory: Various error correction code (ECC) implementations are used in GDDR7 memory to enhance error resilience. These implementations include forward error correction (FEC) techniques that can detect and correct bit errors without requiring retransmission of data. Advanced ECC algorithms are specifically designed to address the high-speed requirements of GDDR7 memory while maintaining low latency. These implementations help achieve target bit error rates (BER) while optimizing memory performance and reliability.

- FEC overhead optimization techniques for high-speed memory: Forward Error Correction (FEC) overhead optimization techniques are crucial for GDDR7 memory systems to balance error resilience with performance. These techniques involve adaptive FEC schemes that adjust the redundancy level based on channel conditions and error rates. By optimizing FEC overhead, memory systems can achieve target reliability while minimizing the impact on bandwidth and latency. Advanced algorithms dynamically allocate error correction resources based on real-time error statistics and memory usage patterns.

- BER target achievement strategies for GDDR7 reliability: Bit Error Rate (BER) target achievement strategies are implemented in GDDR7 memory to ensure reliable operation at high speeds. These strategies include sophisticated error detection mechanisms, adaptive equalization techniques, and signal integrity optimizations. By carefully managing voltage margins, timing parameters, and thermal characteristics, GDDR7 memory can maintain BER targets even under challenging operating conditions. Advanced testing methodologies are employed to validate BER performance across various workloads and environmental conditions.

- Memory reliability enhancement through architectural innovations: Architectural innovations in GDDR7 memory design significantly enhance reliability through structural redundancy, improved signal integrity, and advanced error management. These innovations include redundant memory arrays, enhanced refresh mechanisms, and sophisticated power management schemes. By implementing resilient circuit designs and robust interface protocols, GDDR7 memory can maintain data integrity even in the presence of transient errors. These architectural enhancements work in conjunction with error correction mechanisms to achieve comprehensive reliability improvements.

- Error resilience testing and validation methodologies: Comprehensive testing and validation methodologies are essential for ensuring GDDR7 memory error resilience. These methodologies include stress testing under extreme conditions, statistical error injection, and long-term reliability assessment. Advanced diagnostic tools analyze error patterns to identify potential weaknesses in memory design or implementation. By simulating various error scenarios and validating recovery mechanisms, manufacturers can ensure that GDDR7 memory meets stringent reliability requirements across its operational lifetime. These testing approaches help establish confidence in memory performance under diverse workloads and environmental conditions.
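
The single-bit correction and multi-bit detection behaviour described under item 01 can be illustrated with a textbook extended Hamming (8,4) code. This is a teaching-sized sketch, not the ECC that any GDDR7 device actually uses; the function names `secded_encode` and `secded_decode` are hypothetical.

```python
def secded_encode(data4):
    """Encode 4 data bits into an 8-bit extended Hamming codeword.

    Layout: index 0 is the overall parity bit; indices 1..7 follow the
    classic Hamming(7,4) positions (parity at 1, 2, 4; data at 3, 5, 6, 7).
    """
    d1, d2, d3, d4 = data4
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    word = [0, p1, p2, d1, p4, d2, d3, d4]
    word[0] = sum(word[1:]) % 2  # make the total number of ones even
    return word


def secded_decode(word):
    """Return (data bits, status); status is 'ok', 'corrected' or 'uncorrectable'."""
    syndrome = 0
    for pos in range(1, 8):
        if word[pos]:
            syndrome ^= pos  # XOR of set positions is 0 for a valid codeword
    parity_odd = sum(word) % 2 == 1
    fixed = list(word)
    if syndrome == 0 and not parity_odd:
        status = "ok"
    elif parity_odd:
        # Odd number of flips: assume a single error. A zero syndrome here
        # means the overall parity bit (index 0) itself was flipped.
        fixed[syndrome] ^= 1
        status = "corrected"
    else:
        # Even parity with a non-zero syndrome: two bits flipped.
        status = "uncorrectable"
    return [fixed[3], fixed[5], fixed[6], fixed[7]], status


if __name__ == "__main__":
    codeword = secded_encode([1, 0, 1, 1])
    one_flip = list(codeword)
    one_flip[5] ^= 1
    two_flips = list(one_flip)
    two_flips[2] ^= 1
    print(secded_decode(one_flip))
    print(secded_decode(two_flips))
```

Injecting flips at chosen positions, as in the usage lines, is also a small-scale version of the statistical error injection mentioned in the last list item.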

02 FEC overhead optimization for GDDR7 memory reliability

Forward Error Correction (FEC) overhead optimization is crucial for GDDR7 memory systems to balance error resilience with performance. These techniques involve calculating the optimal amount of redundancy needed to achieve target reliability levels while minimizing impact on memory bandwidth and latency. Advanced algorithms dynamically adjust FEC overhead based on operating conditions, error patterns, and system requirements. This optimization ensures that memory systems can maintain high data transfer rates while still providing sufficient protection against errors in challenging environments.

03 Bit Error Rate (BER) target management in high-speed memory

Managing Bit Error Rate (BER) targets is essential for GDDR7 memory reliability. These systems employ sophisticated error monitoring and prediction techniques to establish appropriate BER thresholds based on application requirements. Adaptive mechanisms continuously assess channel conditions and adjust error correction strategies to maintain BER within acceptable limits. The management systems include performance counters and diagnostic tools that track error occurrences and patterns, enabling proactive intervention before errors impact system stability.

04 Memory reliability enhancement through architectural innovations

GDDR7 memory incorporates architectural innovations specifically designed to enhance reliability. These include redundant memory arrays, error-resilient circuit designs, and voltage/timing margin improvements that reduce susceptibility to transient errors. Advanced refresh mechanisms help mitigate bit-flip errors caused by charge leakage, while thermal management features prevent reliability degradation at high operating temperatures. The architecture also supports graceful degradation modes that can isolate and bypass faulty memory sections while maintaining overall system operation.

05 Error resilience testing and validation methodologies

Comprehensive testing and validation methodologies are employed to ensure GDDR7 memory error resilience meets reliability targets. These include stress testing under extreme operating conditions, statistical error injection to verify correction capabilities, and long-term reliability assessment. Advanced simulation models predict error behavior under various environmental and operational scenarios. The validation process incorporates both manufacturing tests and in-system monitoring capabilities that continuously verify error resilience performance throughout the memory's operational lifetime.

Key Players in GDDR7 and FEC Technology

GDDR7 error resilience technology is currently in an emerging growth phase, with the market expected to expand significantly as high-performance computing and AI applications drive demand for more reliable memory solutions. The global market for error-resilient memory technologies is projected to reach several billion dollars by 2025. Technologically, the field is advancing rapidly but is not yet fully mature, with key players developing differentiated approaches. Samsung Electronics and Micron Technology lead in GDDR7 memory development, while Intel and QUALCOMM focus on integrated error correction solutions. SK hynix has made notable progress in Forward Error Correction (FEC) implementations, and Rambus offers specialized IP for error resilience. IBM and Huawei are advancing enterprise-grade reliability solutions. The result is a competitive landscape in which the key differentiator is achieving lower Bit Error Rate targets with minimal overhead.

Intel Corp.

Technical Solution: Intel's approach to GDDR7 error resilience centers on their "Integrated Memory Resilience" architecture that addresses reliability challenges across multiple system levels. Their solution implements a sophisticated error correction framework with variable FEC overhead ranging from 5-12% depending on application requirements and operating conditions. Intel's implementation utilizes a combination of hardware-based ECC (Error-Correcting Code) and software-assisted error management techniques to achieve a target BER of 10^-15. A distinctive feature of Intel's approach is their "System-Level Resilience" technology that coordinates error management between memory subsystems and processing elements, allowing for intelligent workload adaptation when memory errors are detected. Their architecture includes dedicated hardware monitors that track error statistics and voltage/timing margins in real-time, enabling dynamic adjustment of memory controller parameters to maintain optimal reliability. Intel has also developed specialized error correction optimizations for AI and high-performance computing workloads that provide enhanced protection for critical data structures while minimizing overhead for less sensitive data.

Strengths: Comprehensive system-level approach provides superior coordination between memory and processing elements; workload-specific optimizations deliver excellent performance for targeted applications. Weaknesses: Tight integration with Intel processors may limit flexibility with third-party systems; some advanced features require specific software support.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung's GDDR7 error resilience technology implements a sophisticated multi-level error correction framework designed specifically for high-bandwidth graphics applications. Their solution features an innovative "Tiered Reliability Architecture" that applies different levels of error protection based on data criticality. For critical system data, Samsung employs high-strength LDPC codes with up to 15% overhead, while less critical graphical data receives lighter-weight protection with approximately 5-8% overhead. This approach optimizes the performance-reliability tradeoff across different data types. Samsung's implementation achieves a baseline BER of 10^-14 with capability to dynamically improve to 10^-16 for critical operations. The system incorporates advanced channel monitoring that continuously evaluates signal integrity and proactively adjusts equalization parameters to maintain optimal performance. Samsung has also developed proprietary "Thermal-Aware FEC" technology that automatically increases error correction strength during high-temperature conditions when errors are more likely to occur.

Strengths: Intelligent allocation of FEC resources based on data criticality maximizes effective bandwidth; thermal-aware adaptation provides excellent reliability under varying conditions. Weaknesses: Complex implementation requires sophisticated controller logic; tiered protection approach may create challenges for applications with unpredictable data criticality patterns.
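
The bandwidth effect of tiered protection, using the overhead figures quoted for Samsung above, can be estimated with simple arithmetic. The sketch assumes that "overhead" means check bits as a fraction of payload bits and that the traffic mix is known in advance; both are assumptions made for illustration, and the function name is hypothetical.

```python
def payload_efficiency(
    critical_fraction: float, critical_overhead: float, normal_overhead: float
) -> float:
    """Fraction of transferred bits that are payload under two protection tiers.

    critical_fraction is the share of payload that receives the stronger code.
    Overheads are check bits divided by payload bits (assumed definition).
    """
    if not 0.0 <= critical_fraction <= 1.0:
        raise ValueError("critical_fraction must be in [0, 1]")
    bits_sent_per_payload_bit = (
        critical_fraction * (1.0 + critical_overhead)
        + (1.0 - critical_fraction) * (1.0 + normal_overhead)
    )
    return 1.0 / bits_sent_per_payload_bit


if __name__ == "__main__":
    # 15% for critical data and 5-8% for other data are the figures quoted above;
    # the traffic mixes are made-up examples.
    for mix in (0.0, 0.1, 0.5, 1.0):
        low = payload_efficiency(mix, 0.15, 0.08)
        high = payload_efficiency(mix, 0.15, 0.05)
        print(f"critical share {mix:.0%}: payload efficiency {low:.3f} to {high:.3f}")
```

The calculation also shows the weakness noted above: if the share of critical data is unpredictable, so is the effective bandwidth.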

Core Error Resilience Mechanisms and Innovations

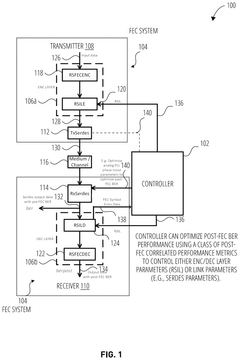

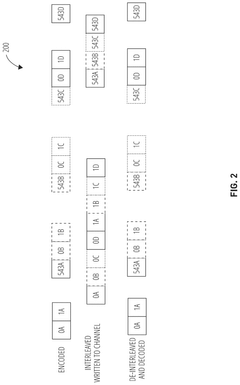

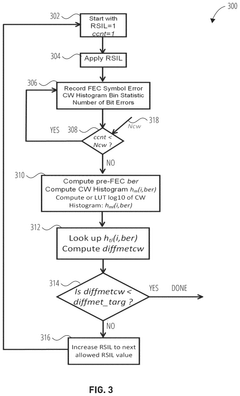

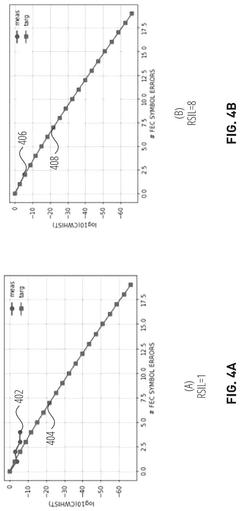

Adapting forward error correction (FEC) or link parameters for improved post-FEC performance

Patent US12267210B2 (Active)

Innovation

- The implementation of post-FEC correlated performance metrics, such as codeword histogram difference metrics and auto-correlation function metrics, allows for dynamic adaptation of FEC-related and link parameters to optimize post-FEC BER performance, overcoming the limitations of conventional systems.
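
To make the two kinds of metric named above more tangible, here is a generic sketch of an errors-per-codeword histogram and a normalized autocorrelation of a bit-error sequence. It is not the patented method: the function names, the input format (a list of 0/1 error flags), and the normalization are assumptions chosen for illustration.

```python
from collections import Counter


def errors_per_codeword_histogram(error_bits, codeword_len):
    """Count how many complete codewords contain 0, 1, 2, ... bit errors.

    error_bits is a list of 0/1 flags (1 = that bit was received in error).
    A trailing partial codeword is ignored.
    """
    counts = Counter()
    for start in range(0, len(error_bits) - codeword_len + 1, codeword_len):
        counts[sum(error_bits[start:start + codeword_len])] += 1
    return dict(counts)


def error_autocorrelation(error_bits, lag):
    """Normalized autocorrelation of the error sequence at the given lag.

    Values near 0 suggest independent errors; positive values at small
    lags suggest bursts, which stress a code more than random errors do.
    """
    n = len(error_bits) - lag
    mean = sum(error_bits) / len(error_bits)
    variance = mean * (1.0 - mean)
    if n <= 0 or variance == 0.0:
        return 0.0
    cov = sum(
        (error_bits[i] - mean) * (error_bits[i + lag] - mean) for i in range(n)
    ) / n
    return cov / variance


if __name__ == "__main__":
    bursty = [0, 0, 1, 1, 0, 0, 0, 0]  # made-up error pattern
    print(errors_per_codeword_histogram(bursty, 4))
    print(error_autocorrelation(bursty, 1))
```

Two links with the same pre-FEC BER can produce very different histograms, which is why metrics of this kind can track post-FEC performance better than the average error rate alone.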

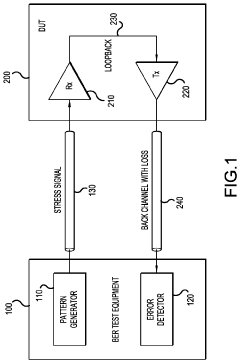

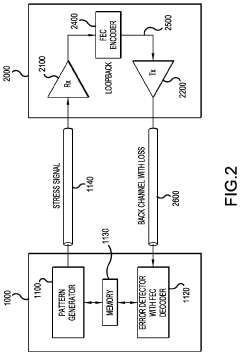

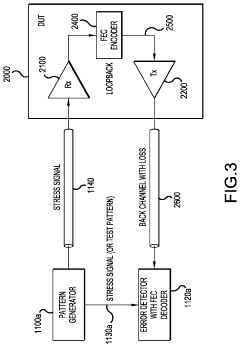

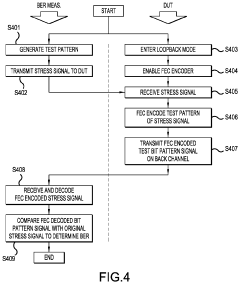

Bit error ratio (BER) measurement including forward error correction (FEC) on back channel

Patent US11228380B2 (Active)

Innovation

- The implementation of forward error correction (FEC) encoding and decoding in the loopback mode of the device under test (DUT) to differentiate between errors introduced by the back channel and those caused by the receiver, allowing for a more reliable assessment of the BER by eliminating back channel-induced errors.

Power-Performance-Reliability Tradeoffs in GDDR7

Power consumption, performance, and reliability form a critical trilemma in GDDR7 memory design. As per-pin data rates push beyond 32 Gbps, these three factors become increasingly interdependent, requiring sophisticated engineering approaches to achieve optimal balance.

Power consumption in GDDR7 increases substantially with higher data rates, primarily due to the need for more aggressive signal equalization, stronger drivers, and more complex error correction circuitry. Initial measurements suggest that GDDR7 may consume 15-20% more power per bit transferred compared to GDDR6X at equivalent speeds, though this is offset by improved energy efficiency per computation.

Performance scaling in GDDR7 introduces new reliability challenges as signal integrity degrades with increasing frequencies. The implementation of PAM3 signaling (3-level pulse amplitude modulation) enables higher data rates but narrows the noise margin between signal levels, making the system more susceptible to errors. This necessitates more sophisticated error detection and correction mechanisms.
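
The PAM3 tradeoff can be put into numbers. The sketch below assumes the commonly described GDDR7 mapping of 3 bits onto 2 PAM3 symbols (1.5 bits per symbol) and compares eye height at equal total voltage swing; it ignores equalization, jitter, and crosstalk, so the results are first-order estimates only.

```python
import math


def bits_per_symbol_limit(levels: int) -> float:
    """Theoretical maximum information per symbol for a given number of levels."""
    return math.log2(levels)


def symbol_rate_gbaud(data_rate_gbps: float, bits_per_symbol: float) -> float:
    """Symbol rate needed to carry data_rate_gbps at the given coding density."""
    return data_rate_gbps / bits_per_symbol


def eye_height_penalty_db(levels: int) -> float:
    """Eye height relative to two-level signaling at the same total swing.

    With N evenly spaced levels there are N - 1 stacked eyes, so each eye
    is 1 / (N - 1) of the full swing.
    """
    return 20.0 * math.log10(1.0 / (levels - 1))


if __name__ == "__main__":
    rate = 32.0  # Gbps per pin
    print(f"PAM3 limit: {bits_per_symbol_limit(3):.3f} bits/symbol (1.5 assumed in use)")
    print(f"NRZ symbol rate:  {symbol_rate_gbaud(rate, 1.0):.2f} GBaud")
    print(f"PAM3 symbol rate: {symbol_rate_gbaud(rate, 1.5):.2f} GBaud")
    print(f"PAM3 eye height vs NRZ: {eye_height_penalty_db(3):.2f} dB")
```

The lower symbol rate eases channel loss, while the halved eye height is the narrower noise margin described above.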

Reliability becomes the limiting factor at higher speeds, with bit error rates (BER) increasing exponentially beyond certain frequency thresholds. GDDR7 implements advanced Forward Error Correction (FEC) schemes that can maintain system reliability at the cost of additional power and latency overhead. These FEC mechanisms consume approximately 5-8% of the total power budget but reduce effective uncorrectable error rates by several orders of magnitude.

The tradeoff optimization varies significantly across application domains. High-performance computing applications may prioritize reliability and accept higher power consumption, while mobile graphics solutions might sacrifice maximum performance to maintain power efficiency. Gaming applications typically seek a balance that maximizes performance while maintaining acceptable error rates.

Temperature sensitivity adds another dimension to this relationship, as higher operating temperatures can dramatically increase error rates. GDDR7 implementations include adaptive power management features that can modulate performance based on thermal conditions, ensuring reliability under varying environmental circumstances.

Memory controller designs for GDDR7 must incorporate sophisticated power-aware scheduling algorithms that can dynamically adjust refresh rates, signal voltage levels, and error correction aggressiveness based on workload characteristics and system conditions. These algorithms typically monitor error statistics in real-time and can preemptively reduce performance parameters when error rates approach critical thresholds.
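
The monitor-and-back-off behaviour described above can be sketched as a small policy with hysteresis. Everything specific here is hypothetical: the class name, the rate steps, and the BER thresholds are placeholders, and a real controller would adjust more parameters (refresh rate, voltage, equalization) than the single data-rate setting shown.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveLinkPolicy:
    """Steps the data rate down when observed BER is high, back up when it is low.

    Two separate thresholds give hysteresis so the link does not oscillate
    when the error rate hovers near a single limit.
    """

    rates_gbps: tuple = (32.0, 28.0, 24.0)  # hypothetical rate steps
    step_down_ber: float = 1e-9  # hypothetical "too many errors" threshold
    step_up_ber: float = 1e-11  # hypothetical "safe to speed up" threshold
    level: int = 0  # index into rates_gbps; 0 is the fastest

    def update(self, corrected_errors: int, bits_observed: int) -> float:
        """Consume one monitoring window and return the rate to use next."""
        observed_ber = corrected_errors / bits_observed
        if observed_ber >= self.step_down_ber and self.level < len(self.rates_gbps) - 1:
            self.level += 1  # back off before errors become uncorrectable
        elif observed_ber <= self.step_up_ber and self.level > 0:
            self.level -= 1  # conditions improved, restore performance
        return self.rates_gbps[self.level]


if __name__ == "__main__":
    policy = AdaptiveLinkPolicy()
    window_bits = 10**12
    for errors in (0, 5000, 50, 1):  # made-up corrected-error counts per window
        print(errors, "->", policy.update(errors, window_bits), "Gbps")
```

Using corrected-error counts as the input lets the policy react while the FEC is still coping, which matches the preemptive behaviour the paragraph describes.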

Power consumption in GDDR7 increases substantially with higher data rates, primarily due to the need for more aggressive signal equalization, stronger drivers, and more complex error correction circuitry. Initial measurements suggest that GDDR7 may consume 15-20% more power per bit transferred compared to GDDR6X at equivalent speeds, though this is offset by improved energy efficiency per computation.

Performance scaling in GDDR7 introduces new reliability challenges as signal integrity degrades with increasing frequencies. The implementation of PAM3 signaling (3-level pulse amplitude modulation) enables higher data rates but narrows the noise margin between signal levels, making the system more susceptible to errors. This necessitates more sophisticated error detection and correction mechanisms.

Standardization Efforts for GDDR7 Error Resilience

The standardization of GDDR7 error resilience mechanisms represents a critical collaborative effort across the memory industry. JEDEC, as the primary standards body for semiconductor memory technologies, has established working groups specifically focused on developing comprehensive specifications for GDDR7 error detection and correction methodologies. These standardization efforts aim to ensure interoperability between memory components from different manufacturers while maintaining consistent reliability targets.

Industry consensus is forming around standardized Forward Error Correction (FEC) implementations that balance error correction capabilities with bandwidth overhead constraints. The JEDEC GDDR7 task group has proposed several FEC schemes with varying correction capabilities, including single-bit error correction/double-bit error detection (SEC-DED) and more advanced multi-bit correction algorithms specifically tailored for high-speed memory interfaces.
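To make the SEC-DED idea concrete, here is a minimal sketch using an extended Hamming (8,4) code. The tiny code size is chosen only for readability. It illustrates the technique in general and is not the code defined for GDDR7.

```python
def encode(data4):
    """Encode 4 data bits into an 8-bit extended Hamming codeword.

    Layout: index 0 is the overall parity bit, indices 1, 2 and 4 are
    Hamming parity bits, and indices 3, 5, 6 and 7 carry the data.
    """
    c = [0] * 8
    c[3], c[5], c[6], c[7] = data4
    c[1] = c[3] ^ c[5] ^ c[7]
    c[2] = c[3] ^ c[6] ^ c[7]
    c[4] = c[5] ^ c[6] ^ c[7]
    for bit in c[1:]:
        c[0] ^= bit
    return c

def decode(word):
    """Return (data_bits_or_None, status) with status 'ok', 'corrected' or 'detected'."""
    c = list(word)
    syndrome = 0
    for pos in range(1, 8):
        if c[pos]:
            syndrome ^= pos      # XOR of set positions is 0 for a valid codeword
    overall = 0
    for bit in c:
        overall ^= bit           # 0 when the total number of ones is even

    if syndrome == 0 and overall == 0:
        status = "ok"
    elif overall == 1:
        c[syndrome] ^= 1         # single error; syndrome 0 means bit 0 itself
        status = "corrected"
    else:
        return None, "detected"  # even number of errors: flag, do not correct
    return [c[3], c[5], c[6], c[7]], status

word = encode([1, 0, 1, 1])
word[5] ^= 1                     # inject a single-bit error
print(decode(word))              # data recovered, status 'corrected'
```

The overall parity bit is what separates the two cases: a single error flips it and is corrected, while a double error leaves it unchanged with a nonzero syndrome and is only reported.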

The standardization process has established specific Bit Error Rate (BER) targets that GDDR7 memory must achieve across different operating conditions. These targets typically specify maximum acceptable raw BER values in the range of 10^-15 to 10^-12, with post-FEC BER requirements several orders of magnitude lower. Memory manufacturers must demonstrate compliance with these standardized BER targets through rigorous testing procedures.

Reliability qualification standards for GDDR7 are being developed to ensure consistent performance across temperature ranges, voltage variations, and aging effects. These standards define specific test methodologies for evaluating error resilience under worst-case operating conditions and accelerated stress testing. The qualification procedures include standardized metrics for quantifying error correction overhead impact on effective bandwidth.
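One generic way to express the bandwidth cost of error correction is the code rate. The sketch below assumes check bits travel over the same link as the data, and the (72,64) code size and 32 Gbps raw rate are hypothetical. The metrics actually defined by the qualification standards may differ.

```python
def fec_overhead(data_bits: int, codeword_bits: int) -> float:
    """Fraction of transferred bits that are check bits rather than data."""
    return (codeword_bits - data_bits) / codeword_bits

def effective_bandwidth(raw_gbps: float, data_bits: int, codeword_bits: int) -> float:
    """Payload bandwidth left after check bits, assuming they share the link."""
    return raw_gbps * data_bits / codeword_bits

print(f"overhead:  {fec_overhead(64, 72):.1%}")
print(f"effective: {effective_bandwidth(32, 64, 72):.2f} Gbps")
```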

Cross-industry collaboration has been essential in developing these standards, with participation from memory manufacturers, GPU vendors, and system integrators. This collaborative approach ensures that standardized error resilience mechanisms address the requirements of diverse applications, from high-performance computing to automotive and AI acceleration.

JEDEC published the GDDR7 standard, JESD239, in March 2024, after preliminary GDDR7 error resilience specifications had circulated from early 2023. This phased approach allowed for industry feedback and refinement of the specifications before widespread implementation. Memory manufacturers have already begun developing products that comply with the standard.

Compliance testing frameworks are being established to verify that GDDR7 memory products meet the standardized error resilience requirements. These frameworks include reference test patterns specifically designed to stress error detection and correction mechanisms under various operating conditions.
