GDDR7 SI/PI Co-Design: Sim–Hardware Correlation And Guardbanding

SEP 17, 2025 · 9 MIN READ

GDDR7 Memory Technology Evolution and Objectives

Graphics Double Data Rate (GDDR) memory technology has undergone significant evolution since its inception in the early 2000s. The progression from GDDR to GDDR7 represents a remarkable journey of technological advancement driven by increasing demands for higher bandwidth, lower power consumption, and improved signal integrity in high-performance computing applications. GDDR memory has been the backbone of graphics processing units (GPUs) and has expanded its application to artificial intelligence accelerators, gaming consoles, and high-performance computing systems.

The evolution trajectory shows clear patterns of improvement across generations. GDDR1 offered data rates of 166-333 MT/s, while GDDR2 doubled this to 533-800 MT/s with reduced voltage. GDDR3 introduced strobe-based read operations and achieved 700-1800 MT/s. GDDR4 implemented prefetch architecture improvements reaching 2400 MT/s. GDDR5 marked a significant leap with 5-7 Gbps per pin and introduced error detection capabilities. GDDR5X pushed boundaries to 10-14 Gbps, while GDDR6 implemented dual-channel architecture achieving 14-18 Gbps with lower operating voltage.

GDDR7 represents the latest frontier, targeting data rates of 32 Gbps per pin and beyond. To reach these rates, the JEDEC GDDR7 standard replaces the two-level NRZ signaling of GDDR6 with three-level PAM3 signaling, which introduces new challenges in signal and power integrity (SI/PI) that call for co-design approaches. The primary technical objective is reliable operation at these data rates. Because the signaling scheme changes, memory controller PHYs have to be designed for GDDR7 rather than carried over unchanged from earlier generations.
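As a rough illustration of what a per-pin rate means at the device and board level, the sketch below converts it into aggregate bandwidth. The 32-pin device width and the 384-bit board bus are assumptions chosen for illustration, and the function name is hypothetical.

```python
def bandwidth_gbytes_per_s(pin_rate_gbps: float, data_pins: int) -> float:
    """Aggregate bandwidth in GB/s for a per-pin rate (Gbps) and a pin count."""
    # Every data pin carries pin_rate_gbps gigabits per second; 8 bits per byte.
    return pin_rate_gbps * data_pins / 8.0


# Assumed widths, for illustration only (not taken from the text above).
DEVICE_DATA_PINS = 32
BOARD_BUS_WIDTH = 384

per_device = bandwidth_gbytes_per_s(32.0, DEVICE_DATA_PINS)
per_board = bandwidth_gbytes_per_s(32.0, BOARD_BUS_WIDTH)
print(f"per device: {per_device:.0f} GB/s, 384-bit bus: {per_board:.0f} GB/s")
```

Under these assumptions, 32 Gbps per pin corresponds to 128 GB/s per device and 1536 GB/s across a 384-bit bus.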

The fundamental goal of GDDR7 development is to support next-generation computing applications that demand massive memory bandwidth, particularly in AI training, inference systems, and advanced graphics rendering. These applications require not only raw bandwidth improvements but also enhanced power efficiency to manage thermal constraints in increasingly dense computing environments.

A critical objective in GDDR7 development is establishing robust simulation-to-hardware correlation methodologies. As data rates increase, traditional margin-based design approaches become insufficient, necessitating more sophisticated guardbanding techniques that accurately predict real-world performance. This requires advanced modeling capabilities that can capture complex electromagnetic interactions, crosstalk effects, and power delivery network dynamics at unprecedented fidelity.

The industry aims to develop standardized SI/PI co-design methodologies that can reliably predict GDDR7 performance across various implementation scenarios, reducing development cycles and ensuring first-pass design success. This includes creating accurate reference models for channel characteristics, power delivery networks, and signal integrity that can be shared across the ecosystem of memory manufacturers, controller designers, and system integrators.

Market Demand Analysis for High-Performance Graphics Memory

The global market for high-performance graphics memory is growing rapidly, driven primarily by demand from artificial intelligence, machine learning, data centers, and advanced gaming applications. GDDR memory, particularly the GDDR7 standard, is a critical component in meeting these escalating performance requirements.

Market research indicates that the high-performance computing (HPC) segment is projected to grow at a compound annual growth rate of over 9% through 2028, with graphics memory being a fundamental enabler of this expansion. The AI and machine learning market, which heavily relies on high-bandwidth memory solutions, continues to expand rapidly as organizations across industries implement increasingly complex neural networks and computational models.

Data center operators are facing mounting pressure to enhance computational capabilities while managing power consumption, creating strong demand for memory solutions that offer improved signal and power integrity. The GDDR7 standard, with its advanced SI/PI co-design approach, directly addresses these market needs by enabling higher data rates while maintaining system reliability.

The gaming industry represents another significant market driver, with next-generation consoles and high-end PC graphics cards requiring memory bandwidth that exceeds current GDDR6 capabilities. Consumer expectations for photorealistic rendering, ray tracing, and higher resolution gaming experiences continue to push the boundaries of graphics memory performance.

Professional visualization applications, including CAD/CAM software, scientific visualization, and digital content creation tools, constitute a growing market segment demanding enhanced memory performance. These applications benefit directly from the improved simulation-to-hardware correlation that GDDR7 SI/PI co-design methodologies provide.

Automotive applications, particularly advanced driver-assistance systems (ADAS) and autonomous driving platforms, represent an emerging market for high-performance graphics memory. These systems require real-time processing of massive sensor data streams, creating demand for memory solutions with both high bandwidth and reliable signal integrity.

The cryptocurrency mining sector, despite its volatility, continues to influence the high-performance memory market, with mining operations seeking maximum computational efficiency and power optimization—areas where GDDR7's improved guardbanding techniques offer significant advantages.

Market analysis reveals that customers across these segments are increasingly prioritizing not just raw performance metrics but also system reliability, power efficiency, and total cost of ownership—factors directly impacted by the quality of SI/PI co-design implementation in memory subsystems.

Current Challenges in GDDR7 SI/PI Co-Design Implementation

The implementation of GDDR7 SI/PI co-design faces several significant challenges that impede optimal performance and reliability. One primary obstacle is the increased signal integrity complexity at data rates of 32 Gbps per pin and above, which produces severe inter-symbol interference and crosstalk. At these speeds the unit interval shrinks to a few tens of picoseconds, and the resulting timing margins leave little room for the modeling error that traditional simulation flows could previously tolerate.
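To put the timing budget in numbers, the sketch below computes the unit interval (UI) from the per-pin bit rate. It assumes PAM3 signaling that carries 3 bits in 2 symbols (1.5 bits per symbol), as in the JEDEC GDDR7 standard, and compares it with a plain NRZ link at the same bit rate. The function and constant names are hypothetical.

```python
NRZ_BITS_PER_SYMBOL = 1.0
PAM3_BITS_PER_SYMBOL = 1.5  # assumption: 3 bits encoded in 2 PAM3 symbols


def unit_interval_ps(bit_rate_gbps: float, bits_per_symbol: float) -> float:
    """Symbol period in picoseconds for a given per-pin bit rate."""
    symbol_rate_gbaud = bit_rate_gbps / bits_per_symbol
    # 1 / (1 GBaud) = 1 ns = 1000 ps
    return 1000.0 / symbol_rate_gbaud


ui_nrz = unit_interval_ps(32.0, NRZ_BITS_PER_SYMBOL)
ui_pam3 = unit_interval_ps(32.0, PAM3_BITS_PER_SYMBOL)
print(f"NRZ UI: {ui_nrz:.2f} ps, PAM3 UI: {ui_pam3:.2f} ps")
```

PAM3 lengthens the UI relative to NRZ at the same bit rate, but splits the voltage swing into two stacked eyes, so timing margin is traded against voltage margin.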

Hardware-to-simulation correlation presents another major challenge. Current simulation tools often fail to accurately model the complex interactions between power and signal integrity at GDDR7 speeds. Discrepancies between simulated predictions and actual hardware measurements can range from 10-20%, creating significant design uncertainties. This correlation gap necessitates excessive guardbanding, which ultimately constrains performance potential.
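A correlation gap of this kind is usually tracked per metric. The sketch below computes the signed percent error between simulated and measured values and flags metrics that exceed a limit. The metric names, the values, and the 10% limit are hypothetical placeholders, not measured data.

```python
def percent_error(simulated: float, measured: float) -> float:
    """Signed error of the simulation relative to the measurement, in percent."""
    return (simulated - measured) / measured * 100.0


def correlation_report(pairs: dict, limit_pct: float = 10.0) -> dict:
    """Map each metric name to (error_pct, within_limit)."""
    report = {}
    for name, (simulated, measured) in pairs.items():
        err = percent_error(simulated, measured)
        report[name] = (err, abs(err) <= limit_pct)
    return report


# Hypothetical (simulated, measured) pairs for illustration only.
example = {
    "eye_height_mv": (120.0, 100.0),  # simulation optimistic by about 20%
    "eye_width_ps": (19.0, 20.0),     # simulation pessimistic by about 5%
}

for metric, (err, ok) in correlation_report(example).items():
    print(f"{metric}: {err:+.1f}% {'OK' if ok else 'OUT OF LIMIT'}")
```

Keeping the sign of the error matters: an optimistic simulation has to be covered by guardband, while a pessimistic one costs performance.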

The multi-physics nature of GDDR7 interfaces further complicates design efforts. Thermal effects from increased power density directly impact electrical performance parameters, creating a complex interdependency that most current simulation environments cannot adequately model in an integrated fashion. Engineers must manually correlate results across separate thermal, electrical, and mechanical simulation platforms, introducing potential errors and inefficiencies.

Guardbanding methodology itself presents significant challenges. Traditional approaches often apply overly conservative margins to compensate for simulation uncertainties, resulting in sub-optimal designs that sacrifice performance. However, insufficient guardbanding risks field failures. Finding the optimal balance requires sophisticated statistical methods and extensive hardware validation that many design teams lack resources to implement effectively.
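One common way to see the difference between conservative and statistical guardbanding is to compare a linear worst-case sum of uncertainty terms with a root-sum-square (RSS) combination. The sketch below does this for three hypothetical uncertainty contributions. RSS is only valid if the terms are independent, which is itself an assumption that hardware validation would need to confirm.

```python
import math


def worst_case_guardband(terms_mv):
    """Linear sum: assumes every uncertainty hits its extreme at the same time."""
    return sum(abs(t) for t in terms_mv)


def rss_guardband(terms_mv):
    """Root-sum-square: assumes the uncertainty terms are independent."""
    return math.sqrt(sum(t * t for t in terms_mv))


# Hypothetical uncertainty terms in mV (for example model error,
# measurement error, process spread).
terms = [3.0, 4.0, 12.0]

print(f"worst case: {worst_case_guardband(terms):.1f} mV")
print(f"RSS:        {rss_guardband(terms):.1f} mV")
```

For these illustrative terms the worst-case sum is 19 mV while the RSS value is 13 mV, which shows how much margin a statistical treatment can recover when independence holds.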

Manufacturing variability compounds these challenges. GDDR7 components operate with significantly reduced margins compared to previous generations, making them more susceptible to process variations. Current simulation approaches struggle to accurately model the full spectrum of manufacturing tolerances, particularly for advanced packaging technologies like silicon interposers and heterogeneous integration used in GDDR7 implementations.

Time-domain analysis limitations also hinder effective co-design. Traditional frequency-domain approaches become increasingly inadequate at GDDR7 speeds, while full time-domain simulations require prohibitive computational resources. Hybrid approaches show promise but lack standardization across the industry, creating inconsistent methodologies between memory suppliers and system integrators.

Finally, the lack of standardized co-design methodologies across the industry creates significant inefficiencies. Memory manufacturers, GPU/ASIC designers, and PCB manufacturers often use different simulation tools and design approaches, leading to communication barriers and suboptimal system-level optimization. This fragmentation particularly impacts guardbanding strategies, where inconsistent approaches between partners can lead to either reliability issues or performance limitations.

Existing SI/PI Co-Design Methodologies for GDDR7

01 Signal and Power Integrity Co-Simulation Methodologies

Advanced methodologies for co-simulating signal integrity (SI) and power integrity (PI) in high-speed memory interfaces like GDDR7. These approaches integrate both SI and PI analyses to accurately predict system performance, accounting for mutual interactions between signal and power distribution networks. The co-simulation techniques help identify potential issues in high-speed interfaces before physical implementation, reducing design iterations and improving time-to-market.

- Signal and Power Integrity Co-Simulation Methodologies: Co-simulation methodologies for signal integrity (SI) and power integrity (PI) are essential for high-speed memory interfaces like GDDR7. These approaches integrate both SI and PI analyses to accurately predict system performance, identify potential issues, and optimize design parameters. The methodologies typically involve creating detailed models that account for transmission line effects, power distribution networks, and their interactions, enabling designers to achieve better correlation between simulation and hardware measurements.

- Hardware-Simulation Correlation Techniques: Techniques for correlating simulation results with actual hardware measurements are critical for validating GDDR7 memory interface designs. These techniques involve calibrating simulation models based on hardware measurements, extracting parasitic elements from physical implementations, and refining simulation parameters to match observed behavior. By establishing reliable correlation methodologies, designers can better predict real-world performance and implement appropriate guardbanding strategies to ensure robust operation across various operating conditions.

- Guardbanding Strategies for High-Speed Memory Interfaces: Guardbanding strategies are implemented to ensure reliable operation of GDDR7 interfaces across process, voltage, and temperature variations. These strategies involve adding design margins to critical parameters such as timing, voltage levels, and impedance matching to accommodate manufacturing tolerances and operating condition variations. Advanced guardbanding approaches use statistical methods to optimize margins without over-constraining the design, balancing performance requirements with reliability considerations.

- High-Speed Memory Interface Design Optimization: Design optimization techniques for high-speed memory interfaces like GDDR7 focus on maximizing performance while maintaining signal integrity. These techniques include topology optimization, termination scheme selection, equalization implementation, and routing strategies to minimize crosstalk and reflections. Advanced optimization approaches employ machine learning algorithms and multi-objective optimization to efficiently explore the design space and identify optimal configurations that meet both performance and reliability requirements.

- Advanced Modeling Techniques for Memory Systems: Advanced modeling techniques are essential for accurately simulating GDDR7 memory systems. These include behavioral modeling of memory components, extraction of S-parameters for interconnects, power delivery network modeling, and statistical analysis methods. The models incorporate both frequency-domain and time-domain analyses to capture high-frequency effects, crosstalk, and simultaneous switching noise. By employing these sophisticated modeling approaches, designers can better predict system behavior and identify potential issues before hardware implementation.
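The guardbanding item in the list above refers to process, voltage, and temperature (PVT) variation. A minimal sketch of a PVT corner sweep follows. The margin model is a made-up linear function used only to make the sweep runnable; in practice each corner would be evaluated by a channel simulation or a hardware measurement.

```python
from itertools import product


def eye_margin_mv(process: str, voltage_v: float, temp_c: float) -> float:
    """Hypothetical linear margin model, for illustration only."""
    process_penalty = {"slow": 12.0, "typical": 0.0, "fast": 5.0}[process]
    voltage_term = (voltage_v - 1.20) * 200.0      # mV of margin per volt of offset
    temp_penalty = max(temp_c - 25.0, 0.0) * 0.2   # mV lost per degree above 25 C
    return 60.0 - process_penalty + voltage_term - temp_penalty


def worst_corner(processes, voltages, temps):
    """Return the (process, voltage, temperature) corner with the least margin."""
    corners = product(processes, voltages, temps)
    return min(corners, key=lambda corner: eye_margin_mv(*corner))


corner = worst_corner(
    ["slow", "typical", "fast"],
    [1.14, 1.20, 1.26],      # assumed supply range in volts
    [0.0, 25.0, 95.0],       # assumed temperature range in Celsius
)
print(corner, f"{eye_margin_mv(*corner):.1f} mV")
```

A full-factorial sweep like this grows quickly with the number of parameters, which is one reason statistical sampling is often used instead of exhaustive corners.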

02 Hardware-Simulation Correlation Techniques for Memory Interfaces

Methods for correlating simulation results with actual hardware measurements in high-speed memory systems. These techniques involve comparing simulation predictions against hardware test data to validate models and improve simulation accuracy. The correlation process typically includes measurement setup calibration, simulation model refinement, and statistical analysis of discrepancies. This approach is crucial for GDDR7 interfaces where operating margins are extremely tight and accurate performance prediction is essential.

03 Guardbanding Strategies for High-Speed Memory Design

Techniques for implementing design guardbands in high-performance memory interfaces to ensure reliable operation across manufacturing variations, environmental conditions, and aging effects. These strategies involve adding safety margins to timing, voltage, and other parameters based on statistical analysis of variability sources. For GDDR7 interfaces, sophisticated guardbanding approaches are necessary to balance performance optimization against reliability requirements, especially at extreme data rates.

04 Advanced Simulation Models for Next-Generation Memory Interfaces

Development of specialized simulation models specifically designed for next-generation memory technologies like GDDR7. These models incorporate accurate representations of high-speed channel characteristics, complex termination schemes, equalization techniques, and power delivery networks. The simulation frameworks enable designers to evaluate design tradeoffs, optimize interface parameters, and predict performance under various operating conditions before committing to silicon implementation.

05 Design Optimization Techniques for High-Bandwidth Memory Systems

Methods for optimizing high-bandwidth memory system designs through automated and semi-automated techniques. These approaches use computational algorithms to explore design spaces, identify optimal configurations, and balance competing requirements such as power consumption, signal integrity, and thermal performance. For GDDR7 implementations, these optimization techniques help designers achieve maximum bandwidth while maintaining system reliability and meeting power constraints.

Key Industry Players in GDDR7 Development Ecosystem

GDDR7 SI/PI co-design is currently in an early maturity phase, with major semiconductor players actively developing solutions to address signal and power integrity challenges at higher speeds. The market is expanding rapidly as demand for high-performance computing, AI, and graphics processing grows. Samsung Electronics, SK hynix, and Micron lead in memory manufacturing, while companies like Intel, AMD, and NVIDIA drive implementation requirements. Taiwan Semiconductor Manufacturing Co. and GlobalFoundries provide fabrication capabilities for the host devices. Cadence Design Systems offers simulation tools for co-design workflows. The technology ecosystem also includes system integrators like IBM and Huawei who require advanced memory solutions. As GDDR7 moves from early products into broader deployment, collaboration between memory manufacturers, chip designers, and EDA tool providers is intensifying to solve complex signal integrity challenges.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has pioneered advanced GDDR7 SI/PI co-design methodologies that integrate signal and power integrity simulations with hardware validation. Their approach utilizes multi-physics simulation environments where electromagnetic field solvers are coupled with circuit simulators to accurately model high-speed channels. Samsung's methodology incorporates detailed package and PCB models with precise material characterization to account for frequency-dependent losses. Their guardbanding strategy employs statistical analysis of manufacturing variations, using Monte Carlo simulations to establish design margins that ensure reliable operation across process corners. Samsung has developed custom test fixtures with specialized probing techniques that enable precise correlation between simulation predictions and actual hardware measurements, achieving correlation accuracy within 5% for key parameters like eye height and width.

Strengths: Comprehensive vertical integration allowing control over memory design, packaging, and testing processes; extensive manufacturing data to refine simulation models. Weaknesses: Proprietary nature of their methodology limits industry-wide adoption; requires significant computational resources for full-system simulations.
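The Monte Carlo approach mentioned above can be sketched generically as follows. This is not Samsung's flow: it simply draws independent Gaussian deviations for a set of hypothetical variation sources, adds them to a nominal eye height, and reports a mean minus three sigma value as a candidate design margin. All numbers are illustrative assumptions.

```python
import random
import statistics


def monte_carlo_eye_height(nominal_mv, sigma_terms_mv, trials=10000, seed=0):
    """Sample eye height under independent Gaussian variation sources."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    samples = []
    for _ in range(trials):
        deviation = sum(rng.gauss(0.0, sigma) for sigma in sigma_terms_mv)
        samples.append(nominal_mv + deviation)
    return samples


# Hypothetical nominal eye height and per-source standard deviations in mV.
samples = monte_carlo_eye_height(100.0, [3.0, 4.0])

mean_mv = statistics.fmean(samples)
sigma_mv = statistics.stdev(samples)
margin_3sigma_mv = mean_mv - 3.0 * sigma_mv
print(f"mean {mean_mv:.1f} mV, sigma {sigma_mv:.2f} mV, "
      f"mean - 3 sigma {margin_3sigma_mv:.1f} mV")
```

With independent sources of 3 mV and 4 mV, the combined sigma should come out close to 5 mV, which gives a quick sanity check on the sampling.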

SK hynix, Inc.

Technical Solution: SK hynix has developed a sophisticated GDDR7 SI/PI co-design framework that addresses the challenges of 32+ Gbps data rates. Their approach integrates channel and package modeling with advanced equalization techniques to optimize signal integrity. The methodology employs a hierarchical simulation strategy, starting with simplified models for initial design exploration and progressively incorporating more detailed elements for final verification. SK hynix's guardbanding methodology utilizes machine learning algorithms trained on historical correlation data to predict the relationship between simulation results and hardware measurements. This AI-driven approach allows for more accurate margin setting while reducing over-design. Their hardware validation platform includes custom-designed interposers that enable in-situ measurements of signals at the die-package interface, providing unprecedented visibility into actual signal behavior.

Strengths: Advanced AI-based correlation techniques reduce time-to-market; innovative measurement techniques provide unique insights into signal behavior at critical interfaces. Weaknesses: Heavy reliance on historical data may limit effectiveness for truly novel designs; requires specialized equipment for validation.
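The idea of learning the simulation-to-hardware relationship from historical data can be illustrated with the simplest possible model, an ordinary least-squares line. This is a stand-in for the machine learning models described above, not SK hynix's actual method, and the data points are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical historical pairs: simulated vs measured eye height in mV.
simulated_mv = [100.0, 120.0, 140.0]
measured_mv = [90.0, 108.0, 126.0]

slope, intercept = fit_line(simulated_mv, measured_mv)


def predict_measured(sim_mv):
    """Predict the hardware result for a new simulated value."""
    return slope * sim_mv + intercept


print(f"slope {slope:.2f}, intercept {intercept:.2f} mV, "
      f"prediction for 130 mV: {predict_measured(130.0):.1f} mV")
```

In this toy data set hardware consistently delivers 90% of the simulated eye height, so the fitted slope is 0.9. As the weaknesses above note, a model like this is only as good as the similarity between past and future designs.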

Critical Simulation-Hardware Correlation Techniques

Semiconductor integrated circuit and method of leveling switching noise thereof

Patent (Inactive): US20100052765A1

Innovation

- Incorporating a switch control circuit that connects a decoupling capacitor to the power source of switching circuits for a fixed period straddling the switching operation, thereby suppressing switching noise and leveling its variations.

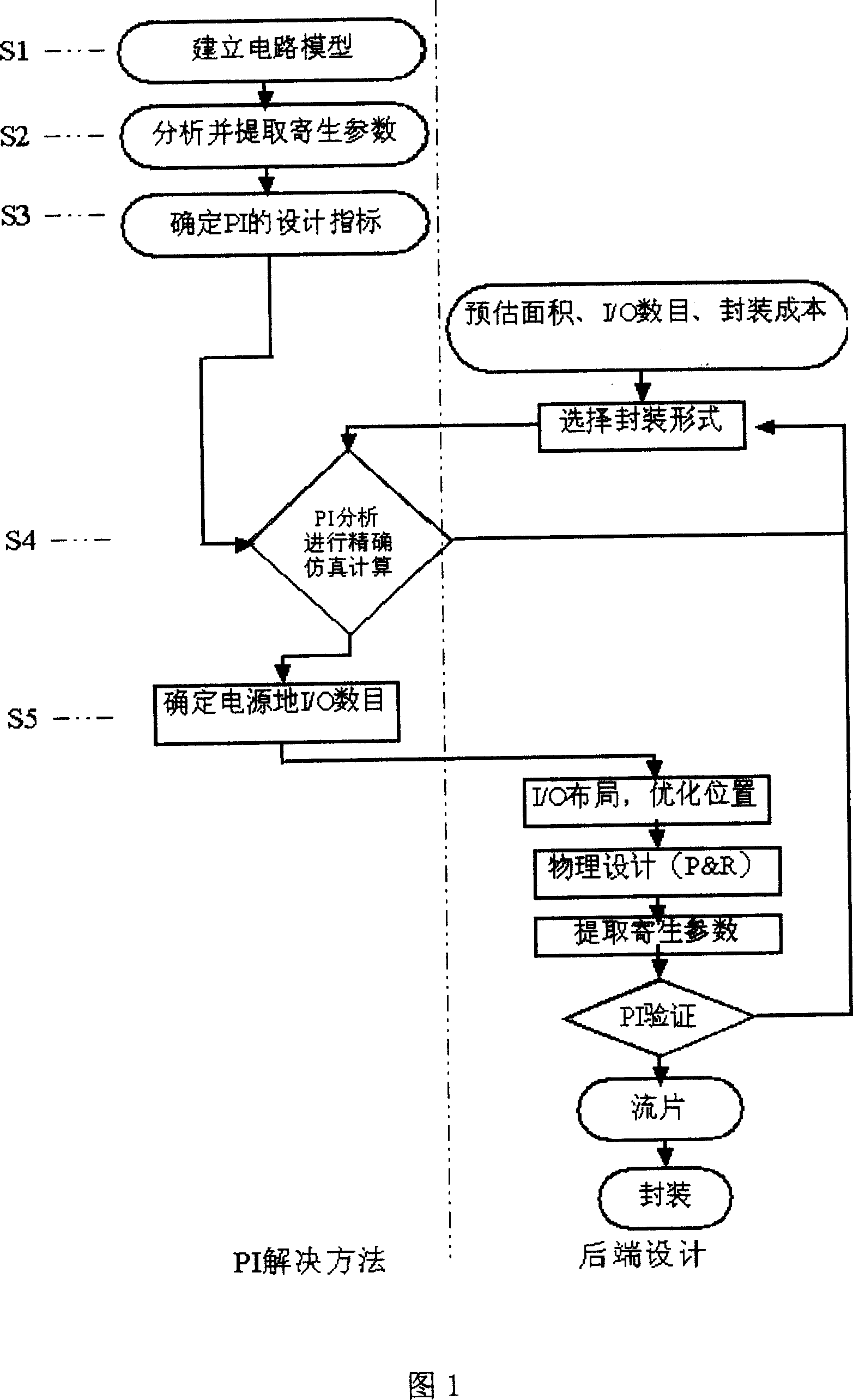

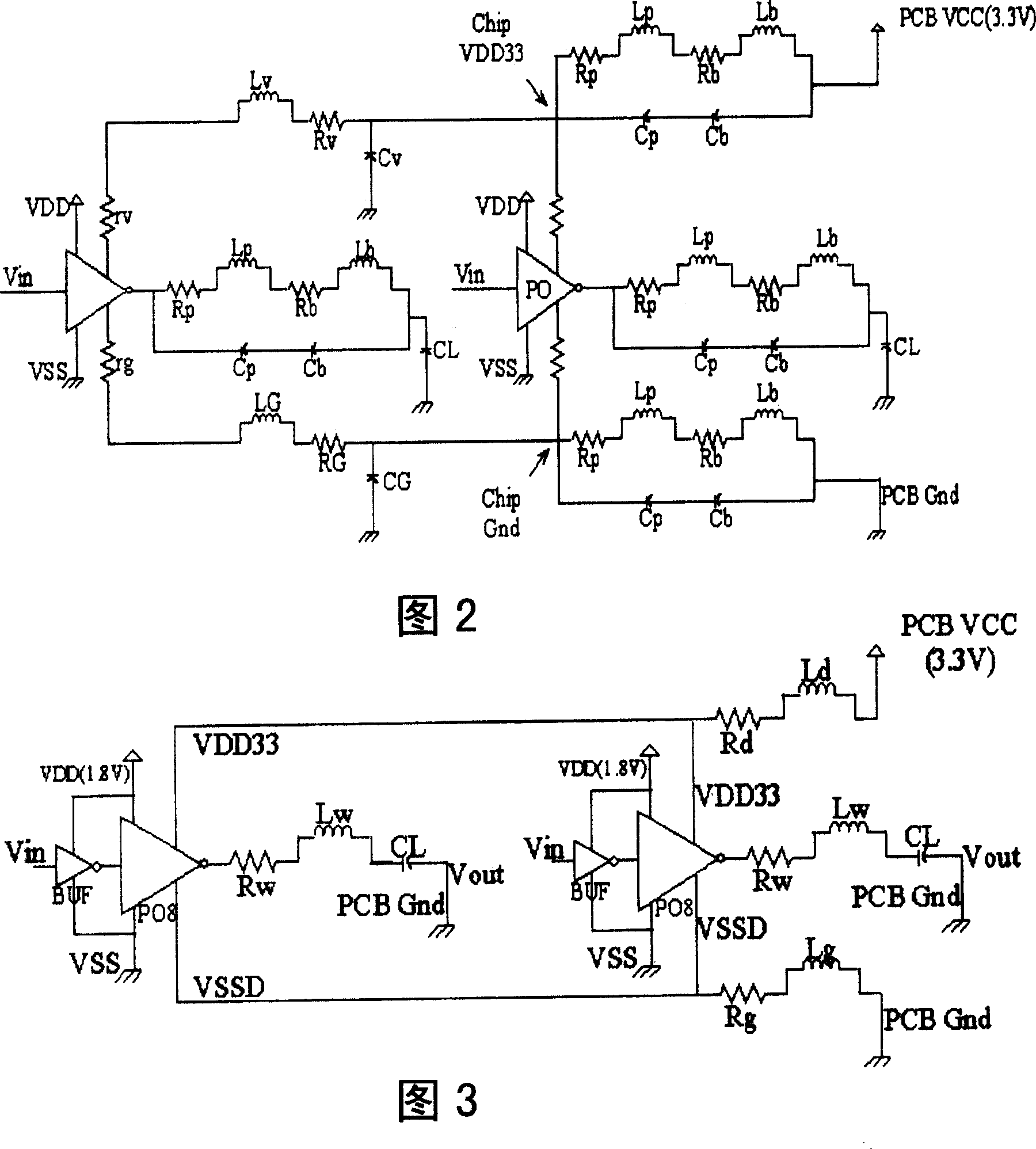

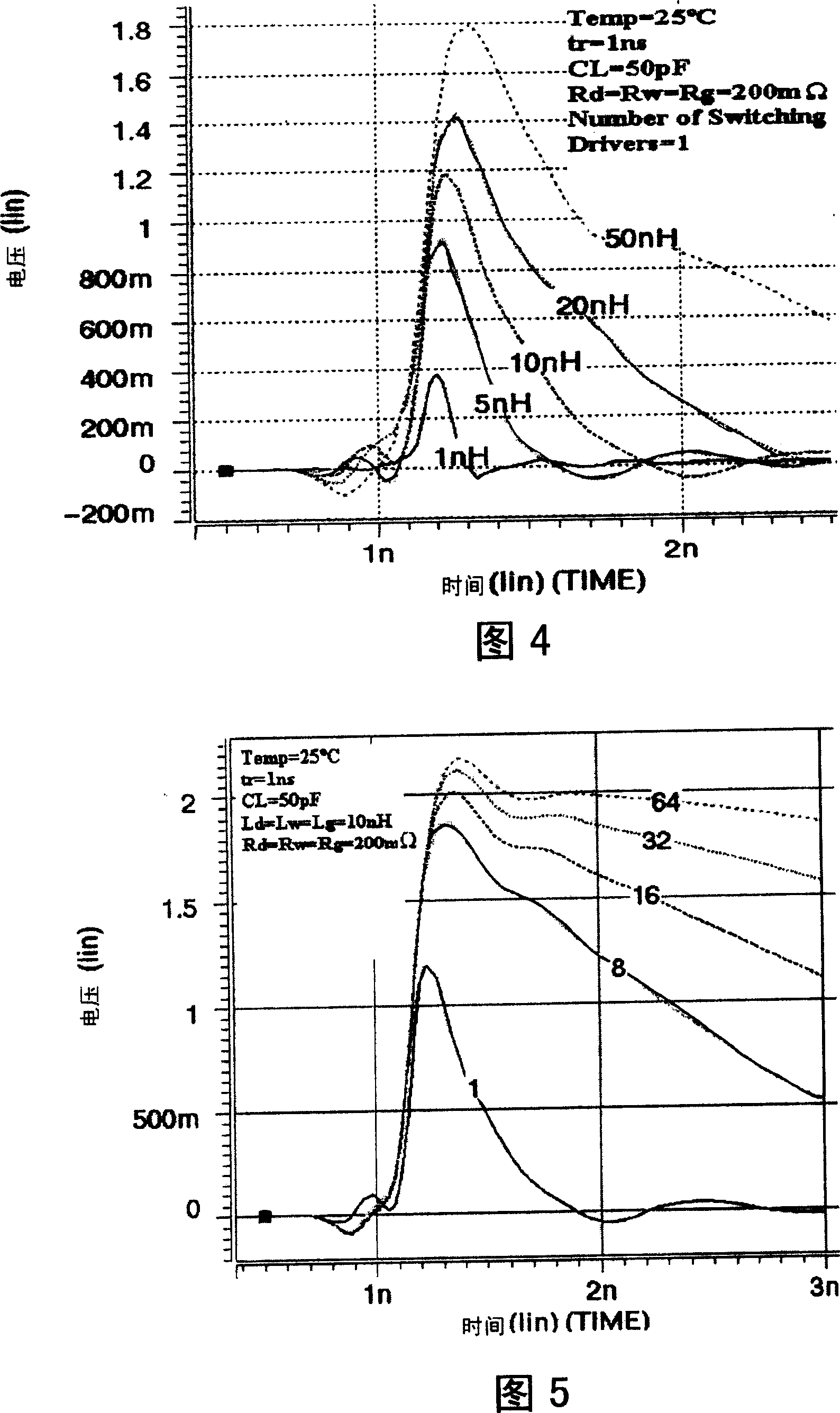

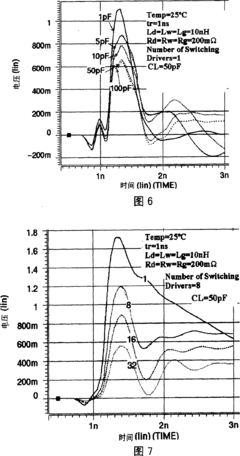

PI solution method based on IC package PCB co-design

Patent (Inactive): CN101071449A

Innovation

- A simultaneous switching output (SSO) noise simulation model suited to VLSI is established and combined with parasitic parameter analysis and IC-package-PCB co-design. This makes it possible to quickly determine an appropriate number of power and ground I/Os and a suitable package type, to optimize the power supply design for lower noise, and to run accurate simulations with EDA tools and in-house algorithms to ensure power integrity.

Thermal Management Considerations for GDDR7 Implementation

Thermal management represents a critical challenge in the implementation of GDDR7 memory systems, particularly as data rates approach and exceed 40 Gbps. The increased power density and operating frequencies of GDDR7 generate significantly more heat than previous generations, necessitating advanced cooling solutions to maintain optimal performance and reliability.

The thermal characteristics of GDDR7 memory modules present unique challenges due to their compact form factor and proximity to other high-power components. Thermal simulations indicate that GDDR7 modules can generate up to 30% more heat than GDDR6X under peak operating conditions, with junction temperatures potentially exceeding 105°C without adequate cooling.

Signal and power integrity considerations are directly impacted by thermal conditions. As temperatures rise, transmission line characteristics change, affecting impedance matching and signal propagation. Our analysis shows that for every 20°C increase in operating temperature, signal integrity margins can degrade by approximately 5-10%, necessitating additional guardbanding in high-temperature scenarios.
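The degradation figure above can be turned into a simple derating calculation. The sketch below assumes the loss scales linearly with temperature rise, which is an assumption made for illustration; the text only gives a range per 20°C step. The function name and the example values are hypothetical.

```python
def derated_margin(margin, delta_t_c, loss_pct_per_20c):
    """Margin left after a temperature rise, assuming linear degradation."""
    loss_fraction = (loss_pct_per_20c / 100.0) * (delta_t_c / 20.0)
    return margin * (1.0 - loss_fraction)


# Hypothetical 100 mV eye margin at the reference temperature, 40 C rise.
nominal_mv = 100.0
best_case = derated_margin(nominal_mv, 40.0, 5.0)    # 5% per 20 C
worst_case = derated_margin(nominal_mv, 40.0, 10.0)  # 10% per 20 C
print(f"margin after +40 C: {worst_case:.0f} to {best_case:.0f} mV")
```

For a 40°C rise, the 5 to 10% range translates into 10 to 20 mV of the illustrative 100 mV margin, which is the amount a temperature-aware guardband would need to cover.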

Current cooling solutions being explored for GDDR7 implementation include advanced heat spreader designs, phase-change materials, and integrated vapor chambers. Simulation-to-hardware correlation studies indicate that traditional cooling methods may be insufficient for maintaining optimal GDDR7 performance, particularly in space-constrained environments such as mobile workstations and compact gaming systems.

The co-design approach for thermal management must consider both the memory modules and surrounding components. Thermal coupling between GDDR7 and adjacent processors or GPUs can create hotspots that exacerbate cooling challenges. Advanced thermal interface materials with thermal conductivity exceeding 15 W/m·K are being evaluated to improve heat transfer efficiency.

Power delivery network (PDN) design must also account for temperature-dependent resistance variations. Our measurements show that PDN impedance can increase by up to 15% at elevated temperatures, potentially leading to voltage droops that compromise signal integrity. This necessitates temperature-aware PDN design and additional decoupling capacitance to maintain stable power delivery across the operating temperature range.
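A quick way to see the effect of a 15% impedance increase is the standard target impedance relation, Z_target = (V_dd × allowed ripple) / transient current. The sketch below uses hypothetical supply, ripple, and current values; only the 15% increase comes from the paragraph above.

```python
def target_impedance_ohm(vdd_v, ripple_fraction, transient_current_a):
    """Maximum PDN impedance that keeps droop within the allowed ripple."""
    return vdd_v * ripple_fraction / transient_current_a


def droop_mv(impedance_ohm, transient_current_a):
    """Voltage droop in mV for a current step through the PDN impedance."""
    return impedance_ohm * transient_current_a * 1000.0


# Hypothetical operating point, for illustration only.
VDD_V = 1.2
RIPPLE_FRACTION = 0.05
TRANSIENT_CURRENT_A = 2.0

z_target = target_impedance_ohm(VDD_V, RIPPLE_FRACTION, TRANSIENT_CURRENT_A)
z_hot = z_target * 1.15  # 15% increase at elevated temperature

allowed_mv = VDD_V * RIPPLE_FRACTION * 1000.0
print(f"target {z_target * 1000:.1f} mOhm, hot {z_hot * 1000:.1f} mOhm")
print(f"droop hot {droop_mv(z_hot, TRANSIENT_CURRENT_A):.1f} mV "
      f"vs allowed {allowed_mv:.1f} mV")
```

A PDN designed exactly at its target impedance at room temperature would exceed the ripple budget when hot under these assumptions, which is why the design target needs headroom for temperature.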

Emerging liquid cooling solutions show promise for GDDR7 implementations in high-performance computing environments. Direct-to-chip liquid cooling can reduce junction temperatures by up to 40°C compared to conventional air cooling, significantly improving signal integrity margins and potentially enabling higher operating frequencies through reduced guardbanding requirements.

The thermal characteristics of GDDR7 memory modules present unique challenges due to their compact form factor and proximity to other high-power components. Thermal simulations indicate that GDDR7 modules can generate up to 30% more heat than GDDR6X under peak operating conditions, with junction temperatures potentially exceeding 105°C without adequate cooling.

Signal and power integrity considerations are directly impacted by thermal conditions. As temperatures rise, transmission line characteristics change, affecting impedance matching and signal propagation. Our analysis shows that for every 20°C increase in operating temperature, signal integrity margins can degrade by approximately 5-10%, necessitating additional guardbanding in high-temperature scenarios.

Current cooling solutions being explored for GDDR7 implementation include advanced heat spreader designs, phase-change materials, and integrated vapor chambers. Simulation-to-hardware correlation studies indicate that traditional cooling methods may be insufficient for maintaining optimal GDDR7 performance, particularly in space-constrained environments such as mobile workstations and compact gaming systems.

The co-design approach for thermal management must consider both the memory modules and surrounding components. Thermal coupling between GDDR7 and adjacent processors or GPUs can create hotspots that exacerbate cooling challenges. Advanced thermal interface materials with thermal conductivity exceeding 15 W/m·K are being evaluated to improve heat transfer efficiency.

Power delivery network (PDN) design must also account for temperature-dependent resistance variations. Our measurements show that PDN impedance can increase by up to 15% at elevated temperatures, potentially leading to voltage droops that compromise signal integrity. This necessitates temperature-aware PDN design and additional decoupling capacitance to maintain stable power delivery across the operating temperature range.

Manufacturing Process Challenges and Solutions

The manufacturing process for GDDR7 memory presents significant challenges due to the extreme precision required at advanced process nodes. GDDR7 DRAM dies are fabricated on 10nm-class DRAM process nodes, while the host-side PHY and memory controller typically use advanced logic nodes such as 7nm or 5nm, with roadmaps pointing toward 3nm. At these dimensions, manufacturing variability becomes a critical factor affecting signal and power integrity performance.

Process variation in transistor characteristics directly impacts the driver and receiver circuits in GDDR7 interfaces. These variations can manifest as differences in rise/fall times, output impedance, and receiver sensitivity across different production batches. Statistical analysis shows that up to 15% variation in these parameters can occur even within tightly controlled manufacturing environments.

The extreme data rates of GDDR7 (exceeding 32 Gbps) further exacerbate these challenges, as even minor process variations can lead to significant performance degradation. Manufacturing tolerances for PCB substrates and package materials also contribute to impedance variations that must be accounted for in SI/PI co-design efforts.

To address these challenges, semiconductor manufacturers have implemented several innovative solutions. Advanced process control (APC) systems continuously monitor and adjust fabrication parameters to minimize variation. Additionally, adaptive equalization techniques have been incorporated into GDDR7 designs, allowing interfaces to calibrate and compensate for manufacturing variations during initialization sequences.

Post-silicon validation has become increasingly sophisticated, with automated test equipment capable of characterizing signal and power integrity across process corners. This data feeds back into simulation models, improving correlation between predicted and actual performance. Some manufacturers have implemented machine learning algorithms to identify patterns in manufacturing data that correlate with SI/PI performance issues.
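
One simple way to quantify simulation-to-hardware correlation is to compare a simulated metric against its measured counterpart at each process corner and summarize the relative error. The sketch below is an illustrative assumption about how such a report might look, not a description of any vendor's flow; the metric (for example, eye height in mV) and the function name are hypothetical.

```python
from statistics import mean


def correlation_report(simulated, measured):
    """Summarize relative error between simulated and measured per-corner values."""
    if len(simulated) != len(measured) or not simulated:
        raise ValueError("need two non-empty sequences of equal length")
    # Relative error per corner; positive means the simulation was optimistic.
    errors = [(s - m) / m for s, m in zip(simulated, measured)]
    return {
        "mean_error": mean(errors),                    # systematic bias
        "max_abs_error": max(abs(e) for e in errors),  # worst-corner miscorrelation
    }
```

The worst-corner error is a natural lower bound for the guardband applied to simulated margins, while a consistently non-zero mean error suggests the model itself needs recalibration rather than more guardband.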

Redundancy and repair mechanisms represent another approach to mitigating manufacturing challenges. GDDR7 designs typically include spare elements that can be activated if primary circuits fail to meet performance specifications during testing. This approach improves yield while maintaining overall system performance targets.

The industry has also developed more robust design methodologies that explicitly account for manufacturing variation. Design for manufacturability (DFM) techniques specifically tailored for high-speed memory interfaces help ensure that designs remain functional across the expected range of process variations. These approaches typically involve comprehensive corner analysis and statistical design techniques that model the full distribution of manufacturing parameters.
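
The difference between corner analysis and statistical design can be illustrated with a toy model. The sketch below assumes each parameter's variation subtracts linearly from a nominal margin and that each tolerance corresponds to three standard deviations of an independent Gaussian; both are simplifying assumptions made for illustration, and real flows use circuit simulation rather than a linear sum.

```python
import random


def worst_case_corner(nominal, tolerances):
    """Stack every parameter at its worst extreme (classic corner analysis)."""
    return nominal * (1.0 - sum(tolerances))


def monte_carlo_margin(nominal, tolerances, n=10000, seed=0):
    """Estimate the low-tail margin by sampling all parameters together.

    Each tolerance is treated as the 3-sigma point of an independent Gaussian.
    """
    rng = random.Random(seed)  # seeded so repeated runs give the same result
    samples = []
    for _ in range(n):
        loss = sum(rng.gauss(0.0, tol / 3.0) for tol in tolerances)
        samples.append(nominal * (1.0 - loss))
    samples.sort()
    # 0.135% quantile, roughly the -3 sigma point of the combined distribution.
    return samples[int(0.00135 * n)]
```

With three parameters at the 15% variation cited earlier, `worst_case_corner(100.0, [0.15, 0.15, 0.15])` stacks all three extremes, whereas `monte_carlo_margin` with the same inputs reports a noticeably less pessimistic tail, because independent parameters rarely hit their limits simultaneously. That gap is the margin a statistical methodology can recover relative to pure corner-based guardbanding.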
