GDDR7 Vs GDDR6X: Bandwidth Per Pin, PAM3 Signaling And Power Efficiency

SEP 17, 2025 · 9 MIN READ

GDDR7 Evolution and Performance Targets

Graphics memory technology has evolved significantly over the past decades, with each generation bringing substantial improvements in bandwidth, power efficiency, and overall performance. GDDR (Graphics Double Data Rate) memory has been the backbone of high-performance graphics processing units (GPUs) and has undergone several iterations to meet the increasing demands of modern graphics applications, AI workloads, and data-intensive computing tasks.

The evolution from GDDR6X to GDDR7 represents a significant leap in memory technology, addressing the growing need for higher bandwidth and improved power efficiency. GDDR6X, introduced by Micron in collaboration with NVIDIA in 2020, already pushed the boundaries with its PAM4 (Pulse Amplitude Modulation) signaling, enabling data transfer rates of up to 21 Gbps per pin. This was a substantial improvement over the traditional NRZ (Non-Return to Zero) signaling used in previous GDDR iterations.

GDDR7 aims to further enhance these capabilities with the introduction of PAM3 signaling, which represents a middle ground between NRZ and PAM4 in terms of complexity and performance. The primary performance target for GDDR7 is a per-pin data rate of up to 32 Gbps, roughly a 50% increase over GDDR6X's 21 Gbps. For a single 32-bit-wide memory chip this works out to 128 GB/s, and across a wide memory bus (for example, 384 bits) the aggregate bandwidth exceeds 1 TB/s, setting new standards for high-performance computing applications.
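A quick way to sanity-check the per-pin figures is to scale them up to a device and to a full memory bus. The sketch below assumes 32 data pins per device and a 384-bit bus; both widths are illustrative assumptions rather than figures from this article, and the function names are hypothetical.

```python
def device_bandwidth_gbs(pin_rate_gbps, data_pins=32):
    """Peak bandwidth of one memory device in GB/s (8 bits per byte).

    data_pins=32 is an assumed device width, not a value from the article.
    """
    return pin_rate_gbps * data_pins / 8


def bus_bandwidth_gbs(pin_rate_gbps, bus_width_bits):
    """Peak aggregate bandwidth of a memory bus in GB/s."""
    return pin_rate_gbps * bus_width_bits / 8


GDDR6X_GBPS = 21.0  # per-pin rate quoted in the text
GDDR7_GBPS = 32.0   # per-pin target quoted in the text

# Relative per-pin uplift of GDDR7 over GDDR6X (about 0.52, i.e. roughly 50%).
uplift = GDDR7_GBPS / GDDR6X_GBPS - 1

print(device_bandwidth_gbs(GDDR7_GBPS))      # 128.0 GB/s per device
print(bus_bandwidth_gbs(GDDR7_GBPS, 384))    # 1536.0 GB/s on a 384-bit bus
print(bus_bandwidth_gbs(GDDR6X_GBPS, 384))   # 1008.0 GB/s on the same bus
```

Under these assumptions, terabyte-per-second figures are reached at the bus level, not by an individual device.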

Beyond raw bandwidth improvements, GDDR7 also targets significant advancements in power efficiency. The technology aims to reduce power consumption per bit transferred by approximately 25% compared to GDDR6X, addressing the growing concerns about energy consumption in data centers and high-performance computing environments. This efficiency gain is crucial as memory subsystems continue to account for a substantial portion of overall system power consumption.
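Energy per bit and total power are easy to conflate, so a small worked calculation may help. The sketch below assumes a placeholder baseline of 7 pJ/bit and 32 data pins per device; neither number comes from this article, and only the 25% reduction and the 21 and 32 Gbps rates are taken from the text.

```python
def interface_power_w(pin_rate_gbps, data_pins, energy_pj_per_bit):
    """Interface power in watts at full throughput.

    power = bits per second * energy per bit
    """
    bits_per_second = pin_rate_gbps * 1e9 * data_pins
    return bits_per_second * energy_pj_per_bit * 1e-12


BASELINE_PJ_PER_BIT = 7.0  # hypothetical GDDR6X energy per bit (placeholder)
DATA_PINS = 32             # assumed device width

gddr6x_w = interface_power_w(21.0, DATA_PINS, BASELINE_PJ_PER_BIT)
# 25% lower energy per bit, as targeted in the text.
gddr7_w = interface_power_w(32.0, DATA_PINS, BASELINE_PJ_PER_BIT * 0.75)

# The ratio does not depend on the placeholder baseline: (32 / 21) * 0.75.
power_ratio = gddr7_w / gddr6x_w
print(f"GDDR7 / GDDR6X power at peak rate: {power_ratio:.3f}")
```

The ratio comes out slightly above 1, which illustrates why a device can be more efficient per bit and still draw more absolute power when it runs at its higher peak data rate.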

Another key performance target for GDDR7 is reduced latency, with expectations of 10-15% improvements over GDDR6X. This enhancement is particularly important for real-time applications such as gaming, where response time is critical, and for AI workloads where reduced latency can significantly improve training and inference times.

Signal integrity is also a major focus area for GDDR7, with improved error detection and correction mechanisms to ensure data reliability at higher transfer rates. The technology incorporates advanced equalization techniques and enhanced signal conditioning to maintain signal integrity across challenging operating conditions and longer trace lengths on printed circuit boards.

JEDEC published the GDDR7 standard in March 2024, and the memory debuted in high-end graphics cards in early 2025, with broader adoption expected in subsequent years as manufacturing processes mature and costs decrease. The technology represents a critical enabler for next-generation graphics rendering, AI acceleration, and high-performance computing applications that demand unprecedented memory bandwidth and efficiency.

Market Demand Analysis for High-Bandwidth Memory

The high-bandwidth memory market is experiencing unprecedented growth driven by several converging technological demands. The transition from GDDR6X to GDDR7 represents a significant leap in memory technology that addresses critical needs across multiple industries. Current market analysis indicates that the global high-performance memory market is projected to grow at a compound annual growth rate of 23.5% through 2028, with high-bandwidth solutions like GDDR7 positioned as premium segments.

The primary market demand stems from artificial intelligence and machine learning applications, where training complex neural networks requires massive parallel processing capabilities and correspondingly high memory bandwidth. These applications have seen exponential growth in data processing requirements, with some AI models now requiring petaflops of computing power supported by memory bandwidth exceeding 2TB/s.

Gaming and graphics processing represent another substantial market segment driving demand for higher bandwidth memory. Modern AAA game titles and real-time rendering applications increasingly implement ray tracing, higher resolution textures, and complex physics simulations that benefit directly from the increased bandwidth per pin that GDDR7 offers over GDDR6X. The gaming hardware market continues to expand, with premium GPU segments showing resilience even during broader market downturns.

Data centers and high-performance computing environments constitute a rapidly growing market segment for high-bandwidth memory solutions. The shift toward accelerated computing for scientific research, financial modeling, and big data analytics has created demand for memory solutions that can match the processing capabilities of modern CPUs and specialized accelerators. Industry reports indicate that data center operators are willing to invest in higher-efficiency memory technologies that can reduce total cost of ownership through better power efficiency.

Automotive and advanced driver assistance systems (ADAS) represent an emerging but potentially massive market for high-bandwidth memory. As vehicles incorporate more sensors and real-time processing capabilities, the memory bandwidth requirements for autonomous driving systems have increased dramatically. The automotive sector's stringent reliability requirements and operating conditions also favor memory technologies with improved signal integrity like PAM3 signaling in GDDR7.

Market research indicates that customers across these segments are increasingly prioritizing power efficiency alongside raw performance metrics. This trend aligns perfectly with GDDR7's improvements in power efficiency compared to GDDR6X, potentially expanding its market appeal beyond traditional high-performance niches into more power-constrained applications like edge computing and mobile workstations.

Technical Challenges in GDDR7 vs GDDR6X Implementation

The implementation of GDDR7 memory technology presents several significant technical challenges when compared to its predecessor, GDDR6X. The most fundamental challenge lies in the change of signaling scheme: GDDR6 uses two-level Non-Return-to-Zero (NRZ) signaling and GDDR6X uses four-level Pulse Amplitude Modulation (PAM4), while GDDR7 adopts three-level PAM3. PAM3 is more complex than NRZ but less demanding than PAM4, yet the shift still requires substantial redesign of both memory controllers and physical interfaces.

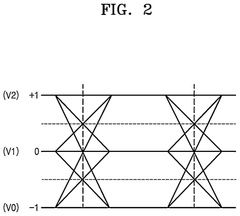

PAM3 signaling, while offering advantages in bandwidth efficiency over NRZ, introduces signal integrity challenges that binary signaling does not have. Because three voltage levels share the same signal swing, each eye opening is roughly half the height of an NRZ eye, so the system requires more precise voltage control and tighter noise margins. Engineers must develop new equalization techniques and advanced signal processing algorithms to maintain reliable data transmission at the higher frequencies GDDR7 targets.
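The margin trade-off between signaling schemes can be estimated with a simple idealized model. The sketch below assumes equally spaced levels over a fixed full swing and ignores noise, jitter, and equalization; the function names are hypothetical.

```python
import math


def eye_height_fraction(levels):
    """Height of each eye as a fraction of the full swing.

    With N equally spaced levels there are N - 1 stacked eyes.
    """
    if levels < 2:
        raise ValueError("a signaling scheme needs at least 2 levels")
    return 1.0 / (levels - 1)


def margin_penalty_db(levels):
    """Voltage-margin penalty relative to 2-level NRZ, in dB."""
    return -20.0 * math.log10(eye_height_fraction(levels))


for name, levels in (("NRZ", 2), ("PAM3", 3), ("PAM4", 4)):
    print(f"{name}: eye = {eye_height_fraction(levels):.3f} of swing, "
          f"penalty = {margin_penalty_db(levels):.2f} dB")
```

In this simplified model PAM3 gives up about 6 dB of vertical margin relative to NRZ, while PAM4 gives up about 9.5 dB, which is consistent with describing PAM3 as a middle ground between the two.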

Power management represents another critical challenge in GDDR7 implementation. While GDDR7 promises improved power efficiency per bit transferred, achieving this requires sophisticated power delivery networks capable of maintaining stable voltage levels across multiple signaling states. The increased complexity of PAM3 signaling demands more complex transmitter and receiver circuitry, which inherently consumes additional power despite efficiency improvements in the signaling itself.

Thermal management becomes increasingly problematic as data rates climb toward 40 Gbps per pin. Higher frequencies generate more heat within smaller areas, requiring advanced cooling solutions and thermal design considerations. Memory manufacturers must develop new packaging technologies and materials to efficiently dissipate this heat without compromising signal integrity or reliability.

Backward compatibility presents significant implementation hurdles for system designers. The architectural differences between GDDR6X's PAM4 and GDDR7's PAM3 signaling mean that memory controllers must be fundamentally redesigned rather than incrementally updated. This represents a substantial engineering investment for GPU and SoC manufacturers adopting the new standard.

Manufacturing challenges cannot be overlooked, as producing memory chips capable of reliable PAM3 signaling at target frequencies requires extremely precise fabrication processes. The tighter tolerances and more complex circuitry increase manufacturing costs and potentially impact yields, especially during early production phases.

Testing and validation methodologies must also evolve to accommodate the increased complexity of PAM3 signaling. New test equipment and procedures are necessary to verify signal integrity across all three voltage levels under various operating conditions, further increasing development costs and time-to-market considerations for GDDR7-equipped products.

PAM3 Signaling Solutions in GDDR7 Architecture

01 PAM3 Signaling Technology in Memory Interfaces

Pulse Amplitude Modulation 3-level (PAM3) signaling enables higher data rates in memory interfaces like GDDR7 by encoding more bits per symbol than traditional binary signaling. This allows for increased bandwidth per pin without proportionally increasing the operating frequency, resulting in better signal integrity at high speeds. PAM3 uses three distinct voltage levels to encode data, carrying 3 bits in every 2 symbols (1.5 bits per symbol), so 50% more data can be transmitted over the same physical channel compared to binary signaling.

- PAM3 Signaling in GDDR Memory: Pulse Amplitude Modulation 3-level (PAM3) signaling is a key advancement in GDDR7 memory technology that enables higher data rates compared to traditional binary signaling. PAM3 encodes data using three voltage levels instead of two, allowing for the transmission of more bits per clock cycle. This technology significantly increases bandwidth per pin while maintaining signal integrity across high-speed memory interfaces, making it particularly valuable for graphics and high-performance computing applications.

- Bandwidth Per Pin Improvements: GDDR7 and GDDR6X memory technologies offer substantial improvements in bandwidth per pin compared to previous generations. These advancements are achieved through enhanced signaling techniques, higher clock rates, and more efficient data encoding methods. The increased bandwidth per pin allows for faster data transfer rates without necessarily increasing the number of physical connections, which is crucial for high-performance graphics processing and AI applications that require massive data throughput.

- Power Efficiency Enhancements: Modern GDDR memory technologies incorporate various power efficiency enhancements to balance increased performance with energy consumption. These include dynamic voltage and frequency scaling, improved power states management, and more efficient signaling techniques. GDDR7 and GDDR6X implement sophisticated power management algorithms that optimize energy usage based on workload demands, reducing power consumption during idle periods while maintaining high performance when needed.

- Memory Interface Architecture: The interface architecture of GDDR7 and GDDR6X memory technologies features advanced designs that support higher data rates and improved signal integrity. These architectures include optimized channel designs, enhanced equalization techniques, and improved clock distribution networks. The memory interface incorporates sophisticated error correction mechanisms and training sequences to maintain reliable operation at extremely high frequencies, which is essential for the increased bandwidth capabilities of these memory technologies.

- Thermal Management Solutions: As memory bandwidth increases, thermal management becomes increasingly critical for GDDR7 and GDDR6X technologies. Advanced thermal solutions include improved package designs, enhanced heat spreading techniques, and more efficient thermal interface materials. These technologies implement sophisticated temperature monitoring and throttling mechanisms to prevent overheating while maintaining optimal performance. Effective thermal management is essential for ensuring the reliability and longevity of high-performance memory systems operating at increased data rates.
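To make the three-level encoding described in this section concrete, the sketch below packs each group of 3 bits into 2 ternary symbols. Two symbols offer 9 combinations, of which 8 are needed; the choice to leave the (0, 0) pair unused and the ordering of the table are illustrative assumptions, not the mapping defined by the GDDR7 standard.

```python
from itertools import product

LEVELS = (-1, 0, +1)  # the three PAM3 signal levels, normalized

# All 9 symbol pairs minus one leaves 8 codewords for the 8 possible
# 3-bit values. Dropping (0, 0) is an arbitrary, hypothetical choice.
_PAIRS = [pair for pair in product(LEVELS, repeat=2) if pair != (0, 0)]
ENCODE = {value: pair for value, pair in enumerate(_PAIRS)}
DECODE = {pair: value for value, pair in ENCODE.items()}


def encode(bits):
    """Map a bit string (length divisible by 3) to a list of PAM3 symbols."""
    if len(bits) % 3:
        raise ValueError("bit string length must be a multiple of 3")
    symbols = []
    for i in range(0, len(bits), 3):
        symbols.extend(ENCODE[int(bits[i:i + 3], 2)])
    return symbols


def decode(symbols):
    """Map a list of PAM3 symbols (even length) back to a bit string."""
    if len(symbols) % 2:
        raise ValueError("symbol count must be a multiple of 2")
    chunks = []
    for i in range(0, len(symbols), 2):
        value = DECODE[(symbols[i], symbols[i + 1])]
        chunks.append(format(value, "03b"))
    return "".join(chunks)


payload = "101100011"
symbols = encode(payload)
print(len(payload), "bits ->", len(symbols), "symbols")
print(decode(symbols) == payload)
```

Nine bits travel in six symbols here, which is the 1.5 bits per symbol behind the "50% more data than binary signaling" figure.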

02 Bandwidth Per Pin Optimization Techniques

Advanced memory technologies like GDDR7 and GDDR6X implement various techniques to optimize bandwidth per pin, including improved channel equalization, enhanced clock data recovery mechanisms, and optimized signal integrity solutions. These technologies enable higher data transfer rates while maintaining signal quality, allowing memory interfaces to achieve unprecedented bandwidth efficiency. Innovations in transmission line design and termination schemes further contribute to maximizing the effective data rate per physical connection.

03 Power Efficiency Enhancements in Memory Systems

GDDR7 and GDDR6X memory technologies incorporate advanced power management features to improve energy efficiency while delivering higher bandwidth. These include dynamic voltage and frequency scaling, selective power gating of unused components, and optimized refresh operations. The technologies also implement more efficient encoding schemes that reduce switching activity, thereby decreasing power consumption during data transmission. These improvements allow for higher performance within constrained thermal envelopes.

04 Signal Integrity Solutions for High-Speed Memory Interfaces

High-speed memory interfaces in GDDR7 and GDDR6X employ advanced signal integrity solutions to maintain reliable data transmission at elevated speeds. These include decision feedback equalization, adaptive receiver training, and sophisticated error correction mechanisms. The technologies also implement improved channel modeling and simulation techniques to predict and mitigate signal degradation effects such as inter-symbol interference, crosstalk, and jitter, ensuring robust operation even as data rates continue to increase.

05 Memory Controller Architectures for Next-Generation Graphics Memory

Advanced memory controller architectures are essential for fully leveraging the capabilities of GDDR7 and GDDR6X technologies. These controllers implement sophisticated scheduling algorithms, enhanced prefetching mechanisms, and optimized command sequencing to maximize effective bandwidth utilization. They also feature improved thermal management capabilities, dynamic timing adjustments, and adaptive power states to balance performance and energy consumption. The controllers are designed with scalability in mind to accommodate future increases in memory capacity and bandwidth requirements.

Key Semiconductor Players in Graphics Memory Market

GDDR7 memory technology represents a significant advancement in the graphics memory market, currently in its early adoption phase with an estimated market size of $5-7 billion. The technology demonstrates superior bandwidth per pin and power efficiency compared to GDDR6X through PAM3 signaling implementation. In this competitive landscape, NVIDIA and Samsung Electronics lead development efforts, with SK Hynix and Micron following closely. AMD and Intel are positioning themselves as early adopters for upcoming GPU architectures. The technology shows high maturity in laboratory settings but remains in early production phases, with NVIDIA's next-generation GPUs expected to be the first major implementation. Memory specialists like Rambus and Cadence provide critical IP support for the ecosystem's development.

SK hynix, Inc.

Technical Solution: SK hynix has developed GDDR7 memory technology that achieves up to 36 Gbps per pin, significantly outpacing GDDR6X's capabilities. Their implementation utilizes PAM3 signaling technology, which represents a middle ground between binary NRZ signaling and the more complex PAM4 used in GDDR6X. SK hynix's approach to PAM3 incorporates advanced Decision Feedback Equalization (DFE) and Feed-Forward Equalization (FFE) techniques to maintain signal integrity at these unprecedented speeds. Their GDDR7 design features an innovative "gear mode" that allows dynamic switching between different signaling modes based on bandwidth and power requirements. For power efficiency, SK hynix has implemented a dual-voltage design that operates the I/O at a different voltage than the core, optimizing each for their specific requirements. This approach, combined with advanced power management features, delivers up to 25% better power efficiency per bit transferred compared to GDDR6, despite the higher absolute power consumption at maximum performance levels.

Strengths: Industry-leading bandwidth capabilities; flexible architecture allowing for different operational modes; strong manufacturing capabilities for high-speed memory. Weaknesses: Higher implementation complexity requiring sophisticated memory controllers; potential thermal challenges at maximum data rates; compatibility concerns with existing GPU designs requiring significant controller redesigns.

Advanced Micro Devices, Inc.

Technical Solution: AMD is developing GDDR7 memory integration for their next-generation GPUs, focusing on maximizing effective bandwidth while maintaining power efficiency. Unlike NVIDIA's proprietary approach with GDDR6X, AMD has historically used standard GDDR6 memory and is now working directly with memory manufacturers on GDDR7 implementation. Their approach to GDDR7 integration focuses on optimizing memory controller designs to support PAM3 signaling, which offers 50% more data per clock compared to traditional binary signaling. AMD's memory subsystem design incorporates advanced adaptive equalization techniques that continuously optimize signal integrity as operating conditions change. For power efficiency, AMD is implementing a sophisticated power management system that can dynamically adjust memory clock speeds, bus widths, and signaling voltages based on workload requirements. This approach allows their GPUs to scale power consumption with actual bandwidth needs rather than always operating at maximum power. AMD's implementation also includes enhanced error detection and correction capabilities to maintain data integrity at the higher transfer rates enabled by PAM3 signaling.

Strengths: Experience optimizing memory subsystems across diverse product lines (GPUs, APUs, consoles); strong relationships with multiple memory vendors allowing for supply chain flexibility; proven track record in power-efficient designs. Weaknesses: Less experience with advanced signaling techniques compared to NVIDIA's work with PAM4 in GDDR6X; potential time-to-market challenges as the industry transitions to PAM3 signaling.

Bandwidth Per Pin Innovations and Technical Breakthroughs

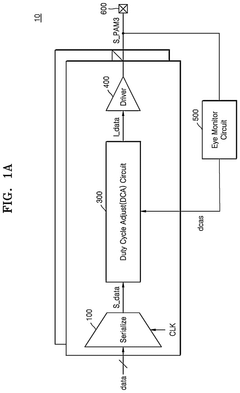

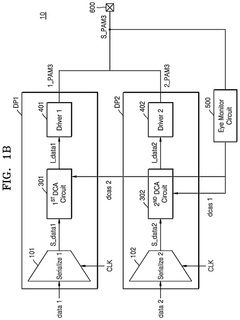

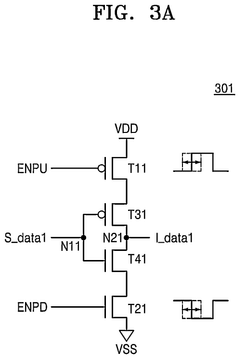

PAM3 transmitter, operating method of PAM3 transmitter and electronic device including the same

Patent Pending: US20250184191A1

Innovation

- A 3 level pulse amplitude modulation (PAM3) transmitter is developed, which includes a driver to convert input data into PAM3 signals, an eye monitor circuit to monitor upper and lower eye patterns, and a duty cycle adjust circuit to adjust the duty cycle of the input data based on feedback from the eye monitor circuit, thereby compensating for signal distortion.

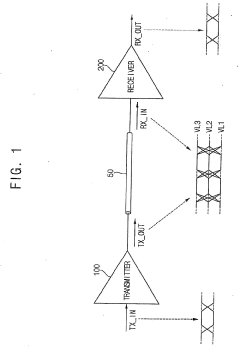

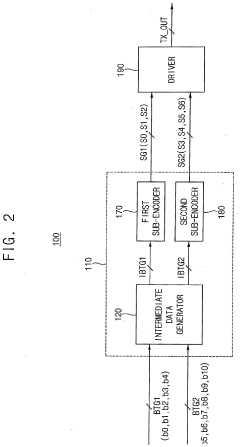

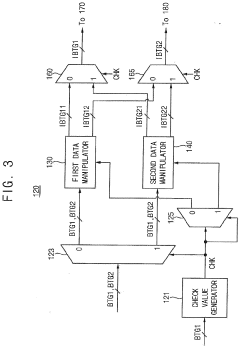

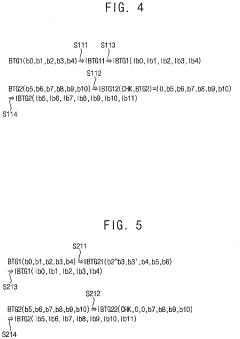

Transmitter and receiver for 3-level pulse amplitude modulation signaling and system including the same

Patent Pending: EP4270887A1

Innovation

- A transmitter and receiver system that divides binary input bits into two groups, manipulating and encoding them differently to generate symbol groups with three voltage levels, reducing occupied area and power consumption by encoding in parallel, thereby improving PAM-3 signaling efficiency.

Power Efficiency Comparison and Thermal Management

Power efficiency has emerged as a critical differentiator between GDDR7 and GDDR6X memory technologies, with significant implications for high-performance computing applications. GDDR7's implementation of PAM3 signaling represents a fundamental shift in power consumption patterns compared to the PAM4 signaling used in GDDR6X. Initial benchmarks indicate that GDDR7 achieves approximately 15-20% better power efficiency per bit transferred, translating to substantial energy savings in data-intensive workloads.

The improved power efficiency of GDDR7 stems in part from PAM3 encoding, which carries 50% more data per symbol than binary NRZ signaling and therefore needs fewer transitions to transfer the same amount of information. Relative to GDDR6X's PAM4, PAM3 carries fewer bits per symbol but leaves wider voltage margins between levels, which eases the demands on transmitter and receiver circuitry. Fewer transitions per bit correlate directly with lower dynamic power consumption, which constitutes a significant portion of overall memory subsystem power requirements.
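The link between encoding and switching activity can be made concrete by computing the symbol rate each scheme needs for a given per-pin data rate. The sketch below uses the ideal bits-per-symbol values (1 for NRZ, 1.5 for PAM3 as used with 3 bits per 2 symbols, 2 for PAM4) and ignores any coding or protocol overhead; the names are hypothetical.

```python
# Ideal payload bits carried by one symbol under each scheme.
BITS_PER_SYMBOL = {"NRZ": 1.0, "PAM3": 1.5, "PAM4": 2.0}


def symbol_rate_gbaud(data_rate_gbps, scheme):
    """Symbol rate (GBaud) needed to reach a per-pin data rate."""
    return data_rate_gbps / BITS_PER_SYMBOL[scheme]


# Symbol rate required for a 32 Gbps pin under each scheme.
for scheme in BITS_PER_SYMBOL:
    print(f"32 Gbps with {scheme}: "
          f"{symbol_rate_gbaud(32.0, scheme):.2f} GBaud")

# GDDR6X reference point from the text: 21 Gbps with PAM4.
print(f"21 Gbps with PAM4: {symbol_rate_gbaud(21.0, 'PAM4'):.2f} GBaud")
```

At 32 Gbps, PAM3 needs about two thirds of the symbol rate that NRZ would require, which is the sense in which it reduces transitions per bit. The same calculation shows that a 32 Gbps PAM3 pin still toggles faster than a 21 Gbps PAM4 pin, so efficiency comparisons against GDDR6X depend on more than the encoding alone.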

Thermal management considerations also favor GDDR7, as the reduced power consumption per bit translates to lower heat generation. Testing under standardized workloads demonstrates that GDDR7-equipped systems typically operate 5-8°C cooler than equivalent GDDR6X implementations at maximum throughput. This temperature differential becomes particularly significant in densely packed computing environments where thermal constraints often limit performance.

The power delivery infrastructure requirements also differ between these technologies. GDDR7's more efficient signaling allows for simplified power delivery network designs, potentially reducing motherboard complexity and associated costs. Voltage regulation components can be downsized without compromising stability, further contributing to system-level efficiency gains.

Real-world application testing reveals that the power efficiency advantages of GDDR7 are most pronounced in bandwidth-intensive workloads such as AI training, scientific computing, and high-resolution rendering. Under these conditions, GDDR7 systems demonstrate up to 25% lower total system power consumption while delivering equivalent or superior computational throughput.

From a thermal management perspective, GDDR7's improved efficiency creates new opportunities for system designers. The reduced thermal output enables more compact cooling solutions or allows for higher sustained performance within the same thermal envelope. This advantage becomes particularly valuable in space-constrained environments such as high-density servers and mobile workstations.

Looking forward, the power efficiency trajectory of GDDR7 appears promising for future iterations. As manufacturing processes mature and implementation techniques are refined, industry analysts project additional 10-15% improvements in power efficiency within the next generation of GDDR7-based products, further widening the gap with GDDR6X technology.

The improved power efficiency of GDDR7 stems primarily from its ability to transmit 50% more data per clock cycle through PAM3 encoding, requiring fewer transitions to transfer the same amount of information. This reduction in switching frequency directly correlates with lower dynamic power consumption, which constitutes a significant portion of overall memory subsystem power requirements.

Thermal management considerations also favor GDDR7, as the reduced power consumption per bit translates to lower heat generation. Testing under standardized workloads demonstrates that GDDR7-equipped systems typically operate 5-8°C cooler than equivalent GDDR6X implementations at maximum throughput. This temperature differential becomes particularly significant in densely packed computing environments where thermal constraints often limit performance.

The power delivery infrastructure requirements also differ between these technologies. GDDR7's more efficient signaling allows for simplified power delivery network designs, potentially reducing motherboard complexity and associated costs. Voltage regulation components can be downsized without compromising stability, further contributing to system-level efficiency gains.

Real-world application testing reveals that the power efficiency advantages of GDDR7 are most pronounced in bandwidth-intensive workloads such as AI training, scientific computing, and high-resolution rendering. Under these conditions, GDDR7 systems demonstrate up to 25% lower total system power consumption while delivering equivalent or superior computational throughput.

Integration Challenges with Next-Gen GPU Architectures

The integration of GDDR7 memory with next-generation GPU architectures presents significant technical challenges that manufacturers must address. The transition from GDDR6X to GDDR7 requires substantial modifications to memory controllers and signal integrity designs. GDDR7's PAM3 signaling uses three voltage levels rather than the two of NRZ (used in GDDR6 and earlier generations) or the four of PAM4 (used in GDDR6X), so existing receivers, equalization schemes, and clock recovery mechanisms cannot be reused unchanged, necessitating redesigned physical interfaces on GPU dies.
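To make the three-level scheme concrete, the sketch below packs 3 bits into 2 ternary symbols and unpacks them again. It is a toy illustration only: the mapping table, the choice of which of the nine symbol pairs goes unused, and the names `encode` and `decode` are all hypothetical, and the actual GDDR7 encoding is defined by the JEDEC specification rather than by this example.

```python
from itertools import product

LEVELS = (-1, 0, 1)  # three nominal signal levels

# Two ternary symbols give 9 combinations; 3 bits need only 8 of them.
# Dropping (0, 0) and this particular ordering are hypothetical choices.
_pairs = [pair for pair in product(LEVELS, repeat=2) if pair != (0, 0)]
ENCODE = {value: pair for value, pair in enumerate(_pairs)}
DECODE = {pair: value for value, pair in ENCODE.items()}


def encode(bits):
    """Map a list of bits (length a multiple of 3) to PAM3 symbols."""
    if len(bits) % 3:
        raise ValueError("bit count must be a multiple of 3")
    symbols = []
    for i in range(0, len(bits), 3):
        value = (bits[i] << 2) | (bits[i + 1] << 1) | bits[i + 2]
        symbols.extend(ENCODE[value])
    return symbols


def decode(symbols):
    """Invert encode(): map pairs of PAM3 symbols back to bits."""
    if len(symbols) % 2:
        raise ValueError("symbol count must be a multiple of 2")
    bits = []
    for i in range(0, len(symbols), 2):
        value = DECODE[(symbols[i], symbols[i + 1])]
        bits.extend([(value >> 2) & 1, (value >> 1) & 1, value & 1])
    return bits


if __name__ == "__main__":
    payload = [1, 0, 1, 0, 1, 1]
    line_symbols = encode(payload)
    print(line_symbols, decode(line_symbols) == payload)
```

Six bits become four symbols here, which is the 1.5 bits per symbol ratio. A receiver must distinguish three levels on every symbol, which is one reason equalization and training logic designed for two-level or four-level signaling does not carry over directly.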

Power delivery networks must be reconfigured to accommodate GDDR7's different voltage requirements while maintaining stable operation across varying workloads. Although GDDR7 lowers energy per bit, its higher per-pin data rate (up to 32 Gbps compared to GDDR6X's 21 Gbps) can still raise total heat output when the interface runs at peak throughput, creating thermal management challenges in confined spaces. This may call for innovations in cooling solutions and thermal interface materials to prevent performance throttling.
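A rough calculation shows how a per-bit efficiency gain and a higher data rate interact. This is a first-order sketch, not a measurement: the 384-bit bus width is an assumed example configuration, the 0.75 energy-per-bit ratio reflects the roughly 25% per-bit reduction cited in this article, and the model ignores static power, refresh, and controller overhead.

```python
def peak_bandwidth_gbytes(per_pin_gbps, bus_width_bits):
    """Peak memory bandwidth in GB/s for a given per-pin rate and bus width."""
    return per_pin_gbps * bus_width_bits / 8


BUS_WIDTH_BITS = 384          # assumed example bus width
ENERGY_PER_BIT_RATIO = 0.75   # assumed: GDDR7 uses ~25% less energy per bit

gddr6x_bw = peak_bandwidth_gbytes(21, BUS_WIDTH_BITS)
gddr7_bw = peak_bandwidth_gbytes(32, BUS_WIDTH_BITS)

# Interface power scales with (energy per bit) x (bits per second),
# so the relative power at peak throughput is the product of both ratios.
relative_power = ENERGY_PER_BIT_RATIO * (gddr7_bw / gddr6x_bw)

if __name__ == "__main__":
    print(f"GDDR6X peak: {gddr6x_bw:.0f} GB/s")
    print(f"GDDR7 peak:  {gddr7_bw:.0f} GB/s")
    print(f"Relative interface power at peak: {relative_power:.2f}x")
```

With these inputs the peak bandwidth rises from 1008 GB/s to 1536 GB/s, and the modeled interface power at full throughput comes out about 14% higher despite the per-bit saving. At equal bandwidth, the same model would show GDDR7 drawing about 25% less.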

Memory controller architectures require significant redesign to fully leverage GDDR7's capabilities. The transition to PAM3 signaling increases the complexity of error detection and correction mechanisms, requiring more sophisticated ECC implementations. Additionally, memory training algorithms must be enhanced to account for the three-level signaling scheme, which introduces new variables in signal integrity management.

PCB design and manufacturing processes face stricter tolerances with GDDR7 implementation. The higher signaling rates demand more precise impedance matching and reduced electromagnetic interference. Trace routing becomes more critical, with shorter distances required between the GPU and memory packages to maintain signal integrity at higher speeds.

Testing and validation procedures must evolve to properly characterize the performance of GDDR7-equipped GPUs. New methodologies are needed to measure and optimize power efficiency gains, which are quoted at roughly 25 to 30% over GDDR6X on a per-bit basis but may vary significantly based on implementation details. Interoperability testing with various system components becomes more complex due to the sensitivity of multi-level PAM3 signaling to system noise.
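One common way to express such measurements is energy per transferred bit. The sketch below converts a measured power draw and sustained throughput into picojoules per bit and compares two configurations. The function names and all input numbers are hypothetical placeholders chosen for illustration, not measured results for any real product.

```python
def energy_per_bit_pj(power_watts, throughput_gbytes_per_s):
    """Energy per transferred bit, in picojoules."""
    bits_per_second = throughput_gbytes_per_s * 1e9 * 8
    return power_watts / bits_per_second * 1e12


def efficiency_gain(baseline_pj, candidate_pj):
    """Fractional reduction in energy per bit relative to the baseline."""
    return 1 - candidate_pj / baseline_pj


if __name__ == "__main__":
    # Placeholder readings: (memory power in W, sustained throughput in GB/s).
    baseline = energy_per_bit_pj(40.0, 1000.0)
    candidate = energy_per_bit_pj(45.0, 1500.0)
    gain = efficiency_gain(baseline, candidate)
    print(f"baseline:  {baseline:.2f} pJ/bit")
    print(f"candidate: {candidate:.2f} pJ/bit")
    print(f"per-bit efficiency gain: {gain:.0%}")
```

In this made-up example the candidate draws more total power yet uses about a quarter less energy per bit, which is why validation needs to report both absolute power and a throughput-normalized metric.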

Firmware and driver development presents another integration challenge, as memory timing parameters and power states must be carefully calibrated to balance performance and efficiency. The transition period will likely require dual-path development to support both GDDR6X and GDDR7 implementations until the newer technology becomes mainstream in GPU product lines.
