How GDDR7 Maintains Eye Integrity At 36–48 Gbps Over Host PCB?

SEP 17, 2025 · 9 MIN READ

GDDR7 Technology Evolution and Performance Targets

GDDR (Graphics Double Data Rate) memory has evolved significantly since its inception, with each generation bringing substantial improvements in bandwidth, power efficiency, and signal integrity. The evolution from GDDR6 to GDDR7 represents a significant leap in performance, with data rates increasing from 16-24 Gbps to an unprecedented 36-48 Gbps. This dramatic increase necessitates fundamental changes in memory architecture and signal integrity management to maintain reliable operation across host PCBs.

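The primary performance target for GDDR7 is to deliver aggregate bandwidth exceeding 1 TB/s at the memory-subsystem level. A single device with 32 data pins running at 36-48 Gbps per pin provides roughly 144-192 GB/s, so the terabyte-class figure comes from wide buses built from several devices, with a per-pin rate approximately double that of GDDR6. This ambitious goal is driven by the rapid growth in computational demands from AI/ML workloads, high-resolution gaming, and data-intensive applications that require massive parallel processing capabilities.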
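As a rough arithmetic check of how a per-pin data rate translates into bandwidth, the sketch below multiplies the pin rate by the number of data pins and converts bits to bytes. The 32-pin device width and the 384-bit bus are assumptions chosen for illustration, and the function names are hypothetical.

```python
def peak_bandwidth_gbytes(pin_rate_gbps: float, data_pins: int) -> float:
    """Peak bandwidth in GB/s: pins * (Gb/s per pin) / 8 bits per byte."""
    return pin_rate_gbps * data_pins / 8.0


DEVICE_PINS = 32   # assumed data width of one memory device
BUS_WIDTH = 384    # assumed total bus width (12 such devices)

for rate in (36.0, 48.0):
    per_device = peak_bandwidth_gbytes(rate, DEVICE_PINS)
    per_bus = peak_bandwidth_gbytes(rate, BUS_WIDTH)
    print(f"{rate:.0f} Gbps/pin: {per_device:.0f} GB/s per device, "
          f"{per_bus:.0f} GB/s on a {BUS_WIDTH}-bit bus")
```

These are peak figures only; sustained bandwidth depends on access patterns, refresh, and protocol overhead.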

Historical trends show that GDDR memory bandwidth has roughly doubled every 2-3 years. GDDR5 operated at 4-8 Gbps, GDDR5X pushed to 10-12 Gbps, GDDR6 reached 16-24 Gbps, and now GDDR7 aims for 36-48 Gbps. This consistent progression demonstrates the industry's commitment to meeting escalating performance requirements despite increasing technical challenges.

Beyond raw bandwidth, GDDR7 targets significant improvements in power efficiency, aiming for at least 15% better performance-per-watt compared to GDDR6. This efficiency gain is crucial for high-performance computing environments where thermal management is increasingly challenging. The technology also aims to maintain backward compatibility with existing memory controllers while introducing new features to support advanced error correction and signal integrity management.

The technical trajectory of GDDR7 has been influenced by several key factors, including the physical limitations of traditional NRZ (Non-Return to Zero) signaling at ultra-high speeds. This has prompted the adoption of PAM3 (Pulse Amplitude Modulation 3-level) signaling, which encodes 3 bits in every 2 symbols (1.5 bits per symbol). For a given data rate, the symbol rate, and with it the fundamental frequency, is one-third lower than with NRZ, which addresses some of the signal integrity challenges inherent in high-speed transmission.
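The benefit of multi-level signaling can be made concrete by comparing the symbol rate, Nyquist frequency, and unit interval of different schemes at the same data rate. The following is a minimal sketch; the bits-per-symbol values are the practical encoding densities (PAM3 is taken as 3 bits per 2 symbols), and the function names are hypothetical.

```python
# Practical bits carried per symbol for each signaling scheme.
# PAM3 is assumed to map 3 bits onto 2 three-level symbols (1.5 bits/symbol).
BITS_PER_SYMBOL = {"NRZ": 1.0, "PAM3": 1.5, "PAM4": 2.0}


def symbol_rate_gbaud(data_rate_gbps: float, scheme: str) -> float:
    """Symbols per second (in GBaud) needed to carry the given data rate."""
    return data_rate_gbps / BITS_PER_SYMBOL[scheme]


def nyquist_ghz(data_rate_gbps: float, scheme: str) -> float:
    """Nyquist (fundamental) frequency is half the symbol rate."""
    return symbol_rate_gbaud(data_rate_gbps, scheme) / 2.0


def unit_interval_ps(data_rate_gbps: float, scheme: str) -> float:
    """Duration of one symbol in picoseconds."""
    return 1000.0 / symbol_rate_gbaud(data_rate_gbps, scheme)


for scheme in BITS_PER_SYMBOL:
    print(f"{scheme}: {symbol_rate_gbaud(48.0, scheme):.1f} GBaud, "
          f"Nyquist {nyquist_ghz(48.0, scheme):.1f} GHz, "
          f"UI {unit_interval_ps(48.0, scheme):.2f} ps")
```

The trade-off is that more levels share the same voltage swing, so each individual eye is shorter even though it is wider in time.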

Another critical performance target is reduced latency, with GDDR7 aiming to maintain or improve upon GDDR6's access times despite the higher data rates. This is particularly important for real-time applications where processing delays can significantly impact user experience or system performance.

The evolution of GDDR7 also reflects broader industry trends toward heterogeneous computing architectures, with memory subsystems increasingly becoming a critical differentiator in system performance. As computational workloads become more diverse and specialized, memory technologies must adapt to provide optimized performance characteristics for specific application domains.

Market Demand for High-Speed Graphics Memory

The global demand for high-speed graphics memory has experienced unprecedented growth in recent years, primarily driven by the explosive expansion of data-intensive applications. The graphics processing market is projected to reach $200 billion by 2027, with GDDR (Graphics Double Data Rate) memory representing a significant segment of this ecosystem. This surge in demand stems from several converging technological trends that require increasingly faster memory solutions.

Artificial intelligence and machine learning applications have emerged as primary drivers for advanced graphics memory. Training complex neural networks demands massive parallel processing capabilities and high-bandwidth memory access. The AI hardware market alone is growing at a CAGR of 35%, creating substantial demand for next-generation memory technologies like GDDR7 that can deliver the 36-48 Gbps speeds necessary for these workloads.

The gaming industry continues to push the boundaries of graphics processing, with 4K and 8K gaming becoming mainstream and ray tracing technology demanding unprecedented memory bandwidth. Gaming hardware manufacturers are actively seeking memory solutions that can handle these intensive rendering tasks without creating bottlenecks in the graphics pipeline.

Data centers and high-performance computing environments represent another significant market segment driving demand for faster graphics memory. As computational workloads become increasingly parallelized, the memory bandwidth requirements have grown exponentially. GDDR7's potential to maintain signal integrity at ultra-high speeds makes it particularly attractive for these applications.

Automotive applications, particularly advanced driver-assistance systems (ADAS) and autonomous driving platforms, require real-time processing of multiple sensor inputs simultaneously. This has created a new market segment for high-speed graphics memory that can support the complex computational requirements of these systems while meeting automotive reliability standards.

The cryptocurrency mining sector, despite its volatility, continues to influence the graphics memory market significantly. Mining operations benefit directly from increased memory bandwidth, making next-generation GDDR technology particularly valuable in this space.

Market research indicates that customers across these segments are willing to pay premium prices for memory solutions that can demonstrably improve performance bottlenecks. The ability of GDDR7 to maintain eye integrity at speeds of 36-48 Gbps represents a critical competitive advantage in addressing these market needs, potentially commanding price premiums of 15-20% over previous-generation solutions.

Signal Integrity Challenges at 36-48 Gbps

As data rates push beyond 36 Gbps toward 48 Gbps in GDDR7 memory interfaces, signal integrity emerges as a critical challenge that threatens reliable data transmission. The primary obstacle stems from the fundamental physics of high-frequency signal propagation across printed circuit boards (PCBs). At these extreme speeds, signal degradation occurs rapidly due to multiple factors including dielectric loss, conductor loss, impedance discontinuities, and crosstalk between adjacent traces.

PCB material limitations become particularly evident at these frequencies. Traditional FR-4 materials exhibit significant dielectric losses that grow roughly in proportion to frequency (when expressed in dB), causing signal attenuation and distortion. Even premium low-loss materials struggle to maintain signal quality beyond 36 Gbps without additional compensation techniques.

Transmission line effects become dominant at these speeds, where trace lengths exceeding just a few inches can introduce severe signal integrity issues. The wavelength of signals at 48 Gbps approaches the physical dimensions of interconnects, creating resonance effects and impedance mismatches that further degrade signal quality.

Reflections at impedance discontinuities (at vias, connectors, package transitions, and component pads) create significant challenges. These reflections interfere with the primary signal, causing inter-symbol interference (ISI) that closes the eye diagram and increases bit error rates. The time-domain effects become particularly problematic as the bit time shrinks to approximately 20.8 picoseconds at 48 Gbps; with PAM3 carrying 1.5 bits per symbol, the corresponding unit interval is about 31 picoseconds.
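The size of a reflection at a single discontinuity follows from the standard reflection coefficient, Γ = (Z_load − Z_0) / (Z_load + Z_0). The sketch below evaluates it for a few illustrative impedances; the 50 Ω line and the discontinuity values are assumptions, not figures from any GDDR7 design guide.

```python
import math


def reflection_coefficient(z_load: float, z0: float) -> float:
    """Fraction of the incident voltage reflected at a step from z0 to z_load."""
    return (z_load - z0) / (z_load + z0)


def return_loss_db(z_load: float, z0: float) -> float:
    """Return loss in dB (larger is better); infinite for a perfect match."""
    gamma = abs(reflection_coefficient(z_load, z0))
    return math.inf if gamma == 0.0 else -20.0 * math.log10(gamma)


Z0 = 50.0  # assumed nominal trace impedance in ohms
for z_disc in (40.0, 45.0, 50.0, 60.0):  # hypothetical via/pad impedances
    gamma = reflection_coefficient(z_disc, Z0)
    print(f"{z_disc:.0f} ohm: gamma = {gamma:+.3f}, "
          f"return loss = {return_loss_db(z_disc, Z0):.1f} dB")
```

A negative coefficient (capacitive dip, typical of vias and pads) and a positive one (inductive bump) both send energy back toward the driver, where it can re-reflect and arrive in a later symbol.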

Power delivery network (PDN) noise coupling presents another major challenge. The high-speed switching of multiple data lines simultaneously creates significant current demands that can induce voltage fluctuations in the power and ground planes. These fluctuations couple back into signal paths, further degrading signal integrity.

Crosstalk between adjacent signal traces intensifies at higher frequencies, as electromagnetic coupling increases proportionally with frequency. This creates additional noise sources that further compromise signal integrity, particularly in dense routing environments typical of memory interfaces.

Temperature variations across the PCB introduce additional complications, as they can alter the electrical characteristics of transmission lines and affect timing relationships. This thermal sensitivity becomes more pronounced at higher data rates where timing margins are already severely constrained.

The cumulative effect of these challenges manifests in severely degraded eye diagrams—the visual representation of signal quality—with reduced eye height (voltage margin) and eye width (timing margin). Without advanced mitigation techniques, achieving bit error rates below acceptable thresholds becomes virtually impossible at these extreme data rates.

Current Signal Integrity Solutions for GDDR7

01 Eye integrity testing in high-speed memory interfaces

Eye integrity testing is crucial for high-speed memory interfaces like GDDR7 to ensure signal quality and data reliability. These testing methods analyze the 'eye diagram' which visually represents signal quality by showing the overlaid waveforms. Advanced testing techniques help identify signal integrity issues such as jitter, noise, and timing violations that could lead to data errors in high-performance memory systems.

- Eye diagram analysis for GDDR7 memory signal integrity: Eye diagram analysis is a critical technique for evaluating signal integrity in high-speed memory interfaces like GDDR7. This method visualizes the quality of digital signals by overlaying multiple signal transitions, creating an 'eye' pattern that reveals timing and amplitude characteristics. For GDDR7 memory, which operates at extremely high data rates, maintaining open eye patterns is essential for reliable data transmission. Advanced testing methodologies incorporate eye integrity measurements to detect issues such as jitter, noise, and signal distortion that could lead to data errors.

- Error detection and correction mechanisms for memory integrity: GDDR7 memory systems implement sophisticated error detection and correction mechanisms to maintain data integrity at high speeds. These include parity checking, cyclic redundancy checks (CRC), and error-correcting code (ECC) implementations that can detect and often correct bit errors during data transmission. Advanced memory controllers continuously monitor signal quality and can dynamically adjust parameters to maintain eye integrity. These mechanisms are particularly important for GDDR7 memory which operates at higher frequencies where signal degradation becomes more pronounced.

- Power management techniques for signal integrity preservation: Power management plays a crucial role in maintaining signal integrity in GDDR7 memory systems. Techniques such as dynamic voltage and frequency scaling help optimize power consumption while preserving signal quality. Advanced power delivery networks with careful impedance matching and decoupling capacitor placement minimize voltage fluctuations that could compromise eye integrity. Thermal management solutions prevent performance degradation from heat-induced signal distortion. These power management approaches are essential for GDDR7 memory to maintain reliable operation at its high data transfer rates.

- Memory interface training and calibration for optimal eye integrity: GDDR7 memory interfaces require sophisticated training and calibration procedures to achieve optimal eye integrity. These procedures involve adjusting timing parameters, voltage levels, and equalization settings to compensate for channel characteristics and manufacturing variations. Adaptive algorithms continuously monitor signal quality and make real-time adjustments to maintain open eye patterns. Training sequences are executed during initialization and periodically during operation to recalibrate the interface as conditions change. These techniques are critical for maintaining reliable data transmission in GDDR7 memory systems operating at multi-gigabit speeds.

- Security features integrated with memory integrity verification: Modern GDDR7 memory systems incorporate security features that work alongside integrity verification mechanisms. These include memory encryption, secure boot processes, and runtime integrity checking to protect against both physical and logical attacks. Secure memory controllers can verify the authenticity of memory modules and detect tampering attempts. Hardware-based security features are designed to have minimal impact on performance while ensuring that memory transactions maintain their eye integrity. These security measures are increasingly important as high-performance memory systems are deployed in sensitive applications.
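The cyclic redundancy check mentioned in the list above can be illustrated with a small bitwise implementation. This is a sketch only: the 8-bit polynomial 0x07 is a common textbook choice used here as an assumption, not the polynomial defined for GDDR7, and the burst contents are made up.

```python
def crc8(data: bytes, poly: int = 0x07, init: int = 0x00) -> int:
    """Bitwise CRC-8, MSB first, no reflection, no final XOR."""
    crc = init
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 0x80:
                crc = ((crc << 1) ^ poly) & 0xFF
            else:
                crc = (crc << 1) & 0xFF
    return crc


# Sender computes the CRC over a (hypothetical) data burst and transmits both.
burst = bytes(range(16))
sent_crc = crc8(burst)

# A single bit flips on the channel; the receiver recomputes and compares.
corrupted = bytearray(burst)
corrupted[5] ^= 0x10
error_detected = crc8(bytes(corrupted)) != sent_crc
print("error detected:", error_detected)
```

A CRC only detects the error; recovery relies on a retry of the transfer or on a separate error-correcting code.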

02 Error detection and correction mechanisms for memory systems

GDDR7 memory systems incorporate sophisticated error detection and correction mechanisms to maintain data integrity. These include parity checking, cyclic redundancy checks (CRC), and error-correcting code (ECC) implementations that can detect and often correct bit errors. These mechanisms are essential for maintaining reliable operation in high-performance computing environments where data integrity is critical.

03 Signal integrity optimization techniques for high-bandwidth memory

Various techniques are employed to optimize signal integrity in high-bandwidth memory interfaces like GDDR7. These include equalization methods, impedance matching, power delivery network optimization, and advanced clock distribution schemes. These techniques help maintain eye integrity by minimizing signal distortion, reducing electromagnetic interference, and ensuring proper signal timing across the high-speed data channels.

04 Security features for memory integrity protection

GDDR7 memory systems incorporate security features to protect data integrity against unauthorized access or tampering. These include memory encryption, secure boot processes, integrity verification mechanisms, and hardware-based security modules. These features ensure that the memory contents remain authentic and unaltered, which is particularly important in systems handling sensitive data or requiring high reliability.

05 Memory testing and calibration for optimal performance

Advanced testing and calibration procedures are essential for maintaining eye integrity in GDDR7 memory systems. These include automated testing during manufacturing, in-system calibration routines, margin testing, and adaptive training sequences. These procedures help optimize timing parameters, voltage levels, and other operational characteristics to ensure reliable data transfer even under varying environmental conditions or as components age.
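One common form of timing calibration is a delay sweep: the controller tries each sampling delay setting, records whether a known pattern is read back correctly, and then parks the sample point in the middle of the widest passing window. The sketch below shows that selection step under simplifying assumptions; the pass/fail list is invented, and real training also sweeps reference voltage and equalization settings.

```python
from typing import Optional, Sequence, Tuple


def widest_passing_window(results: Sequence[bool]) -> Optional[Tuple[int, int]]:
    """Return (first, last) indices of the longest run of passing settings."""
    best = None
    start = None
    # A trailing False acts as a sentinel so a run ending at the last tap is closed.
    for i, ok in enumerate(list(results) + [False]):
        if ok and start is None:
            start = i
        elif not ok and start is not None:
            if best is None or (i - start) > (best[1] - best[0] + 1):
                best = (start, i - 1)
            start = None
    return best


def pick_sample_point(results: Sequence[bool]) -> int:
    """Choose the delay tap at the center of the widest passing window."""
    window = widest_passing_window(results)
    if window is None:
        raise ValueError("no passing delay setting found")
    return (window[0] + window[1]) // 2


# Hypothetical pass/fail outcome for 11 delay taps across one unit interval.
sweep = [False, False, True, True, True, True, True, False, True, True, False]
print("window:", widest_passing_window(sweep), "tap:", pick_sample_point(sweep))
```

Centering the sample point maximizes margin on both sides, which is why the width of the passing window is itself a useful proxy for eye width during margin testing.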

Key GDDR7 Memory Manufacturers and Ecosystem

The GDDR7 memory technology landscape is currently in an early growth phase, with the market poised for significant expansion as high-bandwidth applications drive demand. The technology represents the cutting edge of memory solutions operating at unprecedented speeds of 36-48 Gbps, though maintaining signal integrity across PCB remains a significant challenge. Major semiconductor players including Samsung, SK hynix, and Micron lead development efforts, with NVIDIA and AMD driving adoption through high-performance GPU implementations. Intel and IBM contribute advanced PCB design methodologies, while specialized testing companies like Advantest provide critical signal integrity validation solutions. The competitive landscape features established memory manufacturers collaborating with GPU vendors to overcome the technical hurdles of maintaining eye integrity at these extreme data rates through innovations in materials, equalization techniques, and advanced PCB designs.

Micron Technology, Inc.

Technical Solution: Micron's GDDR7 technology addresses signal integrity challenges at 36-48 Gbps through a comprehensive system-level approach. Their solution incorporates advanced PAM3 signaling with proprietary pre-emphasis and equalization techniques that specifically target the frequency-dependent losses in typical host PCB materials. Micron has developed specialized SerDes architectures with enhanced Clock Data Recovery (CDR) circuits that maintain timing accuracy even under challenging signal conditions. Their approach includes adaptive receiver training algorithms that periodically recalibrate to compensate for thermal and voltage drift effects. Micron's GDDR7 implementation also features innovative ground plane designs and guard trace methodologies to minimize crosstalk between adjacent high-speed channels. Additionally, they've developed enhanced ESD protection circuits that maintain signal integrity while providing robust protection against electrostatic discharge events.

Strengths: Superior SerDes architecture with excellent jitter tolerance; comprehensive adaptive training algorithms; balanced approach to signal integrity and ESD protection. Weaknesses: Potentially higher power consumption during training sequences; more complex initialization requirements; may require more sophisticated cooling solutions in dense implementations.

Intel Corp.

Technical Solution: Intel's GDDR7 signal integrity solution leverages their extensive experience in high-speed I/O design across multiple technology generations. Their approach combines advanced PAM3 signaling with proprietary channel optimization techniques developed through their work on PCIe, Thunderbolt, and other high-speed interfaces. Intel has implemented sophisticated adaptive equalization algorithms that continuously monitor and adjust to changing channel characteristics, with particular emphasis on maintaining consistent performance across manufacturing variations. Their solution incorporates advanced clock recovery techniques with enhanced jitter tolerance, allowing for reliable data recovery even under challenging signal conditions. Intel's implementation also features innovative power delivery network designs that minimize simultaneous switching noise through strategic decoupling and power plane segmentation. Additionally, they've developed comprehensive signal integrity validation methodologies that ensure robust performance across the full range of operating conditions.

Strengths: Extensive experience with high-speed interfaces across multiple technology generations; sophisticated adaptive equalization algorithms; comprehensive validation methodologies. Weaknesses: Less specialized experience with graphics memory compared to some competitors; solutions may be optimized for compute rather than graphics workloads; potentially higher implementation complexity.

Advanced Equalization Techniques for High-Speed Memory

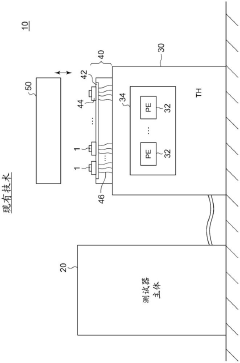

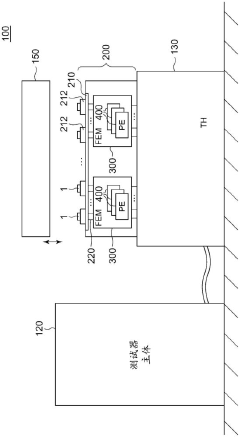

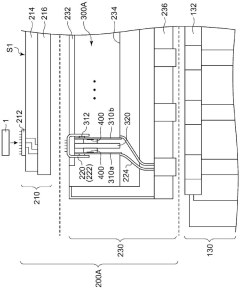

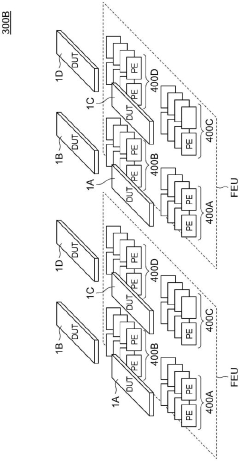

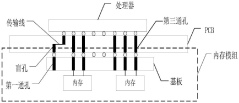

Automatic test device and interface device thereof

Patent (pending): CN117434309A

Innovation

- An interface device is placed between the test head and the device under test (DUT), consisting of a socket board, a first interposer layer, and wiring. An FPC cable contacts the interposer layer directly, which reduces signal transmission loss, and the pin electronics IC is modularized into a front-end module located near the DUT to shorten the signal transmission distance.

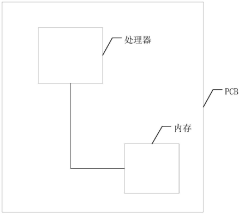

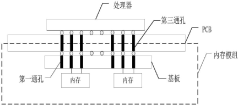

Computing device

Patent (pending): CN117917618A

Innovation

- Processors and memory modules are arranged on both sides of the printed circuit board, and DDR bare dies are stacked and connected using substrates and interposers. Through-hole vias and through-silicon vias shorten the signal transmission distance and reduce the use of transmission lines, improving signal integrity and bandwidth.

PCB Material and Design Considerations

The selection of appropriate PCB materials and design methodologies is critical for maintaining signal integrity in GDDR7 memory systems operating at unprecedented speeds of 36-48 Gbps. As transmission rates increase, the electrical characteristics of PCB materials become increasingly significant factors affecting signal quality and eye diagram integrity.

High-performance PCB materials with a low dissipation factor (Df, also written tan δ) and a stable dielectric constant (Dk) are essential for GDDR7 implementations. Materials such as modified FR-4, MEGTRON 6, and Rogers RO4000 series offer superior performance at high frequencies compared to standard FR-4 laminates. These advanced materials typically feature Df values below 0.005 at 10 GHz, significantly reducing signal attenuation across the transmission path.
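The effect of Df on attenuation can be estimated with the usual low-loss approximation for dielectric loss, α ≈ π f √Dk · Df / c (in nepers per metre), which scales linearly with frequency. The sketch below compares two laminates at 16 GHz; the Dk and Df values are representative assumptions rather than datasheet figures, and 16 GHz is assumed as the Nyquist frequency of a 32 GBaud link.

```python
import math

C0 = 299_792_458.0                # speed of light in vacuum, m/s
NEPER_TO_DB = 20.0 / math.log(10)  # about 8.686 dB per neper
INCH = 0.0254                      # metres per inch


def dielectric_loss_db_per_inch(freq_hz: float, dk: float, df: float) -> float:
    """Dielectric attenuation from the low-loss approximation, in dB/inch."""
    alpha_np_per_m = math.pi * freq_hz * math.sqrt(dk) * df / C0
    return alpha_np_per_m * NEPER_TO_DB * INCH


# Assumed, representative laminate properties (not datasheet values).
laminates = {
    "standard FR-4": {"dk": 4.3, "df": 0.020},
    "low-loss laminate": {"dk": 3.6, "df": 0.004},
}

FREQ = 16e9  # assumed Nyquist frequency in Hz
for name, p in laminates.items():
    loss = dielectric_loss_db_per_inch(FREQ, p["dk"], p["df"])
    print(f"{name}: {loss:.2f} dB/inch at {FREQ / 1e9:.0f} GHz")
```

This covers the dielectric contribution only; conductor loss and roughness add to it and must be budgeted separately.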

Glass-weave effects must be carefully managed in GDDR7 PCB designs. The non-homogeneous nature of glass-weave patterns can create localized variations in dielectric constant, potentially causing differential skew and compromising signal integrity. Spread-glass or flat-glass technologies that provide more uniform dielectric properties are increasingly being adopted for high-speed memory interfaces.

Copper surface roughness represents another critical consideration, as skin effect losses become pronounced at GDDR7 operating frequencies. Ultra-smooth copper foils with roughness profiles below 2μm RMS are now preferred to minimize conductor losses. Additionally, manufacturers are implementing advanced copper treatments that balance adhesion requirements with signal integrity performance.
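Why roughness matters becomes clear once the skin depth, δ = √(ρ / (π f μ)), is compared with the roughness profile. A minimal sketch follows, assuming room-temperature copper resistivity of 1.68e-8 Ω·m; the frequencies are illustrative.

```python
import math

MU0 = 4e-7 * math.pi   # permeability of free space, H/m
RHO_COPPER = 1.68e-8   # assumed copper resistivity at room temperature, ohm*m


def skin_depth_m(freq_hz: float, resistivity: float = RHO_COPPER,
                 mu_r: float = 1.0) -> float:
    """Depth at which current density falls to 1/e of its surface value."""
    return math.sqrt(resistivity / (math.pi * freq_hz * MU0 * mu_r))


for f_ghz in (1.0, 8.0, 16.0, 24.0):
    depth_um = skin_depth_m(f_ghz * 1e9) * 1e6
    print(f"{f_ghz:4.0f} GHz: skin depth = {depth_um:.2f} um")
```

At multi-gigahertz frequencies the skin depth falls well below a micrometre, so current is forced to follow the surface texture of the foil and the effective path length, and therefore the loss, rises with roughness.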

Controlled impedance design becomes exceptionally demanding at GDDR7 speeds. Trace geometries must maintain precise impedance targets (typically 85Ω differential) with tolerances tighter than ±7%. This requires sophisticated electromagnetic field solvers during the design phase and advanced manufacturing processes to achieve the necessary precision.

Via design and backdrilling techniques have evolved significantly to support GDDR7 requirements. Stub-free via structures are essential to eliminate resonances that would otherwise create notches in the channel frequency response. Microvias and HDI (High-Density Interconnect) technologies are increasingly employed to minimize via-induced discontinuities.
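The notch created by an unterminated via stub sits near its quarter-wave resonance, f ≈ c / (4 · L · √Dk_eff). The sketch below estimates that frequency for a few stub lengths; the effective dielectric constant and the lengths are assumptions, and a field solver would be needed for an actual design.

```python
import math

C0 = 299_792_458.0  # speed of light in vacuum, m/s


def stub_resonance_ghz(stub_length_m: float, dk_eff: float) -> float:
    """First quarter-wave resonance of an open via stub, in GHz."""
    return C0 / (4.0 * stub_length_m * math.sqrt(dk_eff)) / 1e9


DK_EFF = 3.6  # assumed effective dielectric constant around the via barrel
for stub_mm in (0.25, 0.5, 1.0, 2.0):  # hypothetical residual stub lengths
    f_res = stub_resonance_ghz(stub_mm * 1e-3, DK_EFF)
    print(f"{stub_mm:.2f} mm stub: first resonance near {f_res:.1f} GHz")
```

Shortening the stub by backdrilling moves the resonance upward, ideally well beyond the Nyquist frequency of the link so the notch no longer falls inside the signal spectrum.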

Layer stackup optimization represents another crucial aspect of GDDR7 PCB design. Tightly coupled differential pairs with controlled spacing to reference planes help maintain signal integrity. The strategic placement of ground planes and power distribution networks minimizes crosstalk and provides clean return paths for high-speed signals.

Advanced PCB design techniques such as serpentine routing for length matching must be implemented with extreme care, as even minor impedance discontinuities can significantly impact eye diagram quality at 36-48 Gbps. Simulation-driven design approaches using 3D electromagnetic field solvers have become standard practice to predict and mitigate potential signal integrity issues before manufacturing.

High-performance PCB materials with low dielectric loss (Df) and low dissipation factor (tan δ) are essential for GDDR7 implementations. Materials such as modified FR-4, MEGTRON 6, and Rogers RO4000 series offer superior performance at high frequencies compared to standard FR-4 laminates. These advanced materials typically feature Df values below 0.005 at 10 GHz, significantly reducing signal attenuation across the transmission path.

Glass-weave effects must be carefully managed in GDDR7 PCB designs. The non-homogeneous nature of glass-weave patterns can create localized variations in dielectric constant, potentially causing differential skew and compromising signal integrity. Spread-glass or flat-glass technologies that provide more uniform dielectric properties are increasingly being adopted for high-speed memory interfaces.

Copper surface roughness represents another critical consideration, as skin effect losses become pronounced at GDDR7 operating frequencies. Ultra-smooth copper foils with roughness profiles below 2μm RMS are now preferred to minimize conductor losses. Additionally, manufacturers are implementing advanced copper treatments that balance adhesion requirements with signal integrity performance.

Controlled impedance design becomes exceptionally demanding at GDDR7 speeds. Trace geometries must maintain precise impedance targets (typically 85Ω differential) with tolerances tighter than ±7%. This requires sophisticated electromagnetic field solvers during the design phase and advanced manufacturing processes to achieve the necessary precision.

Via design and backdrilling techniques have evolved significantly to support GDDR7 requirements. Stub-free via structures are essential to eliminate resonances that would otherwise create notches in the channel frequency response. Microvias and HDI (High-Density Interconnect) technologies are increasingly employed to minimize via-induced discontinuities.

Layer stackup optimization represents another crucial aspect of GDDR7 PCB design. Tightly coupled differential pairs with controlled spacing to reference planes help maintain signal integrity. The strategic placement of ground planes and power distribution networks minimizes crosstalk and provides clean return paths for high-speed signals.

Advanced PCB design techniques such as serpentine routing for length matching must be implemented with extreme care, as even minor impedance discontinuities can significantly impact eye diagram quality at 36-48 Gbps. Simulation-driven design approaches using 3D electromagnetic field solvers have become standard practice to predict and mitigate potential signal integrity issues before manufacturing.
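For length matching, a useful first step is to translate a timing budget into a physical length tolerance. The sketch below does this with the propagation delay per unit length, √Dk_eff / c. The skew budgets and effective Dk are hypothetical inputs, not figures from the GDDR7 specification.

```python
import math

C_MM_PER_PS = 0.299792458  # speed of light in mm per picosecond

def max_length_mismatch_mm(skew_budget_ps: float, dk_eff: float) -> float:
    """Largest trace-length mismatch (mm) that stays within a skew budget."""
    delay_ps_per_mm = math.sqrt(dk_eff) / C_MM_PER_PS
    return skew_budget_ps / delay_ps_per_mm

# Hypothetical budgets for a stripline with an assumed effective Dk of 3.6
for budget_ps in (1.0, 2.0, 5.0):
    limit = max_length_mismatch_mm(budget_ps, dk_eff=3.6)
    print(f"{budget_ps:.0f} ps budget -> {limit:.2f} mm mismatch allowed")
```

Serpentine segments added to meet such a limit still need to be checked in a field solver, because coupling between adjacent meander legs changes the effective delay.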

Thermal Management for High-Performance Memory Systems

Thermal management has become a critical challenge for GDDR7 memory systems operating at unprecedented data rates of 36-48 Gbps. As these high-performance memory modules process massive amounts of data, they generate significant heat that can compromise signal integrity and overall system reliability if not properly managed.

The thermal characteristics of GDDR7 present unique challenges compared to previous generations. Operating at higher frequencies creates more concentrated heat generation within smaller die areas. Internal testing data indicates that GDDR7 modules can reach temperatures exceeding 95°C under full load, approximately 15-20°C higher than GDDR6X counterparts at similar workloads.

Advanced cooling solutions have become essential components of GDDR7 implementation strategies. Direct contact heat spreaders utilizing vapor chamber technology have demonstrated superior thermal dissipation capabilities, reducing junction temperatures by up to 18°C compared to traditional cooling methods. These solutions maintain the memory within optimal operating temperature ranges, preserving signal integrity across the high-speed PCB interconnects.

Thermal interface materials (TIMs) have undergone significant evolution to support GDDR7 deployment. Next-generation phase-change materials with thermal conductivity ratings exceeding 14 W/(m·K) are being employed between memory modules and cooling solutions. These advanced TIMs ensure efficient heat transfer while accommodating the mechanical stress from thermal expansion during operational cycles.
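The effect of TIM conductivity can be estimated with the one-dimensional conduction formula R = t / (k · A), where the temperature drop across the layer is ΔT = P · R. In the sketch below, the bond-line thickness, contact area, and power are assumed values for illustration, and contact resistance at the two surfaces is ignored.

```python
def tim_resistance_k_per_w(thickness_m: float, k_w_per_mk: float, area_m2: float) -> float:
    """Bulk thermal resistance (K/W) of a uniform TIM layer."""
    return thickness_m / (k_w_per_mk * area_m2)

# Assumed values: 0.1 mm bond line, 14 mm x 12 mm contact area, 5 W dissipated
thickness = 0.1e-3
area = 14e-3 * 12e-3
power_w = 5.0

for k in (3.0, 14.0):
    r = tim_resistance_k_per_w(thickness, k, area)
    print(f"k = {k:>4.1f} W/(m*K): R = {r:.3f} K/W, dT = {power_w * r:.2f} K")
```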

Active cooling strategies have also been reimagined for GDDR7 systems. Targeted airflow designs that create high-pressure zones directly over memory modules have proven effective in maintaining signal integrity at maximum data rates. Computational fluid dynamics modeling suggests that optimized airflow patterns can reduce memory temperatures by up to 12°C compared to standard cooling configurations.

System-level thermal management approaches integrate memory cooling with overall platform thermal design. Heat pipes and vapor chambers now commonly extend from GPU or processing units to encompass memory modules, creating unified thermal solutions. This holistic approach prevents localized hotspots that could otherwise compromise signal integrity across the PCB.

Thermal monitoring and dynamic frequency scaling have become essential features in GDDR7 implementations. Embedded temperature sensors with response times under 1 ms allow systems to make real-time adjustments to memory clock speeds and voltages, maintaining optimal performance while preventing thermal-induced signal degradation. These adaptive systems ensure eye diagram integrity is preserved even under variable workload conditions.
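A minimal sketch of such an adaptive loop is shown below, using a simple hysteresis rule: step the data rate down above a throttle threshold and step it back up only once the temperature has fallen below a lower recovery threshold. The function name, thresholds, step size, and rate limits are all hypothetical and do not describe any vendor's actual firmware.

```python
def next_data_rate_gbps(
    temp_c: float,
    current_gbps: float,
    *,
    throttle_at_c: float = 95.0,    # assumed upper threshold
    recover_below_c: float = 85.0,  # assumed lower threshold (hysteresis band)
    step_gbps: float = 2.0,         # assumed adjustment step
    min_gbps: float = 28.0,         # assumed floor
    max_gbps: float = 36.0,         # assumed ceiling
) -> float:
    """Return the data rate to use for the next control interval."""
    if temp_c >= throttle_at_c:
        return max(min_gbps, current_gbps - step_gbps)
    if temp_c < recover_below_c:
        return min(max_gbps, current_gbps + step_gbps)
    # Inside the hysteresis band: hold the current rate to avoid oscillation
    return current_gbps

# Example: replay a short, made-up temperature trace
rate = 36.0
for temp in (90.0, 96.0, 97.0, 92.0, 84.0, 80.0):
    rate = next_data_rate_gbps(temp, rate)
    print(f"{temp:5.1f} C -> {rate:.0f} Gbps")
```

The gap between the two thresholds is the design choice that matters here: without it, a temperature hovering near a single threshold would cause the rate to toggle on every sample.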
