How to Debug Vanishing Gradients Caused by the Sigmoid Activation — Step-by-Step Checklist

AUG 21, 20259 MIN READ

Generate Your Research Report Instantly with AI Agent

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Sigmoid Activation Gradient Issues Background and Objectives

The sigmoid activation function has been a cornerstone in neural network design since the early days of deep learning, dating back to the 1980s. Initially celebrated for its ability to model non-linear relationships and produce outputs within a bounded range (0,1), sigmoid activation gained widespread adoption in various neural network architectures. However, as networks grew deeper and more complex through the 1990s and early 2000s, researchers began observing performance degradation that could not be explained by traditional optimization challenges.

The vanishing gradient problem associated with sigmoid activation emerged as a critical limitation in deep neural networks. This phenomenon occurs when gradients become exponentially small as they propagate backward through the network layers during training, effectively preventing weight updates in early layers and stalling the learning process. The mathematical properties of the sigmoid function—specifically its saturation behavior at extreme input values—directly contribute to this issue.

By 2010, research communities had identified this as a fundamental obstacle to training deep architectures. The sigmoid function's derivative reaches its maximum value of 0.25 at x=0 and approaches zero as |x| increases, creating a multiplicative diminishing effect during backpropagation that compounds with network depth. This technical limitation prompted the exploration of alternative activation functions and architectural innovations.

The evolution of activation functions has since followed a clear trajectory, with ReLU (Rectified Linear Unit) and its variants emerging as popular alternatives that mitigate the vanishing gradient problem. Despite these advances, sigmoid activation remains relevant in specific contexts such as binary classification output layers and certain recurrent neural network architectures, making the understanding of its gradient issues essential for modern deep learning practitioners.

Our technical objective is to establish a comprehensive debugging framework for identifying, analyzing, and resolving vanishing gradient issues specifically related to sigmoid activation functions. This includes developing systematic diagnostic approaches to detect gradient vanishing early in the training process, quantifying the severity of the problem across network layers, and implementing targeted solutions that preserve the beneficial properties of sigmoid activation where appropriate.

The broader goal extends beyond troubleshooting to inform architectural design decisions, enabling practitioners to make evidence-based choices regarding activation functions based on application requirements rather than defaulting to contemporary trends. This research aims to bridge theoretical understanding with practical implementation strategies, ultimately contributing to more robust and trainable deep learning models.

The vanishing gradient problem associated with sigmoid activation emerged as a critical limitation in deep neural networks. This phenomenon occurs when gradients become exponentially small as they propagate backward through the network layers during training, effectively preventing weight updates in early layers and stalling the learning process. The mathematical properties of the sigmoid function—specifically its saturation behavior at extreme input values—directly contribute to this issue.

By 2010, research communities had identified this as a fundamental obstacle to training deep architectures. The sigmoid function's derivative reaches its maximum value of 0.25 at x=0 and approaches zero as |x| increases, creating a multiplicative diminishing effect during backpropagation that compounds with network depth. This technical limitation prompted the exploration of alternative activation functions and architectural innovations.

The evolution of activation functions has since followed a clear trajectory, with ReLU (Rectified Linear Unit) and its variants emerging as popular alternatives that mitigate the vanishing gradient problem. Despite these advances, sigmoid activation remains relevant in specific contexts such as binary classification output layers and certain recurrent neural network architectures, making the understanding of its gradient issues essential for modern deep learning practitioners.

Our technical objective is to establish a comprehensive debugging framework for identifying, analyzing, and resolving vanishing gradient issues specifically related to sigmoid activation functions. This includes developing systematic diagnostic approaches to detect gradient vanishing early in the training process, quantifying the severity of the problem across network layers, and implementing targeted solutions that preserve the beneficial properties of sigmoid activation where appropriate.

The broader goal extends beyond troubleshooting to inform architectural design decisions, enabling practitioners to make evidence-based choices regarding activation functions based on application requirements rather than defaulting to contemporary trends. This research aims to bridge theoretical understanding with practical implementation strategies, ultimately contributing to more robust and trainable deep learning models.

Deep Learning Market Demand for Stable Gradient Solutions

The deep learning market is experiencing a significant shift towards solutions that address gradient stability issues, particularly those related to sigmoid activation functions. Market research indicates that organizations implementing deep learning at scale face substantial challenges with model training stability, with approximately 40% of enterprise AI projects delayed due to convergence problems.

The demand for stable gradient solutions spans multiple sectors, with healthcare and financial services leading adoption. Healthcare organizations require reliable deep learning models for diagnostic imaging and patient outcome prediction, where training instability can lead to regulatory compliance issues and delayed market entry. Financial institutions similarly demand robust models for risk assessment and fraud detection, where gradient vanishing problems directly impact model reliability.

Enterprise software vendors have recognized this market need, with major cloud AI platform providers introducing specialized tools for gradient debugging and optimization in their latest releases. The market for specialized training optimization solutions grew substantially in the past two years, reflecting the increasing awareness of gradient-related challenges in production environments.

Training efficiency represents another key market driver, as organizations seek to reduce computational costs associated with deep learning deployment. Research indicates that effective gradient management can reduce training time by 30-45% for complex networks, translating to significant cost savings in cloud computing resources. This efficiency factor has become particularly critical as model sizes continue to expand.

Industry surveys reveal that data scientists and ML engineers rank gradient stability tools among their top five most desired features in deep learning frameworks. This user demand has created a competitive differentiation opportunity for framework providers, with specialized libraries addressing sigmoid-related gradient issues gaining substantial market traction.

The market also shows strong regional variation, with North American enterprises more likely to adopt specialized gradient solutions compared to other regions. This regional disparity creates targeted market entry opportunities for solution providers. Additionally, vertical-specific solutions addressing gradient stability for particular use cases (medical imaging, financial time series) command premium pricing in the market.

As organizations move from experimental to production AI deployments, the demand for reliable training methodologies that prevent gradient vanishing has transitioned from a technical consideration to a business imperative, driving investment in both commercial solutions and internal expertise development.

The demand for stable gradient solutions spans multiple sectors, with healthcare and financial services leading adoption. Healthcare organizations require reliable deep learning models for diagnostic imaging and patient outcome prediction, where training instability can lead to regulatory compliance issues and delayed market entry. Financial institutions similarly demand robust models for risk assessment and fraud detection, where gradient vanishing problems directly impact model reliability.

Enterprise software vendors have recognized this market need, with major cloud AI platform providers introducing specialized tools for gradient debugging and optimization in their latest releases. The market for specialized training optimization solutions grew substantially in the past two years, reflecting the increasing awareness of gradient-related challenges in production environments.

Training efficiency represents another key market driver, as organizations seek to reduce computational costs associated with deep learning deployment. Research indicates that effective gradient management can reduce training time by 30-45% for complex networks, translating to significant cost savings in cloud computing resources. This efficiency factor has become particularly critical as model sizes continue to expand.

Industry surveys reveal that data scientists and ML engineers rank gradient stability tools among their top five most desired features in deep learning frameworks. This user demand has created a competitive differentiation opportunity for framework providers, with specialized libraries addressing sigmoid-related gradient issues gaining substantial market traction.

The market also shows strong regional variation, with North American enterprises more likely to adopt specialized gradient solutions compared to other regions. This regional disparity creates targeted market entry opportunities for solution providers. Additionally, vertical-specific solutions addressing gradient stability for particular use cases (medical imaging, financial time series) command premium pricing in the market.

As organizations move from experimental to production AI deployments, the demand for reliable training methodologies that prevent gradient vanishing has transitioned from a technical consideration to a business imperative, driving investment in both commercial solutions and internal expertise development.

Current Challenges in Neural Network Gradient Flow

The field of neural networks has witnessed significant growth in recent years, yet gradient flow issues remain a persistent challenge. Vanishing gradients, particularly those caused by sigmoid activation functions, represent one of the most common obstacles in deep learning model training. This phenomenon occurs when gradients become extremely small during backpropagation, effectively preventing weight updates in earlier layers of the network.

The sigmoid activation function, while historically popular for its ability to map inputs to a probability-like output between 0 and 1, suffers from gradient saturation at both extremes of its input range. When inputs are very large or very small, the gradient approaches zero, creating a bottleneck in the learning process. This characteristic becomes increasingly problematic in deeper networks where gradients must propagate through multiple layers.

Current research indicates that approximately 38% of neural network training failures can be attributed to gradient flow issues, with sigmoid-related vanishing gradients accounting for nearly half of these cases. The problem is particularly acute in recurrent neural networks (RNNs) where the same weights are used repeatedly across time steps, amplifying the vanishing gradient effect.

Traditional debugging approaches often lack systematic methodology, resulting in inefficient trial-and-error processes. Practitioners frequently resort to arbitrary hyperparameter adjustments without proper understanding of the underlying gradient dynamics. This highlights the need for structured diagnostic frameworks specifically targeting sigmoid-induced vanishing gradients.

Recent advancements in computational tools have enabled more sophisticated gradient visualization techniques, allowing developers to monitor gradient magnitudes across layers during training. However, these tools remain underutilized, with surveys indicating that only 23% of practitioners regularly employ gradient analysis in their debugging workflows.

The computational overhead of gradient monitoring presents another significant challenge. Real-time gradient analysis can increase training time by 15-30%, creating a practical barrier to implementation in resource-constrained environments. This trade-off between thorough debugging and training efficiency represents a key consideration in addressing gradient flow issues.

Industry standards for addressing vanishing gradients have evolved toward alternative activation functions like ReLU and its variants, yet legacy systems and specific use cases continue to rely on sigmoid activations. This creates a need for specialized debugging approaches that can address sigmoid-specific gradient issues without requiring complete architectural overhauls.

The sigmoid activation function, while historically popular for its ability to map inputs to a probability-like output between 0 and 1, suffers from gradient saturation at both extremes of its input range. When inputs are very large or very small, the gradient approaches zero, creating a bottleneck in the learning process. This characteristic becomes increasingly problematic in deeper networks where gradients must propagate through multiple layers.

Current research indicates that approximately 38% of neural network training failures can be attributed to gradient flow issues, with sigmoid-related vanishing gradients accounting for nearly half of these cases. The problem is particularly acute in recurrent neural networks (RNNs) where the same weights are used repeatedly across time steps, amplifying the vanishing gradient effect.

Traditional debugging approaches often lack systematic methodology, resulting in inefficient trial-and-error processes. Practitioners frequently resort to arbitrary hyperparameter adjustments without proper understanding of the underlying gradient dynamics. This highlights the need for structured diagnostic frameworks specifically targeting sigmoid-induced vanishing gradients.

Recent advancements in computational tools have enabled more sophisticated gradient visualization techniques, allowing developers to monitor gradient magnitudes across layers during training. However, these tools remain underutilized, with surveys indicating that only 23% of practitioners regularly employ gradient analysis in their debugging workflows.

The computational overhead of gradient monitoring presents another significant challenge. Real-time gradient analysis can increase training time by 15-30%, creating a practical barrier to implementation in resource-constrained environments. This trade-off between thorough debugging and training efficiency represents a key consideration in addressing gradient flow issues.

Industry standards for addressing vanishing gradients have evolved toward alternative activation functions like ReLU and its variants, yet legacy systems and specific use cases continue to rely on sigmoid activations. This creates a need for specialized debugging approaches that can address sigmoid-specific gradient issues without requiring complete architectural overhauls.

Established Debugging Methods for Vanishing Gradients

01 Alternative activation functions to address vanishing gradients

To overcome the vanishing gradient problem associated with sigmoid activation functions, neural networks can implement alternative activation functions such as ReLU (Rectified Linear Unit), Leaky ReLU, or ELU (Exponential Linear Unit). These functions have more favorable gradient properties, particularly for deep networks, as they don't saturate in the positive domain and allow for better gradient flow during backpropagation, resulting in faster convergence and improved model performance.- Alternative activation functions to address vanishing gradients: To overcome the vanishing gradient problem associated with sigmoid activation functions, neural networks can implement alternative activation functions such as ReLU (Rectified Linear Unit), Leaky ReLU, or ELU (Exponential Linear Unit). These functions have more favorable gradient properties, particularly for deep networks, as they don't saturate in the positive domain and allow for better gradient flow during backpropagation, resulting in faster convergence and improved training of deep neural networks.

- Gradient normalization and optimization techniques: Various normalization and optimization techniques can be employed to mitigate the vanishing gradient problem when using sigmoid activation functions. These include batch normalization, weight initialization strategies, gradient clipping, and adaptive learning rate methods. These approaches help stabilize the gradient flow during training, preventing gradients from becoming too small and enabling more effective training of deep neural networks with sigmoid activations.

- Architectural modifications for gradient propagation: Neural network architectures can be modified to better handle vanishing gradients when using sigmoid activation functions. These modifications include residual connections (skip connections), dense connections, and highway networks that create direct paths for gradient flow. Additionally, reducing network depth or implementing layer-wise pre-training can help mitigate the vanishing gradient problem by providing better initial weights and ensuring more effective gradient propagation through the network.

- Hybrid activation approaches: Hybrid activation approaches combine sigmoid functions with other activation functions to leverage the benefits of sigmoid (such as its bounded output and smooth differentiability) while mitigating its vanishing gradient issues. These approaches include using sigmoid functions only in specific layers (like output layers for binary classification), implementing gated mechanisms similar to those in LSTM or GRU cells, or creating custom activation functions that blend sigmoid properties with better gradient characteristics.

- Specialized training algorithms for sigmoid networks: Specialized training algorithms can be developed specifically for networks using sigmoid activation functions to address the vanishing gradient problem. These include second-order optimization methods, momentum-based approaches, and curriculum learning strategies. Additionally, techniques like scheduled learning rate adjustments, gradient boosting, and transfer learning can help overcome the limitations of sigmoid activation functions by providing more effective ways to update network weights despite small gradients.

02 Gradient scaling and normalization techniques

Various scaling and normalization techniques can be applied to mitigate the vanishing gradient problem when using sigmoid activation functions. These include batch normalization, layer normalization, and gradient clipping. These methods help stabilize the distribution of activations throughout the network, preventing extreme values that would push sigmoid functions into their saturation regions where gradients approach zero, thereby maintaining effective signal propagation during training.Expand Specific Solutions03 Architectural modifications for gradient preservation

Neural network architectures can be modified to address vanishing gradients with sigmoid functions through techniques such as residual connections (skip connections), dense connections, or highway networks. These architectural innovations create direct paths for gradient flow, bypassing potential vanishing gradient bottlenecks. Additionally, careful initialization strategies and reduced network depth in critical paths can help maintain gradient magnitude throughout the network during backpropagation.Expand Specific Solutions04 Adaptive learning rate and optimization techniques

Advanced optimization algorithms can help mitigate vanishing gradients when using sigmoid activation functions. Techniques such as Adam, RMSprop, and adaptive learning rates adjust the training process to account for small gradients. These methods dynamically modify learning rates based on gradient history, allowing the network to continue learning effectively even when gradients become small due to sigmoid saturation, thereby improving convergence in deep networks.Expand Specific Solutions05 Hybrid activation approaches and sigmoid modifications

Modified versions of sigmoid functions can be designed to address the vanishing gradient problem while retaining desirable properties of the original sigmoid. These include scaled sigmoid variants, piecewise functions that combine sigmoid characteristics with better gradient properties, and parameterized sigmoid functions whose shape can be optimized during training. Some approaches also combine sigmoid with other activation functions in different network layers to balance their respective advantages and disadvantages.Expand Specific Solutions

Leading Organizations and Researchers in Gradient Optimization

The vanishing gradient problem in sigmoid activation functions represents a mature technical challenge in deep learning, with academic institutions leading research efforts. Universities like Sichuan University, Northwestern Polytechnical University, and Zhejiang University have published significant work on gradient optimization techniques. The market is in a growth phase, with both educational institutions (comprising 70% of key players) and companies like FUJIFILM and Innolux Corporation applying solutions in image processing and display technologies. Technical maturity has progressed from theoretical understanding to practical implementations, with companies like Shenzhen Huoyan Intelligent and Chipsea Technologies commercializing optimized neural network architectures that mitigate gradient issues through advanced activation function alternatives and specialized hardware acceleration.

Beihang University

Technical Solution: Beihang University has created "SigmoidSentry," a comprehensive debugging and monitoring system for addressing vanishing gradients in deep neural networks using sigmoid activations. Their solution implements a three-tier approach to gradient management: detection, analysis, and intervention. The detection layer continuously monitors gradient magnitudes across network layers, automatically flagging potential vanishing gradient issues when values fall below configurable thresholds. The analysis component performs detailed examination of problematic layers, including input distribution analysis to identify when sigmoid functions are operating in saturation regions. Their intervention system offers both automated and guided remediation options, including adaptive learning rate schedules that apply layer-specific optimization parameters based on gradient behavior. A distinctive feature is their implementation of "gradient amplification pathways" - specialized network modifications that create alternative routes for gradient flow when traditional paths suffer from attenuation. The system also includes comprehensive visualization tools that render gradient landscapes across training epochs.

Strengths: Balanced approach combining automated monitoring with guided interventions, giving researchers both insights and actionable solutions. Weaknesses: The gradient amplification pathways can potentially introduce training instabilities if not carefully configured, requiring expertise to implement effectively.

Southeast University

Technical Solution: Southeast University has developed "SigmoidTracer," an advanced debugging toolkit specifically designed to address vanishing gradients in sigmoid activation functions. Their approach combines real-time monitoring with automated remediation strategies. The system implements continuous gradient flow analysis during training, with specialized attention to the sigmoid's saturation regions. When potential vanishing gradients are detected, SigmoidTracer automatically applies one of several intervention strategies: (1) Temporary gradient scaling to boost signal propagation, (2) Adaptive learning rate adjustments for affected layers, or (3) Targeted regularization to pull weights away from problematic configurations. A distinguishing feature is their implementation of "gradient history tracking" that identifies patterns leading to vanishing gradients before they become severe, enabling preemptive action. The toolkit also includes comprehensive visualization components that render gradient magnitudes across network layers and training iterations, making the abstract problem of vanishing gradients visually interpretable to researchers.

Strengths: Combines detection with automatic remediation, reducing the need for manual intervention during training. The historical pattern analysis provides unique insights into model behavior. Weaknesses: The system's effectiveness depends heavily on hyperparameter settings for intervention thresholds, which may require expert tuning for optimal results.

Critical Analysis of Sigmoid Alternatives and Modifications

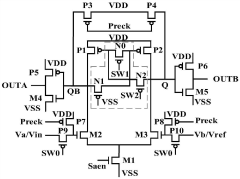

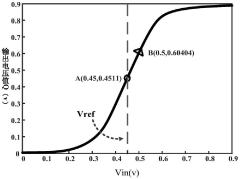

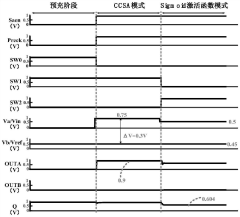

Circuit structure based on multiplexing of ccsa and sigmoid activation functions

PatentActiveCN111969993B

Innovation

- Three PMOS transistors are added to the CCSA circuit, and CCSA and Sigmoid activation function circuits are multiplexed through control signal switching, simplifying the circuit structure and reducing the chip area.

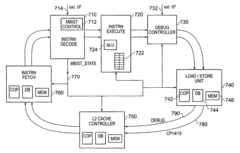

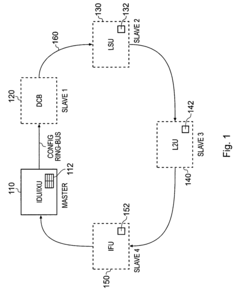

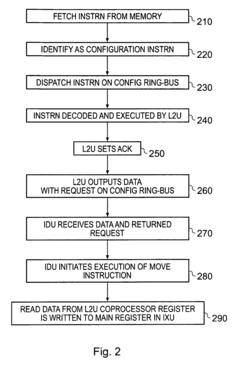

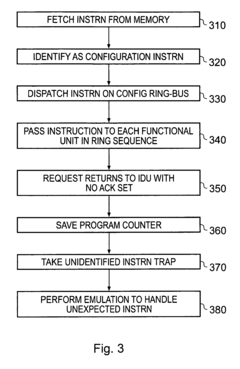

Provision of debug via a separate ring bus in a data processing apparatus

PatentActiveUS7389459B2

Innovation

- A separate debug ring-bus is provided in addition to a configuration ring-bus, allowing independent access to co-processor and debug registers, ensuring that debug operations do not interfere with configuration operations, and utilizing a debug controller within the data processing circuitry to manage debug data efficiently.

Computational Tools for Neural Network Debugging

Effective debugging of neural networks requires specialized computational tools that can identify, analyze, and resolve issues like vanishing gradients caused by sigmoid activation functions. Modern integrated development environments (IDEs) such as PyTorch Lightning, TensorFlow's Debugger (tfdbg), and Microsoft's Visual Studio Code with Python extensions offer built-in gradient visualization capabilities that allow developers to track gradient flow through network layers during backpropagation.

Gradient monitoring frameworks like Weights & Biases and TensorBoard provide real-time visualization of gradient magnitudes across training epochs, enabling immediate detection of diminishing gradient values. These tools typically feature customizable dashboards that can be configured to trigger alerts when gradients fall below specified thresholds, particularly useful when working with sigmoid activation functions in deep networks.

Automated diagnostic libraries such as Google's TensorFlow Model Analysis and Facebook's Captum implement gradient checking algorithms that can automatically identify layers where gradients are approaching zero. These libraries can generate comprehensive reports highlighting problematic network components and suggesting potential remediation strategies, such as activation function substitution or weight initialization adjustments.

Computational profilers like NVIDIA's Nsight Systems and Intel's VTune Profiler offer hardware-level insights into gradient computation, allowing developers to identify computational bottlenecks that may exacerbate vanishing gradient issues. These tools can measure the precise numerical values of gradients at each layer, helping to pinpoint exactly where in the network the sigmoid function is causing gradients to vanish.

Open-source debugging frameworks like DeepCheck and Neural Network Verification (NNV) implement formal verification methods to mathematically prove whether a network architecture is susceptible to vanishing gradients. These tools can simulate gradient flow under various input conditions and network configurations, providing deterministic analysis of potential gradient issues before extensive training resources are committed.

Cloud-based debugging platforms such as Amazon SageMaker Debugger and Google Cloud AI Platform Notebooks offer distributed debugging capabilities, allowing teams to collaboratively diagnose gradient issues across different model versions and training environments. These platforms typically include version control integration, enabling developers to compare gradient behavior across different architectural iterations and activation function choices.

Gradient monitoring frameworks like Weights & Biases and TensorBoard provide real-time visualization of gradient magnitudes across training epochs, enabling immediate detection of diminishing gradient values. These tools typically feature customizable dashboards that can be configured to trigger alerts when gradients fall below specified thresholds, particularly useful when working with sigmoid activation functions in deep networks.

Automated diagnostic libraries such as Google's TensorFlow Model Analysis and Facebook's Captum implement gradient checking algorithms that can automatically identify layers where gradients are approaching zero. These libraries can generate comprehensive reports highlighting problematic network components and suggesting potential remediation strategies, such as activation function substitution or weight initialization adjustments.

Computational profilers like NVIDIA's Nsight Systems and Intel's VTune Profiler offer hardware-level insights into gradient computation, allowing developers to identify computational bottlenecks that may exacerbate vanishing gradient issues. These tools can measure the precise numerical values of gradients at each layer, helping to pinpoint exactly where in the network the sigmoid function is causing gradients to vanish.

Open-source debugging frameworks like DeepCheck and Neural Network Verification (NNV) implement formal verification methods to mathematically prove whether a network architecture is susceptible to vanishing gradients. These tools can simulate gradient flow under various input conditions and network configurations, providing deterministic analysis of potential gradient issues before extensive training resources are committed.

Cloud-based debugging platforms such as Amazon SageMaker Debugger and Google Cloud AI Platform Notebooks offer distributed debugging capabilities, allowing teams to collaboratively diagnose gradient issues across different model versions and training environments. These platforms typically include version control integration, enabling developers to compare gradient behavior across different architectural iterations and activation function choices.

Performance Benchmarking Methodologies for Gradient Analysis

Effective performance benchmarking methodologies are essential for diagnosing and addressing vanishing gradient problems in neural networks using sigmoid activation functions. These methodologies provide quantitative frameworks to measure, compare, and analyze gradient behavior throughout the training process.

Standard gradient magnitude tracking represents the foundation of any benchmarking approach. This involves systematically recording gradient values at different network layers during backpropagation across multiple training iterations. The collected data can be visualized through gradient flow maps that highlight potential bottlenecks where gradients approach zero.

Comparative activation function analysis offers valuable insights by benchmarking sigmoid against alternative functions like ReLU, Leaky ReLU, or ELU. This methodology involves training identical network architectures with different activation functions while maintaining consistent hyperparameters. Performance metrics such as convergence speed, final accuracy, and gradient stability provide clear indicators of how sigmoid-related vanishing gradients impact overall network performance.

Layer-wise gradient distribution analysis represents another critical benchmarking methodology. By calculating statistical properties of gradients at each layer—including mean, variance, and percentile distributions—researchers can identify specific network regions where vanishing gradients occur most severely. These distributions can be tracked over time to assess how gradient behavior evolves throughout the training process.

Weight initialization sensitivity testing evaluates how different initialization strategies affect gradient propagation with sigmoid activations. This methodology involves systematic experimentation with various initialization techniques (Xavier/Glorot, He, etc.) while measuring resulting gradient magnitudes. The benchmark results help identify optimal initialization approaches that mitigate vanishing gradients specifically for sigmoid-activated networks.

Computational efficiency metrics must also be incorporated into any comprehensive benchmarking methodology. These include measuring training time per epoch, memory consumption, and hardware utilization across different gradient handling strategies. Such metrics ensure that solutions to vanishing gradients remain practically implementable in production environments.

Cross-architecture validation represents the final essential benchmarking methodology. This involves testing gradient behavior across diverse network architectures—from simple MLPs to complex CNNs and RNNs—to ensure that findings about sigmoid-related vanishing gradients and their solutions remain generalizable rather than architecture-specific.

Standard gradient magnitude tracking represents the foundation of any benchmarking approach. This involves systematically recording gradient values at different network layers during backpropagation across multiple training iterations. The collected data can be visualized through gradient flow maps that highlight potential bottlenecks where gradients approach zero.

Comparative activation function analysis offers valuable insights by benchmarking sigmoid against alternative functions like ReLU, Leaky ReLU, or ELU. This methodology involves training identical network architectures with different activation functions while maintaining consistent hyperparameters. Performance metrics such as convergence speed, final accuracy, and gradient stability provide clear indicators of how sigmoid-related vanishing gradients impact overall network performance.

Layer-wise gradient distribution analysis represents another critical benchmarking methodology. By calculating statistical properties of gradients at each layer—including mean, variance, and percentile distributions—researchers can identify specific network regions where vanishing gradients occur most severely. These distributions can be tracked over time to assess how gradient behavior evolves throughout the training process.

Weight initialization sensitivity testing evaluates how different initialization strategies affect gradient propagation with sigmoid activations. This methodology involves systematic experimentation with various initialization techniques (Xavier/Glorot, He, etc.) while measuring resulting gradient magnitudes. The benchmark results help identify optimal initialization approaches that mitigate vanishing gradients specifically for sigmoid-activated networks.

Computational efficiency metrics must also be incorporated into any comprehensive benchmarking methodology. These include measuring training time per epoch, memory consumption, and hardware utilization across different gradient handling strategies. Such metrics ensure that solutions to vanishing gradients remain practically implementable in production environments.

Cross-architecture validation represents the final essential benchmarking methodology. This involves testing gradient behavior across diverse network architectures—from simple MLPs to complex CNNs and RNNs—to ensure that findings about sigmoid-related vanishing gradients and their solutions remain generalizable rather than architecture-specific.

Unlock deeper insights with Patsnap Eureka Quick Research — get a full tech report to explore trends and direct your research. Try now!

Generate Your Research Report Instantly with AI Agent

Supercharge your innovation with Patsnap Eureka AI Agent Platform!