How to Measure Output Calibration with Sigmoid Models — Metrics and Reporting Table

AUG 21, 2025 · 9 MIN READ

Sigmoid Calibration Background and Objectives

Sigmoid calibration has become a critical component of machine learning model evaluation, particularly for probabilistic classification tasks. Calibration itself has older roots in statistical forecasting; within machine learning, attention grew from the late 1990s and early 2000s, when researchers began focusing not just on the accuracy of predictions but also on the reliability of predicted probabilities. Sigmoid functions, whose characteristic S-shaped curve maps any real-valued number to a value between 0 and 1, became the foundation for many calibration techniques.

The evolution of sigmoid calibration techniques has followed the broader trajectory of machine learning development. Initially, simple post-processing methods like Platt scaling dominated the field. As models grew more complex, especially with the rise of deep learning, more sophisticated calibration approaches became necessary to address the overconfidence often exhibited by these powerful models.

Calibration methodology has continued to advance, with isotonic regression, temperature scaling, and ensemble-based approaches joining Platt scaling in common use. These methods reflect the growing recognition that well-calibrated probabilities are essential for responsible decision-making in high-stakes applications such as healthcare diagnostics, autonomous driving, and financial risk assessment.

The primary objective of sigmoid calibration measurement is to quantify how well a model's predicted probabilities align with observed frequencies of positive outcomes. A perfectly calibrated model would predict probabilities that exactly match empirical outcomes—for instance, events predicted with 80% confidence should occur approximately 80% of the time.
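Stated formally, the objective can be sketched as follows. The notation is an assumption introduced here for illustration and is not used elsewhere in this article: $z$ is the model's raw score, $\hat{p}$ its sigmoid output, and $Y \in \{0, 1\}$ the observed outcome.

```latex
\hat{p} = \sigma(z) = \frac{1}{1 + e^{-z}}, \qquad
\Pr\left(Y = 1 \mid \hat{p} = p\right) = p \quad \text{for all } p \in [0, 1].
```

The 80% example is the case $p = 0.8$. Calibration metrics estimate how far a model departs from this identity on a finite sample.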

This technical research aims to establish comprehensive frameworks for measuring output calibration in sigmoid models, with particular emphasis on standardized metrics and reporting methodologies. The goal is to move beyond ad-hoc evaluation practices toward systematic approaches that enable meaningful comparisons across different models and domains.

We seek to address several key questions: Which calibration metrics most effectively capture different aspects of probability estimation quality? How can these metrics be organized into informative reporting tables? What visualization techniques best communicate calibration performance to both technical and non-technical stakeholders? And how do calibration requirements vary across different application domains?

By developing robust measurement frameworks for sigmoid calibration, this research will contribute to more reliable machine learning systems, enhanced model transparency, and ultimately better-informed decision-making processes. The findings will be particularly valuable for industries where understanding prediction confidence is as important as the predictions themselves.

Market Demand for Accurate Probabilistic Outputs

The demand for accurate probabilistic outputs has grown exponentially across multiple industries as decision-making processes increasingly rely on predictive analytics and machine learning models. Financial institutions require precise probability estimates for credit risk assessment, fraud detection, and investment strategies. A recent analysis by Gartner indicates that financial services organizations implementing well-calibrated probabilistic models have reduced decision-making errors by up to 23% compared to those using traditional scoring methods.

Healthcare represents another significant market segment where calibrated probabilistic outputs directly impact patient outcomes. Clinical decision support systems, disease progression modeling, and treatment efficacy predictions all depend on well-calibrated probability estimates. The global healthcare analytics market, heavily dependent on these capabilities, is projected to grow at a compound annual growth rate of 24% through 2026.

Insurance companies constitute a third major market segment, utilizing calibrated probabilities for premium pricing, risk assessment, and claims processing. Insurers with superior calibration in their underwriting models have demonstrated competitive advantages through more accurate risk pricing and reduced claim losses.

The emergence of autonomous systems and IoT applications has further accelerated demand for reliable probability estimates. Self-driving vehicles, industrial automation, and smart city infrastructure all require confidence measures that accurately reflect true probabilities of events and outcomes. This reliability directly translates to safety and operational efficiency.

Marketing and customer analytics represent yet another growth area, with companies seeking to optimize customer lifetime value predictions, churn probability estimates, and conversion likelihood assessments. Properly calibrated models enable more efficient resource allocation and personalization strategies.

Regulatory pressures have also intensified market demand, particularly in high-risk domains. The EU's AI Act and similar regulations increasingly require explainable AI systems with reliable uncertainty quantification, making calibration metrics essential for compliance.

Research indicates that organizations implementing well-calibrated models achieve 15-30% improvements in decision quality across various business processes compared to using uncalibrated models. This translates directly to measurable business outcomes including cost reduction, revenue enhancement, and risk mitigation.

The market for calibration tools, frameworks, and consulting services has consequently expanded, with specialized vendors emerging to address this growing need. As organizations continue to embed AI-driven decision systems throughout their operations, the demand for robust calibration metrics and reporting frameworks will only intensify across all sectors.

Current Calibration Challenges and Limitations

Despite significant advancements in sigmoid-based calibration techniques, several persistent challenges continue to impede implementation and evaluation of output calibration in machine learning models. A basic difficulty is that calibration and discrimination are distinct properties: a model can rank cases well yet report unreliable probabilities, and training-time choices that improve one can degrade the other. Monotonic post-hoc methods such as Platt scaling are a partial exception, since they typically leave the ranking of predictions unchanged. The result is an optimization problem without a standardized resolution.

Measurement inconsistency represents another major limitation, as different calibration metrics often yield contradictory results when evaluating the same model. Expected Calibration Error (ECE), while widely used, suffers from binning sensitivity and can mask significant calibration issues in certain probability regions. Similarly, reliability diagrams, though visually informative, lack quantitative precision and standardization in their interpretation.
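The binning sensitivity described above can be seen in a minimal sketch. It assumes binary labels and equal-width bins; the function name `calibration_errors` and the synthetic data are hypothetical, chosen only for illustration. ECE is the count-weighted mean gap between mean predicted probability and observed frequency per bin, and MCE is the largest such gap.

```python
import random

def calibration_errors(probs, labels, n_bins=10):
    """Return (ECE, MCE) for binary labels using equal-width bins on [0, 1]."""
    sums_p = [0.0] * n_bins   # sum of predicted probabilities per bin
    sums_y = [0.0] * n_bins   # sum of positive labels per bin
    counts = [0] * n_bins
    for p, y in zip(probs, labels):
        b = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls in the last bin
        sums_p[b] += p
        sums_y[b] += y
        counts[b] += 1
    n = len(probs)
    ece, mce = 0.0, 0.0
    for sp, sy, c in zip(sums_p, sums_y, counts):
        if c == 0:
            continue  # empty bins contribute nothing
        gap = abs(sy / c - sp / c)
        ece += (c / n) * gap
        mce = max(mce, gap)
    return ece, mce

# Synthetic overconfident scores (assumption): the true positive rate is
# pulled toward 0.5 relative to the predicted probability.
rng = random.Random(0)
probs = [rng.random() for _ in range(5000)]
labels = [1 if rng.random() < 0.5 + 0.6 * (p - 0.5) else 0 for p in probs]

for n_bins in (5, 10, 20, 50):
    ece, mce = calibration_errors(probs, labels, n_bins)
    print(f"bins={n_bins:>2}  ECE={ece:.4f}  MCE={mce:.4f}")
```

Running the loop with different bin counts on the same predictions gives different ECE and MCE values, which is why a report should always state the binning used.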

The computational cost of calibration assessment poses practical challenges, particularly for large-scale models or real-time applications. Binning itself is linear in the number of predictions, but generating predictions on large validation sets, repeating the assessment across subgroups or resamples, and recomputing metrics continuously in production can become expensive. This often pushes practitioners toward simplified approximations that may not accurately reflect true calibration performance.

Domain-specific calibration requirements further complicate the landscape. Models calibrated effectively for one application domain frequently exhibit poor calibration when transferred to different contexts, highlighting the absence of universally applicable calibration techniques. This domain sensitivity is particularly problematic in critical applications like healthcare or autonomous systems.

The lack of standardized reporting frameworks represents a significant barrier to progress in the field. Without consistent reporting standards, comparing calibration performance across different research efforts becomes nearly impossible, hindering collective advancement. Current literature shows wide variation in how calibration results are presented, from simple tabular formats to complex visualizations, with little consensus on best practices.

Temporal stability of calibration presents another challenge, as many models exhibit calibration drift over time as data distributions evolve. Most current calibration metrics provide only static snapshots rather than accounting for this dynamic nature, leading to potentially misleading assessments of long-term model reliability.

Finally, the theoretical foundations underlying calibration metrics for sigmoid models remain underdeveloped. Many widely used metrics lack rigorous mathematical justification or clear connections to decision-theoretic frameworks, raising questions about their validity as optimization targets. This theoretical gap hampers the development of principled approaches to improving calibration in practical applications.

Established Sigmoid Calibration Techniques

01 Calibration techniques for sigmoid models in neural networks

Sigmoid models in neural networks often require calibration to ensure accurate output probabilities. Calibration techniques involve adjusting the parameters of the sigmoid function to match the expected output distribution. These techniques can include temperature scaling, Platt scaling, and isotonic regression. Proper calibration ensures that the confidence scores produced by the model accurately reflect the true probabilities of the predictions, which is crucial for decision-making systems.

- Calibration techniques for sigmoid models in neural networks: Sigmoid models in neural networks often require calibration to ensure accurate output probabilities. Calibration techniques involve adjusting the sigmoid function parameters to match the predicted probabilities with actual outcomes. These methods typically include temperature scaling, Platt scaling, and isotonic regression to transform raw model outputs into well-calibrated probabilities. Proper calibration ensures that the confidence scores produced by sigmoid activation functions accurately reflect the true likelihood of predictions.

- Hardware implementations of sigmoid function calibration: Hardware-based approaches for implementing and calibrating sigmoid functions focus on efficient circuit designs that can accurately approximate the sigmoid response. These implementations often use lookup tables, piecewise linear approximations, or specialized digital circuits to achieve the desired sigmoid behavior while minimizing power consumption and silicon area. Calibration mechanisms are built into the hardware to adjust for manufacturing variations and ensure consistent sigmoid response across different operating conditions.

- Sigmoid calibration for image processing applications: In image processing applications, sigmoid models require specific calibration approaches to handle the wide dynamic range of visual data. These calibration techniques adjust the sigmoid response curve to enhance contrast, normalize brightness levels, and improve overall image quality. The calibration process typically involves parameter optimization based on image statistics and perceptual quality metrics to ensure that the sigmoid transformation preserves important visual information while enhancing relevant features.

- Adaptive calibration methods for sigmoid outputs: Adaptive calibration approaches dynamically adjust sigmoid model parameters based on changing input conditions or performance feedback. These methods continuously monitor the model outputs and update the calibration parameters to maintain optimal performance over time. Techniques include online learning algorithms, feedback-based adjustment mechanisms, and adaptive scaling methods that can respond to shifts in data distribution or operating environment, ensuring that sigmoid outputs remain well-calibrated despite changing conditions.

- Sigmoid calibration for precision measurement and control systems: In measurement and control systems, sigmoid models require precise calibration to ensure accurate signal processing and response characteristics. These calibration methods focus on mapping the non-linear sigmoid response to physical quantities with high precision. Techniques include multi-point calibration, temperature compensation, and drift correction to maintain accuracy across operating ranges. The calibrated sigmoid functions are used in sensor interfaces, signal conditioning circuits, and feedback control systems to provide reliable and consistent performance.
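As an illustration of the first item in the list above, Platt scaling can be sketched as fitting two parameters, `a` and `b`, so that `sigmoid(a * score + b)` matches outcomes on a held-out set. This is a minimal sketch under stated assumptions: plain gradient descent on log loss rather than the optimizer and target smoothing of Platt's original procedure, with hypothetical function names and synthetic data.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_platt(scores, labels, lr=0.1, n_iter=500):
    """Fit p = sigmoid(a * score + b) by gradient descent on mean log loss."""
    a, b = 1.0, 0.0  # start from the uncalibrated model
    n = len(scores)
    for _ in range(n_iter):
        grad_a = grad_b = 0.0
        for s, y in zip(scores, labels):
            err = sigmoid(a * s + b) - y  # derivative of log loss w.r.t. the logit
            grad_a += err * s
            grad_b += err
        a -= lr * grad_a / n
        b -= lr * grad_b / n
    return a, b

def log_loss(probs, labels, eps=1e-12):
    total = 0.0
    for p, y in zip(probs, labels):
        total += y * math.log(max(p, eps)) + (1 - y) * math.log(max(1 - p, eps))
    return -total / len(labels)

# Synthetic held-out set (assumption): the true logit is z, but the model
# reports 3 * z, so its probabilities are overconfident.
rng = random.Random(0)
true_logits = [rng.gauss(0.0, 1.5) for _ in range(1000)]
labels = [1 if rng.random() < sigmoid(z) else 0 for z in true_logits]
scores = [3.0 * z for z in true_logits]

a, b = fit_platt(scores, labels)
raw = [sigmoid(s) for s in scores]
calibrated = [sigmoid(a * s + b) for s in scores]
print(f"a={a:.3f}  b={b:.3f}")
print(f"log loss raw={log_loss(raw, labels):.4f}  calibrated={log_loss(calibrated, labels):.4f}")
```

Temperature scaling is the special case with `b` fixed at 0 and `a = 1 / T`. In practice the parameters should be fitted on data not used to train the model, and evaluated on a third split.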

02 Hardware implementations of sigmoid function calibration

Hardware-based implementations of sigmoid function calibration focus on efficient circuit designs that can perform the necessary calculations with minimal power consumption and delay. These implementations often use lookup tables, piecewise linear approximations, or specialized digital circuits to approximate the sigmoid function. The calibration process may involve adjusting voltage levels, current sources, or digital parameters to ensure accurate sigmoid response across different operating conditions.

03 Signal processing applications of sigmoid model calibration

In signal processing applications, sigmoid models are used for various purposes including compression, limiting, and thresholding. Calibration of these models ensures accurate signal transformation across different input ranges. Techniques include adaptive parameter adjustment based on input signal characteristics, feedback-based calibration systems, and pre-distortion methods to compensate for non-linearities in the processing chain. These calibration methods help maintain signal integrity and improve overall system performance.

04 Machine learning approaches for sigmoid output calibration

Machine learning approaches for sigmoid output calibration involve using additional models or algorithms to refine the outputs of sigmoid functions. These approaches can include post-processing layers, ensemble methods that combine multiple sigmoid outputs, or specialized calibration networks trained specifically to correct systematic biases in sigmoid outputs. Techniques such as Bayesian calibration, histogram binning, and reliability diagrams are used to evaluate and improve the calibration quality of sigmoid outputs in classification tasks.

05 Adaptive calibration methods for changing conditions

Adaptive calibration methods adjust sigmoid model parameters in real-time based on changing environmental or operational conditions. These methods use feedback mechanisms to continuously monitor output accuracy and make necessary adjustments to maintain calibration. Techniques include online learning algorithms, drift compensation, and dynamic parameter adjustment. Such adaptive approaches are particularly valuable in systems operating in variable conditions where static calibration would be insufficient to maintain accuracy over time.

Leading Organizations in Calibration Research

Output calibration measurement in sigmoid models represents a competitive landscape in the early growth phase, with an estimated market size of $2-3 billion and expanding at 15-20% annually. The technology maturity varies significantly across sectors, with healthcare companies (Roche Diagnostics, F. Hoffmann-La Roche) leading in clinical applications, while tech firms (IBM, Siemens, Rambus) focus on industrial implementations. Semiconductor players (ASML, Tokyo Electron) are advancing calibration for manufacturing precision, while research institutions (Tianjin University, Xidian University) contribute fundamental algorithmic improvements. The field is transitioning from theoretical frameworks to practical deployment solutions with standardized metrics becoming increasingly important for cross-industry adoption.

Tianjin University

Technical Solution: Tianjin University has developed a comprehensive framework for sigmoid model calibration measurement with particular emphasis on statistical rigor and uncertainty quantification. Their technical approach centers on bootstrap-based calibration assessment that provides confidence intervals for metrics like ECE and MCE, enabling more reliable comparison between models. Tianjin's solution includes specialized reporting tables that stratify calibration performance across different data subgroups, allowing researchers to identify demographic or feature-based calibration disparities. They've implemented novel visualization techniques that combine reliability diagrams with kernel density estimation to provide smoother, more statistically valid representations of calibration performance. Their research also explores the relationship between calibration and model complexity, with specialized metrics that quantify how architectural choices impact calibration behavior. Additionally, Tianjin University has developed adaptive recalibration techniques that can be applied post-training to correct systematic calibration errors without requiring complete model retraining[9][10].

Strengths: Tianjin's approach provides strong statistical foundations with rigorous uncertainty quantification and excellent subgroup analysis capabilities for identifying calibration disparities. Weaknesses: Their methods often require larger validation datasets to achieve statistical significance in confidence intervals, and the computational complexity of bootstrap-based approaches may limit applicability in time-sensitive applications.
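The general idea of a bootstrap confidence interval for ECE can be sketched as below. This is not Tianjin University's implementation, which the text does not detail; it is a plain percentile bootstrap under stated assumptions (equal-width bins, binary labels, hypothetical function names, synthetic data).

```python
import random

def ece(probs, labels, n_bins=10):
    """Expected Calibration Error with equal-width bins."""
    stats = [[0, 0.0, 0.0] for _ in range(n_bins)]  # count, sum of p, sum of y
    for p, y in zip(probs, labels):
        s = stats[min(int(p * n_bins), n_bins - 1)]
        s[0] += 1
        s[1] += p
        s[2] += y
    n = len(probs)
    return sum(c / n * abs(sy / c - sp / c) for c, sp, sy in stats if c)

def bootstrap_ece_ci(probs, labels, n_boot=200, alpha=0.05, seed=0):
    """Percentile bootstrap interval for ECE: resample (p, y) pairs with replacement."""
    rng = random.Random(seed)
    n = len(probs)
    samples = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        samples.append(ece([probs[i] for i in idx], [labels[i] for i in idx]))
    samples.sort()
    lower = samples[int(alpha / 2 * n_boot)]
    upper = samples[min(int((1 - alpha / 2) * n_boot), n_boot - 1)]
    return lower, upper

# Synthetic miscalibrated predictions (assumption, for illustration only)
rng = random.Random(1)
probs = [rng.random() for _ in range(1000)]
labels = [1 if rng.random() < 0.5 + 0.6 * (p - 0.5) else 0 for p in probs]

point = ece(probs, labels)
lower, upper = bootstrap_ece_ci(probs, labels)
print(f"ECE={point:.4f}  95% bootstrap interval=({lower:.4f}, {upper:.4f})")
```

The cost scales with the number of resamples times the validation set size, which is the computational weakness noted above. Binned ECE estimates also tend to be biased upward on small samples, so the interval is best read as a rough indication of stability rather than an exact coverage guarantee.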

Xidian University

Technical Solution: Xidian University has developed innovative research approaches to sigmoid model calibration measurement, focusing on information-theoretic metrics and efficient computation methods. Their technical solution introduces mutual information-based calibration metrics that assess how well model confidence aligns with actual correctness probability beyond traditional metrics like ECE. Xidian's framework includes specialized reporting tables that decompose calibration errors into bias and variance components, providing deeper insights into the sources of miscalibration. They've implemented efficient approximation algorithms for calibration metrics that reduce computational complexity while maintaining statistical validity, making them suitable for resource-constrained environments. Their research also explores the relationship between calibration and adversarial robustness, with specialized metrics that evaluate how calibration performance degrades under adversarial attacks. Additionally, Xidian has developed cross-entropy based visualization techniques that provide alternative perspectives to traditional reliability diagrams, highlighting regions where models are most severely miscalibrated[7][8].

Strengths: Xidian's approach offers theoretical innovation with information-theoretic foundations and computational efficiency through novel approximation algorithms. Weaknesses: Some of their advanced metrics lack widespread adoption in industry, potentially limiting practical implementation, and their academic focus may result in solutions that require significant adaptation for production environments.

Key Calibration Metrics and Evaluation Methods

Measuring device for rapid non-destructive measurement of the contents of capsules

Patent (Inactive): US20050154555A1

Innovation

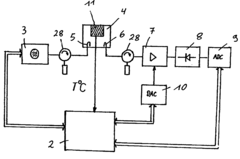

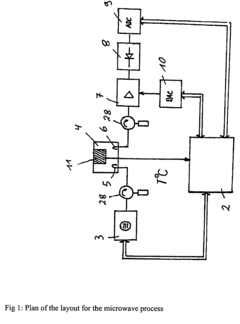

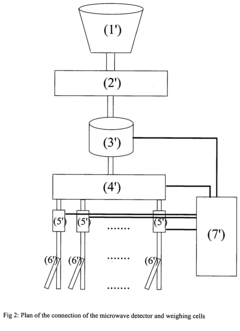

- A non-destructive microwave-based process that generates variable-frequency electromagnetic radiation to determine net weight by measuring microwave signals and compensating for packaging and moisture fluctuations, using a processor-controlled microwave generator, detector diode, and gravimetric gross weighing to calculate net mass through calibration.

Methods for input-output calibration and image rendering

Patent (Inactive): US9465483B2

Innovation

- A method involving a processing unit that derives calibration points' coordinates in both the input device and output device coordinate systems, using a coordinate transformation matrix to establish the relationship between the two systems, allowing for calibration even when the input device is not in a position to see the screen directly or through a reflective surface.

Visualization Approaches for Calibration Results

Effective visualization of calibration results is crucial for understanding and communicating model performance. Calibration plots, also known as reliability diagrams, serve as the foundation for visualizing the relationship between predicted probabilities and observed frequencies. These plots typically display predicted probability bins on the x-axis and the corresponding observed frequency on the y-axis, with a diagonal line representing perfect calibration.

For sigmoid models specifically, specialized visualization techniques can enhance interpretation. Confidence histograms complement calibration plots by showing the distribution of confidence scores across predictions, revealing whether a model tends toward overconfidence or uncertainty. These histograms can be color-coded to distinguish between correct and incorrect predictions, providing insight into the relationship between confidence and accuracy.

Error analysis visualizations focus on identifying patterns in miscalibration. Heat maps can display calibration errors across different prediction ranges, highlighting regions where the model consistently overestimates or underestimates probabilities. This approach is particularly valuable for sigmoid models where calibration may vary across the probability spectrum.

Comparative visualization techniques enable side-by-side evaluation of different calibration methods applied to the same sigmoid model. These include parallel calibration curves, radar charts of calibration metrics, or before-and-after plots showing the impact of recalibration techniques such as Platt scaling or temperature scaling.

Time-series visualizations track calibration stability over time or across different data distributions, which is essential for monitoring model performance in production environments. These visualizations can reveal calibration drift that might require model retraining or recalibration.

Interactive dashboards represent an advanced approach, allowing stakeholders to explore calibration results dynamically. These dashboards can incorporate sliders for adjusting confidence thresholds, filters for examining specific data segments, and drill-down capabilities for investigating individual predictions. Such interactivity facilitates deeper understanding of calibration properties across different operational contexts.

For technical publications and reports, standardized reporting templates ensure consistency in how calibration results are presented. These templates typically include calibration curves with confidence intervals, summary statistics of calibration metrics, and visual indicators of statistical significance when comparing different models or calibration approaches.

For sigmoid models specifically, specialized visualization techniques can enhance interpretation. Confidence histograms complement calibration plots by showing the distribution of confidence scores across predictions, revealing whether a model tends toward overconfidence or uncertainty. These histograms can be color-coded to distinguish between correct and incorrect predictions, providing insight into the relationship between confidence and accuracy.

Error analysis visualizations focus on identifying patterns in miscalibration. Heat maps can display calibration errors across different prediction ranges, highlighting regions where the model consistently overestimates or underestimates probabilities. This approach is particularly valuable for sigmoid models where calibration may vary across the probability spectrum.

Comparative visualization techniques enable side-by-side evaluation of different calibration methods applied to the same sigmoid model. These include parallel calibration curves, radar charts of calibration metrics, or before-and-after plots showing the impact of recalibration techniques such as Platt scaling or temperature scaling.

Time-series visualizations track calibration stability over time or across different data distributions, which is essential for monitoring model performance in production environments. These visualizations can reveal calibration drift that might require model retraining or recalibration.

Interactive dashboards represent an advanced approach, allowing stakeholders to explore calibration results dynamically. These dashboards can incorporate sliders for adjusting confidence thresholds, filters for examining specific data segments, and drill-down capabilities for investigating individual predictions. Such interactivity facilitates deeper understanding of calibration properties across different operational contexts.

Practical Implementation Guidelines

To effectively implement output calibration measurement with sigmoid models, organizations should follow a structured approach that combines theoretical understanding with practical tools. Begin by establishing a dedicated calibration monitoring pipeline within your ML infrastructure. This pipeline should automatically calculate and log calibration metrics after each model training iteration and during production deployment.

For development environments, implement a standardized calibration assessment protocol. scikit-learn covers part of this: its calibration_curve function and CalibrationDisplay class produce the binned data and plots for reliability diagrams, and brier_score_loss computes the Brier score. It does not provide a built-in ECE function, so ECE and specialized metrics like AECE or D-ECE require a custom implementation or a dedicated calibration library. Validate these implementations against benchmark datasets with known calibration properties.
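
A custom ECE implementation can be quite short. The sketch below assumes a binary sigmoid model, bins on the predicted probability of the positive class, and uses equal-width bins; the function name and defaults are illustrative choices rather than a standard API, and other ECE variants (for example, binning on top-label confidence) will give different numbers.

```python
def expected_calibration_error(probs, labels, n_bins=10):
    """ECE for a binary sigmoid model with equal-width bins.

    probs  -- predicted probability of the positive class, each in [0, 1]
    labels -- true labels, each 0 or 1
    Returns the sample-weighted mean of |mean predicted - observed rate|
    over the non-empty bins.
    """
    n = len(probs)
    if n == 0:
        raise ValueError("need at least one prediction")

    counts = [0] * n_bins
    prob_sums = [0.0] * n_bins
    pos_sums = [0] * n_bins
    for p, y in zip(probs, labels):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls in the last bin
        counts[idx] += 1
        prob_sums[idx] += p
        pos_sums[idx] += y

    ece = 0.0
    for count, p_sum, y_sum in zip(counts, prob_sums, pos_sums):
        if count:
            ece += (count / n) * abs(p_sum / count - y_sum / count)
    return ece


# Two bins, each off by 0.1, so the result is about 0.1.
print(expected_calibration_error([0.1, 0.1, 0.9, 0.9], [0, 0, 1, 1]))
```

Because the value depends on the number of bins and the binning scheme, comparing ECE across models only makes sense when both are held fixed.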

When deploying models to production, integrate calibration monitoring into your existing observability framework. Set up automated alerts for significant calibration drift, for example when ECE exceeds a predefined threshold. Thresholds in the 0.02 to 0.05 range are a typical starting point, but the right value depends on application sensitivity and on the binning used to compute ECE. This proactive approach helps maintain model reliability over time.
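
One way to turn such a threshold into an alert rule is sketched below. It assumes ECE has already been computed per monitoring window and that an alert should fire only after several consecutive breaches, to reduce noise from small windows; the function name, the window labels, and the default values are hypothetical.

```python
def find_drift_alerts(ece_by_window, threshold=0.05, min_consecutive=2):
    """Return the labels of windows at which ECE has exceeded `threshold`
    for at least `min_consecutive` windows in a row.

    ece_by_window -- iterable of (window_label, ece) pairs in time order
    """
    alerts = []
    streak = 0
    for window_label, ece in ece_by_window:
        # Extend the streak on a breach, reset it otherwise.
        streak = streak + 1 if ece > threshold else 0
        if streak >= min_consecutive:
            alerts.append(window_label)
    return alerts


# Hypothetical weekly ECE values, for illustration only.
history = [("w1", 0.02), ("w2", 0.06), ("w3", 0.07), ("w4", 0.03), ("w5", 0.08)]
print(find_drift_alerts(history))  # ['w3']
```

Requiring consecutive breaches trades detection speed for fewer false alarms; setting min_consecutive=1 alerts on every breach.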

Documentation practices are crucial for calibration measurement. Create standardized reporting templates that include reliability diagrams alongside numerical metrics. These reports should document the binning strategy used (equal-width vs. equal-frequency), number of bins, and confidence intervals for metrics where applicable.
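
Confidence intervals for a calibration metric are often obtained by resampling. The following is a minimal percentile-bootstrap sketch under stated assumptions: it uses the Brier score as the example metric, and the function names, the 1000 resamples, and the 95% level are illustrative defaults rather than recommendations from this article.

```python
import random


def brier_score(probs, labels):
    """Mean squared difference between predicted probability and label."""
    return sum((p - y) ** 2 for p, y in zip(probs, labels)) / len(probs)


def bootstrap_ci(metric, probs, labels, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for metric(probs, labels).

    Resamples (probability, label) pairs with replacement, recomputes the
    metric each time, and returns the alpha/2 and 1 - alpha/2 percentiles.
    """
    rng = random.Random(seed)  # fixed seed so a report can be reproduced
    n = len(probs)
    stats = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]
        stats.append(metric([probs[i] for i in idx],
                            [labels[i] for i in idx]))
    stats.sort()
    low = stats[int((alpha / 2) * (n_resamples - 1))]
    high = stats[int((1 - alpha / 2) * (n_resamples - 1))]
    return low, high


# Hypothetical predictions and labels, for illustration only.
probs = [0.9, 0.8, 0.7, 0.3, 0.2, 0.1, 0.6, 0.4]
labels = [1, 1, 0, 0, 0, 0, 1, 1]
print(brier_score(probs, labels), bootstrap_ci(brier_score, probs, labels))
```

The same bootstrap_ci helper accepts any function of (probs, labels), so a binned metric such as ECE can be passed in as long as the binning settings are fixed and reported with the interval.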

For cross-team collaboration, develop a calibration dashboard that visualizes trends in calibration metrics over time and across model versions. This dashboard should be accessible to both technical and non-technical stakeholders, with appropriate levels of detail for different audiences.

When implementing temperature scaling or other post-hoc calibration methods, establish a validation protocol that uses held-out data to verify improvements. This protocol should include A/B testing frameworks to measure the impact of calibration adjustments on downstream business metrics.
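
The fit-then-verify step of such a protocol can be sketched as follows. This assumes a binary sigmoid model whose raw logits are available, fits a single temperature T by minimizing negative log-likelihood on a calibration split, and then checks the result on a separate validation split. The function names, the search bounds, and the use of golden-section search are assumptions made for this example; gradient-based optimizers are a common alternative.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def nll(logits, labels, temperature=1.0):
    """Mean negative log-likelihood of binary labels under sigmoid(logit / T)."""
    eps = 1e-12  # keeps log() finite for saturated predictions
    total = 0.0
    for z, y in zip(logits, labels):
        p = min(max(sigmoid(z / temperature), eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(logits)


def fit_temperature(logits, labels, low=0.05, high=20.0, iters=60):
    """Golden-section search over log T for the T that minimizes NLL."""
    inv_phi = (math.sqrt(5) - 1) / 2
    a, b = math.log(low), math.log(high)
    c = b - inv_phi * (b - a)
    d = a + inv_phi * (b - a)
    fc = nll(logits, labels, math.exp(c))
    fd = nll(logits, labels, math.exp(d))
    for _ in range(iters):
        if fc < fd:  # minimum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - inv_phi * (b - a)
            fc = nll(logits, labels, math.exp(c))
        else:        # minimum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + inv_phi * (b - a)
            fd = nll(logits, labels, math.exp(d))
    return math.exp((a + b) / 2)


# Hypothetical overconfident model: logits of +/-2 but only 75% agreement.
calib_logits = [2.0, 2.0, 2.0, 2.0, -2.0, -2.0, -2.0, -2.0]
calib_labels = [1, 1, 1, 0, 0, 0, 0, 1]
valid_logits = [3.0, 3.0, 3.0, 3.0, -3.0, -3.0, -3.0, -3.0]
valid_labels = [1, 1, 1, 0, 0, 0, 0, 1]

T = fit_temperature(calib_logits, calib_labels)
print("fitted T:", round(T, 3))  # close to 2 / ln(3), about 1.82
print("validation NLL before:", nll(valid_logits, valid_labels, 1.0))
print("validation NLL after: ", nll(valid_logits, valid_labels, T))
```

Fitting and verifying on different splits matters because a temperature tuned and scored on the same data will always look like an improvement. A fitted T above 1 indicates the uncalibrated model was overconfident; below 1, underconfident.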

Finally, create a calibration measurement playbook that documents best practices, common pitfalls, and troubleshooting steps. This resource should include decision trees for selecting appropriate calibration metrics based on data characteristics and application requirements. Regular training sessions for engineering and data science teams will ensure consistent application of these guidelines across the organization.
