How to Minimize Errors in Dynamic Light Scattering Measurements

SEP 5, 2025

DLS Technology Background and Objectives

Dynamic Light Scattering (DLS) emerged in the 1960s as a powerful technique for measuring particle size distributions in colloidal suspensions. The technology leverages the Brownian motion of particles in solution and analyzes the resulting scattered light intensity fluctuations to determine particle size. Over the past six decades, DLS has evolved from basic laboratory setups to sophisticated commercial instruments with advanced data processing capabilities.

The fundamental principle of DLS is that diffusion speed depends on particle size: smaller particles diffuse faster than larger ones in solution. By measuring the time-dependent fluctuations in scattered light intensity, DLS instruments calculate the translational diffusion coefficient and then convert it to the hydrodynamic diameter of the particles using the Stokes-Einstein equation.
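As a rough illustration of that chain (decay rate to diffusion coefficient to hydrodynamic diameter), the sketch below applies the standard relations q = (4πn/λ)·sin(θ/2), D = Γ/q² and d_H = k_B·T/(3πηD). The wavelength, refractive index, detection angle and decay rate are illustrative assumptions rather than values from this article, and the function names are hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def scattering_vector(wavelength_m, refractive_index, angle_deg):
    """Magnitude of the scattering vector q in 1/m."""
    theta = math.radians(angle_deg)
    return 4.0 * math.pi * refractive_index / wavelength_m * math.sin(theta / 2.0)

def hydrodynamic_diameter(diffusion_m2_s, temperature_k, viscosity_pa_s):
    """Stokes-Einstein relation: d_H = k_B * T / (3 * pi * eta * D)."""
    return K_B * temperature_k / (3.0 * math.pi * viscosity_pa_s * diffusion_m2_s)

# Assumed setup: 633 nm laser, water (n = 1.33), 173 degree backscatter detection.
q = scattering_vector(633e-9, 1.33, 173.0)

# Assumed decay rate of the field correlation function, in 1/s (hypothetical).
gamma = 3000.0
D = gamma / q ** 2  # translational diffusion coefficient in m^2/s

# Water at 25 C has a viscosity of roughly 0.89 mPa*s.
d_h = hydrodynamic_diameter(D, 298.15, 0.89e-3)
print(f"q = {q:.3e} 1/m, D = {D:.3e} m^2/s, d_H = {d_h * 1e9:.1f} nm")
```

Because d_H depends on both temperature and viscosity, an error in either input carries straight through to the reported size.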

Recent technological advancements have significantly improved DLS capabilities, including multi-angle detection systems, enhanced laser stability, and more sophisticated correlation algorithms. These developments have expanded the application range of DLS from simple size measurements to more complex characterizations of polydisperse samples, protein aggregation studies, and nanoparticle analysis.

Despite these advances, DLS measurements remain susceptible to various sources of error that can compromise data quality and interpretation. These include dust contamination, sample concentration effects, multiple scattering phenomena, and temperature fluctuations. The sensitivity of DLS to these factors necessitates careful experimental design and rigorous quality control protocols.

The primary objective of minimizing errors in DLS measurements is to enhance measurement accuracy and reproducibility across diverse sample types. This goal is particularly crucial as DLS applications expand into critical fields such as pharmaceutical development, nanomaterial characterization, and biological research, where precise particle size determination directly impacts product quality, safety, and efficacy.

Current research trends focus on developing robust methodologies to identify, quantify, and mitigate measurement errors through improved instrument design, standardized sample preparation protocols, and advanced data analysis algorithms. Machine learning approaches are increasingly being explored to distinguish between meaningful signals and artifacts in complex DLS data.

As industries continue to develop nanoscale materials and formulations, the demand for high-precision DLS measurements grows accordingly. The technology's evolution is now directed toward achieving greater sensitivity for heterogeneous samples, extending the detectable size range, and providing more detailed characterization of complex colloidal systems while minimizing measurement uncertainties.

Market Applications and Demand Analysis

Dynamic Light Scattering (DLS) technology has witnessed significant market growth across various industries due to its ability to accurately measure particle size distributions in solutions. The global market for DLS instruments was valued at approximately $300 million in 2022 and is projected to grow at a compound annual growth rate of 6-7% through 2028, driven by expanding applications in pharmaceutical development, biotechnology, and materials science.

The pharmaceutical and biotechnology sectors represent the largest market segment for DLS technology, accounting for nearly 40% of the total market share. This dominance stems from the critical need for precise particle characterization in drug formulation, protein aggregation studies, and quality control processes. The increasing development of complex biopharmaceuticals, including monoclonal antibodies and nanoparticle-based drug delivery systems, has further accelerated demand for high-precision DLS measurements.

Academic and research institutions constitute another significant market segment, representing approximately 25% of the global DLS market. These institutions utilize DLS technology for fundamental research in colloid science, polymer characterization, and nanomaterial development. The growing focus on nanoscience and nanotechnology research has substantially increased the demand for accurate particle sizing techniques.

The materials science and chemical industries collectively account for about 20% of the market share, employing DLS for quality control in manufacturing processes, product development, and research activities. Applications range from polymer characterization to ceramic processing and coating formulations, where particle size distribution directly impacts product performance.

Environmental monitoring and food safety sectors have emerged as rapidly growing application areas, currently representing about 10% of the market. These sectors utilize DLS for detecting and characterizing microplastics in water systems, analyzing food emulsions, and ensuring product stability and quality.

Market analysis indicates a growing demand for DLS instruments with enhanced error minimization capabilities. End-users consistently identify measurement accuracy and reproducibility as critical purchasing factors, with over 70% of surveyed users citing error reduction features as "very important" or "extremely important" in their procurement decisions.

Regional market distribution shows North America leading with approximately 35% market share, followed by Europe (30%), Asia-Pacific (25%), and rest of the world (10%). However, the Asia-Pacific region is experiencing the fastest growth rate, driven by expanding research infrastructure and increasing industrial applications in countries like China, Japan, and India.

Current Challenges in DLS Measurements

Dynamic Light Scattering (DLS) measurements face several significant challenges that can compromise data accuracy and reliability. The technique, while powerful for determining particle size distributions in colloidal systems, is inherently sensitive to multiple experimental variables that must be carefully controlled.

Sample preparation represents a primary challenge, as contaminants such as dust particles can dramatically skew results. Even minute quantities of large contaminants can dominate the scattering signal, leading to misinterpretation of the actual particle size distribution. This is particularly problematic when measuring samples with small particle sizes or low concentrations.

Concentration effects present another substantial hurdle. At high concentrations, multiple scattering events occur, violating the fundamental assumption of single scattering in DLS theory. Conversely, extremely dilute samples may not generate sufficient scattering intensity for reliable measurements. Finding the optimal concentration window remains challenging across different sample types.

Temperature fluctuations during measurement can significantly impact results through their effect on solvent viscosity and Brownian motion. Even small temperature variations of 0.5°C can lead to measurable differences in calculated particle sizes, necessitating precise temperature control systems.
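To give a feel for the size of this effect, the sketch below estimates the bias in the reported diameter when the analysis assumes 25.0°C but the sample is actually at 25.5°C. It assumes water as the solvent and uses a common empirical viscosity fit (η = A·10^(B/(T−C)) with A = 2.414×10⁻⁵ Pa·s, B = 247.8 K, C = 140 K). The function names are hypothetical and the numbers are illustrative only.

```python
def water_viscosity(temperature_k):
    """Empirical viscosity of liquid water in Pa*s: A * 10**(B / (T - C))."""
    return 2.414e-5 * 10.0 ** (247.8 / (temperature_k - 140.0))

def size_bias_from_temperature_offset(assumed_k, actual_k):
    """Relative error in reported diameter when the analysis uses assumed_k
    while the sample is really at actual_k.

    For a fixed measured diffusion coefficient, Stokes-Einstein gives a
    diameter proportional to T / eta(T), so the bias is a ratio of those terms.
    """
    reported = assumed_k / water_viscosity(assumed_k)
    true = actual_k / water_viscosity(actual_k)
    return reported / true - 1.0

bias = size_bias_from_temperature_offset(298.15, 298.65)
print(f"Size bias for a 0.5 K offset: {bias * 100:.2f} %")
```

Under these assumptions the bias is on the order of one percent, and most of it comes from the viscosity term rather than from the temperature term itself.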

Sample polydispersity poses a fundamental limitation to DLS technology. The technique inherently favors larger particles because their scattering intensity is much stronger (proportional to r^6 in the Rayleigh regime, where particles are much smaller than the laser wavelength), potentially masking smaller populations in polydisperse samples. This bias can lead to inaccurate size distribution representations in complex mixtures.
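A small numerical sketch makes the r^6 weighting concrete. It assumes pure Rayleigh scattering, so that each population contributes intensity in proportion to its number count times the sixth power of its diameter. The populations and the function name are hypothetical.

```python
def intensity_fractions(populations):
    """Share of total scattered intensity per population.

    populations: list of (diameter_nm, number_count) tuples.
    Assumes Rayleigh scattering, i.e. intensity ~ count * diameter**6.
    """
    weights = [count * diameter ** 6 for diameter, count in populations]
    total = sum(weights)
    return [weight / total for weight in weights]

# One million 10 nm particles versus a single 100 nm particle.
fractions = intensity_fractions([(10.0, 1_000_000), (100.0, 1)])
print(fractions)
```

In this idealized case the single 100 nm particle scatters as much light as the million 10 nm particles combined, which is why trace amounts of aggregates or dust can dominate an intensity-weighted distribution.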

Instrument-specific challenges include laser stability issues, detector sensitivity limitations, and optical alignment precision. Modern DLS instruments incorporate various correction algorithms, but these can sometimes introduce artifacts if not properly understood and applied by operators.

Data interpretation remains perhaps the most significant challenge. Converting correlation functions to size distributions is mathematically an ill-posed problem, requiring regularization methods that may produce different results depending on the specific algorithms employed. This creates difficulties in comparing results across different instruments or laboratories.
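The ill-posed nature of the inversion can be illustrated with a toy comparison, sketched below under simplifying assumptions: time and decay rates are in reduced (dimensionless) units, the data are noise-free, and the two models are hypothetical. A bimodal sample with decay rates 0.9 and 1.1 produces a field correlation function that is almost indistinguishable from that of a monodisperse sample with decay rate 1.0.

```python
import math

def g1_single(t, gamma):
    """Field correlation function of a monodisperse sample."""
    return math.exp(-gamma * t)

def g1_bimodal(t, gamma_a, gamma_b):
    """Equal-weight mixture of two decay rates."""
    return 0.5 * math.exp(-gamma_a * t) + 0.5 * math.exp(-gamma_b * t)

# Delay times from 0 to 10 in reduced units.
times = [0.01 * i for i in range(1001)]

# Largest pointwise difference between the two model curves.
max_diff = max(abs(g1_bimodal(t, 0.9, 1.1) - g1_single(t, 1.0)) for t in times)
print(f"Maximum difference between the curves: {max_diff:.4f}")
```

The curves differ by less than half a percent of the initial amplitude. A difference that small may well be buried in experimental noise, so the fitting algorithm's regularization choices, rather than the data, end up deciding which distribution is reported.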

Time-dependent phenomena such as sample aggregation, sedimentation, or degradation during measurement can produce misleading results. These dynamic processes may occur on timescales comparable to the measurement duration, complicating data interpretation.

Finally, standardization across the field remains incomplete, with different manufacturers employing proprietary algorithms and reporting conventions. This creates challenges for result comparability and reproducibility, particularly for complex or non-ideal samples that deviate from the spherical, monodisperse ideal.

Error Minimization Methodologies

01 Error correction methods in DLS measurements

Various methods have been developed to correct errors in Dynamic Light Scattering (DLS) measurements. These include algorithmic approaches to compensate for systematic errors, statistical methods to reduce random noise, and calibration techniques using reference standards. These correction methods help improve the accuracy and reliability of particle size distribution measurements by minimizing the impact of instrumental and environmental factors on the scattering data.

- Measurement error correction in dynamic light scattering: Various methods and systems have been developed to correct measurement errors in dynamic light scattering (DLS) techniques. These include algorithms for compensating systematic errors, statistical approaches for reducing random errors, and calibration techniques that improve accuracy. Advanced error correction methods can account for factors such as multiple scattering effects, background noise, and instrument-specific biases, significantly enhancing the reliability of particle size measurements.

- Hardware solutions for reducing DLS errors: Specialized hardware components and configurations have been designed to minimize errors in dynamic light scattering measurements. These include improved optical arrangements, advanced detector systems, and temperature control mechanisms that reduce thermal fluctuations affecting measurements. Some innovations focus on optimizing the scattering angle detection, implementing multi-angle measurement capabilities, and utilizing fiber optic technologies to enhance signal quality and reduce interference.

- Software algorithms for DLS data processing: Sophisticated software algorithms have been developed to process dynamic light scattering data and minimize interpretation errors. These include advanced correlation analysis techniques, machine learning approaches for pattern recognition, and statistical methods for outlier detection and removal. Some algorithms specifically address challenges like polydisperse samples, non-spherical particles, and concentration-dependent effects that traditionally lead to measurement inaccuracies.

- Sample preparation techniques to minimize DLS errors: Proper sample preparation methods have been developed to reduce errors in dynamic light scattering measurements. These techniques focus on controlling factors such as sample concentration, eliminating dust and contaminants, preventing aggregation, and maintaining sample stability during measurement. Specialized filtration methods, dispersion techniques, and buffer formulations help ensure that the measured particle characteristics accurately represent the true sample properties.

- Calibration standards and validation methods for DLS: Reference materials and validation protocols have been established to quantify and minimize errors in dynamic light scattering systems. These include certified size standards, performance verification samples, and interlaboratory comparison methodologies. Regular calibration using these standards helps identify systematic errors, drift, and other measurement biases. Validation procedures ensure that DLS instruments maintain accuracy across different sample types and measurement conditions.

02 Hardware solutions for reducing DLS measurement errors

Specialized hardware components and configurations have been designed to minimize errors in Dynamic Light Scattering systems. These include improved optical arrangements, advanced detector systems, temperature control mechanisms, and vibration isolation platforms. By enhancing the physical components of DLS instruments, these innovations reduce measurement artifacts and improve signal-to-noise ratios, resulting in more accurate particle characterization.

03 Signal processing techniques for DLS error reduction

Advanced signal processing techniques are employed to address errors in Dynamic Light Scattering data. These include digital filtering algorithms, correlation function analysis methods, and mathematical transformations that help extract meaningful information from noisy scattering signals. By applying sophisticated computational approaches to raw DLS data, these techniques can identify and mitigate various sources of measurement error.

04 Sample preparation methods to minimize DLS errors

Proper sample preparation protocols have been developed to reduce errors in Dynamic Light Scattering measurements. These include techniques for controlling dust contamination, preventing sample aggregation, optimizing concentration levels, and ensuring sample homogeneity. By addressing potential sources of error before measurement, these methods improve the quality of DLS data and enhance the reliability of particle size determinations.

05 Multi-angle and multi-wavelength DLS approaches for error reduction

Multi-angle and multi-wavelength Dynamic Light Scattering techniques have been developed to overcome limitations of traditional single-angle measurements. By collecting scattering data at multiple angles or using different wavelengths of light, these approaches provide more comprehensive information about particle systems and help identify measurement artifacts. This redundancy in data collection allows for cross-validation and more robust error detection, particularly for complex or polydisperse samples.

Leading DLS Instrument Manufacturers

Dynamic Light Scattering (DLS) measurement technology is currently in a mature growth phase, with an estimated global market size of $300-400 million annually. The competitive landscape is characterized by established scientific instrument manufacturers and specialized optical technology companies. Leading players include Malvern Panalytical, which dominates with comprehensive DLS solutions, and Wyatt Technology, recognized for high-precision instruments. Other significant competitors include LS Instruments AG and Postnova Analytics, which focus on specialized applications. Japanese corporations like Shimadzu, Otsuka Electronics, and PULSTEC have strengthened their market positions through integration with broader analytical platforms. Academic institutions such as Kyoto University and The Johns Hopkins University contribute significantly to advancing measurement methodologies. The technology continues to evolve toward multi-angle systems, machine learning error correction, and standardized protocols to minimize measurement errors.

Postnova Analytics GmbH

Technical Solution: Postnova Analytics has developed Field-Flow Fractionation (FFF) coupled with Multi-Angle Light Scattering (MALS) and Dynamic Light Scattering (DLS) to overcome sample polydispersity issues that typically cause errors in conventional DLS measurements. Their integrated system first separates particles based on size using FFF before performing DLS measurements on the fractionated sample, eliminating errors from larger particles dominating scattering intensity. Postnova's technology includes advanced flow control systems that maintain stable flow rates within ±0.1%, critical for accurate time-resolved DLS measurements. Their instruments feature temperature stabilization to ±0.01°C throughout the entire flow path to prevent thermal gradient-induced errors. The company's software incorporates specialized algorithms for converting retention time to particle size with high precision, while simultaneously analyzing DLS data for each fraction to provide comprehensive size and shape information.

Strengths: Fractionation before DLS measurement eliminates polydispersity-related errors; combined techniques provide more complete characterization than DLS alone; excellent for complex biological samples and nanoparticle mixtures. Weaknesses: Significantly more complex and expensive than standalone DLS systems; longer analysis times compared to batch DLS; requires expertise in both FFF and DLS techniques for proper method development and data interpretation.

LS Instruments AG

Technical Solution: LS Instruments has developed 3D Cross-Correlation Dynamic Light Scattering (3D-DLS) technology that effectively suppresses multiple scattering contributions, allowing accurate measurements in samples up to 10 times more concentrated than conventional DLS. Their modulated 3D-DLS technique employs two synchronized laser beams and detectors to isolate single scattering events from multiple scattering noise. The company's instruments incorporate advanced digital correlators with nanosecond time resolution and extended delay time ranges to capture both fast dynamics of small particles and slow movements of larger structures. LS Instruments' systems feature proprietary echo cancellation algorithms that minimize reflection artifacts from optical components, improving measurement accuracy especially for weakly scattering samples. Their software includes advanced regularization methods for particle size distribution analysis that are less sensitive to noise and experimental errors compared to traditional inversion algorithms.

Strengths: Superior performance with concentrated or turbid samples where conventional DLS fails; modulated cross-correlation technique effectively eliminates multiple scattering errors; specialized algorithms provide more reliable size distributions for complex samples. Weaknesses: More complex optical setup increases instrument cost; requires more maintenance than simpler systems; higher technical expertise needed for optimal operation and data interpretation.

Key Innovations in DLS Signal Processing

Measurement of dynamic light scattering of samples

Patent (inactive): JP2023541897A

Innovation

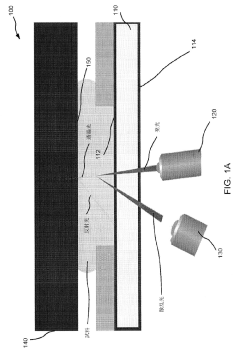

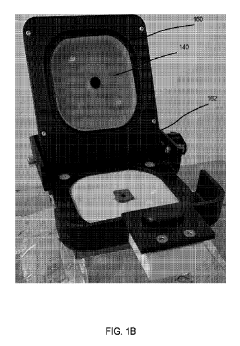

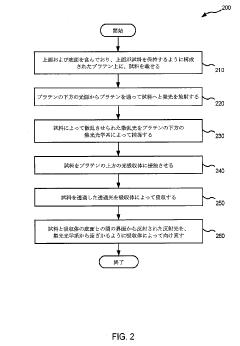

- An apparatus and method that utilize a platen with polished optical surfaces, a light source, collection optics, and a light absorber to capture scattered light and redirect reflected light away from the optical system, ensuring a controlled light path and minimizing interference, while allowing for precise temperature control and small sample volumes.

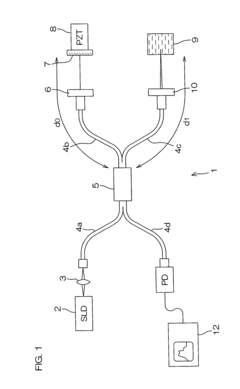

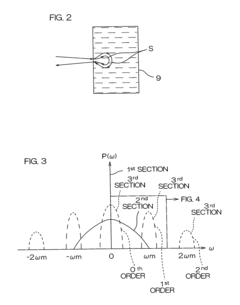

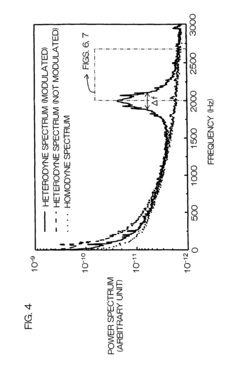

Dynamic light scattering measurement apparatus using phase modulation interference method

Patent (inactive): US7236250B2

Innovation

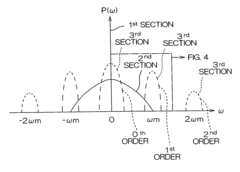

- A dynamic light scattering measurement apparatus using a low coherence light source and phase modulation interference method, where the light path length within the sample medium is normalized by the mean free path of particles to ensure that the light path length is not more than three times the mean free path, allowing for precise extraction of single scattering spectrum components from multiple scattering media.

Sample Preparation Best Practices

Sample preparation represents a critical foundation for accurate Dynamic Light Scattering (DLS) measurements. Proper preparation techniques significantly reduce measurement errors and ensure reliable data collection. The first essential consideration involves sample cleanliness. All containers, cuvettes, and tools must undergo thorough cleaning using filtered solvents to eliminate dust particles and contaminants that could interfere with light scattering signals. For optimal results, containers should be rinsed with filtered solvent at least three times before sample introduction.

Filtration stands as a fundamental step in sample preparation. Samples should be filtered through membrane filters with appropriate pore sizes (typically 0.2-0.45 μm) to remove large particulates and dust. The filtration process must be performed in a controlled environment, preferably in a laminar flow hood or clean room, to prevent environmental contamination. When selecting filtration materials, compatibility with the sample composition must be considered to avoid unwanted chemical interactions or sample adsorption.

Temperature equilibration plays a crucial role in minimizing measurement errors. Samples should be allowed to reach thermal equilibrium with the instrument environment for at least 15-30 minutes before measurement. This prevents temperature gradients within the sample that can cause convection currents and result in artificial particle movement, leading to inaccurate size determinations. Additionally, temperature control systems should maintain stability within ±0.1°C throughout the measurement process.

Sample concentration optimization represents another critical factor. Excessively concentrated samples can lead to multiple scattering effects, while overly dilute samples may produce insufficient scattering intensity. The ideal concentration typically falls within the range of 0.01% to 0.1% by weight for most colloidal systems, though this varies depending on particle properties and instrument specifications. Serial dilutions may be necessary to determine the optimal concentration range for specific sample types.

Buffer composition and pH control are essential for biological and charged samples. The ionic strength of the buffer should be carefully controlled to manage electrostatic interactions between particles. For protein samples, buffer conditions must maintain protein stability while preventing aggregation. pH adjustments should be made using filtered solutions, and the final pH should be verified after sample preparation but before measurement to ensure consistency.

Degassing procedures help eliminate air bubbles that can scatter light and introduce measurement artifacts. For sensitive samples, gentle degassing techniques such as sonication at low power or vacuum degassing at room temperature are recommended. The degassing time should be optimized to remove bubbles without altering sample properties through excessive heating or mechanical stress.

Data Validation Standards

Reliable Dynamic Light Scattering (DLS) measurements depend on robust data validation standards. These standards should define criteria for evaluating measurement quality, ensuring consistency, and identifying potential errors. The primary validation parameters are count rate stability, correlation function quality, polydispersity index (PDI), and signal-to-noise ratio. Count rates should stay within ±10% of their mean throughout a measurement to confirm sample stability, and correlation functions should decay smoothly, without spikes or irregularities that could indicate dust, contamination, or aggregation.
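The count rate criterion can be checked automatically. The following sketch assumes the ±10% limit is applied relative to the mean count rate of the run, which is one reasonable reading of the criterion; the function name and the example traces (in kcps) are hypothetical.

```python
from statistics import mean

def count_rate_stable(rates_kcps, tolerance=0.10):
    """Return (is_stable, worst_relative_deviation) for a count rate trace.

    A trace counts as stable when every reading lies within
    +/- tolerance of the mean count rate.
    """
    avg = mean(rates_kcps)
    if avg <= 0:
        raise ValueError("mean count rate must be positive")
    worst = max(abs(r - avg) / avg for r in rates_kcps)
    return worst <= tolerance, worst

# Hypothetical traces: a clean run and a run with a dust spike
clean_run = [250, 255, 248, 252, 251]
dusty_run = [250, 252, 400, 251, 249]

clean_ok, clean_worst = count_rate_stable(clean_run)
dusty_ok, dusty_worst = count_rate_stable(dusty_run)
print(clean_ok, f"{clean_worst:.1%}")
print(dusty_ok, f"{dusty_worst:.1%}")
```

A sudden spike such as the one in the second trace is typical of a dust particle or large aggregate drifting through the scattering volume, and such a run would normally be repeated or excluded.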

Statistical validation requires multiple measurements (typically 3-5) with relative standard deviation below 5% for size measurements and below 10% for derived parameters. Automated validation algorithms can flag measurements falling outside these parameters, with particular attention to cumulant fit residuals that should not exceed 5×10^-3 for reliable data. For multimodal distributions, additional validation metrics such as peak resolution and distribution width consistency become critical.
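As a minimal sketch of the repeatability criterion, the relative standard deviation (RSD) of replicate size measurements can be computed as the sample standard deviation divided by the mean. The function names and example values are illustrative; the 5% limit and the minimum of three replicates follow the figures given above.

```python
from statistics import mean, stdev

def relative_std_dev(values):
    """Relative standard deviation in percent (sample standard deviation)."""
    if len(values) < 2:
        raise ValueError("at least two measurements are required")
    return 100 * stdev(values) / mean(values)

def passes_repeatability(values, limit_pct=5.0, min_repeats=3):
    """True if there are enough replicates and their RSD is below the limit."""
    return len(values) >= min_repeats and relative_std_dev(values) < limit_pct

# Hypothetical replicate Z-average diameters in nm
good_set = [100.0, 102.0, 98.0]
poor_set = [100.0, 120.0, 80.0]

print(f"RSD good set: {relative_std_dev(good_set):.1f}%",
      passes_repeatability(good_set))
print(f"RSD poor set: {relative_std_dev(poor_set):.1f}%",
      passes_repeatability(poor_set))
```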

Reference materials play a crucial role in validation protocols. NIST-traceable polystyrene latex standards (50-200 nm) should be measured regularly to verify instrument performance, with acceptance criteria of ±2% of certified values. System suitability tests using these standards should be performed daily, with comprehensive calibration verification monthly or after maintenance events.
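A system suitability check against a reference standard reduces to comparing the measured size with the certified value. The sketch below assumes a hypothetical 100 nm standard and applies the ±2% acceptance criterion stated above; the function name and the readings are illustrative.

```python
def within_tolerance(measured_nm, certified_nm, tolerance_pct=2.0):
    """Return (passed, deviation_pct) for a reference standard measurement."""
    if certified_nm <= 0:
        raise ValueError("certified value must be positive")
    deviation_pct = 100 * (measured_nm - certified_nm) / certified_nm
    return abs(deviation_pct) <= tolerance_pct, deviation_pct

# Hypothetical daily checks on a standard certified at 100 nm
CERTIFIED_NM = 100.0
for reading in (101.5, 103.0):
    passed, deviation = within_tolerance(reading, CERTIFIED_NM)
    status = "pass" if passed else "investigate"
    print(f"{reading:.1f} nm: {deviation:+.1f}% -> {status}")
```

Logging the signed deviation rather than only a pass or fail result makes slow drift in instrument performance visible before it breaches the acceptance limit.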

Cross-validation with orthogonal techniques strengthens data reliability. Size measurements from DLS should be compared with results from electron microscopy, analytical ultracentrifugation, or size-exclusion chromatography when possible. Discrepancies exceeding 15% warrant investigation and may indicate fundamental limitations in applying DLS to specific sample types.

Documentation standards are equally important for validation. Each measurement should include metadata capturing temperature stability (±0.1°C), laser power fluctuations (<1%), detector count rate ranges, and sample preparation details. Automated data quality reports should generate validation scores based on weighted criteria, with measurements scoring below 70% flagged for review or rejection.
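A weighted validation score of this kind could be computed as follows. This is only a sketch: the individual checks and their weights are hypothetical choices made for illustration, and only the 70% review threshold comes from the text above.

```python
# Hypothetical weights for each quality check (they need not sum to 100)
WEIGHTS = {
    "count_rate_stable": 30,
    "correlogram_smooth": 30,
    "repeatability_ok": 25,
    "temperature_stable": 15,
}

def validation_score(checks, weights=WEIGHTS):
    """Return the percentage of total weight earned by the passed checks.

    Checks missing from the input are treated as failed.
    """
    total = sum(weights.values())
    earned = sum(w for name, w in weights.items() if checks.get(name, False))
    return 100 * earned / total

def needs_review(checks, threshold_pct=70.0):
    """True if the measurement scores below the review threshold."""
    return validation_score(checks) < threshold_pct

# Example measurement: everything passes except temperature stability
measurement = {
    "count_rate_stable": True,
    "correlogram_smooth": True,
    "repeatability_ok": True,
    "temperature_stable": False,
}
print(validation_score(measurement), needs_review(measurement))
```

Treating missing checks as failures is a conservative design choice: an incomplete metadata record lowers the score instead of silently passing.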

For regulatory compliance, particularly in pharmaceutical applications, validation standards should align with ICH guidelines and FDA recommendations for analytical method validation. This includes establishing limits of detection, quantification ranges (typically 5-1000 nm for most DLS instruments), and measurement reproducibility across different operators and instruments, with inter-instrument variability not exceeding 5% for standard samples.
