Quantum Computing for Improving Statistical Analysis Methods

JUL 17, 2025 | 9 MIN READ

Generate Your Research Report Instantly with AI Agent

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Quantum Computing in Statistical Analysis: Background and Objectives

Quantum computing is a different model of computation that could change how some statistical analyses are performed. It uses principles of quantum mechanics, such as superposition and entanglement, to solve certain classes of problems faster than the best known classical algorithms. The field has progressed from theoretical concepts proposed in the 1980s to the development of working quantum processors in recent years.

The primary objective of integrating quantum computing into statistical analysis is to overcome the limitations of classical computing in handling large-scale, multidimensional datasets and complex probabilistic models. As data volumes continue to grow across industries, traditional statistical methods often become computationally expensive, forcing trade-offs between runtime and accuracy. Quantum computing aims to address these challenges by using superposition and entanglement to represent and manipulate high-dimensional probability distributions that are costly to handle on classical hardware.

One of the key trends in this technological evolution is the development of quantum algorithms specifically designed for statistical applications. These algorithms, such as quantum principal component analysis and quantum support vector machines, promise to dramatically reduce computational time for high-dimensional data analysis and pattern recognition tasks. Additionally, quantum-enhanced Monte Carlo methods are being explored to improve the efficiency of simulations in fields like finance and physics.

The convergence of quantum computing and statistical analysis is expected to yield breakthroughs in various domains. In finance, it could lead to more accurate risk assessments and portfolio optimization. In healthcare, it may enable more precise analysis of genomic data for personalized medicine. Environmental sciences could benefit from enhanced climate modeling and prediction capabilities.

However, realizing these objectives faces several technical challenges. Current quantum systems are prone to errors due to environmental interference, limiting their practical applications. Developing error-correction techniques and increasing the number of stable qubits are crucial steps towards achieving quantum advantage in statistical analysis. Moreover, bridging the gap between theoretical quantum algorithms and their implementation on actual quantum hardware remains an ongoing research focus.

As the field progresses, interdisciplinary collaboration between quantum physicists, computer scientists, and statisticians is becoming increasingly important. This synergy is essential for developing quantum-inspired classical algorithms, hybrid quantum-classical systems, and new statistical frameworks that can fully exploit the power of quantum computing. The ultimate goal is to create a new generation of statistical tools that can handle the complexity and scale of modern data analysis challenges, opening up new frontiers in scientific discovery and data-driven decision-making.

Market Demand for Advanced Statistical Analysis Tools

The market demand for advanced statistical analysis tools has been steadily increasing across various sectors, driven by the growing complexity of data and the need for more sophisticated analytical capabilities. As organizations accumulate vast amounts of data, traditional statistical methods often fall short in processing and extracting meaningful insights from these large datasets. This has created a significant opportunity for quantum computing to revolutionize statistical analysis methods.

In the financial sector, there is a pressing need for more accurate risk assessment and predictive modeling. Quantum computing's ability to handle complex probabilistic calculations could greatly enhance the precision of financial forecasts and risk management strategies. Investment firms and banks are particularly interested in leveraging quantum-enhanced statistical tools to gain a competitive edge in market analysis and portfolio optimization.

The healthcare industry is another major driver of demand for advanced statistical analysis tools. With the rise of personalized medicine and genomic research, there is an unprecedented need for processing and analyzing large-scale biological data. Quantum computing's potential to accelerate drug discovery processes and improve patient outcome predictions has garnered significant attention from pharmaceutical companies and research institutions.

In the realm of artificial intelligence and machine learning, researchers and companies are exploring quantum algorithms to enhance statistical learning models. The ability of quantum systems to process high-dimensional data and perform complex optimizations could lead to breakthroughs in pattern recognition, natural language processing, and computer vision applications.

Government agencies and scientific research organizations are also showing increased interest in quantum-enhanced statistical tools for climate modeling, particle physics simulations, and cryptography. The potential for quantum computing to solve previously intractable problems in these fields is driving substantial investments and collaborations.

The market for quantum computing in statistical analysis is still in its early stages, with most applications currently in the research and development phase. However, industry analysts project rapid growth in the coming years as quantum hardware becomes more accessible and stable. Major technology companies and startups are actively developing quantum software platforms and applications tailored for statistical analysis, anticipating a surge in demand as the technology matures.

As quantum computing continues to advance, there is a growing need for skilled professionals who can bridge the gap between quantum physics, computer science, and statistical analysis. This has led to an increase in educational programs and training initiatives focused on quantum computing and its applications in data science and analytics.

Current State and Challenges in Quantum-Enhanced Statistics

Quantum computing has made significant strides in recent years, particularly in its application to statistical analysis methods. The current state of quantum-enhanced statistics is characterized by a blend of promising advancements and persistent challenges. Quantum algorithms have demonstrated potential in accelerating certain statistical computations: quadratic speedups are established for tasks such as Monte Carlo estimation, and exponential speedups have been proposed for some linear-algebra routines under strong assumptions about how data is accessed.

One of the key areas where quantum computing shows promise is in the realm of Monte Carlo simulations. Quantum algorithms for Monte Carlo estimation, typically built on amplitude estimation, can in principle outperform their classical counterparts. Classical sampling needs on the order of 1/ε² samples to reach an estimation error ε, whereas amplitude estimation needs on the order of 1/ε queries, a quadratic reduction in the work required for convergence.
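
To make the convergence argument concrete, the following minimal sketch compares the number of classical samples with an idealized count of quantum queries for a given target error. The function names are hypothetical, and the quantum figure assumes an idealized, noise-free amplitude estimation routine with constants and logarithmic factors ignored; it does not simulate a quantum device.

```python
import math
import random


def classical_mc_mean(sample_fn, n_samples, seed=0):
    """Estimate an expectation by averaging n_samples independent draws."""
    rng = random.Random(seed)
    return sum(sample_fn(rng) for _ in range(n_samples)) / n_samples


def classical_samples_needed(epsilon, sigma=1.0):
    """Samples needed for a standard error of epsilon: about (sigma / epsilon)^2."""
    return math.ceil((sigma / epsilon) ** 2)


def qae_queries_needed(epsilon, sigma=1.0):
    """Idealized query count for amplitude estimation: about sigma / epsilon.

    Assumption: constants, log factors, and hardware noise are ignored.
    """
    return math.ceil(sigma / epsilon)


# Classical estimate of P(U < 0.3) for a uniform draw U on [0, 1).
estimate = classical_mc_mean(
    lambda rng: 1.0 if rng.random() < 0.3 else 0.0, 10_000
)
print(f"classical estimate: {estimate:.3f}")

# How the required work grows as the target error shrinks.
for eps in (0.5, 0.25, 0.125):
    print(eps, classical_samples_needed(eps), qae_queries_needed(eps))
```

Halving the target error quadruples the classical sample count but only doubles the idealized query count, which is the sense in which the speedup is quadratic rather than exponential.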

Another area of progress is in quantum-enhanced machine learning. Quantum support vector machines and quantum principal component analysis have been proposed as ways to process high-dimensional data more efficiently than classical algorithms, although the advantage depends on efficient data loading and has so far been shown mainly in theory or on small problem instances. If these conditions can be met, the techniques could change data analysis in fields such as finance, genomics, and climate modeling.

Despite these advancements, several challenges persist in the field of quantum-enhanced statistics. One major hurdle is the limited qubit coherence time in current quantum hardware. This constraint restricts the complexity and scale of statistical problems that can be tackled effectively using quantum systems. Researchers are actively working on improving qubit stability and error correction techniques to address this issue.

Another significant challenge is the development of quantum algorithms that can consistently outperform classical methods across a wide range of statistical problems. While quantum algorithms have shown superiority in specific scenarios, generalizing these advantages to broader statistical applications remains an ongoing effort. This includes developing quantum versions of widely used statistical tests and estimation methods.

The integration of quantum and classical computing resources also presents a challenge. Hybrid quantum-classical algorithms are being explored as a potential solution, but optimizing the interplay between quantum and classical components requires further research and development.
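
The interplay between the two components can be sketched as a variational loop: a classical optimizer repeatedly asks a quantum device for an expectation value and updates a circuit parameter. In this minimal sketch the device call is replaced by a closed-form stand-in (the expectation of Z after an RY(θ) rotation on |0⟩, which equals cos θ), and the gradient uses the parameter-shift rule. All function names, the learning rate, and the step count are illustrative assumptions.

```python
import math


def expectation_z(theta):
    """Stand-in for a quantum device call.

    For a single qubit prepared as RY(theta)|0>, the expectation of Z is
    cos(theta). On real hardware this value would be estimated from shots.
    """
    return math.cos(theta)


def parameter_shift_gradient(f, theta):
    """Gradient from two shifted evaluations (parameter-shift rule)."""
    return 0.5 * (f(theta + math.pi / 2) - f(theta - math.pi / 2))


def minimize(f, theta=0.5, learning_rate=0.4, steps=100):
    """Classical gradient descent driving the (simulated) quantum evaluation."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(f, theta)
    return theta, f(theta)


theta_opt, value_opt = minimize(expectation_z)
print(f"theta = {theta_opt:.6f}, <Z> = {value_opt:.6f}")
```

Each optimization step costs two device evaluations per parameter, so the classical-quantum round trip, not the arithmetic, tends to dominate the runtime of such loops.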

Data input and output remain bottlenecks in quantum statistical analysis. Efficiently encoding classical data into quantum states and extracting meaningful results from quantum measurements are areas that require continued innovation. This is particularly crucial for handling large datasets common in modern statistical applications.
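
One commonly discussed encoding is amplitude encoding, in which a length-N data vector becomes the amplitudes of a state on about log2(N) qubits. The sketch below only computes the target amplitudes classically (padding and normalization); the function name is hypothetical, and the circuit that would actually prepare the state, which is the expensive part in general, is not shown.

```python
import math


def amplitude_encode(values):
    """Return (amplitudes, n_qubits) for amplitude encoding of a real vector.

    The vector is zero-padded to the next power of two and scaled to unit
    L2 norm, so the squared amplitudes sum to 1.
    """
    if not values or all(v == 0 for v in values):
        raise ValueError("need at least one non-zero value")
    # Smallest n with 2**n >= len(values), with at least one qubit.
    n_qubits = max(1, (len(values) - 1).bit_length())
    padded = list(values) + [0.0] * (2 ** n_qubits - len(values))
    norm = math.sqrt(sum(v * v for v in padded))
    return [v / norm for v in padded], n_qubits


amplitudes, n_qubits = amplitude_encode([3.0, 4.0, 0.0])
print(n_qubits, amplitudes)
```

The qubit count grows only logarithmically with the data size (about a million values fit in 20 qubits), but preparing an arbitrary state of this kind generally takes a number of operations that grows with N, which is why data loading is treated as a bottleneck.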

Lastly, the interpretability of quantum-enhanced statistical results poses a challenge. As quantum algorithms become more complex, ensuring that their outputs can be understood and validated by statisticians and domain experts is essential for widespread adoption in practical applications.

Existing Quantum Algorithms for Statistical Analysis

01 Quantum-enhanced statistical analysis methods

These methods leverage quantum computing to enhance traditional statistical analysis techniques. They can process large datasets more efficiently, potentially offering improved accuracy and speed for complex statistical calculations. This approach combines quantum algorithms with classical statistical methods to tackle problems that are computationally intensive for classical computers.

- Quantum-enhanced statistical analysis methods: Quantum computing techniques are applied to enhance traditional statistical analysis methods, offering improved accuracy and efficiency in data processing. These methods leverage quantum superposition and entanglement to perform complex calculations faster than classical computers, enabling more sophisticated analysis of large datasets.

- Quantum machine learning for statistical analysis: Integration of quantum computing principles with machine learning algorithms to develop advanced statistical analysis tools. These hybrid approaches combine the strengths of quantum systems with classical machine learning techniques to tackle complex statistical problems and pattern recognition tasks more effectively.

- Quantum-inspired classical algorithms for statistical analysis: Development of classical algorithms inspired by quantum computing concepts to improve statistical analysis methods. These algorithms mimic certain aspects of quantum computation on classical hardware, providing enhanced performance for specific statistical tasks without requiring actual quantum hardware.

- Error correction and noise mitigation in quantum statistical analysis: Techniques for addressing errors and noise in quantum systems when performing statistical analysis. These methods aim to improve the reliability and accuracy of quantum-based statistical computations by minimizing the impact of quantum decoherence and other sources of errors inherent in quantum systems.

- Quantum-assisted data sampling and distribution analysis: Utilization of quantum computing capabilities to enhance data sampling techniques and analyze complex probability distributions in statistical analysis. These methods leverage quantum superposition to explore multiple data points simultaneously, potentially leading to more efficient and accurate statistical inferences.

02 Quantum machine learning for data analysis

Quantum machine learning algorithms are applied to statistical analysis tasks, potentially offering advantages in pattern recognition, clustering, and classification. These methods can handle high-dimensional data more effectively than classical approaches, making them suitable for complex statistical problems in fields like finance, bioinformatics, and materials science.

03 Quantum-inspired classical algorithms for statistical analysis

These algorithms draw inspiration from quantum computing principles but are implemented on classical hardware. They aim to bridge the gap between quantum and classical computing, offering improved performance for certain statistical analysis tasks without requiring quantum hardware. This approach can be particularly useful in the near term as quantum hardware continues to develop.

04 Error mitigation techniques for quantum statistical analysis

As quantum computers are prone to errors due to noise and decoherence, specialized error mitigation techniques are developed for statistical analysis applications. These methods aim to improve the reliability and accuracy of quantum statistical calculations, making them more practical for real-world use cases.

05 Hybrid quantum-classical approaches for statistical analysis

These methods combine quantum and classical computing resources to optimize statistical analysis workflows. By leveraging the strengths of both paradigms, hybrid approaches can tackle complex statistical problems more efficiently than purely classical or quantum methods alone. This can include using quantum algorithms for specific computationally intensive steps within a larger classical analysis pipeline.
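
As one illustration of the error mitigation category (item 04), zero-noise extrapolation measures the same expectation value at several deliberately amplified noise levels and extrapolates back to the zero-noise limit. The source does not name a specific technique, so this choice is an assumption, as are the function name and the example numbers, which are synthetic values rather than measurements.

```python
def zero_noise_extrapolate(scale_factors, noisy_values):
    """Richardson-style extrapolation to zero noise.

    Fits the unique polynomial through the (scale, value) points using
    Lagrange weights and evaluates it at scale = 0.
    """
    if len(scale_factors) != len(noisy_values):
        raise ValueError("need one value per scale factor")
    if len(set(scale_factors)) != len(scale_factors):
        raise ValueError("scale factors must be distinct")
    estimate = 0.0
    for i, (xi, yi) in enumerate(zip(scale_factors, noisy_values)):
        weight = 1.0
        for j, xj in enumerate(scale_factors):
            if j != i:
                weight *= (0.0 - xj) / (xi - xj)
        estimate += weight * yi
    return estimate


# Synthetic example: the signal decays linearly with the noise scale.
scales = [1.0, 2.0, 3.0]
values = [0.9, 0.8, 0.7]
mitigated = zero_noise_extrapolate(scales, values)
print(f"mitigated estimate: {mitigated:.3f}")
```

The extrapolation is only as good as the assumed noise model, and it amplifies statistical fluctuations in the inputs, so in practice it trades bias for variance.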

Key Players in Quantum Computing and Statistical Software

The quantum computing landscape for improving statistical analysis methods is in an early developmental stage, with significant potential for growth. The market size is expanding rapidly, driven by increasing demand for advanced data analysis tools across various industries. While the technology is still maturing, major players like Google, IBM, and Intel are making substantial investments in research and development. Emerging companies such as Zapata Computing and 1QB Information Technologies are also contributing to the field's advancement. The competitive landscape is characterized by a mix of established tech giants and specialized quantum computing startups, each working to develop more powerful and efficient quantum algorithms for statistical analysis. As the technology progresses, we can expect to see increased collaboration between academia and industry to overcome current limitations and unlock the full potential of quantum computing in statistical analysis.

Google LLC

Technical Solution: Google's approach to quantum computing for improving statistical analysis methods focuses on developing quantum algorithms that can enhance the speed and accuracy of complex statistical computations. In 2019, Google reported that its Sycamore quantum processor completed a specific random-circuit sampling task in about 200 seconds, a task the company estimated would take a classical supercomputer 10,000 years[1]; that classical estimate has since been disputed. For statistical analysis, Google is exploring quantum machine learning techniques, such as quantum principal component analysis and quantum support vector machines, which are intended to process high-dimensional data more efficiently than classical methods[2]. They are also working on quantum optimization algorithms that could be applied to areas like portfolio optimization and risk analysis in finance[3].

Strengths: Advanced quantum hardware, vast computational resources, and a strong research team. Weaknesses: Quantum computers are still prone to errors and require extensive error correction, limiting their practical applications in the near term.

Intel Corp.

Technical Solution: Intel's approach to quantum computing for statistical analysis focuses on developing scalable quantum systems using their spin qubit technology. They are working on integrating quantum processors with classical computing infrastructure to create hybrid systems that can enhance statistical analysis methods[13]. Intel is exploring quantum algorithms for machine learning tasks, such as quantum support vector machines and quantum principal component analysis, which could significantly improve data classification and dimensionality reduction in statistical analysis[14]. They are also developing cryogenic control chips to improve the scalability and reliability of quantum systems, which is crucial for complex statistical computations[15].

Strengths: Expertise in semiconductor manufacturing, potential for scalable qubit production, and integration with classical computing systems. Weaknesses: Quantum hardware is still in early stages of development compared to some competitors.

Breakthrough Quantum Techniques for Statistical Computations

Methods and structure for improved interactive statistical analysis

Patent (Inactive): US7373274B2

Innovation

- A computer-based user interface system that automates statistical analysis by receiving user input, retrieving and reformatting data, and generating results with minimal user interaction, providing a flexible and easy-to-use platform for performing various statistical analyses.

Quantum statistic machine

Patent (Pending): US20250139489A1

Innovation

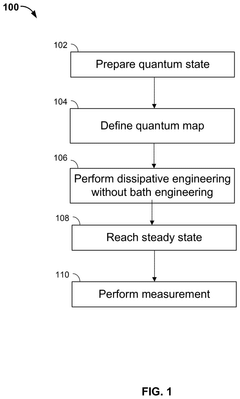

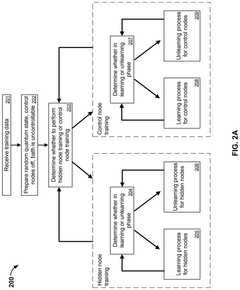

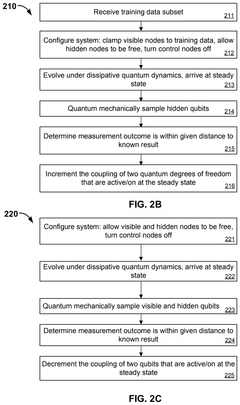

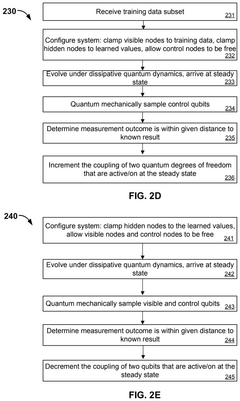

- The development of a Quantum Statistic Machine (QSM) that exploits dissipative quantum dynamical maps to solve hard optimization and inference tasks without requiring extreme quantum error correction, using a programmable non-equilibrium ergodic open quantum Markov chain with a unique attracting steady state.

Quantum Hardware Requirements for Statistical Applications

Quantum computing hardware for statistical applications requires specialized components and architectures designed to handle complex probabilistic calculations and large datasets. The primary hardware requirements include quantum processors with sufficient qubit count and coherence times to perform meaningful statistical operations. Publicly announced gate-based processors currently range from tens of qubits to just over a thousand physical qubits, but statistical applications may require thousands or even millions of qubits for practical use.

Quantum error correction is crucial for maintaining the stability and accuracy of statistical computations. This necessitates the implementation of fault-tolerant quantum circuits and error-correcting codes, which in turn demand additional physical qubits and sophisticated control systems. The ratio of physical to logical qubits can be as high as 1000:1, highlighting the substantial hardware overhead required for reliable quantum statistical analysis.
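
The overhead figure can be reproduced with a short calculation. The sketch below assumes a rotated surface code, in which one logical qubit at code distance d uses d² data qubits plus d² - 1 measurement qubits; the source does not specify a code, so this choice and the function names are assumptions, and extra costs such as routing space and magic-state distillation are ignored.

```python
def physical_qubits_per_logical(distance):
    """Physical qubits for one rotated-surface-code logical qubit.

    d*d data qubits plus (d*d - 1) measurement qubits.
    """
    if distance < 3 or distance % 2 == 0:
        raise ValueError("distance should be an odd integer >= 3")
    return 2 * distance ** 2 - 1


def total_physical_qubits(n_logical, distance):
    """Total physical qubits, ignoring routing and distillation overhead."""
    return n_logical * physical_qubits_per_logical(distance)


for d in (3, 11, 23):
    print(d, physical_qubits_per_logical(d))

print(total_physical_qubits(100, 23))
```

Under these assumptions a ratio near 1000:1 corresponds to a code distance of about 23, and a modest 100-logical-qubit computation would already need on the order of 100,000 physical qubits.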

Quantum memory systems are essential for storing and retrieving quantum states during statistical computations. These systems must maintain quantum coherence for the duration of a computation. Reported coherence times vary widely by platform, from microseconds to milliseconds for superconducting circuits and up to seconds or longer for trapped ions. The development of efficient quantum memory architectures is ongoing.

Quantum-classical hybrid systems are vital for interfacing quantum processors with classical computers and data sources. These systems require high-speed, low-latency communication channels and specialized control hardware to manage the flow of information between quantum and classical domains. Additionally, cryogenic systems are necessary to maintain the ultra-low temperatures required for many quantum computing platforms, typically operating at millikelvin temperatures.

Quantum measurement devices are critical for extracting results from quantum statistical computations. These devices must be highly sensitive and capable of performing non-destructive measurements on quantum states. Advanced readout technologies, such as superconducting quantum interference devices (SQUIDs) or single-photon detectors, are commonly employed for this purpose.

As quantum hardware continues to evolve, scalability remains a significant challenge. Developing modular and interconnected quantum processors will be crucial for expanding the capabilities of quantum statistical analysis. This may involve the integration of multiple quantum processing units and the development of quantum networking technologies to enable distributed quantum computing for large-scale statistical applications.

Ethical Implications of Quantum-Enhanced Statistical Analysis

The integration of quantum computing into statistical analysis methods raises significant ethical considerations that demand careful examination. As quantum-enhanced statistical techniques become more powerful and prevalent, they have the potential to revolutionize data analysis across various fields, including healthcare, finance, and social sciences. However, this advancement also brings forth ethical challenges that must be addressed proactively.

One primary concern is the potential for quantum-enhanced statistical analysis to exacerbate existing biases and inequalities. The increased computational power may lead to more sophisticated data mining techniques, potentially uncovering patterns that could be used to discriminate against certain groups or individuals. This raises questions about fairness, equity, and the responsible use of advanced analytical capabilities.

Privacy and data protection issues also come to the forefront with quantum-enhanced statistical analysis. The ability to process and analyze vast amounts of data more efficiently may lead to increased risks of re-identification of anonymized data sets. This could potentially compromise individual privacy and confidentiality, especially in sensitive areas such as medical research or financial transactions.

The interpretability and transparency of quantum-enhanced statistical models present another ethical challenge. As these models become more complex and rely on quantum phenomena, it may become increasingly difficult for non-experts to understand and scrutinize the decision-making processes. This lack of transparency could lead to issues of accountability and trust in the results produced by these advanced analytical methods.

Furthermore, the potential for quantum-enhanced statistical analysis to be used in predictive policing, surveillance, or other applications that may infringe on civil liberties must be carefully considered. The increased accuracy and predictive power of these techniques could be misused to target specific individuals or groups, raising concerns about privacy, autonomy, and democratic values.

The ethical implications also extend to the realm of scientific research and publication. As quantum-enhanced statistical methods become more prevalent, there may be a widening gap between researchers who have access to quantum computing resources and those who do not. This could lead to disparities in research capabilities and potentially skew the scientific landscape, raising questions about equity in academic and research environments.

Addressing these ethical concerns requires a multifaceted approach involving policymakers, researchers, ethicists, and industry stakeholders. Developing robust ethical guidelines, promoting transparency in the development and application of quantum-enhanced statistical methods, and ensuring equitable access to these technologies are crucial steps in navigating the ethical landscape of this emerging field.
