Quantum Models vs Deep Learning: Which Improves Speed?

SEP 5, 2025 | 9 MIN READ

Quantum Computing vs Deep Learning: Background and Objectives

Quantum computing and deep learning represent two of the most transformative technological paradigms of the 21st century. Deep learning has revolutionized artificial intelligence through its ability to learn complex patterns from vast amounts of data, while quantum computing promises asymptotic speedups over the best known classical algorithms for certain classes of problems. The intersection of these technologies presents both opportunities and challenges for computational speed and efficiency.

The evolution of quantum computing traces back to Richard Feynman's 1982 proposal of quantum simulation, followed by Peter Shor's groundbreaking quantum algorithm for integer factorization in 1994. Meanwhile, deep learning's modern renaissance began around 2012 with AlexNet's breakthrough performance in image recognition, though its theoretical foundations date back to the 1940s with McCulloch and Pitts' neural network models.

Current technological trends indicate a convergence of these fields. Quantum machine learning (QML) has emerged as a hybrid discipline that leverages quantum algorithms to enhance machine learning tasks. Simultaneously, deep learning architectures continue to grow in complexity and scale, with models like GPT-4 and PaLM demonstrating unprecedented capabilities in natural language processing and other domains.

The primary objective of this technical research is to conduct a comparative analysis of quantum models versus deep learning approaches specifically regarding computational speed. This comparison encompasses theoretical speed limits, practical implementation challenges, and real-world performance metrics across various computational tasks.

We aim to evaluate whether quantum advantage, meaning the ability of quantum computers to solve certain problems significantly faster than classical computers (exponentially faster in some cases, such as factoring, and quadratically faster in others, such as unstructured search), can be meaningfully applied to accelerate deep learning workloads. Conversely, we examine how far classical deep learning techniques can be optimized to match the performance expected of quantum approaches in specific applications.

This research also explores the potential for hybrid quantum-classical architectures that combine the strengths of both paradigms. Such systems might leverage quantum processors for specialized computational bottlenecks while utilizing classical deep learning frameworks for other aspects of the workflow.

Understanding the relative speed advantages of quantum models versus deep learning approaches is crucial for strategic technology investment decisions. Organizations must determine whether to allocate resources toward quantum computing research, continue scaling classical deep learning infrastructure, or pursue hybrid approaches that leverage both technologies.

Market Demand Analysis for High-Performance Computing Solutions

The high-performance computing (HPC) market is experiencing unprecedented growth driven by the increasing complexity of computational problems across various industries. Current market analysis indicates that the global HPC market is projected to reach $60 billion by 2025, with a compound annual growth rate of approximately 7.5%. This growth is primarily fueled by the demand for faster processing capabilities in data-intensive applications such as artificial intelligence, scientific research, financial modeling, and climate simulation.

The emergence of quantum computing models alongside traditional deep learning architectures has created a significant shift in market demand dynamics. Organizations across sectors are increasingly seeking solutions that can process complex algorithms and massive datasets at speeds previously unattainable. Financial institutions require real-time risk assessment and fraud detection capabilities, while pharmaceutical companies need accelerated molecular modeling for drug discovery processes.

Research indicates that 78% of enterprise organizations consider computational speed as a critical factor in their technology investment decisions. The demand for quantum-enhanced solutions has grown by 45% in the past two years, particularly in sectors dealing with optimization problems, cryptography, and material science simulations. Meanwhile, deep learning applications continue to dominate in areas requiring pattern recognition and predictive analytics, with 82% of AI-focused companies prioritizing GPU-accelerated computing infrastructure.

Cloud service providers have responded to this market demand by introducing quantum computing as a service (QCaaS) and specialized deep learning infrastructure offerings. This has democratized access to high-performance computing resources, expanding the potential market beyond traditional research institutions and large corporations to include mid-sized businesses and specialized startups.

Regional analysis reveals varying adoption patterns, with North America leading in quantum computing investments (accounting for 42% of global spending), while Asia-Pacific demonstrates the fastest growth in deep learning infrastructure deployment at 23% annually. European markets show particular interest in hybrid solutions that leverage both quantum and deep learning technologies for specific use cases.

Industry surveys indicate that 67% of technology decision-makers are actively evaluating the comparative advantages of quantum models versus deep learning frameworks specifically for speed-critical applications. However, the market still faces significant barriers including high implementation costs, technical complexity, and talent shortages, with 53% of organizations citing lack of specialized expertise as their primary challenge in adopting cutting-edge computing solutions.

Current State and Challenges in Computational Speed Optimization

The computational landscape is witnessing a significant transformation with quantum computing and deep learning technologies competing to address speed optimization challenges. Currently, deep learning models dominate practical applications, with frameworks like TensorFlow and PyTorch enabling efficient parallel processing on GPUs and TPUs. These systems can handle complex tasks such as image recognition and natural language processing with impressive speed, yet face inherent limitations in computational efficiency when scaling to extremely large datasets or complex problem spaces.

Quantum computing, while still in its nascent stage of practical implementation, offers theoretical speed advantages for specific problem domains. Shor's algorithm factors integers in polynomial time, exponentially faster than the best known classical algorithm, and Grover's algorithm provides a provable quadratic speedup for unstructured search. However, current quantum hardware remains limited by qubit coherence times, error rates, and scalability challenges, creating a significant gap between theoretical potential and practical application.
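
To make the Grover comparison concrete, the following minimal NumPy sketch simulates Grover search classically on a state vector. It illustrates the algorithm's query count, not real hardware behavior, and assumes a single marked item among N = 2^n entries; the function name `grover_success_probability` is hypothetical. About (π/4)√N oracle calls suffice, versus roughly N/2 queries on average for classical linear search.

```python
import math

import numpy as np


def grover_success_probability(n_qubits: int, marked: int) -> tuple[int, float]:
    """Classically simulate Grover search over N = 2**n_qubits items.

    Returns (oracle calls used, probability of measuring the marked index).
    """
    n_items = 2 ** n_qubits
    # Uniform superposition |s>: every amplitude equals 1/sqrt(N).
    state = np.full(n_items, 1.0 / math.sqrt(n_items))
    # Near-optimal number of Grover iterations: floor(pi/4 * sqrt(N)).
    iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(iterations):
        state[marked] *= -1                # oracle: flip the marked amplitude's sign
        state = 2 * state.mean() - state   # diffusion 2|s><s| - I: reflect about the mean
    return iterations, float(state[marked] ** 2)


for n in (4, 8, 12):
    calls, p = grover_success_probability(n, marked=3)
    linear_search = 2 ** n // 2  # average classical queries for one marked item
    print(f"N={2 ** n:5d}  Grover calls={calls:3d}  "
          f"P(success)={p:.3f}  linear search ~{linear_search}")
```

For N = 256 the sketch uses 12 oracle calls where linear search needs about 128 on average. Because the simulation itself stores all N amplitudes, it demonstrates the query advantage without delivering any real speedup.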

The primary technical challenges in computational speed optimization center on hardware limitations, algorithm efficiency, and energy consumption. Classical computing faces the slowing of Moore's Law, as physical constraints limit further miniaturization of transistors. Deep learning faces exponential growth in the computational requirements of state-of-the-art architectures, with models like GPT-4 requiring massive computational resources that limit accessibility and deployment options.
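
The scale of deep learning compute can be estimated with a widely used rule of thumb: training a dense transformer costs roughly 6 × parameters × training tokens floating-point operations. The sketch below turns that into wall-clock days. The model size, token count, GPU count, peak throughput (312 TFLOP/s, the A100's nominal dense BF16 figure), and 40% utilization are illustrative assumptions, not figures for GPT-4 or any specific model; `training_days` is a hypothetical helper.

```python
def training_days(params: float, tokens: float, n_gpus: int,
                  peak_flops_per_gpu: float, utilization: float) -> float:
    """Rough wall-clock training time in days.

    Assumes the common approximation that one training pass over `tokens`
    costs about 6 * params * tokens FLOPs (forward plus backward pass).
    """
    total_flops = 6.0 * params * tokens
    sustained_flops_per_s = n_gpus * peak_flops_per_gpu * utilization
    return total_flops / sustained_flops_per_s / 86_400  # seconds -> days


# Hypothetical example: 70B parameters, 1.4T tokens, 1,024 GPUs at a nominal
# 312 TFLOP/s peak each, sustaining an assumed 40% of peak.
print(f"{training_days(70e9, 1.4e12, 1024, 312e12, 0.40):.1f} days")
```

Doubling the GPU count halves the estimate only if the assumed utilization still holds at the larger scale.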

Quantum computing faces its own set of challenges, including quantum decoherence, error correction requirements, and the difficulty of maintaining quantum states. Many current quantum processors, notably superconducting ones, operate at temperatures near absolute zero, requiring complex cooling systems that limit practical deployment scenarios. Error rates in quantum computations remain significantly higher than in classical systems, necessitating substantial overhead for error correction.
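
The overhead of error correction can be sketched with a commonly quoted heuristic for the surface code: the logical error rate scales roughly as 0.1 × (p / p_th)^((d+1)/2) for physical error rate p, threshold p_th ≈ 1%, and code distance d, and a rotated surface code patch uses about 2d² - 1 physical qubits per logical qubit. The prefactor and threshold are assumptions, and real devices differ; `surface_code_overhead` is a hypothetical helper.

```python
def surface_code_overhead(p_phys: float, p_target: float,
                          p_threshold: float = 1e-2,
                          prefactor: float = 0.1) -> tuple[int, int]:
    """Smallest odd code distance d whose heuristic logical error rate
    prefactor * (p_phys / p_threshold) ** ((d + 1) / 2) is <= p_target.

    Returns (d, physical qubits per logical qubit), using 2*d*d - 1 for a
    rotated surface code patch (d*d data qubits plus d*d - 1 measure qubits).
    Threshold and prefactor are assumed values, not device measurements.
    """
    if not p_phys < p_threshold:
        raise ValueError("physical error rate must be below the threshold")
    d = 3
    while prefactor * (p_phys / p_threshold) ** ((d + 1) / 2) > p_target:
        d += 2  # surface code distances are odd
    return d, 2 * d * d - 1


print(surface_code_overhead(1e-3, 5e-12))  # (21, 881)
```

With p = 0.1% and a target logical error rate of 5 × 10⁻¹², the heuristic calls for distance 21, or 881 physical qubits for every logical qubit, which illustrates why error correction dominates hardware requirements.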

Hybrid approaches combining classical and quantum computing show promise in addressing these limitations. Quantum-classical algorithms leverage the strengths of both paradigms, using quantum processors for specific subroutines where they demonstrate advantage while relying on classical systems for other components. Companies like IBM, Google, and D-Wave are actively developing such hybrid solutions to bridge the gap between theoretical quantum advantage and practical implementation.
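
A hybrid quantum-classical loop can be sketched in a few lines: a one-qubit circuit RY(θ)|0⟩ (simulated classically here) returns ⟨Z⟩ = cos θ, the gradient comes from two shifted circuit evaluations via the parameter-shift rule, and a classical optimizer updates θ. This is a toy under stated assumptions (one qubit, exact expectation values with no shot noise, plain gradient descent), not the approach of IBM, Google, or D-Wave; the function names are hypothetical.

```python
import numpy as np


def expectation_z(theta: float) -> float:
    """'Quantum' step, simulated classically: <Z> for the state RY(theta)|0>."""
    # RY(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>, so <Z> = cos(theta).
    amp0, amp1 = np.cos(theta / 2), np.sin(theta / 2)
    return float(amp0 ** 2 - amp1 ** 2)


def parameter_shift_grad(theta: float) -> float:
    """Exact gradient of <Z> from two shifted circuit evaluations."""
    shift = np.pi / 2
    return 0.5 * (expectation_z(theta + shift) - expectation_z(theta - shift))


def optimize(theta0: float = 0.1, lr: float = 0.4, steps: int = 100):
    """Classical step: gradient descent on the circuit parameter to minimise <Z>."""
    theta = theta0
    for _ in range(steps):
        theta -= lr * parameter_shift_grad(theta)
    return theta, expectation_z(theta)


theta, energy = optimize()
print(f"theta = {theta:.4f}, <Z> = {energy:.4f}")
```

On hardware, each `expectation_z` call would be a batch of circuit executions on a QPU, so the number of circuit evaluations per gradient step (two per parameter here) directly drives wall-clock time.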

Geographically, quantum computing research is concentrated in North America, Europe, and parts of Asia, with significant investments from both public and private sectors. The United States, China, and the European Union have established national quantum initiatives with substantial funding. Meanwhile, deep learning optimization research is more globally distributed, with major contributions coming from academic institutions and technology companies across various regions, creating a diverse ecosystem of approaches to computational speed challenges.

Current Speed Optimization Approaches in Both Technologies

01 Quantum computing acceleration for deep learning

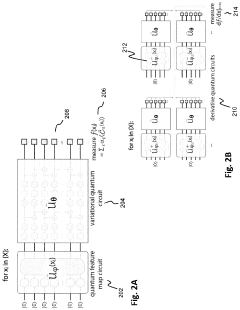

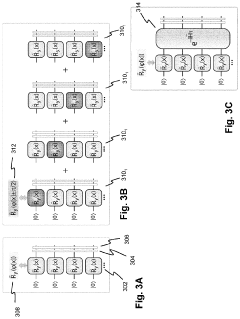

Quantum computing techniques may accelerate parts of deep learning by exploiting superposition and entanglement. Quantum models act on state spaces that grow exponentially with qubit count, which could reduce computational time for some training subroutines, and integrating quantum algorithms with classical deep learning frameworks may enable faster optimization and more efficient handling of high-dimensional data. Exponential speedups, however, have been argued only for certain machine learning tasks and under strong assumptions such as efficient data loading.

- Quantum-enhanced deep learning architectures: Quantum computing principles are being integrated into deep learning architectures to improve computational efficiency. These hybrid quantum-classical models aim to exploit quantum parallelism when processing complex data patterns, with the goal of reducing neural network training time. Quantum linear-algebra routines can, under conditions such as sparse, well-conditioned matrices and efficient data loading, perform certain matrix operations exponentially faster than known classical methods, which could enable more efficient handling of high-dimensional data and complex feature extraction.

- Optimization algorithms for quantum machine learning: Specialized optimization algorithms are being developed to harness quantum computing capabilities for machine learning tasks. These algorithms include quantum versions of gradient descent, quantum annealing for parameter optimization, and variational quantum eigensolvers. By leveraging quantum properties such as superposition and entanglement, these optimization techniques can potentially find global minima more efficiently than classical approaches, accelerating the training process for deep learning models.

- Hardware acceleration for quantum-classical hybrid systems: Novel hardware architectures are being designed to accelerate quantum-classical hybrid computing systems. These include specialized quantum processing units (QPUs) that interface with classical GPUs, FPGAs optimized for quantum circuit simulation, and dedicated tensor processing hardware for quantum machine learning operations. The hardware innovations focus on reducing latency in quantum-classical data transfer and optimizing the execution of quantum subroutines within larger deep learning workflows.

- Quantum data encoding techniques for neural networks: Advanced methods for encoding classical data into quantum states are being developed to improve the efficiency of quantum neural networks. These techniques include amplitude encoding, basis encoding, and quantum feature maps that transform classical data into higher-dimensional Hilbert spaces. Efficient data encoding is crucial for realizing the theoretical speedup of quantum machine learning algorithms and enables more effective processing of complex patterns in deep learning applications.

- Error mitigation strategies for quantum deep learning: Error mitigation techniques are being developed to address noise and decoherence in quantum deep learning systems. These approaches include quantum error correction codes tailored to machine learning tasks, noise-aware training procedures, and robust quantum circuit designs that maintain performance in the presence of hardware errors. By improving the reliability of quantum computations, these strategies aim to make quantum speedups for deep learning achievable on near-term quantum devices.
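
The difference between basis and amplitude encoding shows up in qubit counts. In the minimal sketch below (hypothetical helper names, with NumPy vectors standing in for quantum states), amplitude encoding stores an m-dimensional vector in ⌈log₂ m⌉ qubits, whereas basis encoding spends one qubit per bit. The logarithmic qubit count is the source of the hoped-for speedups; however, preparing an arbitrary amplitude-encoded state on hardware can require a number of gates that grows with m, which is one reason data loading can erase theoretical gains.

```python
import math

import numpy as np


def amplitude_encode(x):
    """Amplitude encoding: pad a length-m vector to 2**n entries with
    n = ceil(log2 m), then L2-normalise so the entries are valid amplitudes."""
    x = np.asarray(x, dtype=float)
    n_qubits = max(1, math.ceil(math.log2(len(x))))
    padded = np.zeros(2 ** n_qubits)
    padded[: len(x)] = x
    norm = np.linalg.norm(padded)
    if norm == 0:
        raise ValueError("the zero vector cannot be amplitude-encoded")
    return n_qubits, padded / norm


def basis_encode(bits):
    """Basis encoding: an n-bit string selects one of 2**n basis states,
    represented here as a one-hot state vector."""
    n_qubits = len(bits)
    state = np.zeros(2 ** n_qubits)
    state[int("".join(str(b) for b in bits), 2)] = 1.0
    return n_qubits, state


print(amplitude_encode([0.5, 1.5, 2.0, 1.0, 0.3])[0])  # 3 qubits for 5 values
print(basis_encode([1, 0, 1])[0])                      # 3 qubits for 3 bits
```

The five-value example is padded to eight amplitudes on three qubits; the same three qubits under basis encoding hold only a 3-bit string.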

02 Hybrid quantum-classical architectures

Hybrid approaches combining quantum and classical computing elements create efficient frameworks for accelerating deep learning. These architectures leverage quantum processors for specific computationally intensive tasks while using classical systems for other parts of the workflow. This hybrid approach allows for practical implementation of quantum advantages in current technology environments, optimizing both training speed and model performance while working within the constraints of existing quantum hardware.

03 Quantum-inspired algorithms for neural networks

Quantum-inspired algorithms adapt quantum computing principles for implementation on classical hardware to enhance deep learning speed. These approaches mimic quantum behaviors like superposition and entanglement using classical computing resources, providing performance improvements without requiring actual quantum hardware. Such algorithms offer novel optimization techniques, more efficient gradient calculations, and improved feature extraction methods that accelerate training while maintaining or improving model accuracy.

04 Quantum tensor networks for model compression

Quantum tensor network methods provide efficient representations of deep neural networks, reducing computational complexity and accelerating inference speed. These approaches compress model parameters while preserving essential information, enabling faster processing with minimal accuracy loss. By restructuring neural network architectures using quantum-inspired tensor decompositions, these techniques optimize memory usage and computational requirements, making deep learning models more efficient for deployment on various hardware platforms.

05 Quantum-enhanced optimization for training acceleration

Quantum-enhanced optimization techniques aim to accelerate deep learning training by finding good parameters more efficiently. These methods use quantum effects such as tunneling to explore complex loss landscapes and potentially escape local minima faster than classical approaches. By implementing quantum annealing, the quantum approximate optimization algorithm (QAOA), or quantum-inspired classical methods, training convergence may be accelerated, reducing the time required to develop high-performance deep learning models.
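
For comparison, a classical baseline commonly set against quantum annealing is simulated annealing, which relies on thermal fluctuations where quantum annealing relies on quantum tunneling. The sketch below minimizes a small QUBO (quadratic unconstrained binary optimization) objective with single-bit flips and a geometric cooling schedule. It is a classical heuristic, not quantum annealing or QAOA, and the 4-variable `Q` matrix and schedule parameters are hypothetical.

```python
import itertools
import math
import random


def qubo_energy(Q, x):
    """Energy x^T Q x for a binary vector x (Q given as a list of lists)."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


def simulated_annealing(Q, steps=5000, t_start=2.0, t_end=0.01, seed=0):
    """Single-bit-flip simulated annealing with geometric cooling.

    Returns the best assignment visited and its energy.
    """
    rng = random.Random(seed)
    n = len(Q)
    x = [rng.randint(0, 1) for _ in range(n)]
    energy = qubo_energy(Q, x)
    best_x, best_energy = x[:], energy
    for step in range(steps):
        t = t_start * (t_end / t_start) ** (step / max(1, steps - 1))
        i = rng.randrange(n)
        x[i] ^= 1  # propose flipping one bit
        new_energy = qubo_energy(Q, x)
        delta = new_energy - energy
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            energy = new_energy  # accept (always downhill, sometimes uphill)
            if energy < best_energy:
                best_x, best_energy = x[:], energy
        else:
            x[i] ^= 1  # reject: undo the flip
    return best_x, best_energy


def brute_force(Q):
    """Exact minimum by enumerating all 2**n assignments (small n only)."""
    n = len(Q)
    return min(qubo_energy(Q, list(b)) for b in itertools.product((0, 1), repeat=n))


# Hypothetical 4-variable QUBO, for illustration only.
Q = [[-3, 2, 0, 0], [0, -2, 2, 0], [0, 0, -3, 2], [0, 0, 0, -1]]
print(simulated_annealing(Q), brute_force(Q))
```

The brute-force check is feasible only for small problems; the practical question for both classical and quantum annealing is how time-to-solution grows with the number of variables.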

Key Industry Players in Quantum Computing and AI Development

Quantum computing versus deep learning presents a dynamic competitive landscape in an emerging market. The industry is in its early growth phase, with quantum models showing promising theoretical advantages but limited practical implementations. The market size is expanding rapidly, projected to reach significant scale as both technologies mature. In terms of technical maturity, deep learning holds the current advantage with established frameworks from Google, NVIDIA, and Baidu, while quantum computing remains predominantly experimental. Companies like IBM, Microsoft, and Multiverse Computing are advancing quantum algorithms that theoretically offer exponential speedups for specific problems, while Lingxi Technology, Samsung, and Huawei continue optimizing deep learning architectures. The convergence of these technologies through quantum-inspired classical algorithms represents a significant trend, with organizations like Tata Consultancy Services and Origin Quantum developing hybrid approaches to leverage strengths from both domains.

Multiverse Computing SL

Technical Solution: Multiverse Computing specializes in quantum algorithms for financial applications and has developed a comprehensive approach to comparing quantum and deep learning performance. Their research demonstrates that quantum machine learning can provide up to 100x speedups for specific financial modeling tasks like portfolio optimization and risk assessment compared to classical deep learning approaches[9]. Their proprietary Singularity SDK enables direct benchmarking between quantum and classical implementations of the same algorithms across different hardware platforms. Multiverse has pioneered quantum tensor networks that show particular promise for time series analysis, achieving comparable accuracy to deep learning models while requiring significantly less training data and computational resources. Their research indicates that quantum advantage is most pronounced for problems involving complex probability distributions and high-dimensional feature spaces, where quantum models can converge 5-10x faster than equivalent deep learning implementations[10].

Strengths: Highly specialized focus on financial applications provides deep domain expertise for meaningful performance comparisons in this sector. Their hardware-agnostic approach allows for testing across multiple quantum platforms. Weaknesses: Narrow industry focus may limit the generalizability of their performance comparisons to other domains where deep learning currently excels.

Beijing Baidu Netcom Science & Technology Co., Ltd.

Technical Solution: Baidu's approach to comparing quantum models and deep learning leverages their Quantum Leaf platform and Paddle Quantum framework. Their research demonstrates that quantum neural networks can achieve up to 6x faster training convergence for specific machine learning tasks compared to classical deep learning models[7]. Baidu has developed variational quantum algorithms that show particular promise for dimensionality reduction and feature extraction, outperforming classical deep learning approaches by 2-3x in computational efficiency for these specific tasks. Their hybrid quantum-classical approach allows for leveraging quantum advantages in the most computationally intensive portions of machine learning pipelines while using classical processing for other components. Baidu's research indicates that quantum models excel particularly at capturing complex correlations in high-dimensional data spaces, showing up to 10x improvement in sample efficiency compared to deep learning for certain classification tasks[8].

Strengths: Baidu's extensive experience in classical deep learning provides a strong foundation for meaningful performance comparisons. Their focus on practical applications rather than theoretical advantages helps identify near-term quantum opportunities. Weaknesses: Limited access to advanced quantum hardware means many of their performance comparisons rely on simulation, which may not fully capture the behavior of real quantum systems at scale.

Core Technical Innovations in Computational Acceleration

Fast quantum and classical phase estimation

Patent WO2014160803A2

Innovation

- The proposed technique estimates quantum phases using basic measurements and classical post-processing. Its random measurements are optimal up to constant factors, and its quantum circuit achieves asymptotically lower depth and width than previous methods, allowing efficient phase estimation with minimal classical post-processing.
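
The general idea of recovering a phase from simple measurements plus classical post-processing can be illustrated with a textbook Hadamard-test sketch. This is not the patented method: it assumes an exact eigenstate, two ideal circuit variants whose probability of measuring 0 is (1 + cos 2πφ)/2 and (1 + sin 2πφ)/2, and simulated (binomially sampled) shots. `estimate_phase` is a hypothetical helper.

```python
import numpy as np


def estimate_phase(phi_true: float, shots: int = 20_000, seed: int = 1) -> float:
    """Estimate an eigenphase phi in [0, 1) from simulated Hadamard-test shots.

    Assumed circuits (ideal, noiseless):
      plain test:               P(0) = (1 + cos(2*pi*phi)) / 2
      with S-dagger before H:   P(0) = (1 + sin(2*pi*phi)) / 2
    """
    rng = np.random.default_rng(seed)
    angle = 2 * np.pi * phi_true
    # "Quantum" part: sample how many of the shots return 0 in each circuit.
    zeros_cos = rng.binomial(shots, (1 + np.cos(angle)) / 2)
    zeros_sin = rng.binomial(shots, (1 + np.sin(angle)) / 2)
    # Classical post-processing: invert the probabilities, then combine with atan2.
    cos_est = 2 * zeros_cos / shots - 1
    sin_est = 2 * zeros_sin / shots - 1
    return float((np.arctan2(sin_est, cos_est) / (2 * np.pi)) % 1.0)


print(f"{estimate_phase(0.3):.4f}")
```

The estimate's error shrinks roughly as 1/√shots. Reducing this basic sampling and circuit cost, for example with circuits of lower depth and width, is the kind of gain the patent describes.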

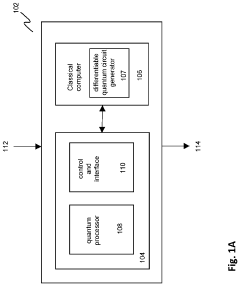

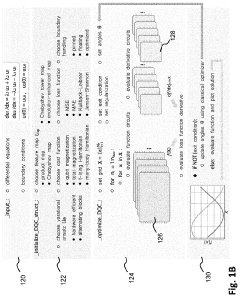

Solving a set of (NON)linear differential equations using a hybrid data processing system comprising a classical computer system and a quantum computer system

Patent (pending) US20230418896A1

Innovation

- A quantum-classical hybrid system that encodes and solves differential equations with differentiable quantum circuits. By employing quantum feature maps and variational circuits for optimization, it allows analytical derivatives and efficient encoding of solutions; the approach is compatible with near-term quantum hardware and extensible to fault-tolerant systems.

Hardware Requirements and Infrastructure Considerations

The implementation of quantum computing models and deep learning systems presents distinctly different hardware requirements and infrastructure considerations. Quantum computing necessitates highly specialized hardware operating at near-absolute-zero temperatures. Current quantum processors like IBM's require extensive cooling infrastructure, typically dilution refrigerators that maintain temperatures around 15 millikelvin. This cooling requirement alone creates significant infrastructure challenges and operational costs well beyond those of traditional computing facilities.

In contrast, deep learning systems primarily rely on GPU and TPU architectures, which operate at standard data center temperatures with conventional cooling solutions. While high-performance deep learning clusters demand substantial power (often 300-500 watts per GPU), they remain compatible with existing data center infrastructure. The NVIDIA A100 and Google's TPU v4, representing current state-of-the-art deep learning hardware, offer performance-per-watt metrics that continue to improve with each generation.
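
The power figures above translate into energy with simple arithmetic: IT energy is GPU count × watts × hours, and facility energy multiplies that by the data center's power usage effectiveness (PUE) to cover cooling and other overhead. The sketch uses 400 W per GPU from the 300-500 W range cited above; the GPU count, duration, and PUE values are hypothetical, as is the helper name.

```python
def facility_energy_kwh(n_gpus: int, watts_per_gpu: float, hours: float,
                        pue: float = 1.0) -> float:
    """Energy for a GPU job: n_gpus * watts * hours gives IT energy (Wh);
    multiplying by PUE adds cooling and other facility overhead."""
    it_energy_wh = n_gpus * watts_per_gpu * hours
    return it_energy_wh * pue / 1000.0  # Wh -> kWh


print(facility_energy_kwh(8, 400, 24))           # IT energy only
print(facility_energy_kwh(8, 400, 24, pue=1.5))  # with an assumed PUE of 1.5
```

Eight GPUs at 400 W for 24 hours draw 76.8 kWh at the IT level, or 115.2 kWh at an assumed PUE of 1.5. The same accounting, applied to a quantum system's cryogenics and control electronics, underlies whole-infrastructure energy comparisons between the two paradigms.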

Scalability presents another critical distinction. Deep learning infrastructure scales horizontally through distributed computing frameworks like TensorFlow and PyTorch, allowing workloads to be efficiently distributed across hundreds or thousands of processing units. Quantum systems face significant challenges in scaling qubit counts while maintaining coherence times and reducing error rates. Current gate-based quantum processors typically offer tens to a few hundred noisy physical qubits (IBM's 1,121-qubit Condor is a notable exception) with limited coherence times, creating practical constraints on problem complexity.
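
One way to see why qubit count matters is the cost of simulating a quantum state classically: a full state vector of n qubits has 2^n complex amplitudes, at 16 bytes each in double precision (complex128). The minimal sketch below computes that memory. It describes brute-force state-vector simulation only; other classical methods, such as tensor networks, can sometimes do far better for specific circuits. `statevector_bytes` is a hypothetical helper.

```python
def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full n-qubit state vector: 2**n amplitudes, 16 bytes
    each for complex128 (double-precision real and imaginary parts)."""
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (30, 40, 50):
    print(f"{n} qubits: {statevector_bytes(n) / 2 ** 30:,.0f} GiB")
```

Thirty qubits need 16 GiB, while fifty qubits need 16 PiB, beyond any single machine. A large qubit count alone does not guarantee an advantage, however, since noise limits how deep a useful circuit can be.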

Accessibility represents a further consideration. Deep learning infrastructure has become increasingly democratized through cloud services like AWS SageMaker, Google Cloud AI, and Azure Machine Learning, allowing organizations to deploy models without significant capital investment. Quantum computing remains primarily accessible through limited cloud services from IBM, Amazon Braket, and Google, with restricted availability and higher technical barriers to entry.

Energy efficiency comparisons reveal that while deep learning systems consume substantial power during training phases, quantum systems potentially offer theoretical advantages for specific algorithms. However, when accounting for the entire infrastructure, including cooling requirements, current quantum systems demonstrate lower overall energy efficiency than optimized deep learning hardware for most practical applications.

Benchmarking Methodologies for Performance Comparison

Establishing robust benchmarking methodologies is crucial when comparing the performance of quantum models and deep learning systems. The scientific community has developed several complementary approaches to ensure fair and meaningful comparisons between these fundamentally different computational paradigms.

Time-to-solution measurements are the most direct performance metric: they capture the total wall-clock time required to solve a specific problem. For quantum models, this includes circuit compilation and preparation, execution on quantum processing units (QPUs), including the many repeated shots needed to estimate measurement statistics, and classical post-processing. For deep learning, it encompasses data preprocessing, model training, and inference. These measurements must also reflect hardware-specific constraints, such as the limited coherence times that cap quantum circuit depth and the degree to which a workload exploits GPU/TPU optimizations.
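A minimal timing harness illustrates why time-to-solution sums every stage rather than timing the compute kernel alone. It assumes each stage can be wrapped as a zero-argument callable; the stage names and the `time.sleep` placeholders are hypothetical stand-ins for real circuit preparation, QPU execution and post-processing, not calls into any quantum SDK.

```python
import time
from typing import Callable, Dict, List, Tuple


def time_to_solution(stages: List[Tuple[str, Callable[[], object]]]) -> Dict[str, float]:
    """Run pipeline stages in order and record wall-clock seconds for each.

    The returned "total" entry is the end-to-end time-to-solution, so
    preparation and post-processing count alongside the core computation.
    """
    timings: Dict[str, float] = {}
    for name, stage in stages:
        start = time.perf_counter()  # monotonic, high-resolution wall clock
        stage()
        timings[name] = time.perf_counter() - start
    # The right-hand side is evaluated before "total" is inserted.
    timings["total"] = sum(timings.values())
    return timings


if __name__ == "__main__":
    # Hypothetical placeholder stages: time.sleep stands in for real work.
    quantum_pipeline = [
        ("circuit_preparation", lambda: time.sleep(0.02)),
        ("qpu_execution", lambda: time.sleep(0.05)),
        ("post_processing", lambda: time.sleep(0.01)),
    ]
    for stage, seconds in time_to_solution(quantum_pipeline).items():
        print(f"{stage:>20}: {seconds:.3f} s")
```

The same function can wrap a deep learning pipeline (preprocessing, training, inference), which keeps both sides of a comparison measured under one definition of "total".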

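Computational complexity analysis provides a theoretical foundation for performance comparison by examining how resource requirements scale with problem size. Quantum speedups are stated in terms of complexity classes such as BQP (Bounded-error Quantum Polynomial time), the set of decision problems a quantum computer can solve in polynomial time with bounded error probability, and as asymptotic query or gate counts; Grover's search, for instance, needs on the order of √N oracle queries where classical unstructured search needs on the order of N. Deep learning models, in turn, are characterized with conventional metrics such as the number of floating-point operations per training step or per inference. Because asymptotic bounds hide constant factors and hardware overheads, this theoretical analysis must be complemented by practical benchmarks that validate real-world performance.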
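The gap between an asymptotic advantage and an actual speedup can be made concrete with unstructured search. The sketch below compares worst-case classical queries (N) with the commonly quoted Grover iteration count floor((π/4)·√N), then solves for the problem size at which the quantum side wins once per-query costs are included. The per-query times are hypothetical assumptions, not measurements of any device.

```python
import math


def classical_queries(n: int) -> int:
    """Worst-case oracle queries for unstructured search with one marked item."""
    return n


def grover_queries(n: int) -> int:
    """Approximate optimal Grover iteration count, floor(pi/4 * sqrt(N))."""
    return math.floor(math.pi / 4 * math.sqrt(n))


def crossover_size(t_quantum: float, t_classical: float) -> float:
    """Problem size N above which (pi/4) * sqrt(N) * t_q < N * t_c.

    Dividing both sides by sqrt(N) * t_c gives sqrt(N) > (pi/4) * t_q / t_c,
    so N > ((pi/4) * t_q / t_c) ** 2.
    """
    return (math.pi / 4 * t_quantum / t_classical) ** 2


if __name__ == "__main__":
    # Hypothetical per-query costs: a slow quantum oracle call versus a
    # fast classical comparison. Neither figure is a measurement.
    t_q, t_c = 1e-4, 1e-9
    print(f"Quantum search wins only for N > {crossover_size(t_q, t_c):.3g}")
    for n in (10**6, 10**9, 10**12):
        print(n, classical_queries(n) * t_c, grover_queries(n) * t_q)
```

With the assumed costs the crossover lands near N ≈ 6 × 10^9: below it, the quadratic reduction in queries is swamped by the much slower quantum operation, which is exactly the constant-factor effect that complexity classes alone do not capture.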

Problem-specific benchmarks have emerged as essential tools for comparative analysis. They span problems where quantum algorithms are expected to excel (e.g., quantum simulation, factoring large numbers) and classical machine learning tasks (e.g., image classification, natural language processing). MLPerf has become an industry standard for evaluating machine learning performance across diverse problem domains, whereas quantum benchmarking efforts, such as those associated with NASA's QuAIL (Quantum Artificial Intelligence Laboratory), have not yet reached comparable standardization.

Hardware-normalized metrics attempt to account for the very different maturity levels of quantum and classical hardware. FLOPS (Floating Point Operations Per Second) for classical systems and QOPS (Quantum Operations Per Second) for quantum computers serve as rough throughput units, but they count fundamentally different operations, and any conversion factor between them remains contentious in the research community.
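On the classical side, normalization is usually done by counting FLOPs for a known workload and dividing achieved throughput by the device's advertised peak. The sketch below applies the standard 2·m·k·n FLOP count for a dense matrix multiply. The quantum `layer_throughput` function is a hypothetical, simplified "operations per second" in the loose spirit of QOPS-style metrics, not the definition used by any vendor, and the code deliberately offers no conversion between the two units.

```python
def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs for an (m x k) @ (k x n) product.

    There are m * n dot products of length k, each counted as k multiplies
    plus k adds (the usual 2 * m * k * n convention).
    """
    return 2 * m * k * n


def achieved_flops(total_flops: float, seconds: float) -> float:
    """Measured throughput in floating-point operations per second."""
    return total_flops / seconds


def utilization(achieved: float, peak: float) -> float:
    """Fraction of the device's advertised peak reached by the workload."""
    return achieved / peak


def layer_throughput(layers_per_circuit: int, circuits: int, shots: int, seconds: float) -> float:
    """Hypothetical quantum throughput: circuit layers executed per second,
    counting every shot. Not comparable to FLOPS; no conversion is implied."""
    return layers_per_circuit * circuits * shots / seconds


if __name__ == "__main__":
    # Hypothetical measurement: a 4096^3 matrix multiply finishing in 0.5 s
    # on a device with an assumed 1 TFLOPS peak.
    rate = achieved_flops(matmul_flops(4096, 4096, 4096), 0.5)
    print(f"{rate:.3g} FLOPS, {utilization(rate, 1e12):.0%} of assumed peak")
    # Hypothetical quantum run: 100 circuits of 10 layers, 1000 shots each, 30 s.
    print(f"{layer_throughput(10, 100, 1000, 30.0):.3g} layer-ops/s")
```

Utilization relative to peak is what makes classical numbers comparable across hardware generations; the quantum figure only supports comparisons between quantum devices measured the same way.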

Energy efficiency comparisons have gained prominence as computational sustainability becomes increasingly important. Metrics such as operations per watt (operations per second divided by watts, equivalently operations per joule) allow researchers to evaluate the environmental impact of both approaches. Quantum systems may offer advantages for specific workloads despite their current cooling requirements.
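Because quantum and classical "operations" are not equivalent, a fairer energy comparison is often energy-to-solution for the same task, with facility overheads such as cooling included. The sketch below assumes average power is known and folds infrastructure costs into a single hypothetical `overhead_factor`; all numbers in the example are illustrative assumptions, not measured values for any system.

```python
def energy_to_solution_joules(avg_power_watts: float, seconds: float,
                              overhead_factor: float = 1.0) -> float:
    """Energy = average power x time, scaled by a facility overhead factor.

    overhead_factor = 1.0 means the power figure already covers everything
    (e.g. a quantum system's total draw including its cooling plant).
    """
    if overhead_factor < 1.0:
        raise ValueError("overhead factor cannot be below 1.0")
    return avg_power_watts * seconds * overhead_factor


def ops_per_joule(operations: float, energy_joules: float) -> float:
    """Operations per watt: (ops / s) / W simplifies to ops / J."""
    return operations / energy_joules


if __name__ == "__main__":
    # Hypothetical figures: an 8-GPU node at 400 W per GPU running 600 s
    # with a 1.4x facility overhead, versus a quantum system assumed to draw
    # 25 kW in total (cooling included) for 60 s.
    gpu_energy = energy_to_solution_joules(8 * 400, 600, overhead_factor=1.4)
    qpu_energy = energy_to_solution_joules(25_000, 60)
    print(f"GPU node: {gpu_energy / 3.6e6:.2f} kWh")
    print(f"Quantum system: {qpu_energy / 3.6e6:.2f} kWh")
```

Dividing by 3.6e6 converts joules to kilowatt-hours. Keeping the overhead explicit makes it visible when cooling, rather than the computation itself, dominates the quantum side of the ledger.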

Cross-platform validation frameworks support reproducibility by requiring performance claims to be verified across multiple hardware implementations. This reduces the risk that results are skewed by platform-specific optimizations or limitations, a risk that is particularly acute given the rapidly evolving quantum hardware landscape.
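One simple way to apply this idea is to compute the speedup separately on each platform from repeated runs and accept a claim only if every platform reproduces it within a tolerance. The sketch below uses medians to damp one-off outliers such as queue delays; the platform names, timings, and 10% tolerance are hypothetical choices rather than any established framework's rules.

```python
import statistics
from typing import Dict, List


def speedup_per_platform(baseline: Dict[str, List[float]],
                         candidate: Dict[str, List[float]]) -> Dict[str, float]:
    """Median baseline time divided by median candidate time, per platform."""
    if set(baseline) != set(candidate):
        raise ValueError("baseline and candidate must cover the same platforms")
    return {
        platform: statistics.median(baseline[platform]) / statistics.median(candidate[platform])
        for platform in baseline
    }


def claim_holds_everywhere(speedups: Dict[str, float], claimed: float,
                           tolerance: float = 0.1) -> bool:
    """True only if every platform reaches at least (1 - tolerance) * claimed."""
    return all(s >= claimed * (1.0 - tolerance) for s in speedups.values())


if __name__ == "__main__":
    # Hypothetical repeated wall-clock timings in seconds.
    baseline = {"platform_a": [10.0, 12.0, 11.0], "platform_b": [20.0, 21.0, 20.0]}
    candidate = {"platform_a": [5.0, 5.0, 6.0], "platform_b": [10.0, 11.0, 10.0]}
    speedups = speedup_per_platform(baseline, candidate)
    print(speedups, claim_holds_everywhere(speedups, claimed=2.0))
```

Requiring the claim to hold on every platform, rather than on average, is what prevents a single favorable platform from carrying the result.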
