How Time-of-Flight Ensures Low-Latency Depth for Robotics and AR

SEP 22, 2025 · 10 MIN READ

ToF Technology Background and Objectives

Time-of-Flight (ToF) technology has evolved significantly since its inception in the early 1990s, initially developed for scientific applications in particle physics and astronomy. The fundamental principle behind ToF involves measuring the time taken for light to travel from a source to an object and back to a sensor, enabling precise distance calculations. This technology has undergone remarkable transformation over the past three decades, transitioning from bulky, expensive laboratory equipment to compact, cost-effective components suitable for consumer electronics and industrial applications.
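The round-trip principle described above reduces to a single relation, d = c·t/2. The following is a minimal sketch of that arithmetic; the function names are illustrative and do not come from any particular sensor SDK.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_seconds: float) -> float:
    """Return the one-way distance in metres for a measured round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time must be non-negative")
    # The light travels to the object and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2.0


def round_trip_from_distance(distance_m: float) -> float:
    """Return the round-trip time in seconds for an object at distance_m."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    for d in (0.5, 1.0, 5.0):
        t = round_trip_from_distance(d)
        print(f"{d:4.1f} m -> {t * 1e9:6.2f} ns round trip")
```

An object 1 m away returns light after roughly 6.7 ns, which illustrates why ToF sensing depends on very fast timing or on phase-based measurement rather than on ordinary frame-rate electronics.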

The evolution of ToF technology has been accelerated by advancements in semiconductor manufacturing, photonics, and signal processing algorithms. Early ToF systems relied on discrete components with limited resolution and significant power requirements. Modern implementations leverage integrated circuits, specialized sensors, and sophisticated algorithms to achieve millimeter-level accuracy with minimal latency, making them ideal for real-time applications in robotics and augmented reality (AR).

Current technological trends in ToF are focused on miniaturization, power efficiency, and enhanced performance in challenging environmental conditions. The integration of ToF sensors with machine learning algorithms has further improved depth perception capabilities, enabling more accurate object recognition and spatial mapping. Additionally, the development of multi-frequency and multi-phase ToF systems has addressed traditional limitations related to ambient light interference and multi-path reflections.

The primary technical objective for ToF in robotics and AR applications is to achieve sub-10ms latency in depth sensing while maintaining high spatial resolution and accuracy across varying distances. This low-latency performance is critical for enabling natural interaction in AR environments and ensuring safe, responsive operation of robotic systems. Secondary objectives include reducing power consumption to extend battery life in mobile applications and improving performance under diverse lighting conditions.
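One way to reason about a sub-10 ms target is to add up the delay of each stage between light emission and the moment the application receives a depth frame. The sketch below does only that bookkeeping. The stage names and millisecond values are hypothetical placeholders chosen for illustration, not measurements of any real sensor.

```python
# Hypothetical per-stage latencies in milliseconds (illustrative values only).
PIPELINE = [
    ("exposure (all phase captures)", 2.0),
    ("sensor readout", 1.5),
    ("depth computation", 2.5),
    ("filtering", 1.5),
    ("transfer to application", 1.0),
]


def total_latency_ms(stages):
    """Sum the latency of every stage in the pipeline."""
    return sum(latency for _name, latency in stages)


def within_budget(stages, budget_ms=10.0):
    """Return True when the summed latency meets the given budget."""
    return total_latency_ms(stages) <= budget_ms


if __name__ == "__main__":
    for name, latency in PIPELINE:
        print(f"{name:32s} {latency:4.1f} ms")
    print(f"{'total':32s} {total_latency_ms(PIPELINE):4.1f} ms")
    print("meets 10 ms budget:", within_budget(PIPELINE))
```

With these placeholder values the pipeline totals 8.5 ms, so it fits a 10 ms budget but would miss a 5 ms one. The point of the exercise is that every stage, not only the sensor exposure, counts against the target.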

For robotics specifically, ToF technology aims to provide reliable obstacle detection, precise object manipulation capabilities, and efficient simultaneous localization and mapping (SLAM). In AR applications, the objectives extend to accurate real-time environment reconstruction, realistic occlusion handling, and seamless integration of virtual objects with the physical world.

The convergence of ToF technology with complementary sensing modalities, such as structured light and stereo vision, represents another important trend, as multi-modal approaches can overcome the limitations of individual technologies. This fusion approach is particularly promising for addressing challenging scenarios involving transparent objects, highly reflective surfaces, or outdoor environments with strong ambient light.

Market Demand Analysis for Low-Latency Depth Sensing

The demand for low-latency depth sensing technologies has experienced significant growth across multiple sectors, with robotics and augmented reality (AR) applications leading this expansion. Market research indicates that the global depth sensing market is projected to grow at a compound annual growth rate of 12.5% between 2021 and 2026, reaching a market value exceeding $7.2 billion by the end of this period.

In the robotics sector, the need for real-time environmental perception has become critical as autonomous robots transition from controlled industrial settings to dynamic human environments. Manufacturing industries require robots with precise depth perception capabilities to perform complex assembly tasks with sub-millimeter accuracy. Meanwhile, service robots operating in healthcare, retail, and domestic environments demand depth sensing systems that can process spatial information with latencies under 20 milliseconds to ensure safe human-robot interaction.

The AR market presents equally compelling demand drivers, with major technology companies investing heavily in spatial computing platforms that rely on accurate, low-latency depth mapping. Consumer AR applications require depth sensing solutions that can operate efficiently on mobile devices with limited power resources while maintaining frame rates above 60 Hz to prevent motion sickness and ensure immersive experiences.

Enterprise AR applications in fields such as architecture, manufacturing, and healthcare demand even more precise depth mapping capabilities. For instance, medical AR applications require depth sensing with accuracy within 1-2 millimeters and latency below 10 milliseconds to support surgical guidance systems.

Market segmentation analysis reveals that Time-of-Flight (ToF) technology has emerged as a preferred solution due to its ability to deliver the required combination of speed, accuracy, and power efficiency. The automotive sector represents another significant growth area, with advanced driver assistance systems (ADAS) and autonomous vehicles requiring depth sensing solutions that can operate reliably in diverse environmental conditions.

Regional analysis shows North America leading the market with approximately 35% share, followed by Europe and Asia-Pacific. However, the Asia-Pacific region is experiencing the fastest growth rate due to rapid industrialization and increasing adoption of automation technologies in manufacturing hubs like China, Japan, and South Korea.

Customer surveys indicate that key purchasing factors for depth sensing technologies include latency performance, power consumption, size constraints, and integration capabilities with existing systems. As applications become more sophisticated, the market increasingly favors solutions that can deliver depth data with latencies below 5 milliseconds while maintaining high resolution and accuracy across varying distances and lighting conditions.

Current ToF Technology Challenges in Robotics and AR

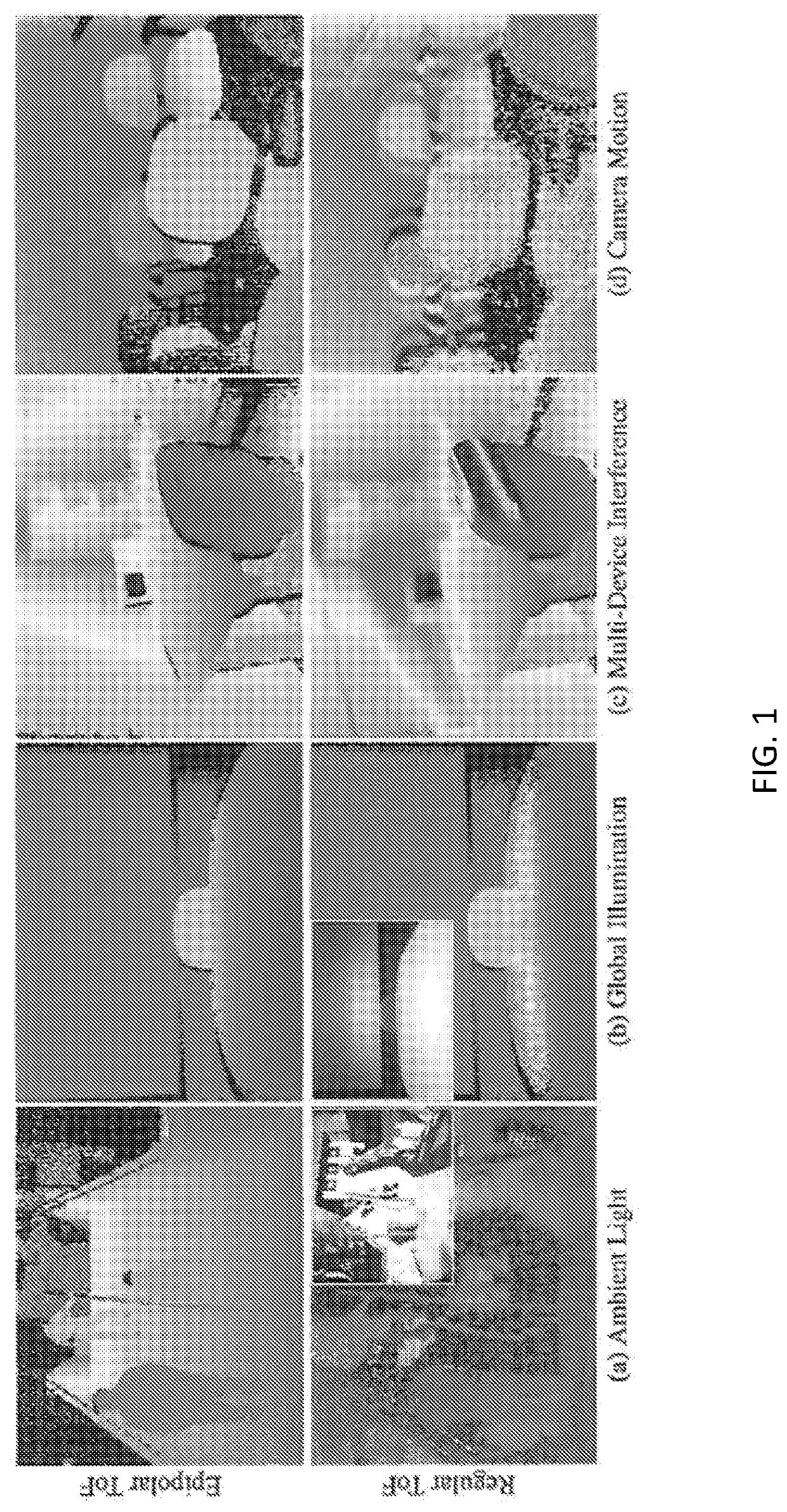

Despite significant advancements in Time-of-Flight (ToF) technology, several critical challenges persist that limit its optimal performance in robotics and augmented reality applications. The primary challenge remains achieving consistently low latency while maintaining high accuracy in depth sensing across diverse environmental conditions. Current ToF systems struggle with motion artifacts when either the sensor or the subject is moving rapidly, creating temporal inconsistencies in depth maps that can severely impact real-time applications.

Signal-to-noise ratio (SNR) presents another significant hurdle, particularly in outdoor environments where ambient light interference can overwhelm the ToF sensor's light signals. This interference substantially reduces the effective range and accuracy of ToF systems, limiting their utility in uncontrolled lighting conditions that are common in real-world robotic deployments and outdoor AR experiences.

Power consumption continues to be a major constraint, especially for mobile AR devices and autonomous robots with limited battery capacity. High-performance ToF systems require substantial power for their light emitters and processing units, creating a difficult trade-off between depth sensing performance and operational runtime. This challenge becomes particularly acute in wearable AR devices where form factor and heat dissipation are additional concerns.

Resolution limitations also persist in current ToF implementations. While RGB cameras have reached multi-megapixel resolutions, many commercial ToF sensors still operate at significantly lower resolutions (typically 240×180 to 640×480 pixels). This resolution gap creates alignment challenges when overlaying depth information with high-resolution RGB imagery in AR applications or for precise robotic manipulation tasks.
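The alignment problem can be made concrete with a pinhole-camera sketch that maps one depth pixel into the image of a higher-resolution RGB camera. This is a simplified illustration under stated assumptions: both cameras are ideal pinholes with no lens distortion, their axes are parallel (identity rotation), and the intrinsics and baseline used in the example are invented values rather than those of a real device.

```python
from typing import NamedTuple, Tuple


class Intrinsics(NamedTuple):
    """Pinhole intrinsics in pixels: focal lengths and principal point."""
    fx: float
    fy: float
    cx: float
    cy: float


def depth_pixel_to_rgb_pixel(
    u: float,
    v: float,
    depth_m: float,
    depth_cam: Intrinsics,
    rgb_cam: Intrinsics,
    translation_m: Tuple[float, float, float] = (0.0, 0.0, 0.0),
) -> Tuple[float, float]:
    """Map a depth pixel (u, v) with depth_m metres into RGB pixel coordinates."""
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    # Back-project the depth pixel to a 3D point in the depth camera frame.
    x = (u - depth_cam.cx) * depth_m / depth_cam.fx
    y = (v - depth_cam.cy) * depth_m / depth_cam.fy
    z = depth_m
    # Move the point into the RGB camera frame (rotation assumed identity).
    tx, ty, tz = translation_m
    x, y, z = x + tx, y + ty, z + tz
    if z <= 0:
        raise ValueError("point lies behind the RGB camera")
    # Project the 3D point into the RGB image.
    return (rgb_cam.fx * x / z + rgb_cam.cx, rgb_cam.fy * y / z + rgb_cam.cy)


if __name__ == "__main__":
    depth_cam = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=240.0)    # 640x480
    rgb_cam = Intrinsics(fx=1500.0, fy=1500.0, cx=960.0, cy=720.0)    # 1920x1440
    print(depth_pixel_to_rgb_pixel(420, 240, 2.0, depth_cam, rgb_cam, (0.05, 0.0, 0.0)))
```

Because each depth pixel here covers roughly a 3×3 block of RGB pixels, a real pipeline would also need interpolation or hole filling after the projection, which is where the resolution gap becomes visible.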

Multi-camera calibration and synchronization present complex technical hurdles in systems requiring multiple ToF sensors. Achieving precise temporal and spatial alignment between multiple depth sensors remains difficult, particularly when attempting to create comprehensive environmental models for robot navigation or immersive AR experiences.

Material properties of observed surfaces significantly impact ToF performance, with highly reflective, transparent, or absorptive materials causing measurement errors. Current algorithms struggle to compensate for these material-dependent variations, resulting in inconsistent depth measurements across different surface types commonly encountered in both robotics and AR applications.

Processing requirements for real-time depth map filtering, enhancement, and integration with other sensor data remain computationally intensive. Edge computing devices in robots and AR headsets often lack sufficient processing power to implement the most advanced ToF data enhancement algorithms while maintaining the low latency required for responsive operation.

Current ToF Implementation Solutions

01 Time-of-Flight sensor optimization for low latency depth sensing

Advanced Time-of-Flight (ToF) sensors are designed with optimized hardware configurations to reduce latency in depth data acquisition. These optimizations include specialized pixel architectures, improved photon detection circuits, and integrated signal processing units that enable faster depth measurements. The sensors can capture depth information with minimal delay, making them suitable for real-time applications requiring immediate spatial awareness.

- Time-of-Flight sensor optimization for low-latency depth sensing: Advanced Time-of-Flight (ToF) sensors are designed with optimized hardware configurations to reduce latency in depth data acquisition. These systems employ specialized pixel architectures, high-speed photon detectors, and integrated processing units that enable rapid capture and processing of depth information. The optimization includes improved signal processing algorithms and sensor readout mechanisms that minimize the time between light emission and depth data generation, resulting in significantly reduced latency for real-time applications.

- Processing techniques for accelerating ToF depth computation: Specialized processing techniques are implemented to accelerate the computation of depth data from Time-of-Flight measurements. These include parallel processing architectures, hardware acceleration units, and optimized algorithms that reduce computational overhead. Advanced signal processing methods filter noise while maintaining low latency, and techniques such as phase unwrapping and multi-frequency approaches improve depth accuracy without sacrificing speed. Some implementations use dedicated processors or FPGAs to perform calculations in real-time with minimal delay.

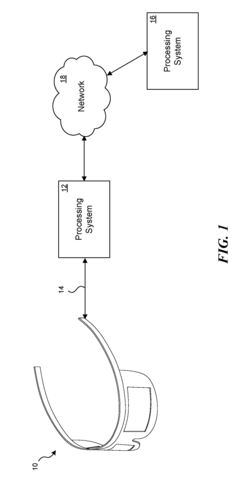

- System architecture for low-latency ToF applications: Novel system architectures are designed specifically for low-latency Time-of-Flight applications. These architectures integrate sensors, processing units, and memory in optimized configurations that minimize data transfer bottlenecks. Direct memory access techniques and high-speed interfaces reduce delays in data movement, while specialized hardware pipelines enable efficient processing of ToF data. Some systems employ edge computing approaches to process depth information closer to the sensor, further reducing latency for time-critical applications.

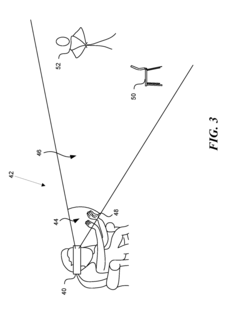

- Multi-camera ToF systems for comprehensive low-latency depth mapping: Multi-camera Time-of-Flight systems combine data from multiple sensors to create comprehensive depth maps with reduced latency. These systems synchronize multiple ToF cameras to capture different perspectives simultaneously, enabling more complete scene coverage and reducing occlusion issues. Advanced fusion algorithms combine the data from multiple sensors in real-time, while maintaining low latency. The distributed sensing approach also allows for load balancing of processing requirements across multiple units, further reducing overall system latency.

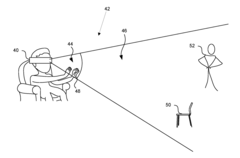

- Application-specific ToF implementations for real-time depth sensing: Time-of-Flight technology is implemented in application-specific ways to meet the low-latency requirements of different use cases. For augmented reality and virtual reality applications, ToF systems are optimized to provide depth information with minimal delay for realistic user interactions. In autonomous vehicles and robotics, specialized ToF implementations focus on rapid obstacle detection and distance measurement. Industrial automation applications employ ToF systems designed for high-speed object tracking and position verification with extremely low latency requirements.

02 Processing algorithms for accelerated depth data computation

Specialized algorithms are implemented to accelerate the processing of ToF depth data, significantly reducing computational latency. These include parallel processing techniques, optimized signal filtering methods, and efficient depth calculation algorithms that minimize processing time. Advanced computational approaches enable real-time depth map generation with reduced latency, allowing for immediate response in interactive applications.

03 System architecture for low-latency ToF implementation

Integrated system architectures are designed specifically for low-latency ToF applications, featuring optimized data pathways between sensors, processors, and output devices. These architectures incorporate dedicated hardware accelerators, high-speed memory buffers, and streamlined communication protocols to minimize delays in the depth sensing pipeline. The holistic system design ensures that depth information flows efficiently from capture to application with minimal latency.

04 Multi-sensor fusion for enhanced low-latency depth perception

Multiple sensor technologies are combined to improve the accuracy and reduce the latency of depth perception systems. By fusing ToF data with information from complementary sensors such as stereo cameras or structured light systems, these approaches can overcome individual sensor limitations while maintaining low latency. The fusion techniques employ predictive algorithms and parallel processing to integrate multiple data streams efficiently, resulting in more robust and responsive depth sensing.

05 Application-specific ToF implementations for latency-critical scenarios

Specialized ToF implementations are developed for applications where depth sensing latency is critical, such as autonomous navigation, augmented reality, and human-computer interaction. These implementations feature customized illumination patterns, sensor configurations, and processing pipelines optimized for specific use cases. By focusing on the particular requirements of each application, these systems achieve the lowest possible latency while maintaining sufficient accuracy for their intended purpose.

Key Players in ToF Sensor Industry

Time-of-Flight (ToF) technology for depth sensing is currently in a growth phase, with the market expanding rapidly due to increasing applications in robotics, AR, and autonomous systems. The global ToF sensor market is projected to reach significant scale as demand for real-time 3D imaging solutions grows. Technologically, major players have achieved varying levels of maturity: Sony Semiconductor Solutions and Sony Depthsensing Solutions lead with advanced sensor technologies, while Microsoft has integrated ToF into their AR products. Samsung, OPPO, and Magic Leap are actively developing consumer applications. Companies like Orbbec and Shenzhen Aoruida are focusing on specialized industrial and automotive implementations. DJI and LG Innotek are leveraging ToF for drone navigation and mobile applications respectively. The competitive landscape shows established electronics giants competing with specialized sensor manufacturers, with innovation focused on reducing latency and improving accuracy for real-time applications.

Microsoft Technology Licensing LLC

Technical Solution: Microsoft has developed advanced Time-of-Flight (ToF) technology primarily for their Azure Kinect DK and HoloLens platforms. Their approach utilizes a high-frequency modulated infrared light source combined with specialized image sensors to measure the phase shift between emitted and reflected light signals. This allows for precise depth mapping with millimeter-level accuracy at ranges up to 5 meters. Microsoft's implementation incorporates multi-path interference (MPI) correction algorithms that address common ToF challenges in complex environments with reflective surfaces. Their system achieves depth frame rates of up to 30 fps with latency under 20ms, making it suitable for real-time robotics applications and AR experiences[1]. Microsoft has also developed custom silicon for ToF processing that performs depth calculations directly on-chip, reducing the computational load on the main processor and further decreasing latency. Their latest ToF sensors feature a 1MP resolution with a wide field of view (120° diagonal), enabling detailed environmental mapping for both indoor robotics navigation and immersive AR applications[3].

Strengths: Microsoft's ToF technology excels in low-latency performance (sub-20ms) and high accuracy (±1mm at 1m range), with robust operation under varying lighting conditions. Their custom silicon approach reduces power consumption while maintaining high performance. Weaknesses: The system has higher cost compared to simpler depth sensing solutions and shows reduced accuracy in outdoor environments with strong ambient infrared light.

Sony Semiconductor Solutions Corp.

Technical Solution: Sony Semiconductor Solutions has pioneered back-illuminated Time-of-Flight (ToF) image sensors specifically designed for low-latency depth sensing in robotics and AR applications. Their DepthSense™ ToF technology utilizes a unique pixel architecture that incorporates high-speed charge transfer and accumulation structures, enabling depth measurements with latencies as low as 10ms. Sony's approach employs indirect ToF (iToF) methodology with modulated infrared light at frequencies up to 100MHz, measuring phase differences between emitted and received light signals to calculate precise distance information. Their latest sensors feature a 10μm pixel size with over 90% quantum efficiency in the near-infrared spectrum, allowing for accurate depth sensing even in challenging low-light environments[2]. Sony has also developed specialized signal processing algorithms that mitigate multi-path interference and ambient light noise, critical for maintaining accuracy in complex real-world environments. A sensor in this class is reported to achieve a depth accuracy of ±2cm at ranges up to 4 meters while consuming only 940mW during active sensing, making it particularly suitable for power-constrained mobile AR devices and autonomous robots requiring reliable obstacle detection[4].

Strengths: Sony's ToF sensors deliver exceptional low-light performance with industry-leading quantum efficiency and signal-to-noise ratios. Their compact form factor and low power consumption make them ideal for integration into mobile devices and small robots. Weaknesses: The current generation has somewhat limited maximum range (4-5m) compared to some competing technologies, and performance can degrade in environments with strong infrared interference.
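Both vendor descriptions above rely on measuring the phase shift between emitted and received modulated light. A common textbook formulation of indirect ToF samples the correlation signal at four phase offsets and recovers depth as d = c·φ/(4π·f). The sketch below simulates those four samples and inverts them. It is a generic illustration, not either vendor's implementation; the cosine signal model, the sample ordering, and the amplitude and offset values are assumptions.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
PHASE_OFFSETS_DEG = (0.0, 90.0, 180.0, 270.0)


def unambiguous_range_m(mod_freq_hz: float) -> float:
    """Distance at which the measured phase wraps around: c / (2 f)."""
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * mod_freq_hz)


def simulate_samples(distance_m, mod_freq_hz, amplitude=1.0, offset=2.0):
    """Simulate ideal, noise-free correlation samples at 0, 90, 180, 270 degrees."""
    phase = (4.0 * math.pi * mod_freq_hz * distance_m / SPEED_OF_LIGHT_M_PER_S) % (2.0 * math.pi)
    return tuple(
        offset + amplitude * math.cos(phase - math.radians(theta))
        for theta in PHASE_OFFSETS_DEG
    )


def depth_from_samples(c0, c90, c180, c270, mod_freq_hz):
    """Recover the (wrapped) depth in metres from four correlation samples."""
    # Differences cancel the constant offset (ambient light in this model).
    phase = math.atan2(c90 - c270, c0 - c180) % (2.0 * math.pi)
    return SPEED_OF_LIGHT_M_PER_S * phase / (4.0 * math.pi * mod_freq_hz)


if __name__ == "__main__":
    f = 100e6  # 100 MHz, the upper modulation frequency mentioned above
    print(f"unambiguous range: {unambiguous_range_m(f):.3f} m")
    for true_d in (0.5, 1.0, 2.0):
        est = depth_from_samples(*simulate_samples(true_d, f), f)
        print(f"true {true_d:.2f} m -> measured {est:.3f} m")
```

At 100 MHz the phase wraps at roughly 1.5 m, so a target at 2 m is reported at about 0.5 m. A single high modulation frequency therefore cannot cover a multi-metre range by itself, which is one motivation for the multi-frequency techniques mentioned elsewhere in this article.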

Core ToF Patents and Technical Innovations

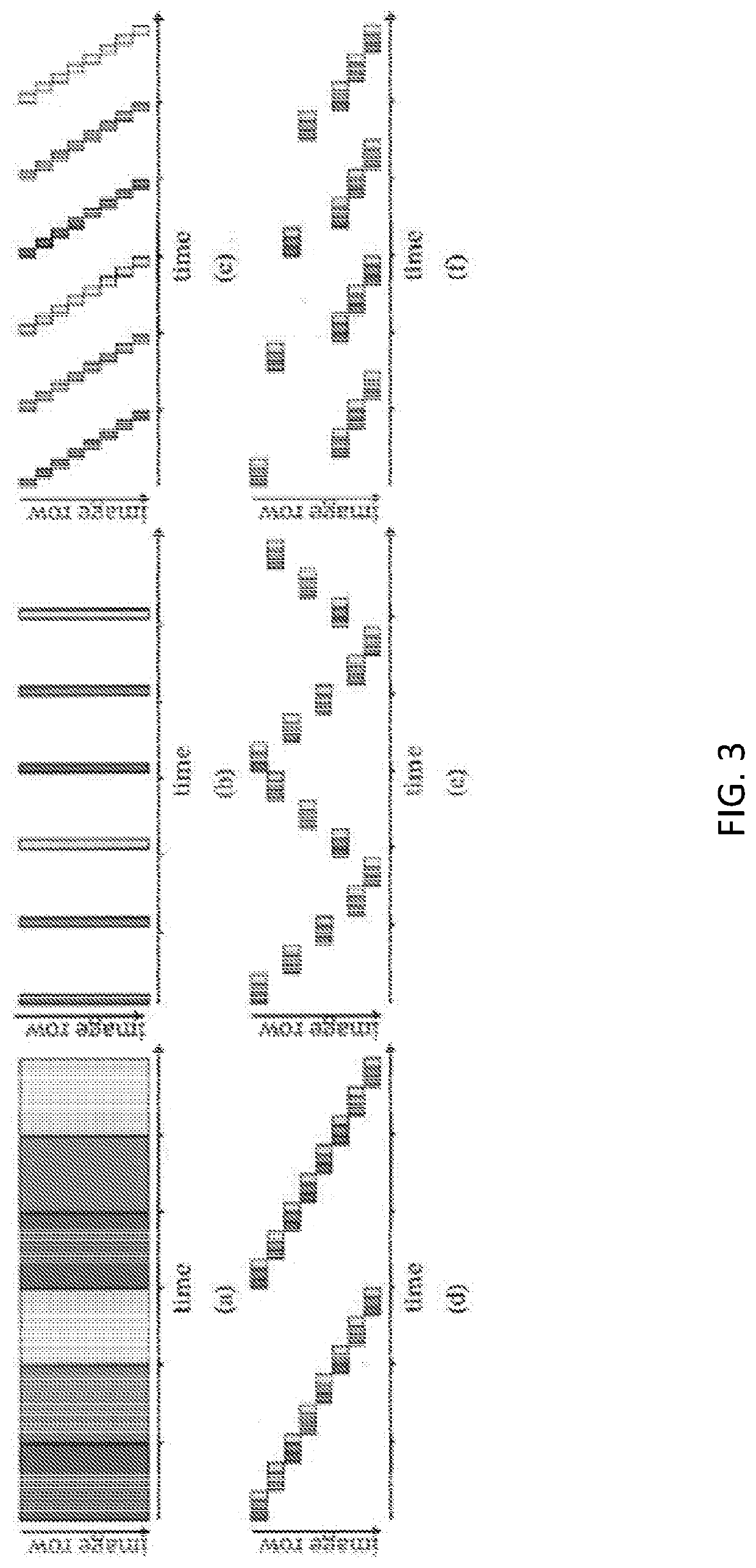

Alternating Frequency Captures for Time of Flight Depth Sensing

Patent (Active): US20180218509A1

Innovation

- An electromagnetic radiation emitter that alternates between two frequencies on a frame-by-frame basis to de-alias depth computations, using phase calculations from reflected radiation to determine distances, thereby reducing power consumption while maintaining high frame rates for depth detection.
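The general idea of de-aliasing with two modulation frequencies can be sketched as follows. Each frequency yields a distance that is only known modulo its own unambiguous range, and the true distance is the candidate on which both measurements agree. This is a generic brute-force illustration, not the method claimed in the patent; the frequencies, the search strategy, and the function names are assumptions.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def unambiguous_range_m(mod_freq_hz: float) -> float:
    """Distance at which the phase of a given modulation frequency wraps."""
    return SPEED_OF_LIGHT_M_PER_S / (2.0 * mod_freq_hz)


def wrapped_distance_m(true_distance_m: float, mod_freq_hz: float) -> float:
    """Simulate what a single-frequency measurement would report."""
    return true_distance_m % unambiguous_range_m(mod_freq_hz)


def dealias(d1_m, f1_hz, d2_m, f2_hz, max_range_m):
    """Return the distance up to max_range_m that best explains both wrapped readings."""
    r1 = unambiguous_range_m(f1_hz)
    r2 = unambiguous_range_m(f2_hz)
    best_distance = None
    best_error = float("inf")
    n1 = 0
    while d1_m + n1 * r1 <= max_range_m:
        candidate1 = d1_m + n1 * r1
        # Closest non-negative wrap count for the second frequency.
        n2 = max(0, round((candidate1 - d2_m) / r2))
        candidate2 = d2_m + n2 * r2
        error = abs(candidate1 - candidate2)
        if error < best_error:
            best_error = error
            best_distance = (candidate1 + candidate2) / 2.0
        n1 += 1
    if best_distance is None:
        raise ValueError("no candidate within max_range_m")
    return best_distance


if __name__ == "__main__":
    f1, f2 = 100e6, 80e6  # illustrative frequency pair
    true_d = 4.0
    d1 = wrapped_distance_m(true_d, f1)  # e.g. captured on one frame
    d2 = wrapped_distance_m(true_d, f2)  # e.g. captured on the next frame
    print(f"wrapped readings: {d1:.3f} m, {d2:.3f} m")
    print(f"de-aliased: {dealias(d1, f1, d2, f2, max_range_m=7.0):.3f} m")
```

With 100 MHz and 80 MHz, the pair only becomes ambiguous again at about 7.5 m, compared with about 1.5 m and 1.9 m for each frequency alone. In a frame-alternating scheme the two readings come from consecutive frames, so scene motion between frames is an additional error source that this noise-free sketch ignores.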

Method for epipolar time of flight imaging

Patent (Active): US20200092533A1

Innovation

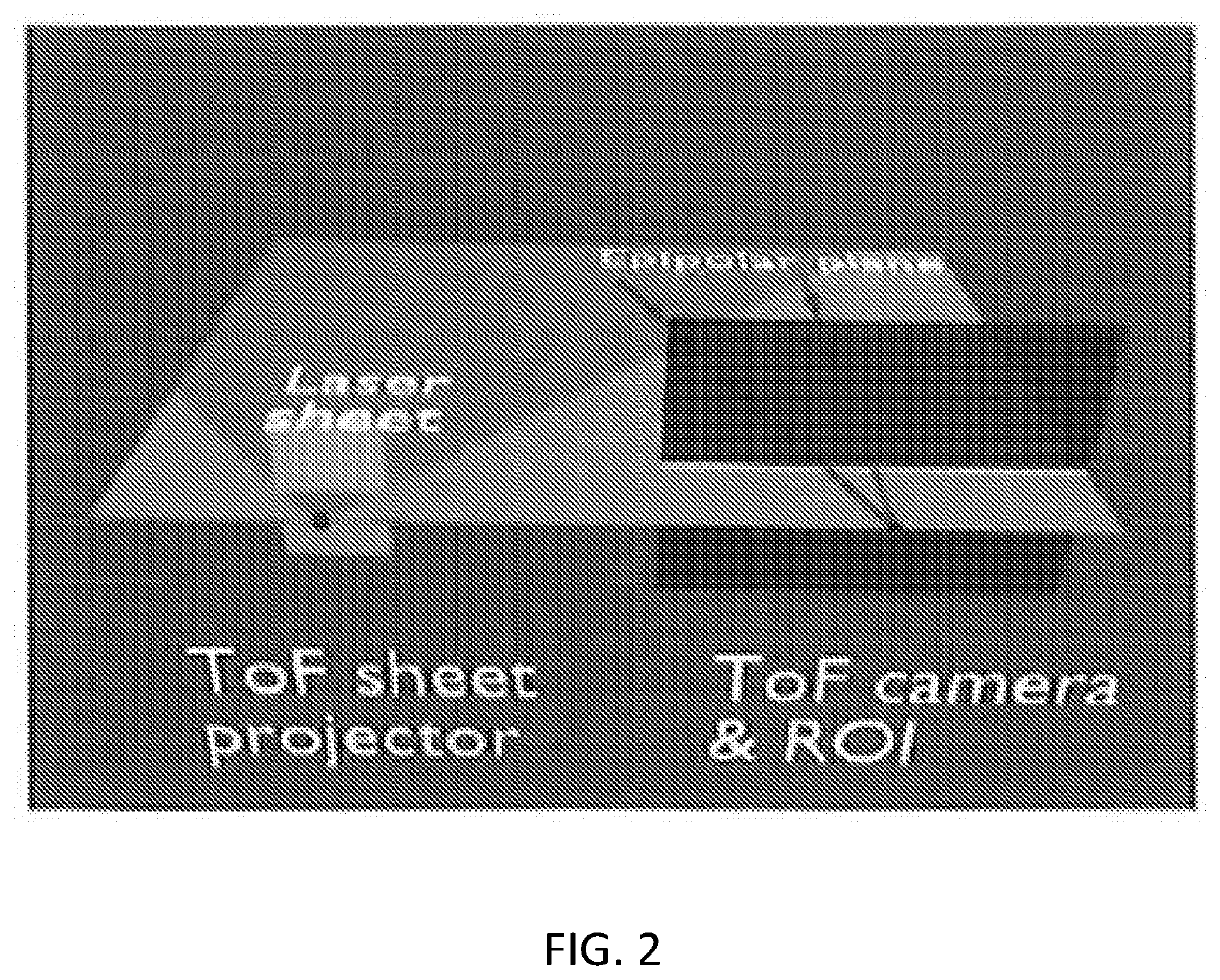

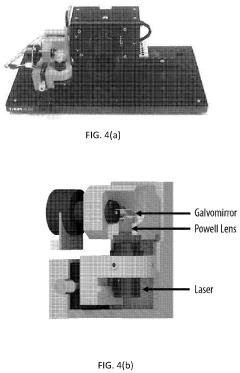

- The use of energy-efficient epipolar imaging, where a continuously-modulated sheet of laser light is projected along carefully chosen epipolar planes, allowing only a strip of CW-ToF pixels to be exposed, enhancing range, robustness, and minimizing interference and motion artifacts.

Integration Challenges with Existing Systems

Integrating Time-of-Flight (ToF) technology into existing robotic and AR systems presents significant challenges that require careful consideration. Current robotic platforms and AR devices often utilize established sensing technologies such as structured light, stereo vision, or LiDAR systems. The introduction of ToF sensors necessitates substantial modifications to both hardware interfaces and software processing pipelines, creating potential compatibility issues with legacy components.

Hardware integration challenges primarily revolve around physical constraints and power requirements. ToF sensors typically demand specific mounting positions to achieve optimal field of view and depth accuracy. This spatial requirement may conflict with existing sensor arrangements on robotic platforms or the compact form factors of AR headsets. Additionally, ToF systems require precise timing synchronization with other sensors to maintain coherent environmental mapping, which may necessitate redesigning timing circuits or implementing new synchronization protocols.

Power consumption represents another critical integration hurdle. While ToF technology has become more energy-efficient, it still requires substantial power for the active illumination component. This poses particular challenges for battery-operated devices such as mobile robots or wearable AR systems, potentially reducing operational time or requiring battery capacity upgrades.

From a software perspective, integrating ToF data streams into existing perception frameworks demands significant adaptation. Most current robotic perception stacks and AR spatial mapping algorithms are optimized for different sensor modalities. Developers must implement new drivers, calibration routines, and processing algorithms specifically tailored to handle the unique characteristics of ToF data, including its noise profile and error patterns. This often requires extensive refactoring of perception pipelines rather than simple plug-and-play integration.

Data fusion represents perhaps the most complex integration challenge. Combining ToF depth information with data from other sensors (cameras, IMUs, LiDAR) requires sophisticated sensor fusion algorithms to resolve discrepancies in resolution, frame rate, and field of view. Particularly in dynamic environments, temporal alignment between different sensor streams becomes critical for accurate scene reconstruction and object tracking. Existing fusion algorithms may require substantial modification to properly incorporate the high-frequency, low-latency depth data that ToF sensors provide.
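The temporal alignment step mentioned above is often handled, in its simplest form, by pairing each depth frame with the nearest-in-time sample from another sensor and discarding pairs whose skew is too large. The sketch below shows that approach under stated assumptions: timestamps are integer microseconds on a shared clock, the second stream is sorted, and the 5 ms tolerance is an arbitrary illustrative value.

```python
from bisect import bisect_left
from typing import List, Sequence, Tuple


def nearest_index(sorted_timestamps: Sequence[int], t: int) -> int:
    """Return the index of the timestamp closest to t (ties go to the earlier one)."""
    if not sorted_timestamps:
        raise ValueError("timestamp list is empty")
    i = bisect_left(sorted_timestamps, t)
    if i == 0:
        return 0
    if i == len(sorted_timestamps):
        return len(sorted_timestamps) - 1
    before, after = sorted_timestamps[i - 1], sorted_timestamps[i]
    return i if (after - t) < (t - before) else i - 1


def pair_streams(
    depth_ts_us: Sequence[int],
    other_ts_us: Sequence[int],
    max_skew_us: int,
) -> List[Tuple[int, int]]:
    """Pair each depth frame with the nearest other-sensor sample within max_skew_us."""
    pairs = []
    for depth_index, t in enumerate(depth_ts_us):
        other_index = nearest_index(other_ts_us, t)
        if abs(other_ts_us[other_index] - t) <= max_skew_us:
            pairs.append((depth_index, other_index))
    return pairs


if __name__ == "__main__":
    depth_ts = [0, 33_000, 67_000, 100_000]                 # ~30 fps depth frames
    imu_ts = [1_000 + 10_000 * k for k in range(8)]         # samples every 10 ms
    print(pair_streams(depth_ts, imu_ts, max_skew_us=5_000))
```

In this example the last depth frame has no sample within 5 ms and is dropped. Production systems typically go further, for example by interpolating between neighbouring samples or relying on hardware-triggered capture, but the nearest-neighbour pairing shows why a shared time base is a prerequisite for fusion.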

Calibration complexity increases significantly with ToF integration. The technology requires precise intrinsic calibration to account for lens distortion and systematic measurement errors, as well as extrinsic calibration to align with other sensors in the system. Maintaining this calibration over time, especially in systems subject to vibration or temperature variations, presents ongoing challenges that may necessitate the development of new auto-calibration routines.

Hardware integration challenges primarily revolve around physical constraints and power requirements. ToF sensors typically demand specific mounting positions to achieve optimal field of view and depth accuracy. This spatial requirement may conflict with existing sensor arrangements on robotic platforms or the compact form factors of AR headsets. Additionally, ToF systems require precise timing synchronization with other sensors to maintain coherent environmental mapping, which may necessitate redesigning timing circuits or implementing new synchronization protocols.

Power consumption represents another critical integration hurdle. While ToF technology has become more energy-efficient, it still requires substantial power for the active illumination component. This poses particular challenges for battery-operated devices such as mobile robots or wearable AR systems, potentially reducing operational time or requiring battery capacity upgrades.

From a software perspective, integrating ToF data streams into existing perception frameworks demands significant adaptation. Most current robotic perception stacks and AR spatial mapping algorithms are optimized for different sensor modalities. Developers must implement new drivers, calibration routines, and processing algorithms specifically tailored to handle the unique characteristics of ToF data, including its noise profile and error patterns. This often requires extensive refactoring of perception pipelines rather than simple plug-and-play integration.

Power Efficiency and Form Factor Considerations

Power efficiency and form factor are critical to the successful deployment of Time-of-Flight (ToF) technology in robotics and augmented reality applications. As these systems increasingly depend on mobility and extended operating times, reducing energy consumption becomes essential for practical implementation.

ToF sensors traditionally consume significant power due to their active illumination requirements. The emitter component must generate high-intensity infrared light pulses, which can drain batteries quickly. Recent advancements have focused on reducing this power demand through more efficient illumination patterns and intelligent power management systems. Modern ToF modules now implement adaptive illumination techniques that adjust light intensity based on environmental conditions and distance requirements, significantly reducing power consumption during operation.
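One possible form of adaptive illumination is sketched below. It is not taken from any specific module: it assumes the returned signal from a diffuse surface falls off roughly with the square of the distance, so emitter power is scaled with the square of the range that currently needs to be covered. The function name and all limits are hypothetical, and ambient light and surface reflectivity are ignored.

```python
def adaptive_emitter_power(target_range_m, max_range_m, max_power_w, min_power_w):
    """Scale emitter power with the square of the required range.

    Assumes an inverse-square signal falloff; the result is clamped between
    min_power_w and max_power_w.
    """
    # Clamp the range ratio to [0, 1] so out-of-range targets use full power.
    ratio = min(max(target_range_m / max_range_m, 0.0), 1.0)
    return max(min_power_w, max_power_w * ratio ** 2)


# Hypothetical module: 2 W maximum emitter power rated for 4 m, 0.05 W floor.
near = adaptive_emitter_power(1.0, 4.0, 2.0, 0.05)
far = adaptive_emitter_power(4.0, 4.0, 2.0, 0.05)
```

With these assumed limits, a scene at one quarter of the rated range needs only one sixteenth of the maximum emitter power, which is where most of the savings in close-range AR interaction would come from.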

Signal processing components also contribute substantially to power requirements. Processing ToF data in real time requires processors that can analyze incoming light signals quickly. Industry leaders have developed specialized low-power ASICs (Application-Specific Integrated Circuits) that perform these calculations with minimal energy use, enabling longer operation times for battery-powered devices.

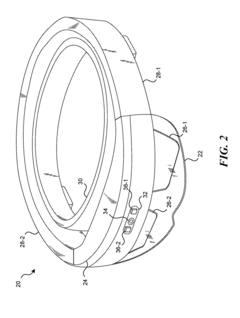

Form factor presents another significant challenge for ToF integration in compact devices. Traditional depth sensing systems were bulky, which limited their use in sleek consumer products and small robotic platforms. Miniaturization efforts have yielded impressive results, with some modern ToF modules measuring less than 5 mm in height while maintaining high performance. This reduction in size enables integration into slim AR glasses frames and compact robotic sensors without compromising functionality.

Thermal management represents another crucial aspect of form factor design. The active illumination components generate heat during operation, which must be effectively dissipated to prevent performance degradation and ensure component longevity. Engineers have developed innovative thermal management solutions, including passive cooling structures and thermally conductive pathways that efficiently channel heat away from sensitive components.

Integration challenges also arise when combining ToF sensors with other necessary components in space-constrained devices. Manufacturers have responded with highly integrated modules that combine emitters, sensors, and processing units in single packages, significantly reducing the overall footprint. These system-in-package solutions enable more elegant product designs while maintaining the low-latency performance critical for robotics and AR applications.

The industry continues to push boundaries in this area, with research focusing on novel materials and architectures that could further reduce power consumption while shrinking form factors. Emerging technologies like organic semiconductors and advanced packaging techniques promise to deliver even more efficient and compact ToF solutions in the near future.
