Time-Of-Flight Fusion: IMU/Camera Alignment, Extrinsics And Latency

SEP 22, 2025 | 9 MIN READ

ToF Fusion Background and Objectives

Time-of-Flight (ToF) technology has evolved significantly over the past decade, transforming from a niche sensing method to a mainstream solution for depth perception in various applications. The fusion of ToF sensors with Inertial Measurement Units (IMUs) and traditional cameras represents a critical advancement in spatial computing, augmented reality, autonomous navigation, and robotics. This technological convergence aims to overcome the inherent limitations of individual sensing modalities by leveraging their complementary strengths.

The historical trajectory of ToF technology began with basic range-finding applications but has rapidly expanded to include high-resolution 3D mapping, gesture recognition, and object tracking. The integration with IMUs and cameras emerged as researchers recognized that ToF sensors alone struggled with motion blur, limited range, and performance degradation in certain lighting conditions. This fusion approach has gained momentum particularly since 2015, when mobile device manufacturers began incorporating depth sensors alongside traditional imaging systems.

Current technical objectives for ToF fusion systems center on achieving precise temporal and spatial alignment between these heterogeneous sensors. Accurate extrinsic calibration between ToF sensors, IMUs, and cameras is fundamental to creating a unified coordinate system that enables reliable depth-enhanced perception. Additionally, minimizing latency between sensor measurements represents a critical goal, as even millisecond-level discrepancies can significantly impact applications requiring real-time response.

The market trajectory for this technology shows accelerating adoption across multiple sectors. In consumer electronics, ToF fusion enables enhanced photography effects, secure facial recognition, and immersive AR experiences. In automotive applications, these systems support advanced driver assistance features and environmental mapping. Industrial applications leverage the technology for precise robotic manipulation and quality control processes.

Research objectives in this field focus on developing robust algorithms for sensor synchronization that can operate under varying environmental conditions. This includes addressing challenges such as motion compensation, handling different sensor sampling rates, and accounting for environmental factors that differentially affect each sensor type. The ultimate goal is to create fusion systems that deliver consistent, high-fidelity spatial data with minimal computational overhead and power consumption.

As the technology continues to mature, standardization efforts are emerging to establish common protocols for sensor alignment and data fusion, which will be essential for broader industry adoption and interoperability between different hardware implementations.

Market Applications for ToF-IMU-Camera Fusion

The integration of Time-of-Flight (ToF) sensors with IMU and camera systems has created significant market opportunities across multiple industries. This fusion technology addresses critical challenges in spatial awareness, motion tracking, and environmental perception that individual sensors cannot solve alone.

In the automotive sector, ToF-IMU-camera fusion systems are revolutionizing advanced driver assistance systems (ADAS) and autonomous driving capabilities. These integrated sensor packages enable precise depth perception and motion understanding even in challenging lighting conditions, enhancing pedestrian detection, obstacle avoidance, and parking assistance features. Major automotive manufacturers are incorporating these systems to achieve higher levels of driving automation while maintaining safety standards.

Consumer electronics represents another substantial market, with smartphone manufacturers implementing ToF-IMU-camera fusion to enable enhanced augmented reality experiences, improved computational photography, and gesture recognition interfaces. This technology allows for more accurate 3D mapping of environments, realistic object placement in AR applications, and better motion tracking for gaming and fitness applications.

The robotics industry has embraced this sensor fusion approach for navigation and manipulation tasks. Warehouse robots, domestic service robots, and industrial automation systems benefit from the improved spatial awareness and motion understanding that ToF-IMU-camera fusion provides. The technology enables robots to operate more autonomously in dynamic environments with reduced computational requirements compared to traditional computer vision approaches.

Healthcare applications are emerging as a promising growth area, particularly in rehabilitation, gait analysis, and patient monitoring systems. The precise motion tracking capabilities combined with depth perception allow for quantitative assessment of patient movements without requiring specialized markers or equipment. This enables more accessible and continuous monitoring in both clinical and home settings.

Security and surveillance systems benefit from the enhanced object detection and tracking capabilities of fused ToF-IMU-camera systems. The ability to accurately determine depth and motion patterns improves intruder detection while reducing false alarms caused by shadows or lighting changes. These systems can operate effectively in varying environmental conditions, including low light scenarios.

Drone and UAV manufacturers are implementing this sensor fusion approach to improve navigation accuracy, obstacle avoidance, and stabilization in GPS-denied environments. The combination of depth perception with motion sensing enables more precise autonomous flight in complex environments such as urban canyons, forests, or indoor spaces where traditional navigation methods may fail.

Technical Challenges in Sensor Fusion Systems

Sensor fusion systems integrating Time-of-Flight (ToF) sensors with IMUs and cameras face significant technical challenges that impact system performance and reliability. The precise alignment of these heterogeneous sensors represents a fundamental obstacle, as each sensor operates with different coordinate systems, measurement units, and physical principles. Achieving accurate spatial registration between ToF depth data, IMU motion measurements, and camera images requires sophisticated calibration techniques that account for both intrinsic and extrinsic parameters.

Extrinsic calibration, which determines the relative position and orientation between sensors, presents particular difficulty in ToF fusion systems. Traditional calibration methods often fail to capture the complex relationships between these diverse sensor modalities. The non-linear characteristics of ToF measurements, affected by factors such as material reflectivity and ambient lighting conditions, further complicate the calibration process. Even minor misalignments can propagate into substantial errors in the fused data output.
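
To make the point about misalignment concrete, the following is a minimal sketch of how a small rotational error in the extrinsics displaces a ToF point after it is mapped into another sensor's frame. The function names are hypothetical, and the rotation is deliberately reduced to a single yaw angle about the z-axis; a real system would use a full 3D rotation.

```python
import math

def apply_extrinsics(point, yaw_rad, translation):
    """Map a 3D point into another sensor frame.

    Simplifying assumption: the rotation is a pure yaw about the z-axis.
    """
    x, y, z = point
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    tx, ty, tz = translation
    return (c * x - s * y + tx, s * x + c * y + ty, z + tz)

def misalignment_error(point, yaw_error_rad, translation=(0.0, 0.0, 0.0)):
    """Distance between a point mapped with the true yaw (taken as 0)
    and the same point mapped with a yaw that is off by yaw_error_rad."""
    true_pos = apply_extrinsics(point, 0.0, translation)
    est_pos = apply_extrinsics(point, yaw_error_rad, translation)
    return math.dist(true_pos, est_pos)
```

Under these assumptions, `misalignment_error((5.0, 0.0, 0.0), math.radians(0.1))` comes out at roughly 8.7 mm: a 0.1 degree rotation error already shifts a point at 5 m by several millimetres, and the shift grows linearly with range.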

Temporal synchronization emerges as another critical challenge, with sensor latency introducing significant complications. ToF sensors, IMUs, and cameras typically operate at different sampling rates and processing times. The ToF sensor may have frame rates of 30-60Hz, while IMUs can sample at rates exceeding 1000Hz, and cameras operate at various frame rates depending on resolution and lighting conditions. This temporal disparity creates alignment problems when fusing data streams that were not captured simultaneously.
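
One common way to bridge the rate mismatch described above is to resample the high-rate IMU stream at each ToF or camera frame timestamp. The sketch below assumes all timestamps are already expressed in one shared clock and interpolates a single scalar IMU channel (for example one gyroscope axis) linearly; the function name is hypothetical.

```python
from bisect import bisect_left

def interpolate_imu(imu_times, imu_values, query_time):
    """Linearly interpolate a scalar IMU channel at query_time.

    imu_times must be sorted in ascending order. Returns None when
    query_time lies outside the recorded IMU interval, because
    extrapolating would hide a synchronization problem.
    """
    if not imu_times or query_time < imu_times[0] or query_time > imu_times[-1]:
        return None
    i = bisect_left(imu_times, query_time)
    if imu_times[i] == query_time:
        return imu_values[i]
    t0, t1 = imu_times[i - 1], imu_times[i]
    w = (query_time - t0) / (t1 - t0)
    return (1.0 - w) * imu_values[i - 1] + w * imu_values[i]
```

Linear interpolation is a reasonable choice for angular rates and accelerations sampled at around 1000 Hz. Orientation estimates would instead need interpolation on the rotation group (for example quaternion slerp), which this sketch does not cover.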

Latency compensation mechanisms must address both deterministic and variable delays. Hardware-induced latencies from sensor readout, data transfer, and processing create a temporal offset between when an event occurs and when it is registered in the system. Software processing pipelines introduce additional variable delays that depend on computational load and algorithm complexity. Without proper compensation, these latencies result in motion artifacts and misalignment in dynamic scenes.
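
The deterministic part of such a delay can often be estimated from the data itself. The sketch below is one possible approach, not a method taken from the source: it searches for the integer sample lag that maximizes the cross-correlation between two motion signals resampled to a common rate, for example angular-rate magnitude from the IMU and from frame-to-frame image motion. Function names are hypothetical, and the signals are assumed to be roughly zero-mean.

```python
def correlation(reference, delayed, lag):
    """Sum of products of reference[i] and delayed[i + lag] over the overlap."""
    return sum(
        reference[i] * delayed[i + lag]
        for i in range(len(reference))
        if 0 <= i + lag < len(delayed)
    )

def estimate_lag(reference, delayed, max_lag):
    """Return the lag (in samples) within [-max_lag, max_lag] with the
    highest correlation. A positive result means `delayed` trails
    `reference` by that many samples."""
    return max(
        range(-max_lag, max_lag + 1),
        key=lambda lag: correlation(reference, delayed, lag),
    )
```

Dividing the returned lag by the common sample rate gives an offset in seconds that can be subtracted from the delayed stream's timestamps. Variable, load-dependent delays are not captured by a single constant offset and need per-sample timestamps instead.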

Environmental factors further exacerbate these challenges. ToF sensors are particularly susceptible to interference from ambient infrared light, multiple reflections, and scattering effects. These environmental dependencies create non-uniform error distributions that are difficult to model and compensate for in the fusion algorithm. The performance of the entire system can degrade significantly under challenging lighting conditions or when encountering highly reflective or absorptive surfaces.

Real-time processing requirements impose additional constraints on fusion algorithms. Many applications demand immediate sensor fusion results with minimal latency, limiting the computational complexity of alignment and calibration techniques. This creates a fundamental trade-off between accuracy and processing speed that must be carefully balanced based on application requirements.

Current Alignment and Calibration Methodologies

01 Time-of-Flight sensor calibration and alignment techniques

Various methods exist for calibrating and aligning Time-of-Flight (ToF) sensors with other sensing systems. These techniques determine the spatial relationship between ToF sensors and other sensors (such as cameras or LiDAR) to ensure accurate data fusion. The calibration process typically measures and compensates for intrinsic parameters as well as extrinsic parameters, such as position and orientation offsets between the sensors in a multi-sensor system, to minimize alignment errors and improve the accuracy of depth measurements.

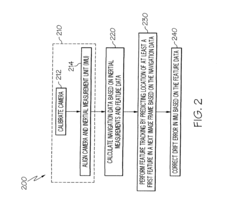

- Latency compensation in ToF sensor fusion systems: Methods for addressing temporal misalignment (latency) issues in Time-of-Flight sensor fusion applications. These approaches include timestamp synchronization, predictive algorithms to compensate for delays between different sensor data streams, and buffering techniques to align data temporally. Latency compensation is crucial for real-time applications where precise timing between multiple sensors affects the accuracy of the fused data output.

- Extrinsic parameter estimation for ToF sensor fusion: Techniques for determining the extrinsic parameters (position and orientation) between Time-of-Flight sensors and other sensing modalities. These methods include feature-based alignment, target-based calibration, and optimization algorithms that minimize registration errors. Accurate extrinsic parameter estimation enables proper transformation of data between different sensor coordinate systems, which is essential for effective sensor fusion.

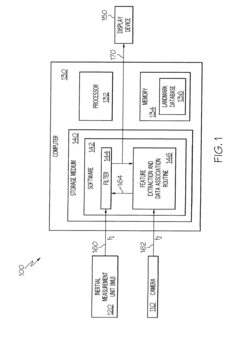

- Multi-sensor fusion architectures incorporating ToF data: System architectures that integrate Time-of-Flight sensor data with information from other sensing modalities such as RGB cameras, infrared sensors, or radar. These architectures include hardware configurations, data processing pipelines, and fusion algorithms that combine the strengths of different sensors. The fusion approaches may be early fusion (raw data level), late fusion (decision level), or hybrid methods that optimize for specific application requirements.

- Real-time processing techniques for ToF sensor data: Methods for efficiently processing Time-of-Flight sensor data in real-time applications. These techniques include parallel processing architectures, hardware acceleration, optimized algorithms for point cloud processing, and efficient data structures. Real-time processing is particularly important for applications requiring immediate response, such as autonomous navigation, object tracking, or augmented reality, where delays in processing ToF data would impact system performance.
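
The target-based extrinsic estimation listed above amounts to finding the rigid transform that minimizes registration error between corresponding points seen by two sensors. As a minimal sketch, the planar (2D) version of that least-squares problem has a closed-form solution. The function name is hypothetical, and the reduction to 2D is a simplification for illustration; full 3D calibration typically relies on an SVD-based solution or nonlinear optimization.

```python
import math

def fit_planar_extrinsics(src, dst):
    """Least-squares rotation angle and translation mapping src onto dst.

    src and dst are equal-length lists of corresponding (x, y) points,
    e.g. target corners seen by the ToF sensor and by the camera.
    Returns (theta_rad, (tx, ty)) such that dst ~= R(theta) @ src + t.
    """
    n = len(src)
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(q[0] for q in dst) / n
    dy = sum(q[1] for q in dst) / n

    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        dot += px * qx + py * qy      # proportional to cos(theta)
        cross += px * qy - py * qx    # proportional to sin(theta)

    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid.
    return theta, (dx - (c * sx - s * sy), dy - (s * sx + c * sy))
```

With noise-free correspondences the function recovers the transform exactly; with noisy data it returns the least-squares fit, and the remaining per-point residual is a direct measure of registration error.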

02 Latency compensation in ToF sensor fusion systems

Methods for addressing temporal misalignment (latency) in Time-of-Flight sensor fusion systems. These approaches include timestamp synchronization, predictive algorithms to compensate for delays between data acquisition and processing, and techniques to align data streams from multiple sensors with different capture rates. Latency compensation is crucial for accurate fusion of ToF data with other sensor inputs, especially in dynamic environments or real-time applications.

03 Multi-sensor fusion architectures incorporating ToF data

System architectures for fusing Time-of-Flight sensor data with information from other sensing modalities. These designs include hardware configurations, data processing pipelines, and fusion algorithms that combine depth information from ToF sensors with RGB images, LiDAR point clouds, or other sensor data. The architectures address challenges in data alignment, synchronization, and integration to produce coherent environmental representations for applications like autonomous navigation or augmented reality.

04 Extrinsic parameter estimation for ToF sensors

Algorithms and methods specifically focused on determining the extrinsic parameters (position and orientation) of Time-of-Flight sensors relative to other sensors or a reference coordinate system. These approaches include target-based calibration, feature matching across sensor modalities, optimization techniques to minimize alignment errors, and automatic extrinsic parameter estimation during system operation. Accurate extrinsic calibration is essential for proper spatial alignment of ToF data with other sensor information.

05 Real-time ToF data processing and alignment

Techniques for processing and aligning Time-of-Flight sensor data in real-time applications. These methods include efficient algorithms for point cloud registration, hardware acceleration for ToF data processing, parallel computing approaches, and optimized fusion pipelines. Real-time processing enables applications like autonomous navigation, object tracking, and interactive systems where immediate alignment and fusion of ToF data with other sensor inputs is required despite computational constraints.

Leading Companies in ToF and Sensor Fusion

Time-of-Flight fusion technology, combining IMU and camera data, is currently in a growth phase with increasing market adoption across robotics, autonomous vehicles, and AR/VR applications. The market is expanding rapidly, projected to reach several billion dollars by 2025, driven by demand for precise spatial awareness in consumer electronics and industrial applications. Technologically, the field shows varying maturity levels among key players. Microsoft Technology Licensing and PMD Technologies demonstrate advanced capabilities in sensor fusion algorithms, while companies like ifm electronic and ams-OSRAM lead in hardware integration. Academic institutions including Beijing Institute of Technology and Zhejiang University contribute significant research advancements. Chinese companies such as Xpeng Aeroht and Tencent are increasingly investing in this space, particularly for autonomous systems applications, indicating a competitive landscape with both established players and emerging innovators.

PMD Technologies Ltd.

Technical Solution: PMD Technologies has developed advanced Time-of-Flight (ToF) fusion solutions that integrate IMU and camera data with precise alignment techniques. Their technology employs a sophisticated calibration process that determines the spatial relationship (extrinsics) between the ToF sensor and IMU/camera systems with sub-millimeter accuracy. PMD's approach uses a multi-stage calibration workflow that first establishes the intrinsic parameters of each sensor independently before determining their relative positions and orientations. For latency compensation, they implement a predictive filtering algorithm that accounts for the different sampling rates and processing delays of each sensor type. Their solution includes hardware synchronization triggers that ensure temporal alignment between sensor measurements, critical for dynamic scenes. PMD has also developed specialized software libraries that handle the fusion of depth data with motion information, enabling robust 3D reconstruction even during rapid movement.

Strengths: Industry-leading precision in sensor alignment with sub-millimeter accuracy; comprehensive solution addressing both spatial and temporal alignment challenges. Weaknesses: Their solutions often require specialized hardware components for optimal performance, potentially increasing implementation costs and complexity.

ifm electronic GmbH

Technical Solution: ifm electronic has pioneered an integrated approach to Time-of-Flight fusion that addresses the critical challenges of IMU/camera alignment, extrinsics calibration, and latency compensation. Their O3D series of 3D sensors incorporates a factory-calibrated system where ToF cameras and IMUs are precisely aligned during manufacturing, eliminating the need for field calibration in many applications. The company's technology utilizes a proprietary algorithm that continuously monitors and adjusts for any drift between sensors during operation. For extrinsics determination, ifm employs a reference object-based calibration method that can achieve accuracy within 0.1 degrees of rotation and 0.5mm of translation. Their latency management system implements a timestamp-based synchronization protocol that aligns data streams from different sensors with microsecond precision, enabling accurate fusion even in high-speed industrial applications. The system also features adaptive sampling rates that optimize performance based on the detected motion characteristics.

Strengths: Factory-calibrated systems reduce deployment complexity; industrial-grade robustness suitable for harsh environments; microsecond-level synchronization precision. Weaknesses: Less flexibility for custom sensor configurations; higher initial cost compared to non-integrated solutions.

Key Innovations in Extrinsic Parameter Estimation

Camera and inertial measurement unit integration with navigation data feedback for feature tracking

Patent | Active | EP2434256A2

Innovation

- A navigation device integrating an inertial measurement unit (IMU) with a monocular camera and a processor that extracts features from image frames, using both inertial measurements and feature positions to calculate navigation data, with a hybrid extended Kalman filter to integrate and correct data, reducing errors and processing requirements.

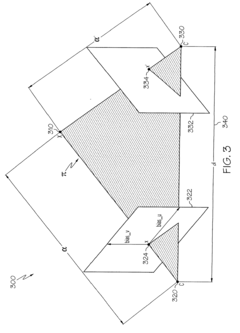

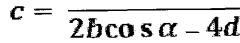

Time-of-flight system with time-of-flight receiving devices which are spatially separate from one another, and method for measuring the distance from an object

Patent | WO2014180553A1

Innovation

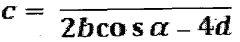

- A time-of-flight system with spatially separated receiving devices that use a common modulated electromagnetic radiation source, synchronized across all devices, allowing for geometric correction of depth values to determine object distance using the law of cosines, enabling flexible positioning and accurate 3D data acquisition from multiple angles without the need for a common installation space.
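
The law-of-cosines correction mentioned above can be illustrated with a small sketch. The geometry here is an assumption, not taken from the patent text: a single emitter and one receiver are separated by a known baseline, the receiver measures the total path length (emitter to object to receiver), and the angle between the receiver's viewing ray and the baseline is known from the pixel direction. Applying the law of cosines to the emitter-receiver-object triangle and substituting the emitter-object leg with the total path minus the receiver range yields a closed-form range. The function name is hypothetical.

```python
import math

def receiver_range(total_path, baseline, alpha_rad):
    """Distance from the receiver to the object.

    total_path : measured emitter -> object -> receiver path length
    baseline   : emitter-receiver separation
    alpha_rad  : angle at the receiver between its viewing ray and
                 the direction toward the emitter

    From (total_path - r)^2 = r^2 + baseline^2 - 2*r*baseline*cos(alpha):
        r = (total_path^2 - baseline^2) / (2 * (total_path - baseline*cos(alpha)))
    """
    return (total_path ** 2 - baseline ** 2) / (
        2.0 * (total_path - baseline * math.cos(alpha_rad))
    )
```

When the baseline shrinks to zero the expression reduces to half the total path, which is the familiar co-located ToF case.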

Benchmarking and Performance Metrics

Establishing robust benchmarking methodologies and performance metrics is crucial for evaluating Time-of-Flight fusion systems that integrate IMU and camera data. The primary challenge lies in creating standardized evaluation frameworks that can accurately measure the precision of sensor alignment, extrinsic calibration quality, and system latency under various operational conditions.

Temporal alignment accuracy represents a fundamental performance metric, typically measured in microseconds of deviation between IMU and camera timestamps. High-performing systems generally achieve synchronization errors below 1ms, with elite implementations reaching sub-500μs precision. These measurements should be conducted across different motion profiles to ensure consistency under varying dynamics.

Spatial calibration quality metrics focus on the accuracy of extrinsic parameters between sensors. The industry standard employs reprojection error as a key indicator, with values below 0.5 pixels considered excellent for most applications. Additionally, rotation error (measured in degrees) and translation error (measured in millimeters) provide direct insights into the physical alignment precision between the IMU and camera coordinate frames.
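
As a minimal sketch of how these two metrics can be computed, the code below evaluates RMS reprojection error under an ideal pinhole model and the angular difference between an estimated and a reference rotation. Function names and the pinhole parameters (`fx`, `fy`, `cx`, `cy`) are illustrative assumptions; lens distortion is ignored, and the 3D points are assumed to be already transformed into the camera frame using the extrinsics under test.

```python
import math

def project(point_cam, fx, fy, cx, cy):
    """Ideal pinhole projection of a camera-frame point to pixel coordinates."""
    x, y, z = point_cam
    return (fx * x / z + cx, fy * y / z + cy)

def rms_reprojection_error(points_cam, observed_px, fx, fy, cx, cy):
    """Root-mean-square pixel distance between projected and observed points."""
    total = 0.0
    for point, (u_obs, v_obs) in zip(points_cam, observed_px):
        u, v = project(point, fx, fy, cx, cy)
        total += (u - u_obs) ** 2 + (v - v_obs) ** 2
    return math.sqrt(total / len(points_cam))

def rotation_error_deg(r_est, r_ref):
    """Angle in degrees of the relative rotation between two 3x3 matrices.

    Uses angle = acos((trace(r_est^T r_ref) - 1) / 2); the trace of
    A^T B equals the sum of element-wise products of A and B.
    """
    trace = sum(r_est[i][j] * r_ref[i][j] for i in range(3) for j in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # guard rounding
    return math.degrees(math.acos(cos_angle))
```

Translation error is simply the Euclidean distance between the estimated and reference translation vectors, reported in millimetres.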

End-to-end system latency represents another critical performance dimension, encompassing data acquisition, processing, and output generation times. Competitive ToF fusion systems typically maintain latencies below 20ms, with cutting-edge implementations achieving 5-10ms response times. This metric significantly impacts the system's suitability for real-time applications such as autonomous navigation or augmented reality.

Environmental robustness testing has emerged as an essential benchmarking approach, evaluating system performance across varying lighting conditions, temperature ranges, and vibration profiles. Degradation curves plotting performance metrics against environmental variables provide valuable insights into operational boundaries and failure modes.

Computational efficiency metrics, including CPU/GPU utilization, memory footprint, and power consumption, have gained prominence as ToF fusion systems increasingly target mobile and embedded platforms. These metrics help quantify the practical deployability of different fusion algorithms across hardware constraints.

The research community has developed several standardized datasets for benchmarking, including EuRoC, TUM VI, and KITTI, each offering different motion profiles and environmental conditions. However, there remains a need for specialized datasets that specifically target the unique challenges of ToF camera fusion with IMUs, particularly regarding depth accuracy correlation with motion estimation.

System Integration and Hardware Considerations

The successful integration of Time-of-Flight (ToF) sensors with IMU and camera systems requires careful hardware selection and system architecture design. When implementing ToF fusion solutions, hardware compatibility becomes a critical factor affecting overall system performance. Different ToF sensors offer varying resolution, range capabilities, and frame rates that must align with the specifications of companion IMUs and cameras to achieve optimal fusion results.

Physical mounting considerations significantly impact the accuracy of extrinsic calibration between sensors. Rigid mounting structures minimize relative movement between sensors during operation, reducing calibration drift over time. Custom-designed sensor brackets that maintain precise geometric relationships between ToF sensors, cameras, and IMUs are essential for maintaining calibration stability in dynamic environments.

Synchronization hardware plays a pivotal role in addressing latency challenges. Hardware-level synchronization mechanisms such as trigger signals, PPS (Pulse Per Second) inputs, and hardware timestamps enable precise temporal alignment between sensor data streams. Advanced systems implement FPGA-based synchronization controllers that can coordinate sensor triggering with sub-millisecond precision, significantly reducing temporal alignment errors in fusion algorithms.
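Once all sensors are stamped against a shared hardware clock, the fusion front end can look up the IMU state at each frame's capture time instead of using the nearest sample. The following is a minimal sketch of that step using linear interpolation; it assumes timestamps are already on a common clock, and the function name `imu_at_frame_times` is hypothetical.

```python
import numpy as np

def imu_at_frame_times(imu_ts, imu_gyro, frame_ts):
    """Linearly interpolate 3-axis gyro samples at camera/ToF frame timestamps.

    imu_ts:   (N,) increasing IMU timestamps in seconds
    imu_gyro: (N, 3) angular rates
    frame_ts: (M,) frame timestamps on the same clock
    Note: np.interp clamps to the end values outside the IMU time range,
    so frames outside that range should be discarded by the caller.
    """
    imu_gyro = np.asarray(imu_gyro, dtype=float)
    columns = [np.interp(frame_ts, imu_ts, imu_gyro[:, k])
               for k in range(imu_gyro.shape[1])]
    return np.column_stack(columns)

imu_ts = np.array([0.00, 0.01, 0.02])
imu_gyro = np.array([[0.0, 0.0, 0.0],
                     [1.0, 2.0, 3.0],
                     [2.0, 4.0, 6.0]])
frame_ts = np.array([0.005, 0.015])
gyro_at_frames = imu_at_frame_times(imu_ts, imu_gyro, frame_ts)
```

Linear interpolation is adequate when the IMU rate is much higher than the motion bandwidth; any residual clock offset has to be removed before this step, since interpolation cannot correct it.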

Power management considerations cannot be overlooked in ToF fusion systems. ToF sensors typically consume substantial power during operation, particularly when operating at high frame rates or in extended range modes. Implementing efficient power distribution systems with appropriate voltage regulation and thermal management is necessary to maintain system stability and prevent performance degradation due to thermal effects.

Data throughput requirements present another significant integration challenge. High-resolution ToF sensors generate substantial data volumes that must be efficiently transferred and processed alongside IMU and camera data. System designers must carefully select interface technologies (USB3, Ethernet, MIPI CSI) that provide sufficient bandwidth while considering latency implications of each protocol. Some implementations utilize dedicated data routing hardware such as PCIe switches or custom backplanes to manage multi-sensor data flows.
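A quick bandwidth estimate helps when shortlisting interfaces. The sketch below computes the raw payload rate of a single stream and compares it with nominal link rates. The 640x480, 16-bit, 30 fps sensor is an assumed example rather than a specific product, and the link figures are nominal signaling rates (1 Gbit/s for Gigabit Ethernet, 5 Gbit/s for USB 3.0); usable throughput is lower once protocol overhead is included.

```python
def stream_mbit_per_s(width, height, bytes_per_pixel, fps):
    """Raw payload rate of an uncompressed image stream in Mbit/s."""
    return width * height * bytes_per_pixel * 8 * fps / 1e6

# Nominal signaling rates in Mbit/s (assumed reference points; real
# throughput is lower after encoding and protocol overhead).
NOMINAL_LINK_MBIT = {
    "gigabit_ethernet": 1000.0,
    "usb3_5gbps": 5000.0,
}

def link_utilization(stream_mbit, link_name):
    """Fraction of the nominal link rate consumed by the stream."""
    return stream_mbit / NOMINAL_LINK_MBIT[link_name]

# Assumed example sensor: 640x480 depth frames, 2 bytes per pixel, 30 fps.
depth_rate = stream_mbit_per_s(640, 480, 2, 30)
eth_share = link_utilization(depth_rate, "gigabit_ethernet")
```

Many ToF sensors also output amplitude or raw phase frames alongside depth, which multiplies this figure, so the estimate should be repeated for every stream that shares the link.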

Environmental factors also influence hardware selection and integration strategies. ToF sensors deployed in outdoor environments require additional considerations for ambient light interference, temperature variations, and mechanical protection. Integrated environmental compensation mechanisms, such as ambient light rejection filters and temperature sensors for drift correction, enhance system robustness across diverse operating conditions.
