Investigating Photonic Neural Network Contributions to Machine Learning

OCT 1, 2025 · 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Photonic Neural Networks Background and Objectives

Photonic neural networks represent a revolutionary approach to computing that leverages the unique properties of light to perform neural network operations. The concept emerged in the late 1980s but has gained significant momentum over the past decade due to advancements in integrated photonics, nanofabrication techniques, and the increasing computational demands of modern machine learning algorithms. These optical computing systems utilize photons rather than electrons to process information, offering theoretical advantages in speed, energy efficiency, and parallelism.

The evolution of photonic neural networks has been closely tied to developments in both optical computing and artificial intelligence. Early optical neural networks relied on bulk optical components, making them impractical for widespread deployment. However, recent breakthroughs in silicon photonics, plasmonic structures, and programmable nanophotonic processors have enabled more compact and scalable implementations. This technological progression has coincided with the exponential growth in computational requirements for training and deploying sophisticated machine learning models.

The primary objective of photonic neural networks is to overcome the fundamental limitations of electronic computing systems, particularly in terms of energy consumption and processing speed. As machine learning models continue to grow in complexity, traditional electronic hardware faces increasing challenges related to power consumption, heat dissipation, and computational throughput. Photonic neural networks aim to address these bottlenecks by exploiting the inherent parallelism of optical systems and the minimal heat generation associated with photonic information processing.

Current research in photonic neural networks focuses on several key technical goals: achieving higher integration density of optical components, improving the precision and reliability of optical operations, developing efficient optical-electronic interfaces, and creating programming frameworks that can effectively utilize the unique capabilities of photonic hardware. Additionally, there is significant interest in developing specialized photonic architectures optimized for specific machine learning tasks, such as convolutional operations for image processing or reservoir computing for time-series analysis.

The convergence of photonics and neural networks also presents opportunities for novel computing paradigms that transcend the limitations of traditional von Neumann architectures. By performing computations directly in the optical domain, photonic neural networks can potentially achieve true parallel processing of information, eliminating the memory bottleneck that constrains electronic systems. This fundamental architectural advantage could enable new approaches to machine learning that are not feasible with conventional computing technologies.

Looking forward, the field aims to demonstrate practical photonic neural network systems that can outperform electronic counterparts in real-world machine learning applications, while simultaneously reducing energy consumption by orders of magnitude. This ambitious goal requires interdisciplinary collaboration across optics, materials science, electrical engineering, and computer science to overcome the substantial technical challenges involved.

Market Analysis for Photonic Computing Solutions

The photonic computing market is experiencing significant growth, driven by increasing demand for faster, more energy-efficient computing solutions for machine learning applications. Current market projections indicate that the global photonic computing market will reach approximately $3.8 billion by 2035, with a compound annual growth rate (CAGR) of 30% between 2023 and 2035. This growth rate substantially exceeds that of traditional computing hardware markets, reflecting the disruptive potential of photonic neural networks.
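Because the projection is stated as an end value plus a CAGR, the 2023 market size it implies can be recovered from the compound-growth relation end value = start value x (1 + rate)^years. The short Python sketch below runs that arithmetic on the figures quoted above; the function names are illustrative only.

```python
def implied_start_value(end_value: float, rate: float, years: int) -> float:
    """Starting value that grows to end_value at a constant annual rate."""
    return end_value / (1.0 + rate) ** years


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Constant annual growth rate linking start_value to end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


end_2035 = 3.8e9       # USD, 2035 projection quoted in the text
rate = 0.30            # 30% CAGR quoted in the text
years = 2035 - 2023    # 12 compounding periods

start_2023 = implied_start_value(end_2035, rate, years)
print(f"Implied 2023 market: ${start_2023 / 1e6:.0f} million")
print(f"Round-trip CAGR check: {cagr(start_2023, end_2035, years):.2%}")
```

With these inputs the implied 2023 market is roughly $163 million; the growth factor (1.3)^12 ≈ 23.3 shows how strongly a long-horizon projection depends on the assumed rate.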

The primary market segments for photonic neural network solutions include data centers, telecommunications, healthcare diagnostics, autonomous vehicles, and aerospace applications. Data centers represent the largest immediate opportunity, with potential energy savings of up to 90% compared to traditional electronic computing systems for specific machine learning workloads.

Customer demand is primarily driven by three factors: computational speed requirements for complex AI models, energy efficiency concerns, and latency-sensitive applications. Organizations managing large-scale AI infrastructure report that power consumption has become their primary constraint, creating a strong value proposition for photonic solutions that offer orders-of-magnitude improvements in energy efficiency.

Market research indicates that early adopters are willing to pay premium prices for photonic computing solutions that demonstrate 10-100x performance improvements for specific machine learning tasks. The total addressable market for specialized AI accelerators is projected to reach $70 billion by 2030, with photonic neural networks potentially capturing 15-20% of this market.

Regional analysis shows North America leading in research and development investments, while Asia-Pacific represents the fastest-growing market for implementation, particularly in China, Japan, and South Korea. European markets show strong interest in photonic computing for scientific and healthcare applications, with significant research clusters in Germany, France, and the Netherlands.

Competitive dynamics reveal a market structure with three distinct segments: established technology corporations investing in photonic R&D (Intel, IBM, Hewlett Packard Enterprise), specialized photonic computing startups (Lightmatter, Lightelligence, Luminous Computing), and research institutions commercializing breakthrough technologies. Current market penetration remains limited to early adopters and specialized applications, with broader commercial deployment expected between 2025 and 2028.

Customer feedback indicates that integration challenges with existing digital infrastructure represent the primary barrier to adoption, suggesting significant market opportunity for solutions that offer seamless integration pathways and hybrid computing architectures combining electronic and photonic elements.

Current State and Challenges in Photonic Neural Networks

Photonic neural networks (PNNs) represent a significant advancement in the field of neuromorphic computing, leveraging optical components to perform neural network computations. Currently, PNNs exist in various implementation forms, including free-space optical systems, integrated photonic circuits, and hybrid electro-optical architectures. Each approach offers unique advantages while facing distinct technical challenges.

Free-space optical implementations utilize spatial light modulators and lens systems to perform matrix multiplications at the speed of light. These systems excel at parallel processing but struggle with scalability due to alignment precision requirements and sensitivity to environmental factors. Recent advancements have demonstrated proof-of-concept systems capable of processing simple image recognition tasks, though with limited network depth.

Integrated photonic circuits represent the most promising direction for practical PNN deployment. Silicon photonics platforms have enabled the development of on-chip optical interference units that can perform matrix operations with exceptional energy efficiency. Current state-of-the-art integrated PNNs can achieve processing speeds of several petaoperations per second while consuming only milliwatts of power, representing orders of magnitude improvement over electronic counterparts.
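The on-chip optical interference units described above are commonly built as meshes of Mach-Zehnder interferometers (MZIs), each applying a tunable 2x2 transformation to a pair of waveguides. The following is a minimal, idealized Python/NumPy sketch, not a model of any particular chip: it assumes lossless components, one common MZI convention (external phase phi, 50:50 coupler, internal phase theta, 50:50 coupler), and a rectangular mesh with random phases. It shows the mesh composing into a unitary matrix that acts on a vector of input field amplitudes, with photodetectors reading out intensities.

```python
import numpy as np


def mzi(theta: float, phi: float) -> np.ndarray:
    """2x2 transfer matrix of an ideal, lossless Mach-Zehnder interferometer.

    Assumed convention: external phase phi on the upper arm, a 50:50 coupler,
    internal phase theta on the upper arm, then a second 50:50 coupler.
    """
    coupler = np.array([[1, 1j], [1j, 1]]) / np.sqrt(2)
    internal = np.diag([np.exp(1j * theta), 1])
    external = np.diag([np.exp(1j * phi), 1])
    return coupler @ internal @ coupler @ external


def embed(block: np.ndarray, n: int, k: int) -> np.ndarray:
    """Place a 2x2 block acting on waveguides (k, k+1) inside an n x n identity."""
    t = np.eye(n, dtype=complex)
    t[k:k + 2, k:k + 2] = block
    return t


def rectangular_mesh(n: int, rng: np.random.Generator) -> np.ndarray:
    """Compose n columns of MZIs on alternating waveguide pairs, random phases."""
    u = np.eye(n, dtype=complex)
    for col in range(n):
        for k in range(col % 2, n - 1, 2):
            theta, phi = rng.uniform(0.0, 2 * np.pi, size=2)
            u = embed(mzi(theta, phi), n, k) @ u
    return u


rng = np.random.default_rng(0)
n = 4
U = rectangular_mesh(n, rng)
x = rng.normal(size=n) + 1j * rng.normal(size=n)  # input field amplitudes
y = U @ x                                         # propagation through the mesh
detected = np.abs(y) ** 2                         # photodetectors measure intensity

print(np.allclose(U.conj().T @ U, np.eye(n)))              # unitary mesh
print(np.isclose(detected.sum(), np.sum(np.abs(x) ** 2)))  # power is conserved
```

Because a lossless mesh can only realize unitary matrices, a general weight matrix W is commonly factored by singular value decomposition, W = U Σ V†, with two meshes implementing U and V† and per-channel attenuation or gain implementing Σ.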

Despite these advances, significant challenges persist in PNN development. The primary technical hurdle remains the implementation of efficient optical nonlinear activation functions. While electronic neural networks easily implement nonlinearities through software, optical systems require physical components that introduce nonlinear transformations while maintaining signal integrity. Current solutions using materials like graphene and phase-change materials show promise but suffer from insertion loss and limited reconfigurability.
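Graphene, one of the materials named above, is a well-known saturable absorber, so saturable absorption is a natural way to illustrate how an optical activation function behaves. The sketch below uses a deliberately simplified thin-absorber model (an assumption, not a device model): absorption saturates as alpha(I) = alpha0 / (1 + I / I_sat) and is evaluated at the input intensity. The optical depth and saturation intensity are illustrative values.

```python
import numpy as np


def saturable_absorber(i_in, optical_depth: float = 2.0, i_sat: float = 1.0):
    """Output intensity of an idealized saturable absorber.

    Simplified thin-absorber model (assumption): absorption saturates as
    alpha(I) = alpha0 / (1 + I / I_sat), evaluated at the input intensity, so
    T(I) = exp(-alpha0 * L / (1 + I / I_sat)). optical_depth is alpha0 * L.
    """
    i_in = np.asarray(i_in, dtype=float)
    transmission = np.exp(-optical_depth / (1.0 + i_in / i_sat))
    return i_in * transmission


intensities = np.array([0.01, 0.1, 1.0, 10.0, 100.0])  # in units of I_sat
out = saturable_absorber(intensities)
for i_val, o_val in zip(intensities, out):
    print(f"I_in = {i_val:7.2f} I_sat -> transmission {o_val / i_val:.3f}")
```

Weak inputs are strongly attenuated (transmission near e^-2 ≈ 0.14 for the assumed optical depth of 2), while strong inputs pass almost unattenuated, giving the thresholding, ReLU-like shape a network needs. The model also makes the insertion-loss problem visible: even at high intensity some light is still absorbed.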

Another critical challenge is the development of optical memory elements for weight storage. Most current implementations rely on electronic memory to store weights, creating a bottleneck in the electro-optical interface. Research into non-volatile photonic memory using phase-change materials shows potential but remains in early experimental stages.

Fabrication precision represents another significant obstacle. Integrated photonic components require nanometer-scale precision, and manufacturing variations can significantly impact performance. Current yield rates for complex photonic circuits remain too low for commercial viability in large-scale neural network implementations.
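The effect of manufacturing variation can be explored with a small Monte Carlo experiment. This sketch assumes, as a simplification, that fabrication errors appear only as independent Gaussian offsets on every phase setting of an idealized, lossless 8-waveguide MZI mesh; coupler-ratio errors, loss, and thermal crosstalk are ignored. It reports the mean fidelity |Tr(U_ideal^H U_actual)| / N between the intended and perturbed transfer matrices, and the error magnitudes are illustrative rather than measured.

```python
import numpy as np

COUPLER = np.array([[1, 1j], [1j, 1]]) / np.sqrt(2)  # ideal 50:50 coupler


def mzi(theta, phi):
    """Ideal MZI: external phase phi, coupler, internal phase theta, coupler."""
    return (COUPLER @ np.diag([np.exp(1j * theta), 1])
            @ COUPLER @ np.diag([np.exp(1j * phi), 1]))


def mesh(phases, n):
    """Rectangular MZI mesh; phases holds one (theta, phi) row per MZI."""
    u = np.eye(n, dtype=complex)
    rows = iter(phases)
    for col in range(n):
        for k in range(col % 2, n - 1, 2):
            t = np.eye(n, dtype=complex)
            t[k:k + 2, k:k + 2] = mzi(*next(rows))
            u = t @ u
    return u


def fidelity(u_target, u_actual):
    """|Tr(U_target^H U_actual)| / n; equals 1.0 for a perfect match."""
    return abs(np.trace(u_target.conj().T @ u_actual)) / u_target.shape[0]


rng = np.random.default_rng(0)
n = 8
n_mzis = n * (n - 1) // 2                      # 28 MZIs for 8 waveguides
ideal = rng.uniform(0.0, 2 * np.pi, size=(n_mzis, 2))
u_ideal = mesh(ideal, n)

for sigma in (0.0, 0.01, 0.05, 0.1):           # phase-error std. dev. in radians
    trials = [fidelity(u_ideal, mesh(ideal + rng.normal(0.0, sigma, ideal.shape), n))
              for _ in range(200)]
    print(f"sigma = {sigma:.2f} rad -> mean fidelity {np.mean(trials):.4f}")
```

Since light traverses many MZIs on its way through the mesh, small per-device errors accumulate, which is one reason larger meshes can require calibration or error-tolerant designs.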

The geographical distribution of PNN research shows concentration in North America, Europe, and East Asia, with notable centers at MIT, Stanford, EPFL, and the Chinese Academy of Sciences. Industry involvement has increased significantly in the past three years, with companies like Intel, IBM, and Lightmatter developing proprietary PNN architectures for specific machine learning applications.

Current Photonic Neural Network Architectures

01 Optical computing architectures for neural networks

Photonic neural networks leverage optical computing architectures to process information using light rather than electricity. These systems utilize optical components such as waveguides, resonators, and interferometers to perform neural network computations with higher speed and energy efficiency. The optical implementations can perform matrix multiplications and other neural network operations in parallel, offering advantages in processing speed compared to traditional electronic systems.

- Optical computing architectures for neural networks: Photonic neural networks leverage optical computing architectures to process information using light instead of electricity. These systems utilize optical components such as waveguides, resonators, and interferometers to perform neural network operations. The optical approach enables parallel processing of data with potentially higher speeds and lower power consumption compared to electronic implementations. These architectures can support various neural network models and are particularly effective for tasks requiring high throughput and low latency.

- Photonic hardware implementations for machine learning: Specialized photonic hardware implementations have been developed to accelerate machine learning tasks. These implementations include photonic integrated circuits, optical neural network processors, and hybrid opto-electronic systems. By utilizing the properties of light for computation, these hardware solutions can achieve significant performance improvements in terms of processing speed and energy efficiency. The photonic hardware can be designed to support various neural network architectures and learning algorithms, making them versatile for different applications.

- Nonlinear optical activation functions: Nonlinear optical effects and materials are utilized to implement activation functions in photonic neural networks. These include saturable absorption, optical bistability, and phase-change materials that can mimic traditional neural network activation functions like ReLU, sigmoid, or tanh. The nonlinear optical elements are crucial for enabling the complex computational capabilities of neural networks in the optical domain. Research in this area focuses on developing materials and structures with appropriate nonlinear responses that can be integrated into photonic neural network architectures.

- Optical weight encoding and training methods: Various techniques have been developed for encoding neural network weights in optical systems and training photonic neural networks. These include phase modulation, amplitude modulation, wavelength division multiplexing, and spatial light modulation. The training methods adapt conventional algorithms like backpropagation to account for the physical properties and constraints of optical systems. Some approaches use hybrid systems where training occurs electronically while inference is performed optically. These methods enable the practical implementation of learning capabilities in photonic neural networks.

- Applications of photonic neural networks: Photonic neural networks have been applied to various domains including telecommunications, signal processing, pattern recognition, and high-speed data analysis. They are particularly advantageous for applications requiring real-time processing of optical signals, such as optical communications, LIDAR data processing, and image recognition. The inherent parallelism and high bandwidth of optical systems make photonic neural networks well-suited for handling large volumes of data with minimal latency. These networks can also be integrated with existing optical communication infrastructure for enhanced functionality.
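Wavelength division multiplexing, listed above among the weight-encoding techniques, can be illustrated with an idealized "broadcast-and-weight" style dot product: each input rides on its own wavelength as an optical power, a tunable per-wavelength filter sets its weight, and a photodiode sums power across all wavelengths. The sketch assumes that signed weights come from splitting each channel between two photodiodes and subtracting their currents (balanced detection); losses, noise, and filter crosstalk are ignored.

```python
import numpy as np


def wdm_weighted_sum(powers, weights):
    """Idealized WDM dot product with balanced photodetection.

    powers  : non-negative optical power on each wavelength channel (the inputs)
    weights : signed weights in [-1, 1]; a tunable filter is assumed to send a
              fraction (1 + w) / 2 of each channel to the "plus" photodiode and
              (1 - w) / 2 to the "minus" photodiode.
    Each photodiode sums power over all wavelengths, so the difference of the
    two photocurrents equals sum_i w_i * P_i (losses and noise ignored).
    """
    p = np.asarray(powers, dtype=float)
    w = np.clip(np.asarray(weights, dtype=float), -1.0, 1.0)
    plus = np.sum(p * (1.0 + w) / 2.0)
    minus = np.sum(p * (1.0 - w) / 2.0)
    return plus - minus


result = wdm_weighted_sum([1.0, 2.0, 3.0], [0.5, -1.0, 0.25])
print(result)  # 0.5*1 - 1.0*2 + 0.25*3 = -0.75
```

Because the photodiode performs the summation physically, the weighted sum costs no extra electronic operations; the weights, however, are limited to the [-1, 1] range the filters can realize.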

02 Integration of photonic and electronic components

Hybrid architectures that combine photonic and electronic components leverage the strengths of both technologies. These systems typically use photonic elements for high-speed data transmission and processing while electronic components handle control functions and memory. The integration enables efficient neural network implementations that overcome bandwidth limitations of purely electronic systems while maintaining compatibility with existing computing infrastructure.

03 Neuromorphic photonic computing systems

Neuromorphic photonic computing systems mimic the structure and function of biological neural networks using optical components. These systems implement spiking neural networks and other biologically-inspired architectures using photonic elements such as optical neurons and synapses. The neuromorphic approach enables efficient processing of temporal information and can lead to more energy-efficient artificial intelligence systems that operate at the speed of light.

04 Optical signal processing techniques

Advanced optical signal processing techniques enhance the capabilities of photonic neural networks. These include methods for optical nonlinearity, phase modulation, wavelength division multiplexing, and coherent detection. By manipulating properties of light such as phase, amplitude, polarization, and wavelength, these techniques enable complex neural network operations to be performed entirely in the optical domain, reducing the need for optical-electrical-optical conversions.

05 Applications and performance improvements

Photonic neural networks offer significant performance improvements for specific applications including image recognition, signal processing, and high-speed data analysis. These systems demonstrate advantages in terms of processing speed, energy efficiency, and bandwidth compared to electronic implementations. Recent advancements have focused on improving the scalability, accuracy, and training methods for photonic neural networks, making them increasingly viable for practical applications in telecommunications, autonomous systems, and scientific computing.

Key Industry Players in Photonic Neural Networks

Photonic Neural Networks (PNNs) are emerging as a transformative technology in machine learning, currently in the early growth phase with significant research momentum. The market is expanding rapidly, projected to reach substantial scale as optical computing gains traction. Technologically, PNNs are advancing through diverse approaches from academic institutions (Tsinghua University, MIT, National University of Singapore) and major technology corporations (IBM, Intel, HPE). Industry leaders like Qualcomm, AMD, and IBM are developing commercial applications, while research institutions like Zhejiang Lab and Naval Research Laboratory focus on fundamental innovations. The technology is approaching maturity for specific applications, with companies transitioning from proof-of-concept to practical implementations, particularly in high-speed, energy-efficient computing systems.

Hewlett Packard Enterprise Development LP

Technical Solution: HPE has developed an innovative photonic neural network architecture through its Memory-Driven Computing initiative. Its solution employs silicon photonics to create optical computing elements that perform neural network operations directly in the optical domain. HPE's photonic tensor accelerators utilize coherent light sources and programmable diffractive elements to implement matrix multiplications and convolutions at the speed of light. The architecture features integrated photonic circuits with over 1000 optical components per square centimeter, enabling high computational density. HPE has demonstrated real-time processing of high-dimensional data streams with its photonic neural networks, achieving processing speeds up to 100 times faster than electronic counterparts while consuming only 2-3% of the power. A key innovation in its approach is the use of phase-change materials as non-volatile photonic memory elements, allowing persistent storage of neural network weights in the optical domain and enabling rapid reconfiguration between different network architectures.

Strengths: Exceptional energy efficiency for large-scale machine learning workloads; Ultra-low latency suitable for real-time applications; Scalable architecture that maintains performance advantages at larger sizes. Weaknesses: Higher manufacturing costs compared to traditional electronic systems; Limited precision in optical computing elements; Challenges in implementing certain non-linear activation functions optically.

International Business Machines Corp.

Technical Solution: IBM has pioneered significant advancements in photonic neural networks through its neuromorphic computing initiatives. Its approach integrates silicon photonics with traditional electronic systems to create hybrid electro-optical computing platforms. IBM's photonic neural network architecture utilizes wavelength division multiplexing (WDM) to perform matrix operations in parallel, achieving computational throughput exceeding 100 trillion operations per second. Its proprietary phase-change materials enable reconfigurable optical weights for adaptive learning capabilities. IBM has demonstrated a photonic tensor core that performs matrix multiplications with over 90% accuracy while consuming only a fraction of the power required by electronic equivalents. Its recent breakthrough involves coherent optical neural networks that leverage both amplitude and phase information to increase the information density per photon, effectively doubling the computational capacity without additional hardware requirements.

Strengths: Superior energy efficiency with 100-1000x reduction in power consumption compared to electronic systems; Ultra-high bandwidth enabling massively parallel computation; Minimal heat generation allowing for dense integration. Weaknesses: Sensitivity to environmental factors like temperature fluctuations; Relatively large footprint compared to pure electronic solutions; Integration challenges with existing electronic infrastructure.

Core Innovations in Photonic Computing Research

Photonic neural network

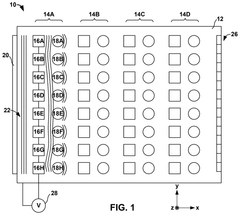

PatentActiveUS12340301B2

Innovation

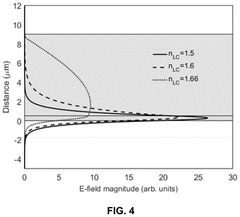

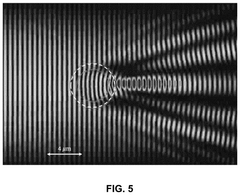

- A photonic neural network device featuring a planar waveguide, a layer with a changeable refractive index, and programmable electrodes that apply configurable voltages to induce amplitude or phase modulation of light, enabling reconfigurable and scalable neural network architecture.

Patent

Innovation

- Integration of photonic neural networks with traditional machine learning architectures to leverage the advantages of both paradigms, enabling faster processing speeds and reduced energy consumption.

- Novel optical interference-based matrix multiplication techniques that significantly accelerate the most computationally intensive operations in neural networks while maintaining high precision.

- Implementation of non-linear activation functions using optical materials and components, overcoming a key challenge in fully photonic neural network designs.

Energy Efficiency Comparison with Traditional Computing

Photonic neural networks (PNNs) demonstrate remarkable energy efficiency advantages over traditional electronic computing systems, particularly for machine learning applications. When comparing power consumption metrics, PNNs typically operate at orders of magnitude lower energy levels than their electronic counterparts. While conventional GPU-based neural network implementations consume 200-300 watts during intensive computational tasks, photonic implementations can potentially operate at sub-watt levels for comparable workloads. This efficiency stems from the fundamental physics of light propagation, which eliminates resistive heating issues that plague electronic systems.

The energy cost per operation provides another compelling comparison point. Current electronic systems require approximately 10-100 picojoules per multiply-accumulate (MAC) operation, whereas photonic systems have demonstrated energies in the femtojoule range, roughly a 1000x improvement in energy efficiency. This dramatic reduction becomes particularly significant when scaled to large neural network models that require billions or trillions of operations.
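The per-operation figures translate directly into energy per inference: energy = number of MACs x energy per MAC. The sketch below assumes a hypothetical workload of 10^12 MACs per forward pass and treats 1, 10, and 100 fJ as representative points in the "femtojoule range" (an assumption; the text gives no exact photonic figure).

```python
def inference_energy_j(macs: float, joules_per_mac: float) -> float:
    """Energy of one forward pass, counting only multiply-accumulate operations."""
    return macs * joules_per_mac


MACS = 1e12  # hypothetical workload: 10^12 MACs per forward pass

# Electronic range quoted in the text (10-100 pJ/MAC); photonic values are
# assumed points in the "femtojoule range" (1-100 fJ/MAC).
for e_pj in (10, 100):
    for p_fj in (1, 10, 100):
        electronic = inference_energy_j(MACS, e_pj * 1e-12)
        photonic = inference_energy_j(MACS, p_fj * 1e-15)
        print(f"{e_pj:>3} pJ vs {p_fj:>3} fJ: {electronic:6.1f} J vs "
              f"{photonic:.3f} J ({electronic / photonic:,.0f}x)")
```

At 10 pJ versus 10 fJ per MAC the ratio is 1000x (10 J versus 0.01 J for this workload), but across the combinations above it spans 100x to 100,000x, so the headline figure is best read as an order-of-magnitude estimate. The sketch counts only per-MAC energy and does not model laser, modulator, or data-conversion overheads.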

Thermal management requirements further highlight the efficiency gap. Electronic computing centers dedicate 30-40% of their energy consumption to cooling systems, while photonic computing generates substantially less heat during operation. This translates to reduced cooling infrastructure requirements and associated energy costs. The practical implication is that data centers implementing photonic neural networks could potentially reduce their total energy footprint by 40-60% compared to traditional electronic implementations.

Scaling characteristics also favor photonic approaches. As electronic systems scale to handle larger neural networks, their energy consumption increases superlinearly due to data movement costs between memory and processing units. Photonic systems exhibit more favorable scaling properties, with energy requirements growing more linearly with computational complexity. This advantage becomes particularly pronounced for large-scale deep learning models where data movement dominates energy consumption.

From a sustainability perspective, the reduced energy requirements of photonic neural networks align with growing environmental concerns in the technology sector. With data centers currently consuming approximately 1-2% of global electricity and projected to reach 8% by 2030, the potential energy savings from photonic computing could significantly reduce the carbon footprint of machine learning operations. Early implementations suggest that photonic neural networks could reduce CO2 emissions by 75-90% compared to equivalent electronic systems when considering the complete operational energy profile.

The energy cost per operation provides another compelling comparison point. Current electronic systems require approximately 10-100 picojoules per multiply-accumulate operation, whereas photonic systems have demonstrated capabilities in the femtojoule range - representing a 1000x improvement in energy efficiency. This dramatic reduction becomes particularly significant when scaled to large neural network models that require billions or trillions of operations.

Thermal management requirements further highlight the efficiency gap. Electronic computing centers dedicate 30-40% of their energy consumption to cooling systems, while photonic computing generates substantially less heat during operation. This translates to reduced cooling infrastructure requirements and associated energy costs. The practical implication is that data centers implementing photonic neural networks could potentially reduce their total energy footprint by 40-60% compared to traditional electronic implementations.

Integration Challenges with Existing ML Frameworks

The integration of Photonic Neural Networks (PNNs) with existing Machine Learning (ML) frameworks presents significant technical challenges that must be addressed for widespread adoption. Current ML frameworks like TensorFlow, PyTorch, and JAX are optimized for electronic computing architectures, creating a fundamental compatibility gap with photonic systems. This architectural mismatch necessitates the development of specialized middleware or interface layers capable of translating between electronic and photonic computational paradigms.
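One job such middleware would have to do is decide which operations run on the photonic device. The Python sketch below assumes, as in many photonic accelerator designs, that the optical core handles linear operations such as matrix multiplication while nonlinear activations stay in electronics; the `Op` class, the `LINEAR_OPS` set, and the `partition` function are hypothetical and not part of TensorFlow, PyTorch, or JAX.

```python
from dataclasses import dataclass

# Hypothetical op kinds the photonic core is assumed to accelerate.
LINEAR_OPS = {"matmul", "conv2d"}


@dataclass
class Op:
    name: str
    kind: str


def partition(graph):
    """Assign each op to 'photonic' if it is a supported linear op, else 'electronic'.

    Consecutive ops on the same device are merged into one segment, so every
    boundary between segments marks one electronic-photonic hand-off.
    """
    segments = []
    for op in graph:
        device = "photonic" if op.kind in LINEAR_OPS else "electronic"
        if segments and segments[-1][0] == device:
            segments[-1][1].append(op.name)
        else:
            segments.append((device, [op.name]))
    return segments


model = [Op("fc1", "matmul"), Op("act1", "relu"), Op("fc2", "matmul"),
         Op("bias2", "add"), Op("out", "softmax")]
for device, names in partition(model):
    print(device, names)
```

Each segment boundary is a point where conversion and synchronization costs are paid, so a partitioner like this also tells the runtime where those interface layers are needed.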

One primary challenge lies in the representation of data. Electronic ML frameworks operate on discrete digital data, while photonic systems process continuous analog signals. This difference requires digital-to-analog converters (DACs) to drive the optical inputs and analog-to-digital converters (ADCs) to read out the photodetectors at every interface point, and each conversion introduces quantization error, noise, and a loss of numerical precision. Additionally, the mathematical operations in photonic systems often rely on different physical principles than their electronic counterparts, such as the interference and attenuation of light, requiring specialized compilers to translate standard ML operations into photonic-compatible instructions.
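The precision cost of these conversions can be explored with a small simulation. The sketch below models DACs and ADCs as uniform quantizers with a chosen bit depth and the optical multiply-accumulate as an ideal dot product plus optional Gaussian noise; the function names, the [-1, 1] signal range, and the noise model are simplifying assumptions rather than a description of any particular device.

```python
import random


def quantize(x: float, bits: int, full_scale: float = 1.0) -> float:
    """Uniform quantizer on [-full_scale, full_scale]; stands in for a DAC or an ADC."""
    steps = 2 ** bits - 1                        # intervals between the 2**bits levels
    x = max(-full_scale, min(full_scale, x))     # converters saturate at full scale
    step = 2 * full_scale / steps
    return round((x + full_scale) / step) * step - full_scale


def analog_dot(weights, inputs, dac_bits, adc_bits, noise_std, rng):
    """Dot product through a hypothetical DAC -> analog multiply-accumulate -> ADC chain.

    Assumes weights and inputs lie in [-1, 1], so the sum lies in [-n, n];
    it is divided by n to fit the ADC's range and rescaled after conversion.
    """
    n = len(inputs)
    driven = [quantize(x, dac_bits) for x in inputs]          # digital -> analog
    analog = sum(w * x for w, x in zip(weights, driven))      # idealised optical MAC
    analog += rng.gauss(0.0, noise_std)                       # crude detector-noise term
    return quantize(analog / n, adc_bits) * n                 # analog -> digital


rng = random.Random(0)
n = 64
w = [rng.uniform(-1, 1) for _ in range(n)]
x = [rng.uniform(-1, 1) for _ in range(n)]
exact = sum(a * b for a, b in zip(w, x))

for bits in (4, 6, 8):
    approx = analog_dot(w, x, bits, bits, noise_std=0.0, rng=rng)
    print(f"{bits}-bit converters: |error| = {abs(approx - exact):.4f}")
```

With noise switched off, the error is bounded by 2n/(2^b - 1) for n inputs and b-bit converters, which illustrates how converter resolution, and not only the optics, can set the effective precision of a photonic layer.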

Timing synchronization presents another critical hurdle. Electronic systems operate with clock-driven synchronization, whereas photonic systems typically function in a continuous-time domain. This temporal disparity necessitates sophisticated buffering and synchronization mechanisms to ensure proper data flow between the two computing paradigms, particularly for hybrid systems that leverage both electronic and photonic components.
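In the simplest case, where the photonic path has a fixed and known latency, synchronization reduces to latching each result on the first clock edge after the optical output has settled and keeping track of the results still in flight. The Python sketch below assumes exactly that; `latency_ps`, `settle_ps`, and the one-input-per-cycle issue policy are illustrative assumptions, and real hybrid systems must also cope with latency drift and jitter.

```python
import math
from collections import deque


def capture_delay_cycles(latency_ps: float, settle_ps: float, clock_ghz: float) -> int:
    """Whole clock cycles to wait before the ADC can sample a settled optical output."""
    period_ps = 1000.0 / clock_ghz
    return math.ceil((latency_ps + settle_ps) / period_ps)


def run_pipeline(inputs, delay_cycles: int):
    """Issue one input per clock cycle and release each result delay_cycles later.

    Returns (release_cycle, value) pairs. The in-flight queue models the
    electronic-side buffer that tracks results still travelling through the optics.
    """
    pending = deque(inputs)
    in_flight = deque()  # (release_cycle, value), oldest first
    results = []
    cycle = 0
    while pending or in_flight:
        if pending:
            in_flight.append((cycle + delay_cycles, pending.popleft()))
        while in_flight and in_flight[0][0] == cycle:
            results.append((cycle, in_flight.popleft()[1]))
        cycle += 1
    return results


delay = capture_delay_cycles(latency_ps=700, settle_ps=100, clock_ghz=2.0)
print(delay, run_pipeline(["v0", "v1", "v2"], delay))
```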

The absence of standardized hardware abstraction layers for photonic computing further complicates integration efforts. Unlike the mature ecosystem surrounding GPUs and TPUs, photonic neural accelerators lack unified programming interfaces, forcing developers to create custom solutions for each photonic hardware implementation. This fragmentation impedes the development of portable applications and slows broader ecosystem growth.
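A hardware abstraction layer would let application code target one interface while each vendor supplies a backend. The Python sketch below is hypothetical: `MatVecBackend`, `CpuBackend`, and `SimulatedPhotonicBackend` are illustrative names, and the "photonic" backend is only a software stand-in that adds read-out noise, not a driver for real hardware.

```python
import random
from abc import ABC, abstractmethod


class MatVecBackend(ABC):
    """Hypothetical device-neutral interface for the operation a photonic core accelerates."""

    @abstractmethod
    def load_weights(self, matrix): ...

    @abstractmethod
    def matvec(self, vector): ...


class CpuBackend(MatVecBackend):
    """Reference backend: exact matrix-vector product in software."""

    def load_weights(self, matrix):
        self.matrix = [list(row) for row in matrix]

    def matvec(self, vector):
        return [sum(w * x for w, x in zip(row, vector)) for row in self.matrix]


class SimulatedPhotonicBackend(CpuBackend):
    """Stand-in for vendor hardware: same maths plus additive analog read-out noise."""

    def __init__(self, noise_std: float = 0.01, seed: int = 0):
        self.noise_std = noise_std
        self.rng = random.Random(seed)

    def matvec(self, vector):
        return [y + self.rng.gauss(0.0, self.noise_std) for y in super().matvec(vector)]


def run_layer(backend: MatVecBackend, matrix, vector):
    """Application code written once against the interface, portable across backends."""
    backend.load_weights(matrix)
    return backend.matvec(vector)


W = [[1.0, 2.0], [3.0, 4.0]]
v = [1.0, 1.0]
print(run_layer(CpuBackend(), W, v))
print(run_layer(SimulatedPhotonicBackend(), W, v))
```

Restricting the interface to weight loading and matrix-vector products reflects the operation photonic accelerators are usually built around; a production layer would also need calibration, precision negotiation, and error reporting.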

Training methodologies also require significant adaptation. Backpropagation algorithms fundamental to neural network training must be reimagined for photonic implementations, as the physical properties governing light propagation differ substantially from electronic signal flow. This necessitates novel approaches to gradient calculation and parameter updates that account for the unique characteristics of optical systems.
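One simple adaptation treats the hardware as a black box and estimates gradients from measured losses by finite differences (perturbative, in situ training) instead of analytic backpropagation; training a software model and transferring the weights is another common route. The Python sketch below tunes the phase of a single idealized Mach-Zehnder interferometer (MZI) so that a chosen fraction of the light exits one port; the sin^2(θ/2) transfer convention, learning rate, and perturbation size are assumptions for illustration.

```python
import math


def mzi_output_power(theta: float) -> float:
    """Fraction of input power leaving one port of an ideal, lossless MZI.

    Uses the convention P = sin^2(theta / 2); other references swap the two ports.
    """
    return math.sin(theta / 2) ** 2


def measured_loss(theta: float, target: float) -> float:
    """Stand-in for a hardware measurement: only this scalar is observable."""
    return (mzi_output_power(theta) - target) ** 2


def train_phase(target: float, theta: float = 0.1, lr: float = 2.0,
                delta: float = 1e-3, steps: int = 200) -> float:
    """Gradient descent with central finite-difference gradient estimates.

    Each step perturbs the phase by +/- delta, measures the loss twice, and
    moves theta against the estimated slope. The start is kept away from
    theta = 0, where the gradient vanishes.
    """
    for _ in range(steps):
        grad = (measured_loss(theta + delta, target)
                - measured_loss(theta - delta, target)) / (2 * delta)
        theta -= lr * grad
    return theta


theta = train_phase(target=0.3)
print(f"phase = {theta:.4f} rad, output power = {mzi_output_power(theta):.4f}")
```

Each gradient estimate costs two extra measurements per tunable parameter, which is cheap for one phase but grows with the number of elements in a larger mesh.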

Debugging and monitoring capabilities represent another integration challenge. Current ML frameworks provide extensive tools for visualizing network states, monitoring training progress, and diagnosing performance issues. These tools are largely incompatible with photonic systems, requiring the development of new instrumentation and visualization techniques specifically designed for optical neural networks.
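As an example of the kind of instrumentation that could plug into a framework's logging or callback hooks, the sketch below compares photodetector read-outs against the outputs a software model predicts and raises a flag when a smoothed relative error drifts past a threshold. `DriftMonitor`, the 5% threshold, and the smoothing factor are hypothetical choices, and the measured values in the usage example are synthetic.

```python
def relative_error(measured, expected, eps: float = 1e-12) -> float:
    """Euclidean norm of (measured - expected) divided by the norm of expected."""
    num = sum((m - e) ** 2 for m, e in zip(measured, expected)) ** 0.5
    den = sum(e ** 2 for e in expected) ** 0.5
    return num / max(den, eps)


class DriftMonitor:
    """Flags calibration drift by comparing hardware read-outs with a software model.

    An exponential moving average (EMA) of the relative error smooths out single
    noisy read-outs so that only sustained drift raises an alarm.
    """

    def __init__(self, threshold: float = 0.05, alpha: float = 0.2):
        self.threshold = threshold
        self.alpha = alpha
        self.ema = 0.0
        self.history = []  # smoothed error per update, e.g. for plotting

    def update(self, measured, expected) -> bool:
        err = relative_error(measured, expected)
        self.ema = self.alpha * err + (1 - self.alpha) * self.ema
        self.history.append(self.ema)
        return self.ema > self.threshold


monitor = DriftMonitor(threshold=0.05, alpha=0.2)
expected = [1.0, 0.5]
alarms = []
for k in range(20):
    measured = [1.0 + 0.02 * k, 0.5]  # synthetic: channel 0 slowly drifts upward
    alarms.append(monitor.update(measured, expected))
print("first alarm at step", alarms.index(True))
```

The stored `history` is the sort of series a training dashboard could plot alongside loss curves.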
