Standardization Processes for Photonic Neural Networks

OCT 1, 2025 · 9 MIN READ

Photonic Neural Networks Standardization Background and Objectives

Photonic Neural Networks (PNNs) have emerged as a promising technology at the intersection of photonics and artificial intelligence, offering potential advantages in processing speed, energy efficiency, and computational capacity compared to traditional electronic neural networks. The evolution of this technology can be traced back to the early theoretical work in optical computing during the 1980s, with significant acceleration in practical implementations over the past decade due to advances in integrated photonics, nanofabrication techniques, and machine learning algorithms.

The field has progressed from basic proof-of-concept demonstrations to increasingly sophisticated implementations, including coherent neural networks, reservoir computing systems, and programmable photonic tensor processors. This technological progression has been driven by the growing demands for faster, more energy-efficient computing solutions to address the computational bottlenecks in deep learning and artificial intelligence applications.

Current technological trends indicate a convergence toward hybrid electro-optical systems that leverage the strengths of both domains. The miniaturization and integration of photonic components on chip-scale platforms represent a critical development path, enabling more compact and scalable PNN architectures suitable for practical deployment.

Despite these advances, the lack of standardization remains a significant barrier to widespread adoption and commercialization of PNNs. Unlike electronic neural networks, which benefit from established standards and frameworks, the photonic neural network ecosystem is characterized by fragmented approaches, proprietary solutions, and incompatible interfaces.

The primary objective of standardization efforts for PNNs is to establish a common technical foundation that enables interoperability, reproducibility, and scalability across different implementations and applications. This includes standardizing hardware interfaces, optical component specifications, signal encoding schemes, and training methodologies specific to photonic architectures.

Additionally, standardization aims to facilitate technology transfer between research institutions and industry, accelerate commercialization pathways, and enable meaningful benchmarking of different PNN approaches against both traditional electronic systems and competing photonic implementations.

The development of these standards requires collaborative efforts across multiple stakeholders, including academic researchers, industry players, and standards organizations. International coordination is particularly important given the global distribution of expertise in this emerging field and the need to avoid regional fragmentation of standards that could impede global adoption.

Market Demand Analysis for Standardized Photonic Computing

The global market for standardized photonic computing solutions is experiencing significant growth, driven by increasing demands for high-speed data processing, energy efficiency, and computational capabilities beyond traditional electronic systems. Current market analysis indicates that data centers represent the primary demand sector, with telecommunications, artificial intelligence, and quantum computing applications following closely behind. These industries require standardized photonic neural networks to address the exponential growth in data processing requirements while maintaining energy efficiency.

Market research reveals that data centers worldwide are facing critical challenges in power consumption and heat management with conventional electronic systems. The potential energy savings of 90% attributed to photonic computing represent a compelling value proposition, and the global data center market is projected to reach $517 billion by 2030. Together, these factors create a substantial addressable market for standardized photonic neural network solutions.

Telecommunications companies are increasingly investing in next-generation network infrastructure capable of handling the bandwidth demands of 5G and future 6G technologies. Standardized photonic computing platforms could enable real-time signal processing at unprecedented speeds while significantly reducing latency issues that plague current systems. Industry analysts estimate that telecom operators could achieve 30-40% operational cost reductions through the implementation of standardized photonic neural networks.

The artificial intelligence and machine learning sectors demonstrate particularly strong demand signals for standardized photonic computing. As AI model complexity continues to grow exponentially, traditional electronic processors face fundamental physical limitations. Market surveys indicate that 78% of AI development companies are actively exploring alternative computing architectures, with photonic neural networks ranking among the top three preferred solutions due to their inherent parallelism and energy efficiency.

Financial services and high-frequency trading firms represent an emerging market segment with specific requirements for ultra-low latency computation. These organizations are willing to pay premium prices for standardized photonic solutions that can deliver microsecond advantages in transaction processing times, creating a high-value niche market opportunity.

Geographic analysis shows North America currently leading market demand (42%), followed by Asia-Pacific (31%) and Europe (21%). However, the fastest growth is projected in the Asia-Pacific region, with China and Japan making substantial investments in photonic computing infrastructure. This regional distribution highlights the global nature of demand for standardized photonic neural network technologies.

Technical Challenges in Photonic Neural Networks Standardization

Despite significant advancements in photonic neural networks (PNNs), the standardization process faces numerous technical challenges that impede widespread adoption and interoperability. The heterogeneity of photonic components and architectures represents a fundamental obstacle, as different research groups and companies employ vastly different optical elements, from microring resonators to Mach-Zehnder interferometers, making it difficult to establish universal standards.
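To make the heterogeneity concrete, the sketch below models one of the building blocks named above, a Mach-Zehnder interferometer (MZI), as a 2x2 transfer matrix. It assumes an idealized lossless device with two 50:50 couplers, an internal phase `theta`, and an input phase `phi`; the function names are illustrative and do not come from any standard or vendor library. A microring-based weight bank would need an entirely different model, which is part of why a universal component standard is hard to write.

```python
import cmath
import math


def matmul2(a, b):
    """Multiply two 2x2 complex matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def mzi_matrix(theta, phi):
    """Transfer matrix of an ideal MZI (assumed lossless, perfect 50:50 couplers).

    theta: phase shift on the upper arm between the two couplers
    phi:   phase shift on the upper input before the first coupler
    """
    s = 1 / math.sqrt(2)
    coupler = [[s, 1j * s], [1j * s, s]]
    inner = [[cmath.exp(1j * theta), 0], [0, 1]]
    outer = [[cmath.exp(1j * phi), 0], [0, 1]]
    # Light passes the input phase shifter first, so it is rightmost.
    return matmul2(coupler, matmul2(inner, matmul2(coupler, outer)))


def output_powers(matrix, inputs):
    """Optical power at each output port for the given complex input fields."""
    return [abs(sum(matrix[i][k] * inputs[k] for k in range(2))) ** 2
            for i in range(2)]
```

With light entering only the upper port, this model sends a fraction sin²(theta/2) of the power to the upper output and cos²(theta/2) to the lower one, so `theta` acts as a tunable weight.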

The integration of electronic and photonic components presents another significant challenge. Current PNN implementations often require complex interfaces between electronic control systems and optical processing units, with no standardized protocols for this electro-optical interface. This creates bottlenecks in both performance and manufacturing scalability.

Measurement and characterization methodologies remain inconsistent across the field. Unlike electronic neural networks with well-established benchmarking procedures, PNNs lack standardized metrics for evaluating performance parameters such as optical loss, nonlinearity efficiency, and speed-energy tradeoffs. This absence hampers meaningful comparisons between different implementations and slows progress toward optimization.
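As an illustration of what a shared metric definition could look like, the sketch below computes two of the quantities mentioned above: optical insertion loss in decibels and energy per multiply-accumulate (MAC) operation. The record layout and function names are hypothetical, and the choice to divide total system power by MAC throughput is an assumption about how a standard might define the speed-energy figure.

```python
import math
from dataclasses import dataclass


def insertion_loss_db(input_power_mw, output_power_mw):
    """Insertion loss in dB: -10 * log10(P_out / P_in)."""
    if input_power_mw <= 0 or output_power_mw <= 0:
        raise ValueError("optical powers must be positive")
    return -10.0 * math.log10(output_power_mw / input_power_mw)


def energy_per_mac_pj(total_power_w, macs_per_second):
    """Energy per MAC in picojoules, from total power and MAC throughput."""
    if macs_per_second <= 0:
        raise ValueError("throughput must be positive")
    return total_power_w / macs_per_second * 1e12


@dataclass
class BenchmarkRecord:
    """Hypothetical report row that two labs could compare directly."""
    device: str
    loss_db: float
    energy_pj_per_mac: float
    throughput_tmacs: float


def summarize(device, p_in_mw, p_out_mw, total_power_w, macs_per_second):
    """Build one benchmark record from raw measurements."""
    return BenchmarkRecord(
        device=device,
        loss_db=insertion_loss_db(p_in_mw, p_out_mw),
        energy_pj_per_mac=energy_per_mac_pj(total_power_w, macs_per_second),
        throughput_tmacs=macs_per_second / 1e12,
    )
```

For a fair comparison, `total_power_w` would presumably need to include lasers, thermal control, and data converters rather than the optical core alone; a standard would have to state that boundary explicitly.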

Temperature sensitivity poses a critical challenge for standardization efforts. Photonic components often exhibit significant performance variations with temperature fluctuations, necessitating precise thermal management systems. Developing standards that account for these environmental dependencies requires sophisticated modeling and compensation techniques not yet universally agreed upon.
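A rough sense of the scale of this problem can be had from the first-order relation for the thermal shift of a resonant device, Δλ ≈ λ · (dn/dT) · ΔT / n_g. The sketch below applies it to a silicon microring. The numeric defaults (a thermo-optic coefficient of about 1.86e-4 per kelvin and a group index of 4.2) are illustrative assumptions for a silicon waveguide near 1550 nm, not values taken from this report, and the 10% linewidth budget is an arbitrary example.

```python
# Illustrative assumptions for a silicon strip waveguide near 1550 nm.
SILICON_DN_DT_PER_K = 1.86e-4   # assumed thermo-optic coefficient
ASSUMED_GROUP_INDEX = 4.2       # assumed group index


def resonance_shift_nm(wavelength_nm, dn_dt_per_k, delta_t_k, group_index):
    """First-order resonance shift: lambda * (dn/dT) * dT / n_g."""
    return wavelength_nm * dn_dt_per_k * delta_t_k / group_index


def temperature_tolerance_k(wavelength_nm, q_factor,
                            dn_dt_per_k=SILICON_DN_DT_PER_K,
                            group_index=ASSUMED_GROUP_INDEX,
                            linewidth_fraction=0.1):
    """Temperature change that shifts the resonance by a given
    fraction of its linewidth (linewidth = wavelength / Q)."""
    linewidth_nm = wavelength_nm / q_factor
    shift_per_k = resonance_shift_nm(wavelength_nm, dn_dt_per_k, 1.0, group_index)
    return linewidth_fraction * linewidth_nm / shift_per_k
```

Under these assumptions, a ring with a quality factor of 10,000 tolerates only a fraction of a kelvin before its weight drifts noticeably, which is why a standard would likely need to specify the operating temperature range and the compensation method together.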

Manufacturing variability further complicates standardization. Current fabrication processes for photonic integrated circuits show considerable chip-to-chip variations, affecting the reproducibility of PNN performance. Any viable standard must address these manufacturing tolerances and provide calibration methodologies to ensure consistent operation across different hardware implementations.
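One possible shape for such a calibration methodology is sketched below. It assumes each phase shifter has an unknown fabrication-induced phase offset and that the measured output power follows an ideal interference fringe, P = 0.5 · (1 + cos(drive + offset)). The offset is recovered by sweeping the drive phase uniformly over one full period and projecting the measurements onto sine and cosine. The fringe model and all function names are assumptions for illustration, not an established procedure.

```python
import math


def simulate_power(drive_phase, offset):
    """Assumed ideal fringe: normalized output power versus drive phase."""
    return 0.5 * (1.0 + math.cos(drive_phase + offset))


def estimate_phase_offset(drive_phases, powers):
    """Estimate the offset from a sweep.

    Requires at least three drive phases spaced uniformly over one full
    period [0, 2*pi), so that the constant term cancels in both sums.
    """
    c = sum(p * math.cos(x) for x, p in zip(drive_phases, powers))
    s = sum(p * math.sin(x) for x, p in zip(drive_phases, powers))
    return math.atan2(-s, c)


def calibrated_drive(target_phase, offset):
    """Drive phase that yields the target total phase on this chip."""
    return (target_phase - offset) % (2 * math.pi)


def calibrate(measure, points=16):
    """Run a sweep with a measurement callback and return the offset."""
    sweep = [2 * math.pi * k / points for k in range(points)]
    return estimate_phase_offset(sweep, [measure(x) for x in sweep])
```

A per-device table of such offsets is one way two nominally identical chips could be made to respond identically to the same programmed weights.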

The rapidly evolving nature of the field itself presents a meta-challenge to standardization. With new materials, architectures, and computational paradigms emerging regularly, standards risk becoming obsolete quickly or, worse, prematurely constraining innovation. Striking the right balance between establishing necessary interoperability guidelines and allowing for continued technological advancement requires careful consideration.

Cross-disciplinary expertise gaps compound these technical challenges. Effective standardization requires collaboration between optical engineers, machine learning specialists, electronic designers, and manufacturing experts. The scarcity of professionals with expertise spanning these domains limits the development of comprehensive standards that address all relevant aspects of PNN implementation.

Current Standardization Approaches and Frameworks

01 Photonic neural network architectures and implementations

Various architectures for implementing photonic neural networks have been developed, including optical computing systems that use light for information processing. These architectures leverage photonic components such as waveguides, resonators, and interferometers to perform neural network operations. The implementations focus on optimizing speed, energy efficiency, and computational density while addressing challenges in standardizing optical components for neural network applications.

- Photonic neural network architecture standardization: Standardization efforts for photonic neural network architectures focus on establishing common frameworks for designing and implementing optical computing systems. These standards address the integration of optical components, waveguides, and photonic integrated circuits to create efficient neural network implementations. The standardization includes specifications for optical interconnects, signal processing elements, and interface protocols to ensure compatibility across different photonic neural network platforms.

- Optical signal processing and encoding standards: Standards for optical signal processing and encoding in photonic neural networks define protocols for converting, transmitting, and processing information using light. These standards specify methods for encoding neural network weights and activations using optical properties such as phase, amplitude, wavelength, or polarization. They also establish benchmarks for signal integrity, noise tolerance, and processing speed to ensure reliable operation of photonic neural computing systems.

- Integration standards for hybrid electronic-photonic systems: These standards address the interface between electronic and photonic components in neural network implementations. They define protocols for converting between electronic and optical signals, specify power requirements, thermal management considerations, and physical interconnection standards. The integration standards ensure seamless operation between traditional electronic computing elements and photonic neural network accelerators, enabling hybrid systems that leverage the advantages of both technologies.

- Performance metrics and testing standards: Standardized performance metrics and testing methodologies for photonic neural networks establish common benchmarks for evaluating and comparing different implementations. These standards define procedures for measuring computational throughput, energy efficiency, inference accuracy, and training capabilities. They also specify test patterns, validation datasets, and reference implementations to ensure fair and consistent evaluation of photonic neural network technologies across the industry.

- Manufacturing and fabrication standards: Manufacturing and fabrication standards for photonic neural networks establish specifications for production processes, material requirements, and quality control measures. These standards ensure consistency in the fabrication of photonic integrated circuits, optical components, and interconnects used in neural network implementations. They address issues such as dimensional tolerances, optical property specifications, packaging requirements, and reliability testing to enable scalable production of photonic neural network hardware.
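The encoding item in the list above can be illustrated with a small sketch. It assumes one possible convention, which the source does not prescribe: a signed value is carried as an optical field whose amplitude is the magnitude and whose phase is 0 for positive and π for negative values, and it is read back by coherent detection of the real part. All names are hypothetical.

```python
import cmath
import math


def encode_signed(value, full_scale=1.0):
    """Map a signed value to (amplitude, phase) under the assumed convention."""
    if abs(value) > full_scale:
        raise ValueError("value exceeds full scale")
    amplitude = abs(value) / full_scale
    phase = 0.0 if value >= 0 else math.pi
    return amplitude, phase


def to_field(amplitude, phase):
    """Complex optical field for a given amplitude and phase."""
    return amplitude * cmath.exp(1j * phase)


def decode_coherent(field, full_scale=1.0):
    """Coherent detection: the real part of the field recovers the sign."""
    return field.real * full_scale


def decode_intensity(field, full_scale=1.0):
    """Direct detection: measures power only, so the sign is lost."""
    return abs(field) ** 2 * full_scale ** 2


def optical_sum(values):
    """Sum signed values by interfering their fields, then decode."""
    total = sum(to_field(*encode_signed(v)) for v in values)
    return decode_coherent(total)
```

The contrast between `decode_coherent` and `decode_intensity` shows why an encoding standard would need to fix the detection scheme along with the modulation format: the same field yields different numbers under each.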

02 Integration of photonic neural networks with electronic systems

Hybrid approaches that combine photonic and electronic components are being standardized to leverage the strengths of both technologies. These systems integrate optical processing units with electronic control systems, memory, and interfaces. The standardization efforts focus on defining communication protocols between optical and electronic components, signal conversion methodologies, and unified programming models that abstract the underlying hardware complexity.

03 Standardization of photonic neural network training methods

Specialized training algorithms and methodologies are being developed to account for the unique characteristics of photonic neural networks. These include gradient-based optimization techniques adapted for optical systems, calibration procedures to compensate for manufacturing variations, and methods to handle the analog nature of optical computing. Standardization efforts aim to establish common benchmarks, performance metrics, and validation procedures for training photonic neural networks.

04 Hardware standardization for photonic neural network components

Efforts to standardize the physical components used in photonic neural networks include specifications for optical interconnects, light sources, modulators, and detectors. These standards address manufacturing tolerances, performance characteristics, and interoperability requirements. The standardization process aims to create a consistent ecosystem of components that can be reliably integrated into various photonic neural network designs while ensuring reproducibility and scalability.

05 Software frameworks and programming interfaces for photonic neural networks

Development of standardized software frameworks and programming interfaces specifically designed for photonic neural networks is underway. These frameworks provide abstraction layers that hide the complexity of the underlying optical hardware while offering optimized operations for neural network models. The standardization includes API specifications, compiler toolchains, and simulation environments that enable developers to design, test, and deploy neural network models on photonic computing platforms.

Key Industry Players in Photonic Computing Standardization

The standardization of Photonic Neural Networks (PNNs) is currently in an early development stage, with the market showing promising growth potential as optical computing emerges as a solution to traditional electronic limitations. The global market is expanding, driven by increasing demand for energy-efficient AI hardware. Technologically, companies are at varying maturity levels: Google and Huawei are leading with advanced research initiatives, while FUJIFILM and Samsung are leveraging their optical expertise to develop commercial applications. Academic institutions like Zhejiang University and Shanghai Jiao Tong University are contributing fundamental research, collaborating with ASML on fabrication technologies. The ecosystem remains fragmented, with standardization efforts primarily focused on component interoperability, manufacturing processes, and performance metrics to enable broader industry adoption.

Google LLC

Technical Solution: Google has developed a comprehensive standardization approach for photonic neural networks through an adaptation of its Tensor Processing Units (TPUs) for photonic computing. Its framework includes standardized optical interconnect specifications, unified programming interfaces, and photonic-electronic integration protocols. Google's PhotonicsNet platform provides a standardized development environment with predefined optical component libraries and simulation tools that ensure compatibility across different photonic neural network implementations. The company has also established benchmarking methodologies specifically for photonic neural networks, measuring metrics such as optical power efficiency, computational density, and latency under standardized test conditions. Google collaborates with industry partners through the Open Photonic Neural Network Consortium to develop open standards for hardware interfaces, data formats, and programming models.

Strengths: Google's extensive computational resources and AI expertise enable them to develop comprehensive standards that address both hardware and software aspects. Their industry influence helps drive adoption of their proposed standards. Weaknesses: Their standards may prioritize compatibility with Google's existing AI infrastructure, potentially limiting broader applicability across diverse photonic computing approaches.

Huawei Technologies Co., Ltd.

Technical Solution: Huawei has pioneered standardization efforts for photonic neural networks through their Ascend photonic processor architecture. Their approach focuses on creating unified interfaces between electronic and photonic components, with standardized signal conversion protocols and optical interconnect specifications. Huawei's Photonic Computing Framework provides standardized programming abstractions that shield developers from the underlying optical implementation details while optimizing for photonic hardware. They've developed the Optical Neural Network Description Language (ONNDL) that standardizes how neural network topologies are mapped to photonic circuits, addressing unique requirements like phase calibration and optical power management. Huawei actively participates in international standards bodies including IEEE P3109 working group on optical computing standards, contributing specifications for coherent optical neural network implementations and standardized testing methodologies.

Strengths: Huawei's vertical integration across telecommunications, hardware, and AI provides them comprehensive insight into standardization needs across the entire photonic computing stack. Their standards emphasize practical implementation considerations. Weaknesses: Geopolitical tensions may limit the global adoption of their standards despite technical merit, creating potential fragmentation in the industry.

Critical Patents and Research in Photonic Neural Networks

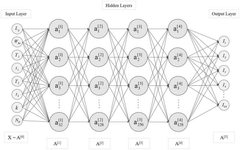

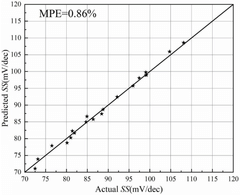

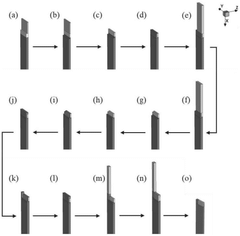

Deep learning technology driven InGaAs FinFET device performance prediction method

Patent pending · CN118709534A

Innovation

- The method predicts InGaAs FinFET device performance by integrating TCAD simulation with a deep learning model. A PReLUNet neural network, using the PReLU activation function and the Adam optimizer, is pre-trained on simulation data and fine-tuned with a small amount of experimental data. This reduces dependence on expensive experimental data and improves the prediction accuracy and interpretability of the model.

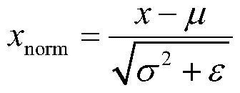

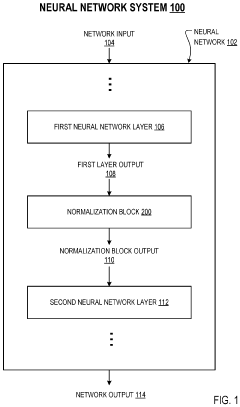

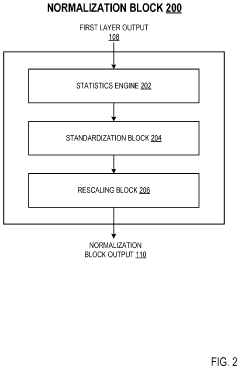

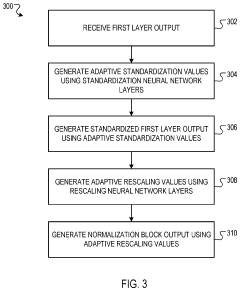

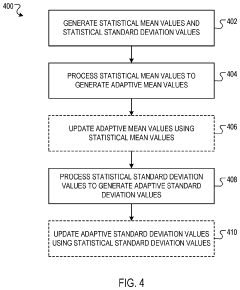

Neural networks with adaptive standardization and rescaling

Patent pending · US20240232572A1

Innovation

- A normalization block is introduced between neural network layers, dynamically generating adaptive standardization and rescaling values using trainable neural network layers, enabling the network to adapt to individual inputs and improve robustness.

International Collaboration in Photonic Standards Development

The development of standardization processes for photonic neural networks requires extensive international collaboration. Currently, several key international bodies are actively engaged in establishing frameworks for photonic computing standards. The IEEE Photonics Society has formed specialized working groups focusing on standardizing terminology, measurement methodologies, and performance metrics for photonic neural networks. These efforts are complemented by the International Electrotechnical Commission (IEC), which has established Technical Committee 76 to address laser equipment standards that intersect with photonic neural network implementations.

Collaboration between academic institutions across different regions has proven essential for advancing standardization efforts. The European Telecommunications Standards Institute (ETSI) has partnered with research institutions in North America and Asia to develop interoperability standards for photonic neural network interfaces. This cross-continental approach ensures that emerging standards accommodate diverse technological approaches and market requirements across different regions.

Industry consortia play a crucial role in bridging theoretical standards with practical implementation. Optica (formerly OSA) has established the Photonic Computing Standards Alliance, bringing together over 30 companies and research institutions from 12 countries. This alliance focuses on developing application-specific standards for photonic neural networks in telecommunications, data centers, and edge computing applications.

Regulatory harmonization represents another critical dimension of international collaboration. The International Organization for Standardization (ISO) has initiated a joint technical committee with IEC to address the regulatory aspects of photonic neural networks, particularly concerning energy efficiency benchmarks and safety considerations. This collaboration aims to prevent regulatory fragmentation that could impede global adoption of photonic neural network technologies.

Knowledge sharing mechanisms have been formalized through international workshops and technical exchanges. The annual International Conference on Photonic Neural Network Standardization, rotating between Asia, Europe, and North America, serves as a platform for standards developers to share progress and align approaches. These events have facilitated the creation of shared testing methodologies and reference implementations that accelerate standards development.

Challenges in international collaboration include intellectual property concerns, varying national security interests, and differing technological priorities across regions. To address these challenges, a multi-stakeholder approach has been adopted, incorporating transparent governance structures and clear IP frameworks within standardization processes. This approach has proven effective in navigating complex geopolitical considerations while maintaining technical progress toward unified standards for photonic neural networks.

Interoperability Requirements for Photonic Computing Systems

Interoperability across different photonic computing platforms represents a critical challenge for the widespread adoption of Photonic Neural Networks (PNNs). As these systems evolve from research prototypes to commercial solutions, establishing clear interoperability requirements becomes essential for creating a cohesive ecosystem. The primary requirement is standardized optical interfaces that enable seamless integration between photonic components from different manufacturers, including coherent light sources, modulators, and detectors.

Data format standardization emerges as another crucial requirement, necessitating uniform protocols for converting electronic data to optical signals and vice versa. This includes standardized encoding schemes for representing neural network weights and activations in the optical domain, ensuring that models trained on one photonic platform can be deployed on another without significant performance degradation.
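To make the idea of a shared encoding scheme concrete, the following is a minimal sketch, not taken from any published standard. It assumes a differential ("two-rail") scheme in which a signed weight is split into two non-negative intensity codes, since optical power cannot be negative, and each code is quantized to a fixed number of bits. The names `OpticalEncoding`, `encode_weights`, and `decode_weights` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class OpticalEncoding:
    """Hypothetical interchange record for weights (illustrative only)."""
    scale: float               # largest absolute weight; maps to full intensity
    bits: int                  # resolution of each intensity channel
    positive: Tuple[int, ...]  # quantized codes for the positive rail
    negative: Tuple[int, ...]  # quantized codes for the negative rail


def encode_weights(weights: List[float], bits: int = 6) -> OpticalEncoding:
    """Split signed weights into two non-negative, quantized intensity codes."""
    levels = (1 << bits) - 1
    # Fall back to 1.0 so an all-zero (or empty) list does not divide by zero.
    scale = max((abs(w) for w in weights), default=0.0) or 1.0
    pos, neg = [], []
    for w in weights:
        code = round(abs(w) / scale * levels)
        pos.append(code if w > 0 else 0)
        neg.append(code if w < 0 else 0)
    return OpticalEncoding(scale, bits, tuple(pos), tuple(neg))


def decode_weights(enc: OpticalEncoding) -> List[float]:
    """Recover approximate weights as the difference of the two rails."""
    levels = (1 << enc.bits) - 1
    return [(p - n) / levels * enc.scale
            for p, n in zip(enc.positive, enc.negative)]
```

Because the record carries its own scale and bit depth, a receiving platform with a different native resolution could requantize the weights explicitly instead of guessing the sender's conventions. The round-trip error per weight is bounded by half a quantization step, which is one way to state "without significant performance degradation" in measurable terms.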

Control systems interoperability must address the synchronization challenges between electronic control circuits and optical components. Timing precision requirements in photonic systems often reach picosecond levels, demanding standardized timing protocols and interfaces that can maintain this precision across heterogeneous systems.
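A standardized timing interface would presumably let an integrator check whether a chain of components from different vendors stays within a timing budget. The sketch below illustrates one simple way to do that, under stated assumptions: deterministic skew is treated as adding linearly in the worst case, and random jitter from each component is treated as independent, so it combines as a root sum of squares. The `TimingSpec` fields and the example figures are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class TimingSpec:
    """Hypothetical per-component timing declaration, in picoseconds."""
    name: str
    skew_ps: float        # deterministic offset; assumed to add linearly
    jitter_rms_ps: float  # random jitter; assumed independent per component


def total_timing_error_ps(chain: Iterable[TimingSpec]) -> float:
    """Worst-case skew plus root-sum-square jitter along a signal chain."""
    parts = list(chain)
    skew = sum(abs(p.skew_ps) for p in parts)
    jitter = math.sqrt(sum(p.jitter_rms_ps ** 2 for p in parts))
    return skew + jitter


def within_budget(chain: Iterable[TimingSpec], budget_ps: float) -> bool:
    """Return True if the combined timing error fits the stated budget."""
    return total_timing_error_ps(chain) <= budget_ps
```

For example, a chain with components declaring (1.0 ps skew, 3.0 ps jitter) and (0.5 ps skew, 4.0 ps jitter) yields 1.5 ps of skew plus 5.0 ps of combined jitter. A real budget would likely use a multiple of the RMS jitter rather than one sigma; that choice is left out here for brevity.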

Power management represents a significant interoperability concern, as different photonic computing architectures may have vastly different power profiles. Standardized power interfaces and management protocols would enable more efficient integration into existing computing infrastructures while maximizing the energy efficiency advantages inherent to photonic computing.

Software compatibility forms perhaps the most complex interoperability requirement. The development of standard APIs and middleware solutions would allow existing machine learning frameworks like TensorFlow and PyTorch to seamlessly target photonic hardware backends. This software layer must abstract the underlying hardware complexities while exposing photonic-specific optimizations to developers.
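As an illustration of what such an abstraction layer might look like, here is a minimal sketch of a backend interface. It is not the API of TensorFlow, PyTorch, or any existing photonic SDK; the names `PhotonicBackend`, `SoftwareReferenceBackend`, and `run_layer` are hypothetical. The idea is that a framework-side dispatcher queries a backend's declared capabilities and then hands it a matrix-vector product, the core operation of a neural network layer.

```python
from abc import ABC, abstractmethod
from typing import Dict, List, Sequence

Matrix = List[List[float]]


class PhotonicBackend(ABC):
    """Hypothetical hardware abstraction; not an existing library interface."""

    @abstractmethod
    def capabilities(self) -> Dict[str, int]:
        """Expose hardware limits so callers can adapt (e.g. weight precision)."""

    @abstractmethod
    def matvec(self, weights: Matrix, x: Sequence[float]) -> List[float]:
        """Compute weights @ x on the device."""


class SoftwareReferenceBackend(PhotonicBackend):
    """Pure-software stand-in that mimics finite weight resolution."""

    def __init__(self, weight_bits: int = 6, max_dim: int = 64) -> None:
        self.weight_bits = weight_bits
        self.max_dim = max_dim

    def capabilities(self) -> Dict[str, int]:
        return {"weight_bits": self.weight_bits, "max_dim": self.max_dim}

    def matvec(self, weights: Matrix, x: Sequence[float]) -> List[float]:
        levels = (1 << self.weight_bits) - 1
        scale = max((abs(w) for row in weights for w in row), default=0.0) or 1.0
        out = []
        for row in weights:
            if len(row) != len(x):
                raise ValueError("row length must match input length")
            # Quantize each weight to the declared resolution before use.
            out.append(sum(round(w / scale * levels) / levels * scale * xi
                           for w, xi in zip(row, x)))
        return out


def run_layer(backend: PhotonicBackend, weights: Matrix,
              x: Sequence[float]) -> List[float]:
    """Dispatch one linear layer after checking the backend's declared limits."""
    limit = backend.capabilities()["max_dim"]
    if len(x) > limit or len(weights) > limit:
        raise ValueError("layer exceeds the backend's declared max_dim")
    return backend.matvec(weights, x)
```

The capability query is where photonic-specific details would surface to developers, while `matvec` hides how the product is physically computed. A software reference backend of this kind could also serve as a common baseline for comparing hardware implementations.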

Testing and calibration procedures require standardization to ensure consistent performance across platforms. This includes methods for characterizing optical noise, compensating for drift, and verifying wavelength stability, all of which affect the reliability of photonic neural networks in production environments.
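One simple form a shared calibration procedure could take is sketched below, under the assumption that drift is approximately linear over the measurement window. A constant reference signal is read repeatedly, a least-squares line is fitted to the readings, the slope is reported as the drift rate, and the residual scatter is reported as noise. The function names and the linear-drift model are illustrative choices, not part of any existing standard.

```python
import math
from typing import Sequence, Tuple


def characterize_drift(times_s: Sequence[float],
                       readings: Sequence[float]) -> Tuple[float, float, float]:
    """Fit readings = intercept + slope * t; return (slope, intercept, noise_rms)."""
    n = len(times_s)
    if n < 3 or n != len(readings):
        raise ValueError("need at least 3 paired samples")
    t_mean = sum(times_s) / n
    r_mean = sum(readings) / n
    s_tt = sum((t - t_mean) ** 2 for t in times_s)
    if s_tt == 0:
        raise ValueError("sample times must not all be equal")
    s_tr = sum((t - t_mean) * (r - r_mean) for t, r in zip(times_s, readings))
    slope = s_tr / s_tt
    intercept = r_mean - slope * t_mean
    # Residual scatter around the fitted line, with n - 2 degrees of freedom.
    sq_err = sum((r - (intercept + slope * t)) ** 2
                 for t, r in zip(times_s, readings))
    noise_rms = math.sqrt(sq_err / (n - 2))
    return slope, intercept, noise_rms


def compensate(t_s: float, reading: float, slope: float) -> float:
    """Remove the estimated linear drift from a reading taken at time t_s."""
    return reading - slope * t_s
```

Reporting drift rate and residual noise as two separate numbers, measured the same way on every platform, is what would make results comparable between vendors. Real devices may drift nonlinearly with temperature, in which case a different model would be needed.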

Finally, security and encryption standards must be established specifically for photonic computing systems, addressing unique challenges such as side-channel attacks based on light leakage or interference patterns. As photonic neural networks process increasingly sensitive data, these security standards will become essential for enterprise adoption.
