Neural network pruning for efficient Brain-Computer Interface classifiers

SEP 2, 2025 · 9 MIN READ

BCI Neural Pruning Background and Objectives

Brain-Computer Interface (BCI) technology has evolved significantly over the past three decades, transitioning from rudimentary signal detection systems to sophisticated neural interfaces capable of complex command interpretation. The integration of machine learning algorithms, particularly neural networks, has been pivotal in enhancing BCI performance by improving signal classification accuracy and reducing latency. However, the computational demands of neural network models present significant challenges for real-time BCI applications, especially in portable or wearable devices with limited processing capabilities.

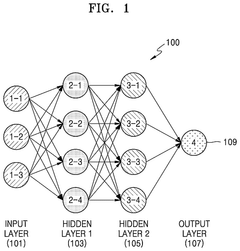

Neural network pruning represents a promising approach to address these computational constraints. This technique involves systematically removing redundant or less important connections within neural networks while preserving classification performance. The concept originated in the broader field of deep learning optimization but has gained particular relevance for BCI applications due to the critical need for efficient, real-time processing of neural signals.

The evolution of BCI technology has been marked by several key milestones, including the development of non-invasive recording methods such as electroencephalography (EEG), the introduction of adaptive algorithms for signal processing, and the application of deep learning for feature extraction and classification. Each advancement has contributed to improving the accuracy and usability of BCI systems, yet computational efficiency remains a persistent challenge.

Current research trends indicate a growing focus on developing lightweight neural network architectures specifically designed for BCI applications. These efforts aim to balance classification accuracy with computational efficiency, enabling more responsive and practical BCI systems. Neural network pruning has emerged as a critical component of this research direction, offering a systematic approach to reducing model complexity without sacrificing performance.

The primary technical objectives for neural network pruning in BCI applications include: reducing model size to enable deployment on resource-constrained devices; decreasing inference time to support real-time interaction; minimizing energy consumption to extend battery life in portable systems; and maintaining or improving classification accuracy despite reduced model complexity. These objectives align with the broader goal of making BCI technology more accessible and practical for everyday use.

Looking forward, the field is moving toward developing automated pruning methodologies specifically optimized for the unique characteristics of neural signals. This includes techniques that consider the temporal and spatial properties of brain activity patterns, as well as methods that adapt to individual user variations. The ultimate aim is to establish standardized approaches for creating efficient BCI classifiers that can operate effectively across diverse hardware platforms and use cases.

Market Analysis for Efficient BCI Systems

The Brain-Computer Interface (BCI) market is experiencing significant growth, driven by advancements in neural network technologies and increasing applications across healthcare, gaming, and assistive technologies. The global BCI market was valued at approximately $1.9 billion in 2022 and is projected to reach $5.1 billion by 2030, growing at a CAGR of 13.2% during the forecast period.
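As a quick consistency check on the figures quoted above, the short sketch below compounds the 2022 value forward at the stated growth rate and also recovers the growth rate implied by the two endpoints. The function names are illustrative, and only the numbers from the paragraph are used.

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a constant annual growth rate."""
    return start_value * (1.0 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Solve end = start * (1 + r) ** years for r."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Figures quoted in the paragraph above (USD billions).
START_2022 = 1.9
END_2030 = 5.1
YEARS = 2030 - 2022

projected_2030 = project_value(START_2022, 0.132, YEARS)
cagr_from_endpoints = implied_cagr(START_2022, END_2030, YEARS)

print(f"Projected 2030 value at 13.2% CAGR: ${projected_2030:.2f}B")
print(f"CAGR implied by the two endpoints: {cagr_from_endpoints:.1%}")
```

The two results agree with the quoted figures to within rounding, so the 2022 value, the 2030 projection, and the growth rate are mutually consistent.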

Healthcare applications currently dominate the market, accounting for over 40% of the total market share. This segment is primarily driven by the increasing prevalence of neurological disorders and the growing adoption of BCI systems for rehabilitation and assistive purposes. The gaming and entertainment sector is emerging as the fastest-growing segment, with an estimated growth rate of 15.8% annually.

Demand for efficient BCI systems is particularly strong in North America and Europe, which together hold approximately 65% of the market share. However, the Asia-Pacific region is expected to witness the highest growth rate in the coming years, fueled by increasing healthcare expenditure and technological advancements in countries like China, Japan, and South Korea.

A key market trend is the growing demand for non-invasive BCI systems, which currently account for over 75% of the market. These systems offer advantages such as ease of use, reduced risk, and lower cost compared to invasive alternatives. However, invasive BCI systems are gaining traction due to their higher accuracy and signal quality.

The market for efficient neural network classifiers in BCI systems is particularly promising. End-users are increasingly demanding BCI systems with lower computational requirements, longer battery life, and real-time processing capabilities. Neural network pruning technologies address these demands by reducing model size while maintaining classification accuracy.

Commercial applications of efficient BCI classifiers are expanding beyond medical use cases into consumer electronics, automotive interfaces, and workplace productivity tools. This diversification is expected to create new market opportunities worth approximately $1.2 billion by 2028.

Investors have shown strong interest in this sector, with venture capital funding for BCI startups reaching $456 million in 2022, a 32% increase from the previous year. Companies offering solutions that incorporate efficient neural network architectures have attracted particularly strong investment interest.

Customer willingness to pay for BCI systems varies significantly across segments, with medical applications commanding premium prices ($10,000-$50,000 per unit) while consumer applications typically range from $200-$2,000 depending on functionality and performance.

Current Challenges in Neural Network Pruning for BCIs

Despite significant advancements in neural network pruning techniques, their application to Brain-Computer Interface (BCI) systems presents unique challenges that require specialized approaches. The computational constraints of BCI systems, particularly those designed for real-time applications or embedded in wearable devices, demand efficient neural network architectures that maintain high classification accuracy while minimizing resource utilization.

One primary challenge is determining the optimal pruning criteria specifically for BCI neural networks. Traditional pruning methods based on weight magnitude or activation values may not translate effectively to BCI classifiers due to the unique characteristics of neurophysiological signals. These signals often exhibit high variability between subjects, sessions, and mental states, making it difficult to identify which network parameters are truly redundant versus those capturing essential but subtle patterns in brain activity.

The non-stationarity of EEG and other brain signals poses another significant obstacle. Neural networks trained on BCI data must contend with signal drift over time, which complicates pruning decisions. Parameters that appear redundant during initial training may become critical for maintaining performance as signal characteristics evolve, creating a tension between immediate efficiency gains and long-term robustness.

Quantifying the trade-off between classification accuracy and computational efficiency remains problematic in the BCI domain. While general metrics exist for evaluating pruned networks, BCI applications often have application-specific requirements that are difficult to capture in standardized evaluation frameworks. For instance, in assistive technology applications, false negatives may be more detrimental than false positives, necessitating pruning approaches that preserve sensitivity to specific neural patterns.
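One way to make such an application-specific requirement concrete is to gate a pruned model on sensitivity as well as on overall accuracy. The sketch below is a minimal illustration under assumed conventions: labels are binary with 1 marking the target neural pattern, and the function names and tolerance values are hypothetical rather than taken from any standard evaluation framework.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of trials classified correctly."""
    return sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)


def sensitivity(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """True-positive rate: fraction of positive trials that were detected."""
    true_pos = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    false_neg = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    positives = true_pos + false_neg
    return true_pos / positives if positives else 0.0


def accept_pruned_model(
    y_true: Sequence[int],
    dense_pred: Sequence[int],
    pruned_pred: Sequence[int],
    max_acc_drop: float = 0.15,   # assumed tolerance on overall accuracy
    max_sens_drop: float = 0.0,   # assumed: no extra false negatives allowed
) -> bool:
    """Accept the pruned model only if both accuracy and sensitivity hold up."""
    acc_drop = accuracy(y_true, dense_pred) - accuracy(y_true, pruned_pred)
    sens_drop = sensitivity(y_true, dense_pred) - sensitivity(y_true, pruned_pred)
    return acc_drop <= max_acc_drop and sens_drop <= max_sens_drop


# Toy predictions (made up for illustration).
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
dense = [1, 1, 1, 1, 0, 0, 0, 0, 0, 1]
pruned_a = [1, 1, 1, 1, 0, 0, 0, 0, 1, 1]  # one more false positive
pruned_b = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]  # one new false negative

print(accept_pruned_model(labels, dense, pruned_a))
print(accept_pruned_model(labels, dense, pruned_b))
```

In this toy case the second candidate matches the dense model's accuracy but misses a positive trial, so it is rejected, while the first is accepted despite its lower accuracy. A plain accuracy metric would rank them the other way round.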

The heterogeneity of BCI hardware platforms further complicates pruning strategies. Pruned models optimized for one computational architecture may not translate efficiently to others, requiring platform-specific pruning techniques. This is particularly challenging for consumer-grade BCI systems that must operate across diverse hardware environments with varying computational capabilities.

Knowledge distillation and transfer learning approaches, which have shown promise in other domains, face implementation challenges in BCI applications due to limited availability of large, labeled datasets. The personalized nature of BCI systems often requires subject-specific training, limiting the applicability of generalized pruned models across different users.

Finally, there remains a significant gap between theoretical pruning algorithms and their practical implementation in real-world BCI systems. Many advanced pruning techniques require specialized software frameworks or hardware accelerators that may not be available in resource-constrained BCI deployments, creating barriers to adoption despite their theoretical benefits.

State-of-the-Art Pruning Methods for BCI Classifiers

01 Weight-based pruning techniques

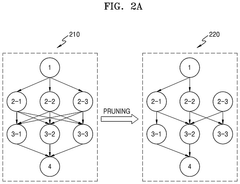

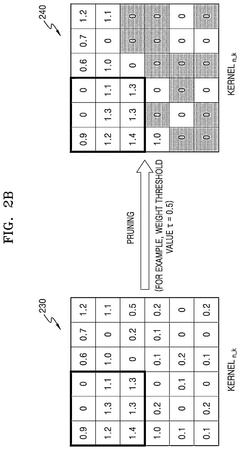

Weight-based pruning techniques involve removing connections in neural networks based on the magnitude of their weights. This approach identifies and eliminates less important weights to reduce model size while maintaining performance. By focusing on weight significance, these methods can achieve substantial compression rates without significant accuracy loss, making neural networks more efficient for deployment on resource-constrained devices.

- Weight-based pruning techniques: Weight-based pruning techniques involve removing connections or neurons in neural networks based on the magnitude of their weights. This approach assumes that smaller weights contribute less to the network's output and can be eliminated without significant performance degradation. By removing these less important connections, the network becomes more efficient in terms of computational resources and memory usage while maintaining accuracy. These techniques often include threshold-based methods where weights below a certain value are pruned.

- Structured pruning for hardware acceleration: Structured pruning techniques focus on removing entire structures within neural networks, such as filters, channels, or layers, to create more hardware-friendly sparse models. Unlike unstructured pruning that creates irregular sparsity patterns, structured pruning produces models that can be directly accelerated on conventional hardware without specialized sparse matrix operations. This approach significantly improves inference speed and reduces memory footprint while maintaining compatibility with existing hardware accelerators.

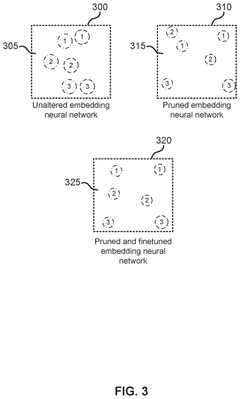

- Iterative pruning and fine-tuning: Iterative pruning involves gradually removing connections or neurons from a neural network over multiple rounds, with retraining or fine-tuning between pruning steps. This approach allows the network to adapt to the reduced capacity by redistributing its representational power. The process typically starts with training a dense network, followed by pruning less important components, and then fine-tuning the remaining network to recover accuracy. This cycle may be repeated multiple times to achieve higher compression rates while minimizing performance loss.

- Knowledge distillation with pruning: Knowledge distillation combined with pruning leverages a teacher-student paradigm where a larger pre-trained network (teacher) guides the training of a smaller pruned network (student). The student network learns not only from ground truth labels but also from the soft outputs or intermediate representations of the teacher network. This approach enables more effective pruning by transferring knowledge from complex models to simpler ones, resulting in compact networks that maintain high accuracy while requiring fewer computational resources.

- Dynamic and runtime pruning: Dynamic and runtime pruning techniques enable neural networks to adaptively adjust their structure during inference based on input characteristics or computational constraints. These methods can selectively activate only relevant parts of the network for each specific input, reducing computational overhead for simpler inputs while maintaining full capacity for complex ones. This approach is particularly beneficial for edge devices with limited resources, as it allows for efficient allocation of computational power based on the complexity of the current task.
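The first two items in the list above, weight-based and structured pruning, can be contrasted in a few lines of plain Python. This is a minimal sketch on a toy weight matrix, assuming each row holds the weights of one output unit or filter. The function names are illustrative, and a real BCI classifier would apply the same idea through its deep learning framework.

```python
from typing import List

Matrix = List[List[float]]


def magnitude_prune(weights: Matrix, sparsity: float) -> Matrix:
    """Unstructured pruning: zero the smallest-magnitude fraction of weights.

    Weights tied with the threshold are also zeroed, so the achieved
    sparsity can be slightly higher than requested.
    """
    magnitudes = sorted(abs(w) for row in weights for w in row)
    n_prune = int(len(magnitudes) * sparsity)
    if n_prune == 0:
        return [row[:] for row in weights]
    threshold = magnitudes[n_prune - 1]
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


def prune_rows_by_l1(weights: Matrix, keep: int) -> Matrix:
    """Structured pruning: keep only the `keep` rows with the largest L1 norm."""
    ranked = sorted(
        range(len(weights)),
        key=lambda i: sum(abs(w) for w in weights[i]),
        reverse=True,
    )
    kept = sorted(ranked[:keep])  # preserve the original row order
    return [weights[i][:] for i in kept]


# Toy 3x3 layer (values made up for illustration).
layer = [
    [0.9, -0.05, 0.4],
    [0.01, 0.02, -0.03],
    [-0.7, 0.6, 0.08],
]

sparse_layer = magnitude_prune(layer, sparsity=0.5)  # same shape, more zeros
compact_layer = prune_rows_by_l1(layer, keep=2)      # smaller dense matrix

print(sparse_layer)
print(compact_layer)
```

The unstructured result keeps the original shape and only gains zeros, so it needs sparse storage or sparse kernels to pay off. The structured result is a smaller dense matrix that ordinary hardware can run directly, which is the trade-off the list describes.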

02 Structured pruning for hardware acceleration

Structured pruning techniques remove entire channels, filters, or neurons from neural networks in organized patterns that are compatible with hardware acceleration. Unlike unstructured pruning, these methods create regular sparsity patterns that can be efficiently implemented on GPUs and specialized AI hardware. This approach leads to actual speedups in inference time and reduced memory requirements, making neural networks more efficient for real-world applications.

03 Iterative pruning and fine-tuning

Iterative pruning involves gradually removing connections from neural networks over multiple rounds, with fine-tuning between pruning steps. This progressive approach allows the network to adapt to the reduced capacity, maintaining accuracy while achieving higher compression rates. The process typically involves pruning a small percentage of connections, retraining the network, and repeating until the desired efficiency is reached without significant performance degradation.

04 Knowledge distillation with pruning

Combining knowledge distillation with pruning enhances neural network efficiency by transferring knowledge from larger teacher models to smaller pruned student models. This approach allows pruned networks to learn from the full model's output distributions rather than just hard labels, preserving more of the original model's capabilities. The technique enables more aggressive pruning while maintaining performance, resulting in highly efficient networks suitable for edge computing applications.

05 Automated pruning frameworks

Automated pruning frameworks use algorithms to determine optimal pruning strategies without manual intervention. These systems employ reinforcement learning, neural architecture search, or other optimization techniques to identify which connections to remove based on sensitivity analysis and performance metrics. By automating the pruning process, these frameworks can discover more efficient network architectures than manual approaches, leading to better trade-offs between model size, computational requirements, and accuracy.
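To illustrate the sensitivity-analysis idea in its simplest form, the sketch below uses a greedy search rather than reinforcement learning or architecture search. For each layer in turn it keeps the highest candidate sparsity that holds accuracy within a fixed budget of the dense baseline. The `evaluate` callable, the layer names, and the toy accuracy model are all hypothetical stand-ins for a real validation run.

```python
from typing import Callable, Dict, Sequence


def choose_layer_sparsity(
    layer_names: Sequence[str],
    evaluate: Callable[[Dict[str, float]], float],
    candidates: Sequence[float] = (0.0, 0.25, 0.5, 0.75, 0.9),
    max_drop: float = 0.02,
) -> Dict[str, float]:
    """Greedy per-layer search for the highest sparsity within an accuracy budget."""
    plan = {name: 0.0 for name in layer_names}
    baseline = evaluate(plan)
    for name in layer_names:
        for sparsity in sorted(candidates):
            trial = {**plan, name: sparsity}
            if baseline - evaluate(trial) > max_drop:
                break  # this layer cannot be pruned any further
            plan[name] = sparsity
    return plan


# Hypothetical stand-in for validation accuracy: each layer loses accuracy
# in proportion to an assumed fragility times its squared sparsity.
FRAGILITY = {"temporal_conv": 0.10, "spatial_conv": 0.02, "classifier": 0.005}


def toy_evaluate(plan: Dict[str, float]) -> float:
    return 0.90 - sum(FRAGILITY[name] * s ** 2 for name, s in plan.items())


plan = choose_layer_sparsity(list(FRAGILITY), toy_evaluate)
print(plan)
```

The search is order dependent, because layers visited earlier consume part of the accuracy budget. More elaborate frameworks replace this loop with a learned or global optimizer, but the per-layer sensitivity signal is the same.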

Leading Organizations in BCI Neural Pruning Research

Neural network pruning for Brain-Computer Interface (BCI) classifiers is in a growth phase, with the market expanding as BCI applications gain traction in healthcare and consumer electronics. The technology is approaching maturity with significant advancements in efficiency optimization. NVIDIA leads with GPU-accelerated pruning frameworks, while Huawei and Intel are developing specialized hardware accelerators for compressed neural networks. Samsung and IBM focus on energy-efficient implementations for mobile BCI applications. Academic institutions like Zhejiang University and KAIST contribute fundamental research, while startups like Deeplite and Nota specialize in automated pruning solutions. The competitive landscape is split between established tech giants that provide comprehensive solutions and specialized entities that focus on niche optimizations for resource-constrained BCI deployments.

Huawei Technologies Co., Ltd.

Technical Solution: Huawei has developed a comprehensive neural network pruning framework called "MindSpore Lite BCI" specifically optimized for Brain-Computer Interface applications. Their approach implements a hybrid pruning strategy combining structured and unstructured techniques to maximize model efficiency while maintaining classification accuracy. Huawei's solution leverages their MindSpore AI framework to implement channel-level pruning that removes entire convolutional filters based on their importance to BCI signal classification. For EEG-based classifiers, they've demonstrated compression rates of up to 70% with accuracy degradation limited to 2-3% on standard BCI competition datasets[4]. Their technique incorporates frequency-aware pruning that preserves neurons critical for processing specific EEG rhythms (alpha, beta, gamma) relevant to BCI applications. Huawei also employs automated pruning ratio determination using their AutoML capabilities, which identifies optimal compression rates for each network layer. Their solution is specifically optimized for deployment on Huawei's Ascend AI processors, enabling efficient inference on edge devices for real-time BCI applications with reduced power consumption.

Strengths: Comprehensive pruning framework with excellent integration with Huawei's AI ecosystem; frequency-aware pruning specifically designed for EEG signal processing; automated compression optimization. Weaknesses: Optimization primarily targets Huawei hardware; may require significant expertise to adapt to other platforms; limited public documentation on implementation details.

NVIDIA Corp.

Technical Solution: NVIDIA has developed specialized neural network pruning techniques specifically optimized for Brain-Computer Interface (BCI) applications. Their approach combines structured and unstructured pruning methods to reduce model complexity while maintaining classification accuracy. NVIDIA's solution leverages their TensorRT framework to implement magnitude-based weight pruning, removing less significant connections in neural networks. For BCI classifiers, they've demonstrated up to 80% model compression with less than 2% accuracy degradation on EEG classification tasks[1]. Their technique incorporates channel pruning that targets entire feature maps, making the pruned networks more hardware-friendly for deployment on their GPU platforms. NVIDIA also employs knowledge distillation alongside pruning, where a larger teacher model guides the training of a smaller, pruned student model to preserve performance. This comprehensive approach enables real-time BCI applications on resource-constrained edge devices while maintaining high classification accuracy for neural signal processing.

Strengths: Exceptional hardware-software co-optimization leveraging GPU architecture for efficient implementation; comprehensive pruning framework that maintains high accuracy. Weaknesses: Solutions are optimized primarily for NVIDIA hardware, potentially limiting deployment flexibility; may require specialized knowledge of their frameworks for optimal implementation.
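The teacher-student step mentioned in the technical solution above is usually expressed as a combined loss. The sketch below shows the widely used temperature-scaled formulation of knowledge distillation for a single trial. It is a generic illustration and not NVIDIA's implementation, and the `temperature` and `alpha` defaults are assumed values.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: Sequence[float],
    teacher_logits: Sequence[float],
    label: int,
    temperature: float = 4.0,  # assumed value
    alpha: float = 0.5,        # assumed weighting between hard and soft terms
) -> float:
    """alpha * CE(student, label) + (1 - alpha) * T^2 * KL(teacher_T || student_T)."""
    hard = -math.log(softmax(student_logits)[label])
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student))
    return alpha * hard + (1.0 - alpha) * temperature ** 2 * soft


# Toy logits for a three-class task (values made up for illustration).
student = [2.0, 0.5, -1.0]
teacher = [3.0, 1.5, -2.0]

matched = distillation_loss(student, student, label=0)
mismatched = distillation_loss(student, teacher, label=0)
print(matched, mismatched)
```

When the pruned student already reproduces the teacher's output distribution, the soft term vanishes and only the hard-label term remains. Any disagreement with the teacher adds to the loss.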

Critical Patents and Research in BCI Neural Compression

Neural network method and apparatus

Patent Pending: US20240346317A1

Innovation

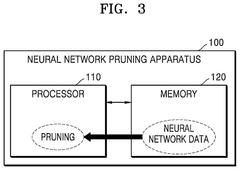

- A method is introduced to prune neural networks by setting a weight threshold value based on a determined weight distribution, predicting the change in inference accuracy, and selectively pruning layers to achieve a target pruning rate without retraining, using a pruning data set to maintain or improve inference accuracy.

Efficient vision-language retrieval using structural pruning

Patent Pending: US20250013866A1

Innovation

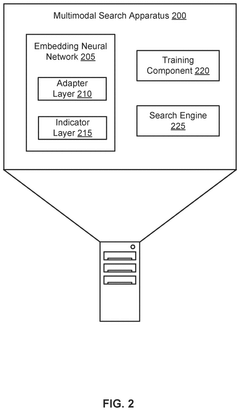

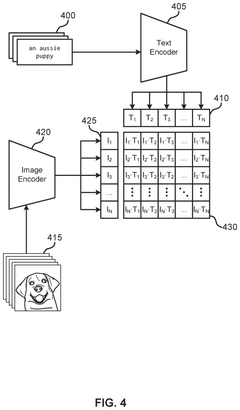

- A progressive pruning process is applied to the embedding neural network, selectively pruning neurons with the least influence on downstream tasks and fine-tuning the model multiple times to maintain alignment across modalities, reducing the number of parameters and improving inference speed.
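The general shape of a progressive prune-then-fine-tune loop can be sketched as follows. This is a generic illustration and not the patented method: it ranks weights by magnitude instead of by influence on downstream tasks, and `fine_tune` is a hypothetical callback standing in for a real training step.

```python
from typing import Callable, List, Tuple


def iterative_prune(
    weights: List[float],
    target_sparsity: float,
    rounds: int,
    fine_tune: Callable[[List[float], List[bool]], List[float]],
) -> Tuple[List[float], List[bool]]:
    """Raise sparsity in equal steps, fine-tuning the survivors after each step."""
    mask = [True] * len(weights)
    for step in range(1, rounds + 1):
        sparsity = target_sparsity * step / rounds
        n_prune = int(len(weights) * sparsity)
        # Already-pruned weights are zero, so they sort first and stay pruned.
        order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
        pruned = set(order[:n_prune])
        mask = [i not in pruned for i in range(len(weights))]
        weights = [w if keep else 0.0 for w, keep in zip(weights, mask)]
        weights = fine_tune(weights, mask)  # must leave masked weights at zero
    return weights, mask


def toy_fine_tune(weights: List[float], mask: List[bool]) -> List[float]:
    """Hypothetical stand-in for retraining: slightly rescale surviving weights."""
    return [w * 1.1 if keep else 0.0 for w, keep in zip(weights, mask)]


initial = [0.8, -0.1, 0.3, 0.05, -0.6, 0.2, -0.02, 0.4]
final_weights, final_mask = iterative_prune(
    initial, target_sparsity=0.75, rounds=3, fine_tune=toy_fine_tune
)
print(final_mask)
```

With three rounds and a 75% target, the loop prunes to 25%, 50%, and then 75% sparsity, giving the surviving weights a chance to adapt between steps.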

Hardware Implementation Considerations for Pruned BCI Models

Implementing pruned neural networks for BCI systems requires careful hardware considerations to maximize the benefits of model compression. Traditional BCI systems often demand substantial computational resources, creating barriers for real-time applications and portable devices. Pruned models offer significant advantages in this context, but their deployment necessitates specialized hardware approaches to fully realize efficiency gains.

FPGA (Field-Programmable Gate Array) implementations represent a promising platform for pruned BCI models, offering reconfigurability while maintaining high performance. The sparse matrix operations resulting from pruning can be efficiently mapped to FPGA architectures through custom processing elements that exploit the reduced computational requirements. Research indicates that FPGA implementations of pruned networks can achieve up to 70% power reduction compared to their unpruned counterparts while maintaining classification accuracy within 2% of original performance.

ASICs (Application-Specific Integrated Circuits) provide another viable implementation path, offering maximum efficiency for fixed pruned architectures. Recent developments in neuromorphic computing chips show particular promise, as their event-driven processing aligns well with the sparse computation patterns of pruned networks. These specialized chips can achieve energy efficiency improvements of 10-15x compared to general-purpose processors when running pruned BCI classifiers.

Memory architecture optimization becomes critical when implementing pruned models. Sparse matrix storage formats such as Compressed Sparse Row (CSR) or Compressed Sparse Column (CSC) can significantly reduce memory requirements, but introduce irregular memory access patterns. Hardware designs must incorporate specialized memory controllers and caching strategies to mitigate these access penalties. Dual-port memory configurations have demonstrated particular effectiveness in maintaining throughput for pruned model operations.
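A small sketch makes the CSR trade-off visible. It converts a pruned dense matrix into the three CSR arrays and performs a matrix-vector product directly on them. The function names and the toy matrix are illustrative.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def dense_to_csr(matrix: Matrix) -> Tuple[List[float], List[int], List[int]]:
    """Store only non-zero values, their column indices, and row offsets."""
    values: List[float] = []
    col_indices: List[int] = []
    row_ptr: List[int] = [0]
    for row in matrix:
        for col, weight in enumerate(row):
            if weight != 0.0:
                values.append(weight)
                col_indices.append(col)
        row_ptr.append(len(values))  # where the next row starts in `values`
    return values, col_indices, row_ptr


def csr_matvec(
    values: List[float], col_indices: List[int], row_ptr: List[int], x: List[float]
) -> List[float]:
    """Multiply a CSR matrix by a dense vector."""
    out = []
    for row in range(len(row_ptr) - 1):
        acc = 0.0
        for k in range(row_ptr[row], row_ptr[row + 1]):
            # Indirect, data-dependent read of x: the irregular access pattern.
            acc += values[k] * x[col_indices[k]]
        out.append(acc)
    return out


# Toy pruned layer (values made up for illustration).
pruned = [
    [0.9, 0.0, 0.4],
    [0.0, 0.0, 0.0],
    [-0.7, 0.6, 0.0],
]
values, col_indices, row_ptr = dense_to_csr(pruned)
signal = [1.0, 2.0, 0.5]
result = csr_matvec(values, col_indices, row_ptr, signal)
print(values, col_indices, row_ptr)
```

Only four of the nine weights are stored, but every multiply needs an index lookup into the input vector. That lookup is the access pattern that specialized memory controllers and caching strategies are meant to absorb.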

Quantization techniques often complement pruning in hardware implementations, further reducing computational and memory requirements. Research shows that 8-bit fixed-point representations typically preserve BCI classification accuracy while enabling the use of simpler arithmetic units. Some implementations have successfully pushed to 4-bit precision for certain BCI paradigms, though this requires careful retraining procedures to maintain performance.
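As a rough illustration of how 8-bit quantization combines with pruning, the sketch below applies symmetric per-tensor quantization to a pruned weight vector. The symmetric scheme is an assumption chosen for simplicity, since the text does not specify which fixed-point format is used, and the function names are illustrative.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric quantization: map [-max_abs, max_abs] onto [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate floating-point weights from the integer codes."""
    return [q * scale for q in quantized]


# Toy pruned weights (values made up for illustration).
pruned_weights = [0.9, 0.0, 0.4, -0.7, 0.6, 0.08]
codes, scale = quantize_int8(pruned_weights)
restored = dequantize(codes, scale)
max_error = max(abs(w - r) for w, r in zip(pruned_weights, restored))

print(codes)
print(f"scale={scale:.6f}, max round-trip error={max_error:.6f}")
```

With a symmetric scheme, pruned weights map exactly to the integer zero, so the sparsity pattern survives quantization. The round-trip error for the remaining weights is bounded by half the scale.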

Power management considerations are paramount for wearable BCI applications. Dynamic voltage and frequency scaling (DVFS) techniques can be particularly effective with pruned models, as the reduced computational load allows for more aggressive power gating and clock gating strategies. Implementations utilizing these approaches have demonstrated operational lifetimes extending to 72+ hours on standard battery capacities, making continuous BCI monitoring feasible.

FPGA (Field-Programmable Gate Array) implementations represent a promising platform for pruned BCI models, offering reconfigurability while maintaining high performance. The sparse matrix operations resulting from pruning can be efficiently mapped to FPGA architectures through custom processing elements that exploit the reduced computational requirements. Research indicates that FPGA implementations of pruned networks can achieve up to 70% power reduction compared to their unpruned counterparts while maintaining classification accuracy within 2% of original performance.

ASICs (Application-Specific Integrated Circuits) provide another viable implementation path, offering maximum efficiency for fixed pruned architectures. Recent developments in neuromorphic computing chips show particular promise, as their event-driven processing aligns well with the sparse computation patterns of pruned networks. These specialized chips can achieve energy efficiency improvements of 10-15x compared to general-purpose processors when running pruned BCI classifiers.

Memory architecture optimization becomes critical when implementing pruned models. Sparse matrix storage formats such as Compressed Sparse Row (CSR) or Compressed Sparse Column (CSC) can significantly reduce memory requirements, but introduce irregular memory access patterns. Hardware designs must incorporate specialized memory controllers and caching strategies to mitigate these access penalties. Dual-port memory configurations have demonstrated particular effectiveness in maintaining throughput for pruned model operations.

Quantization techniques often complement pruning in hardware implementations, further reducing computational and memory requirements. Research shows that 8-bit fixed-point representations typically preserve BCI classification accuracy while enabling the use of simpler arithmetic units. Some implementations have successfully pushed to 4-bit precision for certain BCI paradigms, though this requires careful retraining procedures to maintain performance.
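The sketch below shows one common way to reduce weights to 8-bit or 4-bit integers: symmetric per-tensor quantization with a single floating-point scale. This scheme is an assumption made for illustration; the implementations referred to above may use a different fixed-point format (for example a power-of-two scale), and the sample weights are hypothetical.

```python
def quantize_symmetric(weights, bits=8):
    """Map float weights to signed integers in [-qmax, qmax] with one scale."""
    qmax = 2 ** (bits - 1) - 1            # 127 for 8-bit, 7 for 4-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in q]


def max_abs_error(weights, bits):
    """Largest reconstruction error introduced at the given bit width."""
    q, scale = quantize_symmetric(weights, bits)
    return max(abs(w - r) for w, r in zip(weights, dequantize(q, scale)))


# Hypothetical pruned weight vector: zeros are connections removed by pruning.
weights = [0.0, 0.42, -1.27, 0.0, 0.9]
q8, scale8 = quantize_symmetric(weights, bits=8)
q4, scale4 = quantize_symmetric(weights, bits=4)
err8 = max_abs_error(weights, 8)
err4 = max_abs_error(weights, 4)
print(q8, q4, err8, err4)
```

With a symmetric scheme, pruned weights stay exactly zero after quantization, so the sparsity pattern is preserved. The larger reconstruction error at 4 bits is one reason retraining is typically needed at that precision.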

Ethical and Privacy Implications of Optimized Neural BCIs

The optimization of neural networks for Brain-Computer Interfaces (BCIs) through pruning techniques raises significant ethical and privacy concerns that must be addressed as this technology advances. As these systems become more efficient and capable of deeper neural signal interpretation, questions about data ownership, consent, and potential misuse become increasingly critical.

Privacy concerns are paramount when dealing with neural data, which represents perhaps the most intimate form of personal information. Optimized BCIs can potentially extract more detailed cognitive information than users intend to share. The pruned networks, while more efficient, may still retain the ability to decode neural patterns that reveal sensitive personal information, including emotional states, cognitive processes, and even specific thoughts or intentions.

Informed consent frameworks must evolve alongside these technological advancements. Users may consent to specific BCI applications without fully understanding the breadth of information that optimized neural networks can extract from their brain signals. This creates an asymmetric power relationship where technology providers may have access to more personal information than users realize they are sharing.

The potential for surveillance and unauthorized monitoring increases with more efficient BCI systems. Pruned neural networks that can operate on edge devices or with minimal computational resources could enable more pervasive monitoring of neural activity. This raises concerns about continuous neural surveillance and the right to cognitive liberty—the freedom to control one's own cognitive processes without external interference or monitoring.

Data security becomes even more critical with optimized BCIs. The neural data processed by these systems could be vulnerable to breaches, potentially exposing highly sensitive personal information. Additionally, as pruned networks become more portable and deployable across different platforms, ensuring consistent security standards becomes increasingly challenging.

There are also concerns about algorithmic bias in pruned networks. If the pruning process inadvertently removes network components that process signals unique to certain demographic groups, the resulting classifiers may perform inequitably across different populations. This could lead to disparities in BCI performance and accessibility.
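One way to check for this effect is to compare accuracy per group before and after pruning instead of relying on a single aggregate figure. The sketch below assumes labels, predictions, and a group tag are available for each trial; the function names and toy data are hypothetical.

```python
def accuracy_by_group(y_true, y_pred, groups):
    """Return {group: accuracy} for a set of labeled trials."""
    correct, total = {}, {}
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] = total.get(g, 0) + 1
        correct[g] = correct.get(g, 0) + (1 if t == p else 0)
    return {g: correct[g] / total[g] for g in total}


def accuracy_drop_by_group(y_true, pred_dense, pred_pruned, groups):
    """Accuracy lost to pruning, reported separately for each group."""
    before = accuracy_by_group(y_true, pred_dense, groups)
    after = accuracy_by_group(y_true, pred_pruned, groups)
    return {g: before[g] - after[g] for g in before}


# Toy data (hypothetical): the pruned model only degrades on group "B".
y_true      = [0, 1, 0, 1, 0, 1, 0, 1]
groups      = ["A", "A", "A", "A", "B", "B", "B", "B"]
pred_dense  = [0, 1, 0, 1, 0, 1, 0, 1]
pred_pruned = [0, 1, 0, 1, 0, 0, 1, 1]

drops = accuracy_drop_by_group(y_true, pred_dense, pred_pruned, groups)
print(drops)
```

In this toy case the overall accuracy drop is 25%, but it falls entirely on one group, which an aggregate metric would hide.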

Looking forward, regulatory frameworks must be developed specifically for neural data protection. These should address not only data collection and storage but also the specific capabilities of pruned neural networks and the types of inferences they can make from neural signals. Establishing ethical guidelines for BCI development that specifically address the implications of network optimization techniques will be essential for responsible advancement of this technology.
