Phased Array in 5G Deployment: Compare Signal Robustness

SEP 22, 2025 | 9 MIN READ

5G Phased Array Evolution and Objectives

Phased array technology has evolved significantly over the past decades, transitioning from military applications to becoming a cornerstone of modern telecommunications. In the context of 5G deployment, phased arrays represent a critical enabling technology that addresses the fundamental challenges of millimeter wave (mmWave) communications. The historical trajectory of phased arrays began with analog beamforming systems in radar applications, progressing through digital beamforming architectures, and culminating in today's hybrid beamforming solutions that balance performance with implementation complexity.

The evolution of 5G phased array technology has been marked by several key milestones. Early implementations focused on sub-6 GHz bands with limited array elements, while current advanced systems operate in mmWave spectrum (24-100 GHz) with hundreds of antenna elements. This progression has been driven by the need to overcome the severe path loss and penetration challenges inherent to higher frequency communications while maintaining signal robustness in dynamic environments.

A primary objective of phased array implementation in 5G is to achieve directional, high-gain beamforming that can compensate for the propagation limitations of mmWave signals. This directional approach represents a paradigm shift from the wide, sector-wide beams used by previous generations, concentrating energy toward individual users to improve signal-to-noise ratio and reduce interference in dense deployment scenarios.
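The gain of a directional beam can be made concrete with a minimal sketch, assuming an idealized uniform linear array (ULA) of isotropic elements at half-wavelength spacing, with no losses or mutual coupling. The function names are illustrative, not taken from any particular toolkit. With transmit power split equally across N elements and phases matched to the target direction, the peak power gain relative to a single element is N, i.e. 10*log10(N) dB.

```python
import cmath
import math


def steering_vector(n: int, angle_deg: float, spacing_wl: float = 0.5) -> list[complex]:
    # Per-element phase of a plane wave from angle_deg (0 = broadside) across a ULA;
    # spacing_wl is the element spacing in wavelengths
    phase_step = 2 * math.pi * spacing_wl * math.sin(math.radians(angle_deg))
    return [cmath.exp(1j * phase_step * k) for k in range(n)]


def beam_gain_db(n: int, steer_deg: float, look_deg: float) -> float:
    # Power gain (dB relative to one element) of a beam steered to steer_deg,
    # evaluated toward look_deg, with total power split equally over n elements
    weights = steering_vector(n, steer_deg)
    response = steering_vector(n, look_deg)
    field = sum(w.conjugate() * a for w, a in zip(weights, response))
    power_gain = abs(field) ** 2 / n
    return 10 * math.log10(max(power_gain, 1e-12))  # clamp exact nulls


for n in (16, 64, 256):
    peak = beam_gain_db(n, steer_deg=20.0, look_deg=20.0)
    off = beam_gain_db(n, steer_deg=20.0, look_deg=35.0)
    print(f"N={n:3d}: peak {peak:5.1f} dB, 15 deg off-beam {off:7.1f} dB")
```

Each doubling of N adds about 3 dB of peak gain while narrowing the beam, which is why high-gain arrays also need accurate beam steering.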

Signal robustness, a critical performance metric for 5G networks, has been enhanced through several evolutionary improvements in phased array design. These include the development of adaptive beamforming algorithms that can rapidly adjust to changing channel conditions, multi-user MIMO techniques that optimize spatial multiplexing, and beam tracking mechanisms that maintain connectivity during user mobility. Each advancement has contributed to more reliable communications in challenging propagation environments.
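Beam tracking is often organized as an exhaustive sweep for initial access, followed by a local search around the current beam as the user moves. The sketch below assumes a single line-of-sight path, a 32-element half-wavelength ULA, and noise-free received-power measurements; the DFT-style codebook and the function names are hypothetical simplifications, not the 3GPP beam management procedure itself.

```python
import cmath
import math


def steering(n: int, sin_theta: float) -> list[complex]:
    # Half-wavelength ULA: adjacent elements differ in phase by pi*sin(theta)
    return [cmath.exp(1j * math.pi * sin_theta * k) for k in range(n)]


def beam_power(n: int, beam_sin: float, user_sin: float) -> float:
    # Received power through one beam, assuming a single line-of-sight path
    w, h = steering(n, beam_sin), steering(n, user_sin)
    return abs(sum(wi.conjugate() * hi for wi, hi in zip(w, h))) ** 2 / n


def dft_codebook(n_beams: int) -> list[float]:
    # Beam directions evenly spaced in sin(theta) over [-1, 1]
    return [-1 + (2 * k + 1) / n_beams for k in range(n_beams)]


def full_sweep(n: int, codebook: list[float], user_sin: float) -> int:
    # Initial access: measure every beam and keep the strongest
    return max(range(len(codebook)), key=lambda i: beam_power(n, codebook[i], user_sin))


def track(n: int, codebook: list[float], current: int, user_sin: float) -> int:
    # Tracking: re-measure only the current beam and its two neighbours
    candidates = [i for i in (current - 1, current, current + 1) if 0 <= i < len(codebook)]
    return max(candidates, key=lambda i: beam_power(n, codebook[i], user_sin))


N = 32
codebook = dft_codebook(N)
beam = full_sweep(N, codebook, user_sin=0.10)
for user_sin in (0.14, 0.18, 0.22, 0.26):  # user drifts across the sector
    beam = track(N, codebook, beam, user_sin)
    print(f"sin(theta)={user_sin:.2f} -> beam {beam} ({codebook[beam]:+.3f})")
```

Tracking measures 3 beams per update instead of 32, which only works while the user moves less than about one beam width between updates; otherwise a full sweep is needed again.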

The technical objectives for current and future phased array systems in 5G focus on several dimensions: increasing spectral efficiency through higher-order spatial multiplexing, reducing power consumption through more efficient RF front-end designs, minimizing form factors for easier deployment, and enhancing beam agility for faster adaptation to dynamic environments. These objectives are particularly important for ensuring signal robustness in non-line-of-sight conditions and high-mobility scenarios.

Looking forward, the evolution of phased arrays aims to address remaining challenges in 5G deployment, including beam management complexity, hardware calibration requirements, and cost-effective manufacturing at scale. The industry is moving toward more integrated solutions that combine RF components, digital processing, and thermal management in compact packages suitable for diverse deployment scenarios from macro cells to small cells and customer premises equipment.

Market Demand Analysis for 5G Phased Array Solutions

The global market for 5G phased array solutions is experiencing unprecedented growth, driven by the rapid deployment of 5G networks worldwide. Current market analysis indicates that the demand for phased array technology in 5G infrastructure is primarily fueled by the need for enhanced signal robustness in increasingly congested wireless environments. Telecommunications operators are actively seeking solutions that can provide reliable connectivity in high-density urban areas, where signal interference and multipath effects pose significant challenges.

Market research reveals that the telecommunications sector's investment in phased array technology has grown substantially since 2019, with major network operators allocating significant portions of their capital expenditure to advanced antenna systems. This trend is particularly evident in regions with high population density and sophisticated digital economies, such as East Asia, North America, and Western Europe.

Consumer demand for high-bandwidth applications, including 4K/8K video streaming, augmented reality, and cloud gaming, is creating pressure on network operators to enhance signal quality and reliability. Enterprise customers are similarly driving demand through requirements for mission-critical applications in healthcare, manufacturing, and smart city initiatives, where signal robustness is non-negotiable.

The market for 5G phased array solutions shows distinct segmentation based on deployment scenarios. Urban deployments prioritize solutions offering high capacity and interference mitigation, while suburban and rural implementations focus on extended coverage and energy efficiency. This diversification has created specialized market niches for phased array technologies optimized for specific deployment contexts.

Industry surveys indicate that network operators consider signal robustness as the second most important factor in their purchasing decisions for 5G infrastructure, following only total cost of ownership. This prioritization reflects the growing recognition that consistent service quality is essential for customer retention and competitive differentiation in saturated telecommunications markets.

Regulatory developments are also shaping market demand, with spectrum allocation policies in key markets increasingly favoring technologies that demonstrate efficient spectrum utilization. Phased array systems, with their ability to implement advanced beamforming techniques, are well-positioned to meet these regulatory requirements, further stimulating market demand.

Market forecasts suggest that the demand for phased array solutions will continue to accelerate as 5G networks mature and densify. The transition from initial coverage-focused deployments to capacity-enhancement phases is expected to drive particularly strong growth in urban and suburban markets, where signal robustness challenges are most acute.

Current Phased Array Technology Challenges in 5G

Despite significant advancements in phased array technology for 5G deployment, several critical challenges persist that impact signal robustness and overall system performance. One of the primary obstacles is the thermal management of densely packed antenna arrays. As 5G systems operate at higher frequencies with more elements in smaller form factors, heat dissipation becomes increasingly problematic, leading to potential signal degradation and reduced component lifespan.

Beamforming accuracy presents another significant challenge, particularly in dynamic environments. Current phased array systems struggle to maintain precise beam steering toward rapidly moving users or in complex multipath scenarios. This limitation directly impacts signal robustness in urban environments, where reflections and obstructions are common, resulting in inconsistent coverage and reduced data rates.

Power consumption remains a critical concern for widespread 5G implementation. The complex signal processing required for advanced beamforming techniques demands substantial computational resources, creating a trade-off between performance and energy efficiency. This challenge is particularly acute for small cell deployments where power availability may be limited, forcing compromises in array performance and signal robustness.

Manufacturing complexity and cost considerations continue to impede the mass deployment of sophisticated phased array systems. The precise calibration required between array elements and the need for high-quality RF components drive up production expenses. These factors have slowed the adoption of more advanced array architectures that could potentially deliver superior signal robustness.
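Why element calibration matters can be shown with a small simulation, assuming only per-element phase errors (amplitude errors, coupling, and temperature drift are ignored) and a hypothetical calibration step that measures each element's phase against a common reference path with small noise. The 20-degree manufacturing spread and 2-degree measurement noise are illustrative assumptions, not measured values.

```python
import cmath
import math
import random

N = 64
rng = random.Random(7)  # fixed seed so the example is repeatable

# Hypothetical per-element phase offsets from manufacturing spread (std 20 deg)
true_offsets = [rng.gauss(0.0, math.radians(20.0)) for _ in range(N)]


def broadside_gain(offsets: list[float], corrections: list[float]) -> float:
    # Coherent power gain toward broadside; equals N when every residual error is 0.
    # For Gaussian errors with std sigma (rad) the expected value is roughly N*exp(-sigma**2).
    field = sum(cmath.exp(1j * (o - c)) for o, c in zip(offsets, corrections))
    return abs(field) ** 2 / N


def measure_offsets(offsets: list[float], noise_deg: float, rng: random.Random) -> list[float]:
    # Hypothetical calibration measurement: each element's phase against a common
    # reference path, observed with small Gaussian measurement noise
    return [o + rng.gauss(0.0, math.radians(noise_deg)) for o in offsets]


uncalibrated = broadside_gain(true_offsets, [0.0] * N)
estimates = measure_offsets(true_offsets, noise_deg=2.0, rng=rng)
calibrated = broadside_gain(true_offsets, estimates)  # subtract each estimated offset

for label, gain in (("ideal", N), ("uncalibrated", uncalibrated), ("calibrated", calibrated)):
    print(f"{label:>12}: {10 * math.log10(gain):.2f} dB")
```

The uncalibrated loss shown here is only about half a dB, but the same errors also raise sidelobes and shift nulls, which matters for interference suppression.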

Interference management represents an evolving challenge as 5G networks become more densely deployed. Current phased array technologies struggle to effectively mitigate interference from adjacent cells or other wireless systems operating in nearby frequency bands. This limitation can significantly degrade signal quality in congested spectrum environments, reducing the theoretical performance advantages of 5G technology.

Weather susceptibility remains a persistent issue for millimeter-wave implementations. Precipitation attenuates mmWave signals regardless of antenna design; heavy rain is usually the dominant contributor, snow and fog add further loss, and because attenuation accumulates with distance it weighs most on longer links. This vulnerability undermines the consistency of service quality, especially in regions with variable weather conditions.

Lastly, the integration of phased arrays with existing infrastructure presents both technical and logistical challenges. The physical installation requirements, backhaul connectivity needs, and interoperability with legacy systems create implementation barriers that impact the overall robustness of 5G networks, particularly during the transition period from 4G to 5G technologies.

Signal Robustness Comparison in Current Solutions

01 Signal processing techniques for phased array robustness

Various signal processing algorithms can be implemented to enhance the robustness of phased array systems against interference and noise. These techniques include adaptive beamforming, digital signal processing methods, and filtering algorithms that can dynamically adjust to changing signal environments. By implementing these advanced processing techniques, phased array systems can maintain signal integrity even in challenging conditions, improving overall system reliability and performance.

- Interference mitigation techniques for phased array systems: Various techniques are employed to mitigate interference in phased array systems, enhancing signal robustness. These include adaptive beamforming algorithms that dynamically adjust the array pattern to suppress interference sources, spatial filtering methods that exploit the directional properties of phased arrays to reject unwanted signals, and advanced signal processing techniques that can identify and remove interference components from the received signal, thereby improving the overall system performance in challenging electromagnetic environments.

- Environmental resilience in phased array radar systems: Phased array radar systems incorporate various features to maintain signal robustness against environmental challenges. These include compensation mechanisms for atmospheric effects that can distort radar signals, temperature stabilization systems that maintain consistent performance across varying thermal conditions, and weatherproofing techniques that protect sensitive electronic components from moisture, dust, and other environmental factors. These approaches collectively ensure reliable operation and consistent signal quality in diverse and challenging operational environments.

- Calibration and error correction methods: Robust phased array systems employ sophisticated calibration and error correction methods to maintain signal integrity. These include real-time phase and amplitude calibration techniques that compensate for component variations, automated diagnostic routines that detect and correct system anomalies, and adaptive algorithms that continuously optimize array performance. By implementing these methods, phased array systems can achieve higher precision in beam steering, reduced sidelobe levels, and more accurate target detection and tracking capabilities even when individual array elements experience degradation.

- Redundancy and fault tolerance designs: To enhance signal robustness, phased array systems incorporate redundancy and fault tolerance features. These include distributed processing architectures that continue functioning even when some components fail, graceful degradation capabilities that maintain acceptable performance despite partial system failures, and hot-swappable modules that allow for maintenance without system shutdown. These design approaches ensure continuous operation and signal integrity even when the system experiences component failures or damage, making them particularly valuable for critical applications in defense, aerospace, and communications.

- Advanced signal processing algorithms: Phased array systems employ sophisticated signal processing algorithms to enhance signal robustness. These include adaptive filtering techniques that dynamically respond to changing signal environments, machine learning approaches that can identify and extract signals from complex noise backgrounds, and digital beamforming methods that provide greater flexibility in signal manipulation compared to analog approaches. These advanced algorithms enable phased array systems to maintain high performance in challenging signal environments with multiple sources of interference, multipath effects, and low signal-to-noise ratios.
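The idea of adjusting the array pattern to suppress an interference source can be illustrated with a projection-based null-steering beamformer. This is a deliberately simple relative of adaptive MVDR/LCMV beamformers: it assumes the interferer's direction is already known and uses an idealized 16-element half-wavelength ULA, whereas practical adaptive schemes estimate the interference from received data.

```python
import cmath
import math


def steering(n: int, angle_deg: float) -> list[complex]:
    # Half-wavelength ULA response to a plane wave from angle_deg (0 = broadside)
    s = math.sin(math.radians(angle_deg))
    return [cmath.exp(1j * math.pi * s * k) for k in range(n)]


def inner(a: list[complex], b: list[complex]) -> complex:
    # Hermitian inner product a^H b
    return sum(x.conjugate() * y for x, y in zip(a, b))


def null_steering_weights(n: int, desired_deg: float, interferer_deg: float) -> list[complex]:
    # Remove the interferer's component from the desired steering vector, so the
    # resulting weights are orthogonal to (place a null on) the interferer direction
    a_d, a_i = steering(n, desired_deg), steering(n, interferer_deg)
    coeff = inner(a_i, a_d) / inner(a_i, a_i)
    return [d - coeff * i for d, i in zip(a_d, a_i)]


def response_db(w: list[complex], angle_deg: float) -> float:
    # Power gain toward angle_deg, normalized by the weight energy
    g = abs(inner(w, steering(len(w), angle_deg))) ** 2 / inner(w, w).real
    return 10 * math.log10(max(g, 1e-15))


N, DESIRED, INTERFERER = 16, 0.0, 25.0
conventional = steering(N, DESIRED)  # plain phase-matched beam
nulled = null_steering_weights(N, DESIRED, INTERFERER)
for name, w in (("conventional", conventional), ("null-steered", nulled)):
    print(f"{name}: desired {response_db(w, DESIRED):6.2f} dB, "
          f"interferer {response_db(w, INTERFERER):8.2f} dB")
```

The null costs only a small fraction of a dB toward the desired user here because the two directions are well separated; as they move closer, the projection removes more of the desired signal as well.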

02 Hardware design for improved signal robustness

The physical design and architecture of phased array systems significantly impact signal robustness. Key hardware considerations include antenna element configuration, component quality, thermal management, and electromagnetic shielding. Advanced materials and manufacturing techniques can reduce signal degradation, while redundant elements and fault-tolerant designs ensure continued operation even when individual components fail. These hardware improvements collectively enhance the resilience of phased array systems in various operational environments.

03 Calibration and error correction methods

Regular calibration and error correction are essential for maintaining phased array signal robustness. These methods compensate for manufacturing variations, component aging, and environmental effects that could otherwise degrade performance. Automated self-calibration routines, phase error correction algorithms, and real-time monitoring systems can detect and adjust for deviations, ensuring consistent signal quality and accurate beamforming. These techniques are particularly important for systems operating in dynamic or harsh environments.

04 Environmental adaptation and interference mitigation

Phased array systems must adapt to changing environmental conditions and mitigate various forms of interference to maintain signal robustness. This includes techniques for operating in adverse weather, compensating for atmospheric effects, and rejecting jamming signals. Adaptive algorithms can identify and suppress interference sources, while environmental sensing capabilities allow the system to proactively adjust parameters based on current conditions. These adaptations ensure reliable performance across diverse operational scenarios.

05 Power management and fault tolerance

Effective power management and fault tolerance strategies are crucial for maintaining phased array signal robustness. These include intelligent power distribution, energy-efficient signal processing, and graceful degradation capabilities. Systems can be designed to operate with reduced functionality rather than complete failure when components malfunction. Redundant power supplies, backup processing units, and fault detection mechanisms ensure continuous operation even during partial system failures, enhancing overall reliability and signal integrity.
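Graceful degradation can be quantified with simple arithmetic, assuming each element has its own power amplifier of equal output, that failed elements simply go silent, and that the survivors stay phase-aligned. Under those assumptions the coherent field at the beam peak scales with the number of active elements, so peak EIRP scales with its square: losing k of N elements changes EIRP by 20*log10((N - k)/N) dB, half from lost amplifier power and half from reduced array gain.

```python
import math


def eirp_change_db(n_elements: int, n_failed: int) -> float:
    # Each element drives its own equal-power PA; failed elements go silent and the
    # survivors stay phase-aligned, so the peak field scales with the active count
    # and peak EIRP with its square.
    if not 0 <= n_failed < n_elements:
        raise ValueError("n_failed must satisfy 0 <= n_failed < n_elements")
    active = n_elements - n_failed
    return 20 * math.log10(active / n_elements)


for failed in (0, 1, 4, 8, 16):
    print(f"{failed:2d} of 64 failed: {eirp_change_db(64, failed):6.2f} dB peak EIRP")
```

In this model losing 4 of 64 elements costs roughly half a dB of peak EIRP; random failures also raise sidelobe levels, which the calculation ignores.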

Key Industry Players in 5G Phased Array Market

Phased array technology for 5G deployment is currently in a growth phase, with the market expected to expand significantly as 5G networks continue to roll out globally. The competitive landscape is characterized by established telecommunications giants and semiconductor leaders developing increasingly sophisticated solutions. Huawei, Samsung, Qualcomm, Nokia, and Ericsson are at the forefront, having achieved high technical maturity in phased array antenna systems that enhance signal robustness through beamforming capabilities. MediaTek, Infineon, and GlobalFoundries are advancing component-level innovations, while research institutions like Georgia Tech and KAIST contribute to fundamental technological breakthroughs. The ecosystem is evolving toward more integrated, energy-efficient solutions with improved signal performance in challenging environments.

Huawei Technologies Co., Ltd.

Technical Solution: Huawei has developed advanced Massive MIMO phased array systems for 5G deployment with their MetaAAU technology. Their solution incorporates 384 antenna elements with innovative Extremely Large Antenna Array (ELAA) architecture, enabling precise beamforming capabilities. The company's phased array technology utilizes adaptive beamforming algorithms that dynamically adjust signal patterns based on real-time environmental conditions and user distribution. Huawei's systems employ advanced calibration techniques to maintain phase coherence across the array elements, which is crucial for signal robustness in challenging environments. Their MetaAAU technology demonstrates up to 30% wider coverage and 6dB higher uplink signal gain compared to traditional AAU solutions, significantly enhancing signal robustness in weak coverage scenarios. Additionally, Huawei implements AI-driven beam management that continuously optimizes signal quality by predicting user movement patterns and preemptively adjusting beam directions.

Strengths: Superior coverage extension in weak signal areas; excellent energy efficiency (approximately 30% reduction in power consumption); advanced AI-driven beam optimization for dynamic environments. Weaknesses: Higher implementation complexity requiring specialized expertise; potential security concerns in some markets limiting deployment options; relatively higher initial deployment costs compared to conventional antenna systems.

QUALCOMM, Inc.

Technical Solution: Qualcomm has developed comprehensive phased array solutions for 5G deployment through their QTM527 mmWave antenna modules and Snapdragon X65/X70 modem-RF systems. Their approach integrates phased array technology directly into both network infrastructure and end-user devices, creating a cohesive ecosystem that optimizes signal robustness across the entire communication chain. Qualcomm's phased array implementation features their proprietary Smart Transmit technology that dynamically balances power across multiple antenna elements to maximize signal strength while maintaining regulatory compliance. Their systems employ advanced beamforming techniques with up to 1024-QAM modulation support, enabling high data rates even in challenging signal conditions. Qualcomm's solution incorporates AI-enhanced beam selection algorithms that can predict optimal beam patterns based on environmental factors and usage patterns. Their mmWave modules support beam switching times of less than 5 microseconds, allowing for extremely responsive adaptation to changing signal conditions. Additionally, Qualcomm's phased arrays implement sophisticated power management techniques that optimize energy efficiency while maintaining signal quality.

Strengths: End-to-end optimization across both infrastructure and devices; superior mmWave performance with extended range capabilities; excellent power efficiency through adaptive algorithms. Weaknesses: Higher component costs affecting overall solution pricing; greater complexity in initial calibration and setup; more sensitive to physical blockage compared to sub-6GHz solutions.

Critical Patents in Phased Array Signal Processing

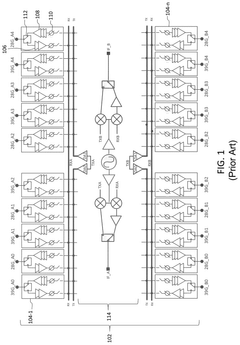

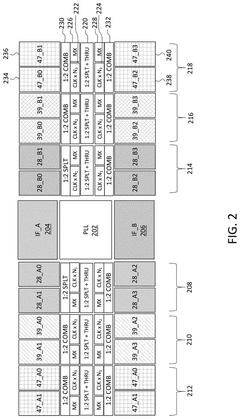

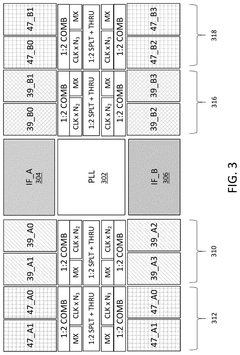

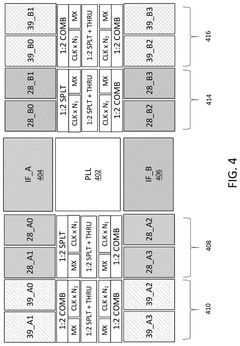

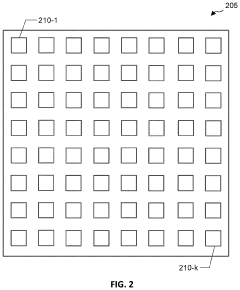

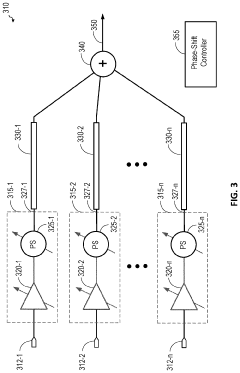

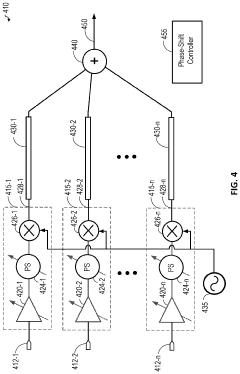

Tile-based phased-array architecture for multi-band phased-array design and communication method

Patent (Active): US12113301B2

Innovation

- A tile-based RFIC architecture in which each tile integrates a PLL, data stream circuitry, and front-end (FE) elements and is designed for a specific frequency band. The tiles pass data stream signals in a cascading sequence, and up/down-conversion mixers together with the FE elements convert signals between IF and RF, reducing signal distribution losses and power consumption.

Signal combiner

Patent (Pending): US20220207428A1

Innovation

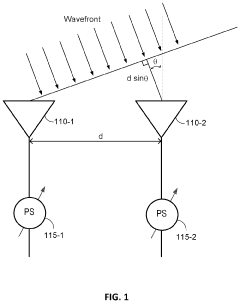

- A phased-array receiver system that includes multiple receiver elements, low noise amplifiers, phase shifters, and a combiner to electronically steer the receive direction by aligning signal phases and combining them effectively, using transmission lines to route signals from receiver elements to the combiner for constructive combination.
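The align-and-combine principle in this receiver can be illustrated with an idealized model, assuming a 64-element half-wavelength ULA, lossless combining, and digitally controlled phase shifters with a given bit resolution. The array size, arrival angle, and bit widths are assumptions for illustration, not parameters from the patent, and the LNAs and transmission lines are not modeled.

```python
import cmath
import math
from typing import Optional


def quantize_phase(phi: float, bits: int) -> float:
    # Round a phase (radians) to the nearest of 2**bits equally spaced settings
    step = 2 * math.pi / 2 ** bits
    return round(phi / step) * step


def combined_gain(n: int, arrival_deg: float, bits: Optional[int] = None) -> float:
    # Power gain (relative to one element) after phase-shifting and summing the
    # n element signals of a half-wavelength ULA; bits=None means ideal shifters
    s = math.sin(math.radians(arrival_deg))
    total = 0j
    for k in range(n):
        incoming = cmath.exp(1j * math.pi * s * k)  # signal phase at element k
        setting = -math.pi * s * k                   # shift that re-aligns it
        if bits is not None:
            setting = quantize_phase(setting, bits)
        total += incoming * cmath.exp(1j * setting)  # combiner sums aligned signals
    return abs(total) ** 2 / n


N, ANGLE = 64, 17.0
for bits in (None, 4, 3, 2, 1):
    g = combined_gain(N, ANGLE, bits)
    label = "ideal" if bits is None else f"{bits}-bit"
    print(f"{label:>6}: {10 * math.log10(max(g, 1e-12)):6.2f} dB")
```

With b-bit shifters every residual phase error is at most pi/2**b, so the combined power can never fall below N*cos(pi/2**b)**2; coarse shifters therefore trade a bounded gain loss for simpler hardware.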

Spectrum Allocation Impact on Phased Array Performance

Spectrum allocation significantly influences phased array performance in 5G networks, with different frequency bands offering distinct advantages and challenges. The millimeter wave (mmWave) spectrum (24-100 GHz) provides substantial bandwidth but requires sophisticated phased array implementations to overcome propagation limitations. These higher frequencies experience greater path loss and atmospheric absorption, necessitating more antenna elements and precise beamforming to maintain signal integrity.
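The frequency dependence can be sketched with the Friis free-space path loss between isotropic antennas, FSPL = 20*log10(4*pi*d*f/c). The 200 m link distance and the band choices are illustrative assumptions, and atmospheric absorption, rain, and blockage are ignored. Because array gain grows as 10*log10(N), offsetting the extra loss at one end of the link takes roughly (f/f_ref)**2 times as many elements; the shorter wavelength lets that many elements fit in the same physical aperture.

```python
import math

C = 299_792_458.0  # speed of light, m/s


def fspl_db(distance_m: float, freq_hz: float) -> float:
    # Friis free-space path loss between isotropic antennas
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / C)


def element_factor(extra_loss_db: float) -> float:
    # Growth in element count N needed for 10*log10(N) of extra array gain
    return 10 ** (extra_loss_db / 10)


DISTANCE_M = 200.0
REF_HZ = 3.5e9
for f_ghz in (3.5, 28.0, 39.0):
    loss = fspl_db(DISTANCE_M, f_ghz * 1e9)
    extra = loss - fspl_db(DISTANCE_M, REF_HZ)
    print(f"{f_ghz:5.1f} GHz: FSPL {loss:6.1f} dB, +{extra:4.1f} dB vs 3.5 GHz, "
          f"x{element_factor(extra):.0f} elements to compensate at one end")
```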

Lower frequency allocations (sub-6 GHz) offer better propagation characteristics but provide less bandwidth. Phased arrays operating in these bands can achieve wider coverage with fewer elements, though they deliver more modest data rates compared to mmWave implementations. This fundamental trade-off between coverage and capacity directly impacts deployment strategies and hardware requirements.

The mid-band spectrum (3.5-6 GHz), the upper part of the sub-6 GHz range, represents a compromise position, balancing reasonable propagation characteristics with moderate bandwidth availability. Phased arrays in this range demonstrate good performance in urban and suburban environments without the extreme density requirements of mmWave deployments.

Spectrum fragmentation across different regions presents additional challenges for phased array design. Global variations in frequency allocations necessitate region-specific array configurations, complicating equipment standardization and increasing manufacturing costs. For example, C-band allocations differ significantly between North America, Europe, and Asia, requiring tailored phased array designs for each market.

Channel bandwidth allocations within each spectrum band also impact array performance. Wider channels enable higher data rates but may require more sophisticated signal processing to maintain robustness against interference. The 100-400 MHz channels typical in mmWave deployments demand more complex beamforming algorithms compared to the narrower channels in sub-6 GHz bands.
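One concrete reason wide channels complicate analog beamforming is beam squint: a phase shifter reproduces the required time delay exactly only at the frequency it was set for, so for an array steered to theta0 at f_center, the beam peak at frequency f moves to sin(theta_f) = (f_center/f)*sin(theta0). The sketch assumes a 64-element half-wavelength ULA at 28 GHz with a 400 MHz channel steered to 45 degrees; these parameters are illustrative, and true-time-delay or digital beamforming can avoid the effect.

```python
import cmath
import math

C = 299_792_458.0  # speed of light, m/s


def squint_deg(steer_deg: float, f_center: float, f: float) -> float:
    # Beam-peak direction at frequency f for phases set at f_center
    return math.degrees(math.asin(f_center / f * math.sin(math.radians(steer_deg))))


def gain_toward(n: int, steer_deg: float, f_center: float, f: float,
                target_deg: float, spacing_m: float) -> float:
    # Power gain (relative to one element) toward target_deg at frequency f
    s0 = math.sin(math.radians(steer_deg))
    st = math.sin(math.radians(target_deg))
    total = 0j
    for k in range(n):
        shifter = -2 * math.pi * f_center / C * spacing_m * k * s0  # fixed at f_center
        wave = 2 * math.pi * f / C * spacing_m * k * st              # actual phase at f
        total += cmath.exp(1j * (wave + shifter))
    return abs(total) ** 2 / n


N, F_C, BW, STEER = 64, 28e9, 400e6, 45.0
SPACING = C / (2 * F_C)  # half wavelength at the center frequency
for f in (F_C - BW / 2, F_C, F_C + BW / 2):
    loss = 10 * math.log10(gain_toward(N, STEER, F_C, f, STEER, SPACING) / N)
    print(f"{f / 1e9:6.2f} GHz: peak at {squint_deg(STEER, F_C, f):5.2f} deg, "
          f"{loss:5.2f} dB toward {STEER} deg")
```

Here the band edges lose only a fraction of a dB because the fractional bandwidth is about 1.4%; the loss grows with array size, steering angle, and channel bandwidth.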

Spectrum sharing scenarios, increasingly common in 5G deployments, introduce additional complexity for phased array systems. Dynamic spectrum access requires arrays capable of rapidly adapting to changing frequency availability, potentially compromising performance optimization for any single band. Cognitive radio techniques integrated with phased array systems show promise in addressing these challenges, enabling more efficient spectrum utilization while maintaining signal robustness.

The licensing model for different spectrum allocations further influences deployment strategies. Exclusively licensed bands permit optimized phased array configurations with predictable interference profiles, while shared or unlicensed spectrum requires more adaptive approaches to maintain performance in variable interference environments.

Lower frequency allocations (sub-6 GHz) offer better propagation characteristics but provide less bandwidth. Phased arrays operating in these bands can achieve wider coverage with fewer elements, though they deliver more modest data rates compared to mmWave implementations. This fundamental trade-off between coverage and capacity directly impacts deployment strategies and hardware requirements.

The mid-band spectrum (3.5-6 GHz) represents a compromise position, balancing reasonable propagation characteristics with moderate bandwidth availability. Phased arrays in this range demonstrate good performance in urban and suburban environments without the extreme density requirements of mmWave deployments.

Spectrum fragmentation across different regions presents additional challenges for phased array design. Global variations in frequency allocations necessitate region-specific array configurations, complicating equipment standardization and increasing manufacturing costs. For example, C-band allocations differ significantly between North America, Europe, and Asia, requiring tailored phased array designs for each market.

Energy Efficiency Considerations in Deployment

Energy efficiency has emerged as a critical factor in the deployment of 5G phased array systems, directly affecting operational costs, environmental sustainability, and network performance. Phased array antenna systems in 5G networks consume significantly more power than the antenna systems of previous generations, because they use many more antenna elements and more complex signal processing. Commonly cited estimates suggest that a typical 5G base station consumes roughly 2-3 times as much power as its 4G counterpart, creating substantial challenges for network operators.

The energy consumption profile of phased array systems varies considerably with the deployment scenario. Dense urban deployments typically require more energy because of higher capacity demands and interference management needs. Suburban and rural implementations face different efficiency challenges related to coverage optimization. Reported figures suggest that beamforming, while enhancing signal robustness, adds an energy overhead of roughly 15-30%, depending on the implementation.

Advanced power management strategies have been developed to address these challenges. Adaptive power control mechanisms that dynamically adjust transmission power based on traffic load and channel conditions can reduce energy consumption by up to 25%. Sleep mode operations, which selectively deactivate antenna elements during low-traffic periods, have demonstrated energy savings of 30-40% in real-world deployments while maintaining acceptable signal quality.
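
EIRP offers a simple way to reason about element-level sleep modes. Suppose each active element keeps the same output power. N coherently combined elements then give total radiated power proportional to N and array gain proportional to N, so EIRP scales as N squared. Switching off half the elements therefore costs about 6 dB of EIRP while roughly halving RF output power. The sketch below applies that rule in a hypothetical policy that deactivates as many elements as the current link margin allows. The allowed element counts and the margins are assumptions. The model also ignores static baseband and cooling power, which real sleep-mode savings depend on as well.

```python
import math


def eirp_change_db(active: int, total: int) -> float:
    """EIRP change (dB) when only `active` of `total` elements transmit.

    Assumes fixed per-element power and ideal coherent combining, so EIRP ~ N**2.
    """
    return 20 * math.log10(active / total)


def choose_active_elements(total: int, link_margin_db: float,
                           options: tuple[int, ...] = (8, 16, 32, 64)) -> int:
    """Hypothetical sleep policy: fewest active elements whose EIRP loss fits the margin."""
    for n in sorted(options):
        if n <= total and -eirp_change_db(n, total) <= link_margin_db:
            return n
    return total  # no reduced configuration fits; keep every element on


for margin in (2.0, 7.0, 13.0, 20.0):  # illustrative link margins in dB
    n = choose_active_elements(64, margin)
    print(f"margin {margin:>4} dB -> {n:>2}/64 elements active, "
          f"EIRP change {eirp_change_db(n, 64):.1f} dB, RF power {n / 64:.0%} of full")
```

The policy is evaluated per traffic period. During low-traffic hours, users with large link margins let the array drop to a small subset of elements.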

Hardware innovations are equally important in improving energy efficiency. The transition from traditional silicon-based power amplifiers to Gallium Nitride (GaN) technology has yielded efficiency improvements of 15-20%. Additionally, integrated circuit designs that optimize power distribution across the phased array elements have reduced energy losses by approximately 10-15% compared to first-generation 5G hardware implementations.
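
A power amplifier's DC draw equals its RF output divided by its efficiency. The 15-20% improvement quoted for GaN therefore translates into different savings depending on whether it means percentage points or a relative gain, which the figure does not specify. The sketch below compares both readings for a hypothetical amplifier that starts at 40% efficiency. The 40% baseline and the 200 W total RF output are illustrative assumptions, not measured GaN or silicon values.

```python
def dc_power_w(rf_output_w: float, efficiency: float) -> float:
    """DC supply power needed to deliver rf_output_w at the given PA efficiency."""
    return rf_output_w / efficiency


def dc_saving_fraction(old_eff: float, new_eff: float) -> float:
    """Fractional cut in DC power for the same RF output when efficiency improves."""
    return 1 - old_eff / new_eff


rf_out = 200.0   # W, hypothetical total array RF output
baseline = 0.40  # hypothetical baseline PA efficiency
for label, improved in (("+15 percentage points", baseline + 0.15),
                        ("+15% relative", baseline * 1.15)):
    saving = dc_saving_fraction(baseline, improved)
    print(f"{label}: {dc_power_w(rf_out, baseline):.0f} W -> "
          f"{dc_power_w(rf_out, improved):.0f} W DC ({saving:.1%} less)")
```

Under these assumptions, the two readings differ by roughly a factor of two in DC power saved. When comparing amplifier technologies, it helps to state which definition a figure uses.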

The relationship between signal robustness and energy efficiency presents important trade-offs. Higher transmission power improves signal reliability but increases energy consumption, while sophisticated beamforming algorithms enhance signal quality at the cost of computational overhead. Field tests have demonstrated that optimized beamforming configurations can maintain 95% of maximum signal robustness while reducing energy consumption by up to 35% compared to maximum power configurations.
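
Link budgets are logarithmic, which partly explains why large energy savings can cost relatively little signal quality. Assume, for illustration only, that the 35% saving came entirely from radiated power. SNR would then drop by only about 1.9 dB. The text does not define how "signal robustness" is measured. The sketch below therefore uses Shannon capacity, B*log2(1 + SNR), as one possible stand-in metric. That choice and the SNR operating points are assumptions.

```python
import math


def snr_change_db(power_fraction: float) -> float:
    """SNR change (dB) when transmit power is scaled by power_fraction."""
    return 10 * math.log10(power_fraction)


def capacity_ratio(snr_db: float, power_fraction: float) -> float:
    """Shannon capacity at reduced power relative to full power, same bandwidth."""
    snr = 10 ** (snr_db / 10)
    return math.log2(1 + power_fraction * snr) / math.log2(1 + snr)


power_fraction = 0.65  # assumed: the 35% saving is all radiated power
print(f"SNR change: {snr_change_db(power_fraction):.2f} dB")
for snr_db in (0.0, 10.0, 20.0):  # illustrative operating points
    print(f"at {snr_db:>4} dB SNR: {capacity_ratio(snr_db, power_fraction):.1%} of full-power capacity")
```

Under this proxy, the penalty is smallest at high SNR, where capacity grows only logarithmically with power. Power-saving configurations therefore fit best on links that already have margin to spare.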

Looking forward, emerging technologies such as AI-driven predictive power management and reconfigurable intelligent surfaces (RIS) promise further efficiency gains. Early implementations of AI-based systems have demonstrated potential energy savings of 20-30% while maintaining comparable signal performance metrics. These advancements will be crucial as 5G networks continue to expand, particularly in meeting sustainability goals and controlling operational expenses.
