Compiler toolchains for RISC-V: GCC vs LLVM optimizations

AUG 25, 2025 · 9 MIN READ

RISC-V Compiler Evolution and Objectives

The RISC-V instruction set architecture has emerged as a significant open-source alternative to proprietary architectures, gaining momentum across various computing domains. The evolution of compiler technology for RISC-V represents a critical component in the architecture's adoption and performance optimization. Historically, compiler development for new architectures has followed established patterns of initial support followed by progressive optimization phases, and RISC-V is no exception.

The compiler landscape for RISC-V has evolved from basic functionality to increasingly sophisticated optimization capabilities. Initially, compiler support focused on fundamental instruction generation and correctness, with GCC being the first major compiler to provide comprehensive RISC-V support. As the architecture matured, LLVM support emerged, creating a dual-track ecosystem that offers developers alternative toolchains with different optimization philosophies and capabilities.

The technical objectives for RISC-V compilers center on several key dimensions. First, performance optimization remains paramount, with particular emphasis on leveraging RISC-V's extensible instruction set architecture to generate highly efficient code. This includes specialized optimizations for the various standard extensions (M, A, F, D, C) as well as custom extensions that implementers may develop.
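Both toolchains select these extensions through an ISA string passed as -march (for example -march=rv64gc, typically paired with -mabi=lp64d). The sketch below splits such a string into its base width, its single-letter extensions, and its underscore-separated multi-letter extensions. It expands the G shorthand to IMAFD plus Zicsr and Zifencei, as defined since the 2019 unprivileged specification. The function name parse_march is hypothetical. The sketch also deliberately ignores version suffixes (such as i2p1) and other corner cases that GCC and LLVM accept.

```python
import re

# rv32/rv64, then single-letter extensions, then optional "_zxxx"-style multi-letter ones.
# Simplified grammar (assumption): no version suffixes such as "i2p1".
MARCH_RE = re.compile(r"rv(32|64)([a-z]+)((?:_[a-z][a-z0-9]*)*)")


def parse_march(march: str) -> dict:
    """Hypothetical helper: split an ISA string such as 'rv64gc' or 'rv32imac_zba_zbb'."""
    m = MARCH_RE.fullmatch(march.lower())
    if m is None:
        raise ValueError(f"unrecognized -march string: {march!r}")
    letters = m.group(2)
    if letters[0] not in "ieg":
        raise ValueError("the base ISA must be i, e, or the g shorthand")
    single: list[str] = []
    multi = [ext for ext in m.group(3).split("_") if ext]
    for ch in letters:
        if ch == "g":
            # G = IMAFD plus Zicsr and Zifencei (2019 unprivileged spec onward).
            single.extend("imafd")
            multi.extend(["zicsr", "zifencei"])
        else:
            single.append(ch)
    return {"xlen": int(m.group(1)), "single": single, "multi": multi}
```

For example, parse_march("rv64gc") yields an XLEN of 64, the single-letter extensions i, m, a, f, d, c, and the multi-letter extensions zicsr and zifencei.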

Power efficiency represents another critical objective, particularly as RISC-V gains traction in embedded and IoT applications. Compiler optimizations that minimize energy consumption through instruction selection, scheduling, and code size reduction are increasingly important in these domains.

Code size optimization constitutes a third major objective, especially for embedded applications where memory footprint directly impacts system cost. The RISC-V compressed instruction extension (C) provides hardware support for this goal, but compiler intelligence in utilizing these instructions effectively remains essential.
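One way to check how well a compiler exploits the C extension is to count 16-bit and 32-bit instructions in a disassembly. In GNU objdump -d output for RISC-V, the encoding column shows four hex digits for a compressed instruction and eight for a standard one. The ratio can therefore be computed from the text alone. The sketch below assumes that output format, and the function name compressed_ratio is hypothetical.

```python
import re

# Assumed GNU objdump -d line shape: "   10074:\t1141   \taddi\tsp,sp,-16"
# (address, encoding in hex, then the mnemonic).
INSN_RE = re.compile(r"^\s*[0-9a-f]+:\s+([0-9a-f]+)\s+\S")


def compressed_ratio(disassembly: str) -> float:
    """Hypothetical helper: fraction of instructions that use 16-bit (C extension) encodings."""
    widths = []
    for line in disassembly.splitlines():
        m = INSN_RE.match(line)
        if m:
            # Each hex digit is 4 bits: 4 digits -> 16-bit, 8 digits -> 32-bit.
            widths.append(len(m.group(1)) * 4)
    if not widths:
        return 0.0
    return sum(1 for w in widths if w == 16) / len(widths)
```

Running the same source through each toolchain at -Os and comparing the ratios, alongside total section size, gives a rough view of how aggressively each compiler selects compressed forms.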

Cross-platform compatibility presents unique challenges and opportunities, as RISC-V implementations span from tiny microcontrollers to high-performance computing systems. Compilers must adapt optimization strategies across this spectrum while maintaining consistent behavior.

Looking forward, the technical trajectory for RISC-V compilers includes deeper integration with vector extensions (V), bit manipulation extensions (B), and other emerging ISA features. Additionally, auto-vectorization capabilities, profile-guided optimization, and link-time optimization represent areas where both GCC and LLVM continue to evolve their RISC-V support.
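The V extension is vector-length agnostic. Instead of assuming a fixed SIMD width, a vectorized loop asks the hardware on each pass how many elements (vl) it will process, a pattern known as strip-mining. The Python model below imitates that loop structure for SAXPY (y = a*x + y) to show the shape of code an auto-vectorizer targets. It is a sketch, not generated code. The parameter vlmax stands in for the hardware's VLMAX. The model also assumes vl = min(remaining, VLMAX), which is one of the values the V specification permits vsetvli to return.

```python
def strip_mined_saxpy(a: float, x: list[float], y: list[float],
                      vlmax: int) -> tuple[list[float], list[int]]:
    """Hypothetical model of a vector-length-agnostic SAXPY loop; returns (result, vl per pass)."""
    if vlmax < 1:
        raise ValueError("vlmax must be positive")
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    out = list(y)
    vls: list[int] = []
    i = 0
    while i < len(x):
        # Like vsetvli: request the remaining count, receive at most VLMAX elements
        # (assumes the implementation grants min(remaining, VLMAX)).
        vl = min(len(x) - i, vlmax)
        # One vector instruction's worth of work over vl lanes.
        for j in range(i, i + vl):
            out[j] = a * x[j] + out[j]
        vls.append(vl)
        i += vl
    return out, vls
```

With five elements and vlmax=2, the loop runs three passes with vl values 2, 2, and 1. Because the tail is handled by the final shorter pass, no separate scalar cleanup loop is needed.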

The comparative analysis of GCC versus LLVM optimization approaches reveals different strengths, with implications for various application domains and development workflows. Understanding these differences is crucial for developers and organizations making strategic decisions about their RISC-V toolchain investments.

Market Demand for RISC-V Compilation Solutions

The RISC-V architecture has grown significantly in recent years, driving demand for more sophisticated compilation solutions. Market research projects RISC-V shipments in the billions of units by 2025, with a compound annual growth rate exceeding 60% between 2020 and 2025. This rapid growth has created substantial demand for optimized compiler toolchains that can realize the architecture's performance potential.
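To put the quoted growth rate in perspective: a compound annual growth rate r sustained for n years multiplies the starting volume by (1 + r)^n. A rate of 60% over the five years from 2020 to 2025 therefore implies slightly more than a tenfold increase. The helper functions below are a minimal sketch of that arithmetic. Their names are illustrative, and no shipment figures are assumed.

```python
def growth_factor(cagr: float, years: int) -> float:
    """Total multiplication of volume after `years` at a constant annual rate."""
    return (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Constant annual rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1.0 / years) - 1.0


# 60% a year over five compounding years (2020 to 2025).
print(round(growth_factor(0.60, 5), 2))  # 10.49
```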

The market for RISC-V compilation solutions is primarily segmented into three key sectors: embedded systems, data centers, and consumer electronics. In the embedded systems space, demand is driven by IoT devices, automotive applications, and industrial automation, where code size optimization and energy efficiency are paramount concerns. These applications require compilers capable of generating highly optimized code that minimizes resource utilization.

Data center applications represent another significant market segment, where performance optimization is the primary concern. As organizations explore RISC-V as an alternative to x86 and ARM architectures for server deployments, the need for compilers that can deliver competitive performance has intensified. This segment values compilation solutions that can effectively leverage RISC-V's extensibility and customization capabilities.

Consumer electronics manufacturers are increasingly adopting RISC-V for various applications, from smartphones to wearables. This market segment demands compilation solutions that balance performance with energy efficiency, while supporting rapid development cycles and diverse software ecosystems.

Geographic analysis reveals that Asia-Pacific currently leads RISC-V adoption, with China making substantial investments in domestic RISC-V development. North America follows closely, driven by technology companies seeking alternatives to proprietary architectures. Europe shows growing interest, particularly in automotive and industrial applications.

Market surveys indicate that compiler performance optimization ranks among the top three concerns for RISC-V adopters, alongside ecosystem maturity and hardware availability. Organizations evaluating RISC-V implementation cite compiler optimization capabilities as a critical factor in their decision-making process, highlighting the strategic importance of advanced compilation solutions.

The market increasingly demands specialized optimizations for various RISC-V extensions, including vector processing (RVV), bit manipulation, and cryptographic extensions. This trend is creating opportunities for compiler solutions that can effectively target these extensions while maintaining compatibility with the base instruction set.

Current State and Challenges in RISC-V Compilers

The RISC-V compiler ecosystem has evolved significantly in recent years, with GCC and LLVM emerging as the two dominant toolchains. Both compilers support the base integer instruction sets (RV32I, RV64I) and most standard extensions, including M (integer multiplication/division), A (atomics), F (single-precision floating point), D (double-precision floating point), and C (compressed instructions). Support for newer extensions is less uniform. V (vector) and the bit-manipulation extensions (Zba, Zbb, Zbs) were ratified in 2021 and are supported in recent releases of both compilers, although code-generation quality for them is still maturing. P (packed SIMD) has not yet been ratified, and its compiler support remains at an early stage.

GCC for RISC-V has reached maturity with stable support in mainline versions since 7.1. It benefits from extensive testing in production environments and offers reliable code generation for most standard use cases. The GCC backend for RISC-V is particularly well-optimized for embedded systems and has been the default choice for many RISC-V hardware vendors due to its stability and predictable performance characteristics.

LLVM support for RISC-V has also progressed rapidly: the RISC-V backend was promoted from an experimental to an official target in LLVM 9.0. LLVM is often credited with more aggressive optimization defaults, although results vary by workload. Its modular architecture runs target-independent optimizations on LLVM IR before the RISC-V backend lowers it to machine code, which makes it comparatively easy to experiment with new instruction set extensions. This design aligns well with RISC-V's extensible nature and has made LLVM increasingly popular among developers working on custom extensions.

Despite these advancements, both compiler toolchains face significant challenges. One major issue is the fragmentation of extension support, with different vendors implementing proprietary extensions that lack standardized compiler support. This creates compatibility issues and hinders the development of portable software across different RISC-V implementations.

Performance optimization remains another critical challenge. While both compilers perform adequately for general-purpose code, specialized workloads like machine learning, cryptography, and signal processing often require hand-tuned assembly or intrinsics to achieve optimal performance. The auto-vectorization capabilities, particularly important for high-performance computing, still lag behind those available for more established architectures like x86 and ARM.

The rapidly evolving RISC-V specification presents additional complications for compiler developers. As new extensions are ratified, compilers must quickly adapt to support them while maintaining backward compatibility. This constant evolution creates a moving target for optimization strategies and code generation patterns.

Testing and validation infrastructure also presents challenges. The diversity of RISC-V implementations means that compilers must generate correct code across a wide range of microarchitectures with different performance characteristics, making comprehensive testing difficult. The lack of standardized benchmarking suites specifically designed for RISC-V further complicates performance evaluation and optimization efforts.

Comparative Analysis of GCC vs LLVM Optimization Techniques

01 Compiler optimization techniques for performance improvement

GCC and LLVM implement a range of optimization techniques to improve execution performance, including loop optimizations, function inlining, constant propagation, dead code elimination, and instruction scheduling. These techniques analyze code patterns and transform them into more efficient machine code while preserving program semantics.

- Code optimization techniques in compiler toolchains: Compiler toolchains like GCC and LLVM implement various code optimization techniques to improve program performance. These techniques include loop optimization, function inlining, constant propagation, dead code elimination, and instruction scheduling. By analyzing the program structure and applying these optimizations, compilers can generate more efficient machine code that executes faster and uses fewer resources.

- Intermediate representation and optimization passes: Modern compiler toolchains use intermediate representations (IR) as a bridge between source code and target machine code. GCC uses GIMPLE and RTL. LLVM performs target-independent optimization on LLVM IR, and its backend then lowers that IR through SelectionDAG or GlobalISel to Machine IR. These representations enable multiple optimization passes that progressively transform and improve the code. Each pass focuses on specific aspects like control flow analysis, data flow optimization, or target-specific optimizations, allowing for a modular and extensible optimization framework.

- Hardware-specific and platform-aware optimizations: Compiler toolchains implement hardware-specific optimizations that leverage features of target architectures. These include SIMD instruction generation, cache optimization, branch prediction improvements, and processor-specific tuning. GCC and LLVM can generate code optimized for specific CPU models, taking advantage of unique instruction sets and hardware capabilities to maximize performance on particular platforms.

- Link-time and whole-program optimization: Link-time optimization (LTO) and whole-program optimization techniques allow compilers to perform optimizations across multiple source files or entire programs. By analyzing the complete program at link time rather than individual compilation units, these techniques enable more aggressive optimizations like function specialization, interprocedural constant propagation, and global dead code elimination. Both GCC and LLVM support these capabilities to produce more efficient executables.

- Profile-guided and feedback-directed optimization: Profile-guided optimization (PGO) and feedback-directed optimization techniques use runtime profiling data to guide the optimization process. By collecting information about program behavior during execution, such as branch frequencies and hot paths, compilers can make more informed decisions about code layout, inlining, and other optimizations. This approach allows GCC and LLVM to optimize code based on actual usage patterns rather than static heuristics.
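The interplay between constant propagation and dead code elimination can be shown on a toy three-address IR. The sketch below is not GIMPLE or LLVM IR. It assumes each variable is assigned exactly once, as in SSA form. It first folds operations whose operands are known constants, then runs a backward liveness pass that deletes instructions whose results are never used. The instruction format and the function names are hypothetical.

```python
# Toy three-address IR (hypothetical): (dest, op, lhs, rhs).
# Operands are variable names (str) or integer constants.
# Assumes SSA-like form: every variable is assigned exactly once.
Instr = tuple[str, str, object, object]

OPS = {"add": lambda p, q: p + q, "mul": lambda p, q: p * q}


def propagate_and_fold(code: list[Instr]) -> list[Instr]:
    """Substitute known constants into operands and fold fully constant operations."""
    known: dict[str, int] = {}
    out: list[Instr] = []
    for dest, op, a, b in code:
        if op == "const":
            known[dest] = a
            out.append((dest, op, a, b))
            continue
        a = known.get(a, a) if isinstance(a, str) else a
        b = known.get(b, b) if isinstance(b, str) else b
        if isinstance(a, int) and isinstance(b, int):
            known[dest] = OPS[op](a, b)
            out.append((dest, "const", known[dest], None))
        else:
            out.append((dest, op, a, b))
    return out


def eliminate_dead_code(code: list[Instr], live_out: set[str]) -> list[Instr]:
    """Backward liveness sweep: keep an instruction only if its result is (transitively) used."""
    live = set(live_out)
    kept: list[Instr] = []
    for dest, op, a, b in reversed(code):
        if dest in live:
            kept.append((dest, op, a, b))
            live.update(v for v in (a, b) if isinstance(v, str))
    kept.reverse()
    return kept


program = [
    ("x", "const", 4, None),
    ("y", "const", 5, None),
    ("t", "mul", "x", "y"),
    ("u", "add", "t", "n"),     # n is an unknown input
    ("dead", "add", "x", "n"),  # result never used
]
print(eliminate_dead_code(propagate_and_fold(program), {"u"}))  # [('u', 'add', 20, 'n')]
```

Folding turns t = x * y into the constant 20, which removes the last use of x, y, and t. The backward pass can then delete all three definitions along with the unused dead.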

02 Intermediate representation and code generation strategies

Compiler toolchains use intermediate representations (IR) to facilitate optimization across different source languages and target architectures. LLVM IR and GCC's GIMPLE allow for language-agnostic optimizations before final code generation. These strategies enable effective translation from high-level code to optimized machine code through multiple transformation phases.

03 Hardware-specific and target architecture optimizations

Compiler toolchains implement optimizations tailored to specific hardware features and target architectures. These include vectorization for SIMD instructions, pipeline scheduling, register allocation, and architecture-specific code generation. GCC and LLVM can generate code optimized for different CPU models, instruction sets, and hardware capabilities.

04 Profile-guided and feedback-directed optimizations

These techniques use runtime profiling data to guide compiler decisions. By collecting execution statistics during training runs, compilers can identify hot paths, optimize branch prediction, and improve code layout. These approaches allow GCC and LLVM to base optimization decisions on actual program behavior rather than on static analysis alone.

05 Link-time and whole-program optimization frameworks

These advanced techniques operate across compilation units during the linking phase. They enable interprocedural analysis, function specialization, and global dead code elimination that would not be possible when compiling individual source files in isolation. LLVM's LTO (in both full and ThinLTO modes) and GCC's LTO with WHOPR partitioning allow more aggressive optimization by treating the entire program as one optimization unit.
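Profile-guided optimization and LTO are typically combined in a build, run, and rebuild cycle. The sketch below returns that command sequence for each toolchain using standard flags. For GCC these are -flto, -fprofile-generate, and -fprofile-use. For Clang they are -flto=thin, -fprofile-instr-generate, llvm-profdata merge, and -fprofile-instr-use. Several details are assumptions: the cross-toolchain name, the use of lld as the linker, and the file names. A real cross build would also pass a sysroot. The training run must execute on RISC-V hardware or under an emulator such as qemu-riscv64.

```python
def pgo_lto_steps(toolchain: str, sources: list[str], exe: str = "app") -> list[list[str]]:
    """Hypothetical helper: instrumented build, training run, and optimized rebuild commands."""
    if toolchain == "gcc":
        cc = ["riscv64-unknown-linux-gnu-gcc"]  # assumed cross-toolchain name
        return [
            [*cc, "-O2", "-flto", "-fprofile-generate", *sources, "-o", exe],
            [f"./{exe}"],  # run on RISC-V hardware or qemu-riscv64; writes .gcda files
            [*cc, "-O2", "-flto", "-fprofile-use", *sources, "-o", exe],
        ]
    if toolchain == "clang":
        # Assumes lld is available for LTO linking when cross-compiling.
        cc = ["clang", "--target=riscv64-unknown-linux-gnu", "-fuse-ld=lld"]
        profdata = f"{exe}.profdata"
        return [
            [*cc, "-O2", "-flto=thin", "-fprofile-instr-generate", *sources, "-o", exe],
            [f"./{exe}"],  # writes default.profraw unless LLVM_PROFILE_FILE is set
            ["llvm-profdata", "merge", f"-output={profdata}", "default.profraw"],
            [*cc, "-O2", "-flto=thin", f"-fprofile-instr-use={profdata}", *sources, "-o", exe],
        ]
    raise ValueError(f"unknown toolchain: {toolchain!r}")


for step in pgo_lto_steps("clang", ["main.c", "util.c"]):
    print(" ".join(step))
```

With GCC, profile data collected on a separate target board may need to be copied back to the build tree before the rebuild. The GCOV_PREFIX environment variable can redirect where the .gcda files are written on the target.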

Key Organizations in RISC-V Compiler Ecosystem

The RISC-V compiler toolchain landscape is currently in a growth phase, with market size expanding as RISC-V adoption increases across embedded systems, IoT, and data centers. While the technology is maturing rapidly, it remains less optimized than established architectures. IBM and Stream Computing are leading commercial development, with Stream Computing's STCP920 AI computing card demonstrating specialized RISC-V implementations. Academic institutions like Fudan University and the Institute of Software, Chinese Academy of Sciences are contributing significant research to compiler optimizations. The ecosystem shows a competitive dynamic between GCC's established presence and LLVM's growing adoption, with companies increasingly investing in custom optimization techniques to leverage RISC-V's extensible architecture for specific workloads.

International Business Machines Corp.

Technical Solution: IBM has made significant contributions to RISC-V compiler technology through both GCC and LLVM toolchains. Their approach emphasizes enterprise-grade reliability and performance optimization for server and high-performance computing workloads. IBM's compiler implementations feature advanced loop optimization techniques, function inlining strategies, and interprocedural analysis specifically tailored for RISC-V. Their LLVM-based toolchain includes custom optimization passes for RISC-V that leverage IBM's extensive experience with POWER architecture optimization. IBM has developed specialized profile-guided optimization (PGO) techniques for RISC-V that enable dynamic feedback-directed compilation, resulting in significant performance improvements for specific workloads. Additionally, IBM has contributed to the development of OpenMP and OpenACC support in RISC-V compilers, enabling efficient parallel programming on RISC-V multicore systems.

Strengths: Enterprise-grade reliability, extensive experience with compiler optimization for RISC architectures, and strong focus on high-performance computing workloads. Weaknesses: Optimizations may be biased toward server-class implementations rather than embedded or low-power RISC-V variants, potentially limiting applicability across the full RISC-V ecosystem.

Tianjin Kylin Information Technology Corp

Technical Solution: Kylin has developed specialized compiler toolchain optimizations for RISC-V architectures with a focus on security and reliability for operating system development. Their compiler technology emphasizes both GCC and LLVM toolchains with particular attention to security-oriented optimizations. Kylin's approach includes enhanced static analysis capabilities integrated directly into the compilation process, identifying potential security vulnerabilities during code generation. Their LLVM implementation features specialized optimization passes for RISC-V that focus on maintaining security properties while maximizing performance. Kylin has developed custom optimization techniques for system-level code, including kernel and driver development, with specific attention to interrupt handling efficiency and context switching performance on RISC-V architectures. Additionally, they have contributed significant improvements to both toolchains for supporting secure compilation practices, including stack protection mechanisms and control-flow integrity features specifically adapted for RISC-V.

Strengths: Strong focus on security and reliability optimizations, specialized expertise in operating system development, and balanced approach between security and performance. Weaknesses: May prioritize security features over raw performance in some optimization decisions, potentially resulting in less aggressive optimizations compared to competitors focused primarily on performance.

Core Optimization Algorithms and Implementation Details

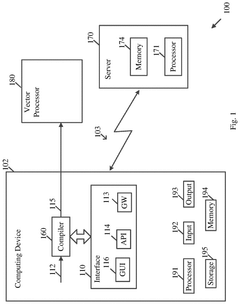

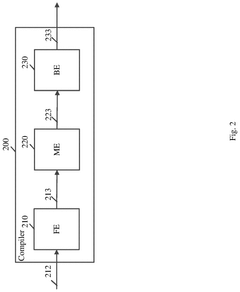

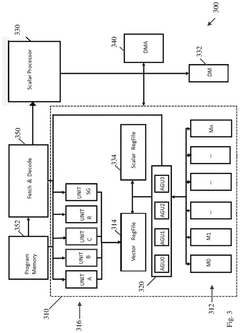

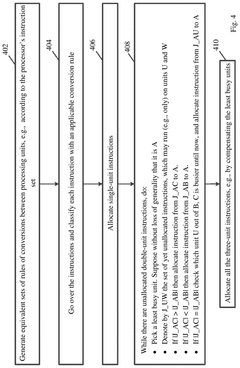

Apparatus, system, and method of compiling code for a processor

Patent (Pending): US20250028509A1

Innovation

- The development of a compiler system that includes a Vector Micro-Code Processor (VMP) OpenCL compiler, utilizing a Low Level Virtual Machine (LLVM) based compilation scheme, to efficiently compile source code into target code optimized for vector processors. This involves a front-end for parsing OpenCL C-code, a middle-end for optimizations, and a back-end for generating target-specific machine code.

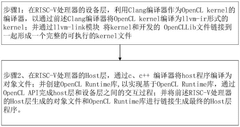

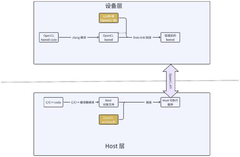

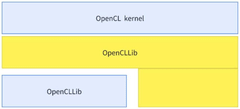

Method for realizing OpenCL on RISC-V processor

Patent (Pending): CN118860374A

Innovation

- Implements OpenCL on a RISC-V processor. OpenCL kernels are compiled to LLVM IR with the Clang compiler and linked with the llvm-link tool into a complete executable kernel file. An OpenCL library and a runtime library are developed with support for vector instructions and the processor's memory-access characteristics. Compilation and execution scheduling of OpenCL kernels are optimized, and LLVM passes gather statistics on vector and memory characteristics to drive optimization for the RISC-V architecture.
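The patent describes LLVM passes that gather statistics on vector and memory characteristics. A real pass would be written in C++ against the LLVM API. As a language-neutral illustration only, the Python sketch below scans textual LLVM IR and counts three things: instructions, memory operations (load/store), and instructions that mention a vector type. The vector count includes scalable types such as <vscale x 4 x float>, which LLVM uses for RISC-V vector code. This is not the patent's method, and the regex-based parsing is a simplification that ignores many IR constructs. The function name ir_stats is hypothetical.

```python
import re
from collections import Counter

# Fixed-width "<4 x float>" or scalable "<vscale x 4 x float>" vector types.
VECTOR_TYPE_RE = re.compile(r"<\s*(?:vscale\s+x\s+)?\d+\s+x\s+")
# Module-level lines that are not instructions (simplified list, an assumption).
SKIP_PREFIXES = ("define", "declare", "}", "@", "!", "target", "attributes", "source_filename")


def ir_stats(ir_text: str) -> Counter:
    """Hypothetical helper: count instructions, loads/stores, and vector-typed instructions."""
    stats: Counter = Counter()
    for raw in ir_text.splitlines():
        line = raw.split(";", 1)[0].strip()  # drop IR comments
        if not line or line.endswith(":") or line.startswith(SKIP_PREFIXES):
            continue  # skip blanks, block labels, and non-instruction lines
        # Value-producing instructions look like "%v = opcode ...".
        body = line.split("=", 1)[1].strip() if line.startswith("%") and "=" in line else line
        opcode = body.split()[0]
        stats["instructions"] += 1
        if opcode in ("load", "store"):
            stats["memory"] += 1
        if VECTOR_TYPE_RE.search(line):
            stats["vector"] += 1
    return stats
```

Feeding it the IR of a small kernel (for example, one produced with clang -S -emit-llvm) gives a quick picture of how much of the kernel was vectorized and how memory-bound it is.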

Performance Benchmarking Methodologies

Effective performance benchmarking is crucial for comparing compiler optimizations between GCC and LLVM for RISC-V architectures. A comprehensive benchmarking methodology must incorporate standardized test suites that represent real-world workloads across various domains, including embedded systems, high-performance computing, and general-purpose applications relevant to RISC-V implementations.

The SPEC CPU benchmark suite serves as a primary industry standard for evaluating compiler performance, and its integer and floating-point components expose different optimization capabilities. For embedded-class RISC-V targets, CoreMark is widely used, although it is not specific to RISC-V. The RISC-V architectural test suite, by contrast, checks ISA conformance rather than performance. It is therefore better suited to confirming that each compiler's output is correct than to ranking optimization quality.

Benchmarking methodologies should employ consistent measurement techniques across multiple dimensions: execution time, code size, memory usage, and power consumption. These metrics must be collected under controlled conditions with statistical rigor, typically running each test multiple times to account for system variability and reporting confidence intervals alongside mean values.
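A minimal sketch of that reporting step takes repeated timings for one benchmark and computes the mean with an approximate 95% confidence interval. It assumes the runs are independent and uses a normal approximation (z = 1.96). For the handful of runs typical on slow RISC-V boards, a Student's t critical value gives a wider and more appropriate interval. The function name summarize and the sample timings are hypothetical.

```python
import statistics
from math import sqrt


def summarize(samples: list[float], z: float = 1.96) -> tuple[float, float, float]:
    """Hypothetical helper: (mean, low, high) with an approximate 95% confidence interval."""
    if len(samples) < 2:
        raise ValueError("need at least two runs to estimate variability")
    mean = statistics.fmean(samples)
    # Standard error of the mean, scaled by a normal-approximation critical value.
    half_width = z * statistics.stdev(samples) / sqrt(len(samples))
    return mean, mean - half_width, mean + half_width


# Placeholder wall-clock times (seconds) for one benchmark built by one compiler.
mean, low, high = summarize([1.92, 1.95, 1.90, 1.97, 1.93])
print(f"{mean:.3f} s (95% CI {low:.3f} to {high:.3f})")
```

When the intervals for the GCC and LLVM builds overlap substantially, the measured difference is unlikely to be meaningful without more runs.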

Cross-compilation scenarios require special consideration in the benchmarking methodology. Given the same compiler version, flags, and target (-march/-mabi), a cross compiler running on an x86 host and a native compiler running on RISC-V hardware should generally emit the same code. What differs is compilation time and the defaults each toolchain was configured with. The methodology should therefore document the exact compiler version, flags, optimization level, and target architecture string used for each test to ensure reproducibility.
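To make that documentation mechanical, each benchmark result can carry a small record of its build configuration. The sketch below shows one hypothetical layout. The BuildRecord class and its fields are illustrative, not a standard format. It serializes the record to JSON and derives a short fingerprint so that result tables can reference an exact configuration. Both gcc and clang accept --version, which capture_version relies on.

```python
import hashlib
import json
import subprocess
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class BuildRecord:
    """Hypothetical record of how one benchmark binary was built."""
    compiler: str             # e.g. "clang" or "riscv64-unknown-linux-gnu-gcc"
    version: str              # first line of `<compiler> --version`
    host: str                 # where the compiler ran, e.g. "x86_64" or "riscv64"
    march: str                # -march value, e.g. "rv64gc"
    mabi: str                 # -mabi value, e.g. "lp64d"
    flags: tuple[str, ...] = ()

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    def fingerprint(self) -> str:
        """Short, stable identifier derived from the full configuration."""
        return hashlib.sha256(self.to_json().encode()).hexdigest()[:12]


def capture_version(compiler: str) -> str:
    """Return the first line of the compiler's --version output (works for gcc and clang)."""
    result = subprocess.run([compiler, "--version"], capture_output=True, text=True, check=True)
    return result.stdout.splitlines()[0]
```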

Microbenchmarks targeting specific compiler optimizations provide granular insights into particular strengths and weaknesses of each toolchain. These should include loop optimizations, vectorization capabilities, link-time optimization effectiveness, and interprocedural analysis performance. Complementing these focused tests, application-level benchmarks evaluate how optimizations interact in complex codebases.

A robust methodology must also consider compilation time as a practical metric, particularly for development environments where build speed impacts productivity. The trade-off between compilation time and runtime performance represents an important consideration for toolchain selection in different development contexts.
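A break-even estimate makes the trade-off concrete. Suppose a more expensive optimization setting adds C seconds to each build and saves s seconds per program run. It then recovers its build cost after C / s runs, rounded up. The sketch below encodes that arithmetic. It ignores developer wait time and other costs, and the numbers in the usage line are placeholders, not measurements. The function name break_even_runs is hypothetical.

```python
import math


def break_even_runs(extra_compile_s: float, saved_per_run_s: float) -> int:
    """Hypothetical helper: runs needed before a slower build's runtime savings repay its cost."""
    if saved_per_run_s <= 0:
        raise ValueError("the optimized build must save time per run")
    return math.ceil(extra_compile_s / saved_per_run_s)


# Placeholder numbers: a setting that adds 30 s per build and saves 0.5 s per run.
print(break_even_runs(30.0, 0.5))  # 60
```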

Finally, the benchmarking approach should include a systematic analysis of generated assembly code to identify qualitative differences in optimization strategies between GCC and LLVM. This analysis helps explain performance variations and provides insights into how each compiler's internal architecture influences its optimization capabilities for the RISC-V instruction set.
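A simple starting point for that analysis is to compare instruction-mnemonic histograms of the same function compiled by each toolchain. The sketch below parses GNU objdump -d style output. Note that objdump prints compressed instructions under their base mnemonics (addi rather than c.addi) unless -M no-aliases is given. The function names are hypothetical.

```python
import re
from collections import Counter

# Address, encoding, then mnemonic, as in GNU objdump -d output (assumed format).
INSN_RE = re.compile(r"^\s*[0-9a-f]+:\s+[0-9a-f]+\s+(\S+)")


def mnemonic_histogram(disassembly: str) -> Counter:
    """Hypothetical helper: count how often each mnemonic appears in an objdump listing."""
    return Counter(m.group(1) for line in disassembly.splitlines() if (m := INSN_RE.match(line)))


def histogram_diff(gcc: Counter, llvm: Counter) -> dict[str, int]:
    """Per-mnemonic count difference (LLVM minus GCC), omitting mnemonics with equal counts."""
    return {k: llvm[k] - gcc[k] for k in sorted(set(gcc) | set(llvm)) if llvm[k] != gcc[k]}
```

Large differences in loads and stores, branches, or vector mnemonics point to the places where reading the assembly side by side is most likely to explain a performance gap.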

Ecosystem Integration and Toolchain Compatibility

The RISC-V ecosystem's growth depends significantly on the integration capabilities of its compiler toolchains. GCC and LLVM demonstrate different approaches to ecosystem compatibility, with GCC offering robust support for traditional development environments while LLVM provides more flexible integration options for modern tooling landscapes.

GCC's toolchain for RISC-V has established strong compatibility with existing GNU/Linux development environments, making it particularly valuable for embedded systems and legacy codebases. Its integration with the GNU build system provides seamless interaction with autotools, make, and other traditional build infrastructures. However, GCC's monolithic architecture can present challenges when integrating with newer development paradigms and cloud-based compilation services.

LLVM's modular design offers greater flexibility for ecosystem integration, particularly in heterogeneous computing environments. Its library-based architecture allows components to be used independently, facilitating integration with IDEs, static analysis tools, and just-in-time compilation frameworks. This modularity has made LLVM a common choice for companies developing custom tooling around RISC-V, especially in specialized domains like AI accelerators and domain-specific architectures.

Cross-platform development presents different integration challenges for the two toolchains. A single Clang binary can generate RISC-V code on any supported host by selecting the target with the --target option, whereas GCC must be built as a separate cross toolchain for each target triple (for example, riscv64-linux-gnu-gcc or riscv64-unknown-elf-gcc), which makes cross-platform setups more involved. This distinction becomes particularly relevant as RISC-V hardware targets diversify across embedded, mobile, and server applications.
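The difference shows up directly in how a cross-compilation command is assembled. In this Python sketch, GCC's target triple is part of the driver name, while Clang receives it through --target; the triple, the rv64gc/lp64d defaults, and the sysroot path are placeholders. Clang still needs target headers and libraries to build and link, which are typically supplied with --sysroot, an option both drivers accept.

```python
def cross_compile_command(compiler, triple, sysroot=None, march="rv64gc", mabi="lp64d"):
    """Build a cross-compilation command line for a RISC-V target triple.

    GCC: one toolchain per target, so the triple is baked into the driver name.
    Clang: one driver for all targets; the triple is passed with --target.
    """
    if compiler == "gcc":
        cmd = [f"{triple}-gcc"]
    elif compiler == "clang":
        cmd = ["clang", f"--target={triple}"]
    else:
        raise ValueError(f"unknown compiler: {compiler}")
    cmd += [f"-march={march}", f"-mabi={mabi}"]
    if sysroot:
        cmd.append(f"--sysroot={sysroot}")  # target headers and libraries
    return cmd
```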

IDE integration represents another critical aspect of ecosystem compatibility. LLVM's libclang library and the clangd language server expose rich interfaces that development environments such as Visual Studio Code, CLion, and Eclipse can use for code completion, refactoring, and static analysis. GCC has made progress in this area but still lags in offering comprehensive programmatic interfaces for IDE integration.
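clangd, the language server built on Clang's libraries and used by editor plugins such as the clangd extension for Visual Studio Code, learns each file's exact compiler flags from a JSON Compilation Database (compile_commands.json) whose entries hold directory, file, and arguments fields. The Python sketch below writes such a file for a RISC-V cross build; the source paths, build directory, and the use of clang with --target=riscv64-linux-gnu are placeholders. Build systems such as CMake can generate this file themselves (CMAKE_EXPORT_COMPILE_COMMANDS), so hand-writing it mainly helps custom build scripts.

```python
import json
import os


def compilation_database(sources, build_dir, flags):
    """Emit compile_commands.json content for a RISC-V cross build.

    Each entry records the working directory, the source file, and the full
    argument list, including the target triple, so the language server parses
    the code exactly as the cross compiler would.
    """
    entries = []
    for src in sources:
        obj = os.path.splitext(os.path.basename(src))[0] + ".o"
        entries.append({
            "directory": build_dir,
            "file": src,
            "arguments": ["clang", "--target=riscv64-linux-gnu", *flags, "-c", src, "-o", obj],
            "output": obj,
        })
    return json.dumps(entries, indent=2)
```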

For continuous integration and deployment pipelines, the two toolchains present different integration profiles. Both GCC and Clang report diagnostics in the same GNU-style file:line:column: severity: message form, so basic log parsing works with either, while LLVM's modular design makes it easier to embed compiler components directly in automated testing and analysis tools. GCC's mature but less flexible architecture may require additional tooling to achieve the same level of automation in modern DevOps environments.
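Because GCC and Clang share the GNU-style file:line:column: severity: message diagnostic format, a CI job can turn either compiler's output into structured records with the same parser. This Python sketch assumes POSIX-style paths without colons and ignores the source-excerpt and caret lines both compilers print; the warnings_as_errors switch is a hypothetical CI policy setting.

```python
import re
from typing import NamedTuple

# GNU-style diagnostic line shared by GCC and Clang.
DIAG_RE = re.compile(
    r"^(?P<file>[^:\n]+):(?P<line>\d+):(?P<column>\d+): "
    r"(?P<severity>fatal error|error|warning|note): (?P<message>.*)$"
)


class Diagnostic(NamedTuple):
    file: str
    line: int
    column: int
    severity: str
    message: str


def parse_diagnostics(stderr_text):
    """Extract structured diagnostics, skipping context and caret lines."""
    diags = []
    for raw in stderr_text.splitlines():
        m = DIAG_RE.match(raw)
        if m:
            diags.append(Diagnostic(m["file"], int(m["line"]), int(m["column"]),
                                    m["severity"], m["message"]))
    return diags


def ci_should_fail(diags, warnings_as_errors=False):
    """Fail the pipeline on errors, and optionally on warnings."""
    blocking = {"error", "fatal error"} | ({"warning"} if warnings_as_errors else set())
    return any(d.severity in blocking for d in diags)
```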

The emergence of language servers and cloud-based development environments has further highlighted the differences in toolchain compatibility. LLVM's architecture aligns well with these distributed development models, while GCC continues to evolve to meet these new integration challenges in the expanding RISC-V ecosystem.
