RISC-V in data center offloads: DPUs, NICs, and storage

AUG 25, 2025 · 9 MIN READ

RISC-V Evolution and Data Center Offload Goals

RISC-V has emerged as a transformative force in computing architecture since its inception at UC Berkeley in 2010. This open, royalty-free instruction set architecture (ISA) has evolved from an academic project into a widely adopted industry standard, with significant implications for data center infrastructure. Its evolution has been characterized by a modular design philosophy that allows customization while maintaining compatibility across implementations, a feature particularly valuable for specialized data center offload applications.

The trajectory of RISC-V development has accelerated dramatically in recent years, with the establishment of the RISC-V Foundation in 2015 (now RISC-V International) marking a pivotal moment in its commercialization. The subsequent ratification of the base ISA specification in 2019 provided the stability necessary for enterprise adoption, while extensions for vector processing, bit manipulation, and cryptography have enhanced its applicability to data center workloads.

In the context of data center offloads, RISC-V aims to address several critical challenges facing modern infrastructure. Primary among these is the need to overcome the performance limitations of traditional CPU-centric architectures when handling data-intensive tasks such as networking, storage, and security processing. By offloading these functions to specialized processors, data centers can achieve higher throughput, lower latency, and improved energy efficiency.

The strategic goals for RISC-V in data center offloads encompass both technical and economic dimensions. Technically, RISC-V seeks to provide a flexible computing foundation for DPUs (Data Processing Units), SmartNICs (programmable network interface cards), and storage controllers that can be optimized for specific workloads while remaining programmable. This adaptability is crucial as data centers increasingly require customized acceleration for diverse applications ranging from AI inference to database operations.

Economically, RISC-V aims to disrupt the vendor lock-in prevalent in proprietary architectures, fostering an open ecosystem that reduces costs and accelerates innovation. By enabling semiconductor companies and system integrators to design purpose-built solutions without licensing fees, RISC-V potentially lowers the barrier to entry for creating specialized data center components.

The long-term vision for RISC-V in data center offloads extends beyond mere performance improvements to fundamentally reimagining data center architecture. As computational demands continue to diversify, the goal is to create a heterogeneous computing environment where specialized RISC-V cores handle specific tasks optimally, working in concert with general-purpose CPUs. This disaggregation of computing resources promises not only technical benefits but also more efficient resource utilization and improved scalability for next-generation data centers.

Market Demand Analysis for RISC-V Based Offload Solutions

The data center landscape is experiencing a significant transformation driven by the need for more efficient, scalable, and specialized computing solutions. RISC-V based offload solutions, including Data Processing Units (DPUs), Network Interface Cards (NICs), and storage controllers, are emerging as potential game-changers in this evolving market. Current market analysis indicates a growing demand for these solutions across various segments of the data center ecosystem.

Cloud service providers are increasingly seeking heterogeneous computing architectures to optimize workload performance while reducing operational costs. According to recent industry reports, the global market for data center accelerators is projected to grow at a compound annual growth rate of 36.7% from 2021 to 2026, reaching $21.2 billion. Within this broader market, RISC-V based solutions are gaining traction due to their customizability and potential for cost reduction.
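The growth figures quoted above can be sanity-checked with a short calculation. The sketch below assumes five compounding periods between 2021 and 2026 (an assumption; the cited reports are not specified here) and derives the 2021 base that a 36.7% CAGR and a $21.2 billion endpoint would imply, which comes to roughly $4.4 billion. The function names are illustrative only.

```python
def implied_base(final_value: float, cagr: float, years: int) -> float:
    """Starting value implied by an end value and a compound annual growth rate."""
    return final_value / (1.0 + cagr) ** years


def project(base: float, cagr: float, years: int) -> list[float]:
    """Year-by-year values, starting at `base` and compounding at `cagr`."""
    return [base * (1.0 + cagr) ** n for n in range(years + 1)]


# Figures from the text, in USD billions; five periods is an assumption.
base_2021 = implied_base(21.2, 0.367, 5)
series = project(base_2021, 0.367, 5)

for year, value in zip(range(2021, 2027), series):
    print(f"{year}: ${value:.1f}B")
```

The same two functions can be reused to test how sensitive the endpoint is to a lower growth rate or a different base year.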

The demand for RISC-V in data center offloads is primarily driven by several key factors. First, the open-source nature of RISC-V architecture enables greater customization and innovation, allowing companies to design purpose-built solutions for specific workloads without licensing fees. This represents a significant departure from proprietary architectures that dominate the current market.

Energy efficiency has become a critical concern for data center operators, with power consumption accounting for up to 40% of operational costs. RISC-V based offload solutions offer promising power efficiency advantages, particularly when optimized for specific tasks such as network packet processing or storage management. Market research indicates that data centers could potentially reduce power consumption by 15-25% through strategic deployment of specialized offload processors.
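Taken together, the two percentages above bound the effect on total operating cost. As a rough sketch that treats the 40% share as an upper bound and assumes all other cost components stay fixed, the implied reduction in overall operational cost is the product of the two fractions:

```python
def opex_reduction(power_share_of_opex: float, power_saving: float) -> float:
    """Fraction of total operating cost saved when power use drops by `power_saving`."""
    return power_share_of_opex * power_saving


POWER_SHARE = 0.40  # upper bound quoted in the text

low = opex_reduction(POWER_SHARE, 0.15)
high = opex_reduction(POWER_SHARE, 0.25)
print(f"Implied opex reduction: {low:.0%} to {high:.0%}")
```

In other words, a 15-25% cut in power consumption corresponds to at most about 6-10% of operating cost under these assumptions, and proportionally less where power is a smaller share of the total.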

Security requirements are also fueling market demand for RISC-V solutions. With the rise of confidential computing and zero-trust architectures, there is growing interest in hardware-based security features that can be transparently implemented in open instruction set architectures. RISC-V's extensibility makes it particularly suitable for integrating custom security features directly into hardware.

From a regional perspective, North America currently leads the market for data center offload solutions, accounting for approximately 42% of global demand. However, the Asia-Pacific region is expected to witness the fastest growth rate over the next five years, driven by massive data center expansions in China, India, and Southeast Asia.

The storage segment presents particularly strong growth potential for RISC-V based solutions. With the exponential growth of data volumes and the shift toward computational storage, there is increasing demand for programmable storage controllers that can perform data processing tasks directly on storage devices, reducing data movement and improving overall system efficiency.

RISC-V Offload Technology Status and Challenges

The global RISC-V ecosystem has experienced significant growth in recent years, yet its adoption in data center offload technologies presents a complex landscape of achievements and obstacles. Currently, RISC-V implementations in Data Processing Units (DPUs), Network Interface Cards (NICs), and storage controllers remain in early stages compared to established architectures like Arm and x86.

In the DPU segment, early designs were mostly built on other ISAs: Fungible (acquired by Microsoft in 2023) based its DPUs on MIPS cores, and Marvell's OCTEON 10 DPU uses Arm Neoverse N2 cores as its main processors. Where RISC-V appears in current deployments, it often serves in auxiliary rather than primary processing roles, which highlights the transitional nature of adoption.

For networking applications, RISC-V faces significant challenges in matching the performance optimization and ecosystem maturity of incumbent solutions. The architecture's inherent flexibility allows for customized instruction set extensions beneficial for packet processing, but the lack of standardized networking-specific extensions creates fragmentation in implementation approaches.

Storage controller implementations using RISC-V remain limited, with Western Digital's SweRV cores representing early adoption primarily in enterprise storage products. The architecture's potential for power efficiency makes it attractive for storage applications, yet ecosystem gaps in specialized storage protocols and interfaces slow broader implementation.

Technical challenges impeding wider RISC-V adoption in data center offloads include performance optimization gaps, particularly in the vector processing capabilities critical for data-intensive workloads. While the RISC-V Vector Extension (RVV) shows promise, its silicon implementations and software support remain nascent compared with x86 AVX and Arm SVE.

The software ecosystem represents perhaps the most significant barrier, with limited availability of optimized networking and storage stacks, device drivers, and middleware specifically designed for RISC-V-based offload processors. This creates a chicken-and-egg problem where hardware vendors await software support while software developers prioritize established architectures.

Geographically, RISC-V offload technology development shows concentration in North America (primarily Silicon Valley startups and established semiconductor companies) and China (where government initiatives actively promote RISC-V adoption). European contributions focus primarily on academic research and specialized applications, while Japan and South Korea demonstrate growing interest through recent industry consortia formations.

Current RISC-V DPU, NIC and Storage Solutions

01 RISC-V processor architecture and implementation

RISC-V is an open-source instruction set architecture (ISA) based on reduced instruction set computing principles. Patents in this category cover fundamental aspects of RISC-V processor design, including core architecture, instruction execution, and hardware implementation. These innovations focus on the efficient execution of the RISC-V instruction set while optimizing for performance, power consumption, and silicon area.

- RISC-V processor architecture and implementation: RISC-V is an open-source instruction set architecture (ISA) that follows reduced instruction set computer (RISC) principles. The architecture provides a foundation for processor design with various implementations focusing on efficiency, scalability, and customization. These implementations include different core designs, pipeline structures, and execution models that optimize for specific use cases while maintaining compatibility with the RISC-V ISA specification.

- RISC-V instruction execution and optimization: Various techniques are employed to optimize instruction execution in RISC-V processors, including specialized pipeline designs, branch prediction mechanisms, and instruction scheduling algorithms. These optimizations aim to improve performance while maintaining the simplicity inherent in the RISC architecture. Advanced execution units and parallel processing capabilities enhance throughput and efficiency in modern RISC-V implementations.

- RISC-V extensions and customization: The RISC-V architecture supports various extensions that add functionality beyond the base instruction set. These extensions include vector processing, floating-point operations, bit manipulation, and cryptographic functions. The modular nature of RISC-V allows designers to implement only the extensions needed for specific applications, enabling customization for different performance, power, and area requirements while maintaining compatibility with the core ISA.

- RISC-V system-on-chip (SoC) integration: RISC-V processors are increasingly integrated into system-on-chip designs that combine the CPU core with memory controllers, peripheral interfaces, and specialized accelerators. These SoC implementations leverage the open nature of RISC-V to create customized solutions for various applications including embedded systems, IoT devices, and high-performance computing. The integration approaches focus on optimizing system-level performance, power efficiency, and area utilization.

- RISC-V memory management and addressing: Memory management in RISC-V architectures encompasses various techniques for efficient address translation, cache organization, and memory protection. These implementations include virtual memory systems, translation lookaside buffers (TLBs), and memory management units (MMUs) designed specifically for the RISC-V ISA. Advanced addressing modes and memory access optimizations help improve performance while maintaining the clean separation between architecture and implementation that characterizes RISC-V.
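The extension modularity described in the list above is visible in the ISA string that a RISC-V core advertises, such as `rv64gcv_zba_zbb_zkne`. The sketch below parses a simplified form of that string and checks whether a core provides the extensions a given offload firmware would need. It assumes lowercase names, no version suffixes, and underscore-separated multi-letter extensions; the helper names `parse_isa` and `missing_extensions` are hypothetical, and the full naming rules in the RISC-V specification are more involved.

```python
import re


def parse_isa(isa: str) -> tuple[int, list[str]]:
    """Split a simplified RISC-V ISA string into (XLEN, list of extensions)."""
    m = re.fullmatch(r"rv(32|64|128)([a-z]+)((?:_[a-z][a-z0-9]*)*)", isa.lower())
    if m is None:
        raise ValueError(f"unrecognized ISA string: {isa!r}")
    xlen = int(m.group(1))
    extensions: list[str] = []
    for letter in m.group(2):
        if letter == "g":
            # "g" is shorthand for the general-purpose set IMAFD + Zicsr + Zifencei.
            extensions.extend(["i", "m", "a", "f", "d", "zicsr", "zifencei"])
        else:
            extensions.append(letter)
    # Multi-letter extensions follow, separated by underscores.
    extensions.extend(name for name in m.group(3).split("_") if name)
    # Drop duplicates while preserving order.
    return xlen, list(dict.fromkeys(extensions))


def missing_extensions(isa: str, required: list[str]) -> list[str]:
    """Extensions in `required` that the given ISA string does not provide."""
    _, have = parse_isa(isa)
    return sorted(set(required) - set(have))


xlen, exts = parse_isa("rv64gcv_zba_zbb_zkne")
print(xlen, exts)
# A small control core without vector or AES support:
print(missing_extensions("rv64imac", ["v", "zkne"]))
```

A check of this kind lets the same firmware select a vectorized or scalar code path depending on which extensions a particular core implements.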

02 RISC-V extensions and specialized instructions

This category encompasses patents related to extensions of the base RISC-V instruction set architecture. These extensions add specialized functionality for specific applications such as vector processing, cryptography, or floating-point operations. The patents describe how these custom instructions are implemented and integrated with the core RISC-V architecture to enhance performance for targeted workloads while maintaining compatibility with the base ISA.

03 RISC-V microarchitectural optimizations

Patents in this category focus on microarchitectural techniques to improve RISC-V processor performance. These include pipeline design, branch prediction, cache organization, and out-of-order execution mechanisms specifically tailored for RISC-V implementations. The innovations aim to enhance instruction throughput, reduce latency, and improve overall efficiency while adhering to the RISC-V instruction set architecture.

04 RISC-V system-on-chip integration

This category covers patents related to integrating RISC-V cores into system-on-chip (SoC) designs. The patents describe architectures for connecting RISC-V processors with various peripherals, memory subsystems, and accelerators. These innovations address challenges in system-level integration, including bus interfaces, memory coherence, power management, and multi-core configurations specific to RISC-V-based systems.

05 RISC-V software and firmware solutions

Patents in this category focus on software and firmware aspects of RISC-V implementations. These include compilation techniques, operating system support, boot processes, and runtime environments optimized for RISC-V processors. The innovations address challenges in code generation, binary translation, and software optimization to fully leverage the capabilities of RISC-V hardware while ensuring compatibility across different implementations.

Key Industry Players in RISC-V Offload Ecosystem

RISC-V in data center offloads is growing rapidly but remains at an early-to-mid stage of market development. The market is expanding as companies seek more efficient, customizable solutions for DPUs, NICs, and storage applications, and adoption trends are promising even though the technology is still maturing. Intel, Huawei, and Mellanox (now part of NVIDIA) lead with established market presence in data center offload hardware, while VMware provides virtualization solutions that complement RISC-V implementations. Emerging players like Zhongke Yushu and Shanghai Tianshu Zhixin are developing specialized RISC-V-based DPU solutions. Fujitsu and VIA Technologies contribute significant IP and system integration expertise, creating a competitive landscape that balances established semiconductor giants with innovative startups focused on data center infrastructure optimization.

Huawei Technologies Co., Ltd.

Technical Solution: Huawei has embraced RISC-V architecture for data center offload applications through their advanced SmartNIC and DPU solutions. Their Atlas series incorporates RISC-V cores specifically designed for network processing, storage acceleration, and security functions. Huawei's implementation leverages custom RISC-V extensions optimized for packet processing and data movement operations, achieving significant performance improvements in network-intensive workloads. Their DPU architecture features multiple RISC-V cores working alongside dedicated accelerators for specific functions like encryption, compression, and storage protocols. Huawei has also developed a comprehensive software stack for their RISC-V-based offload processors, including drivers, libraries, and development tools that integrate with mainstream cloud platforms. The company's investment in RISC-V reflects their strategy to develop indigenous technology capabilities while benefiting from the open instruction set architecture's flexibility and customization options.

Strengths: Huawei's vertical integration allows them to optimize the entire hardware-software stack for RISC-V implementations. Their experience in networking equipment provides deep domain expertise for offload applications.

Weaknesses: Geopolitical challenges may limit global market access for their RISC-V solutions. Their ecosystem may be more regionally focused compared to competitors with broader international presence.

Shandong Inspur Science Research Institute Co. Ltd.

Technical Solution: Inspur has developed a comprehensive RISC-V strategy for data center offloads, particularly focusing on storage acceleration and network processing. Their JiuZhang series of storage controllers incorporates RISC-V cores specifically designed to handle storage protocols, data compression, and encryption tasks. Inspur's implementation features custom RISC-V extensions optimized for data movement operations, achieving significant performance improvements in I/O-intensive workloads. Their architecture employs multiple RISC-V cores working in parallel to process storage commands and manage data paths, effectively offloading these tasks from main server CPUs. Inspur has also developed specialized firmware and software stacks that leverage the RISC-V cores' capabilities for NVMe virtualization, storage pooling, and disaggregation in cloud environments. Their solutions demonstrate how RISC-V can be effectively deployed in storage subsystems to improve overall data center efficiency while maintaining compatibility with existing infrastructure.

Strengths: Inspur's strong position in the Chinese server market provides a ready customer base for their RISC-V solutions. Their vertical integration allows for highly optimized hardware-software co-design.

Weaknesses: Their international market presence is still developing, potentially limiting global adoption of their RISC-V implementations. Documentation and ecosystem support may be less comprehensive than more established competitors.

Core RISC-V Offload Architecture Innovations

Data processing unit integration

Patent: WO2023141164A1

Innovation

- A system that employs a vendor-neutral interface and secure firmware calls to abstract low-level signaling and protocol communications, allowing a single DPU operating system image to be used across different DPUs and servers by translating vendor-specific calls into architecture-specific calls, enabling consistent functionality and management without requiring unique builds for each combination.

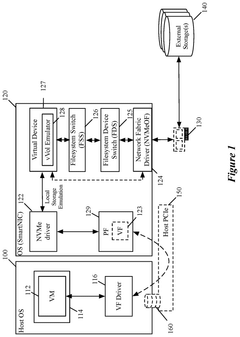

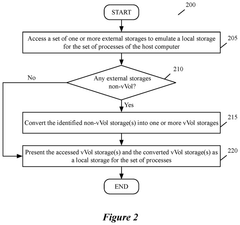

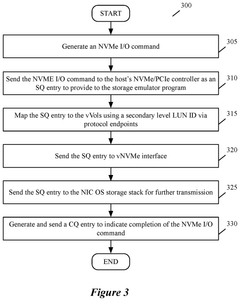

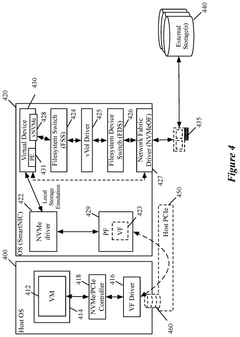
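The vendor-neutral interface described in the data processing unit integration patent above can be pictured as a translation layer between a single OS image and platform-specific firmware. The sketch below is only an illustration of that idea under stated assumptions, not the patented method: the vendor names, firmware call IDs, operation names, and the `NeutralInterface` class are all hypothetical.

```python
from typing import Callable, Dict


# Hypothetical platform-specific firmware entry points with different calling styles.
def vendor_a_fw(call_id: int, arg: int) -> str:
    return f"vendorA:fw[{call_id:#x}]({arg})"


def vendor_b_fw(name: str, arg: int) -> str:
    return f"vendorB:{name}({arg})"


class NeutralInterface:
    """Maps vendor-neutral operation names onto platform-specific calls."""

    def __init__(self, translations: Dict[str, Callable[[int], str]]):
        self._translations = translations

    def call(self, operation: str, arg: int = 0) -> str:
        handler = self._translations.get(operation)
        if handler is None:
            raise NotImplementedError(f"operation not supported: {operation}")
        return handler(arg)


# One translation table per DPU/server combination (illustrative values).
PLATFORMS = {
    "vendor_a": NeutralInterface({
        "reset_port": lambda a: vendor_a_fw(0x10, a),
        "read_temp": lambda a: vendor_a_fw(0x22, a),
    }),
    "vendor_b": NeutralInterface({
        "reset_port": lambda a: vendor_b_fw("port_reset", a),
        "read_temp": lambda a: vendor_b_fw("sensor_temp", a),
    }),
}


def os_image_boot(platform: str) -> list[str]:
    """The same OS-level code runs unchanged on every platform."""
    iface = PLATFORMS[platform]
    return [iface.call("reset_port", 1), iface.call("read_temp")]


print(os_image_boot("vendor_a"))
print(os_image_boot("vendor_b"))
```

The point of the design is that `os_image_boot` never changes; supporting a new DPU and server pairing means adding a translation table rather than producing a new build.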

NVMe fabrics to vVol bridge for DPU storage

Patent pending: US20250117237A1

Innovation

- A method implemented on a NIC deploys a storage emulator program that presents various external storage systems, including ones that are not natively virtual volume (vVol) storage, as vVol storage, thereby emulating a local vVol or NVMe storage device. This is achieved by configuring a virtual function (VF) of a physical function (PF) of the NIC's interface and using a storage conversion application to manage the conversion and presentation of this storage.

Power Efficiency and TCO Analysis

Power efficiency is a critical factor in the evaluation of RISC-V based data center offload solutions. When RISC-V implementations in DPUs, NICs, and storage controllers are compared against traditional x86 or Arm alternatives, power consumption advantages can emerge. RISC-V's simple base instruction set allows small cores with fewer transistors and less complex circuitry, which can translate into lower thermal design power (TDP) at comparable performance when the implementation is tuned for the offload task.

Recent benchmarks indicate that RISC-V based DPUs can achieve up to 30% better performance-per-watt metrics compared to equivalent Arm implementations. This efficiency translates directly to reduced operational expenditure in large-scale deployments where thousands of units operate continuously. For hyperscale data centers, this efficiency differential can represent millions in annual power cost savings.
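To see what a performance-per-watt gain means in cost terms, note that a 30% improvement at equal throughput corresponds to roughly 23% less power, since 1 - 1/1.3 is about 0.23. The sketch below turns that into an annual energy cost for a fleet. The fleet size, per-unit power, PUE, and electricity price are hypothetical placeholders chosen only to make the arithmetic concrete.

```python
HOURS_PER_YEAR = 8760


def power_saving_fraction(perf_per_watt_gain: float) -> float:
    """Power saved at equal throughput for a given perf-per-watt improvement."""
    return 1.0 - 1.0 / (1.0 + perf_per_watt_gain)


def annual_energy_cost(units: int, watts_per_unit: float,
                       pue: float, usd_per_kwh: float) -> float:
    """Yearly electricity cost for a fleet, including facility overhead via PUE."""
    kwh = units * watts_per_unit / 1000.0 * HOURS_PER_YEAR
    return kwh * pue * usd_per_kwh


# Hypothetical fleet: 100,000 offload cards at 75 W, PUE 1.3, $0.08 per kWh.
baseline = annual_energy_cost(100_000, 75.0, 1.3, 0.08)
saving = baseline * power_saving_fraction(0.30)

print(f"Baseline energy cost: ${baseline:,.0f} per year")
print(f"Saving at +30% perf/W: ${saving:,.0f} per year")
```

Under these placeholder inputs the saving is on the order of one to two million dollars per year, so the "millions" figure is plausible mainly for fleets of this size or larger.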

Total Cost of Ownership (TCO) analysis reveals compelling long-term economics for RISC-V adoption in offload applications. While initial acquisition costs for RISC-V solutions may currently carry a premium due to lower production volumes, the 3-5 year TCO calculations demonstrate net positive returns driven by power savings, cooling cost reductions, and increased rack density possibilities.
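The trade-off between an acquisition premium and lower running costs can be expressed as a break-even period. The following is a minimal sketch with hypothetical per-unit prices and annual power and cooling costs; none of these figures come from the analysis above, and a real TCO model would also include software, support, and depreciation.

```python
from typing import Optional


def cumulative_tco(capex: float, annual_opex: float, years: float) -> float:
    """Purchase cost plus running cost accumulated over `years`."""
    return capex + annual_opex * years


def break_even_years(capex_premium: float, annual_saving: float) -> Optional[float]:
    """Years until running-cost savings repay the purchase premium (None if never)."""
    if annual_saving <= 0:
        return None
    return capex_premium / annual_saving


# Hypothetical per-unit figures in USD.
INCUMBENT_CAPEX, INCUMBENT_OPEX = 1500.0, 70.0
RISCV_CAPEX, RISCV_OPEX = 1560.0, 54.0

payback = break_even_years(RISCV_CAPEX - INCUMBENT_CAPEX,
                           INCUMBENT_OPEX - RISCV_OPEX)
print(f"Break-even after {payback:.2f} years")

for horizon in (3, 5):
    incumbent = cumulative_tco(INCUMBENT_CAPEX, INCUMBENT_OPEX, horizon)
    riscv = cumulative_tco(RISCV_CAPEX, RISCV_OPEX, horizon)
    print(f"{horizon}-year TCO: incumbent ${incumbent:,.0f}, RISC-V ${riscv:,.0f}")
```

With these inputs the premium is repaid partway through a 3-5 year window, so the RISC-V option is more expensive at three years and cheaper at five, which shows how sensitive the conclusion is to the chosen horizon.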

The open-source nature of RISC-V further enhances TCO considerations through reduced licensing costs and greater customization flexibility. Organizations can tailor implementations specifically for their workload requirements without incurring additional IP licensing expenses. This customization potential allows for optimization of power profiles based on specific offload functions, whether packet processing in NICs or compression algorithms in storage controllers.

Cooling infrastructure represents another significant TCO component positively impacted by RISC-V efficiency. Lower heat generation per computational unit reduces cooling requirements, potentially allowing for higher compute density or reduced cooling infrastructure investment. In liquid-cooled modern data centers, this translates to smaller heat exchangers and reduced pumping energy requirements.

Carbon footprint considerations increasingly factor into data center technology decisions. RISC-V based offload solutions contribute to sustainability goals through their inherent efficiency advantages. For organizations with carbon reduction commitments, the adoption of more efficient RISC-V accelerators represents a tangible path toward meeting environmental targets while maintaining computational capabilities.

Open Source Ecosystem and Standardization Efforts

The RISC-V ecosystem for data center offload applications has experienced remarkable growth through collaborative open source initiatives and standardization efforts. The RISC-V International organization serves as the central governing body, coordinating the development of specifications and extensions specifically targeting data processing units (DPUs), network interface cards (NICs), and storage controllers.

Several key open source projects have emerged as foundational elements for RISC-V in data center offloads. The RISC-V Software Ecosystem (RISE) project provides essential firmware, drivers, and middleware components specifically optimized for offload processors. Additionally, the Data Plane Development Kit (DPDK) has been adapted for RISC-V architectures, enabling efficient packet processing capabilities critical for DPU and NIC implementations.

Standardization efforts have focused on establishing consistent interfaces and protocols across different RISC-V implementations. The RISC-V DPU Working Group has developed reference architectures and programming models intended to support interoperability between vendor solutions. Similarly, the Storage Interface Extensions (SIE) specification defines instruction set extensions aimed at storage acceleration tasks.

Industry collaboration has been instrumental in advancing these standardization efforts. Major cloud service providers, semiconductor companies, and storage vendors have formed the RISC-V Data Center Alliance to coordinate development priorities and ensure alignment with real-world deployment requirements. This alliance has published reference designs and implementation guidelines that serve as blueprints for RISC-V-based offload solutions.

Open source software frameworks supporting RISC-V offload processors have gained traction. Storage software such as NVMe-oF (NVMe over Fabrics) implementations and Ceph has been adapted to leverage RISC-V accelerators, while newer initiatives such as the Open Programmable Infrastructure project provide abstraction layers that simplify development across different offload architectures.

The standardization landscape continues to evolve with efforts to harmonize RISC-V implementations with existing industry standards. The SNIA (Storage Networking Industry Association) and OCP (Open Compute Project) have established working groups focused on integrating RISC-V into their reference architectures and compliance programs, so that RISC-V-based solutions can operate within established data center environments.
