How to choose RISC-V cores for edge AI: MCU vs application class

AUG 25, 2025 · 9 MIN READ

RISC-V for Edge AI: Background and Objectives

RISC-V architecture has emerged as a significant player in the computing landscape since its inception at the University of California, Berkeley in 2010. This open-source instruction set architecture (ISA) has evolved from an academic project to a viable alternative to proprietary architectures like ARM and x86. The trajectory of RISC-V development has been marked by increasing industry adoption, standardization efforts, and ecosystem growth, particularly in embedded systems and specialized computing domains.

Edge AI represents the convergence of artificial intelligence capabilities with edge computing, enabling machine learning inference and sometimes training to occur directly on end devices rather than in centralized cloud environments. This paradigm shift addresses critical challenges in latency, bandwidth conservation, privacy, and operational reliability for AI applications.

The intersection of RISC-V and Edge AI presents a compelling technological frontier. RISC-V's modular and extensible nature makes it particularly suitable for customized AI workloads at the edge, where power efficiency, performance, and cost considerations are paramount. The architecture's open nature allows for specialized extensions tailored to machine learning operations, potentially offering advantages over fixed proprietary architectures.

Current technological trends indicate growing momentum toward deploying more sophisticated AI capabilities on increasingly resource-constrained edge devices. This evolution necessitates careful consideration of processor architecture choices, particularly between microcontroller (MCU) class RISC-V cores and application-class cores for Edge AI implementations.

MCU-class RISC-V cores typically feature simpler pipelines, smaller caches, and lower operating frequencies, optimized for power efficiency in constrained environments. In contrast, application-class cores incorporate more sophisticated microarchitectural features like out-of-order execution, deeper pipelines, and comprehensive memory hierarchies to deliver higher computational throughput.

The technical objectives of this investigation include establishing a comprehensive framework for evaluating the suitability of different RISC-V core classes for various Edge AI deployment scenarios. This framework must consider factors including computational requirements of target AI workloads, power and thermal constraints, real-time performance needs, and system cost considerations.
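
The hard constraints in such a framework can be expressed as a simple filter over candidate cores. The sketch below is illustrative only: the `CoreOption` and `Requirements` types, the `shortlist` helper, and every number in the example are hypothetical placeholders rather than vendor data, and throughput is reduced to a single sustained int8 MAC rate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoreOption:
    """Hypothetical summary of a candidate core (illustrative fields only)."""
    name: str
    core_class: str          # "mcu" or "application"
    active_power_mw: float   # typical power while running inference
    sustained_gmacs: float   # sustained int8 throughput, in GMAC/s
    runs_linux: bool         # can the core host a full OS such as Linux?
    unit_cost_usd: float     # rough per-unit cost of the core/SoC


@dataclass(frozen=True)
class Requirements:
    model_mmacs: float       # work per inference, in millions of MACs
    deadline_ms: float       # real-time latency bound per inference
    power_budget_mw: float   # power/thermal envelope
    needs_linux: bool
    max_unit_cost_usd: float


def latency_ms(core: CoreOption, req: Requirements) -> float:
    # MMAC / (GMAC/s) = 1e6 / 1e9 s, i.e. the result is in milliseconds
    return req.model_mmacs / core.sustained_gmacs


def is_feasible(core: CoreOption, req: Requirements) -> bool:
    return (latency_ms(core, req) <= req.deadline_ms
            and core.active_power_mw <= req.power_budget_mw
            and (core.runs_linux or not req.needs_linux)
            and core.unit_cost_usd <= req.max_unit_cost_usd)


def shortlist(cores: list[CoreOption], req: Requirements) -> list[CoreOption]:
    """Feasible cores, lowest power first, then lowest cost."""
    feasible = [c for c in cores if is_feasible(c, req)]
    return sorted(feasible, key=lambda c: (c.active_power_mw, c.unit_cost_usd))


# Hypothetical candidates; the numbers are placeholders, not measurements.
CANDIDATES = [
    CoreOption("mcu-basic", "mcu", 20.0, 0.05, False, 1.5),
    CoreOption("mcu-dsp", "mcu", 40.0, 0.5, False, 3.0),
    CoreOption("app-vector", "application", 1500.0, 8.0, True, 12.0),
]

if __name__ == "__main__":
    kws = Requirements(model_mmacs=5, deadline_ms=200, power_budget_mw=50,
                       needs_linux=False, max_unit_cost_usd=5)
    vision = Requirements(model_mmacs=300, deadline_ms=100, power_budget_mw=3000,
                          needs_linux=True, max_unit_cost_usd=20)
    print([c.name for c in shortlist(CANDIDATES, kws)])     # ['mcu-basic', 'mcu-dsp']
    print([c.name for c in shortlist(CANDIDATES, vision)])  # ['app-vector']
```

Soft factors such as ecosystem maturity or vendor support do not fit a pass/fail filter; they would be weighed separately among the shortlisted cores.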

Additionally, we aim to identify the technological inflection points where transitioning from MCU-class to application-class cores becomes advantageous for Edge AI implementations, accounting for evolving AI model architectures, quantization techniques, and hardware acceleration approaches that may alter these boundaries over time.
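
Quantization is one lever that moves this boundary, because it decides whether a model's weights fit in MCU-class memory at all. A minimal sketch, assuming the weights must be held entirely in on-chip memory and that activations and runtime buffers fit in a fixed reserve (both simplifications); the 512 KB budget matches the MCU memory ceiling quoted in this article, while the 250,000-parameter model and the 128 KB reserve are hypothetical.

```python
# Bytes needed to store one weight at each precision.
BYTES_PER_WEIGHT = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_kib(num_params: int, dtype: str) -> float:
    """Weight storage in KiB (1 KiB = 1024 bytes) for num_params parameters."""
    return num_params * BYTES_PER_WEIGHT[dtype] / 1024


def fits_on_chip(num_params: int, dtype: str, budget_kib: float,
                 reserve_kib: float) -> bool:
    """True if weights plus a fixed activation/runtime reserve fit the budget."""
    return weight_kib(num_params, dtype) + reserve_kib <= budget_kib


if __name__ == "__main__":
    params = 250_000          # hypothetical small CNN
    for dtype in ("fp32", "fp16", "int8", "int4"):
        print(dtype, round(weight_kib(params, dtype), 1),
              fits_on_chip(params, dtype, budget_kib=512, reserve_kib=128))
```

Under these assumptions the fp32 and fp16 versions exceed the budget, while the int8 and int4 versions fit, which is why aggressive quantization is usually the first step before considering a larger core.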

Market Demand Analysis for Edge AI Processors

The edge AI processor market is experiencing unprecedented growth, driven by the increasing demand for on-device artificial intelligence capabilities across multiple industries. Current market analysis indicates that the global edge AI processor market is projected to reach $25 billion by 2026, with a compound annual growth rate exceeding 20%. This rapid expansion reflects the shifting paradigm from cloud-based AI processing to edge computing solutions that offer reduced latency, enhanced privacy, and lower bandwidth requirements.

The demand for edge AI processors is particularly strong in consumer electronics, automotive, industrial automation, and healthcare sectors. In consumer electronics, smart home devices, wearables, and smartphones require efficient AI processing for voice recognition, image processing, and predictive user interactions. The automotive industry is increasingly incorporating AI processors for advanced driver assistance systems (ADAS), autonomous driving features, and in-cabin monitoring systems.

Industrial IoT applications represent another significant market segment, with manufacturing facilities deploying edge AI for predictive maintenance, quality control, and process optimization. Healthcare applications include portable diagnostic devices, patient monitoring systems, and point-of-care analytics tools that require real-time processing capabilities.

Market research indicates a clear bifurcation in customer requirements between microcontroller-based solutions and application-class processors. MCU-based edge AI solutions are gaining traction in ultra-low power applications where battery life and form factor are critical constraints. These typically address use cases requiring intermittent inference with modest computational demands, such as sensor fusion, keyword spotting, and simple anomaly detection.

In contrast, application-class processors are seeing increased adoption in scenarios requiring continuous AI workloads with higher computational complexity, such as computer vision, natural language processing, and multi-modal AI applications. This segment demands processors capable of handling multiple concurrent AI models while potentially running full operating systems.
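
The difference between intermittent and continuous workloads shows up directly in battery life. A back-of-the-envelope sketch, assuming a fixed inference period, constant active and sleep power, and an ideal battery (no regulator losses, wake-up overhead, or capacity derating); the power figures and the 220 mAh, 3 V coin-cell capacity are hypothetical examples.

```python
def average_power_mw(active_mw: float, sleep_mw: float,
                     inference_ms: float, period_ms: float) -> float:
    """Duty-cycle-weighted average power for periodic inference."""
    if not 0 < inference_ms <= period_ms:
        raise ValueError("inference time must be positive and fit in the period")
    duty = inference_ms / period_ms
    return duty * active_mw + (1.0 - duty) * sleep_mw


def battery_life_hours(capacity_mah: float, voltage_v: float,
                       avg_power_mw: float) -> float:
    """Ideal runtime: stored energy (mWh) divided by average power (mW)."""
    return capacity_mah * voltage_v / avg_power_mw


if __name__ == "__main__":
    # Intermittent: 50 ms inference once per second on an MCU-class core.
    mcu_avg = average_power_mw(active_mw=20, sleep_mw=0.02,
                               inference_ms=50, period_ms=1000)
    # Continuous: an application-class core that never sleeps.
    app_avg = average_power_mw(active_mw=1500, sleep_mw=0,
                               inference_ms=1000, period_ms=1000)
    for name, p in (("mcu", mcu_avg), ("app", app_avg)):
        print(f"{name}: {p:.3f} mW, {battery_life_hours(220, 3.0, p):.1f} h")
```

With these assumed numbers the duty-cycled MCU averages about 1 mW and runs for roughly 27 days, while the continuously active application core drains the same cell in under half an hour.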

RISC-V based solutions are disrupting this market landscape, with adoption growing at approximately 40% annually. The open instruction set architecture offers compelling advantages including customization flexibility, reduced licensing costs, and supply chain diversification. Market surveys indicate that 65% of embedded system designers are considering RISC-V for their next edge AI projects, citing architectural openness and ecosystem growth as primary motivators.

Regional analysis shows Asia-Pacific leading edge AI processor adoption, followed by North America and Europe. China, in particular, has made significant investments in RISC-V development as part of its technology self-sufficiency initiatives, further accelerating market growth in this region.

Current RISC-V Core Landscape and Challenges

The RISC-V instruction set architecture (ISA) has gained significant momentum in recent years, with a growing ecosystem of cores available for various applications. When examining the current RISC-V core landscape specifically for edge AI applications, we observe a clear bifurcation between microcontroller (MCU) class cores and application-class processors, each presenting distinct characteristics and challenges.

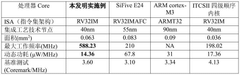

MCU-class RISC-V cores, typically based on the RV32E or RV32I base ISA with few extensions, are characterized by a small silicon footprint, low power consumption (often in the milliwatt range), and limited performance. Representative implementations include SiFive's E-series cores and SoCs such as GreenWaves Technologies' GAP8 and Espressif's ESP32-C3. These devices generally run at clock frequencies below 400 MHz, with on-chip memory typically under 512 KB.

Application-class RISC-V cores, by contrast, implement a broader set of ISA extensions (RV64GC is common) and deliver significantly higher performance through multi-core configurations, out-of-order execution, and sophisticated branch prediction. Notable examples include SiFive's P550 and Alibaba's XuanTie C910 cores, as well as RIOS Lab's PicoRio platform. These designs typically run at gigahertz clock frequencies with substantial caches and external DRAM interfaces.

The primary challenge in the current landscape is the performance-efficiency gap between these two classes. MCU cores struggle with complex AI workloads despite their energy efficiency, while application cores consume too much power for many edge devices despite their computational capabilities. This creates a "middle ground" challenge where certain edge AI applications require more performance than MCU cores can deliver but cannot accommodate the power envelope of application processors.

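Another significant challenge is the fragmented ecosystem of extensions and custom instructions. While RISC-V's extensibility is a strength, the proliferation of vendor-specific AI accelerators and custom instructions creates compatibility issues and software fragmentation. The vector ("V") extension was ratified as version 1.0 in 2021, but support remains uneven: some widely deployed cores, such as T-Head's C906 and C910, implement the earlier 0.7.1 draft, which is not binary-compatible with RVV 1.0. The packed-SIMD ("P") extension has not yet been ratified, so DSP-style SIMD support still varies from vendor to vendor.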
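
One practical consequence of this fragmentation is that software must check which extensions a target actually implements. A minimal sketch that parses a RISC-V ISA string (for example `rv64gcv_zba_zbb`) and reports missing extensions. It expands `G` to IMAFD plus Zicsr and Zifencei; as simplifying assumptions, it ignores version suffixes and expects every multi-letter extension after the first to be underscore-separated. Note that the bare letter `v` does not reveal which vector specification version a core implements.

```python
import re

# "G" is shorthand for IMAFD plus the Zicsr and Zifencei extensions.
G_EXPANSION = frozenset({"i", "m", "a", "f", "d", "zicsr", "zifencei"})


def parse_isa(isa: str) -> tuple[int, frozenset[str]]:
    """Split an ISA string like 'rv64gcv_zba_zbb' into (XLEN, extensions).

    Simplifications: version suffixes (e.g. '2p1') are not supported, and
    multi-letter extensions after the first must be underscore-separated.
    """
    segments = isa.strip().lower().split("_")
    match = re.fullmatch(r"rv(32|64|128)([a-z]+)", segments[0])
    if match is None:
        raise ValueError(f"unrecognized ISA string: {isa!r}")
    xlen = int(match.group(1))
    letters = match.group(2)
    extensions: set[str] = set()
    for pos, ch in enumerate(letters):
        if ch in "zsx":                  # a multi-letter name starts here
            extensions.add(letters[pos:])
            break
        extensions |= G_EXPANSION if ch == "g" else {ch}
    extensions.update(seg for seg in segments[1:] if seg)
    return xlen, frozenset(extensions)


def missing_extensions(isa: str, required: set[str]) -> set[str]:
    """Extensions in `required` that the ISA string does not advertise."""
    _, available = parse_isa(isa)
    return set(required) - available


if __name__ == "__main__":
    print(missing_extensions("rv32imac", {"m", "v"}))           # {'v'}
    print(missing_extensions("rv64gcv_zba_zbb", {"v", "zba"}))  # set()
```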

The software ecosystem presents additional challenges, particularly for AI frameworks. While TensorFlow Lite and ONNX Runtime have made progress in RISC-V support, optimization for specific cores remains inconsistent. The lack of standardized AI libraries and toolchains optimized for RISC-V creates development barriers and performance bottlenecks.
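
To make "optimization for specific cores" concrete: most portable int8 inference kernels reduce to integer multiply-accumulate loops like the reference sketch below, which follows the affine scheme real_value = scale × (q − zero_point) used for int8 quantization in TensorFlow Lite. Core-specific libraries typically replace the inner loop with SIMD, vector, or custom MAC instructions. The helper names and example values are hypothetical, and Python's `round()` rounds halves to even, which may differ from a particular runtime's rounding mode.

```python
def quantize(values: list[float], scale: float, zero_point: int) -> list[int]:
    """Map real values to int8 with real = scale * (q - zero_point)."""
    return [max(-128, min(127, round(v / scale) + zero_point)) for v in values]


def int8_dot(qx: list[int], zx: int, qw: list[int], zw: int) -> int:
    """Integer dot product; real hardware accumulates in a 32-bit register,
    whereas Python ints cannot overflow."""
    if len(qx) != len(qw):
        raise ValueError("vectors must have equal length")
    acc = 0
    for a, b in zip(qx, qw):
        acc += (a - zx) * (b - zw)     # one multiply-accumulate per element
    return acc


def requantize(acc: int, sx: float, sw: float, s_out: float, z_out: int) -> int:
    """Rescale the accumulator to the output's int8 scale and zero point."""
    q = round(acc * sx * sw / s_out) + z_out
    return max(-128, min(127, q))


if __name__ == "__main__":
    x, w = [0.5, -1.0, 2.0], [0.25, 0.5, -0.75]   # float dot product = -1.875
    qx = quantize(x, scale=0.0625, zero_point=10)   # asymmetric activations
    qw = quantize(w, scale=0.03125, zero_point=0)   # symmetric weights
    acc = int8_dot(qx, 10, qw, 0)
    q_out = requantize(acc, 0.0625, 0.03125, s_out=0.125, z_out=0)
    print(qx, qw, acc, q_out, q_out * 0.125)  # [18, -6, 42] [8, 16, -24] -960 -15 -1.875
```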

Geographically, we observe concentration of RISC-V core development in specific regions. While North America leads in architectural innovation (SiFive, Western Digital), China has emerged as a major force in application-class cores (T-Head, RIOS), and Europe focuses on MCU-class implementations for IoT (GreenWaves, Codasip). This distribution influences availability and support for different core types across markets.

MCU vs Application Class Implementation Approaches

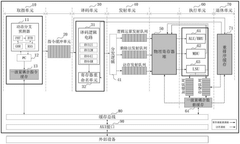

01 RISC-V processor architecture and implementation

RISC-V is an open-source instruction set architecture (ISA) that follows reduced instruction set computer (RISC) principles. The architecture provides a foundation for processor design with various extensions and configurations. Implementations can range from simple embedded cores to complex multi-core systems, offering flexibility for different performance and power requirements. The open nature of RISC-V allows for customization and innovation in processor design.

- RISC-V processor architecture and implementation: RISC-V is an open-source instruction set architecture (ISA) that follows reduced instruction set computer (RISC) principles. The architecture provides a foundation for processor design with various implementations focusing on efficiency, scalability, and customization. RISC-V cores can be implemented with different feature sets and performance characteristics to meet specific application requirements, from embedded systems to high-performance computing.

- Multi-core RISC-V implementations: Multi-core RISC-V implementations involve integrating multiple RISC-V processor cores within a single system. These designs enable parallel processing capabilities, improved performance, and enhanced efficiency for complex computational tasks. Multi-core architectures can be homogeneous (identical cores) or heterogeneous (different core types), allowing for optimized workload distribution and power management across the system.

- RISC-V extensions and customization: RISC-V architecture supports various extensions and customization options that enhance the base instruction set. These extensions include vector processing, floating-point operations, bit manipulation, and application-specific instructions. The modular nature of RISC-V allows designers to implement only the extensions needed for their specific applications, optimizing performance while maintaining efficiency and reducing silicon area.

- RISC-V power management and optimization: Power management and optimization techniques for RISC-V cores focus on reducing energy consumption while maintaining performance. These approaches include dynamic voltage and frequency scaling, power gating unused components, and implementing low-power operating modes. Advanced optimization techniques may involve microarchitectural improvements, clock gating, and specialized power domains to enhance energy efficiency in various operating conditions.

- RISC-V security features and implementations: Security features in RISC-V cores address various threats and vulnerabilities in computing systems. These implementations include secure boot mechanisms, trusted execution environments, memory protection units, and cryptographic accelerators. RISC-V security extensions provide hardware-based security capabilities that protect against both physical and software-based attacks, ensuring data integrity, confidentiality, and system reliability in diverse application environments.
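
The benefit of the multi-core designs described above is bounded by how much of a workload actually parallelizes. Amdahl's law gives a quick upper estimate. This sketch is optimistic because it ignores synchronization, cache-coherence, and memory-bandwidth overheads, and the 90% parallel fraction in the example is a hypothetical value.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper-bound speedup when a fraction of the work spreads over `cores`."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if cores < 1:
        raise ValueError("cores must be >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        print(n, round(amdahl_speedup(0.9, n), 2))
    # As cores grow, speedup approaches 1 / (1 - 0.9) = 10.
```

With a 90% parallel fraction, eight cores yield at most about 4.7x, which is one reason heterogeneous designs often pair a few capable cores with accelerators rather than simply adding more identical cores.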

02 Multi-core RISC-V systems and parallel processing

Multi-core RISC-V implementations enable parallel processing capabilities, where multiple processor cores work simultaneously to improve performance. These systems can distribute workloads across cores, enhancing throughput for parallel tasks. The architecture supports various synchronization mechanisms and memory coherence protocols to maintain data consistency across cores. Multi-core RISC-V designs can be scaled according to application requirements, from dual-core to many-core configurations.

03 RISC-V extensions and customization

RISC-V architecture supports various standard and custom extensions that enhance functionality for specific applications. These extensions can add instructions for vector processing, floating-point operations, bit manipulation, cryptography, and other specialized functions. The modular nature of RISC-V allows designers to include only the extensions needed for their application, optimizing for performance, power, and area. Custom instructions can be implemented to accelerate specific algorithms or workloads.

04 RISC-V pipeline and microarchitecture design

The pipeline architecture in RISC-V processors determines how instructions flow through the processor. Designs range from simple 3-stage pipelines for low-power applications to complex out-of-order execution pipelines for high-performance systems. Microarchitectural features include branch prediction, instruction prefetching, and various execution units. The pipeline design affects critical performance metrics such as clock frequency, instructions per cycle (IPC), and power consumption.

05 RISC-V power management and optimization

Power management techniques in RISC-V cores include dynamic voltage and frequency scaling, power gating, and clock gating to reduce energy consumption. The architecture supports various sleep modes and power states that can be utilized based on workload requirements. Optimization strategies focus on balancing performance and power efficiency through microarchitectural choices and circuit design techniques. These approaches are particularly important for battery-powered and energy-constrained applications.
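
The leverage that dynamic voltage and frequency scaling offers follows from the standard first-order CMOS model, in which dynamic power is P = αCV²f (activity factor α, switched capacitance C, supply voltage V, clock frequency f). A task of N cycles runs for N/f seconds, so its dynamic energy is αCV²N: lowering frequency alone reduces power but not dynamic energy per task, while lowering voltage reduces energy quadratically, partly offset by leakage accumulating over the longer runtime. A sketch with hypothetical parameter values:

```python
def dynamic_power_w(alpha: float, cap_f: float, volt_v: float, freq_hz: float) -> float:
    """First-order CMOS dynamic power: P = alpha * C * V^2 * f."""
    return alpha * cap_f * volt_v ** 2 * freq_hz


def task_energy_j(cycles: float, alpha: float, cap_f: float, volt_v: float,
                  freq_hz: float, leakage_w: float = 0.0) -> float:
    """Energy for a task of `cycles` cycles, including constant leakage power."""
    runtime_s = cycles / freq_hz
    return (dynamic_power_w(alpha, cap_f, volt_v, freq_hz) + leakage_w) * runtime_s


if __name__ == "__main__":
    # Hypothetical operating points for the same 1e8-cycle inference task.
    points = {"1.0 V @ 400 MHz": (1.0, 400e6), "0.8 V @ 200 MHz": (0.8, 200e6)}
    for label, (volt, freq) in points.items():
        e = task_energy_j(1e8, alpha=0.2, cap_f=1e-9, volt_v=volt,
                          freq_hz=freq, leakage_w=0.005)
        print(f"{label}: {e * 1e3:.2f} mJ")
```

Under these assumed values the lower operating point takes twice as long but uses about 15.3 mJ instead of about 21.25 mJ; with much higher leakage, the advantage could shrink or reverse.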

Key RISC-V IP Providers and Semiconductor Companies

The RISC-V edge AI computing market is in a growth phase, with the ecosystem moving from early adoption toward mainstream deployment as edge AI applications proliferate across IoT, automotive, and consumer electronics. In terms of technical maturity, the landscape splits between MCU-class cores optimized for power efficiency and application-class cores that deliver higher performance. VIA Technologies and Gowin Semiconductor are advancing implementations for constrained environments, and Nanjing Qinheng Microelectronics (WCH) ships RISC-V microcontrollers; Inspur and C*Core Technology are developing more capable application-class solutions; and Alibaba Group, through its T-Head subsidiary, offers cores in both classes. This competition is driving innovation in both segments, with vendors increasingly adding specialized AI-acceleration extensions while preserving RISC-V's flexibility and customization advantages.

Gowin Semiconductor Corp.

Technical Solution: Gowin Semiconductor has developed a hybrid approach to edge AI processing by integrating RISC-V cores within their FPGA architecture. Their solution allows for flexible implementation of either MCU-class or application-class RISC-V cores depending on the specific edge AI requirements. For lightweight inference tasks, Gowin implements compact MCU-class RISC-V cores that consume minimal FPGA resources while providing sufficient performance for basic AI operations. For more demanding applications, they offer implementation templates for application-class RISC-V cores with custom AI acceleration blocks implemented in the FPGA fabric. This approach enables developers to create specialized AI processing pipelines that can be reconfigured based on evolving requirements. Gowin's architecture includes dedicated DSP blocks that can be leveraged for neural network computations, and their development tools provide automated mapping of common AI operations to these resources. The company has demonstrated implementations that achieve up to 5x better performance/watt compared to general-purpose processors for specific edge AI workloads.

Strengths: Highly flexible architecture allowing customization of both the RISC-V core and accelerator components; ability to reconfigure the implementation as AI models evolve; good balance of performance and power efficiency. Weaknesses: Requires FPGA design expertise to fully optimize; higher initial complexity compared to fixed-architecture solutions; potentially higher unit cost for low-volume applications.

Alibaba Group Holding Ltd.

Technical Solution: Alibaba's T-Head Semiconductor subsidiary has developed the XuanTie series of RISC-V cores, which it positions for edge AI applications. The lineup separates MCU-class cores (such as the E902) for ultra-low-power scenarios from application-class cores (such as the C906 and C910) for more complex edge AI workloads. The C906 supports a vector extension (the RVV 0.7.1 draft) and custom instructions that accelerate neural network operations while maintaining power efficiency. The XuanTie cores share a scalable architecture that lets developers select configurations to match specific edge AI requirements, and they offer neural network acceleration and DSP extensions that can be enabled or disabled depending on the target application. The company reports up to 100x performance improvements on certain AI workloads compared with implementations lacking these optimizations.

Strengths: Highly customizable architecture allowing fine-tuned performance/power tradeoffs; extensive ecosystem support including development tools and software libraries; proven deployment in commercial products. Weaknesses: Requires significant expertise to fully optimize for specific applications; some advanced features only available in higher-end cores, increasing power consumption.

Technical Deep Dive: RISC-V Extensions for AI

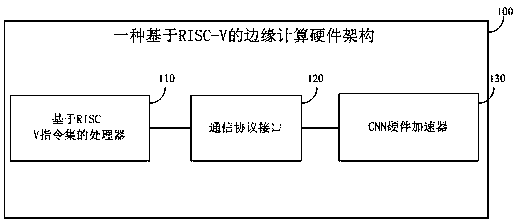

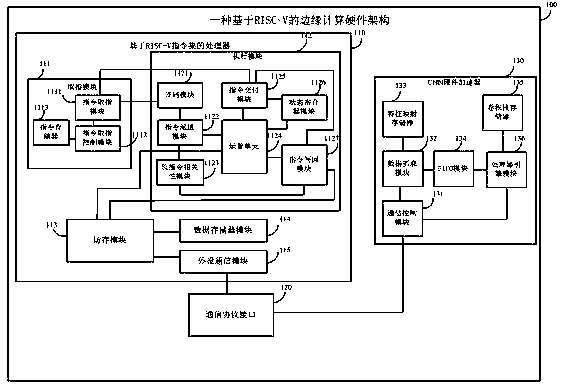

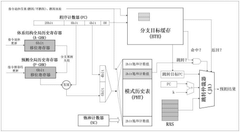

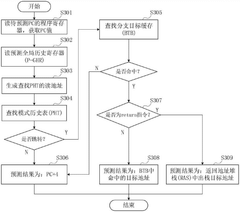

Edge computing hardware architecture based on RISC-V

Patent · Active · CN110007961A

Innovation

- An edge computing hardware architecture based on the RISC-V instruction set, comprising a processor and a CNN hardware accelerator. Low-power design techniques such as independent clock gating reduce circuit complexity and meet low-power, small-area requirements, while the processor controls the CNN hardware accelerator for artificial intelligence processing on mobile devices.

Low-power single-issue out-of-order RISC-V processor and instruction processing method

Patent · Pending · CN119718430A

Innovation

- A low-power, single-issue, out-of-order RISC-V processor microarchitecture built on a seven-stage pipeline, combining clock gating, input gating, and glitch optimization to improve instruction-level parallelism and processing efficiency while reducing power consumption.

Power-Performance-Area Tradeoffs in Core Selection

When selecting RISC-V cores for edge AI applications, the power-performance-area (PPA) tradeoff represents a critical decision matrix. MCU-class cores typically offer lower power consumption, ranging from milliwatts to tens of milliwatts, making them ideal for battery-operated devices with strict energy constraints. These cores generally occupy silicon areas between 0.01-0.1 mm² in modern process nodes, enabling compact form factors essential for space-constrained edge devices.

Application-class cores, conversely, deliver substantially higher performance with their more sophisticated microarchitectures, including deeper pipelines, out-of-order execution, and advanced branch prediction. This performance advantage comes at the cost of increased power consumption (hundreds of milliwatts to several watts) and larger silicon footprints (0.5-5 mm²), necessitating more robust thermal management solutions.

The computational demands of edge AI workloads create interesting inflection points in this tradeoff space. For lightweight inference tasks such as keyword spotting or simple anomaly detection, MCU-class cores may provide sufficient performance while maintaining minimal power profiles. More complex AI applications like computer vision or natural language processing often benefit from application-class cores' superior computational capabilities.
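
Where that inflection point falls can be estimated by comparing the MAC rate a model needs with what a core can sustain. A rough sketch, assuming throughput is approximated as MACs per cycle × clock frequency × an achieved-utilization factor; the model sizes, inference rates, MACs-per-cycle values, and utilizations below are hypothetical, not figures for any specific core.

```python
def required_gmacs(mmacs_per_inference: float, inferences_per_s: float) -> float:
    """Compute demand in GMAC/s (MMAC per inference x inferences per second)."""
    return mmacs_per_inference * inferences_per_s / 1e3


def sustained_gmacs(macs_per_cycle: float, freq_mhz: float, utilization: float) -> float:
    """Deliverable throughput in GMAC/s (MACs/cycle x MHz = MMAC/s)."""
    return macs_per_cycle * freq_mhz * utilization / 1e3


def headroom(mmacs: float, rate: float, macs_per_cycle: float,
             freq_mhz: float, utilization: float) -> float:
    """Above 1 the core keeps up with the workload; below 1 it cannot."""
    supply = sustained_gmacs(macs_per_cycle, freq_mhz, utilization)
    return supply / required_gmacs(mmacs, rate)


if __name__ == "__main__":
    workloads = {"keyword spotting": (3, 10), "vision": (300, 15)}  # (MMAC, Hz)
    cores = {"mcu": (2, 200, 0.5), "app+vector": (32, 1500, 0.4)}   # (MAC/cycle, MHz, util)
    for w_name, (mmacs, rate) in workloads.items():
        for c_name, (mpc, mhz, util) in cores.items():
            print(f"{w_name} on {c_name}: {headroom(mmacs, rate, mpc, mhz, util):.2f}x")
```

With these placeholder numbers the MCU has ample headroom for keyword spotting but falls far short for the vision model, which only the vector-equipped application core can sustain.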

Energy efficiency metrics, particularly performance per watt, offer valuable insights when evaluating these tradeoffs. Recent RISC-V implementations have demonstrated significant improvements in this metric, with some specialized AI-enhanced cores achieving 2-5x better energy efficiency compared to general-purpose alternatives when executing neural network operations.
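
Performance per watt alone can mislead, because energy per inference is power multiplied by latency, and an idle core still draws power between inferences. The sketch below uses hypothetical power and latency figures to show a faster application-class core spending less energy per inference (race-to-idle) yet losing to an MCU once its idle power over a one-second period is included. Units: milliwatts × milliseconds = microjoules.

```python
def energy_per_inference_uj(active_mw: float, latency_ms: float) -> float:
    """Active energy for one inference; mW x ms = microjoules."""
    return active_mw * latency_ms


def energy_per_period_uj(active_mw: float, latency_ms: float,
                         idle_mw: float, period_ms: float) -> float:
    """Energy over one inference period, including idle time after the inference."""
    if latency_ms > period_ms:
        raise ValueError("inference does not fit in the period")
    idle_ms = period_ms - latency_ms
    return energy_per_inference_uj(active_mw, latency_ms) + idle_mw * idle_ms


if __name__ == "__main__":
    # Hypothetical figures: (active mW, latency ms, idle mW)
    mcu = (30.0, 200.0, 0.05)
    app = (1200.0, 4.0, 50.0)
    for name, (act, lat, idle) in (("mcu", mcu), ("app", app)):
        print(name,
              energy_per_inference_uj(act, lat),          # active energy only
              energy_per_period_uj(act, lat, idle, 1000.0))
```

Here the application core needs 4.8 mJ per inference versus 6 mJ for the MCU, but at one inference per second its assumed 50 mW idle floor raises its per-period energy to 54.6 mJ, roughly nine times the MCU's.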

Silicon area considerations extend beyond the core itself to include memory subsystems, which often dominate the overall footprint in AI-focused designs. Application-class cores typically require larger caches and more complex memory hierarchies, further increasing their area requirements but potentially reducing external memory access penalties during AI workload execution.

The optimal selection ultimately depends on deployment-specific constraints. For example, always-on sensing applications prioritize ultra-low power consumption, favoring MCU-class cores, while interactive AI applications with real-time requirements may necessitate application-class cores despite their higher power demands. Hybrid approaches incorporating both core types are emerging as promising solutions, enabling dynamic workload distribution based on performance and power requirements.
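
The power argument for such a hybrid can be sketched as a cascade: an always-on MCU-class core screens the input and wakes an application-class core only when it detects an event. This rough sketch assumes the application core is fully powered down between events and draws its active power during both wake-up and inference; all figures are hypothetical. Units: millijoules per second equal milliwatts.

```python
def event_energy_mj(app_active_mw: float, wake_ms: float, inference_ms: float) -> float:
    """Energy to wake the application core and run one heavy inference."""
    return app_active_mw * (wake_ms + inference_ms) / 1e3   # mW x ms = uJ, /1e3 -> mJ


def cascade_avg_power_mw(mcu_always_on_mw: float, events_per_hour: float,
                         app_active_mw: float, wake_ms: float,
                         inference_ms: float) -> float:
    """Average power of MCU screening plus on-demand application-core bursts."""
    per_event = event_energy_mj(app_active_mw, wake_ms, inference_ms)
    return mcu_always_on_mw + events_per_hour * per_event / 3600.0  # mJ/s == mW


def breakeven_events_per_hour(mcu_always_on_mw: float, app_always_on_mw: float,
                              per_event_mj: float) -> float:
    """Event rate at which the cascade draws as much as an always-on app core."""
    return (app_always_on_mw - mcu_always_on_mw) * 3600.0 / per_event_mj


if __name__ == "__main__":
    avg = cascade_avg_power_mw(2.0, events_per_hour=60, app_active_mw=1500,
                               wake_ms=500, inference_ms=100)
    print(f"cascade average: {avg:.1f} mW vs 1500 mW always-on")
    e = event_energy_mj(1500, 500, 100)
    print(f"break-even at {breakeven_events_per_hour(2.0, 1500, e):.0f} events/hour")
```

With one event per minute the cascade averages about 17 mW; it only loses its advantage when events are frequent enough to keep the application core busy almost continuously.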

Software Ecosystem Maturity and Development Tools

The software ecosystem surrounding RISC-V cores represents a critical factor when selecting between MCU and application-class implementations for edge AI applications. The RISC-V ecosystem has evolved significantly in recent years, though with notable differences in maturity between these two implementation classes.

For MCU-class RISC-V cores, the software ecosystem has reached reasonable maturity, with several established toolchains. GCC and LLVM/Clang provide solid support for the base ISA and the most common standard extensions. Embedded developers can also work in familiar frameworks such as the PlatformIO build environment and the Zephyr and FreeRTOS real-time operating systems. However, AI-specific libraries and tools for MCU-class cores remain relatively limited and often require manual optimization for specific hardware configurations.
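With GCC or Clang, the `-march` option selects the ISA subset of an MCU-class target and the `-mabi` option selects its calling convention. The ABI must match the floating-point extensions actually present. For example, soft-float RV32 uses `ilp32`, RV32 with F uses `ilp32f` and RV64 with D uses `lp64d`. The helper below is a hypothetical sketch that assembles these two flags from an extension list. It only handles the M, A, F, D and C single-letter extensions. Multi-letter extensions supplied by the caller are appended in the order given, and the caller is assumed to pass them in canonical order. Recent GCC releases also expect Zicsr and Zifencei to be named explicitly when CSR or fence.i instructions are used.

```python
CANONICAL_ORDER = "mafdc"  # subset of the canonical single-letter order handled here


def gcc_riscv_flags(xlen: int, letters: str, z_exts: tuple[str, ...] = (),
                    embedded: bool = False) -> list[str]:
    """Build -march/-mabi flags (hypothetical helper, not part of any toolchain).

    letters: single-letter extensions beyond the base I/E, e.g. "mac".
    z_exts:  multi-letter extensions, e.g. ("zicsr", "zifencei").
    """
    letters = letters.lower()
    unknown = set(letters) - set(CANONICAL_ORDER)
    if unknown:
        raise ValueError(f"unsupported in this sketch: {sorted(unknown)}")
    if xlen not in (32, 64):
        raise ValueError("xlen must be 32 or 64")
    if "d" in letters and "f" not in letters:
        raise ValueError("D requires F")
    if embedded and (xlen != 32 or "f" in letters):
        raise ValueError("this sketch only handles soft-float RV32E")

    # Emit single-letter extensions in canonical order, then multi-letter ones.
    ordered = "".join(ch for ch in CANONICAL_ORDER if ch in letters)
    base = "e" if embedded else "i"
    march = f"rv{xlen}{base}{ordered}" + "".join(f"_{z}" for z in z_exts)

    # Pick the hard-float ABI matching the widest FP extension present.
    if embedded:
        mabi = "ilp32e"
    else:
        fp = "d" if "d" in letters else "f" if "f" in letters else ""
        mabi = ("ilp32" if xlen == 32 else "lp64") + fp
    return [f"-march={march}", f"-mabi={mabi}"]


if __name__ == "__main__":
    print(" ".join(gcc_riscv_flags(32, "mac", ("zicsr", "zifencei"))))
```

For a typical RV32IMAC microcontroller this prints `-march=rv32imac_zicsr_zifencei -mabi=ilp32`.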

Application-class RISC-V cores benefit from more comprehensive software support, including full Linux distributions such as Fedora RISC-V and Debian RISC-V. These environments enable access to established AI frameworks like TensorFlow Lite and PyTorch, which have been ported to RISC-V with varying degrees of optimization. The SiFive Performance series and StarFive's VisionFive boards have catalyzed significant improvements in this ecosystem segment.

Development tools show similar divergence between the two classes. MCU-class cores typically rely on vendor-specific SDKs with basic debugging capabilities, while application-class implementations leverage more sophisticated tools including advanced profilers and trace analyzers. SEGGER J-Link and OpenOCD provide debugging support across both categories, though with more comprehensive features for application-class systems.

Extension fragmentation remains a persistent challenge for the RISC-V ecosystem. Different vendors implement varying combinations of standard and custom extensions, which complicates software portability. The issue is more severe for MCU implementations, where proprietary DSP and AI-acceleration extensions are common. To address this, RISC-V International has ratified the standard vector extension (V 1.0, including the Zve* subsets intended for embedded cores) and the bit-manipulation extensions (Zba, Zbb, Zbc, Zbs). It also publishes profiles such as RVA23 that define a common extension baseline for application-class software.
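A practical guard against extension fragmentation is to check, before deploying a prebuilt kernel library, that the target's ISA string contains every extension the library was compiled for. The sketch below parses ISA strings of the kind used by toolchains and reported by Linux in `/proc/cpuinfo` (e.g. `rv64imafdc_zicsr_zifencei`) into a set of extension names. The function names and the required set are hypothetical. Version suffixes are not handled, and implied extensions, such as the Zve* subsets implied by V, are not expanded.

```python
import re

_ISA_RE = re.compile(r"rv(32|64|128)([a-z]+)((?:_[a-z0-9]+)*)")


def parse_isa(isa: str) -> tuple[int, set[str]]:
    """Split e.g. 'rv32imac_zicsr_zve32x' into (32, {'i','m','a','c','zicsr','zve32x'})."""
    m = _ISA_RE.fullmatch(isa.strip().lower())
    if m is None:
        raise ValueError(f"unrecognised ISA string: {isa!r}")
    xlen, letters, multi = int(m.group(1)), m.group(2), m.group(3)
    exts: set[str] = set()
    if letters[0] == "g":
        # G is shorthand for IMAFD plus Zicsr and Zifencei.
        exts |= set("imafd") | {"zicsr", "zifencei"}
        letters = letters[1:]
    elif letters[0] not in "ie":
        raise ValueError("single-letter part must start with i, e or g")
    exts |= set(letters)
    # Multi-letter extensions are underscore-separated after the single letters.
    exts |= {name for name in multi.split("_") if name}
    return xlen, exts


def missing_extensions(target_isa: str, required: set[str]) -> list[str]:
    """Extensions a binary needs that the target does not advertise, sorted."""
    _, have = parse_isa(target_isa)
    return sorted({r.lower() for r in required} - have)


if __name__ == "__main__":
    # Hypothetical kernel library built for RV32IMAC + Zicsr + Zve32x.
    needs = {"i", "m", "a", "c", "zicsr", "zve32x"}
    print(missing_extensions("rv32imac_zicsr", needs))
    print(missing_extensions("rv32imac_zicsr_zve32x", needs))
```

The first check reports `['zve32x']`, which flags a core without embedded vector support. The second returns an empty list.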

Community support and documentation quality vary significantly across the ecosystem. Well-established vendors like SiFive and Microchip offer comprehensive documentation and support channels, while newer market entrants may provide less robust resources. This disparity affects development timelines and should factor into core selection decisions for time-sensitive edge AI projects.
