Deep learning frameworks for robust Brain-Computer Interface feature extraction

SEP 2, 2025 | 9 MIN READ

BCI Deep Learning Background and Objectives

Brain-Computer Interfaces (BCIs) represent a transformative technology that enables direct communication between the brain and external devices, bypassing conventional neuromuscular pathways. The evolution of BCIs has been marked by significant advancements in signal acquisition, processing techniques, and interpretation algorithms. Initially developed for medical applications to assist individuals with severe motor disabilities, BCIs have expanded into diverse fields including gaming, education, security, and cognitive enhancement.

The integration of deep learning with BCI systems marks a pivotal advancement in this domain. Traditional BCI systems relied heavily on hand-crafted feature extraction methods, which often struggled with the complex, non-stationary nature of neurophysiological signals. These conventional approaches frequently required extensive domain expertise and manual parameter tuning, resulting in systems that lacked robustness across different users and environmental conditions.

Deep learning frameworks offer a promising alternative by automatically learning hierarchical representations from raw or minimally processed neural data. Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and more recently, transformer architectures have demonstrated remarkable capabilities in extracting meaningful patterns from high-dimensional, noisy neurophysiological signals such as electroencephalography (EEG), electrocorticography (ECoG), and functional magnetic resonance imaging (fMRI).

The technical trajectory of BCI development has evolved from simple binary classification tasks to increasingly sophisticated multi-class and continuous control paradigms. Early systems focused primarily on detecting specific event-related potentials, while contemporary approaches aim to decode complex intentions and emotional states. This evolution parallels advancements in deep learning architectures, which have progressively improved in their ability to model temporal dependencies and spatial patterns in neural data.

The primary objective of implementing deep learning frameworks for BCI feature extraction is to enhance system robustness, adaptability, and performance. Specifically, these frameworks aim to address critical challenges including: reducing calibration requirements through transfer learning approaches; improving signal-to-noise ratio in real-world environments; accommodating non-stationarity in neural signals over time; and facilitating generalization across different users and mental states.

Furthermore, there is growing interest in developing end-to-end deep learning systems that can directly map raw neural signals to control commands, eliminating the need for explicit feature engineering. Such systems promise to simplify BCI implementation while potentially uncovering novel neural patterns that conventional approaches might overlook. The ultimate goal is to create intuitive, reliable interfaces that can function effectively in dynamic real-world environments, bringing BCIs closer to widespread practical adoption beyond controlled laboratory settings.

Market Analysis for BCI Applications

The global Brain-Computer Interface (BCI) market is experiencing significant growth, driven by advancements in deep learning frameworks for feature extraction. Current market valuations place the BCI sector at approximately 1.9 billion USD in 2023, with projections indicating a compound annual growth rate of 12-15% through 2030, potentially reaching 4.5 billion USD by the end of the decade.
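
The projection above can be sanity-checked with the standard compound-growth formula. The sketch below assumes a 2023 base year and a 2030 horizon (seven compounding periods, which is an assumption, since the text says only "by the end of the decade"); the function name `project` is illustrative.

```python
def project(base_usd_bn: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a fixed annual growth rate."""
    return base_usd_bn * (1.0 + cagr) ** years

base_2023 = 1.9          # billion USD, figure quoted in the text
years = 2030 - 2023      # assumed horizon: 7 compounding periods

low = project(base_2023, 0.12, years)    # lower bound of the quoted CAGR range
high = project(base_2023, 0.15, years)   # upper bound of the quoted CAGR range
print(f"12% CAGR -> {low:.2f} bn USD, 15% CAGR -> {high:.2f} bn USD")
```

Under these assumptions the two bounds come out at roughly 4.2 and 5.1 billion USD, so the quoted 4.5 billion USD figure is consistent with the stated growth range.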

Healthcare applications currently dominate the BCI market landscape, accounting for roughly 60% of total market share. Within this segment, neurological disorder management represents the largest application area, particularly for conditions such as epilepsy, Parkinson's disease, and stroke rehabilitation. The robust feature extraction capabilities of deep learning frameworks have substantially improved diagnostic accuracy and treatment efficacy in these domains.

The gaming and entertainment sector has emerged as the fastest-growing application segment, with an estimated growth rate of 20% annually. Consumer-grade BCI devices with improved signal processing have begun penetrating mainstream markets, creating new opportunities for immersive gaming experiences and novel forms of digital interaction.

Military and defense applications constitute a smaller but strategically significant market segment, focusing primarily on enhanced soldier performance monitoring and advanced communication systems. These applications demand particularly robust feature extraction methods to function reliably in high-stress environments.

Geographically, North America leads the market with approximately 45% share, followed by Europe (25%) and Asia-Pacific (20%), leaving roughly 10% for the rest of the world. Within Asia-Pacific, China and India show the fastest growth, driven by substantial investments in neurotechnology research and development.

Key market drivers include increasing prevalence of neurological disorders, growing adoption of BCI technology in rehabilitation, and expanding applications in consumer electronics. The integration of artificial intelligence and deep learning frameworks has significantly enhanced signal processing capabilities, making BCI systems more accessible and functional for everyday applications.

Market barriers remain substantial, including high development costs, regulatory hurdles, and concerns regarding data privacy and security. The average development cost for advanced BCI systems incorporating robust deep learning frameworks for feature extraction typically exceeds 2 million USD, creating significant entry barriers for smaller companies.

Customer adoption patterns indicate growing acceptance of non-invasive BCI technologies, with consumer willingness to adopt these technologies increasing by approximately 15% annually. This trend is particularly pronounced among younger demographics and technology enthusiasts, suggesting potential for mainstream adoption within the next decade.

Current Challenges in BCI Feature Extraction

Despite significant advancements in deep learning frameworks for Brain-Computer Interfaces (BCI), feature extraction remains a critical bottleneck in developing robust systems. The inherent non-stationarity of brain signals presents a fundamental challenge, as neural patterns exhibit substantial variability across sessions and subjects. This temporal instability undermines the generalizability of trained models, requiring frequent recalibration and limiting practical deployment scenarios.

Signal-to-noise ratio issues continue to plague BCI systems, particularly in non-invasive approaches like EEG. Artifacts from muscle movements, eye blinks, and environmental electrical interference contaminate the neural signals of interest. While deep learning architectures demonstrate promising noise-handling capabilities, they often require extensive training data to effectively distinguish signal from noise—a requirement that conflicts with the limited datasets typically available in BCI research.
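
To make the signal-to-noise ratio concrete, here is a minimal sketch using the usual decibel definition, 10 · log10(P_signal / P_noise). It uses synthetic data: a 10 Hz sinusoid stands in for a neural rhythm and Gaussian noise stands in for artifacts. The sampling rate and amplitudes are illustrative assumptions, and in real EEG the clean signal is not directly observable, so SNR has to be estimated rather than computed this way.

```python
import math
import random

def power(x):
    """Mean squared amplitude of a sequence of samples."""
    return sum(v * v for v in x) / len(x)

def snr_db(signal, noise):
    """Signal-to-noise ratio in decibels: 10 * log10(P_signal / P_noise)."""
    return 10.0 * math.log10(power(signal) / power(noise))

random.seed(0)
fs = 250                                   # assumed sampling rate in Hz
t = [i / fs for i in range(2 * fs)]        # two seconds of samples
rhythm = [math.sin(2 * math.pi * 10 * ti) for ti in t]    # 10 Hz component, amplitude 1
artifact = [random.gauss(0.0, 3.0) for _ in t]            # stand-in for noise and artifacts

print(f"SNR = {snr_db(rhythm, artifact):.1f} dB")
```

A negative value in decibels means the contaminating activity carries more power than the component of interest, which is the regime the paragraph above describes.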

Inter-subject variability represents another significant hurdle. Anatomical differences in brain structure, coupled with individual variations in cognitive processing, result in highly personalized neural signatures. Current deep learning frameworks struggle to develop universal feature extractors that can accommodate this diversity without subject-specific calibration, limiting scalability for widespread applications.

The interpretability deficit in deep learning models poses particular concerns for BCI applications, especially in medical contexts. The "black box" nature of complex neural networks obscures understanding of which neurophysiological features drive predictions, raising both scientific and ethical questions about deployment in sensitive applications like assistive technology or clinical diagnosis.

Computational efficiency remains problematic for real-time BCI applications. While deep learning approaches excel at feature extraction accuracy, many sophisticated architectures demand substantial computational resources, creating implementation barriers for portable or wearable BCI systems where power consumption and processing latency are critical constraints.

Data scarcity continues to undermine robust feature extraction development. High-quality BCI datasets are limited by expensive collection procedures, participant fatigue, and ethical considerations. This scarcity particularly impacts deep learning approaches, which typically require massive training datasets to achieve optimal performance and generalization capabilities.

The multi-modal integration challenge persists as researchers attempt to combine EEG with other neuroimaging techniques or physiological signals. While such fusion potentially enhances feature extraction robustness, current deep learning frameworks lack standardized architectures for effectively integrating heterogeneous data streams with different temporal and spatial resolutions.

State-of-the-Art Deep Learning Frameworks for BCI

01 Deep learning frameworks for feature extraction in computer vision

Various deep learning frameworks are employed for robust feature extraction in computer vision applications. These frameworks utilize convolutional neural networks (CNNs) and other architectures to automatically learn hierarchical representations from visual data. The extracted features are robust to variations in lighting, pose, and other environmental factors, making them suitable for tasks such as object detection, image classification, and scene understanding.

02 Feature extraction techniques for natural language processing

Deep learning frameworks provide robust feature extraction capabilities for natural language processing tasks. These frameworks employ techniques such as word embeddings, recurrent neural networks, and transformer architectures to capture semantic and syntactic features from text data. The extracted features enable applications like sentiment analysis, machine translation, and text classification with improved accuracy and generalization ability.

03 Transfer learning and pre-trained models for feature extraction

Transfer learning approaches leverage pre-trained deep learning models to extract robust features from limited data. By utilizing models trained on large datasets, these frameworks can extract meaningful representations that generalize well to new domains or tasks. This approach reduces the need for extensive training data and computational resources while maintaining high-quality feature extraction capabilities for various applications. Fine-tuning techniques allow adaptation of these pre-trained feature extractors to specific domains while maintaining their robustness and discriminative power.

04 Robust feature extraction for time-series and sequential data

Deep learning frameworks designed for time-series and sequential data analysis employ specialized architectures for robust feature extraction. These include recurrent neural networks, long short-term memory networks, and temporal convolutional networks that capture temporal dependencies and patterns. The extracted features are robust to noise, missing values, and temporal variations, making them valuable for applications in finance, healthcare, and industrial monitoring.

05 Attention mechanisms and transformers for enhanced feature extraction

Advanced deep learning frameworks incorporate attention mechanisms and transformer architectures to enhance feature extraction capabilities. These approaches allow models to focus on relevant parts of the input data while ignoring irrelevant information, dynamically weighting the importance of different features based on context, which results in more discriminative and robust features. Self-attention and cross-attention enable capturing long-range dependencies and contextual information, improving performance across various domains including vision, language, and multimodal applications.

- Adversarial training for robust feature extraction: Adversarial training methods enhance the robustness of feature extraction in deep learning frameworks. By exposing models to adversarial examples during training, these approaches learn to extract features that are invariant to small perturbations and attacks. This results in more reliable feature representations that maintain their discriminative power even under challenging conditions or when processing out-of-distribution samples.

Leading Organizations in BCI Deep Learning Research

The Brain-Computer Interface (BCI) market for deep learning feature extraction is in a growth phase, characterized by increasing research activity and commercial interest. The market is projected to expand significantly due to advancements in neural signal processing and AI applications. Technologically, we observe varying maturity levels among key players: academic institutions like California Institute of Technology, Xidian University, and Sichuan University are driving fundamental research, while companies including Intel, IBM, and Precision Neuroscience are commercializing applications. Elucid Bioimaging and Kepler Vision Technologies represent specialized players developing niche applications. The competitive landscape features a blend of tech giants investing in neural interfaces alongside specialized startups and research-focused universities, with increasing cross-sector collaboration accelerating innovation in robust feature extraction techniques.

California Institute of Technology

Technical Solution: Caltech has developed sophisticated deep learning frameworks for BCI feature extraction through its interdisciplinary research initiatives. Their approach combines principles from computational neuroscience with state-of-the-art deep learning techniques to create more biologically plausible models. Caltech's framework employs hierarchical convolutional neural networks that mimic the processing hierarchy of the visual cortex, enabling more effective extraction of features from visual evoked potentials. Their system incorporates advanced regularization techniques specifically designed for neural data, reducing overfitting when training on limited BCI datasets. Caltech researchers have implemented self-supervised contrastive learning methods that can leverage unlabeled neural recordings to improve feature representations. The framework includes specialized attention mechanisms that can dynamically focus on the most relevant aspects of neural signals based on context. Additionally, Caltech has developed interpretable deep learning models that provide insights into which neural patterns contribute to specific decoded intentions, enhancing trust and enabling refinement of BCI systems.

Strengths: Cutting-edge theoretical foundations; strong integration of neuroscience principles with AI; innovative approaches to model interpretability. Weaknesses: Potentially more focused on fundamental research than practical applications; may require significant computational expertise to implement; possibly less optimized for clinical deployment than more application-focused solutions.
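
The self-supervised contrastive learning mentioned in the technical summary can be illustrated with the widely used InfoNCE objective. This is a generic sketch, not Caltech's implementation: the source does not say which contrastive loss is used, and the embeddings, temperature, and function names here are illustrative assumptions. Each anchor embedding is pulled toward its own positive (for example, an augmented view of the same recording segment) and pushed away from the other positives in the batch.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def info_nce(anchors, positives, temperature=0.1):
    """Mean InfoNCE loss: anchor i should match positive i, not the other positives."""
    total = 0.0
    for i, a in enumerate(anchors):
        logits = [cosine(a, p) / temperature for p in positives]
        m = max(logits)                                   # subtract max for numerical stability
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denom - logits[i]                    # -log softmax probability of the true pair
    return total / len(anchors)

views_a = [[1.0, 0.0], [0.0, 1.0]]     # toy embeddings of two segments
views_b = [[1.0, 0.0], [0.0, 1.0]]     # embeddings of their augmented views
print(info_nce(views_a, views_b))              # matched pairs: loss near zero
print(info_nce(views_a, views_b[::-1]))        # mismatched pairs: much larger loss
```

No labels appear anywhere in the loss, which is what lets this family of methods exploit unlabeled recordings.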

The Regents of the University of California

Technical Solution: The University of California system has pioneered several deep learning frameworks for robust BCI feature extraction through its various campuses and research centers. Their approach combines advanced signal processing techniques with deep learning architectures specifically optimized for neural data. UC researchers have developed adaptive filtering methods that can effectively remove artifacts from EEG signals in real-time, significantly improving the signal-to-noise ratio for subsequent feature extraction. Their framework incorporates spatiotemporal convolutional neural networks that can simultaneously capture both spatial and temporal patterns in brain activity, leading to more robust feature extraction. UC's system also implements transfer learning techniques that allow models trained on large datasets to be fine-tuned for individual users with minimal calibration data. The framework includes uncertainty quantification methods that provide confidence estimates for decoded intentions, enhancing reliability in clinical applications. Additionally, UC researchers have developed specialized attention mechanisms that can focus on the most informative frequency bands and electrode locations for specific BCI tasks.

Strengths: Strong academic research foundation; extensive peer-reviewed publications validating approaches; collaborative development across multiple UC campuses. Weaknesses: Potentially less commercialized than industry solutions; may require significant expertise to implement; research prototypes might not be as user-friendly as commercial systems.
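
The idea of attaching a confidence estimate to each decoded intention can be sketched very simply. The example below is not UC's method (the source does not specify one); it uses the entropy of a softmax output as a rough confidence proxy and abstains when the classifier is too uncertain. The threshold `max_entropy` and the function names are illustrative assumptions.

```python
import math

def softmax(logits):
    """Convert raw classifier scores to probabilities."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def normalized_entropy(probs):
    """Shannon entropy scaled to [0, 1]; 0 is fully confident, 1 is uniform."""
    h = -sum(p * math.log(p) for p in probs if p > 0)
    return h / math.log(len(probs))

def decode_or_abstain(logits, max_entropy=0.5):
    """Return the predicted class index, or None when the output is too uncertain."""
    probs = softmax(logits)
    if normalized_entropy(probs) > max_entropy:
        return None
    return probs.index(max(probs))

print(decode_or_abstain([5.0, 0.0, 0.0, 0.0]))   # confident prediction
print(decode_or_abstain([0.1, 0.0, 0.0, 0.0]))   # near-uniform output, so the decoder abstains
```

Softmax entropy is a crude estimate and is often poorly calibrated; approaches such as ensembles or Bayesian approximations are common alternatives, at higher computational cost.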

Key Innovations in Robust Feature Extraction Techniques

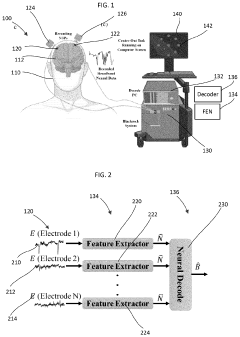

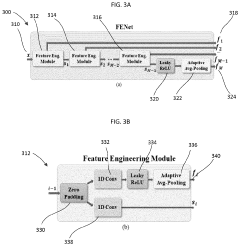

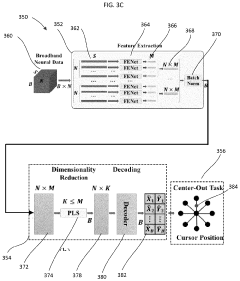

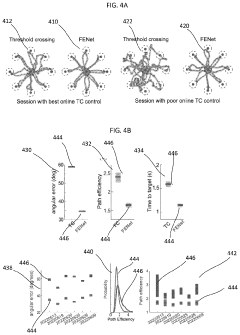

Features extraction network for estimating neural activity from electrical recordings

Patent | Pending | US20240046071A1

Innovation

- A feature extraction network (FENet) is developed that uses a multi-layer 1D convolutional architecture to learn an optimized mapping from electrical signals to neural features, employing convolutional filters, activation functions, and pooling layers, with shared parameters across electrodes to generalize to new recordings and improve feature extraction accuracy.
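
The structure described above (stacked 1D convolutions, activations, and pooling, with the same parameters reused for every electrode) can be sketched in a few lines. This is an illustration of the general pattern, not the patented FENet: the kernel sizes, the choice of ReLU and max pooling, the random untrained weights, and the final time-averaging step are all assumptions made for the example.

```python
import random

def conv1d(x, kernel):
    """Valid-mode 1D convolution (cross-correlation) of a single channel."""
    k = len(kernel)
    return [sum(x[i + j] * kernel[j] for j in range(k)) for i in range(len(x) - k + 1)]

def relu(x):
    """Element-wise rectified linear activation."""
    return [max(0.0, v) for v in x]

def max_pool(x, size):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(x[i:i + size]) for i in range(0, len(x) - size + 1, size)]

def extract_features(recording, kernels, pool=2):
    """Apply the SAME stack of kernels to every electrode (shared parameters)."""
    features = []
    for channel in recording:                   # one list of samples per electrode
        h = channel
        for kernel in kernels:                  # conv -> activation -> pooling per layer
            h = max_pool(relu(conv1d(h, kernel)), pool)
        features.append(sum(h) / len(h))        # collapse time to one feature per electrode
    return features

random.seed(0)
kernels = [[random.uniform(-1, 1) for _ in range(5)] for _ in range(2)]   # untrained, illustrative
recording = [[random.gauss(0, 1) for _ in range(64)] for _ in range(4)]   # 4 electrodes x 64 samples
print(extract_features(recording, kernels))
```

Because the kernels do not depend on the electrode index, the same function runs unchanged on a recording with a different number of electrodes, which is the property the patent summary credits for generalization to new recordings.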

Deep feature extraction and training tools and associated methods

Patent | Active | US11568176B1

Innovation

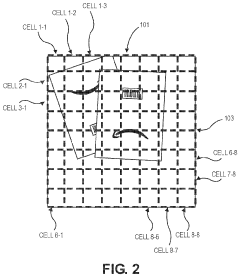

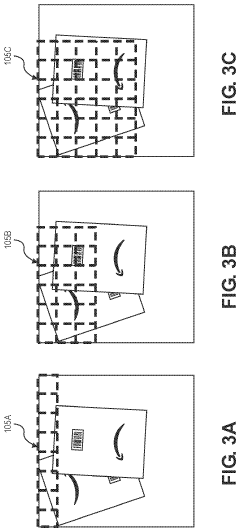

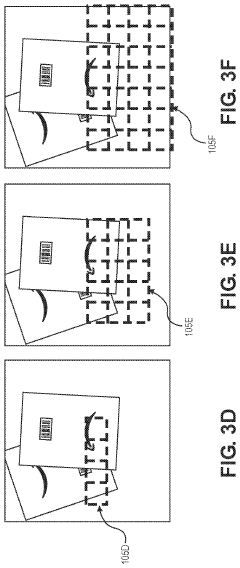

- The implementation of deep feature extraction and training tools that tessellate imaging data into cells, mask subsets, and process masked outputs to identify and visualize deep features, allowing for the understanding and modification of features that lead to correct or incorrect decisions.
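
The tessellate-and-mask idea resembles occlusion sensitivity analysis, which can be sketched as follows. This is a generic illustration under stated assumptions, not the patented tool: the image is a plain nested list, `toy_score` is a hypothetical stand-in for a trained model, and masking is done by zero-filling one cell at a time.

```python
def tessellate(height, width, cell):
    """Yield (row, col) origins of non-overlapping square cells covering the image."""
    for r in range(0, height, cell):
        for c in range(0, width, cell):
            yield r, c

def mask_cell(image, r0, c0, cell, fill=0.0):
    """Return a copy of the image with one cell replaced by a fill value."""
    out = [row[:] for row in image]
    for r in range(r0, min(r0 + cell, len(image))):
        for c in range(c0, min(c0 + cell, len(image[0]))):
            out[r][c] = fill
    return out

def sensitivity_map(image, score_fn, cell):
    """Score drop caused by masking each cell; a larger drop marks a more influential cell."""
    base = score_fn(image)
    return {(r, c): base - score_fn(mask_cell(image, r, c, cell))
            for r, c in tessellate(len(image), len(image[0]), cell)}

def toy_score(image):
    """Hypothetical model that responds only to the top-left 2x2 patch."""
    return sum(image[r][c] for r in range(2) for c in range(2))

image = [[1.0] * 4 for _ in range(4)]
drops = sensitivity_map(image, toy_score, cell=2)
print(max(drops, key=drops.get))   # → (0, 0)
```

Visualizing the resulting map shows which regions a model relies on, which is the kind of insight into correct and incorrect decisions the patent summary describes.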

Ethical and Privacy Considerations in BCI Technology

The integration of deep learning frameworks into Brain-Computer Interface (BCI) technology raises significant ethical and privacy concerns that must be addressed before widespread adoption. Neural data collected through BCI systems contains highly sensitive information about users' cognitive processes, emotional states, and potentially even thoughts or intentions. This unprecedented access to neural activity creates novel privacy vulnerabilities that traditional data protection frameworks may be inadequate to address.

Primary concerns include the potential for unauthorized access to neural data during transmission or storage. Deep learning models used for feature extraction may inadvertently capture more information than strictly necessary for the intended BCI application, creating risks of function creep where data collected for one purpose could be repurposed for another without explicit consent. The black-box nature of many deep learning algorithms further complicates this issue, as users may not fully understand what information is being extracted from their neural signals.

Informed consent presents another critical challenge. Users may not comprehend the full implications of sharing neural data, especially when deep learning systems continue to evolve and find new patterns in historical data. This raises questions about whether truly informed consent is possible when the future capabilities of these systems remain unknown. Additionally, the potential for algorithmic bias in deep learning frameworks could lead to discriminatory outcomes if BCI systems perform differently across demographic groups.

The possibility of neural data being used for identification purposes raises concerns about anonymity and surveillance. Research has demonstrated that EEG signals contain unique identifiers similar to fingerprints, which could enable tracking across different contexts. This capability, combined with deep learning's pattern recognition strengths, creates unprecedented challenges for maintaining user privacy and autonomy.

Regulatory frameworks must evolve to address these novel challenges. Current data protection regulations like GDPR in Europe provide some safeguards, but specialized guidelines for neural data protection are needed. Principles such as data minimization, purpose limitation, and privacy-by-design should be incorporated into BCI development processes. Technical solutions including differential privacy, federated learning, and secure multi-party computation offer promising approaches to enhance privacy while maintaining the utility of deep learning for feature extraction.
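
Of the technical safeguards listed above, differential privacy is the easiest to show compactly. The sketch below releases the mean of a bounded per-user feature using the Laplace mechanism. The feature values are synthetic, and the bounds, the epsilon value, and the function names are illustrative assumptions; a production system would also need careful privacy-budget accounting across repeated queries.

```python
import random

def laplace_noise(scale, rng):
    """Laplace(0, scale) sample, drawn as the difference of two exponential variates."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_mean(values, lower, upper, epsilon, rng):
    """Epsilon-differentially-private mean of values clipped to [lower, upper]."""
    clipped = [min(max(v, lower), upper) for v in values]
    sensitivity = (upper - lower) / len(clipped)    # largest change from altering one record
    return sum(clipped) / len(clipped) + laplace_noise(sensitivity / epsilon, rng)

rng = random.Random(0)
band_power = [rng.uniform(0.0, 10.0) for _ in range(1000)]   # synthetic per-user feature values
print(private_mean(band_power, 0.0, 10.0, epsilon=1.0, rng=rng))
```

Smaller epsilon values give stronger privacy but noisier statistics, which is the privacy-utility trade-off the paragraph alludes to.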

Ultimately, balancing the tremendous potential benefits of deep learning-enhanced BCI technology with robust ethical and privacy protections will require multidisciplinary collaboration between neuroscientists, computer scientists, ethicists, legal experts, and end-users. Transparent development practices and ongoing stakeholder engagement will be essential to ensure these powerful technologies develop in ways that respect human rights and dignity.

Primary concerns include the potential for unauthorized access to neural data during transmission or storage. Deep learning models used for feature extraction may inadvertently capture more information than strictly necessary for the intended BCI application, creating risks of function creep where data collected for one purpose could be repurposed for another without explicit consent. The black-box nature of many deep learning algorithms further complicates this issue, as users may not fully understand what information is being extracted from their neural signals.

Informed consent presents another critical challenge. Users may not comprehend the full implications of sharing neural data, especially when deep learning systems continue to evolve and find new patterns in historical data. This raises questions about whether truly informed consent is possible when the future capabilities of these systems remain unknown. Additionally, the potential for algorithmic bias in deep learning frameworks could lead to discriminatory outcomes if BCI systems perform differently across demographic groups.

The possibility of neural data being used for identification purposes raises concerns about anonymity and surveillance. Research has demonstrated that EEG signals contain unique identifiers similar to fingerprints, which could enable tracking across different contexts. This capability, combined with deep learning's pattern recognition strengths, creates unprecedented challenges for maintaining user privacy and autonomy.

Regulatory frameworks must evolve to address these novel challenges. Current data protection regulations like GDPR in Europe provide some safeguards, but specialized guidelines for neural data protection are needed. Principles such as data minimization, purpose limitation, and privacy-by-design should be incorporated into BCI development processes. Technical solutions including differential privacy, federated learning, and secure multi-party computation offer promising approaches to enhance privacy while maintaining the utility of deep learning for feature extraction.
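To illustrate how federated learning and differential-privacy-style noise can be combined, the sketch below averages per-client model updates after clipping each update's L2 norm and adding Gaussian noise to the result. It is a simplified illustration under stated assumptions: the function names and parameters (`max_norm`, `noise_std`) are hypothetical, updates are plain lists of floats, and calibrating `noise_std` to a formal privacy guarantee is not shown.

```python
import math
import random


def clip_update(update, max_norm):
    """Scale an update vector so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    if norm <= max_norm or norm == 0.0:
        return list(update)
    scale = max_norm / norm
    return [v * scale for v in update]


def private_federated_average(client_updates, max_norm, noise_std, rng):
    """Average clipped client updates, then add Gaussian noise per coordinate.

    Raw neural recordings never leave the clients; only updates are shared.
    noise_std is an assumed parameter, not derived from a privacy budget here.
    """
    clipped = [clip_update(u, max_norm) for u in client_updates]
    n_clients = len(clipped)
    dim = len(clipped[0])
    mean = [sum(u[i] for u in clipped) / n_clients for i in range(dim)]
    return [m + rng.gauss(0.0, noise_std) for m in mean]


# Hypothetical updates from three users' devices (two model parameters each).
rng = random.Random(0)
updates = [[3.0, 4.0], [0.0, 0.5], [-0.2, 0.1]]
aggregated = private_federated_average(updates, max_norm=1.0, noise_std=0.05, rng=rng)
print(aggregated)
```

Clipping bounds how much any single user's data can shift the shared model, which is what makes the added noise meaningful. Larger noise gives stronger protection at the cost of feature-extraction accuracy.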

Ultimately, balancing the tremendous potential benefits of deep learning-enhanced BCI technology with robust ethical and privacy protections will require multidisciplinary collaboration between neuroscientists, computer scientists, ethicists, legal experts, and end-users. Transparent development practices and ongoing stakeholder engagement will be essential to ensure these powerful technologies develop in ways that respect human rights and dignity.

Clinical Validation and Regulatory Pathways

Clinical validation is a critical milestone in developing Brain-Computer Interface (BCI) technologies that use deep learning frameworks for feature extraction. Validation typically proceeds in phases: proof-of-concept studies in controlled laboratory environments, then small-scale clinical trials, and eventually larger randomized controlled trials. Current validation protocols measure signal quality, classification accuracy, information transfer rate, and user experience across diverse patient populations and environmental conditions.
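Of the metrics listed above, information transfer rate is the one most often reduced to a formula. A widely used definition (commonly attributed to Wolpaw and colleagues) gives the bits conveyed per selection for N equally likely targets and accuracy P as log2(N) + P log2(P) + (1 - P) log2((1 - P) / (N - 1)). The sketch below implements that definition; the function names and example numbers are hypothetical, and the formula assumes errors are spread evenly over the wrong targets.

```python
import math


def bits_per_selection(n_classes, accuracy):
    """Bits per selection for n_classes equiprobable targets at a given accuracy."""
    if n_classes < 2:
        raise ValueError("n_classes must be at least 2")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    bits = math.log2(n_classes)
    if accuracy > 0.0:
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        error = 1.0 - accuracy
        bits += error * math.log2(error / (n_classes - 1))
    return bits


def itr_bits_per_minute(n_classes, accuracy, seconds_per_selection):
    """Information transfer rate in bits per minute."""
    if seconds_per_selection <= 0:
        raise ValueError("seconds_per_selection must be positive")
    return bits_per_selection(n_classes, accuracy) * 60.0 / seconds_per_selection


# Hypothetical example: a 4-class system at 85% accuracy, one selection every 4 s.
print(itr_bits_per_minute(4, 0.85, 4.0))
```

Because the rate depends on both accuracy and selection time, a model that is slightly more accurate but needs longer signal windows can still score lower on this metric.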

Regulatory frameworks for BCI technologies vary significantly across global markets, creating a complex landscape for developers. In the United States, the FDA has established a regulatory pathway for BCI devices under medical device regulations, with classification depending on invasiveness and intended use. Non-invasive BCI systems for communication or environmental control typically fall under Class II devices, requiring 510(k) clearance, while invasive systems may require the more rigorous Premarket Approval (PMA) process.

The European Union's Medical Device Regulation (MDR) presents additional requirements, particularly regarding clinical evidence and post-market surveillance. Deep learning components in BCI systems introduce unique regulatory challenges related to algorithm transparency, validation of training data, and continuous performance monitoring. Regulatory bodies increasingly require demonstration of algorithm stability and reliability across diverse populations.

Key performance indicators for clinical validation include signal-to-noise ratio improvements, feature extraction robustness across sessions, classification accuracy in real-world environments, and adaptation to user variability. Studies indicate that deep learning approaches must demonstrate at least 20% improvement in classification accuracy over traditional methods to justify implementation costs and complexity.
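The text does not say whether the 20% figure is an absolute gain in percentage points or a relative gain over the baseline, and the two readings differ substantially. The short sketch below shows the arithmetic for both; the accuracy values are hypothetical.

```python
def absolute_gain(baseline_acc, candidate_acc):
    """Accuracy gain as a difference of fractions (0.14 means 14 percentage points)."""
    return candidate_acc - baseline_acc


def relative_gain(baseline_acc, candidate_acc):
    """Accuracy gain as a fraction of the baseline accuracy."""
    if baseline_acc <= 0:
        raise ValueError("baseline_acc must be positive")
    return (candidate_acc - baseline_acc) / baseline_acc


# Hypothetical numbers: a traditional pipeline at 70% and a deep model at 84%.
baseline, candidate = 0.70, 0.84
print(absolute_gain(baseline, candidate))  # about 0.14, i.e. 14 percentage points
print(relative_gain(baseline, candidate))  # about 0.20, i.e. a 20% relative gain
```

Under the relative reading this hypothetical model meets a 20% threshold, while under the absolute reading it falls short, so a validation protocol should state which definition it applies.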

Emerging regulatory considerations specifically address the adaptive nature of deep learning algorithms in BCI applications. The FDA's proposed framework for AI/ML-based Software as a Medical Device (SaMD) may significantly impact regulatory pathways for advanced BCI systems. This framework emphasizes a "predetermined change control plan" that allows for algorithm updates while maintaining safety and effectiveness.

Industry-academic partnerships have emerged as effective models for navigating the clinical validation process, combining academic rigor with industry resources. Notable examples include collaborations between neural engineering laboratories and medical device manufacturers that have successfully progressed through regulatory pathways while maintaining scientific integrity and addressing patient needs.
