How Neuromorphic Materials are Changing Computing Technologies

OCT 27, 2025 | 9 MIN READ

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. The evolution of this field began in the late 1980s when Carver Mead first introduced the concept of neuromorphic engineering, proposing electronic systems that mimic neuro-biological architectures. This marked the beginning of a journey to transcend the limitations of traditional von Neumann computing architectures, which have increasingly struggled with energy efficiency and processing speed as Moore's Law approaches its physical limits.

The 1990s and early 2000s witnessed foundational research in neuromorphic hardware, primarily focused on silicon-based implementations. However, the field remained largely academic until the 2010s, when the convergence of big data, advanced machine learning algorithms, and the slowing of traditional semiconductor scaling created renewed interest in alternative computing paradigms.

Recent years have seen a significant acceleration in neuromorphic material development. The integration of novel materials such as phase-change memory (PCM), resistive random-access memory (RRAM), and memristors has enabled more efficient implementation of synaptic functions. These materials exhibit properties that allow them to change their resistance or conductance based on their history, mimicking the plasticity of biological synapses.
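The history dependence described above can be illustrated with a toy model. This is a minimal sketch, not a calibrated device model: the class name `MemristiveSynapse`, the normalized conductance range, the `rate` parameter, and the 1 V switching threshold are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class MemristiveSynapse:
    """Toy history-dependent conductance (illustrative, not a device model)."""
    g: float = 0.5            # normalized conductance: 0 = fully off, 1 = fully on
    g_min: float = 0.0
    g_max: float = 1.0
    rate: float = 0.2         # hypothetical fraction of headroom used per pulse
    v_threshold: float = 1.0  # hypothetical switching threshold in volts

    def apply_pulse(self, voltage: float) -> float:
        """Update the conductance for one voltage pulse and return it."""
        if voltage >= self.v_threshold:
            # Positive pulse above threshold: move toward g_max (potentiation).
            self.g += self.rate * (self.g_max - self.g)
        elif voltage <= -self.v_threshold:
            # Negative pulse beyond threshold: move toward g_min (depression).
            self.g -= self.rate * (self.g - self.g_min)
        # Sub-threshold pulses leave the stored state unchanged.
        return self.g


synapse = MemristiveSynapse()
history = [synapse.apply_pulse(v) for v in (1.5, 1.5, 0.3, -1.5)]
```

The same pulse produces a different conductance depending on what came before it, which is the property that lets such a device stand in for a plastic synapse.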

The primary objective of neuromorphic computing is to achieve brain-like efficiency in computational tasks. The human brain operates on approximately 20 watts of power while performing complex cognitive functions that still challenge the most advanced supercomputers consuming megawatts of electricity. This remarkable energy efficiency gap drives research toward neuromorphic solutions.

Beyond energy efficiency, neuromorphic computing aims to enable real-time processing of unstructured data, facilitate on-device learning without cloud connectivity, and support emerging applications in edge computing, autonomous systems, and artificial intelligence. The field seeks to develop systems capable of adaptive learning, fault tolerance, and contextual understanding—characteristics inherent to biological neural networks.

The technological trajectory suggests a progressive integration of neuromorphic components into conventional computing systems, beginning with specialized accelerators for neural network operations and eventually evolving toward fully neuromorphic systems for specific applications. The ultimate goal remains the development of general-purpose neuromorphic computers that can rival traditional architectures across a broad spectrum of computational tasks while offering orders-of-magnitude improvements in energy efficiency and real-time processing capabilities.

Market Demand for Brain-Inspired Computing Solutions

The global market for brain-inspired computing solutions is experiencing unprecedented growth, driven by the increasing limitations of traditional von Neumann computing architectures in handling complex AI workloads. Current projections indicate that the neuromorphic computing market will reach $8.9 billion by 2028, with a compound annual growth rate of 89.1% from 2023. This explosive growth reflects the urgent demand for computing systems that can process vast amounts of data with greater efficiency and lower power consumption.
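A projected end value and a compound annual growth rate together imply a starting market size, which is a useful sanity check on the figures quoted above. The sketch below assumes five compounding periods between 2023 and 2028; the function name `implied_base` is hypothetical, and the result is only as reliable as the projection it is derived from.

```python
def implied_base(final_value: float, cagr: float, years: int) -> float:
    """Back out the starting value implied by a compound annual growth rate."""
    return final_value / (1.0 + cagr) ** years


# Figures quoted in the text: USD 8.9 billion by 2028 at an 89.1% CAGR from 2023.
# Assumption: five full compounding years between the base and target years.
base_2023 = implied_base(final_value=8.9, cagr=0.891, years=5)  # USD billions
growth_multiple = 8.9 / base_2023
```

Under these assumptions the implied 2023 base is well below USD 1 billion, so the headline number depends heavily on sustaining a very high growth rate for the whole period.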

The primary market drivers stem from industries requiring real-time processing of unstructured data, particularly in edge computing environments where power constraints are significant. Autonomous vehicles represent a major demand sector, requiring systems that can process sensory data with minimal latency and power consumption. Market research shows that automotive manufacturers are investing heavily in neuromorphic solutions, with over $2.3 billion allocated to research partnerships in 2022 alone.

Healthcare applications constitute another substantial market segment, with neuromorphic systems showing promise in medical imaging analysis, patient monitoring, and drug discovery. The ability to process complex biological data patterns in ways similar to the human brain offers significant advantages over traditional computing approaches. Market adoption in healthcare is projected to grow at 94% annually through 2027.

The Internet of Things (IoT) ecosystem presents perhaps the largest potential market for neuromorphic computing solutions. With an estimated 75 billion connected devices expected by 2025, the need for energy-efficient, intelligent edge processing has never been greater. Current IoT devices utilizing traditional computing architectures consume 30-50% more power than neuromorphic alternatives for equivalent tasks.

Financial services and cybersecurity are emerging as significant market segments, with neuromorphic systems showing promise in fraud detection, risk assessment, and threat identification. These applications draw on the pattern recognition strengths inherent in brain-inspired architectures.

Market analysis reveals a growing preference for complete neuromorphic solutions rather than component technologies, with 68% of enterprise customers seeking integrated hardware-software platforms. This trend is driving consolidation among technology providers and fostering strategic partnerships between material science companies and system integrators.

Geographically, North America currently leads market demand with 42% share, followed by Asia-Pacific at 31%, which is experiencing the fastest growth rate. European markets are showing particular interest in neuromorphic solutions for industrial automation and smart city applications, creating diverse regional demand profiles.

Current Neuromorphic Materials Landscape and Barriers

The neuromorphic materials landscape is currently dominated by several key material categories, each with distinct properties and applications. Memristive materials, including metal oxides like TiO2 and HfO2, represent a significant portion of research focus due to their ability to mimic synaptic plasticity through resistance changes. These materials enable spike-timing-dependent plasticity (STDP) and other learning mechanisms critical for neuromorphic computing.
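To make STDP concrete, the following is a minimal sketch of the common pair-based form of the rule, in which the weight change depends only on the time difference between one presynaptic and one postsynaptic spike. The amplitudes and time constants are placeholder values, not measurements from any TiO2 or HfO2 device.

```python
import math


def stdp_delta_w(dt_ms: float,
                 a_plus: float = 0.01,
                 a_minus: float = 0.012,
                 tau_plus: float = 20.0,
                 tau_minus: float = 20.0) -> float:
    """Pair-based STDP weight change for dt_ms = t_post - t_pre.

    All default parameters are hypothetical illustration values.
    """
    if dt_ms > 0:
        # Pre fires before post: strengthen the synapse (potentiation).
        return a_plus * math.exp(-dt_ms / tau_plus)
    if dt_ms < 0:
        # Post fires before pre: weaken the synapse (depression).
        return -a_minus * math.exp(dt_ms / tau_minus)
    return 0.0


potentiation = stdp_delta_w(10.0)   # pre leads post by 10 ms
depression = stdp_delta_w(-10.0)    # post leads pre by 10 ms
```

In a memristive implementation, the weight change would be applied as a conductance change, with overlapping pre- and post-synaptic voltage pulses setting the sign and size of the update.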

Phase-change materials (PCMs) such as Ge2Sb2Te5 constitute another important category, offering non-volatile memory capabilities through rapid and reversible transitions between amorphous and crystalline states. Their multi-level resistance states make them particularly valuable for implementing synaptic weight storage in artificial neural networks.
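Storing a synaptic weight in a multi-level cell amounts to mapping a continuous value onto a small set of programmable resistance states. The sketch below assumes 16 evenly spaced levels and a weight range of -1 to 1; real PCM cells have nonuniform, drifting levels, so this only illustrates the quantization step.

```python
def quantize_to_levels(weight: float,
                       n_levels: int = 16,
                       w_min: float = -1.0,
                       w_max: float = 1.0) -> tuple[int, float]:
    """Map a weight to the nearest of n_levels evenly spaced states.

    Returns (level index, weight actually stored). The level count and
    range are hypothetical; they are not taken from a specific device.
    """
    clipped = min(max(weight, w_min), w_max)   # saturate out-of-range weights
    step = (w_max - w_min) / (n_levels - 1)    # spacing between adjacent levels
    index = round((clipped - w_min) / step)    # nearest programmable level
    return index, w_min + index * step


level, stored = quantize_to_levels(0.3)
quantization_error = abs(stored - 0.3)
```

The quantization error is bounded by half the level spacing, which is why the number of distinguishable resistance states directly limits the precision of a network stored this way.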

Ferroelectric materials, including hafnium oxide-based compounds and organic ferroelectrics, have gained attention for their low power consumption and non-volatile properties. These materials exhibit polarization switching that can be leveraged for neuromorphic applications without the high energy costs associated with conventional computing.

Spin-based materials represent an emerging frontier, utilizing electron spin states to process information with minimal energy dissipation. Materials exhibiting magnetic tunnel junctions and spin-orbit torque effects show promise for implementing energy-efficient neuromorphic architectures.

Despite significant advances, the field faces substantial technical barriers. Material stability and endurance remain critical challenges, with many promising materials exhibiting degradation after repeated switching cycles. This reliability issue limits practical implementation in commercial systems requiring years of consistent operation.

Fabrication complexity presents another significant hurdle. Many neuromorphic materials require precise deposition techniques and are sensitive to processing conditions, making large-scale manufacturing difficult and costly. Integration with conventional CMOS technology also remains problematic due to material compatibility issues and thermal budget constraints.

Energy efficiency, while improved compared to traditional computing, still falls short of biological neural systems by roughly three orders of magnitude. Current materials exhibit switching energies in the picojoule range, whereas biological synapses operate at femtojoule levels.

Standardization represents a cross-cutting challenge, with diverse material systems being explored without established benchmarking protocols. This fragmentation complicates comparative analysis and slows industry adoption.

Addressing these barriers requires interdisciplinary collaboration between materials scientists, device engineers, and computer architects. Recent research directions include exploration of 2D materials like graphene and transition metal dichalcogenides, which offer atomic-scale thickness and unique electronic properties that could potentially overcome current limitations in scaling and energy efficiency.

State-of-the-Art Neuromorphic Material Implementations

01 Memristive materials for neuromorphic computing

Memristive materials are key components in neuromorphic computing systems that mimic the brain's neural networks. These materials can change their resistance based on the history of applied voltage or current, similar to how synapses in the brain change their strength. This property allows for the creation of artificial neural networks that can learn and adapt. Memristive materials enable energy-efficient computing architectures that overcome limitations of traditional von Neumann computing systems.

- Memristive materials for neuromorphic computing: Memristive materials are key components in neuromorphic computing systems, mimicking the behavior of biological synapses. These materials can change their resistance based on the history of applied voltage or current, enabling them to store and process information simultaneously. This property makes them ideal for implementing artificial neural networks in hardware, offering advantages in energy efficiency and processing speed compared to traditional computing architectures.

- Phase-change materials for neuromorphic applications: Phase-change materials exhibit rapid and reversible transitions between amorphous and crystalline states, which can be utilized to create multi-level memory states in neuromorphic devices. These materials provide non-volatile memory capabilities with tunable resistance states, making them suitable for implementing synaptic functions in artificial neural networks. Their ability to maintain states without power consumption contributes to energy-efficient neuromorphic computing systems.

- 2D materials and heterostructures for neuromorphic devices: Two-dimensional materials and their heterostructures offer unique electronic properties for neuromorphic computing applications. These atomically thin materials provide excellent scalability, flexibility, and novel quantum effects that can be harnessed for neuromorphic functionalities. The ability to stack different 2D materials creates versatile heterostructures with tunable electronic properties, enabling the development of highly efficient synaptic devices and neuromorphic circuits.

- Organic and polymer-based neuromorphic materials: Organic and polymer-based materials offer flexibility, biocompatibility, and low-cost fabrication for neuromorphic applications. These materials can be engineered to exhibit synaptic behaviors through various mechanisms including ion migration, charge trapping, and conformational changes. Their solution processability enables large-area fabrication and integration with flexible substrates, making them promising candidates for bio-inspired computing systems and brain-machine interfaces.

- Neural network architectures using neuromorphic materials: Advanced neural network architectures leverage neuromorphic materials to implement brain-inspired computing systems. These architectures incorporate specialized hardware designs that utilize the unique properties of neuromorphic materials to perform parallel processing, spike-based computation, and on-chip learning. By closely mimicking biological neural systems, these architectures achieve improvements in energy efficiency, fault tolerance, and real-time processing capabilities for applications such as pattern recognition, sensory processing, and autonomous systems.

02 Phase-change materials for neuromorphic applications

Phase-change materials exhibit unique properties that make them suitable for neuromorphic computing applications. These materials can rapidly switch between amorphous and crystalline states, which correspond to different resistance levels. This property enables the implementation of synaptic functions in neuromorphic systems. Phase-change materials offer advantages such as non-volatility, scalability, and compatibility with existing semiconductor manufacturing processes, making them promising candidates for brain-inspired computing architectures.

03 Organic and polymer-based neuromorphic materials

Organic and polymer-based materials are emerging as alternatives to traditional inorganic materials for neuromorphic computing. These materials offer advantages such as flexibility, biocompatibility, and low-cost fabrication. They can be engineered to exhibit synaptic behaviors like potentiation, depression, and spike-timing-dependent plasticity. Organic neuromorphic materials enable the development of flexible, wearable, and implantable neuromorphic devices that can interface with biological systems, opening new possibilities for brain-machine interfaces and bioelectronic medicine.

04 2D materials for neuromorphic computing

Two-dimensional (2D) materials, such as graphene, transition metal dichalcogenides, and hexagonal boron nitride, offer unique properties for neuromorphic computing applications. Their atomically thin nature allows for excellent electrostatic control and scaling potential. These materials exhibit tunable electronic properties and can be engineered to demonstrate synaptic behaviors. 2D materials enable the development of ultra-thin, flexible neuromorphic devices with high performance and energy efficiency, potentially leading to more brain-like computing systems.

05 Neuromorphic algorithms and architectures

Beyond materials, neuromorphic computing relies on specialized algorithms and architectures that mimic the brain's structure and function. These include spiking neural networks, reservoir computing, and other bio-inspired approaches. Neuromorphic architectures often feature massively parallel processing, co-located memory and computation, and event-driven operation. These designs allow efficient implementation of learning algorithms such as spike-timing-dependent plasticity and support applications in pattern recognition, anomaly detection, and adaptive control systems.

Leading Organizations in Neuromorphic Computing Research

Neuromorphic materials are reshaping computing technologies at a pivotal industry inflection point, as the field moves from research to early commercialization. The market is projected to grow significantly, reaching approximately $8-10 billion by 2030, driven by AI applications requiring energy-efficient computing. Leading players show varying technological maturity: IBM has established leadership with its TrueNorth architecture, Samsung and SK hynix are advancing memory-centric neuromorphic solutions, and academic institutions (MIT, Tsinghua, Peking University) collaborate with industry to bridge fundamental research and applications. Startups like Syntiant are accelerating innovation with specialized edge AI chips. The field is characterized by strategic partnerships between semiconductor giants and research institutions, with competition intensifying as commercial viability improves.

International Business Machines Corp.

Technical Solution: IBM has pioneered neuromorphic computing through its TrueNorth chip, a digital CMOS design, and subsequent neuromorphic architectures. Its materials research focuses on brain-inspired hardware that mimics neural networks using phase-change memory (PCM). IBM's neuromorphic chips contain millions of "neurons" and "synapses" implemented in silicon, and its PCM-based devices use materials that change their physical properties to store and process information simultaneously[1]. Recent developments include chalcogenide-based PCM that can achieve multiple resistance states, enabling analog computation that more closely resembles biological neural processing[3]. IBM has also developed specialized training algorithms that account for the unique properties of these materials, allowing efficient on-chip learning with significantly lower power consumption than traditional von Neumann architectures[5]. Its neuromorphic systems have demonstrated pattern recognition, sensory processing, and decision-making capabilities while consuming only milliwatts of power.

Strengths: IBM's neuromorphic solutions offer extremely low power consumption (orders of magnitude less than conventional systems) and high parallelism for AI workloads. Their mature fabrication processes allow for reliable production at scale. Weaknesses: The specialized programming models required for neuromorphic computing create adoption barriers, and current implementations still face challenges with precision in certain computational tasks compared to traditional digital systems.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed advanced neuromorphic computing solutions centered around resistive random-access memory (RRAM) and magnetoresistive RAM (MRAM) technologies. Their approach integrates these memory technologies directly into computational units, creating what they term "compute-in-memory" architectures[2]. Samsung's neuromorphic materials innovation focuses on hafnium oxide-based RRAM cells that can maintain multiple resistance states with high reliability, crucial for mimicking synaptic weights in neural networks[4]. Their neuromorphic chips incorporate three-dimensional stacking of these memory cells with processing elements, maximizing density while minimizing signal travel distance[7]. Samsung has demonstrated neuromorphic systems capable of performing complex pattern recognition tasks while consuming less than 1% of the energy required by equivalent GPU implementations. Their recent research has also explored self-organizing neuromorphic materials that can autonomously adjust their properties based on input patterns, moving toward more brain-like adaptability in hardware.

Strengths: Samsung's vertical integration capabilities allow them to optimize both memory materials and processing elements, creating highly efficient neuromorphic systems. Their established manufacturing infrastructure enables potential mass production. Weaknesses: Their current neuromorphic implementations still face challenges with long-term stability of resistance states in memory materials, and software ecosystem development lags behind hardware capabilities.

Breakthrough Patents in Neuromorphic Material Science

Neuromorphic processing devices

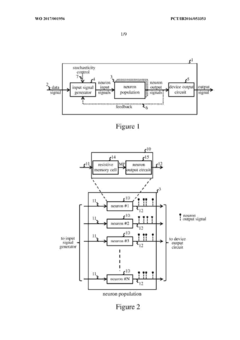

Patent WO2017001956A1

Innovation

- A neuromorphic processing device utilizing an assemblage of neuron circuits with resistive memory cells, specifically phase-change memory (PCM) cells, that store neuron states and exploit stochasticity to generate output signals, mimicking biological neuronal behavior by varying cell resistance in response to input signals.
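The idea of a neuron whose state lives in a resistive cell and whose firing is stochastic can be sketched in a few lines. This is an illustration of the general concept only, not the circuit claimed in the patent: the class `StochasticPCMNeuron`, the Gaussian increment, and every numeric parameter are assumptions.

```python
import random


class StochasticPCMNeuron:
    """Toy integrate-and-fire neuron whose state is a cell conductance.

    Hypothetical model: each input pulse raises the normalized conductance
    by a noisy amount (standing in for partial crystallization), and the
    cell is reset once the conductance crosses a threshold.
    """

    def __init__(self, threshold: float = 1.0, mean_step: float = 0.1,
                 jitter: float = 0.05, seed: int = 0) -> None:
        self.state = 0.0
        self.threshold = threshold
        self.mean_step = mean_step
        self.jitter = jitter
        self.rng = random.Random(seed)  # seeded for reproducible runs

    def step(self, input_pulse: bool) -> bool:
        """Apply one time step; return True if the neuron fires."""
        if input_pulse:
            increment = self.rng.gauss(self.mean_step, self.jitter)
            self.state += max(increment, 0.0)  # conductance never decreases here
        if self.state >= self.threshold:
            self.state = 0.0                   # reset the cell after firing
            return True
        return False


neuron = StochasticPCMNeuron(seed=42)
spike_times = [t for t in range(100) if neuron.step(True)]
```

Because the per-pulse increment is random, the number of pulses needed to reach threshold varies from spike to spike, so identical inputs can yield slightly different output timing.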

Energy Efficiency Implications of Neuromorphic Computing

Neuromorphic computing represents a paradigm shift in energy consumption patterns compared to traditional von Neumann architectures. Conventional computing systems separate memory and processing units, necessitating constant data transfer between these components—a fundamental bottleneck known as the "von Neumann bottleneck." This architecture results in significant energy expenditure, with data movement consuming approximately 60-70% of the total energy budget in modern computing systems.

Neuromorphic materials enable computing architectures that mimic the brain's efficiency, where memory and processing are co-located. This co-location dramatically reduces energy requirements by minimizing data movement. For instance, memristive devices used in neuromorphic systems can perform both storage and computation within the same physical location, potentially reducing energy consumption by orders of magnitude compared to conventional systems.
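Co-located storage and computation is easiest to see in a crossbar array, where stored conductances multiply applied voltages (Ohm's law) and each column wire sums the resulting currents (Kirchhoff's current law). The sketch below is an idealized model that ignores wire resistance, device nonlinearity, and noise; the function name and example values are hypothetical.

```python
def crossbar_mvm(conductances: list[list[float]],
                 voltages: list[float]) -> list[float]:
    """Idealized crossbar matrix-vector product.

    conductances[i][j] is the conductance (siemens) of the device joining
    input row i to output column j; voltages[i] is the voltage on row i.
    Returns the current (amperes) collected on each column.
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for row, v in zip(conductances, voltages):
        for j, g in enumerate(row):
            currents[j] += g * v  # Ohm's law per device, summed per column
    return currents


G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]   # stored weights as conductances (illustrative values)
V = [0.1, 0.2]       # input activations encoded as voltages
I = crossbar_mvm(G, V)
```

The weights never leave the array: the multiply-accumulate happens where they are stored, which is the source of the reduction in data movement described above.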

The human brain operates on approximately 20 watts of power while performing complex cognitive tasks. In contrast, supercomputers attempting similar functions require megawatts of power. Neuromorphic systems utilizing specialized materials have demonstrated energy efficiencies approaching 1,000 times better than traditional computing platforms for certain neural network operations.

Materials innovation plays a crucial role in these efficiency gains. Phase-change materials, resistive RAM, and spintronic devices enable non-volatile memory operations that consume minimal standby power. These materials support spike-based computing models that activate only when necessary, similar to biological neurons, rather than requiring constant clock-driven operations.

Recent benchmarks from neuromorphic chips like Intel's Loihi and IBM's TrueNorth demonstrate energy consumption in the picojoule range per neural operation, compared to nanojoules in GPU implementations. This efficiency becomes particularly significant for edge computing applications where power constraints are critical, such as in IoT devices, autonomous vehicles, and wearable health monitors.
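The picojoule versus nanojoule comparison translates directly into a per-workload energy estimate. The sketch below uses 1 pJ and 1 nJ per operation as order-of-magnitude placeholders rather than measured values for any specific chip, and the workload size is hypothetical.

```python
PICOJOULE = 1e-12
NANOJOULE = 1e-9


def inference_energy_joules(n_operations: int, energy_per_op: float) -> float:
    """Total energy for a workload, assuming constant energy per operation."""
    return n_operations * energy_per_op


n_ops = 1_000_000  # hypothetical number of neural operations per inference
neuromorphic_j = inference_energy_joules(n_ops, 1 * PICOJOULE)
gpu_j = inference_energy_joules(n_ops, 1 * NANOJOULE)
ratio = gpu_j / neuromorphic_j
```

With these placeholder values the ratio comes out at about three orders of magnitude, though real comparisons depend on workload, precision, and how an "operation" is counted on each platform.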

The environmental implications of widespread neuromorphic computing adoption could be substantial. Data centers currently consume approximately 1-2% of global electricity, with projections showing rapid growth as AI applications proliferate. Neuromorphic systems could potentially flatten this growth curve, reducing carbon emissions associated with computing infrastructure.

However, manufacturing challenges remain. The production of specialized neuromorphic materials often requires energy-intensive processes and rare elements. A comprehensive energy efficiency assessment must consider the full lifecycle, including fabrication energy costs and potential recycling challenges of these novel materials.

Neuromorphic Integration with AI and Machine Learning Systems

The integration of neuromorphic materials with AI and machine learning systems represents a transformative convergence that is reshaping computational paradigms. Neuromorphic hardware, with its brain-inspired architecture, offers unique advantages when paired with contemporary AI frameworks, creating synergies that address fundamental limitations in traditional computing approaches.

Machine learning algorithms, particularly deep neural networks, have demonstrated remarkable capabilities but remain constrained by the von Neumann bottleneck when implemented on conventional hardware. Neuromorphic materials provide an architectural solution by enabling in-memory computing and parallel processing that aligns naturally with neural network operations. Because weights no longer have to shuttle between separate memory and processing units, this integration can reduce power consumption while increasing processing speed for AI workloads.
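One common way to picture in-memory computing is an idealized resistive crossbar, in which stored conductances act as weights and the physics of the array performs a matrix-vector multiplication. The sketch below is a simplified illustration under stated assumptions: it applies Ohm's law per device and sums currents per column, and it ignores wire resistance, device nonlinearity, and noise. The function name and the conductance and voltage values are hypothetical.

```python
def crossbar_mvm(voltages, conductances):
    """Idealized crossbar read-out.

    voltages:     one input voltage per row (volts).
    conductances: matrix [row][column] of device conductances (siemens).
    Returns one output current per column (amperes), i.e. the
    matrix-vector product computed where the weights are stored.
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("need exactly one voltage per crossbar row")
    currents = [0.0] * n_cols
    for i, v in enumerate(voltages):
        for j in range(n_cols):
            # Ohm's law for one device; column wires sum the currents.
            currents[j] += v * conductances[i][j]
    return currents


# Hypothetical 3x2 array: conductances in siemens, inputs in volts.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6],
     [5e-6, 6e-6]]
V = [0.1, 0.2, 0.3]
I = crossbar_mvm(V, G)
print([f"{i * 1e6:.2f} uA" for i in I])
```

In this picture the multiply-accumulate happens in a single read step across the whole array, which is the source of the parallelism described above.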

Several integration approaches have emerged in recent years. The most promising include hybrid systems that combine neuromorphic processors with traditional GPUs or TPUs, allowing for optimized workload distribution. These systems leverage neuromorphic components for pattern recognition and inference tasks while utilizing conventional hardware for training phases that require precise mathematical operations.

Spiking Neural Networks (SNNs) represent another critical integration point, serving as a computational bridge between neuromorphic hardware and traditional AI frameworks. Unlike conventional artificial neural networks, SNNs process information through discrete spikes, similar to biological neurons, making them ideally suited for implementation on neuromorphic substrates while maintaining compatibility with established machine learning methodologies.
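The spiking behavior described above is often introduced through the leaky integrate-and-fire (LIF) neuron. The following is a minimal sketch of that textbook model, not the neuron model of any particular neuromorphic chip. The time constant, threshold, and input values are illustrative assumptions.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0,
                 v_rest=0.0, v_threshold=1.0, v_reset=0.0):
    """Simulate a leaky integrate-and-fire neuron with forward Euler steps.

    input_current: sequence with one input value per timestep.
    Returns (spikes, trace): a 0/1 spike train and the membrane potential
    recorded after each step. All parameter defaults are illustrative.
    """
    v = v_rest
    spikes = []
    trace = []
    for i_t in input_current:
        # Leak toward the resting potential while integrating the input.
        v += dt * (-(v - v_rest) + i_t) / tau
        if v >= v_threshold:
            spikes.append(1)   # emit a discrete spike
            v = v_reset        # and reset the membrane potential
        else:
            spikes.append(0)
        trace.append(v)
    return spikes, trace


strong_spikes, _ = simulate_lif([1.5] * 50)  # input above threshold
weak_spikes, _ = simulate_lif([0.5] * 50)    # input below threshold
print("spikes with strong input:", sum(strong_spikes))
print("spikes with weak input:  ", sum(weak_spikes))
```

The neuron stays silent when its input never drives the membrane potential to threshold, which is the property that lets spiking hardware skip work when nothing is happening.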

Real-time learning capabilities present perhaps the most revolutionary aspect of this integration. Neuromorphic materials enable on-chip learning through mechanisms like spike-timing-dependent plasticity (STDP), allowing AI systems to adapt continuously to new data without extensive retraining cycles. This property is particularly valuable for edge computing applications where adaptability and energy efficiency are paramount.
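A common textbook formulation of STDP is the pair-based rule: a synapse is strengthened when the presynaptic spike precedes the postsynaptic spike, weakened in the reverse order, and the size of the change decays exponentially with the time difference. The sketch below implements that rule in software as an illustration. The amplitudes, time constants, and weight bounds are hypothetical, and real devices implement plasticity through their own physical dynamics rather than this exact formula.

```python
import math


def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                 tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one pre/post spike pair.

    Times share one unit (for example milliseconds). Parameter values
    are illustrative assumptions, not taken from any specific device.
    """
    dt = t_post - t_pre
    if dt > 0:
        # Pre fires before post: potentiation, decaying with the gap.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fires before pre: depression, decaying with the gap.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(weight, t_pre, t_post, w_min=0.0, w_max=1.0):
    """Update a weight and clamp it to a bounded range, as a physical
    conductance would be bounded."""
    return min(w_max, max(w_min, weight + stdp_delta_w(t_pre, t_post)))


w = 0.5
w = apply_stdp(w, t_pre=10.0, t_post=15.0)  # causal pair strengthens
print(f"after pre->post pairing: {w:.4f}")
w = apply_stdp(w, t_pre=30.0, t_post=22.0)  # anti-causal pair weakens
print(f"after post->pre pairing: {w:.4f}")
```

Because each update depends only on the timing of spikes at a single synapse, the rule is local, which is what makes it a natural fit for on-chip learning.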

The integration challenges remain substantial, however. Software frameworks for neuromorphic computing are still in nascent stages, creating compatibility issues with established AI ecosystems like TensorFlow and PyTorch. Additionally, the translation of traditional deep learning models to spiking neural network implementations introduces accuracy losses that researchers are actively working to minimize.
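One simplified way to see where conversion losses can come from is rate coding: a continuous activation is replaced by a spike rate measured over a finite number of timesteps, which quantizes the value. The sketch below illustrates only this single effect, using an integrate-and-fire neuron with subtractive reset. It assumes activations in the range 0 to 1, and the function name and test value are hypothetical. Actual conversion pipelines involve further sources of error.

```python
def rate_coded_activation(activation, timesteps, threshold=1.0):
    """Approximate an activation in [0, 1] by a spike rate.

    An integrate-and-fire neuron receives the activation as constant
    input. It fires when the accumulated value reaches the threshold
    and subtracts the threshold on reset. The returned rate is the
    spike count divided by the number of timesteps.
    """
    v = 0.0
    spike_count = 0
    for _ in range(timesteps):
        v += activation
        if v >= threshold:
            spike_count += 1
            v -= threshold
    return spike_count / timesteps


target = 0.37  # hypothetical activation from a conventional network
for steps in (10, 100, 1000):
    approx = rate_coded_activation(target, steps)
    print(f"T={steps:4d}  rate={approx:.3f}  error={abs(target - approx):.4f}")
```

In this toy model the error is bounded by one over the number of timesteps, so accuracy can be traded against latency and spike count, which is one reason conversion involves a cost in either precision or efficiency.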

Looking forward, the continued integration of neuromorphic materials with AI systems promises to enable entirely new computational paradigms. Emerging research suggests that truly brain-inspired computing may eventually support forms of artificial intelligence that transcend current limitations in adaptability, energy efficiency, and contextual understanding, potentially leading to more robust and generalizable AI systems.
