Material Parameters Driving Efficiency in Neuromorphic Computing

Oct 27, 2025 · 10 min read

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. The evolution of this field can be traced back to the 1980s when Carver Mead first introduced the concept of using analog VLSI systems to mimic neurobiological architectures. Since then, the field has progressed through several distinct phases, each marked by significant technological breakthroughs and shifting objectives.

The initial phase focused primarily on theoretical foundations and proof-of-concept implementations. Researchers concentrated on understanding how neural processes could be translated into electronic equivalents, with early devices demonstrating basic neural functions but lacking practical computational capabilities. Material constraints during this period limited efficiency, with silicon-based CMOS technology serving as the primary platform despite its limitations in mimicking biological neural dynamics.

The second evolutionary phase, spanning roughly from 2000 to 2015, saw increased emphasis on practical applications and scalability. During this period, researchers began exploring alternative materials beyond traditional silicon, including various metal oxides and phase-change materials that could better emulate synaptic plasticity. The objectives shifted toward creating systems capable of handling specific machine learning tasks while maintaining energy efficiency superior to conventional computing architectures.

Currently, we are in what can be considered the third major phase of neuromorphic computing evolution, characterized by a focus on material-driven efficiency improvements. The primary objectives now encompass achieving ultra-low power consumption, enhanced computational density, and improved learning capabilities through novel material implementations. Materials science has become central to addressing fundamental challenges in neuromorphic computing, with parameters such as switching speed, energy per switching event, and retention time emerging as critical factors.

Looking forward, the field is trending toward several key objectives: achieving biological-level energy efficiency (femtojoules per synaptic operation), developing systems capable of online learning and adaptation, and creating architectures that seamlessly integrate sensing and computing functions. Material innovation stands at the forefront of these goals, with emerging research focusing on two-dimensional materials, memristive compounds, and organic electronics that promise orders-of-magnitude improvements in efficiency parameters.

The convergence of material science with neuromorphic computing principles represents a crucial frontier, where parameters such as ionic mobility, interface stability, and defect engineering directly impact computational performance. As the field continues to mature, the interplay between material properties and architectural design will likely determine the ultimate success of neuromorphic systems in applications ranging from edge computing to autonomous systems and advanced AI implementations.

Market Analysis for Brain-Inspired Computing Solutions

The neuromorphic computing market is experiencing significant growth, driven by increasing demand for AI applications that require efficient processing of complex neural networks. Current market estimates value the global neuromorphic computing sector at approximately $2.5 billion, with projections indicating a compound annual growth rate of 20-25% over the next five years. This growth trajectory is supported by substantial investments from both private and public sectors, with government initiatives in the US, EU, and China allocating dedicated funding for neuromorphic research and development.
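As a quick arithmetic check on the figures above, the quoted growth range can be compounded forward over five years. This is a minimal sketch: the function name `project_market` is hypothetical, and the only inputs are the approximate $2.5 billion valuation and the 20-25% growth rate stated in the text.

```python
def project_market(value_now: float, cagr: float, years: int) -> float:
    """Compound a starting market value forward at a fixed annual growth rate."""
    return value_now * (1.0 + cagr) ** years


# Figures quoted in the text: about $2.5 billion today, 20-25% CAGR, five years.
low = project_market(2.5, 0.20, 5)
high = project_market(2.5, 0.25, 5)
print(f"Five-year projection: ${low:.1f}B to ${high:.1f}B")
```

Under these assumptions the projection lands at roughly $6 billion to $8 billion, which is only as reliable as the starting estimate itself.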

The market landscape for brain-inspired computing solutions can be segmented into hardware components, software frameworks, and integrated systems. Hardware components, particularly those focused on material innovation for neuromorphic architectures, represent the largest market segment, accounting for roughly 45% of the total market value. This segment is experiencing the most rapid technological advancement, with material science breakthroughs directly influencing market dynamics.

From an application perspective, the market shows diverse adoption patterns across industries. Healthcare applications, particularly in medical imaging and diagnostic systems, currently represent the largest market share at approximately 28%. This is followed closely by automotive applications (22%), where neuromorphic systems are being integrated into advanced driver assistance systems and autonomous vehicle platforms. Financial services, robotics, and defense sectors collectively account for another 35% of market applications.

Regional analysis reveals that North America currently leads the market with approximately 40% share, followed by Asia-Pacific (30%) and Europe (25%). Asia-Pacific is also demonstrating the fastest growth rate, driven by significant investments in semiconductor manufacturing and neuromorphic research in China, Japan, and South Korea.

Customer demand is increasingly focused on energy efficiency as a primary value proposition. Market research indicates that solutions offering at least a 10x improvement in energy efficiency compared to traditional computing architectures are gaining significant traction. This trend directly correlates with the importance of material parameters in neuromorphic computing, as novel materials can dramatically reduce power consumption while maintaining or improving computational performance.

Market barriers include high initial development costs, technical complexity in implementing neuromorphic architectures, and challenges in scaling manufacturing processes for specialized materials. Despite these obstacles, the market shows strong indicators of continued expansion, particularly as material science innovations address current limitations in power efficiency, switching speed, and integration density of neuromorphic components.

Material Science Challenges in Neuromorphic Systems

The development of neuromorphic computing systems faces significant material science challenges that must be addressed to achieve the efficiency and functionality required for brain-inspired computing. Current materials used in conventional computing architectures are fundamentally limited when attempting to mimic the brain's parallel processing capabilities and energy efficiency.

A primary challenge lies in developing materials with appropriate resistive switching properties for memristive devices. These materials must demonstrate reliable, reproducible, and stable resistance changes in response to electrical stimuli, while maintaining these states over extended periods. Metal oxides such as HfO₂, TiO₂, and Ta₂O₅ show promise, but issues with cycle-to-cycle and device-to-device variability remain significant hurdles.
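The two kinds of variability named above can be separated in a simple simulation. The sketch below is illustrative only and rests on assumptions that are not taken from any measured device: each device gets a fixed lognormal offset (device-to-device), every programming cycle adds an independent lognormal error (cycle-to-cycle), and the target conductance and spread values are made up.

```python
import random
import statistics


def simulate_programming(target_us, d2d_sigma, c2c_sigma, n_devices, n_cycles, seed=0):
    """Return programmed conductances [device][cycle] in microsiemens.

    Assumed model: a fixed multiplicative offset per device (device-to-device)
    times an independent multiplicative error per cycle (cycle-to-cycle).
    """
    rng = random.Random(seed)
    result = []
    for _ in range(n_devices):
        device_offset = rng.lognormvariate(0.0, d2d_sigma)
        result.append(
            [target_us * device_offset * rng.lognormvariate(0.0, c2c_sigma)
             for _ in range(n_cycles)]
        )
    return result


def coefficient_of_variation(values):
    """Standard deviation divided by mean (dimensionless spread)."""
    return statistics.pstdev(values) / statistics.mean(values)


# Hypothetical numbers: 50 uS target, 10% device spread, 5% cycle spread.
g = simulate_programming(50.0, 0.10, 0.05, n_devices=200, n_cycles=100)

# Cycle-to-cycle: spread within each device, averaged over devices.
c2c_cv = statistics.mean(coefficient_of_variation(row) for row in g)
# Device-to-device: spread of the per-device mean conductance.
d2d_cv = coefficient_of_variation([statistics.mean(row) for row in g])
print(f"cycle-to-cycle CV ~ {c2c_cv:.3f}, device-to-device CV ~ {d2d_cv:.3f}")
```

Separating the two components matters in practice because cycle-to-cycle noise can sometimes be reduced by program-and-verify schemes, whereas device-to-device spread usually has to be addressed in the material or the process.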

Interface engineering presents another critical challenge. The boundary between electrode materials and the active switching layer often determines device performance characteristics. Controlling atomic-level interactions at these interfaces requires precise deposition techniques and material compatibility considerations that are difficult to achieve at scale.

Scaling limitations also pose substantial obstacles. As device dimensions shrink below 10nm, quantum effects and statistical variations in material properties become increasingly prominent, affecting reliability and performance predictability. Additionally, the thermal stability of materials at these scales becomes problematic, with localized heating potentially causing premature device failure or performance degradation.

Energy efficiency constraints demand materials with ultra-low switching energies. While biological synapses operate at femtojoule levels, most current memristive devices require picojoules or more per switching operation, a gap of three or more orders of magnitude. Developing materials that can switch states with minimal energy input while maintaining distinguishable resistance levels remains a formidable challenge.

Fabrication compatibility with CMOS processes represents another significant hurdle. Many promising materials for neuromorphic devices require processing conditions incompatible with standard semiconductor manufacturing techniques, limiting integration possibilities with conventional computing systems.

The temporal dynamics of materials also present challenges. Biological synapses exhibit complex time-dependent behaviors crucial for learning and memory formation. Replicating these dynamics requires materials with controllable relaxation times and response characteristics across multiple timescales, from milliseconds to hours or days.
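One common way to reason about multi-timescale behavior is to model the conductance after a stimulus as a baseline plus several exponentially decaying components. The sketch below assumes exactly that; the function name, amplitudes, and time constants are hypothetical and chosen only to show a fast (tens of milliseconds) and a slow (about an hour) process side by side.

```python
import math


def conductance_after(t_s, baseline, components):
    """Conductance at time t_s (seconds) after a stimulus.

    components: list of (amplitude, tau_seconds) pairs, one per decay process.
    Assumed model: independent exponential relaxations added to a baseline.
    """
    return baseline + sum(a * math.exp(-t_s / tau) for a, tau in components)


# Hypothetical device: a fast volatile component (50 ms) and a slow one (1 hour).
components = [(10.0, 0.05), (5.0, 3600.0)]
for t in (0.0, 0.5, 60.0, 36000.0):
    print(f"t = {t:>8} s  ->  G = {conductance_after(t, 20.0, components):.3f}")
```

In this toy model the fast component has vanished after half a second while the slow one persists for hours, which mirrors the short-term versus long-term plasticity distinction the paragraph describes.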

Finally, the environmental stability and longevity of neuromorphic materials remain concerns. Exposure to oxygen, moisture, and temperature variations can significantly alter material properties over time, affecting device reliability and system performance in real-world applications.

Current Material Solutions for Neural Network Hardware

- Phase-change materials for neuromorphic computing: Phase-change materials (PCMs) are being utilized in neuromorphic computing systems to mimic synaptic behavior. These materials can switch between amorphous and crystalline states, allowing for multi-level resistance states that simulate synaptic weights. PCMs offer advantages such as non-volatility, high endurance, and fast switching speeds, making them suitable for energy-efficient neuromorphic architectures. Their ability to maintain states without power consumption contributes significantly to the overall efficiency of neuromorphic systems.

- Memristive devices for energy-efficient computing: Memristive devices are being developed as key components for neuromorphic computing due to their ability to simultaneously store and process information. These devices can change their resistance based on the history of applied voltage or current, mimicking biological synapses. By integrating memristive materials into crossbar arrays, highly parallel and energy-efficient computing architectures can be achieved. The non-volatile nature of memristors reduces power consumption by eliminating the need for constant refreshing of memory states.

- 2D materials for low-power neuromorphic systems: Two-dimensional (2D) materials such as graphene, transition metal dichalcogenides, and hexagonal boron nitride are being explored for neuromorphic computing applications. These atomically thin materials offer unique electronic properties, including high carrier mobility and tunable bandgaps. When incorporated into neuromorphic devices, 2D materials enable ultra-low power consumption while maintaining high computational efficiency. Their flexibility and compatibility with existing fabrication techniques make them promising candidates for next-generation neuromorphic hardware.

- Magnetic materials for spintronic neuromorphic devices: Magnetic materials are being utilized in spintronic-based neuromorphic computing to leverage electron spin for information processing. These materials enable magnetic tunnel junctions and domain wall devices that can emulate synaptic and neuronal functions with minimal energy consumption. Spintronic neuromorphic devices offer advantages such as non-volatility, high endurance, and fast operation speeds. The ability to process information using magnetic states rather than electrical charges significantly reduces energy requirements compared to conventional computing approaches.

- Oxide-based materials for adaptive neuromorphic systems: Metal oxide materials, particularly transition metal oxides, are being developed for neuromorphic computing applications due to their tunable resistive switching properties. These materials can form resistive random-access memory (RRAM) devices that mimic biological synapses through changes in oxygen vacancy concentration. Oxide-based neuromorphic systems demonstrate excellent scalability, compatibility with CMOS technology, and low operating voltages. Their ability to implement spike-timing-dependent plasticity and other learning mechanisms makes them particularly suitable for adaptive, energy-efficient neuromorphic architectures.

01 Phase-change materials for neuromorphic computing

Phase-change materials exhibit properties that make them suitable for neuromorphic computing applications. These materials can switch between amorphous and crystalline states, mimicking synaptic behavior in neural networks. Their ability to store multiple states enables efficient implementation of artificial neural networks with reduced power consumption and improved processing speed. The reversible phase transitions allow for the creation of non-volatile memory elements that can maintain their state without continuous power supply.

02 Memristive devices for energy-efficient computing

Memristive devices are key components in neuromorphic computing systems due to their ability to mimic biological synapses. These devices can change their resistance based on the history of applied voltage or current, enabling efficient implementation of learning algorithms. By utilizing materials with memristive properties, neuromorphic systems can achieve significant improvements in energy efficiency compared to conventional computing architectures. These devices enable in-memory computing, reducing the energy costs associated with data movement between memory and processing units.

03 2D materials for neuromorphic architectures

Two-dimensional materials offer unique properties for neuromorphic computing applications, including high carrier mobility, tunable bandgaps, and excellent mechanical flexibility. Materials such as graphene, transition metal dichalcogenides, and hexagonal boron nitride can be integrated into neuromorphic devices to enhance performance and energy efficiency. Their atomic thinness allows for the creation of ultra-compact neuromorphic circuits with reduced parasitic capacitance and improved switching speeds, leading to more efficient neural network implementations.

04 Magnetic materials for spintronic neuromorphic systems

Magnetic materials enable the development of spintronic-based neuromorphic computing systems that leverage electron spin for information processing. These materials facilitate the creation of magnetic tunnel junctions and domain wall devices that can emulate synaptic and neuronal functions with extremely low energy consumption. Spintronic neuromorphic systems offer advantages such as non-volatility, high endurance, and fast switching speeds, making them promising candidates for energy-efficient artificial intelligence applications.

05 Oxide-based materials for neuromorphic devices

Metal oxides and complex oxide materials exhibit properties that make them suitable for creating efficient neuromorphic computing elements. These materials can form resistive switching devices with multiple resistance states, enabling the implementation of artificial synapses and neurons. Oxide-based neuromorphic devices offer advantages such as CMOS compatibility, scalability, and tunable electrical characteristics. By engineering the composition and structure of these oxide materials, researchers can optimize device performance for specific neuromorphic computing applications.
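The in-memory computing idea behind memristive crossbar arrays can be sketched in a few lines. In an idealized crossbar, each cell's current is voltage times conductance (Ohm's law) and the currents along a column add up (Kirchhoff's current law), so the column currents form a vector-matrix product. The sketch below assumes ideal devices with no wire resistance or noise; the function names, the conductance range, and the use of a differential pair of cells to represent signed weights are illustrative assumptions rather than a description of any specific product.

```python
def crossbar_currents(g, v):
    """Column currents of an ideal crossbar: I_j = sum_i V_i * G[i][j]."""
    n_cols = len(g[0])
    return [sum(v[i] * g[i][j] for i in range(len(v))) for j in range(n_cols)]


def signed_vmm(weights, v, g_min=1e-6, g_max=1e-4):
    """Vector-matrix product using two conductance arrays per weight matrix.

    Assumption: weights lie in [-1, 1]. Positive parts are mapped onto one
    array and negative parts onto another; subtracting the two column
    currents cancels the g_min offset and recovers the signed result.
    """
    span = g_max - g_min
    g_pos = [[g_min + span * max(w, 0.0) for w in row] for row in weights]
    g_neg = [[g_min + span * max(-w, 0.0) for w in row] for row in weights]
    i_pos = crossbar_currents(g_pos, v)
    i_neg = crossbar_currents(g_neg, v)
    return [(p - n) / span for p, n in zip(i_pos, i_neg)]


# Hypothetical 2x2 weight matrix (rows = inputs, columns = outputs).
weights = [[0.5, -1.0],
           [0.25, 0.5]]
inputs_v = [0.2, 0.1]
outputs = signed_vmm(weights, inputs_v)
print(outputs)  # approximately [0.125, -0.15]
```

The appeal for efficiency is that the multiply-accumulate happens in the array itself, so the weights never have to be moved to a separate processing unit.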

Leading Organizations in Neuromorphic Materials Research

Neuromorphic computing is currently in an early growth phase, with the market expected to expand significantly due to increasing demand for AI applications requiring energy-efficient processing. The global market size is projected to reach several billion dollars by 2030, driven by applications in edge computing, IoT, and autonomous systems. Leading players like IBM, Samsung, and Thales are advancing the technology through significant R&D investments, while specialized companies such as Syntiant and Innatera are developing innovative neuromorphic chips. Academic-industry collaborations involving institutions like KAIST, University of California, and CNRS are accelerating material innovations. The technology is approaching commercial viability, with early adopters focusing on optimizing material parameters to overcome efficiency and scaling challenges.

International Business Machines Corp.

Technical Solution: IBM has pioneered neuromorphic computing through its TrueNorth and subsequent architectures, focusing on material parameters optimization for energy efficiency. Their approach utilizes phase-change memory (PCM) materials for synaptic elements, achieving analog computation with significantly reduced power consumption compared to traditional CMOS implementations. IBM's research demonstrates that carefully engineered chalcogenide materials in PCM devices can achieve multi-level resistance states necessary for neural network weight storage while maintaining low switching energy (approximately 100 fJ per synaptic event). Their neuromorphic chips incorporate specialized materials with tuned crystallization kinetics that enable both volatile and non-volatile memory functions essential for different neural processing requirements. IBM has also developed specialized magnetic materials for spintronic-based neuromorphic computing, where electron spin rather than charge is used for computation, potentially reducing energy requirements by orders of magnitude. Recent advancements include three-dimensional integration of memory and processing elements using through-silicon vias and advanced packaging techniques to minimize signal transmission distances and associated energy losses.

Strengths: Industry-leading expertise in materials science and integration with conventional CMOS processes; demonstrated functional neuromorphic systems with real-world applications; extensive patent portfolio in neuromorphic materials. Weaknesses: Higher manufacturing costs compared to conventional computing solutions; challenges in scaling production of specialized materials; some material solutions still face reliability and endurance limitations.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed a comprehensive neuromorphic computing strategy centered on advanced material engineering for maximum energy efficiency. Their approach leverages resistive random-access memory (RRAM) technology using hafnium oxide-based materials that demonstrate exceptional switching characteristics with energy consumption as low as 10 fJ per synaptic operation. Samsung's neuromorphic architecture incorporates these RRAM elements in crossbar arrays that enable massively parallel vector-matrix multiplications essential for neural network operations. The company has pioneered the use of 2D materials, particularly transition metal dichalcogenides (TMDs), which offer atomically thin layers that minimize electron transport distances and reduce energy dissipation. Their research shows that MoS₂-based synaptic devices can achieve sub-pJ energy consumption per synaptic event while maintaining the analog precision required for complex neural operations. Samsung has also developed specialized doping techniques for these materials to tune their electrical properties and improve switching uniformity across large arrays. Additionally, their heterogeneous integration approach combines different material systems (RRAM, MRAM, and phase-change materials) optimized for specific neural functions, creating a comprehensive neuromorphic system that adapts its material properties to computational requirements.

Strengths: Vertical integration capabilities from materials research to mass production; strong expertise in memory technologies directly applicable to neuromorphic computing; established manufacturing infrastructure for rapid scaling. Weaknesses: Some material solutions still face challenges with cycle-to-cycle variability; integration with existing product lines may require significant architectural modifications; balancing performance with cost remains challenging for consumer applications.

Critical Materials Science Patents and Breakthroughs

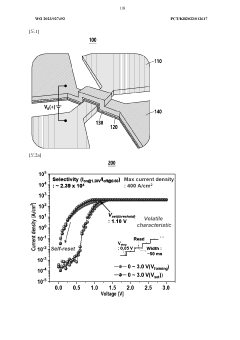

Neuromorphic device based on memristor device, and neuromorphic system using same

Patent WO2023027492A1

Innovation

- A neuromorphic device using a memristor with a switching layer of amorphous germanium sulfide and a source layer of copper telluride, allowing for both artificial neuron and synapse characteristics to be implemented, with a crossbar-type structure that adjusts current density for volatility or non-volatility, enabling efficient memory operations and paired pulse facilitation.

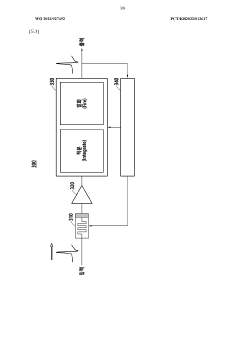

Neuromorphic processing devices

Patent WO2017001956A1

Innovation

- A neuromorphic processing device utilizing an assemblage of neuron circuits with resistive memory cells, specifically phase-change memory (PCM) cells, that store neuron states and exploit stochasticity to generate output signals, mimicking biological neuronal behavior by varying cell resistance in response to input signals.
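The general idea of a neuron whose state lives in a resistive cell and whose firing is stochastic can be illustrated with a toy model. The sketch below is not the patented circuit: the class name, the noisy-increment rule, the threshold, and the reset behavior are all assumptions made for illustration.

```python
import random


class StochasticPcmNeuron:
    """Toy integrate-and-fire neuron whose state is a cell conductance.

    Assumptions (not from the patent): each input pulse raises the
    conductance by a noisy increment, the neuron fires when the conductance
    crosses a threshold, and firing resets the cell to its low state.
    """

    def __init__(self, threshold=1.0, mean_step=0.1, step_sigma=0.03, seed=0):
        self.threshold = threshold
        self.mean_step = mean_step
        self.step_sigma = step_sigma
        self.conductance = 0.0
        self._rng = random.Random(seed)

    def pulse(self) -> bool:
        """Apply one input pulse; return True if the neuron fires."""
        step = max(0.0, self._rng.gauss(self.mean_step, self.step_sigma))
        self.conductance += step
        if self.conductance >= self.threshold:
            self.conductance = 0.0  # reset to the low-conductance state
            return True
        return False


neuron = StochasticPcmNeuron()
spike_times = [t for t in range(1000) if neuron.pulse()]
intervals = [b - a for a, b in zip(spike_times, spike_times[1:])]
print(f"{len(spike_times)} spikes, interval range {min(intervals)}-{max(intervals)}")
```

Because the increments are noisy, identical input trains produce varying inter-spike intervals, which is the kind of device-level randomness such designs aim to exploit rather than suppress.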

Energy Efficiency Metrics and Benchmarking

Evaluating energy efficiency in neuromorphic computing systems requires specialized metrics that differ from traditional computing benchmarks. The field has developed several key performance indicators that specifically address the unique characteristics of brain-inspired computing architectures. The primary metric is energy per synaptic operation (measured in femtojoules or picojoules), which quantifies the energy consumed when processing a single synaptic event. This metric provides a direct comparison to biological neural systems, where mammalian brains operate at approximately 1-10 fJ per synaptic operation.

Complementary to energy measurements, researchers also track operations per second per watt, offering insight into computational throughput relative to power consumption. This metric helps evaluate the practical efficiency of neuromorphic systems when deployed in real-world applications with specific performance requirements. The energy-delay product (EDP) has emerged as another critical benchmark, capturing both energy efficiency and computational speed in a single metric, which is particularly valuable for applications with strict latency constraints.
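The metrics above reduce to simple ratios and products. The sketch below shows how they relate, using a hypothetical chip whose power, event rate, and latency are invented for illustration; the 10 fJ biological reference is the upper end of the range quoted in the text.

```python
def energy_per_synaptic_op(power_w: float, synaptic_ops_per_s: float) -> float:
    """Average energy per synaptic operation in joules."""
    return power_w / synaptic_ops_per_s


def ops_per_second_per_watt(ops_per_s: float, power_w: float) -> float:
    """Throughput normalized by power consumption."""
    return ops_per_s / power_w


def energy_delay_product(energy_j: float, delay_s: float) -> float:
    """Energy-delay product in joule-seconds (lower is better)."""
    return energy_j * delay_s


# Hypothetical chip: 50 mW, 1e9 synaptic ops/s, 2 ms per inference.
power_w = 0.05
rate_ops_per_s = 1e9
latency_s = 2e-3

e_op = energy_per_synaptic_op(power_w, rate_ops_per_s)
throughput = ops_per_second_per_watt(rate_ops_per_s, power_w)
edp = energy_delay_product(power_w * latency_s, latency_s)

BIOLOGICAL_REFERENCE_J = 10e-15  # 10 fJ, upper end of the range in the text
print(f"energy per synaptic op: {e_op * 1e12:.1f} pJ")
print(f"ops per second per watt: {throughput:.2e}")
print(f"energy-delay product: {edp:.2e} J*s")
print(f"ratio to biological reference: {e_op / BIOLOGICAL_REFERENCE_J:.0f}x")
```

For these made-up numbers the chip spends about 50 pJ per synaptic operation, which illustrates why the energy-per-operation metric is usually reported alongside a biological reference point.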

Standardized benchmarking suites have been developed to facilitate fair comparisons across different neuromorphic architectures. These include pattern recognition tasks, sparse coding problems, and temporal sequence learning challenges that mimic real-world neuromorphic applications. Benchmarks specific to spiking neural networks (SNNs) focus on spike-based information processing efficiency, while mixed-signal benchmarks evaluate systems that combine analog and digital components.

Material parameters significantly impact these efficiency metrics. For instance, resistive switching materials with lower switching energies directly improve the energy per synaptic operation metric. Similarly, materials with higher on/off ratios enhance signal integrity, reducing error correction overhead and improving overall system efficiency. The temperature stability of materials also affects benchmarking results, as performance degradation under varying thermal conditions can dramatically impact real-world efficiency.

Cross-platform comparison frameworks have been established to normalize results across different material implementations. These frameworks account for differences in technology nodes, operating conditions, and architectural choices, enabling fair evaluation of the fundamental material contributions to efficiency. The neuromorphic community has also adopted application-specific benchmarks that evaluate performance on tasks like image recognition, natural language processing, and autonomous control, providing context-relevant efficiency measurements.

Recent efforts have focused on developing benchmarks that incorporate both accuracy and energy efficiency, recognizing that these factors often involve trade-offs in practical implementations. This holistic approach to benchmarking better reflects the complex design considerations in neuromorphic computing and helps identify optimal material parameters for specific application domains.
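One simple way to look at accuracy and energy together is to keep only the candidates that are not beaten on both axes at once, that is, the Pareto front. The sketch below assumes higher accuracy and lower energy are both preferred; the candidate names and numbers are hypothetical and do not come from any benchmark.

```python
def pareto_front(candidates):
    """Return names of candidates not dominated in (accuracy up, energy down).

    A candidate is dominated if another one is at least as good on both
    axes and strictly better on at least one.
    """
    front = []
    for name, acc, energy in candidates:
        dominated = any(
            (a >= acc and e <= energy) and (a > acc or e < energy)
            for _, a, e in candidates
        )
        if not dominated:
            front.append(name)
    return front


# Hypothetical results: (name, task accuracy, energy per inference in microjoules).
candidates = [
    ("material_A", 0.92, 40.0),
    ("material_B", 0.95, 120.0),
    ("material_C", 0.90, 60.0),
    ("material_D", 0.88, 15.0),
]
print(pareto_front(candidates))  # ['material_A', 'material_B', 'material_D']
```

Here `material_C` drops out because `material_A` is both more accurate and cheaper in energy, while the remaining three represent different trade-off points that suit different application domains.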

Manufacturing Scalability Considerations

The scalability of manufacturing processes represents a critical factor in the commercial viability of neuromorphic computing technologies. Current fabrication techniques for neuromorphic devices face significant challenges when transitioning from laboratory prototypes to mass production. Silicon-based neuromorphic chips benefit from established CMOS manufacturing infrastructure, but novel materials like phase-change memory compounds, memristive oxides, and magnetic materials often require specialized deposition techniques that are difficult to scale economically.

Material selection directly impacts manufacturing yield rates, with some promising neuromorphic materials exhibiting high defect densities during large-scale production. For instance, hafnium oxide-based memristors demonstrate excellent switching characteristics in research settings but suffer from compositional variability when manufactured at scale, leading to inconsistent device performance. This variability necessitates tighter process controls and more sophisticated quality assurance protocols, increasing production costs.
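
The sensitivity of yield to defect density can be illustrated with the classic Poisson yield model, in which the fraction of defect-free dies is exp(-A·D) for die area A and defect density D. This is a textbook simplification chosen here as an assumption. The source does not specify a yield model, and the defect densities below are hypothetical.

```python
import math


def poisson_yield(die_area_cm2: float, defect_density_per_cm2: float) -> float:
    """Expected fraction of defect-free dies, assuming randomly distributed defects."""
    if die_area_cm2 < 0 or defect_density_per_cm2 < 0:
        raise ValueError("area and defect density must be non-negative")
    return math.exp(-die_area_cm2 * defect_density_per_cm2)


# Hypothetical comparison for a 1 cm^2 die at several defect densities.
for density in (0.1, 0.5, 2.0):
    y = poisson_yield(die_area_cm2=1.0, defect_density_per_cm2=density)
    print(f"defect density {density:.1f}/cm^2 -> yield {y:.1%}")
```

Because the dependence is exponential, even a modest increase in defect density for a new material can remove a large share of usable dies, which is the cost pressure the paragraph above describes.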

Temperature sensitivity during fabrication presents another critical consideration. Many neuromorphic materials require precise thermal management during deposition and annealing processes. Materials with lower thermal budgets offer advantages in manufacturing scalability by reducing energy consumption and minimizing thermal stress on surrounding components. Chalcogenide-based phase change materials, while promising for neuromorphic applications, often require careful thermal cycling that complicates integration with standard semiconductor processes.

Integration density capabilities also vary significantly between material systems. While traditional silicon CMOS can achieve extremely high integration densities, emerging neuromorphic materials must demonstrate comparable scaling potential to remain competitive. Vertical stacking approaches using crossbar architectures have shown promise for materials like tantalum oxide and titanium oxide memristors, potentially enabling higher integration densities than conventional planar architectures.
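
A back-of-the-envelope estimate shows why stacking matters. An ideal crosspoint cell occupies roughly 4F² of area, where F is the minimum feature size, so stacking n layers gives an effective footprint of about 4F²/n per cell. The sketch below applies that idealized rule. It ignores selector devices, peripheral circuitry, and wiring overhead, and the feature size used is a hypothetical value.

```python
def crossbar_density_per_cm2(feature_size_nm: float, layers: int = 1) -> float:
    """Idealized cell density for a stacked crossbar with a 4F^2 cell per layer."""
    if feature_size_nm <= 0 or layers < 1:
        raise ValueError("feature size must be positive and layers >= 1")
    cell_area_nm2 = 4.0 * feature_size_nm ** 2
    nm2_per_cm2 = 1e14  # (1e7 nm per cm) squared
    return layers * nm2_per_cm2 / cell_area_nm2


# Hypothetical 20 nm feature size, planar versus stacked arrays.
for n_layers in (1, 4, 8):
    density = crossbar_density_per_cm2(feature_size_nm=20.0, layers=n_layers)
    print(f"{n_layers} layer(s): {density:.2e} cells/cm^2")
```

In this idealized picture density grows linearly with layer count, whereas in practice each added layer also adds process steps and thermal exposure for the layers beneath it.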

Equipment compatibility represents another crucial factor affecting manufacturing scalability. Materials compatible with existing semiconductor fabrication equipment offer significant advantages in terms of initial capital investment and production ramp-up time. Conversely, materials requiring specialized deposition or patterning techniques may face adoption barriers despite superior neuromorphic performance characteristics. Recent advances in atomic layer deposition techniques have improved the manufacturing prospects for certain oxide-based memristive materials.

Long-term stability and reliability of neuromorphic materials under standard packaging conditions must also be considered when evaluating manufacturing scalability. Materials susceptible to environmental degradation may require hermetic packaging solutions that add complexity and cost to the manufacturing process. Silicon carbide and diamond-based neuromorphic platforms offer exceptional environmental stability but present significant fabrication challenges compared to conventional materials.
