Neuromorphic Computing Materials and Electronics Industry Standards

OCT 27, 2025 | 9 MIN READ

Neuromorphic Computing Evolution and Objectives

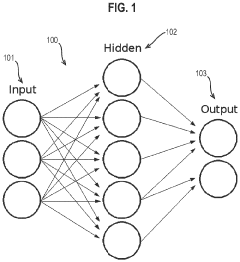

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. The field has evolved significantly since McCulloch and Pitts introduced the first mathematical model of an artificial neuron in 1943. Its fundamental objective is to develop hardware systems that mimic the brain's efficiency, adaptability, and parallel processing capabilities while overcoming the limitations of traditional von Neumann architectures.

The evolution of neuromorphic computing can be traced through several key developmental phases. Initially, research focused on theoretical models and software simulations of neural networks. The 1980s marked a significant turning point with Carver Mead's pioneering work at Caltech, which showed that analog very-large-scale integration (VLSI) circuits could emulate neural functions in silicon. This laid the groundwork for hardware implementations of neural networks.

In recent decades, the field has experienced accelerated growth driven by advancements in materials science, nanotechnology, and a deeper understanding of neuroscience. The development of memristive devices, phase-change materials, and spintronic components has enabled more efficient and biologically plausible implementations of synaptic functions. These innovations have progressively moved neuromorphic systems closer to achieving brain-like energy efficiency and computational capabilities.

The primary technical objectives of neuromorphic computing include achieving ultra-low power consumption comparable to biological systems, implementing massive parallelism for real-time processing, and developing adaptive learning capabilities that mimic biological plasticity. Additionally, researchers aim to create systems capable of unsupervised learning, fault tolerance, and context-sensitive processing—characteristics inherent to biological neural networks.

Industry standards for neuromorphic computing materials and electronics represent a critical frontier in this evolution. The establishment of standardized benchmarks, testing methodologies, and performance metrics is essential for comparing different neuromorphic implementations and accelerating commercial adoption. Current objectives include developing standards for memristor characterization, defining interface protocols for neuromorphic chips, and establishing energy efficiency metrics specific to brain-inspired computing.

Looking forward, the field is trending toward greater integration of diverse materials and electronic components to create heterogeneous neuromorphic systems. Research is increasingly focused on developing standards that address the unique challenges of neuromorphic computing, including spike-based communication protocols, synaptic plasticity models, and reliability standards for emerging memory technologies used in neural implementations.

Market Analysis for Brain-Inspired Computing Solutions

The neuromorphic computing market is experiencing significant growth, driven by increasing demand for artificial intelligence applications and the limitations of traditional computing architectures. Current market valuations place the global neuromorphic computing sector at approximately $69 million in 2024, with projections indicating a compound annual growth rate of 89% through 2030, potentially reaching a market size of $25 billion. This exponential growth trajectory reflects the transformative potential of brain-inspired computing solutions across multiple industries.
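The growth figures above can be sanity-checked with the standard compound-growth formula. The sketch below assumes a 2024 base year and six compounding periods to 2030 (the text does not state the horizon explicitly); the function names are illustrative. Under those assumptions, 89% annual growth from $69 million gives roughly $3.1 billion, while reaching $25 billion would need a rate of roughly 167%, so the quoted figures may rest on a different base year or market definition.

```python
def project_market(base_usd: float, cagr: float, years: int) -> float:
    """Compound a base value forward: base * (1 + cagr) ** years."""
    return base_usd * (1.0 + cagr) ** years


def implied_cagr(base_usd: float, target_usd: float, years: int) -> float:
    """Annual rate that takes base_usd to target_usd in `years` periods."""
    return (target_usd / base_usd) ** (1.0 / years) - 1.0


# Figures quoted in the text; the six-year horizon (2024 -> 2030) is an assumption.
BASE_2024 = 69e6
TARGET_2030 = 25e9
YEARS = 6

projected = project_market(BASE_2024, 0.89, YEARS)
required = implied_cagr(BASE_2024, TARGET_2030, YEARS)

print(f"89% CAGR for {YEARS} years gives ${projected / 1e9:.2f}B")
print(f"Reaching $25B in {YEARS} years needs a CAGR of {required:.0%}")
```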

The demand for neuromorphic computing is primarily fueled by applications requiring real-time processing of unstructured data, energy efficiency, and adaptive learning capabilities. Key market segments include automotive systems for autonomous driving, healthcare for medical imaging and diagnostics, robotics for adaptive control systems, and edge computing devices requiring low-power intelligence. The defense sector also represents a significant market, with applications in surveillance, signal processing, and autonomous systems.

Market analysis reveals distinct regional dynamics in adoption patterns. North America currently leads the market with approximately 42% share, driven by substantial research investments and the presence of major technology companies. Europe follows at 28%, with strong academic-industrial partnerships advancing commercialization efforts. The Asia-Pacific region, particularly China, Japan, and South Korea, is experiencing the fastest growth rate at 95% annually, supported by government initiatives and semiconductor manufacturing capabilities.

Customer needs assessment indicates three primary market drivers: energy efficiency requirements (particularly for edge and mobile applications), real-time processing capabilities for time-sensitive applications, and adaptability to novel data patterns without extensive retraining. Organizations are increasingly seeking solutions that can reduce computational power requirements by 90-95% compared to traditional deep learning implementations while maintaining comparable accuracy levels.

Market barriers include the lack of standardized development tools, limited software ecosystems, and integration challenges with existing computing infrastructure. Additionally, the specialized knowledge required for effective implementation restricts widespread adoption beyond research environments and specialized applications. The high initial investment costs for custom neuromorphic hardware also present significant market entry barriers for smaller organizations.

Competitive analysis shows an evolving landscape with both established technology companies and specialized startups. Intel's Loihi, IBM's TrueNorth, and BrainChip's Akida represent commercial neuromorphic solutions with varying approaches to brain-inspired computing. Venture capital funding in this sector has increased by 215% since 2020, indicating strong investor confidence in the market's future growth potential.

Current Challenges in Neuromorphic Materials and Electronics

Despite significant advancements in neuromorphic computing materials and electronics, the field faces several critical challenges that impede widespread adoption and standardization. One primary obstacle is the lack of unified industry standards for neuromorphic hardware design, testing methodologies, and performance metrics. This fragmentation creates barriers to interoperability between different neuromorphic systems and complicates the integration of these technologies into existing computing infrastructures.

Material limitations represent another significant hurdle. Current memristive devices and other neuromorphic components often suffer from reliability issues, including cycle-to-cycle variations, limited endurance, and unpredictable degradation patterns. These inconsistencies make it difficult to manufacture neuromorphic systems at scale with predictable performance characteristics, hindering commercial viability.
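Cycle-to-cycle variation of the kind described above is commonly summarised as a coefficient of variation: the standard deviation of a device's resistance across repeated switching cycles divided by its mean. The following is a minimal sketch of that calculation; the function name and the readings are made up for illustration and are not measurements from any real device.

```python
import statistics


def cycle_to_cycle_cv(resistances_ohm):
    """Coefficient of variation (sample std / mean) of one device's
    resistance read after each of several switching cycles."""
    if len(resistances_ohm) < 2:
        raise ValueError("need readings from at least two cycles")
    mean = statistics.fmean(resistances_ohm)
    return statistics.stdev(resistances_ohm) / mean


# Hypothetical low-resistance-state readings (ohms) over five cycles.
readings = [10.0e3, 10.4e3, 9.7e3, 10.1e3, 9.8e3]
cv = cycle_to_cycle_cv(readings)
print(f"cycle-to-cycle CV: {cv:.1%}")
```

A lower value means more repeatable switching. A characterization standard would also need to fix the read voltage, the number of cycles, and the resistance state being measured, none of which this sketch specifies.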

Energy efficiency, while theoretically superior to traditional computing architectures, remains challenging to optimize in practice. Many experimental neuromorphic materials still consume considerable power during switching operations or exhibit significant leakage currents. The trade-off between computational capability and power consumption continues to be a delicate balance that researchers struggle to perfect.

Fabrication challenges persist as well. The integration of novel neuromorphic materials with conventional CMOS technology presents complex manufacturing issues. Compatibility problems arise from differences in processing temperatures, chemical sensitivities, and structural requirements. These manufacturing hurdles significantly increase production costs and limit mass production capabilities.

The scalability of neuromorphic architectures presents another formidable challenge. As systems grow in size and complexity, maintaining timing precision, signal integrity, and thermal management becomes increasingly difficult. Current designs often struggle to maintain performance consistency when scaled beyond laboratory prototypes.

Knowledge gaps in the fundamental understanding of brain-inspired computing principles further complicate development efforts. The precise mechanisms of biological neural networks remain partially understood, making it difficult to create truly biomimetic artificial systems. This incomplete theoretical foundation leads to suboptimal implementations that fail to capture the full efficiency and adaptability of biological neural networks.

Cross-disciplinary collaboration barriers exist between materials scientists, electrical engineers, computer scientists, and neuroscientists. The highly specialized nature of each field creates communication challenges and slows the integration of insights from different domains, hampering holistic solutions to neuromorphic computing challenges.

State-of-the-Art Neuromorphic Hardware Implementations

01 Memristive devices for neuromorphic computing

Memristive devices are key components in neuromorphic computing systems, mimicking the behavior of biological synapses. These devices can change their resistance based on the history of applied voltage or current, enabling them to store and process information simultaneously. Materials used in memristive devices include metal oxides, phase-change materials, and ferroelectric materials, which exhibit non-volatile memory characteristics suitable for energy-efficient neuromorphic computing applications.

- Memristive devices for neuromorphic computing: Memristive devices are key components in neuromorphic computing systems, mimicking the behavior of biological synapses. These devices can change their resistance based on the history of applied voltage or current, enabling them to store and process information simultaneously. Materials such as metal oxides and phase-change materials are commonly used in memristive devices for neuromorphic applications, offering advantages like low power consumption, high density, and non-volatility.

- Phase-change materials for neuromorphic hardware: Phase-change materials (PCMs) offer unique properties for neuromorphic computing applications. These materials can rapidly switch between amorphous and crystalline states, providing multiple resistance levels that can be used to implement synaptic weights in artificial neural networks. PCM-based neuromorphic devices exhibit excellent scalability, fast switching speeds, and good endurance, making them promising candidates for brain-inspired computing architectures.

- 2D materials for neuromorphic electronics: Two-dimensional (2D) materials such as graphene, transition metal dichalcogenides, and hexagonal boron nitride are being explored for neuromorphic computing applications. These atomically thin materials offer unique electronic properties, high carrier mobility, and mechanical flexibility. When incorporated into neuromorphic devices, 2D materials can enable efficient synaptic functions, low power consumption, and high integration density, potentially leading to more efficient brain-inspired computing systems.

- Organic and polymer-based neuromorphic materials: Organic and polymer-based materials are emerging as promising candidates for neuromorphic computing due to their flexibility, biocompatibility, and tunable properties. These materials can be engineered to exhibit synaptic behaviors such as spike-timing-dependent plasticity and short/long-term potentiation. Organic neuromorphic devices typically operate at lower voltages compared to inorganic counterparts and can be fabricated using low-cost solution processing techniques, making them attractive for large-area, flexible neuromorphic systems.

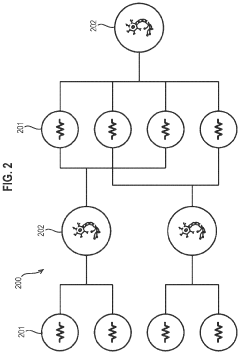

- Neuromorphic architectures and integration technologies: Advanced integration technologies are essential for implementing neuromorphic computing systems that can efficiently process information in a brain-like manner. These include 3D integration techniques, crossbar arrays, and novel circuit designs that enable massive parallelism and co-location of memory and processing. Such architectures aim to overcome the von Neumann bottleneck by mimicking the brain's structure, where computation and memory are distributed throughout the network, leading to more energy-efficient and scalable neuromorphic systems.
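The co-location of memory and processing in a crossbar array, mentioned in the last item above, can be illustrated with an idealized model. Each device stores a weight as a conductance; applying voltages to the rows produces column currents that equal a matrix-vector product, by Ohm's law and Kirchhoff's current law. The sketch below assumes ideal devices and wires (no line resistance, sneak paths, or nonlinearity), and the function name is illustrative.

```python
def crossbar_mvm(conductances_s, voltages_v):
    """Column currents of an ideal crossbar.

    conductances_s[i][j] is the conductance (siemens) of the device joining
    input row i to output column j; voltages_v[i] is the voltage on row i.
    Ohm's law gives each device current G * V, and Kirchhoff's current law
    sums those currents along each column: I_j = sum_i G[i][j] * V[i].
    """
    if len(conductances_s) != len(voltages_v):
        raise ValueError("one voltage per row is required")
    n_cols = len(conductances_s[0])
    currents = [0.0] * n_cols
    for row, v in zip(conductances_s, voltages_v):
        if len(row) != n_cols:
            raise ValueError("all rows must have the same number of columns")
        for j, g in enumerate(row):
            currents[j] += g * v
    return currents


# Hypothetical 2x2 array: conductances in siemens, row voltages in volts.
G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
V = [0.1, 0.2]
print(crossbar_mvm(G, V))  # column currents in amperes
```

In hardware the whole product is obtained in one read step because every device contributes its current in parallel; the nested loop here only emulates that summation.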

02 Phase-change materials for neuromorphic electronics

Phase-change materials (PCMs) offer unique properties for neuromorphic computing applications due to their ability to switch between amorphous and crystalline states. This reversible phase transition results in significant changes in electrical resistance, making PCMs suitable for implementing synaptic functions in neuromorphic systems. These materials enable multi-level storage capabilities, allowing for the implementation of analog computing paradigms that closely resemble biological neural networks.

03 2D materials for neuromorphic computing architectures

Two-dimensional (2D) materials such as graphene, transition metal dichalcogenides, and hexagonal boron nitride offer exceptional properties for neuromorphic computing applications. Their atomically thin nature provides unique electronic properties, high carrier mobility, and mechanical flexibility. These materials can be engineered to create artificial synapses and neurons with low power consumption and high integration density, making them promising candidates for next-generation neuromorphic hardware implementations.

04 Spintronic devices for brain-inspired computing

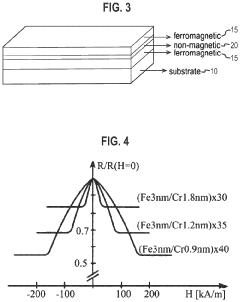

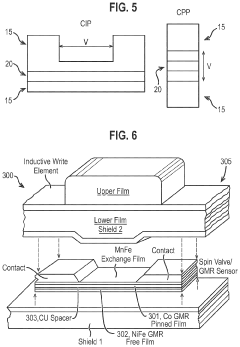

Spintronic devices utilize the spin of electrons rather than their charge for information processing, offering advantages in power efficiency and speed for neuromorphic computing. These devices include magnetic tunnel junctions, spin-orbit torque devices, and domain wall memories that can implement synaptic and neuronal functions. Spintronic neuromorphic systems can perform parallel processing with significantly reduced energy consumption compared to conventional CMOS-based approaches.

05 Organic and bio-inspired materials for neuromorphic electronics

Organic and bio-inspired materials offer unique advantages for neuromorphic computing, including biocompatibility, flexibility, and self-healing properties. These materials can be used to create electronic devices that more closely mimic biological neural systems in both form and function. Organic semiconductors, conducting polymers, and biomolecular materials enable the development of soft, flexible neuromorphic systems that can interface with biological tissues and operate in diverse environments.

Industry Leaders in Neuromorphic Computing Ecosystem

The neuromorphic computing materials and electronics industry is currently in its early growth phase, characterized by rapid technological advancements and increasing market interest. The global market is projected to expand significantly, driven by AI applications that require energy-efficient computing. In terms of technical maturity, the field is transitioning from research to commercialization, with key players at varying levels of advancement. IBM leads with significant research infrastructure and commercial implementations, while Samsung Electronics and Hewlett Packard Enterprise are making substantial investments. Emerging players include Syntiant, with edge AI solutions, and research institutions such as Zhejiang University, Tsinghua University, and the Institute of Automation, Chinese Academy of Sciences, which contribute fundamental breakthroughs. Regional innovation hubs are forming in the US, China, and South Korea, indicating a globally distributed competitive landscape.

International Business Machines Corp.

Technical Solution: IBM has pioneered neuromorphic computing through its TrueNorth and subsequent Brain-inspired Computing architectures. Their approach focuses on creating chips that mimic neural networks using phase-change memory (PCM) materials and specialized synaptic devices. IBM's neuromorphic systems implement spike-timing-dependent plasticity (STDP) learning mechanisms and utilize specialized non-volatile memory materials to create artificial synapses. Their TrueNorth chip contains 5.4 billion transistors organized into 4,096 neurosynaptic cores with 1 million programmable neurons and 256 million configurable synapses[1]. IBM has also developed standards for neuromorphic hardware interfaces and programming models through their Neuromorphic Computing Software Development Kit, which provides a unified framework for programming various neuromorphic hardware platforms. They actively participate in the IEEE Neuromorphic Computing Standards Working Group to establish industry-wide protocols for neuromorphic system integration and benchmarking[2].

Strengths: Extensive research infrastructure and decades of experience in neuromorphic computing; established partnerships with academic institutions; comprehensive approach covering materials, hardware, and software standards. Weaknesses: Proprietary technology ecosystem may limit broader adoption; high implementation costs compared to conventional computing solutions; still facing challenges in scaling neuromorphic systems for commercial applications.
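The spike-timing-dependent plasticity (STDP) mechanism mentioned in the IBM entry can be illustrated with the textbook pair-based rule: a synapse is strengthened when the presynaptic spike precedes the postsynaptic spike and weakened otherwise, with an exponentially decaying dependence on the time difference. The sketch below is a generic illustration, not a description of IBM's hardware; the function names, amplitudes, time constants, and weight bounds are all assumed values.

```python
import math


def stdp_delta_w(dt_ms, a_plus=0.01, a_minus=0.012,
                 tau_plus_ms=20.0, tau_minus_ms=20.0):
    """Pair-based STDP weight change for dt_ms = t_post - t_pre.

    dt > 0 (pre fires before post): potentiation, a_plus * exp(-dt / tau_plus).
    dt < 0 (post fires before pre): depression, -a_minus * exp(dt / tau_minus).
    All parameter defaults are illustrative assumptions.
    """
    if dt_ms > 0:
        return a_plus * math.exp(-dt_ms / tau_plus_ms)
    if dt_ms < 0:
        return -a_minus * math.exp(dt_ms / tau_minus_ms)
    return 0.0


def apply_stdp(weight, dt_ms, w_min=0.0, w_max=1.0):
    """Update a weight by the STDP rule and clip it to [w_min, w_max]."""
    return min(w_max, max(w_min, weight + stdp_delta_w(dt_ms)))


w = 0.5
w = apply_stdp(w, dt_ms=5.0)    # pre before post: weight increases
w = apply_stdp(w, dt_ms=-5.0)   # post before pre: weight decreases
print(w)
```

The clipping step stands in for the finite conductance range of a physical synaptic device.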

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed advanced neuromorphic computing materials and electronics focusing on resistive random-access memory (RRAM) and magnetoresistive random-access memory (MRAM) technologies. Their approach integrates these memory technologies directly with CMOS logic to create efficient neuromorphic processing units. Samsung's neuromorphic architecture employs a crossbar array structure using phase-change materials and metal-oxide memristors that can simultaneously perform storage and computation, significantly reducing the energy consumption compared to conventional von Neumann architectures. Their neuromorphic chips demonstrate power efficiency of less than 20 pJ per synaptic operation[3]. Samsung has also contributed to industry standardization efforts through participation in the International Roadmap for Devices and Systems (IRDS) and has proposed standardized testing methodologies for neuromorphic computing materials and devices. Their research focuses on developing reliable, scalable manufacturing processes for neuromorphic hardware that can be integrated into existing semiconductor production lines[4].

Strengths: Vertical integration capabilities from materials research to device manufacturing; extensive semiconductor fabrication expertise; strong patent portfolio in neuromorphic materials. Weaknesses: Relatively newer entrant to neuromorphic computing compared to IBM; standards proposals still gaining industry acceptance; challenges in achieving consistent performance across large-scale neuromorphic arrays.

Key Patents and Research in Neuromorphic Materials

Neuromorphic computing

Patent pending: US20240070446A1

Innovation

- The use of magnetoresistive elements, which can be magnetized to adjust resistance values, allowing for power-efficient multiplication and division operations by controlling external magnetic fields, eliminating the need for active voltage supply.

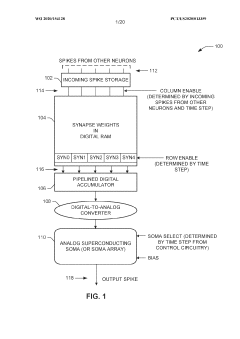

Superconducting neuromorphic core

Patent: WO2020154128A1

Innovation

- A superconducting neuromorphic core is developed, incorporating a digital memory array for synapse weight storage, a digital accumulator, and analog soma circuitry to simulate multiple neurons, enabling efficient and scalable neural network operations with improved biological fidelity.

Standardization Frameworks for Neuromorphic Systems

The development of standardization frameworks for neuromorphic systems represents a critical step in the maturation of this emerging technology. Currently, the neuromorphic computing ecosystem faces significant fragmentation due to diverse approaches in materials, device architectures, and system integration methodologies. This fragmentation impedes interoperability, benchmarking, and widespread commercial adoption.

Several international standards organizations have begun addressing this challenge. The IEEE has established working groups focused on neuromorphic computing standards, particularly through its Rebooting Computing initiative. These efforts aim to create common terminology, performance metrics, and testing methodologies that enable fair comparison between different neuromorphic implementations.

The International Electrotechnical Commission (IEC) has also initiated technical committees examining standards for brain-inspired computing systems, with particular attention to the unique requirements of neuromorphic materials and devices. Their work focuses on establishing electrical characterization methods for memristive devices and other neuromorphic components.

Industry consortia play an equally important role in standardization efforts. The Neuromorphic Computing Industry Consortium (NCIC) brings together key stakeholders from academia, industry, and government to develop reference architectures and interface specifications. Their framework addresses both hardware and software aspects, including standard APIs for neuromorphic programming models.

Material characterization standards represent another crucial dimension. Organizations like ASTM International and SEMI have begun developing test methods specifically for neuromorphic materials, addressing properties such as switching endurance, retention time, and analog precision. These parameters differ significantly from conventional semiconductor metrics.

Interoperability standards are emerging to ensure neuromorphic systems can communicate with traditional computing architectures. These include data exchange formats, communication protocols, and hardware interfaces that facilitate integration into existing computing ecosystems while preserving the unique advantages of neuromorphic approaches.

Safety and reliability frameworks are also under development, particularly important as neuromorphic systems find applications in critical domains like autonomous vehicles and medical devices. These standards address fault tolerance, degradation monitoring, and failure prediction methodologies specific to neuromorphic hardware.

The convergence of these standardization efforts will likely accelerate in the next three to five years, creating a more cohesive ecosystem that enables broader adoption of neuromorphic computing technologies across multiple industries.

Several international standards organizations have begun addressing this challenge. The IEEE has established working groups focused on neuromorphic computing standards, particularly through its Rebooting Computing initiative. These efforts aim to create common terminology, performance metrics, and testing methodologies that enable fair comparison between different neuromorphic implementations.

The International Electrotechnical Commission (IEC) has also initiated technical committees examining standards for brain-inspired computing systems, with particular attention to the unique requirements of neuromorphic materials and devices. Their work focuses on establishing electrical characterization methods for memristive devices and other neuromorphic components.

Industry consortia play an equally important role in standardization efforts. The Neuromorphic Computing Industry Consortium (NCIC) brings together key stakeholders from academia, industry, and government to develop reference architectures and interface specifications. Their framework addresses both hardware and software aspects, including standard APIs for neuromorphic programming models.

Material characterization standards represent another crucial dimension. Organizations like ASTM International and SEMI have begun developing test methods specifically for neuromorphic materials, addressing properties such as switching endurance, retention time, and analog precision - parameters that differ significantly from conventional semiconductor metrics.

Interoperability standards are emerging to ensure neuromorphic systems can communicate with traditional computing architectures. These include data exchange formats, communication protocols, and hardware interfaces that facilitate integration into existing computing ecosystems while preserving the unique advantages of neuromorphic approaches.

Safety and reliability frameworks are also under development. They become especially important as neuromorphic systems find applications in critical domains such as autonomous vehicles and medical devices. These standards address fault tolerance, degradation monitoring, and failure prediction methodologies specific to neuromorphic hardware.

The convergence of these standardization efforts will likely accelerate in the next three to five years, creating a more cohesive ecosystem that enables broader adoption of neuromorphic computing technologies across multiple industries.

Energy Efficiency Benchmarks for Neuromorphic Devices

Energy efficiency has emerged as a critical benchmark for evaluating neuromorphic computing devices, particularly as these brain-inspired systems aim to deliver cognitive capabilities with significantly lower power consumption than traditional computing architectures. Current industry standards for measuring energy efficiency in neuromorphic devices typically focus on metrics such as operations per second per watt (OPS/W), energy per synaptic operation (pJ/SOP), and total system power consumption under various computational loads. These benchmarks provide essential frameworks for comparing different neuromorphic implementations across diverse material platforms and electronic designs.
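The two rate-based metrics above follow directly from a measured average power and a measured operation rate. The sketch below shows the arithmetic only; the function names and the example figures (50 mW, 10^10 synaptic operations per second) are illustrative assumptions, not values from any standard.

```python
def energy_per_sop_pj(avg_power_w: float, sops_per_second: float) -> float:
    """Energy per synaptic operation in picojoules (pJ/SOP).

    Energy per operation = average power / operation rate.
    """
    if avg_power_w < 0 or sops_per_second <= 0:
        raise ValueError("power must be >= 0 and rate must be > 0")
    joules_per_sop = avg_power_w / sops_per_second
    return joules_per_sop * 1e12  # convert J to pJ


def ops_per_second_per_watt(ops_per_second: float, avg_power_w: float) -> float:
    """Throughput efficiency (OPS/W): operations per second per watt."""
    if avg_power_w <= 0:
        raise ValueError("power must be > 0")
    return ops_per_second / avg_power_w


if __name__ == "__main__":
    # Hypothetical measurement, chosen only to illustrate the units.
    power_w = 0.050   # 50 mW average power
    rate = 1e10       # synaptic operations per second
    print(f"{energy_per_sop_pj(power_w, rate):.2f} pJ/SOP")
    print(f"{ops_per_second_per_watt(rate, power_w):.3e} OPS/W")
```

With these made-up inputs the device spends about 5 pJ per synaptic operation. OPS/W is simply the reciprocal of joules per operation, so the two metrics carry the same information when the operation counted is the same.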

Leading research institutions and industry consortia have established several key energy efficiency targets that serve as reference points for neuromorphic device development. The most ambitious benchmark aims for energy consumption in the femtojoule range per synaptic operation, approaching the remarkable efficiency of biological neural systems. Mid-tier benchmarks typically target 1 to 10 picojoules per operation. Entry-level neuromorphic solutions may operate in the nanojoule range and still offer advantages over conventional computing architectures for specific workloads.
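As a rough illustration, the three tiers described above can be written as a lookup on energy per operation. The exact cut-offs are an assumption: the text names ranges but not boundaries, and it leaves the span between 10 pJ and 1 nJ unassigned, so this sketch reports that span as falling between tiers rather than guessing.

```python
PICO = 1e-12
NANO = 1e-9
MICRO = 1e-6


def classify_tier(energy_per_op_j: float) -> str:
    """Map energy per operation (in joules) to the tiers named in the text.

    Boundaries are assumptions inferred from the wording, not a standard.
    """
    if energy_per_op_j <= 0:
        raise ValueError("energy must be positive")
    if energy_per_op_j < 1 * PICO:
        return "ambitious (femtojoule range)"
    if energy_per_op_j <= 10 * PICO:
        return "mid-tier (1 to 10 pJ)"
    if energy_per_op_j < 1 * NANO:
        return "between tiers (not assigned in the text)"
    if energy_per_op_j < 1 * MICRO:
        return "entry-level (nanojoule range)"
    return "outside the listed tiers"


if __name__ == "__main__":
    # Hypothetical sample values spanning the ranges.
    for energy in (10e-15, 5e-12, 100e-12, 2e-9):
        print(f"{energy:.1e} J -> {classify_tier(energy)}")
```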

Standardized testing methodologies have been developed to ensure fair comparisons across different neuromorphic implementations. These include standardized neural network topologies, representative workloads for inference and learning tasks, and consistent measurement protocols that account for both active computation and standby power consumption. The Neuromorphic Computing Benchmark Suite (NCBS) has gained significant adoption as it provides a comprehensive framework for evaluating energy efficiency across diverse application scenarios including pattern recognition, anomaly detection, and temporal sequence processing.
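One way to account for both active computation and standby power is to integrate power over a full inference period rather than over the active burst alone. The following is a minimal sketch under that assumption; the `PowerProfile` type and the example numbers are hypothetical and are not part of any published measurement protocol.

```python
from dataclasses import dataclass


@dataclass
class PowerProfile:
    """Hypothetical per-inference power profile of a device under test."""
    active_power_w: float    # power while computing
    standby_power_w: float   # power while idle between inferences
    active_time_s: float     # time spent computing per inference


def energy_per_inference_j(profile: PowerProfile, period_s: float) -> float:
    """Total energy over one inference period, including idle time."""
    if period_s < profile.active_time_s:
        raise ValueError("period must be at least the active time")
    idle_time_s = period_s - profile.active_time_s
    return (profile.active_power_w * profile.active_time_s
            + profile.standby_power_w * idle_time_s)


def average_power_w(profile: PowerProfile, period_s: float) -> float:
    """Average power over the period (energy divided by time)."""
    return energy_per_inference_j(profile, period_s) / period_s


if __name__ == "__main__":
    # Made-up figures: 20 mW for 2 ms, then 0.1 mW standby, 100 inferences/s.
    profile = PowerProfile(active_power_w=0.020,
                           standby_power_w=0.0001,
                           active_time_s=0.002)
    period = 0.010
    print(f"{energy_per_inference_j(profile, period) * 1e6:.2f} uJ per inference")
    print(f"{average_power_w(profile, period) * 1e3:.2f} mW average")
```

Reporting only the active-burst energy would understate consumption for sparse workloads, where the device idles most of the time, which is why the period is an explicit input here.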

Material selection significantly impacts energy efficiency benchmarks, with emerging materials showing promising results. Oxide-based memristive devices have demonstrated energy consumption as low as 10 fJ per synaptic operation in laboratory settings, while phase-change materials typically operate in the 100 fJ to 1 pJ range. Spintronic implementations offer competitive efficiency but face integration challenges with conventional CMOS processes. These material-specific benchmarks help guide research priorities and investment decisions across the neuromorphic computing landscape.

The relationship between energy efficiency and computational accuracy presents an important trade-off that must be carefully considered in benchmark development. Industry standards increasingly incorporate this relationship through Pareto-optimal curves that illustrate the efficiency-accuracy frontier. This approach acknowledges that some applications prioritize ultra-low power consumption and tolerate moderate accuracy, whereas others require high precision within reasonable energy constraints. Future benchmark evolution will likely incorporate additional dimensions including scalability, reliability, and integration potential with conventional computing systems.
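The efficiency-accuracy frontier can be computed from a set of measured (energy, accuracy) pairs by discarding every design that another design beats on both axes. The sketch below is one simple way to do this, assuming lower energy and higher accuracy are both preferred; the data points are invented for illustration.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (energy per inference, accuracy)


def pareto_frontier(points: List[Point]) -> List[Point]:
    """Return the points not dominated by any other point.

    A point is kept only if no other point has lower-or-equal energy
    together with higher-or-equal accuracy.
    """
    frontier: List[Point] = []
    best_accuracy = float("-inf")
    # Sort by energy ascending; on ties, put the most accurate first.
    for energy, accuracy in sorted(points, key=lambda p: (p[0], -p[1])):
        # Every cheaper point was already seen, so a point survives
        # only if it improves on the best accuracy so far.
        if accuracy > best_accuracy:
            frontier.append((energy, accuracy))
            best_accuracy = accuracy
    return frontier


if __name__ == "__main__":
    # Hypothetical designs: energy in microjoules, accuracy as a fraction.
    designs = [(1.0, 0.80), (2.0, 0.78), (5.0, 0.92),
               (5.0, 0.90), (20.0, 0.95), (30.0, 0.94)]
    for energy, accuracy in pareto_frontier(designs):
        print(f"{energy:5.1f} uJ  accuracy {accuracy:.2f}")
```

For this sample set, three designs remain on the frontier: the cheapest one, the 5 uJ design with 0.92 accuracy, and the 20 uJ design. An application then picks a point along that curve according to whether power or precision is its binding constraint.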
