Neuromorphic Computing Materials: Optimization Techniques

OCT 27, 2025 · 9 MIN READ

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. Since Carver Mead introduced the concept in the late 1980s, the field has evolved from theoretical frameworks to practical implementations that aim to replicate the brain's efficiency and adaptability. Milestones along the way range from early analog VLSI implementations to contemporary memristor-based systems that exhibit synaptic plasticity.

The fundamental objective of neuromorphic computing materials research is to develop substrates that can efficiently emulate neural processes while overcoming the limitations of traditional von Neumann architectures. These materials must facilitate parallel processing, exhibit low power consumption, and demonstrate adaptive learning capabilities—characteristics inherent to biological neural networks but challenging to replicate in silicon-based systems.

Recent advancements have focused on materials optimization techniques that enhance the performance metrics critical to neuromorphic systems. These include improving switching speed, reducing energy consumption per synaptic event, increasing device reliability, and extending operational lifespans. The convergence of material science, electrical engineering, and computer architecture has accelerated innovation in this domain, leading to novel material compositions and fabrication methodologies.

The current technological landscape reveals a growing emphasis on materials that can support spike-timing-dependent plasticity (STDP), a key mechanism for learning in biological systems. Phase-change materials, resistive random-access memory (RRAM), and ferroelectric materials have emerged as promising candidates due to their inherent physical properties that can be leveraged for neuromorphic applications.
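To make the STDP mechanism concrete, the sketch below uses the common pair-based exponential form of the rule: a presynaptic spike that precedes a postsynaptic spike strengthens the synapse, and the reverse order weakens it. The function names, amplitudes, and time constants are illustrative assumptions, not values taken from any device discussed here.

```python
import math

def stdp_delta_w(delta_t_ms, a_plus=0.01, a_minus=0.012,
                 tau_plus_ms=20.0, tau_minus_ms=20.0):
    """Weight change for one spike pair, with delta_t_ms = t_post - t_pre.

    All parameter values are placeholders chosen for illustration.
    """
    if delta_t_ms > 0:   # pre before post: potentiation
        return a_plus * math.exp(-delta_t_ms / tau_plus_ms)
    if delta_t_ms < 0:   # post before pre: depression
        return -a_minus * math.exp(delta_t_ms / tau_minus_ms)
    return 0.0

def apply_stdp(weight, pre_spikes_ms, post_spikes_ms, w_min=0.0, w_max=1.0):
    """Accumulate pair-wise updates, then clip to the allowed weight range."""
    for t_pre in pre_spikes_ms:
        for t_post in post_spikes_ms:
            weight += stdp_delta_w(t_post - t_pre)
    return min(w_max, max(w_min, weight))
```

In a material implementation the weight would correspond to a device conductance, and the clipping step stands in for the physical conductance limits.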

Looking forward, the field is trending toward heterogeneous integration of multiple material systems to create more complex and capable neuromorphic architectures. This approach aims to address the multifaceted requirements of next-generation cognitive computing systems, including real-time processing of sensory data, autonomous decision-making, and adaptive learning in dynamic environments.

The optimization of neuromorphic computing materials represents not merely an incremental improvement in existing technologies but a transformative approach to computing that could redefine the capabilities and applications of artificial intelligence systems. As research progresses, the goal remains to develop materials and techniques that can bridge the gap between the remarkable efficiency of biological neural systems and the practical requirements of computational devices.

Market Analysis for Brain-Inspired Computing Solutions

The neuromorphic computing market is experiencing significant growth, driven by increasing demand for brain-inspired computing solutions across various industries. Current market valuations place the global neuromorphic computing market at approximately 3.2 billion USD in 2023, with projections indicating a compound annual growth rate of 23.7% through 2030. This remarkable growth trajectory is fueled by the inherent advantages of neuromorphic systems, particularly their energy efficiency and parallel processing capabilities.
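As a quick check on what these figures imply, the snippet below compounds the stated 2023 valuation at the stated growth rate. It assumes the rate applies annually from the 2023 base through 2030 (seven compounding periods), which the text does not spell out.

```python
def project_market(base_value, cagr, years):
    """Compound a base value at a constant annual growth rate."""
    return base_value * (1.0 + cagr) ** years

BASE_2023_BILLION_USD = 3.2   # figure quoted in the text
CAGR = 0.237                  # figure quoted in the text

for year in range(2023, 2031):
    value = project_market(BASE_2023_BILLION_USD, CAGR, year - 2023)
    print(year, round(value, 2))
```

Under that assumption the projection lands at roughly 14 billion USD in 2030.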

Healthcare represents one of the most promising application sectors, with neuromorphic solutions being deployed for medical imaging analysis, disease diagnosis, and patient monitoring systems. The integration of these technologies has demonstrated up to 40% improvement in diagnostic accuracy while reducing power consumption by 65% compared to traditional computing approaches.

Autonomous vehicles constitute another high-potential market segment, where neuromorphic computing enables real-time sensor data processing and decision-making capabilities. Industry leaders like Tesla, Waymo, and traditional automotive manufacturers are investing heavily in neuromorphic research, recognizing its potential to solve complex perception challenges while meeting strict power constraints in vehicular environments.

The artificial intelligence and machine learning sector represents the largest current market for neuromorphic computing materials and optimization techniques. This segment values the ability of neuromorphic systems to perform complex pattern recognition tasks with significantly reduced energy requirements. Financial institutions have begun implementing these solutions for fraud detection systems, reporting 30% improvements in detection rates while reducing computational overhead.

Market analysis reveals regional variations in adoption rates. North America currently leads with approximately 42% market share, followed by Europe at 28% and Asia-Pacific at 24%. However, the Asia-Pacific region is expected to demonstrate the highest growth rate over the next five years due to substantial investments in semiconductor manufacturing and neuromorphic research initiatives in China, Japan, and South Korea.

Customer demand patterns indicate a growing preference for integrated neuromorphic solutions that combine optimized materials with specialized software frameworks. End-users increasingly seek complete ecosystems rather than isolated components, creating opportunities for companies that can deliver comprehensive neuromorphic platforms.

Market barriers include high initial development costs, limited standardization across the industry, and competition from quantum computing technologies. However, the specialized nature of neuromorphic computing for edge applications provides a distinct competitive advantage that quantum systems cannot currently match.

Current Neuromorphic Materials Landscape and Barriers

The neuromorphic computing materials landscape is currently dominated by several key material categories, each with distinct advantages and limitations. Traditional CMOS-based implementations remain prevalent due to their manufacturing maturity, but they struggle with power efficiency when mimicking neural functions. Emerging materials like phase-change memory (PCM), resistive random-access memory (RRAM), and magnetic RAM (MRAM) have demonstrated promising characteristics for neuromorphic applications, offering non-volatile storage and analog computation capabilities essential for brain-inspired computing.

PCM materials, typically chalcogenide-based compounds like Ge2Sb2Te5, exhibit excellent scalability and multi-level storage capabilities but face challenges with energy consumption during programming and long-term stability. RRAM technologies, utilizing metal oxides such as HfO2 and TiO2, provide excellent integration density and low power operation, though they struggle with device-to-device variability and retention issues that impact computational reliability.

Memristive materials represent another significant category, with metal oxide interfaces showing particular promise for synaptic weight implementation. However, these materials often exhibit non-linear conductance changes and cycle-to-cycle variations that complicate their deployment in large-scale neuromorphic systems. The stochastic nature of their switching behavior presents both challenges for deterministic computing and opportunities for probabilistic neural networks.
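The non-linear conductance change and cycle-to-cycle variation described above can be illustrated with a simple phenomenological model in which each programming pulse moves the conductance a fixed fraction of the remaining distance to its limit, plus Gaussian noise. This is a generic sketch, not a model of any specific device; `alpha`, `sigma`, and the normalized conductance range are assumptions.

```python
import random

def apply_pulse(g, potentiate, alpha=0.1, sigma=0.0,
                g_min=0.0, g_max=1.0, rng=random):
    """Return the conductance after one programming pulse.

    The step shrinks as g approaches its limit (non-linearity), and
    sigma adds cycle-to-cycle noise. All values are illustrative.
    """
    if potentiate:
        step = alpha * (g_max - g)
    else:
        step = -alpha * (g - g_min)
    step += rng.gauss(0.0, sigma)
    return min(g_max, max(g_min, g + step))

def pulse_train(n_pulses, potentiate=True, g_start=0.0, **kwargs):
    """Apply identical pulses and record the conductance after each one."""
    trace = [g_start]
    for _ in range(n_pulses):
        trace.append(apply_pulse(trace[-1], potentiate, **kwargs))
    return trace

ideal = pulse_train(10)                                       # noise-free
noisy = pulse_train(10, sigma=0.02, rng=random.Random(1))     # with variation
```

Comparing `ideal` and `noisy` shows why identical pulses do not produce identical weight updates, which is the property that training algorithms must tolerate or exploit.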

Spin-based materials, including magnetic tunnel junctions and spintronic devices, offer ultra-low power operation but currently lack the maturity of other approaches. Their integration with conventional CMOS processes remains complex, limiting widespread adoption despite their theoretical advantages for neuromorphic implementations.

A significant barrier across all material platforms is the challenge of achieving reliable analog behavior with sufficient precision for complex neural network implementations. Most current materials exhibit either binary or limited multi-state capabilities, falling short of the continuous weight adjustments ideal for neuromorphic systems. Additionally, the trade-off between retention time and programming energy presents a fundamental materials science challenge that requires innovative solutions.
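The precision limit can be made tangible by mapping a continuous weight onto a device with a fixed number of evenly spaced states. The sketch below assumes uniform spacing and a symmetric weight range, which real devices only approximate; the function name and ranges are hypothetical.

```python
def quantize_weight(w, n_levels, w_min=-1.0, w_max=1.0):
    """Snap a continuous weight to the nearest of n_levels evenly spaced states."""
    if n_levels < 2:
        raise ValueError("a device needs at least two states")
    w = min(w_max, max(w_min, w))
    step = (w_max - w_min) / (n_levels - 1)
    index = round((w - w_min) / step)
    return w_min + index * step

# Worst-case rounding error is half a step, so it halves roughly
# every time the number of usable states doubles.
for n_levels in (2, 4, 16, 64):
    step = 2.0 / (n_levels - 1)
    print(n_levels, "states -> max error", step / 2)
```

A binary device (two states) can only represent the sign of a weight in this scheme, which is why limited multi-state capability constrains network accuracy.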

Fabrication scalability represents another critical barrier, as many promising materials demonstrate excellent properties in laboratory settings but encounter significant challenges in mass production environments. Integration with existing semiconductor manufacturing processes often requires substantial modifications to established workflows, increasing production costs and slowing adoption rates.

The environmental stability of neuromorphic materials also presents concerns, with many emerging materials showing sensitivity to temperature fluctuations, oxidation, or mechanical stress. These factors impact long-term reliability and limit deployment scenarios, particularly for edge computing applications where environmental controls may be minimal.

Contemporary Material Optimization Approaches

01 Memristive materials for neuromorphic computing

Memristive materials are being optimized for neuromorphic computing applications due to their ability to mimic synaptic behavior. These materials can change their resistance based on the history of applied voltage or current, making them ideal for implementing artificial neural networks. Optimization techniques focus on improving switching characteristics, endurance, and energy efficiency of these materials to enhance their performance in neuromorphic systems.

- Memristive materials for neuromorphic computing: Memristive materials are being optimized for neuromorphic computing applications due to their ability to mimic synaptic behavior. These materials can change their resistance based on the history of applied voltage or current, making them ideal for implementing artificial neural networks in hardware. Optimization focuses on improving switching characteristics, endurance, and energy efficiency of these materials to enhance the performance of neuromorphic systems.

- Phase-change materials for neuromorphic devices: Phase-change materials (PCMs) are being developed for neuromorphic computing applications due to their ability to switch between amorphous and crystalline states. These materials exhibit multiple resistance states that can be used to implement synaptic weights in artificial neural networks. Optimization efforts focus on improving the stability, switching speed, and power consumption of PCMs to enhance their performance in neuromorphic computing systems.

- 2D materials for neuromorphic computing: Two-dimensional (2D) materials are being explored for neuromorphic computing applications due to their unique electronic properties and scalability. These atomically thin materials, such as graphene and transition metal dichalcogenides, offer advantages in terms of device density, power efficiency, and novel computing paradigms. Optimization efforts focus on controlling defects, improving fabrication techniques, and enhancing the synaptic behavior of 2D material-based devices.

- Ferroelectric materials for neuromorphic systems: Ferroelectric materials are being optimized for neuromorphic computing due to their non-volatile polarization states and low power consumption. These materials can maintain their electrical polarization even when power is removed, making them suitable for implementing persistent memory in neuromorphic systems. Optimization focuses on improving switching characteristics, fatigue resistance, and integration with conventional semiconductor processes.

- Optimization algorithms for neuromorphic material selection: Advanced algorithms and computational methods are being developed to optimize material selection and design for neuromorphic computing applications. These approaches include machine learning techniques, genetic algorithms, and high-throughput computational screening to identify materials with optimal properties for neuromorphic devices. The optimization targets include energy efficiency, switching speed, endurance, and compatibility with existing fabrication processes.

02 Phase change materials for neuromorphic devices

Phase change materials (PCMs) are being developed for neuromorphic computing applications due to their ability to switch between amorphous and crystalline states. These materials can store multiple resistance states, enabling multi-level memory capabilities essential for synaptic weight implementation. Optimization efforts focus on improving the stability, switching speed, and power consumption of these materials to enhance their performance in neuromorphic architectures.

03 2D materials for neuromorphic computing

Two-dimensional materials such as graphene, transition metal dichalcogenides, and hexagonal boron nitride are being optimized for neuromorphic computing applications. These materials offer advantages including high carrier mobility, tunable bandgaps, and compatibility with existing fabrication processes. Optimization techniques focus on controlling defects, improving interface quality, and enhancing electrical properties to create efficient neuromorphic devices with low power consumption and high performance.

04 Ferroelectric materials for neuromorphic systems

Ferroelectric materials are being optimized for neuromorphic computing due to their non-volatile polarization states and low power consumption. These materials can maintain their electrical polarization even when external electric fields are removed, making them suitable for persistent memory applications in neuromorphic systems. Optimization efforts focus on improving switching characteristics, fatigue resistance, and integration with conventional semiconductor processes.

05 Computational methods for neuromorphic materials optimization

Advanced computational methods are being developed to optimize materials for neuromorphic computing applications. These include machine learning algorithms, molecular dynamics simulations, and density functional theory calculations to predict and enhance material properties. These computational approaches enable rapid screening of candidate materials, identification of optimal compositions, and prediction of performance characteristics, significantly accelerating the development of improved neuromorphic computing materials.
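The screening step mentioned above can be sketched in its simplest form as a weighted multi-criteria ranking. This stands in for the far heavier machine learning or simulation pipelines the text refers to; the candidate names, property values, and weights below are made-up placeholders and do not describe real materials.

```python
def normalize(values, higher_is_better):
    """Min-max scale a column to [0, 1], flipping it when lower is better."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.5] * len(values)
    scaled = [(v - lo) / (hi - lo) for v in values]
    return scaled if higher_is_better else [1.0 - s for s in scaled]

def rank_candidates(candidates, criteria):
    """Rank candidates by a weighted sum of normalized properties.

    candidates: {name: {property: value}}
    criteria:   {property: (weight, higher_is_better)}
    """
    names = list(candidates)
    scores = {name: 0.0 for name in names}
    for prop, (weight, higher_is_better) in criteria.items():
        column = [candidates[name][prop] for name in names]
        for name, score in zip(names, normalize(column, higher_is_better)):
            scores[name] += weight * score
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)

# Hypothetical inputs, for illustration only.
candidates = {
    "candidate_A": {"energy_fj": 5.0, "endurance_cycles": 1e6, "switch_ns": 50.0},
    "candidate_B": {"energy_fj": 20.0, "endurance_cycles": 1e8, "switch_ns": 10.0},
    "candidate_C": {"energy_fj": 8.0, "endurance_cycles": 1e5, "switch_ns": 100.0},
}
criteria = {
    "energy_fj": (0.4, False),
    "endurance_cycles": (0.3, True),
    "switch_ns": (0.3, False),
}
ranking = rank_candidates(candidates, criteria)
```

In practice the property values would come from simulation or measurement, and the weights encode which of the optimization targets matters most for a given application.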

Leading Organizations in Neuromorphic Computing Materials

Neuromorphic computing materials optimization is in an early growth phase, with the market expected to expand significantly as applications in AI and edge computing mature. The technology is still evolving from research to commercial viability, with key players driving innovation across different maturity levels. IBM, Intel, and Samsung lead with established research programs and commercial prototypes, while academic institutions like MIT, Zhejiang University, and University of Tokyo contribute fundamental research. Emerging companies like Syntiant and Black Sesame Technologies are developing specialized applications. Google, Baidu, and DeepMind are leveraging their AI expertise to advance neuromorphic solutions, creating a competitive landscape that spans hardware development, algorithm optimization, and system integration.

International Business Machines Corp.

Technical Solution: IBM's neuromorphic work spans two related lines of research. Its TrueNorth architecture is a digital CMOS design that implements a non-von Neumann computing paradigm modeled on the brain's neural structure. In parallel, its materials optimization work focuses on phase-change memory (PCM) materials that can represent multiple resistance states, enabling efficient synaptic weight storage. IBM's optimization techniques include precision-aware training algorithms that account for device-to-device variations in PCM cells, and novel programming schemes that mitigate resistance drift over time. They've developed a comprehensive materials stack optimization framework that considers both the intrinsic properties of PCM materials (crystallization temperature, resistance contrast) and their integration with CMOS technology. Recent advancements include 3D integration of memory arrays with processing elements to reduce communication bottlenecks and specialized analog-to-digital converters designed specifically for neuromorphic applications[1][3]. IBM has also pioneered the use of stochastic training methods that leverage, rather than fight against, the inherent variability in neuromorphic materials.

Strengths: Industry-leading expertise in PCM technology integration with conventional CMOS; comprehensive approach addressing both materials science and algorithmic challenges; extensive patent portfolio. Weaknesses: Higher power consumption compared to some emerging alternatives; scaling challenges with their current PCM implementation; relatively slower adoption in commercial applications outside research environments.
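The resistance drift mentioned in this entry is commonly described with an empirical power law, R(t) = R0 (t / t0)^ν. The sketch below uses that generic model to show how drift shifts a stored value and how a time-aware correction can undo it. It is not IBM's programming scheme, and the drift exponent is an illustrative placeholder.

```python
def drifted_resistance(r0_ohm, t_s, t0_s=1.0, nu=0.05):
    """Empirical power-law drift: R(t) = R0 * (t / t0) ** nu.

    nu is device- and state-dependent; 0.05 is only a placeholder.
    """
    if t_s < t0_s:
        raise ValueError("model is defined for t >= t0")
    return r0_ohm * (t_s / t0_s) ** nu

def compensate_drift(r_measured_ohm, t_s, t0_s=1.0, nu_estimate=0.05):
    """Undo drift given the elapsed time and an estimated exponent."""
    return r_measured_ohm / (t_s / t0_s) ** nu_estimate

r0 = 1.0e6                                  # resistance right after programming
r_day = drifted_resistance(r0, 86_400.0)    # read back one day later
r_fixed = compensate_drift(r_day, 86_400.0)
print(r_day / r0, r_fixed / r0)
```

The correction is only as good as the exponent estimate, which is one reason drift-tolerant training and readout schemes remain an active topic.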

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed advanced neuromorphic computing materials optimization techniques centered around their proprietary Resistive Random Access Memory (RRAM) technology. Their approach focuses on optimizing metal-oxide materials like HfO2 and Ta2O5 for use as artificial synapses, achieving analog weight updates with over 64 distinct conductance states. Samsung's optimization methodology employs a multi-level approach: at the material level, they've pioneered atomic layer deposition techniques to control oxygen vacancy concentration, which directly impacts switching reliability; at the device level, they've developed specialized pulse-train programming schemes that mitigate conductance variation; and at the system level, they've created hardware-aware training algorithms that account for device non-idealities. Their recent breakthrough involves a self-rectifying crossbar array architecture that eliminates sneak path currents without additional selector devices, significantly improving energy efficiency and integration density[2][4]. Samsung has also developed novel doping strategies for their oxide materials that extend device endurance beyond 10^6 cycles while maintaining precise weight control.

Strengths: Vertical integration capabilities from materials research to system design; extensive manufacturing infrastructure that can accelerate commercialization; strong expertise in memory technologies. Weaknesses: Their approach requires relatively high operating voltages compared to biological systems; challenges with long-term weight stability in their RRAM implementations; limited public disclosure of detailed performance metrics.
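To show why crossbar arrays of analog synapses are attractive, the sketch below computes the column currents of an idealized crossbar, where Ohm's and Kirchhoff's laws perform a matrix-vector product in a single read step. It also maps normalized weights onto 64 conductance levels, matching the state count quoted above. The conductance range is an assumption, and sneak paths, wire resistance, and noise are ignored.

```python
def to_conductance(weight, n_levels=64, g_min_s=1e-6, g_max_s=1e-4):
    """Map a weight in [0, 1] to one of n_levels conductances (siemens).

    The conductance range is an illustrative assumption.
    """
    weight = min(1.0, max(0.0, weight))
    index = round(weight * (n_levels - 1))
    return g_min_s + index * (g_max_s - g_min_s) / (n_levels - 1)

def crossbar_currents(voltages_v, conductances_s):
    """Ideal crossbar read: I_j = sum_i V_i * G[i][j]."""
    n_rows = len(conductances_s)
    if len(voltages_v) != n_rows:
        raise ValueError("one input voltage is required per row")
    n_cols = len(conductances_s[0])
    return [
        sum(voltages_v[i] * conductances_s[i][j] for i in range(n_rows))
        for j in range(n_cols)
    ]

weights = [[0.0, 1.0], [0.5, 0.25], [1.0, 0.0]]
g_matrix = [[to_conductance(w) for w in row] for row in weights]
currents = crossbar_currents([0.2, 0.2, 0.1], g_matrix)
```

Each output current is an analog dot product, so the array performs the core operation of a neural network layer without moving weights out of memory.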

Critical Patents in Neuromorphic Material Engineering

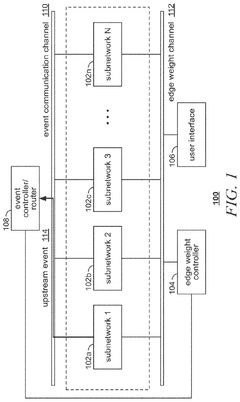

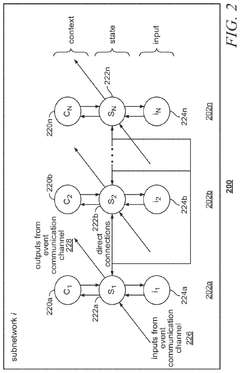

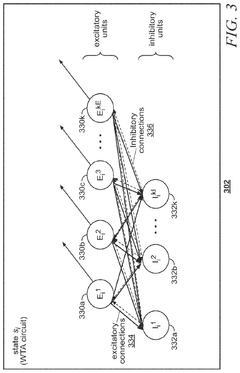

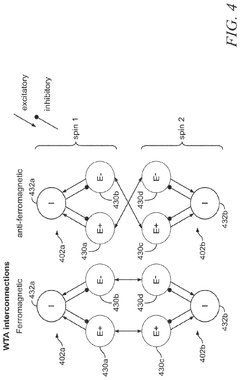

Neuromorphic Ising machine for low energy solutions to combinatorial optimization problems

Patent (Pending): US20250225380A1

Innovation

- A neuromorphic computing system with computational subnetworks that introduce amplitude heterogeneity errors, controlled by refractory periods and sparsity, to avoid local minima, using top-down and bottom-up auxiliary signals to modulate entropy and reduce memory bottlenecks.
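For readers unfamiliar with Ising machines, the sketch below shows the underlying problem: finding spin assignments that minimize an Ising energy, here with plain simulated annealing in software. It does not reproduce the patented mechanism (refractory periods, amplitude heterogeneity, auxiliary signals); the cooling schedule and parameters are illustrative assumptions.

```python
import math
import random

def ising_energy(spins, couplings):
    """E = -sum over pairs i<j of J[i][j] * s_i * s_j (symmetric J assumed)."""
    n = len(spins)
    return -sum(
        couplings[i][j] * spins[i] * spins[j]
        for i in range(n) for j in range(i + 1, n)
    )

def anneal(couplings, steps=2000, t_start=2.0, t_end=0.05, seed=0):
    """Single-spin-flip Metropolis annealing with geometric cooling."""
    rng = random.Random(seed)
    n = len(couplings)
    spins = [rng.choice((-1, 1)) for _ in range(n)]
    for step in range(steps):
        temperature = t_start * (t_end / t_start) ** (step / max(1, steps - 1))
        i = rng.randrange(n)
        local_field = sum(couplings[i][j] * spins[j] for j in range(n) if j != i)
        delta_e = 2.0 * spins[i] * local_field   # energy change if spin i flips
        if delta_e <= 0 or rng.random() < math.exp(-delta_e / temperature):
            spins[i] = -spins[i]
    return spins, ising_energy(spins, couplings)

# Three ferromagnetically coupled spins: the minimum has all spins aligned.
J = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]
spins, energy = anneal(J)
```

Accepting some uphill moves at high temperature is what lets the search escape local minima, the same goal the patent pursues through controlled errors in hardware.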

Energy Efficiency Benchmarks and Sustainability Factors

Energy efficiency is a critical benchmark for neuromorphic computing materials, particularly as these systems aim to emulate the brain's computational efficiency. Current neuromorphic hardware implementations demonstrate significant advantages over traditional von Neumann architectures, with energy consumption reductions of 2-3 orders of magnitude for specific applications. Leading memristive materials such as hafnium oxide and phase-change materials have reportedly achieved 1-10 femtojoules per synaptic operation, the same range as the approximately 1-10 femtojoules per event attributed to a biological synapse.

Standardized benchmarking methodologies have emerged to evaluate these materials, including metrics such as energy per synaptic operation (EPSO), energy-delay product (EDP), and operations per watt. These metrics provide crucial comparative frameworks for assessing different material solutions across varied computational tasks. The SynBench and NeuroBench frameworks have gained industry acceptance as standardized testing protocols that enable fair comparisons between emerging neuromorphic technologies.
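The three metrics named above reduce to simple ratios and products. The helper functions below show one plausible way to compute them from measured totals; the function names and example numbers are assumptions, and the benchmark frameworks may define the quantities more precisely.

```python
def energy_per_synaptic_op(total_energy_j, n_synaptic_ops):
    """EPSO: total energy divided by the number of synaptic operations."""
    if n_synaptic_ops <= 0:
        raise ValueError("need at least one synaptic operation")
    return total_energy_j / n_synaptic_ops

def energy_delay_product(energy_j, delay_s):
    """EDP: energy of a task multiplied by the time it took."""
    return energy_j * delay_s

def ops_per_second_per_watt(n_ops, duration_s, average_power_w):
    """Throughput normalized by power; equivalent to operations per joule."""
    return (n_ops / duration_s) / average_power_w

# Made-up measurement: 1e9 synaptic events consuming 5 microjoules in 1 ms.
epso = energy_per_synaptic_op(5e-6, 1e9)
edp = energy_delay_product(5e-6, 1e-3)
efficiency = ops_per_second_per_watt(1e9, 1e-3, 5e-6 / 1e-3)
print(epso, edp, efficiency)
```

EPSO favors low-energy devices regardless of speed, while EDP penalizes solutions that save energy only by running slowly, which is why both are usually reported together.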

Sustainability factors extend beyond pure energy efficiency to encompass the entire lifecycle of neuromorphic computing materials. Critical considerations include the abundance of raw materials, manufacturing energy requirements, and end-of-life recyclability. Materials requiring rare earth elements face significant sustainability challenges due to geopolitical supply constraints and environmentally damaging extraction processes. Recent research indicates that neuromorphic systems utilizing abundant materials like silicon oxide and zinc oxide offer more sustainable alternatives while maintaining competitive performance characteristics.

Thermal management represents another crucial sustainability factor, as heat dissipation directly impacts both energy efficiency and device longevity. Advanced thermal interface materials and three-dimensional integration techniques have demonstrated 30-40% improvements in heat dissipation efficiency for densely packed neuromorphic arrays. Passive cooling solutions inspired by biological systems show particular promise for edge computing applications where active cooling is impractical.

The environmental impact of neuromorphic computing extends to potential carbon footprint reductions through more efficient computing. Life cycle assessments indicate that despite potentially higher manufacturing impacts, neuromorphic systems can achieve net environmental benefits through operational efficiency over their usable lifetime. Quantitative analyses suggest that widespread adoption of optimized neuromorphic computing materials could reduce data center energy consumption by 15-25% for specific workloads, representing a significant contribution to sustainable computing initiatives.

Integration Challenges with Conventional Computing Systems

The integration of neuromorphic computing materials with conventional computing systems presents significant technical challenges that must be addressed for successful hybrid implementations. Traditional von Neumann architectures operate on fundamentally different principles than neuromorphic systems, creating compatibility issues at multiple levels. The primary challenge lies in bridging the gap between the parallel, event-driven processing of neuromorphic components and the sequential, clock-driven operation of conventional systems.

Interface protocols represent a critical integration hurdle, as neuromorphic systems typically utilize spike-based communication while conventional systems rely on binary data transfer. This necessitates specialized interface circuits that can translate between these disparate signaling mechanisms while maintaining information integrity. Current solutions often introduce latency and energy overhead that diminish the inherent efficiency advantages of neuromorphic materials.
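One common way to translate between binary values and spikes is rate coding, in which a number is represented by how many spikes occur in a fixed time window. The following is a minimal software sketch of that idea, assuming a deterministic accumulator-based encoder. The function names, the 8-bit value range, and the window lengths are illustrative assumptions and do not describe any particular interface circuit.

```python
def encode_rate(value, window=255, max_value=255):
    """Convert an integer in [0, max_value] into a list of 0/1 spikes.

    An accumulator spreads the spikes evenly across the window; the spike
    count equals floor(window * value / max_value).
    """
    if not 0 <= value <= max_value:
        raise ValueError("value out of range")
    spikes = []
    acc = 0
    for _ in range(window):
        acc += value
        if acc >= max_value:
            acc -= max_value
            spikes.append(1)
        else:
            spikes.append(0)
    return spikes


def decode_rate(spikes, max_value=255):
    """Recover the integer by scaling the observed spike count."""
    return round(sum(spikes) * max_value / len(spikes))
```

In this sketch a 255-step window reproduces an 8-bit value exactly, while a 51-step window is five times shorter but recovers the value only to within a few counts. That is one simple way to see why the translation layer trades latency against precision.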

Power management presents another significant challenge, as neuromorphic materials often operate at different voltage levels and with different power consumption profiles from CMOS-based systems. Developing efficient power distribution networks that accommodate these differences without compromising system stability remains an active area of research. Thermal management also becomes more complex in hybrid systems, where neuromorphic materials may have temperature sensitivities that differ from those of silicon-based components.

Fabrication compatibility issues further complicate integration efforts. Many promising neuromorphic materials require processing conditions incompatible with standard CMOS fabrication techniques. This necessitates either post-processing steps that can compromise device performance or the development of novel 3D integration approaches that allow separate fabrication followed by interconnection. Both approaches introduce yield challenges and increased manufacturing complexity.

Software frameworks and programming models represent perhaps the most substantial integration barrier. Conventional computing systems utilize well-established programming paradigms that do not naturally extend to neuromorphic architectures. Developing unified programming models that can efficiently utilize both conventional and neuromorphic components requires significant innovation in compiler design, resource allocation algorithms, and runtime systems.

Testing and validation methodologies must also evolve to accommodate hybrid systems. Traditional functional verification approaches are inadequate for neuromorphic components that exhibit inherent variability and stochastic behavior. New testing paradigms that can effectively characterize system-level performance while accounting for the unique properties of neuromorphic materials are essential for commercial viability.
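One way to account for device variability is to replace exact-match checks with statistical acceptance criteria over repeated measurements. The sketch below assumes a hypothetical test that accepts a device when its mean response is close to the target and its coefficient of variation stays under a limit. The function name, thresholds, and sample values are illustrative, not an established test standard.

```python
import statistics


def passes_statistical_check(measurements, target, mean_tolerance, max_cv):
    """Accept a device based on the distribution of repeated measurements.

    mean_tolerance: allowed relative deviation of the mean from the target.
    max_cv: allowed coefficient of variation (sample stdev divided by mean).
    """
    mean = statistics.fmean(measurements)
    stdev = statistics.stdev(measurements)  # sample standard deviation
    cv = stdev / abs(mean)
    mean_ok = abs(mean - target) <= mean_tolerance * abs(target)
    return mean_ok and cv <= max_cv
```

With a target of 100, a 2% mean tolerance, and a 5% variation limit, the readings `[98, 101, 100, 102, 99]` pass, while `[80, 120, 100, 130, 70]` fail despite having the same mean, because the spread is too large.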