How to Calibrate Raman Spectroscopy for Precise Results

SEP 19, 2025 · 9 MIN READ

Raman Spectroscopy Calibration Background and Objectives

Raman spectroscopy has evolved significantly since its discovery by C.V. Raman in 1928, transforming from a purely academic analytical technique to an essential tool across numerous industries including pharmaceuticals, materials science, and biomedical applications. The technology leverages the inelastic scattering of photons to provide detailed molecular fingerprints of samples, offering insights into chemical composition and molecular structure without destructive testing.

The evolution of Raman instrumentation has been marked by several breakthrough developments, including the introduction of Fourier Transform Raman spectroscopy in the 1980s, the development of portable Raman devices in the 1990s, and more recently, the integration of advanced computational methods and machine learning algorithms for enhanced spectral analysis and interpretation.

Despite these advancements, calibration remains a critical challenge in Raman spectroscopy. Precise calibration directly impacts measurement accuracy, reproducibility, and the ability to compare results across different instruments and laboratories. The scientific community has increasingly recognized the need for standardized calibration protocols to ensure reliable and comparable data.

The primary technical objective of Raman spectroscopy calibration is to establish a reliable relationship between the measured Raman shift values and the actual vibrational frequencies of molecules. This involves addressing several key aspects: wavelength calibration to ensure accurate Raman shift measurements, intensity calibration to account for instrument response functions, and spectral resolution calibration to optimize peak identification and separation.

Secondary objectives include minimizing systematic errors from environmental factors such as temperature fluctuations and sample positioning, developing robust calibration standards suitable for different application domains, and creating automated calibration procedures that reduce operator dependency and enhance reproducibility.

Recent technological trends in this field include the development of integrated calibration modules within commercial Raman systems, the application of artificial intelligence for automated calibration verification and correction, and the establishment of international standards for calibration methodologies and reference materials.

The trajectory of Raman spectroscopy calibration is moving toward more sophisticated yet user-friendly approaches that combine hardware improvements with advanced software solutions. These developments aim to democratize access to high-precision Raman analysis by reducing the expertise required for proper calibration while simultaneously improving measurement accuracy and reliability.

Achieving these calibration objectives would significantly expand the utility of Raman spectroscopy across diverse fields, from quality control in pharmaceutical manufacturing to point-of-care medical diagnostics and environmental monitoring applications.

Market Analysis for Precision Raman Spectroscopy Applications

The global market for Raman spectroscopy has experienced significant growth in recent years, driven by increasing demand for precise analytical tools across various industries. The market was valued at approximately $1.8 billion in 2022 and is projected to reach $2.9 billion by 2027, growing at a CAGR of 9.8% during this period.

Pharmaceutical and biotechnology sectors represent the largest application segments, accounting for nearly 35% of the total market share. These industries rely heavily on calibrated Raman systems for drug discovery, formulation analysis, and quality control processes. The ability to provide non-destructive, rapid analysis with minimal sample preparation has positioned Raman spectroscopy as an essential tool in pharmaceutical manufacturing.

Materials science applications follow closely, comprising about 28% of the market. Here, precisely calibrated Raman systems are crucial for analyzing novel materials, polymers, and nanomaterials. The semiconductor industry has also emerged as a significant consumer, utilizing Raman techniques for process monitoring and quality control in chip manufacturing.

Geographically, North America leads the market with approximately 38% share, followed by Europe (30%) and Asia-Pacific (25%). The Asia-Pacific region, particularly China and India, is witnessing the fastest growth rate due to expanding pharmaceutical manufacturing and research facilities.

A notable market trend is the increasing demand for portable and handheld Raman devices, which grew by 15% in 2022 alone. These devices require sophisticated calibration protocols to maintain accuracy despite their compact form factor. This segment is expected to expand further as field applications in environmental monitoring, food safety, and forensic analysis gain prominence.

The market for calibration standards and reference materials has emerged as a specialized sub-segment, growing at 12% annually. This reflects the critical importance of proper calibration in achieving reliable results across all application areas.

Customer surveys indicate that accuracy and reproducibility rank as the top purchasing criteria for Raman systems, with 87% of users citing calibration capabilities as "extremely important" in their decision-making process. This underscores the market's recognition that even the most advanced Raman systems require proper calibration to deliver their full analytical potential.

Current Calibration Challenges and Technical Limitations

Despite significant advancements in Raman spectroscopy technology, calibration remains a persistent challenge that limits the achievement of precise and reproducible results. Current calibration methods face several technical limitations that impact data quality and reliability across different instruments and laboratories.

Wavelength calibration presents a fundamental challenge, as even minor shifts in laser wavelength can significantly alter spectral positions. Many laboratories struggle with maintaining consistent wavelength standards, with variations as small as 0.1 nm potentially causing misidentification of chemical compounds. The lack of universally accepted calibration protocols exacerbates this issue, resulting in data inconsistency when comparing results across different research groups.
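To make the 0.1 nm figure concrete, the sketch below converts a laser wavelength error into the apparent Raman shift error it produces, using the standard relation between wavelength in nm and wavenumber in cm^-1. The function names and the two excitation wavelengths (532 nm and 785 nm) are illustrative assumptions, not values taken from the text.

```python
def raman_shift_cm1(excitation_nm: float, scattered_nm: float) -> float:
    """Raman shift in cm^-1 from excitation and scattered wavelengths in nm."""
    return 1e7 / excitation_nm - 1e7 / scattered_nm


def shift_error_cm1(excitation_nm: float, error_nm: float) -> float:
    """Apparent shift error when the true laser line sits error_nm away
    from the value assumed by the software (hypothetical helper)."""
    return 1e7 / excitation_nm - 1e7 / (excitation_nm + error_nm)


if __name__ == "__main__":
    # Assumed common excitation wavelengths, used only for illustration.
    for laser_nm in (532.0, 785.0):
        print(laser_nm, round(shift_error_cm1(laser_nm, 0.1), 2))
```

Under these assumptions, a 0.1 nm error corresponds to roughly 3.5 cm^-1 at 532 nm and about 1.6 cm^-1 at 785 nm, which is comparable to the spacing between neighboring bands in some spectra.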

Intensity calibration poses another significant hurdle, particularly for quantitative analysis applications. Current methods often fail to account for variations in instrument response functions, detector sensitivity degradation over time, and optical component aging. These factors introduce systematic errors that compromise measurement accuracy, especially when monitoring low-concentration analytes or conducting long-term studies requiring consistent baseline measurements.
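One common way to correct for the instrument response is to measure a source with a known emission profile and derive per-channel correction factors. The following is a minimal sketch of that idea, assuming the certified and measured lamp spectra are already on the same axis; the function names are hypothetical and the approach is a simplification rather than any vendor's procedure.

```python
def response_correction(lamp_measured, lamp_certified):
    """Per-channel correction factors: certified / measured lamp intensity."""
    if len(lamp_measured) != len(lamp_certified):
        raise ValueError("lamp spectra must have the same length")
    factors = []
    for measured, certified in zip(lamp_measured, lamp_certified):
        if measured <= 0:
            raise ValueError("measured lamp intensity must be positive")
        factors.append(certified / measured)
    return factors


def apply_correction(spectrum, factors):
    """Multiply each channel of a raw spectrum by its correction factor."""
    if len(spectrum) != len(factors):
        raise ValueError("spectrum and factors must have the same length")
    return [value * factor for value, factor in zip(spectrum, factors)]
```

Re-deriving the factors at regular intervals is one way to track the detector and optics aging mentioned above, since the factors change as the response degrades.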

Temperature fluctuations represent a critical yet often overlooked calibration challenge. Raman spectra exhibit temperature-dependent shifts that can be misinterpreted as chemical changes if not properly controlled. Most commercial systems lack robust temperature compensation mechanisms, leading to spectral artifacts during extended measurement sessions or when operating in environments with variable ambient conditions.

Sample positioning inconsistencies further complicate calibration efforts. Small errors in focal position can change the measured signal intensity by up to 30%. Current automated focusing systems still struggle with heterogeneous or transparent samples, resulting in reproducibility issues that undermine quantitative analysis.

Reference standard limitations constitute another significant barrier to precise calibration. Existing standards often exhibit stability issues under laser exposure, with photodegradation altering their spectral characteristics over time. Additionally, the limited availability of certified reference materials for specific application domains forces researchers to develop in-house standards with questionable traceability and comparability.

Software-related challenges further complicate calibration processes. Current spectral processing algorithms employ different baseline correction and peak fitting methodologies, introducing variability in interpreted results even when using identical raw data. The proprietary nature of many processing algorithms creates "black box" scenarios where calibration adjustments cannot be fully understood or standardized across platforms.

Cross-instrument calibration remains perhaps the most significant technical limitation, with studies showing up to 15% variation in peak positions and intensities when analyzing identical samples on different spectrometers, even from the same manufacturer. This fundamental challenge undermines multi-site research initiatives and technology transfer efforts in industrial applications.

Contemporary Calibration Protocols and Standards

01 Calibration techniques for improved Raman spectroscopy precision

Various calibration methods are employed to enhance the precision of Raman spectroscopy measurements. These include reference standard calibration, automated wavelength calibration systems, and algorithms that compensate for instrumental drift. Proper calibration ensures consistent and accurate spectral measurements across different samples and over time, significantly improving the reliability of quantitative analysis.

- Advanced calibration techniques for Raman spectroscopy: Various calibration methods are employed to enhance the precision of Raman spectroscopy measurements. These techniques include reference standards, automated calibration procedures, and algorithms that compensate for instrumental drift. Proper calibration ensures accurate wavelength positioning, intensity measurements, and spectral resolution, which are critical for reliable quantitative and qualitative analysis across different samples and measurement conditions.

- Signal processing and noise reduction methods: Advanced signal processing algorithms are implemented to improve the precision of Raman spectroscopy by enhancing signal-to-noise ratios. These methods include baseline correction, spectral smoothing, peak deconvolution, and multivariate statistical analysis. By effectively filtering out noise and background interference, these techniques allow for the detection of subtle spectral features and improve the overall measurement precision, particularly for samples with weak Raman signals or complex matrices.

- Hardware innovations for improved precision: Technological advancements in Raman spectroscopy hardware components significantly enhance measurement precision. These innovations include high-resolution spectrometers, stabilized laser sources, improved detector sensitivity, and specialized optical configurations. Precision-engineered components minimize instrumental variations and optimize light collection efficiency, resulting in more consistent and accurate spectral measurements across diverse sample types and environmental conditions.

- Temperature and environmental control systems: Environmental factors significantly impact Raman spectroscopy precision. Specialized systems for controlling temperature, humidity, and mechanical stability help minimize measurement variations. These control mechanisms include temperature-stabilized sample holders, vibration isolation platforms, and environmentally controlled measurement chambers. By maintaining consistent measurement conditions, these systems reduce spectral shifts and intensity fluctuations, enabling more reproducible and precise spectral acquisition.

- Automated measurement and analysis protocols: Standardized measurement protocols and automated analysis systems enhance the precision of Raman spectroscopy by reducing operator-dependent variations. These approaches include automated sample positioning, optimized data acquisition parameters, and standardized analytical workflows. Machine learning algorithms further improve spectral interpretation consistency. Together, these methodologies ensure reproducible measurements across different operators, instruments, and laboratories, leading to more reliable and precise spectral data for both research and industrial applications.
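Of the signal processing methods listed above, spectral smoothing is the simplest to illustrate. The sketch below applies a centered moving average with a shrinking window at the edges. It is an assumed, deliberately basic stand-in for the more capable filters used in practice, and the function name is hypothetical.

```python
def moving_average(intensities, window=5):
    """Smooth a spectrum with a centered moving average.

    The window must be a positive odd number. Near the edges the window
    is truncated, so the output has the same length as the input.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    count = len(intensities)
    smoothed = []
    for index in range(count):
        start = max(0, index - half)
        stop = min(count, index + half + 1)
        segment = intensities[start:stop]
        smoothed.append(sum(segment) / len(segment))
    return smoothed
```

A wider window suppresses more noise but also broadens and lowers narrow peaks, so the window should stay small relative to the narrowest band of interest.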

02 Advanced signal processing algorithms for noise reduction

Sophisticated signal processing techniques are implemented to enhance the precision of Raman spectroscopy by reducing noise and improving signal-to-noise ratios. These include wavelet transforms, machine learning algorithms for spectral denoising, and advanced baseline correction methods. By effectively filtering out noise while preserving spectral features, these algorithms enable detection of subtle spectral changes and improve measurement reproducibility.

03 Hardware innovations for enhanced spectral resolution

Novel hardware designs significantly improve the precision of Raman spectroscopy measurements. These innovations include high-precision optical components, temperature-stabilized detectors, and advanced spectrometer configurations. Specialized gratings, improved laser sources with narrow linewidths, and optimized collection optics contribute to higher spectral resolution and improved measurement precision, enabling more accurate identification and quantification of chemical components.

04 Real-time monitoring and feedback systems

Real-time monitoring and feedback systems continuously assess and adjust measurement parameters during Raman spectroscopy analysis. These systems incorporate sensors that detect environmental fluctuations, sample positioning variations, and instrument performance metrics. Automated feedback loops make real-time adjustments to maintain optimal measurement conditions, ensuring consistent precision across extended measurement periods and varying sample conditions.

05 Sample preparation and positioning techniques

Specialized sample preparation and positioning methods significantly impact Raman spectroscopy precision. These include standardized sample holders, automated positioning systems with micrometer precision, and techniques to minimize sample heterogeneity effects. Controlled sample environment chambers that regulate temperature, humidity, and pressure further enhance measurement reproducibility by eliminating environmental variables that could affect spectral quality.

Leading Manufacturers and Research Institutions in Raman Technology

Raman spectroscopy calibration technology is in a mature phase, with an estimated global market size of $1.5-2 billion and steady annual growth of 6-8%. The competitive landscape features established instrumentation suppliers such as Horiba Jobin Yvon, Renishaw Diagnostics, and Endress+Hauser Optical Analysis, which provide comprehensive calibration solutions, while large healthcare and life-science companies such as Merck and Philips invest in application-specific developments. Academic institutions including Zhejiang University and King's College London contribute significant research advancements. The technology demonstrates high maturity with standardized protocols, though innovation continues in automated calibration systems and AI-enhanced data processing, particularly from companies such as Serstech AB and Nova Ltd., which are developing portable solutions with simplified calibration workflows.

Commissariat à l'énergie atomique et aux énergies alternatives

Technical Solution: The French Alternative Energies and Atomic Energy Commission (CEA) has developed sophisticated calibration methodologies for Raman spectroscopy focused on high-precision scientific applications. Their approach emphasizes metrological traceability and uncertainty quantification throughout the calibration process. CEA's calibration protocol incorporates multiple reference standards measured under strictly controlled conditions to establish calibration curves with fully characterized uncertainty budgets. For wavelength calibration, they employ a combination of atomic emission lines and molecular standards to achieve accuracy across the entire spectral range. Their methodology includes rigorous evaluation of all potential error sources, including spectrometer non-linearity, detector response variations, and optical aberrations. CEA has pioneered advanced mathematical approaches for spectral correction, including modified polynomial fitting algorithms that minimize calibration errors at spectral extremes. For quantitative applications, they have developed specialized protocols that incorporate Monte Carlo simulations to establish confidence intervals for calibration parameters. Their approach also includes regular inter-laboratory comparison studies to verify calibration consistency across different research facilities.

Strengths: Exceptional metrological rigor with comprehensive uncertainty quantification; advanced mathematical approaches for optimal calibration accuracy; excellent scientific foundation based on fundamental physical principles. Weaknesses: Highly complex calibration procedures may be impractical for routine analytical applications; requires significant expertise to implement fully; calibration protocols prioritize accuracy over speed and simplicity.

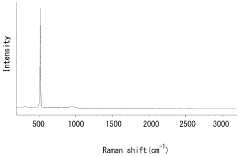

Horiba Jobin Yvon, Inc.

Technical Solution: Horiba has developed a comprehensive calibration approach for Raman spectroscopy that integrates multiple calibration parameters. Their LabRAM systems employ a three-step calibration protocol: wavelength calibration using standard reference materials (typically silicon with its well-defined 520.7 cm⁻¹ peak), intensity calibration using certified luminescence standards, and spectral response correction using white light sources with known emission profiles. The company has pioneered automated calibration routines that minimize human error and ensure reproducibility across different instruments. Their AutoCalib technology enables automated wavelength calibration that can be scheduled at regular intervals to maintain system accuracy. Additionally, Horiba has developed specialized software algorithms that can detect and correct for instrumental drift in real-time, ensuring consistent results even during extended measurement sessions. Their calibration methodology also incorporates environmental monitoring to account for temperature and humidity variations that can affect spectral accuracy.

Strengths: Industry-leading automated calibration routines that reduce operator error; comprehensive approach addressing multiple calibration parameters simultaneously; excellent long-term stability through scheduled calibration protocols. Weaknesses: Higher initial cost compared to some competitors; calibration procedures may require proprietary standards; complex calibration software may require specialized training for operators.
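A single-point check against the silicon peak mentioned above can be sketched as follows. This is an illustrative outline, not Horiba's algorithm: it assumes a uniformly spaced shift axis, locates the strongest point in a search window, refines it with a three-point parabola, and returns the offset that would bring it to the 520.7 cm^-1 value quoted in the text. All function names and the default search window are hypothetical.

```python
REFERENCE_SI_CM1 = 520.7  # silicon peak position quoted in the text


def peak_position(shifts, intensities, low, high):
    """Strongest point within [low, high], refined by a 3-point parabola.

    Assumes the shift axis is uniformly spaced around the peak.
    """
    candidates = [i for i, s in enumerate(shifts) if low <= s <= high]
    if not candidates:
        raise ValueError("no data points inside the search window")
    k = max(candidates, key=lambda i: intensities[i])
    if k == 0 or k == len(shifts) - 1:
        return shifts[k]  # cannot interpolate at the array edge
    y0, y1, y2 = intensities[k - 1], intensities[k], intensities[k + 1]
    curvature = y0 - 2 * y1 + y2
    if curvature == 0:
        return shifts[k]
    delta = 0.5 * (y0 - y2) / curvature  # vertex offset in samples
    step = (shifts[k + 1] - shifts[k - 1]) / 2
    return shifts[k] + delta * step


def silicon_offset(shifts, intensities, window=(500.0, 540.0)):
    """Offset in cm^-1 to add to the axis so the silicon peak is on target."""
    return REFERENCE_SI_CM1 - peak_position(shifts, intensities, *window)


def apply_offset(shifts, offset):
    """Return a shifted copy of the Raman shift axis."""
    return [s + offset for s in shifts]
```

A constant offset only corrects a uniform displacement of the axis. Errors that vary across the spectral range need a multi-point calibration instead.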

Critical Patents and Innovations in Raman Calibration

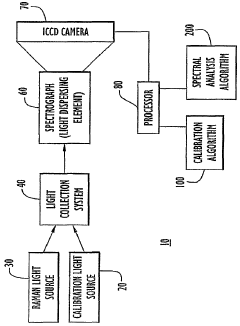

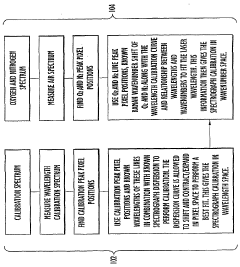

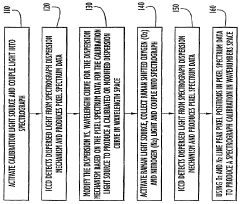

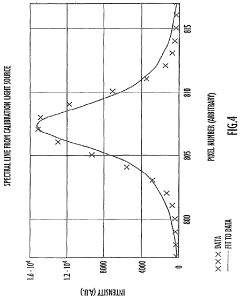

Spectrograph calibration using known light source and Raman scattering

Patent WO2007101092A2

Innovation

- A method for calibrating spectrometers using a predetermined dispersion curve modification based on spectrum data from a calibration light source, determining the wavelength of a Raman light source, and computing calibration data from Raman line peak positions to achieve accurate wavelength-to-pixel mapping, accounting for factors like temperature and pressure changes.
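The wavelength-to-pixel mapping at the core of this kind of method can be illustrated with a least-squares fit of known lamp lines against the pixel positions where they are detected. The sketch below is a simplified, assumed version that fits a straight line. It does not reproduce the patent's dispersion-curve modification, and real spectrographs usually need a higher-order polynomial. Function names are hypothetical.

```python
def fit_linear_dispersion(pixels, wavelengths_nm):
    """Least-squares straight line: wavelength = slope * pixel + intercept."""
    count = len(pixels)
    if count < 2 or count != len(wavelengths_nm):
        raise ValueError("need at least two matched pixel/wavelength pairs")
    mean_p = sum(pixels) / count
    mean_w = sum(wavelengths_nm) / count
    sxx = sum((p - mean_p) ** 2 for p in pixels)
    if sxx == 0:
        raise ValueError("pixel positions must not all be identical")
    sxy = sum((p - mean_p) * (w - mean_w)
              for p, w in zip(pixels, wavelengths_nm))
    slope = sxy / sxx
    intercept = mean_w - slope * mean_p
    return slope, intercept


def pixel_to_wavelength(pixel, slope, intercept):
    """Map a detector pixel index to a wavelength in nm."""
    return slope * pixel + intercept
```

Once the pixel axis is expressed in wavelength, the Raman shift axis follows from the measured laser wavelength, which is why the method determines both.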

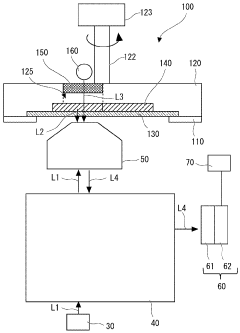

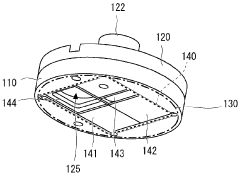

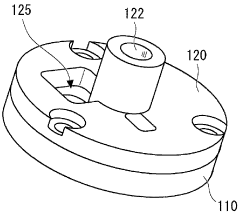

Calibration device, Raman spectroscopy measurement device, and wave number calibration method

Patent WO2024085056A1

Innovation

- A calibration device and method that uses a combination of inorganic standard samples and a drive mechanism to sequentially irradiate these samples with laser light, along with a lamp light source generating bright lines, to spectrally measure and convert the calibration wavelength axis into a wavenumber axis, ensuring accurate calibration of the Raman spectrometer.

Validation Metrics and Quality Assurance Frameworks

Establishing robust validation metrics and quality assurance frameworks is essential for ensuring the reliability and reproducibility of Raman spectroscopy calibration processes. These frameworks provide systematic approaches to evaluate calibration accuracy, precision, and overall performance against established standards.

The foundation of any validation framework begins with defining appropriate performance metrics. For Raman spectroscopy, these typically include spectral resolution, signal-to-noise ratio (SNR), wavelength accuracy, and intensity linearity. Each metric must have clearly defined acceptance criteria based on application requirements and industry standards such as ASTM E1840 for Raman shift calibration standards or ISO/IEC 17025 for the competence of testing and calibration laboratories.

Statistical validation approaches play a crucial role in quality assurance. Methods such as root mean square error (RMSE) calculation between measured and reference peak positions provide quantitative assessment of calibration accuracy. Repeatability and reproducibility studies, often implemented through Gauge R&R (Repeatability and Reproducibility) analysis, help quantify measurement system variation and establish confidence intervals for calibration parameters.
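The RMSE between measured and reference peak positions is straightforward to compute. A minimal sketch follows, with a hypothetical function name and the assumption that the two lists are already matched peak by peak.

```python
import math


def peak_position_rmse(measured_cm1, reference_cm1):
    """Root mean square error between matched peak positions in cm^-1."""
    if not measured_cm1 or len(measured_cm1) != len(reference_cm1):
        raise ValueError("need two non-empty lists of equal length")
    squared = [(m - r) ** 2 for m, r in zip(measured_cm1, reference_cm1)]
    return math.sqrt(sum(squared) / len(squared))
```

Because the errors are squared before averaging, a single badly placed peak dominates the result, so it is often worth reporting the largest individual deviation alongside the RMSE.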

Uncertainty budgeting represents another vital component of validation frameworks. This involves identifying and quantifying all potential sources of uncertainty in the calibration process, including instrument factors, environmental conditions, sample preparation variables, and operator influence. The combined uncertainty provides a comprehensive measure of calibration reliability and helps establish traceability to recognized standards.
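As a sketch of how such a budget is combined, the example below adds independent standard uncertainties in quadrature and applies a coverage factor. It assumes uncorrelated contributions already expressed in the units of the result, which is a simplification, and the function names and component labels are hypothetical.

```python
import math


def combined_standard_uncertainty(components):
    """Root-sum-of-squares of independent standard uncertainties.

    `components` maps a source name to its standard uncertainty,
    all in the same unit (for example cm^-1).
    """
    return math.sqrt(sum(u ** 2 for u in components.values()))


def expanded_uncertainty(components, coverage_factor=2.0):
    """Combined uncertainty multiplied by a coverage factor (k = 2 assumed)."""
    return coverage_factor * combined_standard_uncertainty(components)
```

For instance, hypothetical contributions of 0.3 cm^-1 from the instrument and 0.4 cm^-1 from the environment combine to 0.5 cm^-1, which shows that the largest term drives the total and is the one worth reducing first.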

Implementation of control charts and trend analysis enables continuous monitoring of calibration performance over time. Shewhart charts tracking key calibration parameters can detect drift or sudden shifts in instrument response, while cumulative sum (CUSUM) charts are effective for identifying subtle, progressive changes that might otherwise go unnoticed in routine calibration verification.
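The CUSUM idea can be sketched with the usual two-sided tabular form, which accumulates deviations from a target beyond a slack value and raises an alarm when either sum exceeds a threshold. The parameter names and the choice not to reset after an alarm are assumptions made for illustration.

```python
def cusum_alarms(values, target, slack, threshold):
    """Indices at which a two-sided tabular CUSUM exceeds the threshold.

    values    : successive check results, e.g. a reference peak position
    target    : expected value when the instrument is in control
    slack     : allowance subtracted from each deviation before summing
    threshold : decision limit for either cumulative sum
    """
    upper = 0.0
    lower = 0.0
    alarms = []
    for index, value in enumerate(values):
        upper = max(0.0, upper + (value - target - slack))
        lower = max(0.0, lower + (target - value - slack))
        if upper > threshold or lower > threshold:
            alarms.append(index)
    return alarms
```

With a target of 0, a slack of 0.5, and a threshold of 2, a sustained deviation of 1 unit triggers an alarm on the fifth consecutive shifted reading, even though no single reading looks unusual on its own.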

Proficiency testing and interlaboratory comparisons provide external validation of calibration quality. Participation in round-robin testing programs, where multiple laboratories analyze identical samples, helps identify systematic biases and establish consensus values for calibration standards. These collaborative approaches are particularly valuable for emerging applications where standardized reference materials may be limited.

Documentation and audit trails form the final critical element of quality assurance frameworks. Comprehensive records of calibration procedures, validation results, and corrective actions not only support regulatory compliance but also facilitate troubleshooting and continuous improvement of calibration methodologies. Electronic laboratory information management systems (LIMS) with appropriate data integrity controls are increasingly being adopted to streamline this documentation process.

The foundation of any validation framework begins with defining appropriate performance metrics. For Raman spectroscopy, these typically include spectral resolution, signal-to-noise ratio (SNR), wavelength accuracy, and intensity linearity. Each metric must have clearly defined acceptance criteria based on application requirements and industry standards such as ASTM E1840 for wavelength calibration or ISO 17025 for laboratory testing competence.

Statistical validation approaches play a crucial role in quality assurance. Methods such as root mean square error (RMSE) calculation between measured and reference peak positions provide quantitative assessment of calibration accuracy. Repeatability and reproducibility studies, often implemented through Gauge R&R (Repeatability and Reproducibility) analysis, help quantify measurement system variation and establish confidence intervals for calibration parameters.

Uncertainty budgeting represents another vital component of validation frameworks. This involves identifying and quantifying all potential sources of uncertainty in the calibration process, including instrument factors, environmental conditions, sample preparation variables, and operator influence. The combined uncertainty provides a comprehensive measure of calibration reliability and helps establish traceability to recognized standards.
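
A simple budget can be combined as follows. This sketch assumes that every contribution is already expressed as a standard uncertainty in the same units (here cm^-1), that the contributions are uncorrelated, and that all sensitivity coefficients equal one, so the combination reduces to a root sum of squares. The component values, the function name, and the coverage factor k = 2 are illustrative assumptions.

```python
import math


def combined_standard_uncertainty(components):
    """Root-sum-of-squares of uncorrelated standard uncertainties."""
    return math.sqrt(sum(u ** 2 for u in components.values()))


# Illustrative standard uncertainties of a Raman shift calibration (cm^-1).
budget = {
    "instrument": 0.4,
    "environment": 0.2,
    "sample_preparation": 0.2,
    "operator": 0.1,
}

u_c = combined_standard_uncertainty(budget)
coverage_factor = 2.0            # assumed; roughly 95 % for a normal distribution
expanded = coverage_factor * u_c

# Share of the combined variance contributed by each source.
shares = {name: (u ** 2) / (u_c ** 2) for name, u in budget.items()}
largest = max(shares, key=shares.get)
print(f"u_c = {u_c:.2f} cm^-1, U = {expanded:.2f} cm^-1, dominant source: {largest}")
```

Listing the variance shares alongside the combined value shows which source is worth reducing first; here the instrument term dominates the budget.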

Cross-Platform Calibration Transfer Solutions

Cross-platform calibration transfer addresses a fundamental challenge in Raman spectroscopy standardization: maintaining measurement consistency across different instruments. It makes spectral data collected on one Raman system directly comparable with data acquired on another, reducing the need for redundant calibration procedures and sample reanalysis.

The methodology typically involves developing mathematical transformation algorithms that can convert spectral data between platforms while preserving the essential chemical information. Direct standardization (DS) and piecewise direct standardization (PDS) have emerged as foundational approaches, where a transfer function is established using a subset of common reference samples measured on both the primary and secondary instruments.
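
A minimal sketch of direct standardization, assuming NumPy is available: the transfer matrix is estimated as the pseudo-inverse of the secondary-instrument spectra multiplied by the primary-instrument spectra of the same transfer samples. The synthetic spectra, the distortion model, and the function name are hypothetical and serve only to exercise the algebra; no offset term is modeled.

```python
import numpy as np


def direct_standardization(secondary_std, primary_std):
    """Transfer matrix F such that secondary_std @ F approximates primary_std.

    Rows are transfer samples, columns are spectral channels.
    """
    return np.linalg.pinv(secondary_std) @ primary_std


rng = np.random.default_rng(0)
n_samples, n_channels = 8, 6

# Synthetic "primary" spectra of the transfer samples.
primary_std = rng.random((n_samples, n_channels))

# Hypothetical instrument difference: 10 % lower gain plus slight mixing
# between neighboring channels (a crude stand-in for a resolution change).
distortion = (0.9 * np.eye(n_channels)
              + 0.05 * np.eye(n_channels, k=1)
              + 0.05 * np.eye(n_channels, k=-1))
secondary_std = primary_std @ distortion

F = direct_standardization(secondary_std, primary_std)

# Apply the transfer to a new spectrum measured only on the secondary unit.
new_primary = rng.random(n_channels)
new_secondary = new_primary @ distortion
corrected = new_secondary @ F
print(np.allclose(corrected, new_primary))
```

Real spectra have far more channels than transfer samples and contain noise, so the full matrix is underdetermined in practice. Piecewise direct standardization addresses this by fitting each primary channel from a small window of neighboring secondary channels instead of from the whole spectrum.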

Advanced machine learning techniques have significantly enhanced cross-platform calibration transfer capabilities. Neural networks can now learn complex non-linear relationships between instrument responses, while transfer learning approaches allow calibration models trained on data-rich primary instruments to be effectively adapted for use on secondary instruments with minimal additional data collection.

Virtual standard generation represents another innovative approach, where computational methods simulate how reference standards would appear on different instruments based on known instrument response functions. This reduces the physical reference materials needed for calibration transfer and enables more frequent recalibration.
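
One way to picture a virtual standard, as a sketch under strong simplifying assumptions: take the ideal line positions of a reference material, broaden each line with a Gaussian whose width stands in for the instrument's resolution, and scale the result by a relative intensity response. The Gaussian line shape, the flat response functions, the peak list, and the function name are all illustrative assumptions, not a description of any specific vendor method.

```python
import math


def simulate_standard(peaks, axis, fwhm, response=lambda shift: 1.0):
    """Render ideal (position, height) lines as they might appear on an instrument.

    Each line is broadened by a Gaussian of the given FWHM (peak height is
    preserved) and the result is scaled by a relative intensity response.
    """
    sigma = fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))
    spectrum = []
    for x in axis:
        signal = sum(h * math.exp(-0.5 * ((x - c) / sigma) ** 2) for c, h in peaks)
        spectrum.append(signal * response(x))
    return spectrum


axis = [990.0 + 0.5 * i for i in range(41)]   # 990 to 1010 cm^-1 in 0.5 steps
peaks = [(1000.0, 1.0)]                       # one illustrative line

# Two hypothetical instruments: different resolution and different throughput.
instrument_a = simulate_standard(peaks, axis, fwhm=4.0)
instrument_b = simulate_standard(peaks, axis, fwhm=8.0,
                                 response=lambda shift: 0.8)

peak_index = axis.index(1000.0)
print(instrument_a[peak_index], instrument_b[peak_index])
```

Pairs of simulated spectra like these could then serve as the transfer samples for a standardization step, provided the assumed line shape and response have been validated against real measurements on each instrument.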

Cloud-based calibration transfer solutions have gained traction in industrial and research settings, offering centralized calibration management across distributed instrument networks. These systems maintain a master calibration database that individual instruments can access to update their calibration parameters, ensuring network-wide measurement consistency without requiring physical transfer of standards.

Hybrid hardware-software solutions combine specialized hardware components with sophisticated algorithms to facilitate seamless calibration transfer. These systems often incorporate internal reference materials with known spectral properties that can be automatically measured during routine operation to continuously validate and adjust calibration parameters.

The implementation of standardized protocols for cross-platform calibration transfer has been championed by international metrology organizations, establishing best practices for reference material selection, measurement procedures, and validation metrics. These protocols ensure that calibration transfer solutions deliver reliable results across diverse application domains, from pharmaceutical quality control to environmental monitoring.
