Scalable cloud architectures for Brain-Computer Interface data processing

SEP 2, 2025 · 9 MIN READ

BCI Cloud Architecture Background and Objectives

Brain-Computer Interfaces (BCIs) represent a transformative technology that establishes direct communication pathways between the brain and external devices. The evolution of BCI technology has progressed significantly since the first experimental demonstrations in the 1970s, with recent advancements in neural recording techniques, signal processing algorithms, and machine learning approaches dramatically expanding their capabilities and potential applications.

The current technological trajectory of BCIs is characterized by increasing sophistication in neural signal acquisition, interpretation, and utilization. Modern BCIs generate large volumes of neural data, ranging from gigabytes per hour for typical multi-channel recordings to terabytes per subject per day for high-channel-count systems sampled at tens of kilohertz. These volumes create substantial computational challenges that traditional processing architectures struggle to address efficiently, and they call for new approaches to data management, processing, and analysis.

Cloud computing has emerged as a promising solution to these challenges, offering scalable computational resources, distributed processing capabilities, and flexible storage options. The integration of cloud technologies with BCI systems represents a natural evolution, potentially enabling real-time processing of complex neural signals across distributed networks while maintaining accessibility and security.

The primary objective of developing scalable cloud architectures for BCI data processing is to create infrastructure capable of handling the exponential growth in neural data while supporting increasingly sophisticated analytical techniques. These architectures must balance several critical requirements: processing efficiency to support real-time applications, scalability to accommodate growing data volumes, reliability for mission-critical medical applications, and robust security protocols to protect sensitive neural information.

Additionally, these cloud architectures aim to democratize access to BCI technology by reducing the computational barriers to entry, potentially accelerating research and development across academic, medical, and commercial domains. By establishing standardized platforms for neural data processing, these architectures could facilitate collaboration and knowledge sharing within the global BCI community.

The technological goals extend beyond mere data processing to include adaptive learning systems that can evolve with users, personalized neural interfaces that optimize for individual brain patterns, and interoperable frameworks that support diverse BCI applications ranging from medical rehabilitation to consumer applications in gaming and productivity.

As BCI technology continues to mature, the development of robust cloud architectures represents a critical enabling factor that will significantly influence the pace and direction of innovation in this rapidly evolving field. The successful implementation of these architectures will require interdisciplinary collaboration between neuroscientists, computer engineers, data scientists, and cybersecurity experts to address the unique challenges presented by neural data processing at scale.

Market Analysis for BCI Cloud Solutions

The global Brain-Computer Interface (BCI) cloud solutions market is experiencing significant growth, driven by advancements in neural technology and increasing applications across healthcare, gaming, and assistive technologies. Current market valuations place the BCI market at approximately $1.9 billion in 2023, with cloud-based BCI data processing solutions representing about 30% of this value. Industry analysts project a compound annual growth rate of 15-17% for cloud-based BCI solutions through 2030, outpacing the overall BCI market growth rate of 12%.

Healthcare remains the dominant sector for BCI cloud solutions, accounting for nearly 45% of market share. This is primarily due to increasing adoption in neurological rehabilitation, prosthetic control systems, and diagnostic applications. The gaming and entertainment sector follows at 25%, with virtual reality and immersive gaming experiences driving demand for real-time neural data processing in cloud environments.

Regional analysis reveals North America leading with approximately 40% market share, followed by Europe (30%) and Asia-Pacific (20%). The Asia-Pacific region, particularly China and South Korea, demonstrates the fastest growth trajectory with annual expansion rates exceeding 20%, largely due to substantial government investments in neural technology research and development.

Consumer-grade BCI devices connected to cloud platforms represent the fastest-growing segment, with unit shipments increasing by 35% annually. This growth is fueled by decreasing hardware costs and the proliferation of subscription-based cloud processing models that lower barriers to entry for consumers and researchers alike.

Key market drivers include the exponential growth in neural data volumes requiring scalable processing solutions, increasing demand for real-time analysis capabilities, and the integration of artificial intelligence with BCI systems. The average BCI system now generates between 1 and 5 GB of data per hour of use, creating substantial demand for efficient cloud storage and processing architectures.

Market challenges primarily center around data privacy concerns, with 65% of potential users citing security of neural data as their primary adoption concern. Regulatory frameworks for neural data handling remain underdeveloped in most regions, creating market uncertainty. Additionally, latency issues in cloud processing present technical barriers for applications requiring immediate feedback, such as prosthetic control or critical healthcare monitoring.

Emerging market opportunities include hybrid edge-cloud architectures that address latency concerns while maintaining scalability, specialized BCI-as-a-Service (BCIaaS) offerings, and integration with existing IoT and healthcare cloud ecosystems. The convergence of BCI technology with 5G networks is expected to unlock new market segments by enabling truly mobile neural monitoring and feedback systems with cloud-based processing capabilities.

Technical Challenges in BCI Data Processing

Brain-Computer Interface (BCI) data processing presents significant technical challenges due to the complex nature of neural signals and the massive data volumes generated. The primary challenge lies in the high-dimensional, noisy, and non-stationary characteristics of brain signals. EEG data, for instance, typically contains artifacts from muscle movements, eye blinks, and electrical interference that must be filtered out before meaningful analysis can occur.
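
The artifact problem described above can be illustrated with one of the simplest screening steps: discarding epochs whose peak-to-peak amplitude is implausibly large, as often happens during eye blinks or muscle bursts. The sketch below is a minimal illustration, not a complete cleaning pipeline; the function names, the 100 µV threshold, and the sample values are all assumptions chosen for the example, and real systems usually combine such a check with filtering.

```python
from typing import List, Sequence


def peak_to_peak(epoch: Sequence[float]) -> float:
    """Return the max-minus-min amplitude of one epoch (same units as the input)."""
    return max(epoch) - min(epoch)


def reject_artifact_epochs(
    epochs: Sequence[Sequence[float]], threshold_uv: float = 100.0
) -> List[Sequence[float]]:
    """Keep only epochs whose peak-to-peak amplitude is at or below the threshold.

    The 100 microvolt default is an illustrative assumption, not a standard.
    """
    return [epoch for epoch in epochs if peak_to_peak(epoch) <= threshold_uv]


# Hypothetical single-channel epochs in microvolts.
clean = [3.0, -4.5, 2.0, 5.5, -1.0]      # small fluctuations
blink = [2.0, 180.0, -40.0, 6.0, 1.0]    # large deflection, treated as an artifact

kept = reject_artifact_epochs([clean, blink], threshold_uv=100.0)
print(f"kept {len(kept)} of 2 epochs")
```

Threshold-based rejection is cheap enough to run before data leaves the acquisition device, which reduces the volume sent to the cloud at the cost of discarding whole epochs rather than repairing them.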

Real-time processing requirements pose another substantial hurdle. For applications like neuroprosthetics or assistive technologies, the system must interpret neural signals and translate them into commands with minimal latency—ideally under 100 milliseconds. This demands extremely efficient algorithms and processing pipelines that can operate within strict time constraints while maintaining accuracy.
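
To make the 100 millisecond target concrete, the following sketch compares hypothetical processing paths against a latency budget and picks the first one that fits. The class name, the path names, and every timing value are assumptions for illustration only; measured network and compute times would replace them in practice.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

LATENCY_BUDGET_MS = 100.0  # target mentioned in the text above


@dataclass(frozen=True)
class ProcessingPath:
    """One candidate route for decoding a window of neural data (hypothetical)."""
    name: str
    network_rtt_ms: float  # round trip between device and processing node
    compute_ms: float      # time spent filtering and decoding

    @property
    def total_ms(self) -> float:
        return self.network_rtt_ms + self.compute_ms


def choose_path(
    paths: Sequence[ProcessingPath], budget_ms: float = LATENCY_BUDGET_MS
) -> Optional[ProcessingPath]:
    """Return the first path, in preference order, that fits the budget, else None."""
    for path in paths:
        if path.total_ms <= budget_ms:
            return path
    return None


# Assumed numbers: the cloud path is preferred for scalability but is listed
# with a longer round trip; the edge path has less compute but is nearby.
cloud = ProcessingPath("cloud", network_rtt_ms=80.0, compute_ms=35.0)
edge = ProcessingPath("edge", network_rtt_ms=2.0, compute_ms=60.0)

chosen = choose_path([cloud, edge])
print(chosen.name if chosen else "no path meets the budget")
```

Ordering the candidates by preference keeps the policy explicit: the cloud is used whenever it is fast enough, and time-critical decoding falls back to a nearby node otherwise.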

Scalability issues become particularly acute when dealing with high-density recording systems. Modern BCI systems can employ hundreds of electrodes, each sampling at rates of 1,000 Hz or higher, generating gigabytes of raw data per hour and potentially terabytes over extended or higher-rate recording sessions. Traditional computing infrastructures often struggle to handle this data volume, especially when continuous monitoring is required.
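
A quick back-of-the-envelope calculation shows how channel count, sampling rate, and sample width drive raw data volume. The two configurations below are assumptions picked for illustration (they do not describe any specific device), and the figures ignore compression, metadata, and container overhead.

```python
def raw_rate_bytes_per_s(channels: int, sample_rate_hz: int, bytes_per_sample: int) -> int:
    """Uncompressed data rate: channels x samples per second x bytes per sample."""
    return channels * sample_rate_hz * bytes_per_sample


def gb_per_hour(rate_bytes_per_s: float) -> float:
    """Convert bytes per second to decimal gigabytes per hour."""
    return rate_bytes_per_s * 3600 / 1e9


def tb_per_day(rate_bytes_per_s: float) -> float:
    """Convert bytes per second to decimal terabytes per 24 hours."""
    return rate_bytes_per_s * 86400 / 1e12


# Assumed configuration A: 256 channels at 1 kHz, 4 bytes per sample.
eeg_rate = raw_rate_bytes_per_s(256, 1_000, 4)
# Assumed configuration B: 384 channels at 30 kHz, 2 bytes per sample.
high_rate = raw_rate_bytes_per_s(384, 30_000, 2)

print(f"A: {gb_per_hour(eeg_rate):.2f} GB/hour, {tb_per_day(eeg_rate):.3f} TB/day")
print(f"B: {gb_per_hour(high_rate):.2f} GB/hour, {tb_per_day(high_rate):.3f} TB/day")
```

Under these assumptions, configuration A lands at a few gigabytes per hour, consistent with the 1 to 5 GB per hour figure quoted in the market analysis, while terabyte-per-day volumes only appear at much higher sampling rates such as configuration B.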

The heterogeneity of data formats across different BCI devices and research platforms creates interoperability challenges. Various manufacturers use proprietary data formats, making it difficult to develop standardized processing pipelines that work across multiple systems. This fragmentation hinders collaborative research and slows technological advancement in the field.

Security and privacy concerns present additional technical challenges, as BCI data contains highly sensitive personal information. Implementing robust encryption and anonymization techniques without compromising processing speed or accuracy remains difficult, particularly in cloud-based architectures where data may traverse multiple processing nodes.

Resource allocation in cloud environments presents unique challenges for BCI applications. Neural signal processing workloads can be highly variable, with sudden spikes in computational demands during specific cognitive tasks or events. Dynamic resource allocation systems must be sophisticated enough to predict these fluctuations and scale accordingly.

Machine learning model training and deployment face particular difficulties in BCI contexts. Models must be continuously updated to account for neural plasticity and signal drift over time, requiring adaptive learning approaches. Additionally, the interpretability of these models remains crucial for clinical applications, adding another layer of complexity to algorithm development.

Current Scalable Cloud Solutions for BCI

01 Elastic resource allocation in cloud architectures

Cloud architectures can dynamically allocate and deallocate computing resources based on demand, allowing for automatic scaling of applications. This elasticity enables systems to handle varying workloads efficiently by provisioning additional resources during peak times and reducing them during periods of low activity, optimizing both performance and cost. The elastic nature of cloud resources provides businesses with the flexibility to scale their infrastructure without significant upfront investment in hardware.

- Elastic resource allocation in cloud architectures: Cloud architectures can be designed with elastic resource allocation capabilities that automatically scale computing resources based on demand. This approach enables systems to handle varying workloads efficiently by dynamically provisioning or de-provisioning resources as needed. The elasticity feature ensures optimal performance during peak usage while minimizing costs during periods of lower demand, making it a fundamental aspect of scalable cloud solutions.

- Distributed computing frameworks for scalability: Distributed computing frameworks provide the foundation for scalable cloud architectures by enabling workloads to be processed across multiple nodes. These frameworks facilitate parallel processing, load balancing, and fault tolerance, allowing applications to scale horizontally by adding more computing nodes. By distributing computational tasks across a network of machines, these frameworks can handle large-scale data processing and high-throughput applications while maintaining performance and reliability.

- Containerization and microservices architecture: Containerization technologies and microservices architecture enable highly scalable cloud deployments by breaking down applications into smaller, independently deployable services. This approach allows for granular scaling of specific components based on their individual resource requirements rather than scaling the entire application. Containers provide consistent environments across development and production, facilitating rapid deployment and scaling while microservices architecture improves resilience and enables teams to develop, deploy, and scale services independently.

- Auto-scaling and load balancing mechanisms: Auto-scaling and load balancing mechanisms are essential components of scalable cloud architectures that automatically adjust resources and distribute traffic to maintain optimal performance. These systems monitor application metrics and traffic patterns to make intelligent decisions about when to scale resources up or down. Load balancers distribute incoming requests across multiple instances to prevent any single point of failure and ensure efficient resource utilization, thereby enhancing both scalability and availability of cloud services.

- Multi-region deployment strategies: Multi-region deployment strategies enhance cloud architecture scalability by distributing applications and data across geographically dispersed data centers. This approach not only improves global performance by reducing latency for users in different regions but also provides resilience against regional outages. These strategies typically involve replicating data and services across regions, implementing global load balancing, and designing applications to handle cross-region communication efficiently, resulting in highly available and scalable cloud solutions.

02 Distributed computing frameworks for scalability

Distributed computing frameworks in cloud architectures enable workload distribution across multiple nodes, enhancing processing capabilities and system resilience. These frameworks facilitate parallel processing of large datasets, allowing for horizontal scaling by adding more computing nodes to the network. By distributing computational tasks across multiple servers, these systems can process massive amounts of data more efficiently than traditional centralized architectures, making them ideal for big data applications and high-performance computing needs.

03 Load balancing techniques for cloud scalability

Load balancing mechanisms distribute network traffic or computational workloads across multiple servers or resources to optimize resource utilization, maximize throughput, minimize response time, and avoid overload on any single resource. Advanced load balancing techniques in cloud architectures can automatically detect and redirect traffic based on server health, capacity, and current load. These systems can implement various algorithms to ensure efficient distribution of workloads, enhancing overall system performance and reliability while supporting seamless scaling operations.

04 Containerization and microservices for cloud scalability

Containerization technologies and microservices architectures enable more efficient scaling of cloud applications by breaking down monolithic applications into smaller, independently deployable services. Containers provide lightweight, consistent environments that can be rapidly deployed, scaled, and moved between different computing environments. This approach allows for more granular scaling where only the specific components under load need additional resources, rather than scaling the entire application. Microservices architecture further enhances scalability by allowing independent development, deployment, and scaling of individual services.

05 Auto-scaling and predictive scaling mechanisms

Auto-scaling mechanisms automatically adjust the number of computational resources based on predefined metrics or thresholds, ensuring applications maintain performance during varying load conditions. Predictive scaling goes further by using machine learning algorithms and historical data analysis to anticipate future demand patterns and proactively adjust resources before they're needed. These advanced scaling techniques help maintain optimal performance while minimizing costs by ensuring resources are available when needed but not over-provisioned during periods of low demand.
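
Metric-based auto-scaling of this kind can be sketched with a proportional rule: scale the replica count by the ratio of the observed metric to its target, then clamp to configured bounds. This follows the rule documented for the Kubernetes Horizontal Pod Autoscaler, but the function below is a simplified stand-in that omits tolerances, stabilization windows, and the predictive component; the parameter names and limits are assumptions.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 20,
) -> int:
    """Proportional scaling: ceil(current * observed / target), clamped to bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical decoder service: 4 replicas, average CPU at 90% with a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))    # scale out
# Same service during a quiet period: average CPU at 15%.
print(desired_replicas(4, current_metric=15, target_metric=60))    # scale in
# A large spike is capped by max_replicas.
print(desired_replicas(10, current_metric=200, target_metric=50))  # clamped
```

The clamp matters for BCI workloads with sudden spikes: the upper bound caps cost during a burst, while the lower bound keeps at least one decoder warm so that scaling in never removes the service entirely.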

Key Industry Players in BCI Cloud Computing

The Brain-Computer Interface (BCI) data processing market is in an early growth phase, characterized by rapid technological advancement and expanding applications. The market size is projected to grow significantly as cloud architectures enable scalable processing of complex neural data. Technologically, the field is maturing with major players developing specialized solutions. Companies like Google, Meta, and IBM are leveraging their cloud infrastructure expertise, while Huawei, Intel, and AMD focus on hardware acceleration for neural processing. Qualcomm and Apple are exploring mobile BCI applications. Academic institutions including Yale, Peking University, and Beihang University are contributing foundational research. The competitive landscape shows a blend of tech giants investing in proprietary platforms and specialized startups developing niche solutions, indicating a dynamic ecosystem poised for substantial growth.

Oracle International Corp.

Technical Solution: Oracle has developed a robust cloud architecture for BCI data processing leveraging their Oracle Cloud Infrastructure (OCI). Their solution focuses on enterprise-grade reliability and security while delivering the performance needed for neural signal processing. Oracle's architecture utilizes their Autonomous Database for storing and analyzing structured BCI metadata, while their Object Storage service handles the massive raw neural signal datasets. The processing pipeline employs Oracle's Cloud HPC (High-Performance Computing) resources with GPU acceleration for computationally intensive neural decoding algorithms[9]. A distinguishing feature of Oracle's approach is their implementation of a comprehensive data governance framework specifically designed for neurotechnology applications, addressing the unique privacy and ethical considerations of brain data. Their system incorporates Oracle GoldenGate for real-time data replication and disaster recovery, ensuring continuous availability of BCI services. Oracle has demonstrated their architecture processing multi-modal BCI data (combining EEG, EMG, and physiological signals) at scale, supporting thousands of concurrent users while maintaining strict data isolation between tenants[10].

Strengths: Enterprise-grade security and compliance features critical for medical and research applications; comprehensive data governance framework specific to neural data; robust disaster recovery capabilities. Weaknesses: Potentially higher costs compared to some cloud providers; less specialized in consumer BCI applications; may require significant expertise to fully implement and optimize the architecture.

Google LLC

Technical Solution: Google has developed a comprehensive cloud architecture for BCI data processing leveraging its Google Cloud Platform (GCP). The solution takes a multi-tiered approach with specialized services for real-time neural data processing. The architecture uses Google's BigQuery for massive-scale data analytics, Cloud TPUs (Tensor Processing Units) for accelerated neural network computations, and Dataflow for stream processing of continuous BCI signals[1]. A reported collaboration with BrainGate is said to have processed neural signals with latency under 50 ms, which is critical for responsive BCI applications[2]. The architecture follows a microservices approach in which specialized containers handle different stages of the BCI pipeline: signal acquisition, noise filtering, feature extraction, and intent classification. The system scales horizontally through Kubernetes orchestration, automatically adjusting computational resources based on incoming data volume and processing demands.

Strengths: Exceptional scalability through GCP's global infrastructure; advanced ML capabilities through TensorFlow integration; comprehensive data security and compliance features. Weaknesses: Potentially higher costs for sustained high-volume processing; dependency on Google's ecosystem may create vendor lock-in; requires specialized expertise to fully optimize the architecture.

Core Technologies for Neural Data Processing

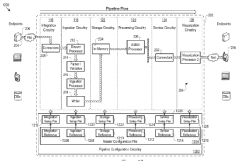

Data pipeline architecture for cloud processing of structured and unstructured data

Patent · Active · IN201644042808A

Innovation

- A scalable data processing architecture (DPA) with integration, ingestion, processing, and visualization circuitry, utilizing Apache Camel integration framework, Apache Kafka publish/subscribe message processor, and dynamic pipeline configuration, supports high data throughput through centralized configuration and dynamic processing modes, enabling real-time analytics and visualization.

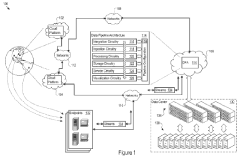

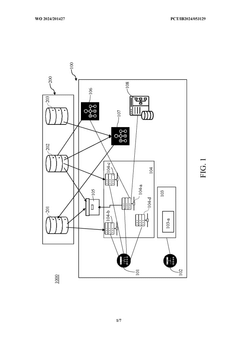

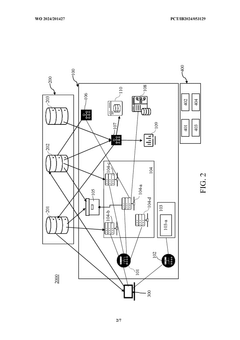

A system for data processing and a method thereof

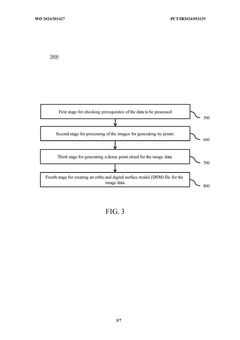

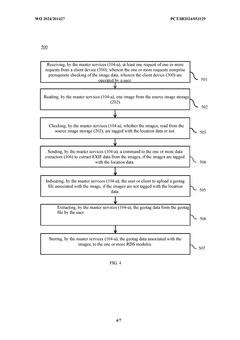

Patent · WO2024201427A1

Innovation

- A system architecture utilizing Cloud Computing Infrastructure (CCI) with API load balancers, UI clusters, batch data archivers, data extractors, and relational database systems to process input data from drones, satellites, and other sources, generating point clouds and DSMs for further analysis.

Data Security and Privacy Considerations

Brain-Computer Interface (BCI) systems generate highly sensitive neurological data that requires robust security and privacy frameworks when processed in cloud environments. The intimate nature of brain activity data presents unique challenges beyond traditional data protection concerns. Neural data can potentially reveal cognitive states, emotional responses, and even specific thoughts, making it among the most personal data types collected from individuals.

Cloud-based BCI architectures must implement end-to-end encryption for neural data streams. This includes data-in-transit encryption using TLS 1.3 and data-at-rest encryption with strong algorithms such as AES-256. Homomorphic encryption techniques are particularly valuable because they enable computation on encrypted neural data without decryption, maintaining privacy throughout the processing pipeline, although current schemes add substantial computational overhead that must be weighed against latency requirements.

Access control mechanisms for BCI data require multi-factor authentication systems with granular permission structures. Role-based access control (RBAC) should be complemented by attribute-based access control (ABAC) to create context-aware authorization frameworks. Additionally, just-in-time access provisioning can minimize exposure windows for sensitive neural information.
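
The combination of RBAC and ABAC described above can be sketched as two checks that must both pass: the role must grant the requested action, and the request's attributes must satisfy context rules. The roles, actions, attribute names, and policy below are all hypothetical and exist only to show the shape of such a check.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping (the RBAC part).
ROLE_PERMISSIONS = {
    "clinician": {"read_raw", "read_features"},
    "researcher": {"read_features"},
}


@dataclass(frozen=True)
class AccessRequest:
    role: str
    action: str
    mfa_verified: bool   # attribute: did the user pass multi-factor authentication?
    user_region: str     # attribute: where the request originates
    data_region: str     # attribute: where the neural data is stored


def is_allowed(req: AccessRequest) -> bool:
    """Grant access only if the role permits the action AND the context rules hold."""
    rbac_ok = req.action in ROLE_PERMISSIONS.get(req.role, set())
    # Assumed ABAC policy: MFA is required and data may not be read across regions.
    abac_ok = req.mfa_verified and req.user_region == req.data_region
    return rbac_ok and abac_ok


print(is_allowed(AccessRequest("clinician", "read_raw", True, "eu", "eu")))   # True
print(is_allowed(AccessRequest("researcher", "read_raw", True, "eu", "eu")))  # False
print(is_allowed(AccessRequest("clinician", "read_raw", False, "eu", "eu")))  # False
```

Keeping the two checks separate makes the policy easier to audit: role grants change rarely, while attribute rules such as region or authentication strength can be tightened without touching role definitions.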

Regulatory compliance presents significant challenges for cloud-based BCI systems. These architectures must adhere to region-specific frameworks including GDPR in Europe, HIPAA for health-related applications in the US, and emerging neuroethical guidelines. Particular attention must be paid to informed consent processes, which should be dynamic and continuous rather than one-time agreements.

Data anonymization techniques require special consideration for neural data. Traditional methods like k-anonymity often prove insufficient due to the unique "neural fingerprint" characteristics of BCI data. Advanced differential privacy implementations with carefully calibrated noise injection can help preserve analytical utility while protecting individual identities.
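
Calibrated noise injection can be illustrated with the Laplace mechanism applied to a bounded mean. The sketch below assumes each subject contributes one value (for example, a band-power feature) that is clipped to a known range; the function names, the bounds, and the epsilon value are illustrative assumptions, and a production system would rely on a vetted differential privacy library rather than this hand-rolled sampler.

```python
import random
from typing import Sequence


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_mean(
    values: Sequence[float],
    lower: float,
    upper: float,
    epsilon: float,
    rng: random.Random,
) -> float:
    """Release the mean of clipped values with epsilon-differential privacy.

    Clipping bounds each subject's influence, so changing one value moves the
    mean by at most (upper - lower) / n, which is the sensitivity used below.
    """
    clipped = [min(max(v, lower), upper) for v in values]
    n = len(clipped)
    sensitivity = (upper - lower) / n
    scale = sensitivity / epsilon
    return sum(clipped) / n + laplace_noise(scale, rng)


# Hypothetical per-subject feature values, assumed to lie in [0, 10].
features = [4.2, 5.1, 3.8, 6.0, 4.9, 5.5, 4.4, 5.0]
rng = random.Random(0)
print(private_mean(features, lower=0.0, upper=10.0, epsilon=1.0, rng=rng))
```

A smaller epsilon gives stronger privacy but a noisier result, and because the noise scale shrinks with the number of subjects, aggregate statistics over large cohorts retain more analytical utility than per-subject releases.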

Audit trails and monitoring systems must be implemented to track all access and processing activities performed on BCI data. These systems should incorporate anomaly detection capabilities specifically trained to identify unusual patterns in neural data access or processing that might indicate security breaches or privacy violations.

Finally, data residency and sovereignty considerations are critical when designing cloud architectures for BCI applications. Neural data should be processed and stored in jurisdictions with strong privacy protections, potentially utilizing data localization techniques to ensure compliance with regional regulations while maintaining processing efficiency.

Cloud-based BCI architectures must implement end-to-end encryption protocols specifically designed for neural data streams. This includes both data-in-transit encryption using TLS 1.3 or higher standards, and data-at-rest encryption with advanced algorithms such as AES-256. Homomorphic encryption techniques are particularly valuable as they enable computational operations on encrypted neural data without decryption, maintaining privacy throughout the processing pipeline.

Access control mechanisms for BCI data require multi-factor authentication systems with granular permission structures. Role-based access control (RBAC) should be complemented by attribute-based access control (ABAC) to create context-aware authorization frameworks. Additionally, just-in-time access provisioning can minimize exposure windows for sensitive neural information.

Regulatory compliance presents significant challenges for cloud-based BCI systems. These architectures must adhere to region-specific frameworks including GDPR in Europe, HIPAA for health-related applications in the US, and emerging neuroethical guidelines. Particular attention must be paid to informed consent processes, which should be dynamic and continuous rather than one-time agreements.

Regulatory Framework for Neural Technologies

The regulatory landscape for Brain-Computer Interface (BCI) technologies is evolving rapidly as these technologies move toward widespread use with cloud-based processing architectures. Regulatory frameworks are currently fragmented across jurisdictions. In the United States, the FDA has established a Digital Health Center of Excellence and regulates BCI devices according to their invasiveness and intended use. The European Union, through its Medical Device Regulation (MDR), has implemented stricter requirements for neural technologies, and GDPR applies when sensitive neurological data is processed in cloud environments.

Data privacy regulations present significant challenges for scalable cloud architectures handling BCI data. These regulations typically require explicit consent mechanisms, transparent data processing practices, and robust security measures. The Health Insurance Portability and Accountability Act (HIPAA) in the US and similar healthcare data protection laws worldwide impose additional compliance requirements when BCI applications intersect with medical treatments or diagnostics, necessitating specialized cloud infrastructure designs that incorporate privacy-by-design principles.

Technical standards for neural technologies are being developed by organizations such as IEEE and ISO; the IEEE P2731 working group, for example, is defining a unified terminology for BCIs. These emerging standards will likely influence cloud architecture requirements, particularly regarding real-time processing capabilities, fault tolerance, and system reliability. Cloud service providers developing BCI data processing solutions must demonstrate compliance with these evolving technical standards while maintaining scalability.

Ethical guidelines from bodies like the OECD and UNESCO have begun addressing neurotechnology applications, emphasizing principles of autonomy, informed consent, and prevention of unauthorized neural data access. These guidelines, while not legally binding, are increasingly influencing regulatory development and corporate governance frameworks for cloud-based neural data processing systems. Companies developing scalable cloud architectures for BCI applications must consider these ethical dimensions in their technical designs.

International harmonization efforts are underway through initiatives like the International Brain Initiative, which coordinates large-scale national brain research projects and includes work on neuroethics and data sharing. Such coordination is particularly important for cloud architectures, which inherently operate across jurisdictional boundaries. The current lack of global regulatory consistency creates compliance challenges for scalable cloud solutions that process BCI data from multiple regions, often requiring complex data localization and segregation strategies.
