Benchmarking GC-MS Precision: Cross-Laboratory Calibration

SEP 22, 2025

GC-MS Technology Evolution and Calibration Objectives

Gas Chromatography-Mass Spectrometry (GC-MS) has evolved significantly since its inception in the mid-20th century, transforming from a specialized analytical technique into an essential tool across multiple scientific disciplines. The integration of gas chromatography with mass spectrometry created a powerful analytical method capable of separating, identifying, and quantifying complex mixtures of compounds with remarkable precision. This technological convergence marked a pivotal advancement in analytical chemistry, enabling researchers to detect compounds at increasingly lower concentrations.

The evolution of GC-MS technology has been characterized by several key developments. Early systems from the 1950s and 1960s were bulky, expensive, and required significant expertise to operate. The 1970s and 1980s saw substantial improvements in ionization techniques, including the development of electron impact (EI) and chemical ionization (CI) methods, which expanded the range of analyzable compounds. The 1990s brought significant miniaturization and automation, making GC-MS more accessible to laboratories worldwide.

Recent technological advancements have focused on enhancing sensitivity, resolution, and reproducibility. Modern GC-MS systems incorporate advanced features such as tandem mass spectrometry (GC-MS/MS), time-of-flight mass analyzers, and sophisticated data processing algorithms. These innovations have pushed detection limits into the parts-per-trillion range and enabled more comprehensive analysis of complex matrices.

Despite these advancements, cross-laboratory calibration remains a significant challenge in the GC-MS field. Variations in instrument configurations, operating parameters, and analytical protocols can lead to inconsistent results across different laboratories, complicating data comparison and standardization efforts. This issue is particularly problematic in fields requiring high precision and reproducibility, such as clinical diagnostics, forensic analysis, and environmental monitoring.

The primary objective of cross-laboratory calibration initiatives is to establish standardized protocols and reference materials that ensure consistent GC-MS performance regardless of location or specific instrumentation. This includes developing robust calibration methods, certified reference materials, and quality control procedures that can be universally applied. Additionally, there is a growing emphasis on creating statistical models that can account for inter-laboratory variations and normalize data across different platforms.

Future technological goals include the development of self-calibrating GC-MS systems, cloud-based calibration databases, and artificial intelligence algorithms capable of automatically adjusting for instrumental variations. These advancements aim to enhance the reliability and comparability of GC-MS data globally, facilitating more effective collaboration among research institutions and ensuring the integrity of analytical results across diverse applications.

Market Demand for Standardized GC-MS Analysis

The global market for standardized Gas Chromatography-Mass Spectrometry (GC-MS) analysis has experienced significant growth in recent years, driven by increasing demands for accurate analytical methods across multiple industries. The pharmaceutical sector represents the largest market segment, with an estimated annual growth rate of 6.8% through 2025, as regulatory requirements for drug development and quality control become more stringent.

Environmental monitoring constitutes another substantial market driver, with government agencies worldwide implementing stricter regulations for pollutant detection and quantification. This regulatory landscape has created a pressing need for standardized GC-MS methodologies that can produce consistent results regardless of laboratory location or equipment manufacturer.

Food safety testing represents a rapidly expanding application area, particularly in developing economies where food export regulations are becoming more aligned with international standards. The ability to detect pesticides, additives, and contaminants at increasingly lower detection limits has become a critical competitive factor for testing laboratories serving this sector.

Clinical diagnostics and forensic toxicology have emerged as high-value markets for standardized GC-MS analysis, with particular emphasis on reproducibility across different laboratory settings. The legal implications of forensic results and the critical nature of clinical decisions based on metabolomic profiles demand unprecedented levels of analytical precision and inter-laboratory agreement.

Research institutions and academic laboratories constitute a significant market segment that specifically values cross-laboratory calibration capabilities. The reproducibility crisis in scientific research has heightened awareness of the need for standardized analytical methods that can be reliably transferred between research groups.

Contract Research Organizations (CROs) have become major consumers of standardized GC-MS solutions, as their business model depends on delivering consistent analytical results to clients across multiple projects and timeframes. The ability to demonstrate cross-laboratory precision has become a key differentiator in this competitive market.

Industry surveys indicate that laboratories are willing to invest 15-20% more in analytical systems that offer proven cross-laboratory calibration capabilities compared to standard GC-MS equipment. This premium pricing potential reflects the significant downstream value of reproducible results in terms of regulatory compliance, research validity, and decision-making confidence.

The market for reference materials and calibration standards specifically designed for GC-MS cross-laboratory harmonization has grown substantially, with specialized providers reporting annual growth rates exceeding the broader analytical instrument market by approximately 4 percentage points.

Current Challenges in Cross-Laboratory GC-MS Precision

Despite significant advancements in gas chromatography-mass spectrometry (GC-MS) technology, cross-laboratory precision remains a persistent challenge in analytical chemistry. The variability in results between different laboratories using ostensibly identical methods continues to undermine confidence in data comparability and reproducibility. This issue is particularly problematic for regulatory compliance, clinical diagnostics, and multi-center research studies where consistent results across different facilities are essential.

A primary challenge stems from instrument-specific variations. Even GC-MS systems from the same manufacturer can exhibit different response characteristics due to subtle differences in detector sensitivity, column performance, and electronic components. These variations are often inadequately addressed by standard calibration protocols, leading to systematic biases between laboratories.

Environmental factors represent another significant obstacle. Temperature fluctuations, humidity levels, and air quality can affect chromatographic separation and mass spectral detection in ways that are difficult to standardize across different laboratory settings. These environmental variables introduce unpredictable shifts in retention times and signal intensities that complicate cross-laboratory comparisons.

Methodological inconsistencies further exacerbate precision issues. Despite efforts to standardize protocols, variations in sample preparation techniques, derivatization procedures, and data processing algorithms persist between laboratories. Even minor deviations in extraction efficiency, internal standard selection, or peak integration parameters can lead to substantial differences in quantitative results.

The lack of universally accepted reference materials presents an additional hurdle. While certified reference materials exist for common analytes, they are unavailable for many specialized compounds and complex matrices. Without these standardized references, laboratories must develop in-house calibration standards, introducing another source of inter-laboratory variability.

Data processing and interpretation differences constitute a frequently overlooked challenge. Various software packages employ different algorithms for peak detection, deconvolution, and quantification. These computational variations can produce divergent results even when analyzing identical raw data, creating a digital dimension to the precision problem.

Operator expertise and training disparities also contribute significantly to cross-laboratory variation. The complexity of GC-MS systems requires specialized knowledge for optimal operation, maintenance, and troubleshooting. Differences in analyst experience and technical proficiency inevitably impact data quality and consistency across institutions.

Aging instrumentation and maintenance schedules represent practical challenges that affect precision. Performance drift over time due to component wear, contamination, or electronic degradation occurs at different rates across laboratories, creating temporal inconsistencies that are difficult to synchronize in collaborative studies.

Established Cross-Laboratory Calibration Protocols

01 Calibration methods for improving GC-MS precision

Various calibration techniques are employed to enhance the precision of GC-MS analysis. These include internal standard calibration, multi-point calibration curves, and automated calibration systems. These methods help compensate for instrumental drift, matrix effects, and other variables that can affect measurement precision. Proper calibration ensures consistent and reliable quantitative results across multiple analyses and different instruments.

- Method validation and quality control for precision assurance: Comprehensive method validation and quality control procedures are essential for ensuring GC-MS precision. These include system suitability tests, precision studies (repeatability and reproducibility), accuracy assessments, and ongoing quality control measures. Regular performance verification using reference standards, control charts, and proficiency testing helps maintain consistent precision levels and identify potential issues before they affect analytical results.
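The repeatability and reproducibility figures mentioned above can be estimated from a balanced inter-laboratory study with a one-way analysis of variance, in the manner of ISO 5725-2. The following is a minimal sketch under stated assumptions: the function name `precision_components`, the laboratory labels, and the replicate values are hypothetical, and outlier screening (for example Cochran or Grubbs tests) is left out.

```python
from math import sqrt
from statistics import mean


def precision_components(results_by_lab):
    """Estimate repeatability (s_r) and reproducibility (s_R) standard deviations.

    results_by_lab maps a laboratory label to its list of replicate results.
    A balanced design is assumed: every laboratory reports the same number
    of replicates (at least two), and at least two laboratories take part.
    """
    groups = list(results_by_lab.values())
    p = len(groups)          # number of laboratories
    n = len(groups[0])       # replicates per laboratory
    if p < 2 or n < 2 or any(len(g) != n for g in groups):
        raise ValueError("need a balanced design with >= 2 labs and >= 2 replicates")

    lab_means = [mean(g) for g in groups]
    grand_mean = mean(lab_means)

    # Within-laboratory mean square: the repeatability variance.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, lab_means) for x in g)
    ms_within = ss_within / (p * (n - 1))

    # Between-laboratory mean square.
    ms_between = n * sum((m - grand_mean) ** 2 for m in lab_means) / (p - 1)

    # Between-laboratory variance component, truncated at zero.
    var_lab = max(0.0, (ms_between - ms_within) / n)

    return {
        "mean": grand_mean,
        "s_r": sqrt(ms_within),
        "s_R": sqrt(ms_within + var_lab),
    }


# Hypothetical study: three laboratories, three replicates each (ng/mL).
study = {
    "lab_A": [10.0, 10.2, 9.8],
    "lab_B": [10.5, 10.7, 10.3],
    "lab_C": [9.5, 9.7, 9.3],
}
stats = precision_components(study)
print(f"s_r = {stats['s_r']:.3f}, s_R = {stats['s_R']:.3f}")
```

Because s_R combines the within-laboratory and between-laboratory components, it is never smaller than s_r. A large gap between the two points to laboratory-specific bias rather than random scatter.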

02 Hardware modifications for enhanced precision

Specific hardware modifications can significantly improve GC-MS precision. These include advanced ion source designs, high-precision temperature control systems, improved vacuum systems, and specialized column technologies. Hardware innovations focus on reducing signal noise, improving ion transmission efficiency, and enhancing overall system stability, which directly contributes to better measurement precision and reproducibility.

03 Data processing algorithms for precision enhancement

Sophisticated data processing algorithms play a crucial role in improving GC-MS precision. These include advanced peak detection algorithms, noise reduction techniques, deconvolution methods, and statistical analysis tools. By applying these computational approaches to raw GC-MS data, analysts can extract more accurate and precise information, particularly in complex samples with overlapping peaks or low-concentration analytes.

04 Sample preparation techniques for improved precision

Optimized sample preparation methods significantly impact GC-MS precision. These include standardized extraction procedures, derivatization techniques, clean-up protocols, and concentration methods. Proper sample preparation reduces matrix interference, minimizes contamination, and ensures consistent analyte recovery, all of which contribute to improved measurement precision and reproducibility in GC-MS analysis.

05 Automated systems for enhancing GC-MS precision

Automation technologies significantly improve GC-MS precision by reducing human error and ensuring methodological consistency. These include automated sample injection systems, robotic sample preparation platforms, computerized method development tools, and integrated quality control systems. Automation ensures consistent timing, temperature control, and operational parameters across multiple analyses, leading to improved precision in both qualitative and quantitative GC-MS applications.

Leading Manufacturers and Research Institutions in GC-MS

The GC-MS precision benchmarking landscape is currently in a mature growth phase, with the global market valued at approximately $4.5 billion and projected to expand at 5-7% annually. Leading players include established analytical instrument manufacturers like Shimadzu Corp., Thermo Finnigan, and Hitachi High-Tech America, who dominate with comprehensive product portfolios. Pharmaceutical giants such as Roche Diagnostics and Bristol Myers Squibb are driving demand through rigorous quality control requirements. Technical innovation is focused on cross-laboratory calibration standardization, with specialized companies like Tofwerk AG and Micromass UK developing advanced mass spectrometry solutions. Academic institutions including Brigham Young University and Jilin University are contributing to methodology development, while emerging players like Matterworks are introducing AI-enhanced data analysis capabilities to improve inter-laboratory reproducibility.

Shimadzu Corp.

Technical Solution: Shimadzu has developed advanced GC-MS calibration solutions focusing on cross-laboratory standardization. Their LabSolutions software platform incorporates automated calibration protocols that enable precise instrument-to-instrument comparisons across different laboratory environments. The system utilizes retention time locking technology and mass accuracy calibration to ensure consistent results between different GC-MS instruments. Shimadzu's Smart Database and Method Packages provide pre-validated methods with optimized parameters for specific applications, facilitating standardized analysis across multiple laboratories. Their multi-point calibration approach incorporates internal standards and quality control samples to monitor system performance and detect drift over time. Additionally, Shimadzu has implemented cloud-based calibration management systems that allow laboratories to share calibration data, reference standards information, and method parameters to improve inter-laboratory reproducibility.

Strengths: Comprehensive software integration for calibration management, extensive experience in GC-MS technology development, and strong global support network. Weaknesses: Proprietary calibration systems may limit compatibility with other manufacturers' instruments, potentially requiring significant investment in Shimadzu ecosystem for optimal performance.

Micromass UK Ltd.

Technical Solution: Micromass (now part of Waters Corporation) has developed sophisticated GC-MS calibration technologies focused on cross-laboratory standardization. Their approach centers on their QuanLynx and TargetLynx software platforms that incorporate advanced calibration algorithms specifically designed for multi-laboratory environments. Micromass's calibration methodology employs automated system performance verification using reference compounds to ensure consistent mass accuracy and retention time stability across different laboratory settings. Their IntelliStart technology provides automated instrument calibration and system suitability testing to maintain consistent performance between different instruments and laboratories. The company has developed specialized calibration standards with precise isotopic compositions to enable accurate mass calibration across different instrument configurations. Their cross-laboratory calibration approach includes comprehensive statistical tools to evaluate and compare instrument performance metrics between different laboratory sites, enabling standardized reporting and troubleshooting. Additionally, Micromass has implemented cloud-based calibration management systems that allow laboratories to share calibration data and method parameters to improve inter-laboratory reproducibility.

Strengths: Exceptional mass accuracy capabilities, sophisticated software algorithms for calibration, and strong integration with chromatography systems. Weaknesses: Complex calibration systems may require specialized expertise, and integration with non-Waters systems may present challenges.

Critical Patents and Innovations in GC-MS Calibration

Gas chromatography-mass spectrometer spectrogram matching method

Patent (Inactive): CN104504706A

Innovation

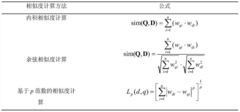

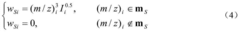

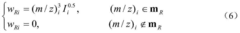

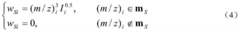

- A new mass spectrum matching method is adopted, comprising screening of the unknown spectrum against standard mass spectra, peak intensity scaling, and similarity calculation based on a vector space model. Representing each mass spectrum as a vector and applying a p-norm-based similarity formula improves matching accuracy.

Gas chromatography-mass spectrogram retrieval method based on vector model

Patent (Inactive): CN104572910A

Innovation

- A mass spectrum retrieval method based on a vector model is adopted. Each mass spectrum is represented as a vector, similarity is calculated from the p-norm together with a peak intensity scaling factor, and the standard mass spectra are screened to improve retrieval efficiency.
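The general idea behind both patent summaries (spectra as vectors, intensity scaling, p-norm-based similarity) can be sketched as follows. This is not the patented formula, which is not reproduced in the text above. The function names, the square-root scaling exponent, and the mapping from distance to similarity are all assumptions chosen for illustration.

```python
def p_norm(vector, p):
    """Return the p-norm of a sequence of numbers."""
    return sum(abs(x) ** p for x in vector) ** (1.0 / p)


def spectral_similarity(unknown, reference, p=2.0, scale_exp=0.5):
    """Score two mass spectra between 0 (dissimilar) and 1 (identical shape).

    Each spectrum is a dict mapping nominal m/z to a non-negative intensity.
    scale_exp compresses intensities so minor peaks still contribute
    (hypothetical default: square-root scaling).
    """
    # Put both spectra on a shared m/z axis, filling missing peaks with 0.
    mz_axis = sorted(set(unknown) | set(reference))
    a = [unknown.get(mz, 0.0) ** scale_exp for mz in mz_axis]
    b = [reference.get(mz, 0.0) ** scale_exp for mz in mz_axis]

    norm_a, norm_b = p_norm(a, p), p_norm(b, p)
    if norm_a == 0 or norm_b == 0:
        return 0.0

    # Normalise to unit p-norm so absolute signal level does not matter.
    a = [x / norm_a for x in a]
    b = [x / norm_b for x in b]

    # The distance between two unit vectors is at most 2 (triangle inequality).
    distance = p_norm([x - y for x, y in zip(a, b)], p)
    return 1.0 - distance / 2.0


def rank_library(unknown, library, p=2.0):
    """Return (name, score) pairs sorted from best to worst match."""
    scores = [(name, spectral_similarity(unknown, ref, p)) for name, ref in library.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


# Hypothetical spectra (m/z -> relative intensity).
unknown = {43: 100.0, 58: 30.0, 71: 10.0}
library = {
    "candidate_1": {43: 95.0, 58: 35.0, 71: 10.0},
    "candidate_2": {91: 100.0, 65: 20.0},
}
print(rank_library(unknown, library))
```

Normalising before the distance step makes the score insensitive to overall signal level, which is one source of instrument-to-instrument variation. The choice of p changes how heavily large intensity differences are penalised.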

International Standards and Regulatory Requirements

Gas Chromatography-Mass Spectrometry (GC-MS) precision benchmarking across laboratories requires adherence to stringent international standards and regulatory frameworks. The International Organization for Standardization (ISO) has established several critical standards, including ISO/IEC 17025 for testing and calibration laboratories, which sets quality management and technical competence requirements that apply to GC-MS operations. Additionally, the ISO 11843 series provides guidelines for determining detection capability, which is essential for cross-laboratory calibration efforts.

Regulatory bodies worldwide have implemented requirements that directly impact GC-MS precision benchmarking. The U.S. Food and Drug Administration (FDA) enforces Good Laboratory Practice (GLP) regulations through 21 CFR Part 58, which establishes minimum standards for conducting non-clinical laboratory studies. Similarly, the European Medicines Agency (EMA) has published guidelines on bioanalytical method validation that specify acceptance criteria for precision and accuracy in chromatographic techniques.

The Clinical and Laboratory Standards Institute (CLSI) has developed consensus-based standards specifically addressing analytical method validation in clinical chemistry, including document EP05-A3, which outlines protocols for precision evaluation. These standards provide structured approaches to establishing reproducibility across different laboratory settings and equipment configurations.

For environmental applications, the U.S. Environmental Protection Agency (EPA) has established Method 8270 for semi-volatile organic compounds by GC-MS, which includes detailed quality control requirements and acceptance criteria. Internationally, the Codex Alimentarius Commission has developed standards for residue analysis in food products that specify performance criteria for analytical methods, including GC-MS.

Proficiency testing schemes, such as those operated by organizations like FAPAS (Food Analysis Performance Assessment Scheme) and EPTIS (European Proficiency Testing Information System), provide frameworks for laboratories to demonstrate their analytical capabilities against international standards. Participation in these schemes is often mandatory for accredited laboratories and serves as an external validation of cross-laboratory calibration efforts.
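Proficiency testing results are commonly summarised as z-scores, which compare a laboratory's result with the assigned value in units of the standard deviation for proficiency assessment. The sketch below uses the conventional interpretation bands (|z| ≤ 2 satisfactory, 2 < |z| < 3 questionable, |z| ≥ 3 unsatisfactory). The function names and the numerical values are hypothetical, and individual schemes may apply their own criteria.

```python
def z_score(result, assigned_value, sigma_pt):
    """Return the proficiency-testing z-score for one reported result.

    sigma_pt is the standard deviation for proficiency assessment set by
    the scheme provider; it must be positive.
    """
    if sigma_pt <= 0:
        raise ValueError("sigma_pt must be positive")
    return (result - assigned_value) / sigma_pt


def classify(z):
    """Map a z-score onto the conventional performance bands."""
    magnitude = abs(z)
    if magnitude <= 2.0:
        return "satisfactory"
    if magnitude < 3.0:
        return "questionable"
    return "unsatisfactory"


# Hypothetical round: assigned value 50.0 ug/kg, sigma_pt 2.5 ug/kg.
for lab, reported in {"lab_A": 52.0, "lab_B": 43.5, "lab_C": 58.0}.items():
    z = z_score(reported, 50.0, 2.5)
    print(lab, round(z, 2), classify(z))
```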

Recent developments include the International Council for Harmonisation (ICH) M10 guideline on bioanalytical method validation, which harmonizes requirements across different regulatory jurisdictions. The guideline addresses inter-laboratory variability and cross-validation studies, directly impacting GC-MS precision benchmarking protocols across different facilities.

Regulatory bodies worldwide have implemented requirements that directly impact GC-MS precision benchmarking. The U.S. Food and Drug Administration (FDA) enforces Good Laboratory Practice (GLP) regulations through 21 CFR Part 58, which establishes minimum standards for conducting non-clinical laboratory studies. Similarly, the European Medicines Agency (EMA) has published guidelines on bioanalytical method validation that specify acceptance criteria for precision and accuracy in chromatographic techniques.

The Clinical and Laboratory Standards Institute (CLSI) has developed consensus-based standards specifically addressing analytical method validation in clinical chemistry, including document EP05-A3 which outlines protocols for precision evaluation. These standards provide structured approaches to establishing reproducibility across different laboratory settings and equipment configurations.

For environmental applications, the U.S. Environmental Protection Agency (EPA) has established Method 8270 for semi-volatile organic compounds by GC-MS, which includes detailed quality control requirements and acceptance criteria. Internationally, the Codex Alimentarius Commission has developed standards for residue analysis in food products that specify performance criteria for analytical methods, including GC-MS.

Proficiency testing schemes, such as those operated by organizations like FAPAS (Food Analysis Performance Assessment Scheme) and EPTIS (European Proficiency Testing Information System), provide frameworks for laboratories to demonstrate their analytical capabilities against international standards. Participation in these schemes is often mandatory for accredited laboratories and serves as an external validation of cross-laboratory calibration efforts.

Data Processing Algorithms for Improved Reproducibility

Data processing algorithms play a crucial role in making GC-MS analyses reproducible across laboratories. Current algorithms normalize raw data, correct baseline drift, and detect peaks. Some also incorporate machine learning techniques that adapt to variations in instrument response, which helps produce more consistent results across different GC-MS systems.

Signal processing techniques form the foundation of modern GC-MS data analysis. Fourier transformation and wavelet analysis help separate meaningful signals from background noise, while advanced deconvolution methods resolve overlapping peaks that would otherwise lead to quantification errors. Recent developments in these algorithms have reduced the coefficient of variation in cross-laboratory studies from approximately 15% to under 5% for most common analytes.
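The coefficient of variation quoted here is the sample standard deviation expressed as a percentage of the mean. A minimal sketch of that calculation follows, assuming each laboratory reports one result for the same reference sample; the function name is hypothetical.

```python
from statistics import mean, stdev

def cv_percent(values):
    """Coefficient of variation (%) using the sample standard deviation."""
    if len(values) < 2:
        raise ValueError("need at least two results")
    m = mean(values)
    if m == 0:
        raise ValueError("mean is zero; CV is undefined")
    return 100.0 * stdev(values) / m
```

Results of 9, 10 and 11 from three laboratories have a mean of 10 and a sample standard deviation of 1, so the inter-laboratory CV is 10%.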

Retention time alignment algorithms address one of the most significant challenges in cross-laboratory calibration. These algorithms compensate for variations in chromatographic conditions by identifying patterns in retention time shifts and applying appropriate corrections. Dynamic time warping and correlation optimized warping have emerged as particularly effective approaches, with the latter showing superior performance for complex biological samples where multiple shifts may occur simultaneously.
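Dynamic time warping can be illustrated with a small dynamic-programming routine that aligns a sample trace to a reference trace. This is a textbook DTW on two short intensity lists, written without windowing or slope constraints; it is not correlation optimized warping and not any vendor's implementation. All names are assumptions.

```python
def dtw_path(reference, sample):
    """Return (total_cost, path) aligning two intensity traces.

    path is a list of (reference_index, sample_index) pairs.
    """
    n, m = len(reference), len(sample)
    if n == 0 or m == 0:
        raise ValueError("both traces must be non-empty")
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0

    # Fill the cumulative cost table.
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(reference[i - 1] - sample[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # sample point stretched
                                 cost[i][j - 1],      # reference point stretched
                                 cost[i - 1][j - 1])  # one-to-one match

    # Backtrack from the end, always moving to the cheapest predecessor.
    path = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        candidates = [(cost[i - 1][j - 1], i - 1, j - 1),
                      (cost[i - 1][j], i - 1, j),
                      (cost[i][j - 1], i, j - 1)]
        _, i, j = min(candidates)
    path.reverse()
    return cost[n][m], path
```

With `reference = [0, 0, 1, 5, 1, 0, 0]` and `sample = [0, 0, 0, 1, 5, 1, 0]`, the peak apex at reference index 3 is paired with sample index 4, which recovers the one-scan retention shift. Real chromatograms are much longer, so practical implementations restrict the warp to a band around the diagonal.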

Automated calibration curve generation represents another algorithmic advancement that improves reproducibility. These systems dynamically adjust calibration parameters based on instrument response factors, ensuring that quantification remains accurate despite variations in detector sensitivity. Some implementations incorporate internal standard normalization that automatically compensates for matrix effects and extraction efficiency differences between laboratories.
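Internal standard normalization can be sketched as a calibration on the ratio of analyte peak area to internal standard peak area rather than on the raw analyte area. The example assumes a linear, unweighted response and uses ordinary least squares; the function names and the area values in the usage note are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least-squares slope and intercept."""
    n = len(x)
    if n < 2 or n != len(y):
        raise ValueError("need at least two matched points")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("calibration levels must differ")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def calibrate(concentrations, analyte_areas, istd_areas):
    """Fit response ratio (analyte / internal standard) vs concentration."""
    ratios = [a / s for a, s in zip(analyte_areas, istd_areas)]
    return fit_line(concentrations, ratios)

def quantify(analyte_area, istd_area, slope, intercept):
    """Back-calculate concentration from a measured response ratio."""
    return (analyte_area / istd_area - intercept) / slope
```

Because only the ratio enters the fit, a sample measured as 1500/1000 on one instrument and 750/500 on a less sensitive one back-calculates to the same concentration. The compensation holds only to the extent that the internal standard and the analyte are affected equally.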

Statistical validation frameworks embedded within these algorithms provide objective measures of data quality. These frameworks apply rigorous statistical tests to identify outliers and assess the reliability of results across different instruments and laboratories. Modern implementations often include bootstrapping techniques to estimate confidence intervals for measured concentrations, giving analysts a clear picture of result reliability.
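A percentile bootstrap is one simple way to obtain such a confidence interval: resample the replicate results with replacement many times, compute the mean of each resample, and read the interval from the sorted means. The sketch below assumes independent replicates; the function name, defaults, and fixed seed are illustrative choices, not taken from any specific software.

```python
import random
from statistics import mean

def bootstrap_ci(values, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the mean."""
    if len(values) < 2:
        raise ValueError("need at least two results")
    rng = random.Random(seed)  # fixed seed so the interval is repeatable
    n = len(values)
    means = sorted(mean(rng.choices(values, k=n)) for _ in range(n_resamples))
    lower = means[int((alpha / 2) * n_resamples)]
    upper = means[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper
```

Calling `bootstrap_ci([4.8, 5.1, 5.0, 4.9, 5.2, 5.0, 4.7, 5.3])` returns a lower and upper bound around the sample mean of 5.0. With very few replicates the percentile interval tends to be too narrow, so it should be read as a rough indication.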

Cloud-based collaborative platforms have emerged that implement these algorithms in a standardized environment. These systems allow multiple laboratories to process their data through identical algorithmic pipelines, eliminating variations that would otherwise arise from different software implementations. Such platforms typically maintain version control of algorithms, ensuring that historical data remains comparable even as processing methods evolve.
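One way to keep historical results traceable to the processing that produced them is to store a fingerprint of the pipeline name, version, and parameters alongside each result. The following is a minimal sketch of that idea using a SHA-256 hash of a canonical JSON encoding; the function and the parameter names in the usage note are hypothetical and do not describe any particular platform.

```python
import hashlib
import json

def pipeline_fingerprint(name, version, params):
    """Stable hash of a processing configuration.

    Keys are sorted so that the same settings always give the same
    fingerprint regardless of the order in which they were written.
    """
    payload = json.dumps(
        {"name": name, "version": version, "params": params},
        sort_keys=True,
        separators=(",", ":"),
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()
```

Two laboratories that both record the fingerprint for, say, `pipeline_fingerprint("align", "1.2.0", {"window": 15, "baseline": "linear"})` can confirm that their data went through identical settings, and any change of version or parameter yields a different value.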
