Enhance Replication Accuracy in GC-MS Unknowns Testing

SEP 22, 2025 · 9 MIN READ

GC-MS Replication Background and Objectives

Gas Chromatography-Mass Spectrometry (GC-MS) has evolved significantly since its inception in the 1950s, becoming an indispensable analytical technique in various fields including environmental monitoring, forensic science, pharmaceutical research, and food safety. The technology combines the separation capabilities of gas chromatography with the detection specificity of mass spectrometry, allowing for precise identification and quantification of unknown compounds in complex mixtures.

The evolution of GC-MS technology has been marked by continuous improvements in sensitivity, resolution, and automation. Early systems were limited by manual interpretation requirements and significant variability between analyses. Modern systems incorporate advanced data processing algorithms, automated sample preparation, and enhanced detector technologies, yet replication accuracy in unknowns testing remains a persistent challenge.

Current technological trends in GC-MS focus on miniaturization, portability, and integration with artificial intelligence for improved data interpretation. The convergence of these trends presents new opportunities for enhancing replication accuracy, particularly in the analysis of unknown compounds where traditional reference standards may be unavailable.

The primary objective of this technical research is to investigate and develop methodologies that significantly enhance the replication accuracy in GC-MS unknowns testing. Specifically, we aim to achieve a minimum of 95% consistency in results across multiple analyses of the same unknown samples, regardless of operator expertise or environmental conditions.
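
The document does not specify how "95% consistency" would be measured. One possible operationalization, assumed here purely for illustration, is the fraction of replicate injections of the same unknown that return the same top identification. A minimal Python sketch (the function names and the 0.95 default are hypothetical):

```python
from collections import Counter


def identification_consistency(replicate_ids):
    """Return (consensus_id, agreement) for one unknown peak.

    replicate_ids holds one identification per replicate injection
    (None when no identification was made). Agreement is the fraction
    of replicates that match the most frequent identification.
    """
    if not replicate_ids:
        raise ValueError("at least one replicate is required")
    consensus, n_agree = Counter(replicate_ids).most_common(1)[0]
    return consensus, n_agree / len(replicate_ids)


def meets_target(replicate_ids, target=0.95):
    """True if replicate agreement reaches the (assumed) consistency target."""
    _, agreement = identification_consistency(replicate_ids)
    return agreement >= target


ids = ["limonene"] * 19 + ["alpha-pinene"]
print(identification_consistency(ids))  # ('limonene', 0.95)
print(meets_target(ids))                # True
```

If most replicates return no match, None becomes the consensus; a real protocol would likely treat that case as a failure rather than as agreement.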

Secondary objectives include reducing the time required for comprehensive unknown compound identification, minimizing the need for repeated analyses, and developing standardized protocols that can be implemented across different laboratory environments with diverse GC-MS instrumentation configurations.

The scope encompasses both hardware modifications and software enhancements, with particular emphasis on advanced calibration techniques, innovative sample preparation methodologies, and machine learning algorithms for pattern recognition in mass spectral data. We will also explore the potential of reference-free identification approaches that rely on chemical and physical principles rather than comparison with existing libraries.

Success in this endeavor would represent a significant advancement in analytical chemistry, potentially transforming how unknown compound identification is performed across multiple industries. The enhanced replication accuracy would not only improve scientific reliability but also reduce operational costs associated with repeated analyses and inconclusive results.

This research aligns with the broader industry trend toward greater automation and reproducibility in analytical techniques, addressing a critical gap in current GC-MS capabilities that has persisted despite technological advancements in other aspects of the instrumentation.

Market Demand for Accurate Unknowns Testing

The global market for Gas Chromatography-Mass Spectrometry (GC-MS) unknowns testing has experienced significant growth in recent years, driven primarily by increasing regulatory requirements across multiple industries. The demand for accurate identification of unknown compounds has become critical in environmental monitoring, food safety, pharmaceutical development, forensic analysis, and industrial quality control.

Environmental monitoring agencies worldwide have tightened regulations regarding pollutant detection and quantification, creating a substantial market need for highly accurate GC-MS testing. According to market research, the environmental testing segment alone is projected to grow at a compound annual growth rate of 6.8% through 2027, with accuracy in unknowns testing being a primary concern for regulatory compliance.

The pharmaceutical and biotechnology sectors represent another major market driver, where accurate identification of impurities and degradation products is essential for drug development and quality assurance. These industries demand testing solutions that can reliably detect compounds at increasingly lower concentrations while maintaining reproducibility across multiple analyses.

Food safety testing has emerged as a rapidly expanding application area, particularly as global food supply chains become more complex. Regulatory bodies in North America, Europe, and Asia have implemented stricter standards for contaminant detection, creating demand for more sensitive and accurate GC-MS methodologies. The food safety testing market is expected to reach $29 billion by 2025, with chromatography techniques accounting for a significant portion.

Forensic laboratories face similar challenges, requiring highly accurate and defensible analytical results that can withstand legal scrutiny. The reproducibility of results across different laboratories and instruments has become a critical market requirement in this sector.

Industrial quality control applications, particularly in petrochemical, polymer, and consumer product industries, have also contributed to market growth. These sectors require reliable identification of trace contaminants that could affect product performance or safety.

A key market trend is the growing demand for automated solutions that reduce human error while improving reproducibility. End users increasingly seek integrated systems that combine advanced hardware with sophisticated software algorithms for more consistent unknown compound identification.

The market also shows strong regional variations, with North America and Europe leading in adoption of advanced GC-MS technologies, while Asia-Pacific represents the fastest-growing regional market due to expanding industrial bases and strengthening regulatory frameworks.

Customer pain points consistently highlight the need for improved replication accuracy, reduced false positives/negatives, and better inter-laboratory reproducibility, indicating significant market opportunity for technological solutions addressing these specific challenges.

Technical Challenges in GC-MS Replication

Gas Chromatography-Mass Spectrometry (GC-MS) faces significant technical challenges in achieving consistent replication accuracy when testing unknown compounds. The complexity of sample matrices presents a primary obstacle, as environmental samples often contain numerous interfering substances that can mask target analytes or produce misleading spectral patterns. These matrix effects vary considerably between samples, making standardization difficult and compromising reproducibility.

Instrument variability constitutes another major challenge. Even within the same laboratory, slight differences in column conditions, detector sensitivity, and calibration status can lead to significant variations in retention times and peak intensities. Cross-laboratory comparisons face even greater hurdles, with different instrument manufacturers, models, and configurations producing substantially different results for identical samples.
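
Retention indices are a long-established way to make retention data less dependent on a particular instrument's column length, carrier flow, and temperature ramp. The sketch below computes the linear retention index of van den Dool and Kratz for temperature-programmed runs against an n-alkane ladder. The function name and input format are assumptions, and only interpolation within the ladder is supported.

```python
import bisect


def linear_retention_index(t_x, alkane_rts):
    """Linear (temperature-programmed) retention index of an analyte.

    t_x: analyte retention time (min).
    alkane_rts: {carbon_number: retention_time} for an n-alkane ladder run
    under the same method. The analyte must elute within the ladder.
    """
    carbons = sorted(alkane_rts)
    times = [alkane_rts[c] for c in carbons]
    if any(later <= earlier for earlier, later in zip(times, times[1:])):
        raise ValueError("alkane retention times must increase with carbon number")
    if not times[0] <= t_x <= times[-1]:
        raise ValueError("analyte elutes outside the alkane ladder")

    # Index of the last alkane eluting at or before the analyte.
    i = bisect.bisect_right(times, t_x) - 1
    if i == len(times) - 1:
        return 100.0 * carbons[-1]  # co-elutes with the last alkane

    n, big_n = carbons[i], carbons[i + 1]
    t_n, t_big_n = times[i], times[i + 1]
    # RI = 100 * [n + (N - n) * (t_x - t_n) / (t_N - t_n)]
    return 100.0 * (n + (big_n - n) * (t_x - t_n) / (t_big_n - t_n))


ladder = {10: 5.0, 11: 6.0, 12: 7.0}
print(linear_retention_index(6.5, ladder))  # 1150.0
```

Because the index is interpolated between alkanes run under the same conditions, a uniform shift of all retention times leaves it unchanged. It does not, however, compensate for differences in stationary phase.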

Method standardization remains inadequate across the industry. While standard operating procedures exist, they often lack sufficient detail regarding critical parameters such as temperature programming, carrier gas flow rates, and MS tuning specifications. This procedural variability introduces systematic errors that undermine replication efforts, particularly when dealing with unknown compounds that lack reference standards.

Data processing algorithms represent a frequently overlooked source of variability. Different software packages employ distinct peak detection algorithms, baseline correction methods, and spectral deconvolution techniques. These computational differences can significantly alter the final analytical results, especially for complex samples with overlapping peaks or trace-level compounds.
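
To illustrate how processing parameters alone can change a reported result, the sketch below applies a deliberately simple peak picker (a rolling-minimum baseline plus a local-maximum test) to one synthetic trace at two detection thresholds. It is not the algorithm of any particular software package; the function and parameter names are hypothetical.

```python
def detect_peaks(signal, threshold, half_window=5):
    """Return indices of local maxima at least `threshold` above a
    rolling-minimum baseline.

    The baseline at each point is the minimum of the signal within
    +/- half_window points, a crude stand-in for real baseline correction.
    """
    n = len(signal)
    baseline = [min(signal[max(0, i - half_window): i + half_window + 1]) for i in range(n)]
    corrected = [s - b for s, b in zip(signal, baseline)]
    peaks = []
    for i in range(1, n - 1):
        is_apex = corrected[i] > corrected[i - 1] and corrected[i] >= corrected[i + 1]
        if is_apex and corrected[i] >= threshold:
            peaks.append(i)
    return peaks


# Synthetic trace: a small peak at index 4 and a large one at index 13.
trace = [10, 10, 10, 12, 30, 12, 10, 10, 10, 10, 10, 10, 15, 80, 15, 10, 10, 10]
print(detect_peaks(trace, threshold=10))  # [4, 13]
print(detect_peaks(trace, threshold=50))  # [13]
```

The small peak is reported at one threshold and silently dropped at the other, which is why replication depends on fixing processing parameters as well as instrument settings.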

Reference libraries used for compound identification present their own challenges. Spectral databases may contain outdated entries, insufficient metadata, or spectra acquired under different instrumental conditions. The quality and comprehensiveness of these libraries directly impact identification accuracy, with significant variations observed between commercial and open-source databases.

Environmental factors such as laboratory temperature, humidity, and power supply stability introduce additional variability. These parameters can affect instrument performance in subtle ways that accumulate throughout the analytical process, resulting in poor day-to-day reproducibility even within the same laboratory setting.

Human factors further complicate replication efforts. Analyst experience, training, and individual interpretation of ambiguous results contribute to variability. This is particularly problematic for unknown compound identification, where subjective judgment often plays a role in confirming tentative identifications based on library matches and retention indices.

Quality control procedures, while designed to ensure reliability, vary significantly between laboratories. Differences in calibration frequency, internal standard selection, and acceptance criteria for quality control samples directly impact the comparability of results across different testing facilities.
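
Making acceptance criteria explicit and machine-checkable is one way to reduce this variation. The sketch below checks replicate injections of one compound against a retention-time window and a peak-area %RSD limit. Both limits are placeholder values, not recommendations, and the function names are hypothetical.

```python
from statistics import mean, stdev


def percent_rsd(values):
    """Relative standard deviation in percent (sample standard deviation)."""
    return 100.0 * stdev(values) / mean(values)


def check_replicates(retention_times, areas, rt_tolerance=0.05, max_rsd=15.0):
    """Return failure messages for replicate injections; empty means pass.

    rt_tolerance (min) and max_rsd (%) are placeholder acceptance criteria;
    each laboratory's SOP defines its own values.
    """
    if len(retention_times) < 2 or len(areas) < 2:
        raise ValueError("at least two replicate injections are required")
    failures = []
    rt_spread = max(retention_times) - min(retention_times)
    if rt_spread > rt_tolerance:
        failures.append(f"retention time spread {rt_spread:.3f} min > {rt_tolerance} min")
    rsd = percent_rsd(areas)
    if rsd > max_rsd:
        failures.append(f"peak area RSD {rsd:.1f}% > {max_rsd}%")
    return failures


print(check_replicates([8.412, 8.415, 8.409], [1.02e6, 0.98e6, 1.00e6]))  # []
print(check_replicates([8.41, 8.52, 8.40], [1.0e6, 0.5e6, 1.5e6]))
```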

Current Approaches to Enhance Replication Accuracy

01 Calibration and standardization methods for GC-MS accuracy

Various calibration and standardization methods are employed to enhance the replication accuracy of GC-MS analysis. These include the use of internal standards, calibration curves, and reference materials to ensure consistent and reliable results. Proper calibration procedures help minimize systematic errors and improve the quantitative precision of GC-MS measurements, leading to better reproducibility across different instruments and laboratories.

- Calibration methods for improving GC-MS replication accuracy: Various calibration techniques are employed to enhance the replication accuracy of GC-MS analysis. These methods include internal standard calibration, multi-point calibration curves, and automated calibration systems that compensate for instrumental drift. Regular calibration with certified reference materials ensures consistent and reproducible results across multiple analyses, significantly improving the quantitative precision and accuracy of GC-MS measurements.

- Advanced data processing algorithms for GC-MS result consistency: Sophisticated data processing algorithms play a crucial role in ensuring replication accuracy in GC-MS analysis. These algorithms include peak deconvolution methods, automated baseline correction, noise reduction techniques, and advanced spectral matching algorithms. Machine learning approaches are increasingly being applied to improve pattern recognition and reduce variability in results, enabling more consistent identification and quantification of compounds across multiple analyses.

- Hardware modifications and innovations for enhanced reproducibility: Hardware improvements in GC-MS systems significantly contribute to replication accuracy. These include temperature-controlled sample introduction systems, high-precision flow controllers, improved ion source designs, and enhanced detector technologies. Specialized sample preparation devices and automated sample handling systems minimize human error and sample degradation, while advanced column technologies with improved stationary phases provide more consistent separation performance.

- Quality control protocols for ensuring GC-MS measurement consistency: Comprehensive quality control protocols are essential for maintaining GC-MS replication accuracy. These include system suitability tests, regular performance verification, blank runs, duplicate analyses, and the use of quality control samples. Statistical process control methods help monitor system performance over time, while standardized operating procedures ensure consistent analytical conditions. Interlaboratory comparison studies validate method reproducibility across different instruments and operators.

- Sample preparation techniques affecting GC-MS replication precision: Sample preparation methodologies significantly impact the replication accuracy of GC-MS analyses. Standardized extraction procedures, derivatization techniques, and sample clean-up methods help reduce matrix effects and interference. Automated sample preparation systems minimize variability introduced by manual handling, while storage condition optimization prevents sample degradation. The development of novel sample preparation materials and techniques continues to improve the consistency of analyte recovery and detection.
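
Internal standard calibration, the first technique in the list above, can be sketched as follows: the analyte-to-internal-standard area ratio is regressed against concentration, and unknowns are back-calculated from their own ratio. This is a minimal illustration with made-up numbers and hypothetical function names, using unweighted ordinary least squares.

```python
def fit_line(x, y):
    """Ordinary least-squares slope and intercept."""
    n = len(x)
    if n < 2:
        raise ValueError("need at least two calibration levels")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx


def calibrate_internal_standard(concentrations, analyte_areas, is_areas):
    """Fit response ratio (analyte area / internal-standard area) vs concentration."""
    ratios = [a / s for a, s in zip(analyte_areas, is_areas)]
    return fit_line(concentrations, ratios)


def quantify(analyte_area, is_area, slope, intercept):
    """Back-calculate the concentration of an unknown from its response ratio."""
    return (analyte_area / is_area - intercept) / slope


# Invented calibration data: IS areas drift between injections, but the
# analyte/IS ratio stays proportional to concentration.
conc = [1.0, 2.0, 5.0, 10.0]
is_areas = [100000, 95000, 105000, 98000]
analyte_areas = [20000, 38000, 105000, 196000]
slope, intercept = calibrate_internal_standard(conc, analyte_areas, is_areas)
print(round(quantify(60000, 100000, slope, intercept), 3))  # 3.0
```

Dividing by the internal-standard area cancels injection-to-injection response changes that affect both compounds equally, which is what makes the approach useful for replication. Weighted or quadratic fits may suit wide concentration ranges better.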

02 Advanced data processing algorithms for improved replication

Sophisticated data processing algorithms play a crucial role in enhancing GC-MS replication accuracy. These algorithms include peak detection, baseline correction, spectral deconvolution, and noise reduction techniques. By implementing advanced computational methods, analysts can extract more reliable information from raw GC-MS data, leading to more consistent and reproducible results even when dealing with complex sample matrices or overlapping peaks.

03 Sample preparation techniques affecting replication accuracy

The methods used for sample preparation significantly impact GC-MS replication accuracy. Techniques such as derivatization, extraction, concentration, and clean-up procedures must be standardized to ensure consistent results. Proper sample handling, storage conditions, and preparation protocols minimize variability in the analytical process, thereby improving the reproducibility of GC-MS analyses across different samples and testing occasions.

04 Instrument design and hardware modifications for enhanced accuracy

Innovations in GC-MS instrument design and hardware components contribute significantly to replication accuracy. These include improvements in ion source stability, detector sensitivity, column technology, and temperature control systems. Hardware modifications such as specialized injection systems, enhanced vacuum technology, and more precise mass analyzers help reduce instrumental variability and drift, resulting in more consistent analytical performance and better replication of results.

05 Quality control protocols and validation procedures

Comprehensive quality control protocols and validation procedures are essential for ensuring GC-MS replication accuracy. These include system suitability tests, regular performance verification, proficiency testing, and uncertainty measurement. Implementing robust quality assurance measures helps identify and address factors affecting reproducibility, such as instrument drift, contamination, or operator variability, thereby maintaining consistent analytical performance over time.

Leading Manufacturers and Research Institutions

The GC-MS unknowns testing market is currently in a growth phase, with increasing demand for higher replication accuracy across pharmaceutical, environmental, and forensic applications. The global market size is estimated to exceed $2 billion, driven by regulatory requirements and quality control needs. Leading technology providers include Shimadzu Corp. and Agilent Technologies, who offer advanced GC-MS systems with improved reproducibility features. Companies like Roche Diagnostics and F. Hoffmann-La Roche are investing in enhanced analytical methodologies, while specialized firms such as Cerno Bioscience focus on calibration software solutions. Academic institutions including Johns Hopkins University and Zhejiang University contribute significant research advancements, creating a competitive landscape where precision and reliability are key differentiators in this maturing but still-evolving technology sector.

Shimadzu Corp.

Technical Solution: Shimadzu Corporation has developed advanced GC-MS systems with proprietary technologies to enhance replication accuracy in unknowns testing. Their approach combines hardware innovations with sophisticated software solutions. The hardware includes high-sensitivity quadrupole mass analyzers with improved ion optics that maintain sensitivity while reducing noise, and temperature-controlled ion sources that minimize drift between analyses. Their Smart MRM technology optimizes dwell times automatically for each compound, ensuring consistent detection across replicates. Shimadzu's Advanced Peak Detection Algorithm (APDA) employs machine learning to distinguish true peaks from noise even in complex matrices, significantly improving reproducibility in untargeted analyses. Their LabSolutions software incorporates automated quality control checks that flag deviations in retention time, mass accuracy, and response factors between replicate injections. Additionally, Shimadzu has implemented internal standard normalization protocols that compensate for matrix effects and instrument variations, further enhancing quantitative reproducibility.

Strengths: Shimadzu's integrated hardware-software approach provides comprehensive solutions for replication challenges. Their systems excel in maintaining calibration stability over extended periods, reducing the need for frequent recalibration. Weaknesses: Their advanced systems typically come with higher initial costs compared to competitors, and the proprietary nature of some algorithms limits customization options for specialized research applications.

Roche Diagnostics GmbH

Technical Solution: Roche Diagnostics has developed comprehensive solutions for enhancing replication accuracy in GC-MS unknowns testing, particularly focused on clinical and pharmaceutical applications. Their approach integrates automated sample preparation systems with sophisticated data analysis software to minimize variability throughout the analytical workflow. Roche's cobas® systems incorporate standardized protocols for sample extraction and preparation that significantly reduce operator-dependent variations. Their proprietary calibration technologies employ multiple internal standards strategically selected to cover different chemical classes and retention time ranges, ensuring consistent response factors across the chromatographic run. Roche has implemented advanced deconvolution algorithms that can reliably separate co-eluting compounds, improving the reproducibility of peak identification and quantification in complex matrices. Their software includes automated system suitability tests that verify instrument performance before each batch analysis, ensuring that any instrumental drift is detected and corrected before it affects sample results. Additionally, Roche has developed specialized reference materials with certified concentrations of both common and rare compounds, allowing laboratories to validate their entire analytical workflow regularly.

Strengths: Roche's integrated workflow approach addresses variability at each step of the analytical process, from sample preparation to data analysis. Their solutions are particularly robust in regulated environments requiring strict compliance with quality standards. Weaknesses: Their systems are often optimized for targeted analysis of known compounds rather than true unknowns discovery, and the proprietary nature of some components limits flexibility for highly specialized research applications.

Key Innovations in GC-MS Technology

Gas chromatography-mass spectrometer spectrogram matching method

Patent · Inactive · CN104504706A

Innovation

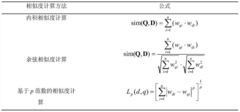

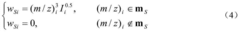

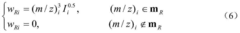

- A new mass spectrum matching method is adopted. Unknown-substance spectra and standard mass spectra are screened, peak intensities are scaled, and similarity is calculated with a vector space model: each mass spectrum is represented as a vector, and a p-norm-based similarity formula is applied, which improves matching accuracy.
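
The patent summary does not give its exact formula. The sketch below is one plausible reading of the described approach, assumed for illustration: spectra become vectors over the union of their m/z values, intensities are square-root scaled, each vector is normalized to unit p-norm, and similarity is defined as 1 minus half the p-norm distance. The function names and the scaling exponent are assumptions.

```python
def p_norm(vector, p):
    """The p-norm (sum |x|^p)^(1/p) of a vector."""
    return sum(abs(x) ** p for x in vector) ** (1.0 / p)


def pnorm_similarity(spec_a, spec_b, p=2.0, intensity_power=0.5):
    """Similarity in [0, 1] between two spectra given as {m/z: intensity}.

    Intensities are raised to intensity_power (square-root scaling by
    default) and each vector is scaled to unit p-norm, so only relative
    abundances matter.
    """
    if p < 1:
        raise ValueError("p must be >= 1 for a valid norm")
    mz_axis = sorted(set(spec_a) | set(spec_b))
    u = [spec_a.get(mz, 0.0) ** intensity_power for mz in mz_axis]
    v = [spec_b.get(mz, 0.0) ** intensity_power for mz in mz_axis]
    norm_u, norm_v = p_norm(u, p), p_norm(v, p)
    if norm_u == 0 or norm_v == 0:
        return 0.0
    u = [x / norm_u for x in u]
    v = [x / norm_v for x in v]
    # Triangle inequality: ||u - v||_p <= ||u||_p + ||v||_p = 2, so the result is in [0, 1].
    return 1.0 - p_norm([a - b for a, b in zip(u, v)], p) / 2.0


unknown = {41: 30, 55: 40, 69: 100, 83: 20}
reference = {41: 28, 55: 42, 69: 100, 83: 18}
unrelated = {43: 100, 57: 80, 71: 40}
print(pnorm_similarity(unknown, reference) > pnorm_similarity(unknown, unrelated))  # True
```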

Gas chromatography-mass spectrogram retrieval method based on vector model

Patent · Inactive · CN104572910A

Innovation

- A mass spectrum retrieval method based on a vector model is adopted. Each mass spectrum is represented as a vector, and spectral similarity is calculated with a p-norm-based measure that incorporates a peak intensity scaling factor; standard mass spectra are screened before comparison to improve retrieval efficiency.
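
The retrieval idea of screening the library first and scoring only the survivors can be illustrated as below. As assumptions for this sketch, screening keeps library entries that share at least `min_shared` of the query's five most intense peaks, and ranking uses cosine similarity on square-root-scaled intensities rather than the patent's p-norm measure. Library contents and compound names are invented.

```python
import math


def top_peaks(spectrum, n=5):
    """Set of m/z values for the n most intense peaks."""
    ranked = sorted(spectrum.items(), key=lambda item: item[1], reverse=True)
    return {mz for mz, _ in ranked[:n]}


def cosine_sqrt(spec_a, spec_b):
    """Cosine similarity between square-root-scaled intensity vectors."""
    mz_axis = set(spec_a) | set(spec_b)
    # Dot product: sum of sqrt(a) * sqrt(b) over the shared m/z axis.
    dot = sum(math.sqrt(spec_a.get(mz, 0) * spec_b.get(mz, 0)) for mz in mz_axis)
    # ||sqrt(a)||_2 = sqrt(sum(a)), so the product of norms is sqrt(sum(a) * sum(b)).
    norm = math.sqrt(sum(spec_a.values()) * sum(spec_b.values()))
    return dot / norm if norm else 0.0


def search_library(query, library, n_top=5, min_shared=3, max_hits=3):
    """Screen entries by shared top peaks, then rank the survivors by similarity."""
    query_top = top_peaks(query, n_top)
    candidates = [
        name for name, spectrum in library.items()
        if len(query_top & top_peaks(spectrum, n_top)) >= min_shared
    ]
    scored = sorted(
        ((cosine_sqrt(query, library[name]), name) for name in candidates),
        reverse=True,
    )
    return [(name, round(score, 3)) for score, name in scored[:max_hits]]


# Invented spectra ({m/z: relative intensity}) for illustration only.
library = {
    "compound_A": {41: 28, 55: 42, 69: 100, 83: 18, 97: 12},
    "compound_B": {43: 100, 57: 80, 71: 40, 85: 20, 99: 5},
    "compound_C": {41: 50, 55: 100, 69: 30, 83: 60, 111: 10},
}
query = {41: 30, 55: 40, 69: 100, 83: 20, 97: 10}
print(search_library(query, library))  # [('compound_A', 0.999), ('compound_C', 0.856)]
```

compound_B is never scored because it shares none of the query's top peaks; on a large library, this kind of prefilter is where the efficiency gain comes from.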

Standardization Protocols for GC-MS Testing

Standardization protocols for GC-MS testing represent a critical framework for ensuring consistent and reliable results in unknown compound identification. These protocols establish systematic approaches that minimize variability across different laboratories, instruments, and operators, thereby enhancing the replication accuracy that is essential for scientific validity and regulatory compliance.

The foundation of effective standardization begins with sample preparation guidelines that specify precise methods for extraction, concentration, and derivatization when applicable. These guidelines must account for matrix effects and potential interferences that could compromise analytical outcomes. Detailed procedures for solvent selection, internal standard addition, and sample storage conditions form the cornerstone of reproducible testing.
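
Recovery of a known spike is a common way to check that a preparation step behaves consistently from batch to batch. A minimal sketch of the standard spike-recovery calculation follows; the 70 to 120% acceptance window and the function names are placeholder assumptions, since acceptable ranges depend on the method and the applicable guidance.

```python
def spike_recovery(measured_spiked, measured_unspiked, amount_added):
    """Percent recovery of a known spike: 100 * (spiked - unspiked) / added."""
    if amount_added <= 0:
        raise ValueError("amount_added must be positive")
    return 100.0 * (measured_spiked - measured_unspiked) / amount_added


def recovery_acceptable(recovery_percent, low=70.0, high=120.0):
    """Placeholder acceptance window; real limits come from the validated method."""
    return low <= recovery_percent <= high


r = spike_recovery(measured_spiked=14.5, measured_unspiked=5.0, amount_added=10.0)
print(r, recovery_acceptable(r))  # 95.0 True
```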

Instrument calibration represents another crucial component of standardization protocols. Regular calibration using certified reference materials ensures that mass spectrometers maintain optimal performance characteristics. Protocols should specify frequency of calibration, acceptable deviation parameters, and corrective actions when instruments fall outside calibration specifications. This systematic approach to instrument management significantly reduces inter-laboratory variation.
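
As an example of a calibration acceptance check, the sketch below compares measured mass assignments for the perfluorotributylamine (PFTBA) tuning ions at m/z 69, 219, and 502 with their nominal values. The ±0.1 tolerance and the function name are assumptions for illustration, not a manufacturer specification.

```python
# Nominal m/z of PFTBA ions commonly used for quadrupole tuning.
PFTBA_TUNE_IONS = (69, 219, 502)


def mass_axis_deviations(measured, tolerance=0.1):
    """Return {nominal m/z: deviation} for ions outside the tolerance.

    measured maps each nominal tune ion to its measured apex m/z.
    tolerance (in m/z units) is a placeholder, not a vendor specification.
    """
    out_of_spec = {}
    for nominal in PFTBA_TUNE_IONS:
        if nominal not in measured:
            raise KeyError(f"no measurement for m/z {nominal}")
        deviation = measured[nominal] - nominal
        if abs(deviation) > tolerance:
            out_of_spec[nominal] = round(deviation, 3)
    return out_of_spec


tune_report = {69: 69.02, 219: 218.97, 502: 502.25}
print(mass_axis_deviations(tune_report))  # {502: 0.25}
```

An empty result means every tune ion is within tolerance; a non-empty one would trigger the corrective action the protocol specifies.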

Method validation requirements constitute a third pillar of standardization. These requirements typically include specifications for determining limits of detection, quantification ranges, precision metrics, and recovery rates. Validation protocols should also address matrix-specific challenges and outline procedures for method transfer between different GC-MS systems to maintain consistency across platforms.
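
For limits of detection and quantification, one widely used approach (described in ICH Q2) estimates LOD = 3.3σ/S and LOQ = 10σ/S, where S is the calibration slope and σ is the residual standard deviation of the regression line. A minimal sketch with invented calibration data and a hypothetical function name:

```python
import math


def lod_loq(concentrations, responses):
    """Estimate LOD and LOQ from a linear calibration.

    sigma is the residual standard deviation of the least-squares line
    (n - 2 degrees of freedom); S is the fitted slope.
    """
    n = len(concentrations)
    if n < 3:
        raise ValueError("need at least three calibration levels")
    mx = sum(concentrations) / n
    my = sum(responses) / n
    sxx = sum((x - mx) ** 2 for x in concentrations)
    sxy = sum((x - mx) * (y - my) for x, y in zip(concentrations, responses))
    slope = sxy / sxx
    intercept = my - slope * mx
    residuals = [y - (slope * x + intercept) for x, y in zip(concentrations, responses)]
    sigma = math.sqrt(sum(r * r for r in residuals) / (n - 2))
    return 3.3 * sigma / slope, 10.0 * sigma / slope


conc = [1.0, 2.0, 3.0, 4.0, 5.0]   # illustrative concentration units
resp = [2.1, 3.9, 6.2, 7.8, 10.0]  # invented detector responses
lod, loq = lod_loq(conc, resp)
print(f"LOD = {lod:.3f}, LOQ = {loq:.3f}")  # LOD = 0.292, LOQ = 0.884
```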

Quality control measures embedded within standardization protocols provide ongoing assurance of analytical integrity. These measures include the regular analysis of blanks, duplicates, and quality control samples throughout analytical batches. Statistical process control techniques help monitor system performance over time and identify trends that might indicate developing problems before they affect test results.
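
Statistical process control is often implemented as a control chart on a QC sample's response. The sketch below derives the center line and standard deviation from a baseline period and flags two common Westgard rules: 1_3s (one result beyond 3 standard deviations) and 2_2s (two consecutive results beyond 2 standard deviations on the same side). The data and function names are illustrative.

```python
from statistics import mean, stdev


def control_limits(baseline_results):
    """Center line and standard deviation from an in-control baseline period."""
    return mean(baseline_results), stdev(baseline_results)


def westgard_flags(results, center, sd):
    """Return (index, rule) pairs for 1_3s and 2_2s violations."""
    z = [(value - center) / sd for value in results]
    flags = []
    for i, zi in enumerate(z):
        if abs(zi) > 3:
            flags.append((i, "1_3s"))
        if i > 0 and ((z[i - 1] > 2 and zi > 2) or (z[i - 1] < -2 and zi < -2)):
            flags.append((i, "2_2s"))
    return flags


baseline = [100, 102, 98, 101, 99, 100, 103, 97]  # invented QC responses
center, sd = control_limits(baseline)             # center = 100, sd = 2.0
new_runs = [101, 105, 105, 107, 99]
print(westgard_flags(new_runs, center, sd))
# [(2, '2_2s'), (3, '1_3s'), (3, '2_2s')]
```

The 2_2s flag at index 2 appears before any single result breaches the 3 standard deviation limit, which is the point of trend rules: they catch developing drift early.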

Data processing standardization is equally important for replication accuracy. Protocols should define consistent approaches to peak integration, library searching parameters, and match quality thresholds. Standardized reporting formats ensure that critical information about analytical conditions, quality control outcomes, and confidence levels accompanies all test results, facilitating meaningful comparison across different testing events.
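
One way to make match thresholds reproducible is to encode them as a fixed decision rule that combines library match quality with retention index agreement. In the sketch below, the 0 to 999 match-factor scale, the 850/700 cut-offs, the ±20 index-unit tolerance, and the tier names are all hypothetical placeholders that a laboratory would replace with validated values.

```python
def identification_confidence(match_factor, ri_measured=None, ri_library=None,
                              mf_high=850, mf_tentative=700, ri_tolerance=20.0):
    """Map a library match to a reporting tier using fixed, documented thresholds.

    match_factor: library search score on an assumed 0-999 scale.
    A "high" tier requires both a strong spectral match and retention
    index agreement; without an RI check the result is capped at "tentative".
    """
    ri_agrees = (
        ri_measured is not None
        and ri_library is not None
        and abs(ri_measured - ri_library) <= ri_tolerance
    )
    if match_factor >= mf_high and ri_agrees:
        return "high"
    if match_factor >= mf_tentative:
        return "tentative"
    return "unidentified"


print(identification_confidence(912, ri_measured=1152, ri_library=1148))  # high
print(identification_confidence(912))                                    # tentative
```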

Proficiency testing programs serve as external validation mechanisms for standardization protocols. Regular participation in these programs allows laboratories to benchmark their performance against peers and identify opportunities for improvement. Results from proficiency testing provide objective evidence of a laboratory's ability to generate accurate and reproducible results when analyzing unknown compounds.
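
Proficiency testing results are commonly scored with a z-score, z = (x - x_pt) / σ_pt, where x is the laboratory's result, x_pt the assigned value, and σ_pt the standard deviation for proficiency assessment. Under the conventional interpretation (ISO/IEC 17043, ISO 13528), |z| ≤ 2 is satisfactory, 2 < |z| < 3 is questionable, and |z| ≥ 3 is unsatisfactory. A minimal sketch with a hypothetical function name:

```python
def pt_z_score(result, assigned_value, sigma_pt):
    """Proficiency-testing z-score and its conventional interpretation."""
    if sigma_pt <= 0:
        raise ValueError("sigma_pt must be positive")
    z = (result - assigned_value) / sigma_pt
    if abs(z) <= 2.0:
        verdict = "satisfactory"
    elif abs(z) < 3.0:
        verdict = "questionable"
    else:
        verdict = "unsatisfactory"
    return z, verdict


print(pt_z_score(result=12.5, assigned_value=10.0, sigma_pt=1.0))  # (2.5, 'questionable')
```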

The foundation of effective standardization begins with sample preparation guidelines that specify precise methods for extraction, concentration, and derivatization when applicable. These guidelines must account for matrix effects and potential interferences that could compromise analytical outcomes. Detailed procedures for solvent selection, internal standard addition, and sample storage conditions form the cornerstone of reproducible testing.

Instrument calibration is another crucial component of standardization protocols. Regular calibration with certified reference materials helps keep the GC-MS system within its performance specifications. Protocols should specify the calibration frequency, acceptable deviation limits, and the corrective actions to take when an instrument falls outside specification. Managing instruments this systematically can substantially reduce inter-laboratory variation.
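
As a rough illustration of "acceptable deviation parameters" and "corrective actions", the sketch below fits a linear calibration line and then back-calculates a check standard against a percent-difference limit. It assumes a linear response over the calibrated range; the 20% limit, the function names, and the data are placeholders, not values from any standard.

```python
from statistics import fmean


def fit_line(conc, response):
    """Ordinary least-squares calibration line: response = slope * conc + intercept."""
    mx, my = fmean(conc), fmean(response)
    sxx = sum((x - mx) ** 2 for x in conc)
    sxy = sum((x - mx) * (y - my) for x, y in zip(conc, response))
    slope = sxy / sxx
    return slope, my - slope * mx


def r_squared(conc, response, slope, intercept):
    """Coefficient of determination of the fitted calibration line."""
    my = fmean(response)
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(conc, response))
    ss_tot = sum((y - my) ** 2 for y in response)
    return 1.0 - ss_res / ss_tot


def check_standard(certified_conc, response, slope, intercept, max_pct_diff=20.0):
    """Back-calculate a check standard and decide whether corrective action is needed.

    max_pct_diff is a placeholder acceptance limit, not a regulatory value.
    """
    found = (response - intercept) / slope
    pct_diff = 100.0 * (found - certified_conc) / certified_conc
    action = "accept" if abs(pct_diff) <= max_pct_diff else "recalibrate"
    return pct_diff, action


if __name__ == "__main__":
    levels = [1.0, 2.0, 5.0, 10.0, 20.0]   # illustrative reference-material levels
    areas = [2.1, 3.9, 10.2, 19.8, 40.1]   # illustrative responses
    m, b = fit_line(levels, areas)
    print(f"slope={m:.3f} intercept={b:.3f} r2={r_squared(levels, areas, m, b):.4f}")
    print(check_standard(10.0, 20.5, m, b))
    print(check_standard(10.0, 27.0, m, b))
```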

Method validation requirements constitute a third pillar of standardization. These requirements typically include specifications for determining limits of detection, quantification ranges, precision metrics, and recovery rates. Validation protocols should also address matrix-specific challenges and outline procedures for method transfer between different GC-MS systems to maintain consistency across platforms.
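
The validation metrics named above reduce to short calculations. The sketch below uses the ICH Q2-style estimates LOD = 3.3σ/S and LOQ = 10σ/S, taking σ as the standard deviation of blank responses and S as the calibration slope, plus percent RSD for precision and spike recovery. Other σ estimates (for example the residual standard deviation of the calibration line) are also used in practice; the function names and data here are illustrative.

```python
from statistics import mean, stdev


def lod_loq(blank_responses, slope):
    """LOD = 3.3*sigma/S and LOQ = 10*sigma/S, with sigma = SD of blank responses."""
    sigma = stdev(blank_responses)
    return 3.3 * sigma / slope, 10.0 * sigma / slope


def percent_rsd(replicates):
    """Precision as relative standard deviation, in percent."""
    return 100.0 * stdev(replicates) / mean(replicates)


def percent_recovery(spiked_result, unspiked_result, amount_added):
    """Recovery of a known spike added to the sample matrix, in percent."""
    return 100.0 * (spiked_result - unspiked_result) / amount_added


if __name__ == "__main__":
    lod, loq = lod_loq([0.8, 1.1, 0.9, 1.2, 1.0], slope=2.0)   # illustrative data
    print(f"LOD={lod:.3f} LOQ={loq:.3f}")
    print(f"RSD={percent_rsd([9.8, 10.1, 10.0, 9.9, 10.2]):.2f}%")
    print(f"recovery={percent_recovery(14.6, 5.0, 10.0):.1f}%")
```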

Quality control measures embedded within standardization protocols provide ongoing assurance of analytical integrity. These measures include the regular analysis of blanks, duplicates, and quality control samples throughout analytical batches. Statistical process control techniques help monitor system performance over time and identify trends that might indicate developing problems before they affect test results.
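
One common form of statistical process control is a Shewhart chart for a QC sample, with limits derived from an in-control baseline. The sketch below checks three rules of the kind used in Westgard and Western Electric schemes: one point beyond ±3 SD, two consecutive points beyond the same ±2 SD limit, and a run of points on one side of the center line. The rule set and the run length of 8 are assumptions; laboratories choose their own.

```python
from statistics import mean, stdev


def control_limits(baseline_qc):
    """Center line and standard deviation estimated from an in-control baseline period."""
    return mean(baseline_qc), stdev(baseline_qc)


def check_qc_run(qc_values, center, sd, run_length=8):
    """Return (index, rule) flags for a sequence of QC results on a Shewhart chart.

    Rules: one point beyond +/-3 SD; two consecutive points beyond the same +/-2 SD limit;
    run_length consecutive points on the same side of the center line (a drift signal).
    """
    flags = []
    run, last_side = 0, 0
    for i, x in enumerate(qc_values):
        z = (x - center) / sd
        if abs(z) > 3.0:
            flags.append((i, "beyond 3 SD action limit"))
        if i > 0:
            z_prev = (qc_values[i - 1] - center) / sd
            if (z > 2.0 and z_prev > 2.0) or (z < -2.0 and z_prev < -2.0):
                flags.append((i, "two consecutive beyond the same 2 SD warning limit"))
        side = (z > 0) - (z < 0)  # +1 above, -1 below, 0 exactly on the center line
        if side != 0 and side == last_side:
            run += 1
        else:
            run = 1 if side != 0 else 0
        last_side = side
        if run == run_length:
            flags.append((i, f"{run_length} consecutive on one side of center"))
    return flags


if __name__ == "__main__":
    center, sd = control_limits([100.2, 99.5, 100.8, 99.9, 100.4, 99.6, 100.1, 99.7])
    batch = [100.3, 100.6, 100.9, 101.0, 100.7, 100.8, 101.1, 100.6, 104.0]  # illustrative
    for idx, rule in check_qc_run(batch, center, sd):
        print(idx, rule)
```

The run rule is what catches gradual drift before any single result crosses an action limit, which is the "developing problems" case described above.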

Data processing standardization is equally important for replication accuracy. Protocols should define consistent approaches to peak integration, library searching parameters, and match quality thresholds. Standardized reporting formats ensure that critical information about analytical conditions, quality control outcomes, and confidence levels accompanies all test results, facilitating meaningful comparison across different testing events.
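
A match quality threshold only standardizes results if the scoring itself is fixed. The sketch below scores unit-mass spectra with a square-root-weighted cosine similarity on a 0-999 scale and accepts the best hit only above a threshold. The weighting, the 800 threshold, and the function names are assumptions; commercial library-search scores use their own weighting schemes, so these numbers will not reproduce vendor match factors.

```python
import math
from typing import Dict, Optional, Tuple

Spectrum = Dict[int, float]  # nominal m/z -> intensity


def cosine_match(a: Spectrum, b: Spectrum, intensity_power: float = 0.5) -> float:
    """Cosine similarity of two unit-mass spectra, scaled to 0-999.

    Intensities are raised to intensity_power (square root by default) so that a
    few dominant ions do not swamp the comparison; this weighting is an assumption.
    """
    mzs = sorted(set(a) | set(b))
    va = [a.get(mz, 0.0) ** intensity_power for mz in mzs]
    vb = [b.get(mz, 0.0) ** intensity_power for mz in mzs]
    dot = sum(x * y for x, y in zip(va, vb))
    norm = math.sqrt(sum(x * x for x in va)) * math.sqrt(sum(y * y for y in vb))
    return 0.0 if norm == 0.0 else 999.0 * dot / norm


def best_hit(unknown: Spectrum, library: Dict[str, Spectrum],
             threshold: float = 800.0) -> Tuple[Optional[str], float]:
    """Return (name, score) of the best library hit, or (None, score) if below threshold."""
    if not library:
        return None, 0.0
    score, name = max((cosine_match(unknown, spec), name) for name, spec in library.items())
    return (name if score >= threshold else None), score


if __name__ == "__main__":
    library = {  # illustrative entries, not real reference spectra
        "compound_A": {41: 30.0, 43: 100.0, 58: 45.0},
        "compound_B": {57: 100.0, 71: 60.0, 85: 35.0},
    }
    print(best_hit({41: 28.0, 43: 100.0, 58: 50.0, 59: 3.0}, library))
```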

Proficiency testing programs serve as external validation mechanisms for standardization protocols. Regular participation in these programs allows laboratories to benchmark their performance against peers and identify opportunities for improvement. Results from proficiency testing provide objective evidence of a laboratory's ability to generate accurate and reproducible results when analyzing unknown compounds.
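
Proficiency testing results are often reported as z-scores, z = (x - x_pt) / σ_pt, where x_pt is the assigned value and σ_pt the standard deviation for proficiency assessment set by the scheme. The bands below (|z| ≤ 2 satisfactory, 2 < |z| < 3 questionable, |z| ≥ 3 unsatisfactory) follow the conventional interpretation described in ISO 13528; individual schemes may use other statistics. Function names and round values are illustrative.

```python
def z_score(lab_result: float, assigned_value: float, sigma_pt: float) -> float:
    """z = (x - x_pt) / sigma_pt for one laboratory result in a proficiency round."""
    return (lab_result - assigned_value) / sigma_pt


def classify(z: float) -> str:
    """Conventional interpretation of |z| used in many proficiency testing schemes."""
    if abs(z) <= 2.0:
        return "satisfactory"
    if abs(z) < 3.0:
        return "questionable"
    return "unsatisfactory"


if __name__ == "__main__":
    # Illustrative round: assigned value 12.0, sigma_pt 0.6 (same units as the results).
    for result in (12.4, 13.5, 14.1):
        z = z_score(result, 12.0, 0.6)
        print(f"result={result} z={z:+.2f} -> {classify(z)}")
```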

Data Processing Algorithms for Improved Results

Advanced data processing algorithms are a key lever for improving replication accuracy in GC-MS unknowns testing. Current algorithms use mathematical models to filter noise, detect peaks, and normalize data across sample runs. Some also apply machine learning techniques, such as random forests and neural networks, to recognize complex patterns that traditional statistical methods might miss.

Signal processing algorithms have also advanced: wavelet transforms now allow more precise baseline correction and peak detection. These methods separate meaningful signal from background noise, which is particularly important for trace compounds in complex matrices. Adaptive noise reduction algorithms adjust their filtering parameters to the local signal characteristics, suppressing random variation while preserving critical spectral information.
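
To make the wavelet idea concrete, the sketch below denoises a chromatogram trace with a multi-level Haar transform, soft-thresholding the detail coefficients with the Donoho-Johnstone universal threshold and estimating the noise level from the finest scale. The threshold is derived from the data itself, a simple version of the "adaptive" filtering described above. Haar is used only so the sketch needs no third-party packages; chromatographic work usually uses smoother wavelets through a library such as PyWavelets. Only denoising is shown, not baseline correction, and all names are illustrative.

```python
import math
import random
from statistics import median

SQRT2 = math.sqrt(2.0)


def haar_forward(signal, levels):
    """Multi-level orthonormal Haar transform; details[0] is the finest scale."""
    approx, details = list(signal), []
    for _ in range(levels):
        if len(approx) % 2:
            raise ValueError("signal length must be divisible by 2**levels")
        pairs = range(0, len(approx), 2)
        details.append([(approx[i] - approx[i + 1]) / SQRT2 for i in pairs])
        approx = [(approx[i] + approx[i + 1]) / SQRT2 for i in pairs]
    return approx, details


def haar_inverse(approx, details):
    """Exact inverse of haar_forward."""
    a = list(approx)
    for d in reversed(details):
        out = []
        for ai, di in zip(a, d):
            out.extend(((ai + di) / SQRT2, (ai - di) / SQRT2))
        a = out
    return a


def soft_threshold(c, t):
    """Shrink a coefficient toward zero by t; coefficients smaller than t become 0."""
    return math.copysign(max(abs(c) - t, 0.0), c)


def wavelet_denoise(signal, levels=3):
    """Soft-threshold Haar detail coefficients with the universal threshold.

    Noise SD is estimated from the finest details as median(|d|) / 0.6745.
    """
    approx, details = haar_forward(signal, levels)
    sigma = median(abs(c) for c in details[0]) / 0.6745
    threshold = sigma * math.sqrt(2.0 * math.log(len(signal)))
    cleaned = [[soft_threshold(c, threshold) for c in d] for d in details]
    return haar_inverse(approx, cleaned)


if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic Gaussian peak plus white noise (illustrative, not instrument data).
    clean = [100.0 * math.exp(-((i - 128) ** 2) / (2 * 20.0 ** 2)) for i in range(256)]
    noisy = [c + rng.gauss(0.0, 5.0) for c in clean]
    smooth = wavelet_denoise(noisy, levels=3)

    def mse(s):
        return sum((p - q) ** 2 for p, q in zip(s, clean)) / len(clean)

    print(f"MSE noisy={mse(noisy):.2f} denoised={mse(smooth):.2f}")
```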

Retention time alignment algorithms address one of the most persistent challenges in GC-MS replication. These algorithms compensate for inevitable variations in chromatographic conditions between runs by applying non-linear warping functions to align peaks across multiple samples. Advanced implementations incorporate internal standards as reference points, enabling more accurate alignment even when dealing with complex sample matrices.
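
The simplest warping that uses internal standards as reference points is landmark-based piecewise-linear alignment: each run's internal standard retention times are mapped onto their reference values, and every other retention time is interpolated between neighbouring anchors. The sketch below implements that; it is deliberately simpler than dynamic time warping or correlation optimized warping, and the function name and retention times are illustrative.

```python
from bisect import bisect_right
from typing import Callable, Sequence


def build_rt_warp(observed_is_rt: Sequence[float],
                  reference_is_rt: Sequence[float]) -> Callable[[float], float]:
    """Piecewise-linear retention-time map anchored on internal standards.

    observed_is_rt[k] is where internal standard k eluted in this run;
    reference_is_rt[k] is where it eluted in the reference run.
    """
    pairs = sorted(zip(observed_is_rt, reference_is_rt))
    obs = [p[0] for p in pairs]
    ref = [p[1] for p in pairs]
    if len(obs) < 2:
        raise ValueError("need at least two internal standards")
    if any(b <= a for a, b in zip(obs, obs[1:])):
        raise ValueError("internal standard retention times must be distinct")

    def warp(rt: float) -> float:
        # Pick the segment containing rt; outside the anchors, extend the end segments linearly.
        k = min(max(bisect_right(obs, rt) - 1, 0), len(obs) - 2)
        x0, x1, y0, y1 = obs[k], obs[k + 1], ref[k], ref[k + 1]
        return y0 + (rt - x0) * (y1 - y0) / (x1 - x0)

    return warp


if __name__ == "__main__":
    # Illustrative IS retention times (min): this run drifted late relative to the reference.
    warp = build_rt_warp([5.10, 10.25, 20.40], [5.00, 10.00, 20.00])
    for rt in (5.10, 7.80, 15.325, 22.00):
        print(rt, "->", round(warp(rt), 3))
```

Because each segment has its own slope, the overall map is non-linear, yet it stays monotonic and exactly honours every internal standard. Accuracy between anchors depends on how densely the internal standards cover the chromatogram.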

Deconvolution algorithms have become increasingly sophisticated, separating overlapping peaks on the basis of differences in their mass spectral patterns. Modern approaches use blind source separation techniques, such as independent component analysis, to resolve coeluting compounds, which can substantially improve the reproducibility of quantitative measurements in complex mixtures.
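
The sketch below is not blind source separation. It shows the simpler, non-blind case that helps explain the principle: when the pure spectra of two coeluting compounds are already known, each scan can be split into their contributions by classical least squares, and summing those contributions across the scans gives per-compound areas. Independent component analysis or multivariate curve resolution would estimate the pure spectra as well. This sketch assumes they are given, handles only two components, and does not enforce non-negativity. Names and spectra are illustrative.

```python
from typing import Dict, List, Tuple

Spectrum = Dict[int, float]  # nominal m/z -> intensity


def _dot(a: Spectrum, b: Spectrum) -> float:
    """Inner product of two sparse spectra over their shared m/z values."""
    return sum(v * b.get(mz, 0.0) for mz, v in a.items())


def resolve_two(scans: List[Spectrum], s1: Spectrum, s2: Spectrum) -> List[Tuple[float, float]]:
    """Least-squares amounts (c1, c2) such that each scan is approximately c1*s1 + c2*s2."""
    a11, a12, a22 = _dot(s1, s1), _dot(s1, s2), _dot(s2, s2)
    det = a11 * a22 - a12 * a12  # >= 0 by Cauchy-Schwarz; ~0 when the spectra are collinear
    if det <= 1e-12 * a11 * a22:
        raise ValueError("reference spectra are too similar to separate")
    result = []
    for m in scans:
        b1, b2 = _dot(s1, m), _dot(s2, m)
        # Solve the 2x2 normal equations by Cramer's rule.
        result.append(((a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det))
    return result


if __name__ == "__main__":
    # Illustrative pure spectra, not real library entries.
    s1 = {43: 100.0, 58: 20.0}
    s2 = {43: 30.0, 71: 100.0}
    # Synthetic scans across a coeluting region: known mixtures of the two pure spectra.
    true_profile = [(1.0, 0.0), (2.0, 0.5), (3.0, 2.0), (1.5, 3.0), (0.5, 1.0)]
    scans = [{mz: c1 * s1.get(mz, 0.0) + c2 * s2.get(mz, 0.0) for mz in set(s1) | set(s2)}
             for c1, c2 in true_profile]
    profiles = resolve_two(scans, s1, s2)
    print("component areas:", sum(p[0] for p in profiles), sum(p[1] for p in profiles))
```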

Automated calibration algorithms continuously monitor system performance and apply correction factors to compensate for instrument drift. These algorithms track the response of quality control standards throughout analytical sequences and make real-time adjustments to ensure consistent quantification. Some implementations incorporate transfer learning techniques to maintain calibration models across different instruments within a laboratory network.
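
A minimal version of QC-based drift correction fits a trend to the QC standard's response against injection order and rescales every sample by the ratio of the mean QC response to the predicted QC response at its position. The sketch assumes the drift is linear and multiplicative. Smoother fits such as LOESS are often used instead, and the cross-instrument transfer learning mentioned above is not covered. Function names and data are illustrative.

```python
from statistics import fmean
from typing import List, Sequence, Tuple


def fit_qc_drift(qc_order: Sequence[float],
                 qc_response: Sequence[float]) -> Tuple[float, float, float]:
    """Least-squares line through QC responses vs injection order.

    Returns (slope, intercept, target), where target is the mean QC response.
    """
    mx, my = fmean(qc_order), fmean(qc_response)
    sxx = sum((x - mx) ** 2 for x in qc_order)
    slope = sum((x - mx) * (y - my) for x, y in zip(qc_order, qc_response)) / sxx
    return slope, my - slope * mx, my


def correct_drift(order: Sequence[float], response: Sequence[float],
                  slope: float, intercept: float, target: float) -> List[float]:
    """Scale each response by target / predicted QC response at its injection position."""
    corrected = []
    for o, r in zip(order, response):
        predicted = slope * o + intercept
        if predicted <= 0:
            raise ValueError(f"drift model predicts non-positive QC response at injection {o}")
        corrected.append(r * target / predicted)
    return corrected


if __name__ == "__main__":
    # Illustrative sequence: QC injected every 4th position, losing about 1% per injection.
    slope, intercept, target = fit_qc_drift([1, 5, 9, 13], [1000.0, 960.0, 920.0, 880.0])
    print(correct_drift([3, 7, 11], [490.0, 470.0, 450.0], slope, intercept, target))
    # -> [470.0, 470.0, 470.0]
```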

Statistical validation algorithms provide objective measures of replication quality through metrics such as relative standard deviation, confidence intervals, and probability-based matching scores. These algorithms enable analysts to establish quantitative acceptance criteria for replication studies and automatically flag results that fall outside established parameters. Advanced implementations incorporate Bayesian statistical approaches to better handle uncertainty in complex analytical scenarios.
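
The frequentist metrics in this paragraph can be computed directly from replicate results. The sketch below reports the mean, percent RSD, and a 95% confidence interval based on Student's t, and flags the replicate set against an RSD acceptance criterion. The 15% limit and the function name are placeholders, the t table covers only 2 to 11 replicates, and the Bayesian approaches mentioned above are not shown.

```python
import math
from statistics import mean, stdev

# Two-sided 95% Student t critical values, keyed by degrees of freedom (n - 1).
T_975 = {1: 12.706, 2: 4.303, 3: 3.182, 4: 2.776, 5: 2.571,
         6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262, 10: 2.228}


def replicate_summary(values, max_rsd_pct=15.0):
    """Mean, %RSD and 95% confidence interval of replicate results, plus an acceptance flag.

    max_rsd_pct is a placeholder acceptance criterion; set it from the validated method.
    """
    n = len(values)
    if n - 1 not in T_975:
        raise ValueError("this sketch tabulates t only for 2 to 11 replicates")
    m, s = mean(values), stdev(values)
    rsd = 100.0 * s / m
    half_width = T_975[n - 1] * s / math.sqrt(n)
    return {"mean": m, "rsd_pct": rsd,
            "ci95": (m - half_width, m + half_width),
            "accepted": rsd <= max_rsd_pct}


if __name__ == "__main__":
    print(replicate_summary([12.1, 11.8, 12.4, 12.0, 11.9]))  # illustrative replicates
    print(replicate_summary([12.1, 9.0, 15.2, 12.0, 10.3]))
```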
