How to Build a Small-Scale Neuromorphic Testbed: Hardware & Software Stack Guide

AUG 20, 2025 · 9 MIN READ

Neuromorphic Computing Background and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural networks. This field has evolved significantly since its inception in the late 1980s, driven by the need for more efficient and powerful computing systems capable of handling complex cognitive tasks.

The development of neuromorphic computing has been propelled by advancements in neuroscience, materials science, and computer engineering. Early efforts focused on creating analog circuits that mimicked neural behavior, but recent years have seen a surge in digital and mixed-signal implementations. This evolution has been marked by key milestones such as the development of silicon neurons, the creation of large-scale neuromorphic chips, and the integration of machine learning algorithms with neuromorphic hardware.

The primary objective of neuromorphic computing is to create artificial systems that emulate the brain's ability to process information efficiently, with low power consumption and high parallelism. This goal aligns with the broader aim of advancing artificial intelligence and cognitive computing, potentially leading to breakthroughs in areas such as natural language processing, computer vision, and autonomous systems.

In the context of building a small-scale neuromorphic testbed, the objectives are multifaceted. First, such a testbed gives researchers and developers a platform for experimenting with neuromorphic principles without large-scale, expensive infrastructure. This democratization of neuromorphic research is crucial for accelerating innovation in the field.

Secondly, a small-scale testbed serves as an educational tool, allowing students and professionals to gain hands-on experience with neuromorphic concepts. This practical exposure is essential for building a skilled workforce capable of advancing neuromorphic technologies.

Thirdly, such testbeds facilitate the development and testing of neuromorphic algorithms and applications in a controlled environment. This is particularly important for bridging the gap between theoretical neuromorphic models and practical implementations, enabling rapid prototyping and validation of new ideas.

Lastly, small-scale neuromorphic testbeds play a crucial role in exploring the scalability of neuromorphic systems. By starting with manageable, small-scale implementations, researchers can identify and address challenges that may arise when scaling up to larger, more complex systems. This iterative approach is vital for the long-term development of neuromorphic computing technologies that can rival or surpass conventional computing paradigms in specific applications.

Market Analysis for Neuromorphic Systems

The neuromorphic computing market is experiencing significant growth, driven by the increasing demand for artificial intelligence (AI) and machine learning applications across various industries. As traditional computing architectures struggle to meet the demands of complex AI workloads, neuromorphic systems offer a promising solution by mimicking the structure and function of biological neural networks.

The global neuromorphic computing market is projected to expand rapidly in the coming years, with estimates suggesting a compound annual growth rate (CAGR) of over 20% from 2021 to 2026. This growth is fueled by the rising adoption of neuromorphic chips in edge computing devices, autonomous vehicles, and smart sensors, as well as their potential applications in robotics, healthcare, and cybersecurity.

Key factors driving market demand include the need for more energy-efficient computing solutions, the increasing complexity of AI algorithms, and the growing interest in brain-inspired computing architectures. Neuromorphic systems offer advantages such as lower power consumption, faster processing speeds, and improved adaptability compared to traditional von Neumann architectures.

The market for neuromorphic systems can be segmented based on application areas, including image recognition, signal processing, data mining, and gesture recognition. Among these, image recognition and signal processing are expected to dominate the market share due to their widespread use in autonomous vehicles, surveillance systems, and medical imaging.

Geographically, North America currently leads the neuromorphic computing market, followed by Europe and Asia-Pacific. The United States, in particular, is at the forefront of neuromorphic research and development, with significant investments from both government agencies and private companies. However, the Asia-Pacific region is anticipated to witness the highest growth rate in the coming years, driven by increasing R&D activities in countries like China, Japan, and South Korea.

Despite the promising outlook, the neuromorphic computing market faces several challenges. These include the high initial costs associated with developing and implementing neuromorphic systems, the lack of standardization in hardware and software platforms, and the need for specialized expertise in neuromorphic engineering. Additionally, the market is still in its early stages, with limited commercial products available and ongoing research to improve the scalability and reliability of neuromorphic architectures.

As the technology matures and becomes more accessible, it is expected that small-scale neuromorphic testbeds will play a crucial role in advancing research and development efforts. These testbeds will enable researchers, developers, and companies to experiment with neuromorphic computing concepts, validate algorithms, and explore potential applications without the need for large-scale infrastructure investments.

Current Challenges in Neuromorphic Hardware

Neuromorphic hardware development faces several significant challenges that impede widespread adoption. One of the primary obstacles is scalability: current architectures struggle to maintain efficiency and performance as networks grow, which limits their applicability to complex real-world problems. Scalability is closely tied to power consumption, because energy use tends to rise with system size and can erode one of the key advantages of neuromorphic computing.

Another critical challenge lies in the design and fabrication of neuromorphic chips. The integration of analog and digital components on a single chip presents significant engineering hurdles. Achieving precise and reliable analog computations at nanoscale dimensions remains a formidable task, often resulting in variability and noise issues that can compromise the overall system performance.

The lack of standardization in neuromorphic hardware design and interfaces poses yet another obstacle. Different research groups and companies often develop proprietary architectures and programming models, leading to fragmentation in the field and hindering interoperability and knowledge sharing. This absence of common standards also complicates the development of software tools and algorithms that can be universally applied across different neuromorphic platforms.

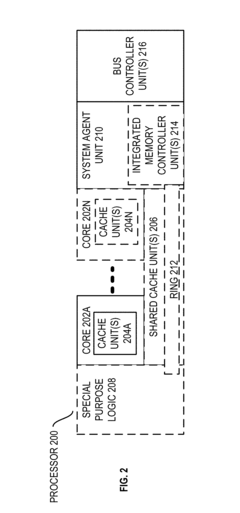

Memory bandwidth and on-chip communication present additional challenges. As neuromorphic systems aim to mimic the massive parallelism and connectivity of biological neural networks, they require high-bandwidth, low-latency communication between processing elements and memory units. Current hardware designs struggle to meet these demands without significant trade-offs in power consumption or chip area.

The development of suitable learning algorithms for neuromorphic hardware remains an ongoing challenge. While traditional machine learning algorithms have been optimized for conventional von Neumann architectures, adapting or creating new algorithms that can fully leverage the unique properties of neuromorphic systems is still an active area of research. This includes developing efficient online learning mechanisms and addressing the limitations of current spike-based learning approaches.
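As one concrete instance of spike-based learning, the sketch below shows a pair-based spike-timing-dependent plasticity (STDP) update. The article does not name a specific rule, so the exponential window, the function names, and the parameter values (`a_plus`, `a_minus`, `tau_plus`, `tau_minus`) are illustrative assumptions rather than a recommended configuration.

```python
import math


def stdp_delta_w(dt_ms, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for one pre/post spike pair (illustrative parameters).

    dt_ms = t_post - t_pre. A positive dt (pre before post) potentiates,
    a negative dt (post before pre) depresses.
    """
    if dt_ms > 0:
        return a_plus * math.exp(-dt_ms / tau_plus)
    if dt_ms < 0:
        return -a_minus * math.exp(dt_ms / tau_minus)
    return 0.0


def apply_stdp(weight, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Accumulate all-to-all pair updates, then clip to [w_min, w_max]."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            weight += stdp_delta_w(t_post - t_pre)
    return min(w_max, max(w_min, weight))


# Pre-synaptic spike at 0 ms followed by a post-synaptic spike at 5 ms
new_weight = apply_stdp(0.5, pre_spikes=[0.0], post_spikes=[5.0])
```

Because the update depends only on local spike times, a rule of this shape is a common starting point for online learning experiments on a testbed; hardware platforms may quantize or approximate it differently.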

Lastly, the testing and validation of neuromorphic systems present unique challenges. Traditional benchmarking methods and performance metrics used for conventional computing systems may not adequately capture the capabilities and efficiency of neuromorphic hardware. Developing appropriate benchmarks and evaluation methodologies that can fairly assess and compare different neuromorphic architectures is crucial for advancing the field and demonstrating the potential advantages of these systems over conventional computing paradigms.

Hardware Components for Testbed Construction

01 Neuromorphic hardware testbeds

Development of specialized hardware platforms for testing and evaluating neuromorphic computing systems. These testbeds simulate brain-like architectures and allow researchers to experiment with various neural network models and algorithms in a controlled environment.

- Neuromorphic hardware testbeds: Development of specialized hardware platforms designed to simulate and test neuromorphic computing systems. These testbeds provide a controlled environment for evaluating the performance and functionality of brain-inspired computing architectures, allowing researchers to experiment with various neural network models and algorithms.

- Software simulation frameworks for neuromorphic systems: Creation of software-based simulation tools and frameworks specifically tailored for neuromorphic computing. These platforms enable researchers to model and test neuromorphic architectures, synaptic plasticity, and learning algorithms in a virtual environment before implementation in hardware.

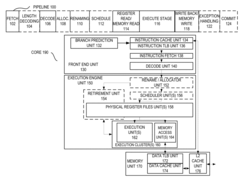

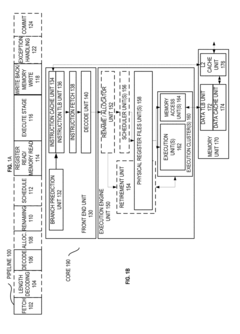

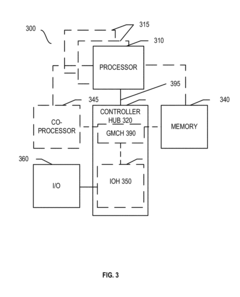

- Integration of neuromorphic components with conventional systems: Development of hybrid systems that combine neuromorphic elements with traditional computing architectures. These testbeds explore the integration of brain-inspired computing modules with conventional processors, memory systems, and I/O interfaces to leverage the strengths of both paradigms.

- Neuromorphic sensor and signal processing testbeds: Creation of specialized testbeds for evaluating neuromorphic approaches to sensor data processing and signal analysis. These platforms focus on bio-inspired methods for efficiently processing and interpreting sensory information, such as visual, auditory, or tactile inputs.

- Scalability and performance evaluation of neuromorphic systems: Development of testbeds and methodologies for assessing the scalability and performance characteristics of neuromorphic computing architectures. These platforms enable researchers to evaluate energy efficiency, processing speed, and learning capabilities of neuromorphic systems at various scales.
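To make the software-simulation item in the list above more concrete, here is a minimal sketch of a clock-driven leaky integrate-and-fire (LIF) neuron, the kind of model such frameworks typically simulate before anything is deployed to hardware. It uses only the Python standard library; the function name `simulate_lif` and every parameter value are assumptions chosen for illustration, not settings from any particular framework or chip.

```python
def simulate_lif(input_current, dt=1.0, tau_m=20.0, v_rest=0.0,
                 v_thresh=1.0, v_reset=0.0, r_m=1.0):
    """Forward-Euler simulation of a single LIF neuron.

    input_current: one input value per time step (arbitrary units).
    Returns (voltages, spike_steps), where spike_steps holds the indices
    of the time steps at which the neuron fired.
    """
    v = v_rest
    voltages, spike_steps = [], []
    for step, i_in in enumerate(input_current):
        # Membrane equation: dv/dt = (-(v - v_rest) + r_m * i_in) / tau_m
        v += dt * (-(v - v_rest) + r_m * i_in) / tau_m
        if v >= v_thresh:
            spike_steps.append(step)
            v = v_reset  # reset after the spike
        voltages.append(v)
    return voltages, spike_steps


# A constant supra-threshold input produces regular spiking
trace, spikes = simulate_lif([1.5] * 200)
# A sub-threshold input never reaches the firing threshold
_, no_spikes = simulate_lif([0.5] * 200)
```

A pure-software model like this is useful as a reference: the same stimulus can later be replayed on the hardware testbed and the spike times compared.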

02 Software simulation environments for neuromorphic systems

Creation of software-based simulation tools and environments that emulate neuromorphic architectures. These platforms enable researchers to test and optimize neuromorphic algorithms without the need for physical hardware, accelerating the development process.

03 Integration of neuromorphic testbeds with machine learning frameworks

Combining neuromorphic testbeds with existing machine learning frameworks to leverage the strengths of both approaches. This integration allows for the development of hybrid systems that can benefit from both traditional deep learning techniques and brain-inspired computing paradigms.

04 Scalable neuromorphic testbed architectures

Design of scalable neuromorphic testbed architectures that can accommodate varying sizes of neural networks and computational requirements. These systems allow researchers to test the performance and efficiency of neuromorphic algorithms at different scales.

05 Energy-efficient neuromorphic testbed designs

Development of energy-efficient neuromorphic testbed designs that mimic the low power consumption of biological neural systems. These testbeds focus on optimizing power usage while maintaining high computational performance for neuromorphic algorithms.

Key Players in Neuromorphic Computing

The development of small-scale neuromorphic testbeds is in its early stages, with a growing market driven by increasing interest in brain-inspired computing. The technology is still maturing, with various companies and research institutions exploring different approaches. Key players like Samsung Electronics, Intel, and Toshiba are investing in neuromorphic hardware development, while specialized firms such as Koniku and KnowmTech focus on innovative neuromorphic solutions. Academic institutions, including Peking University and the University of Freiburg, are contributing to fundamental research. As the field progresses, we can expect increased collaboration between industry and academia to advance neuromorphic computing technologies and their applications.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung's approach to neuromorphic testbeds involves both hardware and software components. On the hardware side, Samsung has developed neuromorphic chips using its advanced semiconductor manufacturing processes. These chips incorporate resistive random-access memory (RRAM) technology to create artificial synapses, allowing for efficient implementation of neural networks[5]. For small-scale testbeds, Samsung provides development kits that include their neuromorphic chips along with interface boards for easy integration. The software stack includes Samsung's proprietary Neural Processing Engine (NPE) SDK, which allows developers to design, train, and deploy neuromorphic models on Samsung's hardware. The company also supports popular deep learning frameworks like TensorFlow and PyTorch, with custom layers for neuromorphic operations[6].

Strengths: Advanced manufacturing capabilities, integration with mobile devices. Weaknesses: Proprietary ecosystem may limit open research and collaboration.

Polyn Technology Ltd.

Technical Solution: Polyn Technology specializes in neuromorphic analog AI chips for edge computing. Their approach to building a small-scale neuromorphic testbed involves their Tiny AI technology, which combines analog AI cores with digital processors. For hardware, Polyn offers the NeuroVoice chip, designed for always-on voice processing applications, as a starting point for neuromorphic testbeds[7]. The chip implements neural networks directly in analog circuitry, allowing for extremely low power consumption. Polyn's software stack includes a proprietary neural network compiler that translates trained models into analog implementations suitable for their hardware. They also provide simulation tools that allow developers to test and optimize their neuromorphic designs before deploying them on physical chips[8].

Strengths: Ultra-low power consumption, specialized for edge AI applications. Weaknesses: Limited to specific application domains, may lack flexibility for general-purpose neuromorphic research.

Software Stack for Neuromorphic Systems

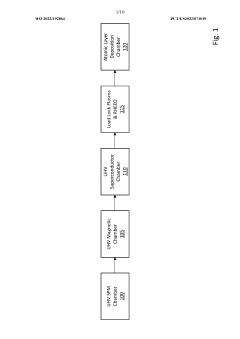

Scalable free-running neuromorphic computer

Patent · Active · US20180189632A1

Innovation

- A scalable, free-running neuromorphic processor design that uses pseudo-random number generators to control spiking activity and enable event-driven spike integration, eliminating the need for spike buffers and global synchronization signals by integrating spikes as they arrive between inter-spike intervals.

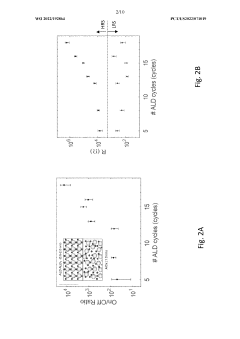

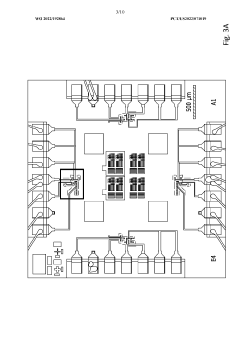

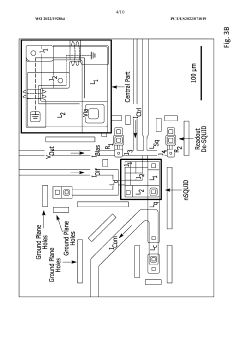

Superconducting neuromorphic computing devices and circuits

Patent · WO2022192864A1

Innovation

- The development of neuromorphic computing systems utilizing atomically thin, tunable superconducting memristors as synapses and ultra-sensitive superconducting quantum interference devices (SQUIDs) as neurons, which form neural units capable of performing universal logic gates and are scalable, energy-efficient, and compatible with cryogenic temperatures.

Benchmarking Neuromorphic Systems

Benchmarking neuromorphic systems is a critical aspect of evaluating and comparing the performance of different neuromorphic hardware and software implementations. This process involves developing standardized metrics and test scenarios to assess the efficiency, accuracy, and scalability of neuromorphic architectures.

One of the primary challenges in benchmarking neuromorphic systems is the lack of universally accepted performance metrics. Unlike traditional computing systems, neuromorphic architectures often prioritize energy efficiency and real-time processing over raw computational power. As a result, benchmarks must consider factors such as power consumption, latency, and adaptability to dynamic environments.

To address these challenges, researchers have proposed several benchmark suites specifically designed for neuromorphic systems. These include the Neuromorphic Benchmark Suite (NBS), which focuses on evaluating spiking neural network performance across various tasks, and the Neurorobotics Platform (NRP), which assesses the integration of neuromorphic systems in robotic applications.

When benchmarking a small-scale neuromorphic testbed, it is essential to consider both hardware and software components. On the hardware side, metrics such as energy consumption per spike, maximum neuron count, and synaptic plasticity capabilities should be evaluated. For software, factors like programming model flexibility, simulation accuracy, and ease of integration with existing neural network frameworks are crucial.
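The hardware metrics mentioned above can be derived from a handful of raw measurements. The following is a rough sketch, assuming the testbed can report total energy for a run, idle energy over the same duration, and spike and synaptic-event counts. The `RunMeasurement` record, its field names, and the sample numbers are hypothetical placeholders, not measured results.

```python
from dataclasses import dataclass


@dataclass
class RunMeasurement:
    """Raw numbers from one benchmark run (hypothetical record layout)."""
    energy_joules: float       # total energy over the run
    idle_energy_joules: float  # energy over the same duration with no workload
    spike_count: int           # spikes emitted during the run
    synaptic_events: int       # synaptic events processed during the run
    duration_s: float          # wall-clock duration of the run


def dynamic_energy(m: RunMeasurement) -> float:
    """Energy attributable to the workload, with the idle baseline removed."""
    return m.energy_joules - m.idle_energy_joules


def energy_per_spike(m: RunMeasurement) -> float:
    if m.spike_count <= 0:
        raise ValueError("spike_count must be positive")
    return dynamic_energy(m) / m.spike_count


def energy_per_synaptic_event(m: RunMeasurement) -> float:
    if m.synaptic_events <= 0:
        raise ValueError("synaptic_events must be positive")
    return dynamic_energy(m) / m.synaptic_events


def mean_spike_rate(m: RunMeasurement) -> float:
    """Average spikes per second across the whole run."""
    return m.spike_count / m.duration_s


# Placeholder values purely for illustration
run = RunMeasurement(energy_joules=2.0, idle_energy_joules=1.5,
                     spike_count=1000, synaptic_events=50000, duration_s=10.0)
per_spike = energy_per_spike(run)
per_event = energy_per_synaptic_event(run)
rate = mean_spike_rate(run)
```

Subtracting an idle baseline is one design choice among several; reporting both total and dynamic energy makes comparisons across platforms easier to interpret.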

A comprehensive benchmarking approach for a neuromorphic testbed should include a variety of tasks that reflect real-world applications. These may range from simple pattern recognition and classification tasks to more complex scenarios involving temporal processing and online learning. By testing across diverse workloads, researchers can gain insights into the strengths and limitations of their neuromorphic implementation.

Furthermore, it is important to compare the performance of the neuromorphic testbed against traditional computing architectures to highlight the unique advantages of neuromorphic computing. This comparison should consider not only raw performance but also factors like energy efficiency and adaptability to changing environments.

As the field of neuromorphic computing continues to evolve, benchmarking methodologies must also adapt to capture the latest advancements. This may involve developing new metrics that better reflect the capabilities of emerging neuromorphic architectures and incorporating more complex, real-world scenarios into benchmark suites.

Energy Efficiency Considerations

Energy efficiency is a critical consideration in the development of small-scale neuromorphic testbeds. These systems aim to emulate the energy-efficient information processing capabilities of biological neural networks, making power consumption a key factor in their design and implementation. When building a neuromorphic testbed, several strategies can be employed to optimize energy efficiency.

One approach involves the use of low-power hardware components. Selecting energy-efficient processors, such as ARM-based systems or specialized neuromorphic chips, can significantly reduce overall power consumption. Additionally, incorporating power-gating techniques allows for selective activation of circuit components, minimizing idle power draw.

Memory management plays a crucial role in energy efficiency. Utilizing on-chip memory and minimizing off-chip data transfers can substantially reduce power consumption associated with memory access. Implementing efficient data compression algorithms and optimizing memory hierarchies further contribute to energy savings.

Neuromorphic architectures often employ event-driven processing, which inherently promotes energy efficiency. By only activating computational units when necessary, based on incoming spikes or events, these systems can achieve significant power savings compared to traditional von Neumann architectures.
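A minimal sketch of the event-driven idea: the neuron below does work only when an input spike arrives, and accounts for the silent interval with a closed-form exponential decay instead of stepping a clock. The class name `EventDrivenLIF`, the leaky integrate-and-fire model, and all parameter values are assumptions for illustration and do not describe any specific chip.

```python
import math


class EventDrivenLIF:
    """Leaky integrate-and-fire neuron updated only on input events."""

    def __init__(self, tau_m=20.0, v_thresh=1.0, v_reset=0.0):
        self.tau_m = tau_m
        self.v_thresh = v_thresh
        self.v_reset = v_reset
        self.v = 0.0
        self.last_t = 0.0
        self.updates = 0  # counts how many times state was touched

    def on_spike(self, t, weight):
        """Process one input event at time t (ms); return True if the neuron fires."""
        # Decay the membrane potential analytically over the idle interval
        self.v *= math.exp(-(t - self.last_t) / self.tau_m)
        self.last_t = t
        self.v += weight
        self.updates += 1
        if self.v >= self.v_thresh:
            self.v = self.v_reset
            return True
        return False


# Three input events spread over 400 ms, given as (time_ms, weight)
events = [(5.0, 0.6), (7.0, 0.6), (400.0, 0.6)]
neuron = EventDrivenLIF()
fired = [t for t, w in events if neuron.on_spike(t, w)]
```

Here the neuron state is touched three times, once per event, whereas a clock-driven loop with a 1 ms step would perform 400 updates over the same interval. That gap is the source of the savings when activity is sparse.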

Software optimization is equally important in maximizing energy efficiency. Developing efficient algorithms and implementing them using low-level programming languages can reduce computational overhead and power consumption. Utilizing parallel processing techniques and optimizing data flow can also contribute to improved energy efficiency.

Cooling solutions should be carefully considered to maintain optimal operating temperatures while minimizing additional power requirements. Passive cooling techniques, such as heat sinks and strategic component placement, can be effective for small-scale testbeds. In cases where active cooling is necessary, energy-efficient fan systems or liquid cooling solutions should be explored.

Monitoring and profiling tools are essential for identifying power bottlenecks and optimizing energy consumption. Implementing power measurement capabilities within the testbed allows for real-time analysis and fine-tuning of energy usage across different components and operational modes.
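As a small illustration of power profiling, the sketch below converts a sampled power trace into total energy and average power using trapezoidal integration. How the samples are obtained (an external power monitor, an on-board sensor, and so on) is left open; the function names and the sample trace are assumptions for this example only.

```python
def energy_from_power_trace(timestamps_s, power_w):
    """Trapezoidal integration of sampled power (W) over time (s), in joules."""
    if len(timestamps_s) != len(power_w):
        raise ValueError("timestamps and power samples must have equal length")
    if len(timestamps_s) < 2:
        raise ValueError("need at least two samples")
    energy = 0.0
    for i in range(1, len(timestamps_s)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        # Area of the trapezoid between two consecutive samples
        energy += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return energy


def average_power(timestamps_s, power_w):
    """Mean power over the trace, in watts."""
    total = energy_from_power_trace(timestamps_s, power_w)
    return total / (timestamps_s[-1] - timestamps_s[0])


# Illustrative trace: idle at 1 W, then a workload phase at 3 W
t = [0.0, 1.0, 2.0, 3.0]
p = [1.0, 1.0, 3.0, 3.0]
total_energy = energy_from_power_trace(t, p)
mean_power = average_power(t, p)
```

Logging traces per component or per operating mode, and integrating each separately, is one way to locate the power bottlenecks described above.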

By addressing these energy efficiency considerations, developers can create small-scale neuromorphic testbeds that not only emulate biological neural networks effectively but also demonstrate the potential for energy-efficient computing in future large-scale neuromorphic systems.
