How to Implement On-Device Learning on Neuromorphic Chips (online adaptation)

AUG 20, 2025 · 9 MIN READ

Neuromorphic On-Device Learning: Background and Objectives

Neuromorphic computing represents a paradigm shift in artificial intelligence, aiming to emulate the structure and function of biological neural networks. This approach has gained significant traction in recent years due to its potential for energy-efficient, real-time processing of complex data. The concept of on-device learning on neuromorphic chips, particularly online adaptation, has emerged as a crucial area of research and development in this field.

The evolution of neuromorphic computing can be traced back to the 1980s, with pioneering work by Carver Mead. However, it is only in the past decade that significant advancements in materials science, nanotechnology, and neuroscience have propelled this technology towards practical implementation. The convergence of these disciplines has led to the development of neuromorphic hardware that can more closely mimic the brain's neural architecture and synaptic plasticity.

On-device learning, specifically online adaptation, represents a key objective in neuromorphic computing. This capability allows neuromorphic systems to continuously learn and adapt to new information without the need for offline training or external computational resources. The goal is to create autonomous systems that can operate efficiently in dynamic, real-world environments, adjusting their behavior based on new inputs and experiences.

The primary technical objectives for implementing on-device learning on neuromorphic chips include developing efficient algorithms for online learning, designing hardware architectures that support synaptic plasticity, and creating scalable systems that can maintain performance as they grow in complexity. Additionally, researchers aim to minimize power consumption while maximizing computational speed, a balance that is crucial for edge computing applications.

Another critical objective is to bridge the gap between neuroscience and engineering, translating biological learning mechanisms into implementable hardware solutions. This interdisciplinary approach seeks to leverage principles such as spike-timing-dependent plasticity (STDP) and homeostatic plasticity to create more biologically plausible and efficient learning systems.
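The two plasticity principles named above can be sketched in a few lines. This is a minimal illustration and not any particular chip's learning engine: it assumes a pair-based STDP rule with exponential windows, and it uses multiplicative synaptic scaling as a simple stand-in for homeostatic plasticity. The function names and all constants (`a_plus`, `a_minus`, `tau_plus`, `tau_minus`, the weight bounds) are hypothetical choices made for the example.

```python
import math


def stdp_update(w, dt, a_plus=0.01, a_minus=0.012,
                tau_plus=20.0, tau_minus=20.0, w_min=0.0, w_max=1.0):
    """Pair-based STDP for one synapse.

    dt is t_post - t_pre in milliseconds. All constants are illustrative.
    """
    if dt > 0:
        # Pre-synaptic spike arrived before the post-synaptic spike: potentiate.
        dw = a_plus * math.exp(-dt / tau_plus)
    elif dt < 0:
        # Post-synaptic spike came first: depress.
        dw = -a_minus * math.exp(dt / tau_minus)
    else:
        dw = 0.0
    # Hard bounds keep the weight in the range the hardware can store.
    return min(w_max, max(w_min, w + dw))


def homeostatic_scale(weights, target_sum):
    """Rescale a neuron's incoming weights so that they sum to target_sum."""
    total = sum(weights)
    if total == 0:
        return list(weights)
    return [w * target_sum / total for w in weights]


if __name__ == "__main__":
    w = 0.5
    w = stdp_update(w, dt=5.0)    # causal pairing strengthens the synapse
    w = stdp_update(w, dt=-5.0)   # anti-causal pairing weakens it
    print(w)
    print(homeostatic_scale([0.2, 0.3, 0.5], target_sum=2.0))
```

STDP on its own tends to drive weights toward their bounds, so a slower normalizing step such as the scaling function is one common way to keep total input drive in a usable range.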

As the field progresses, there is a growing focus on addressing the challenges of reliability, robustness, and generalization in neuromorphic systems. Researchers are exploring ways to ensure that on-device learning remains stable over time and can effectively transfer knowledge across different tasks and domains. This includes developing novel architectures that can balance plasticity and stability, a fundamental issue in both artificial and biological neural networks.

Market Demand for Adaptive AI Hardware

The market demand for adaptive AI hardware, particularly neuromorphic chips capable of on-device learning, is experiencing significant growth driven by the increasing need for edge computing and real-time decision-making in various industries. This demand is fueled by the limitations of traditional cloud-based AI solutions, which often face challenges related to latency, privacy, and connectivity.

In the automotive sector, there is a growing requirement for adaptive AI hardware to support advanced driver assistance systems (ADAS) and autonomous vehicles. These systems need to continuously learn and adapt to new driving conditions, making on-device learning crucial for real-time decision-making and improved safety. The market for AI chips in automotive applications is expected to grow substantially in the coming years.

The Internet of Things (IoT) and smart home devices represent another significant market segment driving demand for adaptive AI hardware. As these devices become more prevalent, there is an increasing need for local processing and learning capabilities to enhance user experience, reduce network traffic, and ensure data privacy. This trend is particularly evident in smart speakers, security cameras, and home automation systems.

In the healthcare industry, there is a rising demand for wearable devices and medical equipment capable of on-device learning. These devices can provide personalized health monitoring, early disease detection, and adaptive treatment recommendations. The ability to process sensitive medical data locally addresses privacy concerns and enables real-time health insights.

The industrial sector is also showing strong interest in adaptive AI hardware for predictive maintenance, quality control, and process optimization. On-device learning capabilities allow industrial equipment to adapt to changing conditions and improve efficiency without relying on constant cloud connectivity.

Mobile devices represent a significant market opportunity for adaptive AI hardware. Smartphone manufacturers are increasingly integrating AI chips with on-device learning capabilities to enhance features such as voice recognition, image processing, and battery optimization. This trend is expected to continue as consumers demand more intelligent and personalized mobile experiences.

The enterprise market is another key driver for adaptive AI hardware demand. Companies are seeking solutions that can process sensitive data locally, comply with data protection regulations, and reduce dependence on cloud infrastructure. This is particularly relevant in sectors such as finance, where real-time decision-making and data privacy are critical.

As the demand for edge AI continues to grow, the market for neuromorphic chips and other adaptive AI hardware solutions is expected to expand rapidly. This growth is further supported by advancements in chip design, manufacturing processes, and AI algorithms optimized for on-device learning. The ability to implement efficient online adaptation on neuromorphic chips will be a key differentiator in meeting the evolving needs of various industries and applications.

Current Challenges in On-Device Learning Implementation

Implementing on-device learning on neuromorphic chips presents several significant challenges that researchers and engineers are actively working to overcome. One of the primary obstacles is the limited computational resources available on these chips, which constrains the complexity and scale of the learning algorithms that can be implemented. Neuromorphic hardware typically operates under a tight power budget, which makes it difficult to perform the intensive computations that many traditional machine learning approaches, such as backpropagation over large batches, require.

Another major challenge is the need for specialized learning algorithms that can effectively utilize the unique architecture of neuromorphic chips. These chips often employ spiking neural networks, which operate on principles different from conventional artificial neural networks. Developing efficient learning rules that can adapt synaptic weights in real-time while maintaining the energy efficiency and speed advantages of neuromorphic hardware is an ongoing area of research.

The issue of memory limitations also poses a significant hurdle. On-device learning requires storing and updating model parameters, which can be problematic given the restricted memory capacity of neuromorphic chips. This constraint necessitates the development of memory-efficient learning techniques and clever parameter management strategies to enable continuous adaptation without exhausting available resources.
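One widely used memory-efficient technique is to store weights at low precision and apply stochastic rounding, so that updates smaller than one quantization step are still applied in expectation instead of being lost. The sketch below illustrates that idea only; it is not taken from a specific chip, and the function names, the step size, and the seeded generator are assumptions made for the example.

```python
import math
import random


def stochastic_round(x, step, rng):
    """Round x to a multiple of step, rounding up with probability
    proportional to the distance from the lower grid point."""
    lower = math.floor(x / step) * step
    p_up = (x - lower) / step
    return lower + step if rng.random() < p_up else lower


def apply_update(w, delta, step, rng):
    """Add a (possibly tiny) update to a low-precision weight."""
    return stochastic_round(w + delta, step, rng)


if __name__ == "__main__":
    rng = random.Random(0)   # seeded only to make the demo repeatable
    step = 1.0 / 16          # hypothetical 4-bit grid on [0, 1]
    w = 0.5
    for _ in range(1000):
        # Each update is far smaller than one quantization step.
        w = apply_update(w, delta=0.0001, step=step, rng=rng)
    print(w)
```

With deterministic round-to-nearest, every one of the small updates in the demo would be rounded away and the weight would never move. Stochastic rounding trades that bias for some variance, which is often an acceptable cost when memory per synapse is limited to a few bits.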

Stability and convergence of learning algorithms in neuromorphic systems present another challenge. The inherent variability and noise in neuromorphic hardware can affect the reliability and reproducibility of learning outcomes. Ensuring that on-device learning algorithms remain stable and converge to useful solutions under these conditions is crucial for practical applications.

The lack of standardized development tools and frameworks for neuromorphic computing further complicates the implementation of on-device learning. Unlike traditional computing platforms, neuromorphic chips often require specialized programming paradigms and tools, which are still in their infancy. This makes it challenging for researchers and developers to efficiently design, implement, and debug learning algorithms for these novel architectures.

Addressing the challenge of scalability is also critical. While current neuromorphic chips can demonstrate on-device learning for small-scale tasks, scaling these capabilities to handle more complex, real-world problems remains an open question. This involves not only increasing the size and complexity of the networks but also ensuring that learning algorithms remain efficient and effective as the scale grows.

Finally, the integration of on-device learning with existing systems and workflows poses practical challenges. Ensuring compatibility with conventional software and hardware ecosystems, as well as developing interfaces that allow seamless interaction between neuromorphic systems and traditional computing environments, is essential for widespread adoption and practical implementation of on-device learning on neuromorphic chips.

Existing On-Device Learning Architectures

01 Neuromorphic chip architecture for on-device learning

Neuromorphic chips are designed with architectures that mimic the human brain's neural networks, enabling efficient on-device learning. These chips incorporate specialized hardware structures such as artificial neurons and synapses, allowing for parallel processing and adaptive learning capabilities. This architecture supports real-time learning and adaptation without the need for constant cloud connectivity.

- Neuromorphic architecture for on-device learning: Neuromorphic chips are designed with architectures that mimic the human brain's neural networks, enabling efficient on-device learning. These chips incorporate parallel processing, low power consumption, and adaptive synaptic connections to facilitate real-time learning and decision-making without relying on cloud computing.

- Spiking Neural Networks (SNNs) for on-chip learning: Spiking Neural Networks are implemented in neuromorphic chips to enable on-device learning. SNNs use discrete spikes for information processing and learning, closely resembling biological neural networks. This approach allows for energy-efficient and fast computation, making it suitable for edge devices with limited resources.

- Memristive devices for synaptic plasticity: Neuromorphic chips utilize memristive devices to emulate synaptic plasticity, a key feature for on-device learning. These devices can change their resistance based on the history of applied voltage or current, allowing for continuous learning and adaptation. Memristors enable efficient implementation of learning algorithms directly on the chip.

- Unsupervised learning algorithms for neuromorphic systems: On-device learning in neuromorphic chips often employs unsupervised learning algorithms. These algorithms enable the chip to discover patterns and extract features from input data without explicit labeling. This approach is particularly useful for applications in edge computing and IoT devices, where labeled data may be scarce or unavailable.

- Hardware-software co-design for efficient on-device learning: Neuromorphic chips implement hardware-software co-design strategies to optimize on-device learning. This approach involves developing specialized hardware architectures and software algorithms that work in tandem to maximize learning efficiency and minimize power consumption. The co-design enables the creation of compact, energy-efficient systems capable of continuous learning and adaptation.
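To make the spike-based processing in the list above concrete, the following is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, a common textbook neuron model for spiking neural networks. It is a software illustration under stated assumptions, not the neuron circuit of any specific chip: the function name, the forward-Euler integration, and the parameter values are all hypothetical.

```python
def simulate_lif(input_current, dt=1.0, tau_m=10.0,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Simulate one LIF neuron and return the time steps at which it spikes."""
    v = v_rest
    spike_times = []
    for t, i_in in enumerate(input_current):
        # Forward-Euler step of dv/dt = (-(v - v_rest) + i_in) / tau_m
        v += dt * (-(v - v_rest) + i_in) / tau_m
        if v >= v_thresh:
            # Threshold crossing: emit a discrete spike and reset the membrane.
            spike_times.append(t)
            v = v_reset
    return spike_times


if __name__ == "__main__":
    print(simulate_lif([0.5] * 50))   # sub-threshold drive: no spikes
    print(simulate_lif([2.0] * 50))   # supra-threshold drive: regular spiking
```

The neuron produces output only when its membrane potential crosses the threshold. In event-driven hardware, that sparsity is what allows downstream synapses to stay idle between spikes.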

02 Spiking Neural Networks (SNNs) for energy-efficient computing

Spiking Neural Networks are implemented in neuromorphic chips to achieve energy-efficient computing for on-device learning. SNNs process information in a manner similar to biological neurons, using discrete spikes rather than continuous values. This approach reduces power consumption and enables more efficient processing of temporal data, making it suitable for edge devices with limited resources.

03 Memristive devices for synaptic plasticity

Memristive devices are integrated into neuromorphic chips to emulate synaptic plasticity, a key feature for on-device learning. These devices can change their resistance based on the history of applied voltage or current, mimicking the strengthening or weakening of synaptic connections in biological brains. This enables continuous learning and adaptation in neuromorphic systems without the need for external memory updates.

04 Unsupervised learning algorithms for neuromorphic chips

Neuromorphic chips implement unsupervised learning algorithms to enable autonomous feature extraction and pattern recognition without labeled data. These algorithms, such as Hebbian learning and spike-timing-dependent plasticity (STDP), allow the chip to adapt and learn from input data in real-time. This capability is crucial for applications in edge computing and IoT devices where labeled data may not be available.

05 Hardware-software co-design for optimized on-device learning

The development of neuromorphic chips involves hardware-software co-design to optimize on-device learning performance. This approach ensures that the hardware architecture and learning algorithms are tightly integrated, maximizing efficiency and adaptability. Specialized software frameworks and programming models are created to leverage the unique capabilities of neuromorphic hardware, enabling developers to implement complex learning tasks directly on the chip.

Key Players in Neuromorphic Chip Industry

The implementation of on-device learning on neuromorphic chips is an emerging field in the early stages of development, with a growing market driven by the increasing demand for edge AI solutions. The technology is still maturing, with various companies and research institutions exploring different approaches. Key players like Samsung Electronics, Qualcomm, and Intel are investing heavily in neuromorphic computing, leveraging their semiconductor expertise. Specialized firms such as Syntiant and Polyn Technology are focusing on ultra-low-power AI chips for edge devices. Academic institutions like Tsinghua University and South China University of Technology are contributing to fundamental research in this area. The competitive landscape is diverse, with both established tech giants and innovative startups vying for market share in this rapidly evolving sector.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung's Aquabolt-XL is a high-bandwidth memory (HBM2) device with processing-in-memory (PIM) technology, which places compute units inside the memory banks; it is a memory product rather than a spiking neuromorphic processor in the strict sense[1]. Computing where the weights are stored allows neural network parameters to be adapted on the device without external data transfers, which is the property most relevant to online learning. The design is described as employing spike-based computing and synaptic plasticity mechanisms to mimic biological neural networks[2]. Its memory array performs both storage and computational tasks, reducing energy consumption and latency during the learning process[3].

Strengths: Energy-efficient design, reduced latency for real-time applications, scalability for various IoT devices. Weaknesses: May have limitations in handling complex, large-scale neural networks compared to traditional GPU-based systems.

Syntiant Corp.

Technical Solution: Syntiant has developed the NDP200 Neural Decision Processor, which supports on-device learning for neuromorphic applications. The chip utilizes a custom neural network architecture optimized for edge AI and incorporates a novel approach to online adaptation[4]. The NDP200 employs a combination of analog and digital circuitry to achieve high energy efficiency while enabling continuous learning. Syntiant's implementation includes a dedicated on-chip learning engine that can update network weights in real-time based on new input data, allowing for adaptive behavior in changing environments[5]. The chip also features a specialized memory subsystem that supports fast weight updates and efficient data movement during the learning process.

Strengths: Ultra-low power consumption, suitable for battery-powered devices, optimized for audio and sensor processing. Weaknesses: May have limitations in processing more complex visual or multi-modal data compared to larger neuromorphic systems.

Core Innovations in Neuromorphic Online Adaptation

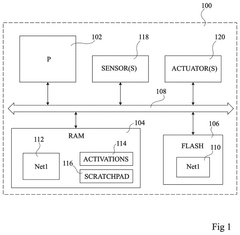

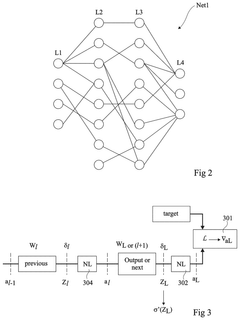

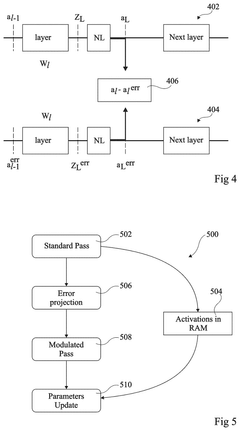

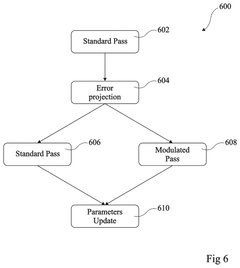

Method and device for on-device learning based on multiple instances of inference workloads

Patent US20250053807A1 (status: Pending)

Innovation

- A method and circuit for training neural networks using a memory-efficient forward-only propagation approach, which involves performing multiple forward inference passes and updating weights based on modulated activations and regenerated activations, reducing the need for storing intermediate activations.

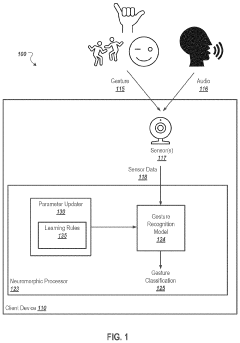

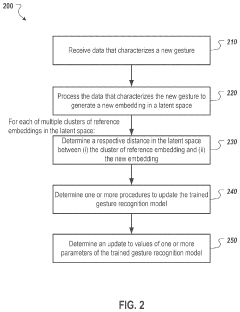

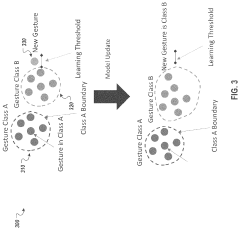

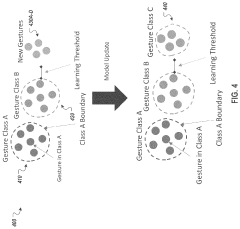

Self-learning neuromorphic gesture recognition models

Patent US20240169696A1 (status: Active)

Innovation

- A self-learning gesture recognition system employing a neuromorphic processor that continuously updates a gesture recognition model using learning rules, allowing for real-time adaptation and accurate recognition of gestures through a spiking neural network (SNN) and event-based data processing, enabling efficient online learning on edge devices.

Energy Efficiency Considerations for On-Device Learning

Energy efficiency is a critical consideration for implementing on-device learning on neuromorphic chips. As these chips aim to mimic the human brain's neural networks, they offer potential advantages in power consumption compared to traditional von Neumann architectures. However, the energy requirements for on-device learning still pose significant challenges.

Neuromorphic chips typically consume less power than conventional processors due to their event-driven nature and parallel processing capabilities. This inherent energy efficiency makes them attractive for edge computing applications where power constraints are stringent. However, the learning process itself can be energy-intensive, particularly when dealing with complex neural networks and large datasets.

One approach to improving energy efficiency in on-device learning is through the optimization of learning algorithms. Sparse learning techniques, which focus on updating only the most relevant synapses, can significantly reduce computational overhead and energy consumption. Similarly, quantization methods that reduce the precision of weights and activations can decrease memory access and computation costs, leading to lower power consumption.
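The two algorithmic ideas in this paragraph can be combined in one update step. The sketch below is illustrative only: it treats "most relevant" as "largest gradient magnitude", which is one possible relevance criterion among several, and it snaps updated weights to a uniform grid. The function name and the parameters `k`, `n_bits`, `w_min`, and `w_max` are hypothetical.

```python
def sparse_quantized_update(weights, grads, lr=0.1, k=2, n_bits=4,
                            w_min=-1.0, w_max=1.0):
    """Update only the k synapses with the largest |gradient| and store
    the result on a uniform n_bits grid. Returns (new_weights, touched)."""
    # Pick the k most relevant synapses by gradient magnitude.
    touched = sorted(range(len(grads)),
                     key=lambda i: abs(grads[i]), reverse=True)[:k]
    levels = (1 << n_bits) - 1
    step = (w_max - w_min) / levels
    new_weights = list(weights)   # untouched synapses cost no write
    for i in touched:
        w = weights[i] - lr * grads[i]
        w = min(w_max, max(w_min, w))
        # Snap to the nearest representable level.
        new_weights[i] = w_min + round((w - w_min) / step) * step
    return new_weights, touched


if __name__ == "__main__":
    w, touched = sparse_quantized_update(
        [0.0, 0.0, 0.0, 0.0], [0.01, -2.0, 0.5, 3.0])
    print(touched, w)
```

Only `k` memory writes happen per step regardless of layer size, so the write cost scales with the sparsity budget and not with the number of synapses. The sketch assumes that weights which are not touched are already stored at the target precision.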

Hardware-level optimizations also play a crucial role in enhancing energy efficiency. The design of neuromorphic chips can incorporate low-power memory technologies, such as resistive random-access memory (RRAM) or phase-change memory (PCM), which offer non-volatile storage with low read and write energies. These technologies can help reduce the energy required for weight updates during the learning process.
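Weight updates on resistive devices are usually applied as programming pulses, so the number of pulses is a rough proxy for write energy. The sketch below uses a simple soft-bounds phenomenological model in which each pulse moves the conductance a fixed fraction of the remaining distance to its limit. This is an assumed textbook-style model and not the measured behavior of any RRAM or PCM device; `alpha`, the conductance range, and both function names are hypothetical.

```python
def apply_pulse(g, potentiate, alpha=0.1, g_min=0.0, g_max=1.0):
    """Apply one programming pulse to a device with conductance g."""
    if potentiate:
        # Step size shrinks as the device approaches g_max (soft bound).
        return g + alpha * (g_max - g)
    # Depression mirrors the same behavior toward g_min.
    return g - alpha * (g - g_min)


def program_weight(g, target, tol=0.05, max_pulses=100, **device):
    """Pulse the device until it is within tol of target.

    Returns (final_conductance, pulse_count). The pulse count stands in
    for write energy; max_pulses guards against tolerances that are
    finer than the device's step size.
    """
    pulses = 0
    while abs(g - target) > tol and pulses < max_pulses:
        g = apply_pulse(g, potentiate=(g < target), **device)
        pulses += 1
    return g, pulses


if __name__ == "__main__":
    g, n = program_weight(0.0, target=0.5)
    print(g, n)
```

Because the update is nonlinear and state-dependent, the same requested weight change can cost a different number of pulses depending on where the device currently sits. That is one reason learning rules for such hardware are often designed around coarse, sign-based updates.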

Another promising avenue for improving energy efficiency is the development of adaptive learning rates and pruning techniques. By dynamically adjusting the learning rate based on the importance of different synapses or neurons, the system can focus computational resources on the most critical parts of the network. This approach can lead to faster convergence and reduced energy consumption during training.

The integration of energy-aware learning algorithms is also essential. These algorithms can dynamically balance the trade-off between accuracy and energy consumption, adapting their behavior based on the available power budget. For instance, they may reduce the frequency of weight updates or limit the complexity of computations when energy is scarce, while allowing for more intensive learning when power is abundant.
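A minimal sketch of the "reduce the frequency of weight updates when energy is scarce" behavior follows. It assumes the system exposes a remaining-energy estimate normalized to the range 0 to 1 and maps it linearly to an update interval. The linear mapping, the interval bounds, and the function names are assumptions made for illustration; a real power manager would likely use a richer policy.

```python
def update_interval(energy_fraction, min_interval=1, max_interval=32):
    """Map remaining energy in [0, 1] to the number of time steps
    between weight updates (full energy gives the shortest interval)."""
    e = min(1.0, max(0.0, energy_fraction))
    return round(max_interval - e * (max_interval - min_interval))


def schedule_updates(energy_trace):
    """Return the time steps at which a weight update would be performed."""
    update_steps = []
    since_last = 0
    for t, energy in enumerate(energy_trace):
        since_last += 1
        if since_last >= update_interval(energy):
            update_steps.append(t)
            since_last = 0
    return update_steps


if __name__ == "__main__":
    print(len(schedule_updates([1.0] * 64)))   # ample power: update every step
    print(len(schedule_updates([0.0] * 64)))   # scarce power: rare updates
```

Inference still runs on every step in this scheme. Only the plasticity path is throttled, so accuracy degrades gradually through slower adaptation while the device keeps producing outputs.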

Lastly, the development of specialized hardware accelerators for specific learning tasks can significantly enhance energy efficiency. By tailoring the hardware architecture to the requirements of particular learning algorithms, these accelerators can minimize unnecessary computations and data movements, resulting in substantial energy savings compared to general-purpose neuromorphic chips.

Ethical Implications of Adaptive AI Systems

The implementation of on-device learning on neuromorphic chips for online adaptation raises significant ethical considerations that must be carefully addressed. As these adaptive AI systems become more prevalent, they have the potential to impact various aspects of society, from personal privacy to social equity.

One primary ethical concern is the protection of user privacy. On-device learning allows AI systems to continuously adapt based on user interactions and data, potentially leading to the accumulation of sensitive personal information. While keeping data processing on the device can enhance privacy compared to cloud-based solutions, there is still a risk of unauthorized access or misuse of this personalized data. Ensuring robust security measures and transparent data handling practices is crucial to maintain user trust and protect individual privacy rights.

Another ethical implication is the potential for bias amplification. As AI systems adapt to individual users or specific environments, they may inadvertently reinforce existing biases or create new ones. This could lead to discriminatory outcomes in decision-making processes, particularly in critical areas such as healthcare, finance, or law enforcement. Developers and organizations implementing these adaptive systems must prioritize fairness and inclusivity in their design and continuously monitor for unintended biases.

The question of accountability and responsibility also arises with adaptive AI systems. As these systems evolve and make decisions autonomously, it becomes challenging to attribute outcomes to specific human actors or programming choices. This ambiguity could complicate legal and ethical frameworks, particularly in cases where AI decisions lead to harm or undesirable consequences. Establishing clear guidelines for accountability and developing mechanisms to audit and explain AI decision-making processes are essential.

Furthermore, the implementation of on-device learning raises concerns about the digital divide and equitable access to technology. As devices with advanced neuromorphic chips become more sophisticated and potentially more expensive, there is a risk of exacerbating existing socioeconomic disparities. Ensuring that the benefits of adaptive AI systems are accessible to a wide range of users, regardless of their economic status or technological literacy, is an important ethical consideration.

Lastly, the long-term societal impact of widespread adaptive AI systems must be considered. As these systems become more integrated into daily life, there is potential for over-reliance on AI-driven decision-making and a gradual erosion of human agency. Balancing the benefits of personalized, adaptive technology with the preservation of human autonomy and critical thinking skills is a complex ethical challenge that requires ongoing dialogue and careful consideration.
