How to Use Neuromorphic Chips for Event-Based Vision: Pipelines and Algorithms

AUG 20, 2025 · 9 MIN READ

Neuromorphic Vision Background and Objectives

Neuromorphic vision represents a paradigm shift in visual processing, drawing inspiration from the human brain's neural architecture. This approach aims to overcome limitations of traditional computer vision systems by mimicking the efficiency and adaptability of biological visual systems. The field has evolved significantly since its inception in the late 1980s, driven by advancements in neuroscience, microelectronics, and artificial intelligence.

The primary objective of neuromorphic vision is to develop systems that can process visual information with high speed, low power consumption, and robust performance in dynamic environments. This aligns with the broader goals of neuromorphic computing, which seeks to create hardware and algorithms that emulate the brain's information processing capabilities.

Event-based vision, a key component of neuromorphic vision, represents a fundamental shift from frame-based image processing. Instead of capturing and processing entire images at fixed intervals, event-based sensors detect and transmit only local pixel-level changes in brightness. This approach drastically reduces data redundancy and power consumption while enabling microsecond-level temporal resolution.
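
As a rough illustration of the change-detection principle described above, the following Python sketch converts a sequence of ordinary frames into events. It is a simplified model, not how any particular sensor is implemented: the function name `frames_to_events`, the contrast `threshold`, and the limit of one event per pixel per frame are all assumptions made for this example.

```python
import numpy as np

def frames_to_events(frames, timestamps, threshold=0.2, eps=1e-3):
    """Emit (t, x, y, polarity) events where log-brightness changed by >= threshold.

    Simplified model: at most one event per pixel per frame, whereas a real
    event sensor works asynchronously and independently at each pixel.
    """
    log_ref = np.log(frames[0].astype(np.float64) + eps)  # per-pixel reference level
    events = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        log_now = np.log(frame.astype(np.float64) + eps)
        diff = log_now - log_ref
        ys, xs = np.nonzero(np.abs(diff) >= threshold)
        for y, x in zip(ys, xs):
            polarity = 1 if diff[y, x] > 0 else -1
            events.append((t, int(x), int(y), polarity))
            log_ref[y, x] = log_now[y, x]  # reset the reference only where an event fired
    return events

# Usage: a static 4x4 scene in which one pixel brightens in the second frame.
frames = np.full((3, 4, 4), 100.0)
frames[1, 1, 2] = 200.0
frames[2, 1, 2] = 200.0
events = frames_to_events(frames, timestamps=[0, 1000, 2000])
print(events)  # a single ON event at x=2, y=1; the unchanged pixels produce nothing
```

The static pixels generate no output at all, which is where the reduction in data redundancy comes from.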

The development of neuromorphic chips specifically designed for event-based vision has been a crucial technological advancement. These chips integrate sensing and processing capabilities, allowing for real-time, low-latency visual information processing. The goal is to create systems that can perform complex visual tasks with the efficiency and adaptability observed in biological systems.

Current research in neuromorphic vision focuses on several key objectives. These include improving the spatial and temporal resolution of event-based sensors, developing more efficient and scalable neuromorphic architectures, and creating algorithms that can effectively process and interpret event-based data. Additionally, there is a strong emphasis on bridging the gap between neuromorphic hardware and practical applications in fields such as robotics, autonomous vehicles, and surveillance systems.

Another important objective is to enhance the integration of neuromorphic vision systems with other sensory modalities and cognitive functions. This multi-modal approach aims to create more comprehensive and robust perception systems that can operate effectively in complex, real-world environments.

As the field progresses, researchers are also exploring ways to leverage neuromorphic vision for advanced machine learning tasks. This includes developing neuromorphic implementations of deep learning algorithms and investigating novel learning paradigms inspired by biological visual systems. The ultimate goal is to create artificial visual systems that not only match but potentially surpass human visual capabilities in specific tasks, while maintaining energy efficiency and adaptability.

Market Analysis for Event-Based Vision Systems

The event-based vision systems market is experiencing significant growth, driven by the increasing demand for high-speed, low-latency visual processing in various applications. This market segment is closely tied to the development and adoption of neuromorphic chips, which are specifically designed to handle event-based data efficiently.

The global market for event-based vision systems is projected to expand rapidly in the coming years, with key applications in autonomous vehicles, robotics, industrial automation, and surveillance. The automotive sector, in particular, is expected to be a major driver of growth, as event-based vision systems offer superior performance in challenging lighting conditions and high-speed scenarios compared to traditional frame-based cameras.

In the industrial automation sector, event-based vision systems are gaining traction for quality control and high-speed manufacturing processes. These systems can detect rapid changes and anomalies more effectively than conventional vision systems, leading to improved production efficiency and reduced errors.

The robotics industry is another significant market for event-based vision, with applications in navigation, object recognition, and human-robot interaction. The low latency and high dynamic range of event-based sensors make them particularly suitable for fast-moving robots and drones.

Geographically, North America and Europe are currently leading the market due to their strong technological infrastructure and early adoption of advanced vision technologies. However, the Asia-Pacific region is expected to show the highest growth rate in the coming years, driven by increasing investments in automation and robotics in countries like China, Japan, and South Korea.

Key players in the event-based vision market include established sensor manufacturers, neuromorphic chip developers, and specialized startups. These companies are focusing on improving sensor resolution, reducing power consumption, and developing more efficient algorithms for processing event-based data.

Despite the promising outlook, the market faces challenges such as the need for standardization, limited awareness among potential end-users, and the current higher cost compared to traditional vision systems. However, as technology advances and production scales up, these barriers are expected to diminish, leading to wider adoption across various industries.

In conclusion, the market for event-based vision systems shows strong growth potential, driven by the unique advantages offered by neuromorphic computing in handling dynamic visual information. As the technology matures and finds more applications, it is poised to become a significant segment within the broader computer vision and sensor market.

Current State of Neuromorphic Chip Technology

Neuromorphic chip technology has made significant strides in recent years, particularly in the realm of event-based vision processing. These chips, designed to mimic the structure and function of biological neural networks, offer unique advantages in processing visual information efficiently and in real-time.

Currently, several major players in the semiconductor industry are actively developing and refining neuromorphic chip technologies. Companies like IBM, Intel, and BrainChip have made notable advancements, with each offering distinct approaches to neuromorphic computing. IBM's TrueNorth chip, for instance, has demonstrated impressive capabilities in pattern recognition and sensory processing tasks.

The state-of-the-art neuromorphic chips for event-based vision typically feature high-density arrays of artificial neurons and synapses, capable of processing visual information in a manner similar to the human visual cortex. These chips excel in tasks that require rapid, low-power processing of dynamic visual scenes, such as object tracking, motion detection, and gesture recognition.

One of the key strengths of current neuromorphic chip technology is its ability to process event-based data from sensors like Dynamic Vision Sensors (DVS). Unlike traditional frame-based cameras, DVS capture changes in the visual scene asynchronously, resulting in sparse, temporally precise data. Neuromorphic chips are inherently well-suited to process this type of information, offering significant advantages in terms of power efficiency and processing speed.
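
To make the sparse, time-stamped nature of DVS output more concrete, here is a minimal sketch of one way an event stream could be stored and summarized in software. The record layout `EVENT_DTYPE` and the helper `accumulate` are hypothetical names chosen for this example; actual sensors and SDKs define their own formats.

```python
import numpy as np

# Hypothetical event record: timestamp in microseconds, pixel coordinates, polarity (+1/-1).
EVENT_DTYPE = np.dtype([("t", np.int64), ("x", np.int16), ("y", np.int16), ("p", np.int8)])

def accumulate(events, width, height, t_start, t_end):
    """Sum event polarities per pixel over the half-open window [t_start, t_end)."""
    mask = (events["t"] >= t_start) & (events["t"] < t_end)
    window = events[mask]
    image = np.zeros((height, width), dtype=np.int32)
    # np.add.at accumulates correctly when the same pixel appears more than once.
    np.add.at(image, (window["y"], window["x"]), window["p"])
    return image

events = np.array(
    [(10, 0, 0, 1), (20, 0, 0, 1), (30, 1, 2, -1), (5000, 3, 3, 1)],
    dtype=EVENT_DTYPE,
)
image = accumulate(events, width=4, height=4, t_start=0, t_end=1000)
print(image)
```

Accumulating events into an image like this is convenient for visualization, but it discards the fine timing that event-driven processors are meant to exploit, so it is best treated as a debugging aid rather than a processing strategy.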

However, the technology still faces several challenges. Integration with conventional computing systems remains complex, and there is a need for more standardized development tools and programming paradigms. Additionally, scaling up the number of neurons and synapses while maintaining energy efficiency is an ongoing area of research.

Recent advancements have focused on improving the scalability and versatility of neuromorphic chips. Some researchers are exploring 3D chip architectures to increase neuron density, while others are developing hybrid systems that combine neuromorphic elements with traditional digital processors.

In the context of event-based vision, current neuromorphic chips are being optimized for specific visual processing tasks. For example, some chips are designed to excel at edge detection and feature extraction, crucial for real-time object recognition in dynamic environments. Others are tailored for temporal pattern recognition, enabling applications in gesture control and human-machine interfaces.

The integration of on-chip learning capabilities is another significant trend in neuromorphic chip development. This allows the chips to adapt and improve their performance over time, much like biological neural networks. Such capabilities are particularly valuable for event-based vision applications in dynamic, unpredictable environments.
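
One widely studied local learning rule that suits this kind of on-chip adaptation is spike-timing-dependent plasticity (STDP). The source does not say which rule any given chip uses, so the sketch below is only an assumed example; the function name `stdp_update` and all parameter values are illustrative.

```python
import math

def stdp_update(weight, t_pre, t_post, a_plus=0.01, a_minus=0.012,
                tau_plus=20.0, tau_minus=20.0, w_min=0.0, w_max=1.0):
    """Pair-based STDP: strengthen if the pre spike precedes the post spike, weaken otherwise.

    Times are in milliseconds; all constants are illustrative assumptions.
    """
    dt = t_post - t_pre
    if dt > 0:      # pre before post: potentiation, decaying with the time gap
        weight += a_plus * math.exp(-dt / tau_plus)
    elif dt < 0:    # post before pre: depression, decaying with the time gap
        weight -= a_minus * math.exp(dt / tau_minus)
    return min(w_max, max(w_min, weight))  # keep the weight inside its allowed range

w = 0.5
w = stdp_update(w, t_pre=10.0, t_post=15.0)   # causal pairing increases the weight
w = stdp_update(w, t_pre=30.0, t_post=22.0)   # anti-causal pairing decreases it
print(w)
```

Because the update depends only on the two spike times and the current weight, it can in principle be computed next to the synapse, without moving data to a separate training system.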

Existing Pipelines for Event-Based Vision

01 Event-based vision sensors for neuromorphic chips

Neuromorphic chips incorporate event-based vision sensors that mimic the human visual system. These sensors capture changes in the visual scene asynchronously, reducing data redundancy and power consumption. This approach enables efficient processing of visual information in real-time, making it suitable for applications such as autonomous vehicles and robotics.

- Spiking neural networks for event-based vision processing: Neuromorphic chips utilize spiking neural networks to process event-based vision data. These networks are designed to handle sparse, asynchronous inputs from event-based sensors, enabling efficient and low-latency processing of visual information. This approach allows for more natural and biologically inspired computation in artificial vision systems.

- Hardware acceleration for event-based vision algorithms: Neuromorphic chips incorporate specialized hardware accelerators designed to optimize the processing of event-based vision algorithms. These accelerators are tailored to handle the unique characteristics of event-based data, enabling faster and more energy-efficient computation compared to traditional vision processing systems.

- Integration of event-based vision with other neuromorphic components: Neuromorphic chips combine event-based vision processing with other neuromorphic components, such as memory and learning modules. This integration allows for more comprehensive and adaptable artificial intelligence systems that can learn and process visual information in a manner similar to biological systems.

- Low-power event-based vision processing for edge devices: Neuromorphic chips with event-based vision capabilities are designed for low-power operation, making them suitable for edge computing devices. This enables the implementation of advanced vision processing capabilities in resource-constrained environments, such as IoT devices and wearable technology, while maintaining energy efficiency.

02 Spiking neural networks for event-based vision processing

Neuromorphic chips implement spiking neural networks to process event-based vision data. These networks use sparse, temporal coding to efficiently represent and process visual information. This approach allows for low-latency, energy-efficient processing of dynamic visual scenes, making it ideal for applications requiring real-time decision-making.

03 Hardware acceleration for event-based vision algorithms

Neuromorphic chips feature specialized hardware accelerators designed to optimize the execution of event-based vision algorithms. These accelerators enable parallel processing of sparse, asynchronous visual data, significantly improving performance and energy efficiency compared to traditional computer vision approaches on conventional hardware.

04 On-chip learning and adaptation for event-based vision

Advanced neuromorphic chips incorporate on-chip learning capabilities for event-based vision systems. This allows the chip to adapt to changing visual environments and improve its performance over time. The ability to learn and update neural network parameters directly on the chip reduces the need for frequent retraining and enables more autonomous operation in dynamic scenarios.

05 Integration of event-based vision with other sensor modalities

Neuromorphic chips designed for event-based vision often integrate multiple sensor modalities, such as inertial measurement units or audio sensors. This multi-modal approach enables more robust and context-aware perception, enhancing the chip's ability to interpret complex environments and make informed decisions based on diverse sensory inputs.
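
To make the spiking-network stage of these pipelines more tangible, the sketch below models a single leaky integrate-and-fire (LIF) neuron that is updated only when an input event arrives. This is a textbook neuron model used here as an assumption; the class name `LIFNeuron`, the time constant, and the threshold are illustrative and do not describe any specific chip.

```python
import math

class LIFNeuron:
    """Event-driven leaky integrate-and-fire neuron (illustrative parameters)."""

    def __init__(self, tau=10_000.0, threshold=1.0):
        self.tau = tau              # membrane time constant in microseconds
        self.threshold = threshold  # firing threshold
        self.v = 0.0                # membrane potential
        self.last_t = None          # time of the previous input event

    def receive(self, t, weight):
        """Process one input spike at time t; return True if the neuron fires."""
        if self.last_t is not None:
            # Apply the leak for the time elapsed since the last input.
            self.v *= math.exp(-(t - self.last_t) / self.tau)
        self.last_t = t
        self.v += weight
        if self.v >= self.threshold:
            self.v = 0.0            # reset after firing
            return True
        return False

neuron = LIFNeuron()
# Three closely spaced inputs make the neuron fire; a late, isolated input does not.
print([neuron.receive(t, 0.4) for t in (0, 100, 200, 50_000)])
```

No computation happens between input events: the leak is applied lazily when the next event arrives, which mirrors the event-driven behaviour described in this section.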

Core Innovations in Neuromorphic Algorithms

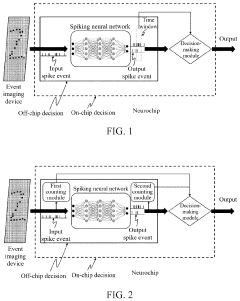

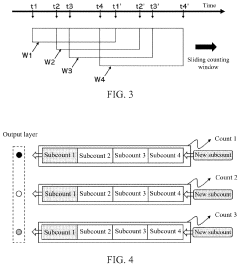

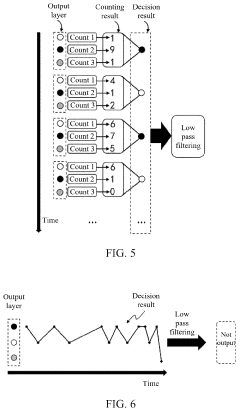

Spike event decision-making device, method, chip and electronic device

Patent (pending): US20240086690A1

Innovation

- A spike event decision-making device and method that utilizes counting modules to determine decision-making results based on the number of spike events fired by neurons in a spiking neural network, allowing for adaptive decision-making without fixed time windows, and incorporating sub-counters to improve reliability and accuracy by considering transition rates and occurrence ratios.
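
The general idea of deciding from spike counts rather than from a fixed time window can be sketched loosely as follows. This is not the patented method: the lead-based stopping rule, the `margin` parameter, and the function name `decide_by_spike_count` are assumptions made for illustration, and the sub-counters, transition rates, and occurrence ratios mentioned above are not modelled.

```python
from collections import Counter

def decide_by_spike_count(spikes, margin=3):
    """Decide as soon as one output neuron leads every other by `margin` spikes.

    spikes: iterable of output-neuron ids in firing order.
    Returns (winner, spikes_seen), or (None, spikes_seen) if no neuron ever
    builds a sufficient lead.
    """
    counts = Counter()
    seen = 0
    for neuron_id in spikes:
        counts[neuron_id] += 1
        seen += 1
        ranked = counts.most_common(2)
        leader, top = ranked[0]
        runner_up = ranked[1][1] if len(ranked) > 1 else 0
        if top - runner_up >= margin:
            return leader, seen   # decision time adapts to the evidence received
    return None, seen

print(decide_by_spike_count([0, 1, 0, 0, 1, 0, 0]))
print(decide_by_spike_count([0, 1, 0, 1]))
```

With this kind of rule, an unambiguous input is resolved after a few spikes, while an ambiguous one simply takes longer, so no window length has to be chosen in advance.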

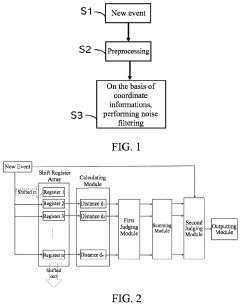

Noise filtering for dynamic vision sensor

Patent (active): US20240064422A1

Innovation

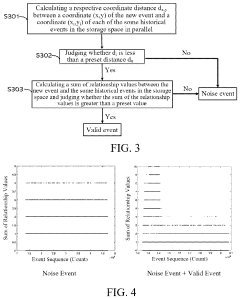

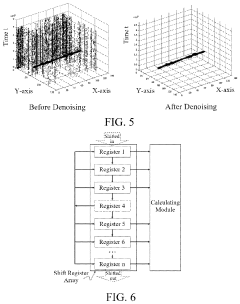

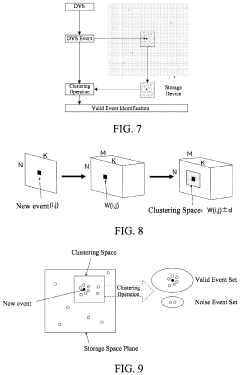

- A denoising device that calculates coordinate distances between new and historical events, judges validity based on relationship values, and filters out noise events using a combination of storage and processing modules, including a shift register array and band-pass filtering, to differentiate between valid and noise events.
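
A much simpler relative of this idea is a neighbour-support filter, which keeps an event only if a recent event occurred close to it. The sketch below is an assumed software analogue, not the patented design: a bounded `deque` stands in for the shift register array, the distance and time limits are illustrative, and the band-pass stage is omitted.

```python
from collections import deque

def filter_noise(events, history_size=8, max_dist=1, max_dt=5_000):
    """Keep events that have a recent, nearby event in a short history buffer.

    events: iterable of (t, x, y) tuples sorted by timestamp (microseconds).
    """
    history = deque(maxlen=history_size)  # oldest entries drop out automatically
    kept = []
    for t, x, y in events:
        supported = any(
            t - ht <= max_dt and max(abs(x - hx), abs(y - hy)) <= max_dist
            for ht, hx, hy in history
        )
        if supported:
            kept.append((t, x, y))
        history.append((t, x, y))  # every event enters the history, valid or not
    return kept

stream = [(0, 5, 5), (100, 6, 5), (200, 20, 20), (300, 5, 6), (90_000, 5, 5)]
print(filter_noise(stream))
```

In this simplified form the first event of any new structure is always discarded because it has no predecessor, which is a known trade-off of neighbour-based filtering.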

Energy Efficiency Considerations

Energy efficiency is a critical consideration in the development and implementation of neuromorphic chips for event-based vision systems. These chips, designed to mimic the human brain's neural networks, offer significant advantages in terms of power consumption compared to traditional computing architectures. The event-driven nature of neuromorphic systems aligns well with the sparse and asynchronous characteristics of event-based vision, leading to substantial energy savings.

One of the primary energy efficiency benefits of neuromorphic chips in event-based vision lies in their ability to process information only when relevant events occur. Unlike conventional frame-based systems that continuously process data, event-based vision systems respond solely to changes in the visual scene. This approach dramatically reduces the amount of data that needs to be processed, thereby lowering power consumption.
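
A back-of-the-envelope comparison shows how large the data reduction can be. The resolution, frame rate, and event rate below are hypothetical values picked for the example; real event rates depend heavily on scene activity, and a very busy scene can erase the advantage.

```python
def frame_pixel_rate(width, height, fps):
    """Pixel values a frame-based camera delivers per second."""
    return width * height * fps

def reduction_factor(width, height, fps, event_rate):
    """Ratio of frame-based pixel throughput to an assumed event rate."""
    return frame_pixel_rate(width, height, fps) / event_rate

# Hypothetical comparison: a 640x480 camera at 30 fps versus a sensor
# producing 300,000 events per second in a moderately active scene.
pixels_per_second = frame_pixel_rate(640, 480, 30)
factor = reduction_factor(640, 480, 30, event_rate=300_000)
print(pixels_per_second, round(factor, 2))
```

This counts data items only. It is not an energy measurement, since the cost of handling one event differs from the cost of handling one pixel.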

Neuromorphic architectures also leverage parallel processing capabilities, allowing for efficient distribution of computational tasks. This parallelism enables the system to handle multiple events simultaneously, further enhancing energy efficiency. Additionally, the use of spiking neural networks in neuromorphic chips allows for sparse and efficient information encoding, reducing the overall energy required for data transmission and processing.

The design of neuromorphic chips often incorporates low-power analog circuits and mixed-signal processing elements. These components contribute to reduced power consumption compared to traditional digital circuits. Furthermore, the ability to perform in-memory computing in neuromorphic architectures minimizes data movement between memory and processing units, a significant source of energy consumption in conventional computing systems.

When implementing event-based vision pipelines and algorithms on neuromorphic chips, developers must carefully consider energy-efficient coding practices. This includes optimizing algorithms to minimize unnecessary computations and leveraging the inherent sparsity of event data. Techniques such as event filtering, clustering, and adaptive thresholding can be employed to reduce the computational load while maintaining system performance.

As the field of neuromorphic computing advances, researchers are exploring novel materials and device technologies to further enhance energy efficiency. These include the development of memristive devices and other emerging non-volatile memory technologies that can enable ultra-low-power operation in neuromorphic systems. Such advancements hold the potential to push the boundaries of energy efficiency in event-based vision applications.

In conclusion, the energy efficiency considerations of neuromorphic chips for event-based vision are multifaceted, encompassing hardware design, algorithmic optimization, and emerging technologies. By leveraging these aspects, developers can create highly efficient vision systems that offer significant advantages in power consumption, making them ideal for a wide range of applications, from mobile and embedded devices to large-scale vision processing systems.

Neuromorphic Hardware-Software Co-design

Neuromorphic hardware-software co-design is a crucial approach for optimizing event-based vision systems using neuromorphic chips. This methodology involves the simultaneous development of hardware architectures and software algorithms, ensuring seamless integration and maximum efficiency. In the context of event-based vision, this co-design process focuses on leveraging the unique characteristics of neuromorphic chips to process sparse, asynchronous visual data effectively.

The hardware aspect of co-design involves tailoring neuromorphic chip architectures to efficiently handle event-based data streams. This includes optimizing the chip's neuron and synapse models, implementing specialized event-driven processing units, and designing low-latency communication pathways. These hardware optimizations enable rapid and energy-efficient processing of visual events, mimicking the parallel and asynchronous nature of biological visual systems.

On the software side, co-design efforts concentrate on developing algorithms and processing pipelines that exploit the inherent advantages of neuromorphic hardware. This involves creating event-driven algorithms that operate directly on sparse, time-stamped visual events rather than traditional frame-based representations. Key software components include event filtering, feature extraction, and object recognition algorithms specifically designed to work with the neuromorphic hardware's unique processing paradigm.
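
One common representation that works directly on time-stamped events is a time surface, which records how recently each pixel was active. The sketch below is a minimal version under assumed conventions: the function name `time_surface`, the decay constant `tau`, and the `(t, x, y, p)` tuple layout are illustrative.

```python
import numpy as np

def time_surface(events, width, height, t_now, tau=50_000.0):
    """Exponentially decayed map of the most recent event time at each pixel.

    events: iterable of (t, x, y, p) tuples with timestamps in microseconds.
    Returns values in [0, 1]: 1 for a pixel active at t_now, 0 for a pixel
    that has never fired.
    """
    last_t = np.full((height, width), -np.inf)
    for t, x, y, _p in events:
        if t <= t_now:  # ignore events from the future of the query time
            last_t[y, x] = max(last_t[y, x], t)
    return np.exp((last_t - t_now) / tau)  # exp(-inf) evaluates to 0

events = [(0, 1, 1, 1), (50_000, 2, 2, -1)]
surface = time_surface(events, width=4, height=4, t_now=50_000)
print(surface.round(3))
```

Features such as edges and corners can then be extracted from local patches of this surface, which keeps timing information that a plain event count per pixel would lose.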

The co-design process also addresses the challenges of mapping event-based vision algorithms onto neuromorphic hardware. This includes developing efficient data structures and memory management techniques to handle the asynchronous nature of event data. Additionally, co-design efforts focus on optimizing the distribution of computational tasks between neuromorphic cores and conventional processors, ensuring optimal utilization of available resources.
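
As one simple illustration of distributing work across cores, events can be routed by image tile so that each core handles a fixed region of the sensor. The tiling scheme and the names `core_for_event` and `partition_events` are hypothetical; real toolchains decide placement using hardware-specific constraints that are not modelled here.

```python
def core_for_event(x, y, width, height, tiles_x, tiles_y):
    """Map a pixel coordinate to a core index using a regular grid of tiles."""
    tile_w = -(-width // tiles_x)    # ceiling division so the tiles cover the sensor
    tile_h = -(-height // tiles_y)
    return (y // tile_h) * tiles_x + (x // tile_w)

def partition_events(events, width, height, tiles_x, tiles_y):
    """Group (t, x, y, p) events into one list per core, preserving time order."""
    buckets = {core: [] for core in range(tiles_x * tiles_y)}
    for event in events:
        _t, x, y, _p = event
        buckets[core_for_event(x, y, width, height, tiles_x, tiles_y)].append(event)
    return buckets

events = [(10, 0, 0, 1), (20, 639, 479, -1), (30, 160, 0, 1), (40, 5, 3, 1)]
buckets = partition_events(events, width=640, height=480, tiles_x=4, tiles_y=2)
print({core: len(evts) for core, evts in buckets.items() if evts})
```

A fixed tiling is easy to reason about but can load cores unevenly when activity is concentrated in one part of the scene, which is one reason task distribution is treated as a co-design problem.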

One critical aspect of neuromorphic hardware-software co-design is the development of simulation and emulation tools. These tools allow researchers and developers to prototype and evaluate different hardware-software configurations before committing to physical chip designs. Such simulations help identify potential bottlenecks, optimize resource allocation, and refine algorithms for improved performance on neuromorphic platforms.

The co-design approach also emphasizes the importance of scalability and adaptability. As neuromorphic chip technologies evolve, software frameworks must be designed to accommodate new hardware features and capabilities. This flexibility ensures that event-based vision systems can leverage advancements in neuromorphic computing without requiring complete redesigns of existing software pipelines.
