Neuromorphic Benchmark Suite: Proposed Tests for Fair Comparison (proposal + code)

AUG 20, 2025 · 8 MIN READ

Neuromorphic Benchmarking Evolution and Objectives

Neuromorphic computing has evolved significantly since its inception, driven by the goal of emulating the human brain's efficiency and adaptability in artificial systems. The field's objectives have shifted from simple neural network implementations to creating complex, brain-inspired architectures capable of cognitive tasks. Initially, the focus was on developing hardware that could mimic basic neuronal functions, but as technology advanced, the aims expanded to include energy efficiency, scalability, and real-time learning capabilities.

The evolution of neuromorphic benchmarking reflects the field's maturation. Early benchmarks primarily assessed the speed and accuracy of simple pattern recognition tasks. However, as neuromorphic systems grew more sophisticated, the need for more comprehensive and standardized evaluation methods became apparent. This led to the development of benchmarks that could assess a wider range of cognitive functions, including learning, memory, and decision-making processes.

The objectives of neuromorphic benchmarking have also expanded over time. While performance metrics remain crucial, modern benchmarks aim to evaluate additional aspects such as energy efficiency, adaptability to new tasks, and robustness in noisy environments. These objectives align with the broader goals of neuromorphic computing: to create systems that not only perform well but also exhibit the flexibility and efficiency of biological neural networks.

The proposed Neuromorphic Benchmark Suite represents a significant step forward in this evolution. By offering a standardized set of tests for fair comparison, it addresses a critical need in the field. The suite's objectives likely include providing a comprehensive evaluation of neuromorphic systems across various dimensions, enabling researchers and developers to assess and compare different architectures objectively.

Furthermore, the inclusion of both a proposal and code in the benchmark suite suggests a practical approach to implementation. This combination aims to bridge the gap between theoretical benchmarking concepts and their practical application, ensuring that the tests are not only well-defined but also readily executable across different neuromorphic platforms.

As the field continues to advance, the objectives of neuromorphic benchmarking are expected to evolve further. Future benchmarks may incorporate tests for more complex cognitive functions, assess the ability of systems to generalize across diverse tasks, and evaluate their potential for integration with conventional computing paradigms. The ongoing refinement of benchmarking objectives will play a crucial role in guiding the development of next-generation neuromorphic systems, pushing the boundaries of what these brain-inspired architectures can achieve.

Market Demand for Standardized Neuromorphic Testing

The market demand for standardized neuromorphic testing is rapidly growing as the field of neuromorphic computing continues to advance. This demand is driven by several key factors in the industry. Firstly, as more companies and research institutions develop neuromorphic hardware and software solutions, there is an increasing need for fair and consistent methods to evaluate and compare these different systems. Without standardized benchmarks, it becomes challenging to assess the relative performance, efficiency, and capabilities of various neuromorphic implementations.

Secondly, investors and potential adopters of neuromorphic technologies require reliable metrics to make informed decisions. Standardized testing suites provide a common language and set of criteria that can be used to evaluate the potential of different neuromorphic solutions, helping to guide investment and adoption strategies. This is particularly important as the neuromorphic computing market is expected to grow significantly in the coming years, with some estimates projecting a compound annual growth rate of over 20% through 2026.

Furthermore, the demand for standardized testing is fueled by the diverse range of applications for neuromorphic computing. From edge computing devices to large-scale AI systems, neuromorphic technologies are being explored for use in autonomous vehicles, robotics, natural language processing, and more. Each of these application domains has unique requirements and performance metrics, necessitating a comprehensive suite of benchmarks that can evaluate neuromorphic systems across various use cases.

The academic and research communities also contribute to the demand for standardized testing. As neuromorphic computing becomes a more prominent field of study, researchers require consistent methods to validate their work and compare results across different institutions and approaches. Standardized benchmarks facilitate reproducibility in research and accelerate the pace of innovation by providing clear targets for improvement.

Additionally, as neuromorphic computing moves closer to commercial viability, industry standards bodies and regulatory agencies are beginning to take notice. There is a growing recognition of the need for established testing methodologies to ensure the reliability, safety, and performance of neuromorphic systems, particularly in critical applications such as healthcare and autonomous systems. This regulatory interest further drives the demand for comprehensive and widely accepted neuromorphic benchmarks.

Current Challenges in Neuromorphic Benchmarking

Neuromorphic benchmarking faces several significant challenges in the current landscape. One of the primary issues is the lack of standardization across different neuromorphic hardware platforms and algorithms. This diversity makes it difficult to establish a common ground for fair comparisons, as each system may have unique architectures, processing capabilities, and energy consumption profiles.

Another challenge lies in the complexity of neuromorphic systems, which often integrate various components such as sensors, processing units, and memory elements. This intricate nature makes it challenging to isolate and measure specific performance metrics accurately. Furthermore, the dynamic and adaptive nature of neuromorphic systems adds another layer of complexity to benchmarking efforts, as their performance can change over time or in response to different inputs.
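Because performance can drift over time or vary with the input, a single measurement says little on its own. One common way to handle this is to repeat each test and report the spread alongside the mean. The sketch below illustrates that idea only; the `TrialSummary` type, the `summarize_trials` helper, and the accuracy values are hypothetical and are not part of any published suite.

```python
import statistics
from dataclasses import dataclass


@dataclass
class TrialSummary:
    """Summary of one metric over repeated runs (hypothetical structure)."""
    mean: float
    stdev: float
    minimum: float
    maximum: float
    n: int


def summarize_trials(values):
    """Summarize one metric measured over repeated benchmark runs."""
    if len(values) < 2:
        raise ValueError("need at least two runs to estimate spread")
    return TrialSummary(
        mean=statistics.fmean(values),
        stdev=statistics.stdev(values),  # sample standard deviation
        minimum=min(values),
        maximum=max(values),
        n=len(values),
    )


# Made-up accuracy values from five runs of the same task.
accuracies = [0.91, 0.93, 0.90, 0.94, 0.92]
summary = summarize_trials(accuracies)
print(f"accuracy = {summary.mean:.3f} +/- {summary.stdev:.3f} (n={summary.n})")
```

Reporting the minimum and maximum next to the standard deviation makes it easier to spot a system whose behavior changes between runs.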

The absence of widely accepted benchmark tasks specifically designed for neuromorphic systems is also a significant hurdle. While traditional computer benchmarks exist, they may not adequately capture the unique characteristics and potential advantages of neuromorphic computing. This gap necessitates the development of new benchmark suites that can effectively evaluate neuromorphic systems' capabilities in areas such as pattern recognition, real-time processing, and energy efficiency.

Scalability presents another challenge in neuromorphic benchmarking. As neuromorphic systems continue to grow in size and complexity, ensuring that benchmarks remain relevant and applicable across different scales becomes increasingly difficult. This scalability issue is particularly crucial when comparing systems of vastly different sizes or when evaluating the potential for future large-scale neuromorphic implementations.

Moreover, the interdisciplinary nature of neuromorphic computing, which spans neuroscience, computer science, and electrical engineering, complicates the benchmarking process. Developing comprehensive benchmarks that address all relevant aspects of neuromorphic systems requires expertise from multiple domains, making it challenging to create universally accepted testing methodologies.

Lastly, the rapid pace of advancement in neuromorphic computing poses a challenge for benchmarking efforts. As new hardware architectures and algorithms emerge, benchmarks must evolve to remain relevant and capture the latest innovations in the field. This constant evolution necessitates ongoing collaboration and consensus-building within the neuromorphic research community to maintain up-to-date and meaningful benchmarking standards.

Existing Neuromorphic Benchmark Suites and Methodologies

01 Standardized benchmarking for neuromorphic systems

Developing standardized benchmark suites for fair comparison of neuromorphic computing systems. These suites include diverse tasks and metrics to evaluate performance, energy efficiency, and scalability across different neuromorphic architectures.

- Standardized benchmarking for neuromorphic systems: Developing standardized benchmark suites for fair comparison of neuromorphic systems. These suites include diverse tasks and metrics to evaluate performance, energy efficiency, and scalability across different neuromorphic architectures.

- Performance evaluation of spiking neural networks: Creating specific benchmarks for spiking neural networks, focusing on their unique characteristics such as temporal dynamics and event-driven processing. These benchmarks assess accuracy, latency, and energy consumption in neuromorphic hardware implementations.

- Comparative analysis of neuromorphic hardware platforms: Establishing methods for fair comparison between different neuromorphic hardware platforms, considering factors like architecture, processing capabilities, and power consumption. This enables objective evaluation of various neuromorphic computing solutions.

- Benchmark datasets for neuromorphic applications: Curating and developing specialized datasets tailored for neuromorphic computing applications. These datasets cover various domains such as computer vision, speech recognition, and robotics, allowing for comprehensive evaluation of neuromorphic systems across different use cases.

- Metrics for energy efficiency and scalability: Defining and implementing specific metrics to assess the energy efficiency and scalability of neuromorphic systems. These metrics help in comparing different architectures based on their power consumption, throughput, and ability to handle increasing computational demands.
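The energy-efficiency and throughput metrics named in the list above can be derived from a few raw measurements. The following is a minimal sketch under stated assumptions: the `Measurement` fields, the function names, and the numbers are all hypothetical, and per-inference latency is approximated as wall time divided by inference count, which only holds when inputs are processed one after another.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    """Raw quantities recorded during one benchmark run (hypothetical)."""
    avg_power_w: float   # average power draw in watts
    wall_time_s: float   # total run time in seconds
    inferences: int      # number of inputs processed
    synaptic_ops: int    # number of synaptic events processed


def total_energy_j(m):
    """Total energy in joules: average power times elapsed time."""
    return m.avg_power_w * m.wall_time_s


def energy_per_inference_j(m):
    return total_energy_j(m) / m.inferences


def energy_per_synaptic_op_j(m):
    """Normalizing by synaptic events helps compare systems of different size."""
    return total_energy_j(m) / m.synaptic_ops


def throughput_per_s(m):
    return m.inferences / m.wall_time_s


def energy_delay_product(m):
    """Energy per inference times approximate latency (sequential assumption)."""
    latency_s = m.wall_time_s / m.inferences
    return energy_per_inference_j(m) * latency_s


# Made-up example values.
run = Measurement(avg_power_w=0.5, wall_time_s=2.0,
                  inferences=1000, synaptic_ops=2_000_000)
print(f"energy/inference: {energy_per_inference_j(run):.6f} J")
print(f"energy/synaptic op: {energy_per_synaptic_op_j(run):.2e} J")
print(f"throughput: {throughput_per_s(run):.1f} inferences/s")
print(f"energy-delay product: {energy_delay_product(run):.2e} J*s")
```

Normalizing energy by inference and by synaptic operation gives two views: the first reflects task-level cost, the second is less sensitive to differences in network size.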

02 Performance evaluation of spiking neural networks

Creating specific benchmarks for spiking neural networks, focusing on their unique characteristics such as temporal dynamics and event-driven processing. These benchmarks assess accuracy, latency, and energy consumption in neuromorphic hardware implementations.

03 Comparative analysis of neuromorphic hardware platforms

Establishing methods for fair comparison between different neuromorphic hardware platforms, considering factors like architecture, scalability, and programming models. This includes developing platform-agnostic benchmarks and standardized evaluation criteria.

04 Neuromorphic algorithms and software benchmarking

Designing benchmark suites for neuromorphic algorithms and software frameworks, focusing on aspects such as learning capabilities, adaptability, and computational efficiency. These benchmarks help in comparing different algorithmic approaches and software implementations.

05 Real-world application benchmarks for neuromorphic systems

Developing benchmark suites that simulate real-world applications for neuromorphic systems, such as robotics, computer vision, and natural language processing. These benchmarks assess the practical performance and adaptability of neuromorphic systems in complex, dynamic environments.
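To make the spiking-network measurements described above (latency and energy in event-driven processing) more concrete, here is a small sketch of a discrete-time leaky integrate-and-fire neuron. It is an illustration only, not a test from any existing suite: the `simulate_lif` function, its parameters, and the input values are assumptions, first-spike time stands in for latency, and spike count is used as a rough proxy for event-driven energy cost.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9):
    """Simulate one leaky integrate-and-fire neuron in discrete time.

    Returns the list of time steps at which the neuron spiked.
    """
    v = 0.0                      # membrane potential
    spike_times = []
    for t, i_t in enumerate(input_current):
        v = leak * v + i_t       # leak, then integrate the input
        if v >= threshold:       # fire and reset
            spike_times.append(t)
            v = 0.0
    return spike_times


def first_spike_latency(spike_times):
    """Time step of the first spike, or None if the neuron never fired."""
    return spike_times[0] if spike_times else None


# Two made-up constant inputs over 20 time steps.
weak = simulate_lif([0.3] * 20)
strong = simulate_lif([0.6] * 20)

print("weak input:   latency", first_spike_latency(weak), "spikes", len(weak))
print("strong input: latency", first_spike_latency(strong), "spikes", len(strong))
```

The stronger input fires earlier but produces more spikes, which shows why latency and event count are worth reporting together rather than in isolation.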

Key Players in Neuromorphic Hardware and Benchmarking

The competitive landscape for neuromorphic benchmark suites is at an early stage, and the market is still small and developing. The technology is not yet fully mature, but it shows promise for advancing AI and machine learning applications. Key players in this emerging field include IBM, Samsung, and Huawei, which are investing in neuromorphic computing research and development. Academic institutions such as Huazhong University of Science & Technology and Xi'an Jiaotong University are also contributing to advancements. As the technology progresses, closer collaboration between industry and academia is expected, both to establish standardized benchmarks and to drive innovation in neuromorphic computing systems.

International Business Machines Corp.

Technical Solution: IBM has developed a comprehensive Neuromorphic Benchmark Suite for fair comparison of neuromorphic systems. Their approach includes a set of standardized tests that evaluate various aspects of neuromorphic computing, such as energy efficiency, speed, and accuracy. The suite incorporates both synthetic and real-world datasets, covering tasks like image classification, speech recognition, and time series prediction. IBM's benchmark suite utilizes their TrueNorth neuromorphic chip architecture, which emulates the brain's neural networks using a highly parallel, event-driven approach[1][3]. The suite also includes software tools for performance analysis and visualization, enabling researchers to easily compare different neuromorphic implementations[2].

Strengths: Comprehensive evaluation framework, industry-standard datasets, and integration with IBM's advanced neuromorphic hardware. Weaknesses: Potential bias towards IBM's own neuromorphic architecture, may require adaptation for other platforms.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed a Neuromorphic Processing Unit (NPU) benchmark suite focusing on energy efficiency and real-time processing for edge devices. Their approach includes a set of tests specifically designed for mobile and IoT applications, evaluating performance on tasks such as object detection, natural language processing, and sensor data analysis. Samsung's benchmark suite is optimized for their neuromorphic chip designs, which utilize a spike-based computing model to achieve high energy efficiency[4]. The suite includes both low-level microbenchmarks and application-level tests, allowing for a comprehensive evaluation of neuromorphic systems across different scales[5].

Strengths: Tailored for edge computing and mobile devices, focus on energy efficiency. Weaknesses: May be less comprehensive for large-scale neuromorphic systems, potential bias towards Samsung's hardware architecture.

Core Innovations in Proposed Neuromorphic Benchmark Tests

Neuron circuit, operating method thereof, and neuromorphic device including neuron circuit

Patent (Pending): US20230135011A1

Innovation

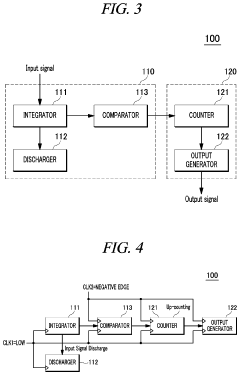

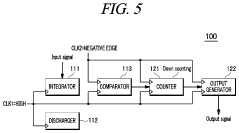

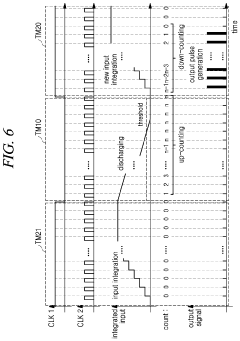

- A neuron circuit configured with digital logic, comprising an input unit to integrate and discharge signals, and an output unit to perform up-counting and down-counting, utilizing clock signals to control operations of integrators, comparators, and counters for precise signal processing.

Standardization Efforts in Neuromorphic Benchmarking

The field of neuromorphic computing has seen significant growth in recent years, with various hardware platforms and algorithms being developed to mimic the functionality of biological neural systems. However, the lack of standardized benchmarks has hindered fair comparisons between different neuromorphic systems and approaches. Recognizing this challenge, several initiatives have emerged to establish standardized benchmarking suites for neuromorphic computing.

One notable effort is the Neuromorphic Benchmark Suite, which proposes a set of tests designed to evaluate the performance of neuromorphic systems across various domains. This suite aims to provide a comprehensive framework for assessing the capabilities of different neuromorphic architectures and algorithms. The proposed tests cover a wide range of tasks, including image classification, speech recognition, and reinforcement learning, allowing for a holistic evaluation of neuromorphic systems.

Another important standardization effort is the development of the Neuromorphic Computing Benchmark (NCB) by a consortium of research institutions and industry partners. The NCB focuses on creating a set of standardized metrics and evaluation methodologies specifically tailored to neuromorphic hardware and algorithms. This initiative aims to establish a common ground for comparing the energy efficiency, computational speed, and accuracy of different neuromorphic solutions.

The Neuromorphic Engineering Workshop (NEW) has also played a crucial role in promoting standardization efforts. Through annual meetings and collaborative discussions, NEW has been instrumental in fostering consensus among researchers and practitioners regarding benchmark requirements and evaluation criteria. These efforts have led to the development of guidelines for reporting neuromorphic system performance and the establishment of a shared repository of benchmark datasets and tasks.

In addition to these initiatives, several open-source projects have emerged to support standardization efforts in neuromorphic benchmarking. For instance, the Neuromorphic Evaluation Toolkit (NET) provides a collection of software tools and libraries for implementing and running standardized benchmarks on various neuromorphic platforms. This toolkit facilitates the reproducibility of results and enables researchers to easily compare their systems against established baselines.

The IEEE Neuromorphic Computing Standards Working Group has been actively working on developing formal standards for neuromorphic computing. Their efforts include defining common terminology, specifying performance metrics, and establishing guidelines for reporting experimental results. These standards aim to create a unified framework for evaluating and comparing neuromorphic systems across different research groups and industry players.
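Reporting guidelines of the kind described above are easier to follow when results share a fixed, machine-readable shape. The sketch below shows one possible record format; it is an assumption for illustration and does not reproduce any standard's actual schema. The `BenchmarkReport` fields, the `to_json` helper, and the example values are all hypothetical.

```python
import json
from dataclasses import dataclass, asdict, field


@dataclass
class BenchmarkReport:
    """One reported result (hypothetical schema, not an official format)."""
    system: str
    task: str
    accuracy: float                  # fraction in [0, 1]
    latency_ms: float
    energy_per_inference_mj: float
    runs: int                        # number of repeated runs averaged
    notes: dict = field(default_factory=dict)

    def validate(self):
        """Reject records with out-of-range values before they are shared."""
        if not 0.0 <= self.accuracy <= 1.0:
            raise ValueError("accuracy must be between 0 and 1")
        if self.latency_ms <= 0 or self.energy_per_inference_mj <= 0:
            raise ValueError("latency and energy must be positive")
        if self.runs < 1:
            raise ValueError("runs must be at least 1")


def to_json(report):
    """Validate a report and serialize it with stable key order."""
    report.validate()
    return json.dumps(asdict(report), sort_keys=True, indent=2)


# Made-up example record.
report = BenchmarkReport(
    system="example-chip",
    task="image-classification",
    accuracy=0.92,
    latency_ms=1.8,
    energy_per_inference_mj=0.4,
    runs=5,
    notes={"batch_size": 1},
)
print(to_json(report))
```

Sorting keys on output keeps records from different groups directly comparable with ordinary text tools.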

Ethical Implications of Neuromorphic Computing Advancements

As neuromorphic computing continues to advance, it is crucial to consider the ethical implications of this technology. The development of brain-inspired computing systems raises significant questions about privacy, data security, and the potential for unintended consequences. One primary concern is the protection of personal information, as neuromorphic systems may process and store vast amounts of sensitive data related to human cognition and behavior.

The increasing capabilities of neuromorphic systems also bring forth questions about autonomy and decision-making. As these systems become more sophisticated, there is a need to establish clear guidelines for their use in critical applications, such as healthcare, finance, and autonomous vehicles. Ensuring transparency and accountability in the decision-making processes of neuromorphic systems is essential to maintain public trust and prevent potential harm.

Another ethical consideration is the impact of neuromorphic computing on employment and the workforce. As these systems become more capable of performing complex cognitive tasks, there is a risk of job displacement in various industries. This raises questions about the need for reskilling and upskilling programs to prepare workers for a changing job market.

The potential for neuromorphic systems to enhance human cognitive abilities also presents ethical dilemmas. While such advancements could lead to significant improvements in areas like medical diagnosis and scientific research, they also raise concerns about equity and access. Ensuring fair distribution of these technologies and preventing the creation of a cognitive divide between those who have access and those who do not is a critical ethical challenge.

Furthermore, the development of neuromorphic systems that closely mimic human brain function raises philosophical questions about consciousness and the nature of intelligence. As these systems become more advanced, it will be necessary to establish ethical frameworks for their treatment and rights, particularly if they begin to exhibit characteristics traditionally associated with sentience.

Lastly, the ethical use of neuromorphic computing in military and defense applications requires careful consideration. The potential for these systems to enhance decision-making in combat situations must be balanced against the risks of autonomous weapons and the ethical implications of removing human judgment from warfare.
