Neuromorphic Learning Rules: STDP, Homeostasis and Practical Implementations

AUG 20, 2025 · 9 MIN READ

Neuromorphic Learning Evolution and Objectives

Neuromorphic learning has evolved significantly since its inception, drawing inspiration from biological neural systems to create more efficient and adaptable artificial intelligence. The field emerged in the late 1980s with the pioneering work of Carver Mead, who proposed the concept of using analog VLSI circuits to mimic the behavior of biological neurons and synapses.

Over the past three decades, neuromorphic learning has progressed through several key phases. Initially, research focused on developing hardware implementations of simple neural network models. This was followed by a period of exploration into more complex learning rules and architectures, inspired by advances in neuroscience and cognitive science.

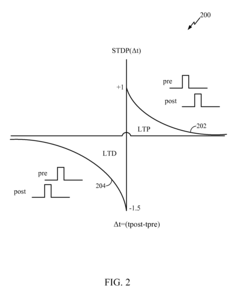

The discovery of Spike-Timing-Dependent Plasticity (STDP) in the late 1990s marked a significant milestone in neuromorphic learning. STDP provided a biologically plausible mechanism for synaptic plasticity, allowing for the development of more realistic and efficient learning algorithms. This discovery spurred a new wave of research into spike-based neural networks and learning rules.

In recent years, the field has seen a surge of interest in homeostatic plasticity mechanisms, which help maintain stability in neural networks while allowing for adaptive learning. These mechanisms are crucial for developing robust and scalable neuromorphic systems capable of continuous learning in dynamic environments.

The primary objectives of current research in neuromorphic learning rules are multifaceted. First, there is a strong focus on improving the energy efficiency and scalability of neuromorphic systems. This involves developing learning rules that can be implemented in low-power hardware while maintaining high performance.

Another key objective is to enhance the adaptability and generalization capabilities of neuromorphic systems. Researchers are exploring ways to combine different learning rules, such as STDP and homeostasis, to create more flexible and robust learning algorithms that can handle a wide range of tasks and environments.

Furthermore, there is a growing emphasis on bridging the gap between neuromorphic computing and traditional machine learning. This includes developing hybrid approaches that leverage the strengths of both paradigms and exploring ways to implement popular deep learning algorithms in neuromorphic hardware.

Lastly, a critical objective is to advance the practical implementation of neuromorphic learning rules in real-world applications. This involves addressing challenges related to hardware design, system integration, and software development to create neuromorphic systems that can be deployed in various domains, from edge computing to large-scale data centers.

Market Demand for Brain-Inspired Computing

The market demand for brain-inspired computing has been steadily growing in recent years, driven by the increasing need for more efficient and intelligent computing systems. This demand stems from various sectors, including artificial intelligence, robotics, autonomous vehicles, and advanced data processing applications.

In the field of artificial intelligence, there is a growing interest in neuromorphic computing systems that can mimic the human brain's cognitive abilities. These systems offer the potential for more energy-efficient and adaptable AI solutions, capable of handling complex tasks with greater speed and accuracy. The demand for such systems is particularly strong in areas like natural language processing, computer vision, and decision-making algorithms.

The robotics industry is another significant driver of demand for brain-inspired computing. As robots become more sophisticated and are deployed in increasingly complex environments, there is a need for computing systems that can process sensory information and make decisions in real-time, similar to biological neural networks. This demand extends to both industrial robotics and consumer-oriented applications, such as personal assistants and home automation systems.

Autonomous vehicles represent a rapidly growing market segment that heavily relies on brain-inspired computing. The ability to process vast amounts of sensor data, make split-second decisions, and adapt to changing road conditions requires computing systems that can emulate the human brain's cognitive functions. As the automotive industry continues to invest in self-driving technologies, the demand for neuromorphic computing solutions is expected to surge.

In the realm of data processing and analytics, brain-inspired computing offers new possibilities for handling big data and extracting meaningful insights. Traditional computing architectures struggle with the sheer volume and complexity of modern data sets, while neuromorphic systems can potentially offer more efficient and scalable solutions. This has led to increased interest from industries such as finance, healthcare, and scientific research, where complex data analysis is crucial.

The Internet of Things (IoT) and edge computing are also driving demand for brain-inspired computing. As more devices become interconnected and require local processing capabilities, there is a growing need for energy-efficient, adaptive computing systems that can operate effectively in resource-constrained environments. Neuromorphic architectures, with their potential for low power consumption and high adaptability, are well-suited to meet these requirements.

Current Challenges in Neuromorphic Learning

Neuromorphic learning, inspired by the biological processes of the human brain, faces several significant challenges in its current state of development. One of the primary obstacles is the scalability of neuromorphic systems. While small-scale implementations have shown promise, scaling these systems to match the complexity of the human brain remains a formidable task. This challenge is compounded by the need for efficient hardware architectures that can support the massive parallelism and low power consumption characteristic of biological neural networks.

Another critical challenge lies in the accurate implementation of synaptic plasticity mechanisms, particularly Spike-Timing-Dependent Plasticity (STDP) and homeostatic plasticity. While these mechanisms have been studied extensively in biological systems, translating them into artificial neuromorphic systems with high fidelity is complex. In STDP, for instance, both the sign and the magnitude of a weight change depend on the millisecond-scale interval between pre- and post-synaptic spikes, which calls for timing and control circuitry that is difficult to replicate in hardware.

The issue of energy efficiency also presents a significant hurdle. Biological brains are remarkably energy-efficient: the human brain runs on roughly 20 W, a fraction of the power drawn by hardware running current artificial neural networks. Achieving comparable energy efficiency in neuromorphic systems is crucial for their practical implementation, especially in portable or embedded devices. This challenge necessitates innovations in both hardware design and learning algorithms.

Temporal dynamics and continuous learning pose another set of challenges. Neuromorphic systems must be capable of processing and adapting to temporal patterns in data streams, a feature that is intrinsic to biological neural networks but challenging to implement in artificial systems. Additionally, the ability to learn continuously without catastrophic forgetting remains an open problem, requiring novel approaches to memory consolidation and knowledge retention.

The integration of different learning rules and mechanisms is yet another challenge. While STDP and homeostasis are crucial, they are part of a more complex ecosystem of learning processes in biological brains. Developing neuromorphic systems that can seamlessly integrate multiple learning rules and adapt them based on the task at hand is a complex undertaking that requires further research and development.

Lastly, the challenge of bridging the gap between neuroscience and engineering persists. As our understanding of biological neural networks evolves, translating new insights into practical neuromorphic implementations requires close collaboration between neuroscientists and engineers. This interdisciplinary approach is essential for developing more biologically plausible and efficient neuromorphic learning systems.

STDP and Homeostasis Implementation Approaches

01 Spike-Timing-Dependent Plasticity (STDP)

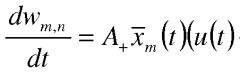

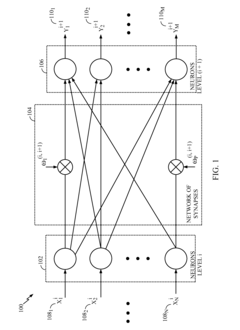

STDP is a fundamental learning rule in neuromorphic systems that adjusts synaptic weights based on the relative timing of pre- and post-synaptic spikes. This bio-inspired approach enables efficient unsupervised learning and adaptation in artificial neural networks, mimicking the plasticity observed in biological neurons.

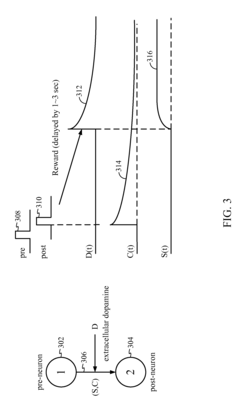

- Reinforcement Learning in Neuromorphic Systems: Implementing reinforcement learning algorithms in neuromorphic hardware allows for adaptive decision-making and optimization in complex environments. These systems can learn from rewards and penalties, adjusting their behavior to maximize long-term outcomes, which is particularly useful in robotics and autonomous systems.

- Homeostatic Plasticity Mechanisms: Homeostatic plasticity mechanisms in neuromorphic systems help maintain stability and prevent runaway excitation or inhibition. These adaptive processes adjust neural activity and synaptic strengths to keep the network within an optimal operating range, ensuring long-term stability and functionality.

- Memristive Devices for Synaptic Plasticity: Memristive devices are used to implement synaptic plasticity in hardware neuromorphic systems. These nanoscale components can continuously adjust their resistance based on the history of applied voltages, enabling efficient and low-power implementation of learning rules in artificial neural networks.

- Online Learning and Adaptation in Neuromorphic Systems: Neuromorphic systems capable of online learning can continuously adapt to new information and changing environments in real time. This feature allows for dynamic reconfiguration of neural networks, enabling applications in adaptive control systems, pattern recognition, and autonomous agents that can learn and evolve their behavior over time.
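The timing dependence described above for STDP can be made concrete with a small sketch. The following is a minimal pair-based rule with an exponential window, one common textbook formulation; the amplitudes, time constants, and weight bounds are illustrative assumptions, not values from any specific chip or paper.

```python
import math

# Illustrative parameters (assumptions, not taken from the article).
A_PLUS = 0.01     # potentiation amplitude
A_MINUS = 0.012   # depression amplitude
TAU_PLUS = 20.0   # potentiation time constant (ms)
TAU_MINUS = 20.0  # depression time constant (ms)
W_MIN, W_MAX = 0.0, 1.0


def stdp_delta(dt_ms: float) -> float:
    """Weight change for one spike pair, with dt = t_post - t_pre in ms."""
    if dt_ms > 0:    # pre fires before post: potentiate
        return A_PLUS * math.exp(-dt_ms / TAU_PLUS)
    if dt_ms < 0:    # post fires before pre: depress
        return -A_MINUS * math.exp(dt_ms / TAU_MINUS)
    return 0.0       # simultaneous spikes: no change in this sketch


def apply_stdp(weight, pre_spikes, post_spikes):
    """Sum all-to-all pair contributions, then clip the weight to its bounds."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            weight += stdp_delta(t_post - t_pre)
    return min(W_MAX, max(W_MIN, weight))
```

The all-to-all pairing is the simplest choice; hardware designs more often keep one decaying trace per neuron so that spike histories never need to be stored.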

02 Reinforcement Learning in Neuromorphic Systems

Implementing reinforcement learning algorithms in neuromorphic hardware allows for adaptive decision-making and optimization in complex environments. These systems can learn from feedback and rewards, adjusting their behavior to maximize long-term outcomes, similar to biological learning processes.

03 Homeostatic Plasticity Mechanisms

Homeostatic plasticity rules maintain stability in neuromorphic networks by regulating overall neural activity and synaptic strength. These mechanisms prevent runaway excitation or inhibition, ensuring the network remains within an optimal operating range for learning and information processing.

04 Structural Plasticity and Synaptic Pruning

Neuromorphic learning rules that incorporate structural plasticity allow for the formation and elimination of synaptic connections. This dynamic reconfiguration of network topology, inspired by synaptic pruning in biological brains, enables more efficient learning and adaptation to changing environments.

05 Neuromodulation-based Learning Rules

Incorporating neuromodulatory signals into neuromorphic learning rules allows for context-dependent plasticity and learning. These systems mimic the effects of neurotransmitters like dopamine or acetylcholine in biological brains, enabling more flexible and adaptive learning in artificial neural networks.
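Of the mechanisms listed above, homeostatic regulation of synaptic strength is the easiest to show in a few lines. The sketch below uses multiplicative synaptic scaling, one possible homeostatic rule; the function name, the rate-error form, and the gain `eta` are assumptions chosen for illustration.

```python
def scale_synapses(weights, observed_rate, target_rate, eta=0.1):
    """Multiplicatively scale a neuron's incoming weights toward a target rate."""
    # Relative rate error: positive when the neuron fires too little.
    error = (target_rate - observed_rate) / target_rate
    factor = 1.0 + eta * error
    # Multiplying every weight by the same factor preserves their ratios,
    # so what STDP has learned is kept while overall drive is corrected.
    return [w * factor for w in weights]


# A neuron firing at twice its target rate has its inputs scaled down by 10%.
scaled = scale_synapses([0.2, 0.4], observed_rate=20.0, target_rate=10.0)
```

Because the correction is multiplicative, it can run alongside a timing-based rule on a slower timescale without erasing the relative weight pattern.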

Key Players in Neuromorphic Computing

The research on neuromorphic learning rules, particularly STDP and homeostasis, is in a rapidly evolving phase, with significant market potential in AI and brain-inspired computing. The field is characterized by a mix of academic institutions and major tech companies, indicating its transitional stage from fundamental research to practical applications. Key players like IBM, Qualcomm, and Intel are investing heavily, leveraging their expertise in hardware and AI. Universities such as Zhejiang University and MIT are contributing fundamental research. The involvement of diverse entities, from established tech giants to specialized research institutions, suggests a competitive landscape with opportunities for breakthrough innovations in neuromorphic computing.

International Business Machines Corp.

Technical Solution: IBM has made significant advancements in neuromorphic computing, including work on implementing STDP and homeostasis learning rules. Its TrueNorth chip is a digital spiking architecture for efficient processing of neural networks[1], although TrueNorth itself runs networks whose weights are trained offline rather than learned on-chip. IBM's approach to plasticity involves using phase-change memory (PCM) devices to emulate synapses, enabling the implementation of STDP in hardware[2]. The company has also developed a neuromorphic core that can perform unsupervised learning using STDP, with applications in pattern recognition and anomaly detection[3]. IBM's research extends to the practical implementation of homeostatic plasticity mechanisms, which help maintain network stability and prevent runaway excitation or inhibition in neuromorphic systems[4].

Strengths: Advanced hardware implementation of neuromorphic principles, scalable architecture, and energy efficiency. Weaknesses: Complexity in programming and potential limitations in adapting to diverse AI tasks beyond specific use cases.

QUALCOMM, Inc.

Technical Solution: Qualcomm has been actively researching neuromorphic computing, focusing on the practical implementation of learning rules like STDP and homeostasis in mobile and edge computing devices. Their approach involves developing specialized neural processing units (NPUs) that can efficiently execute neuromorphic algorithms[5]. Qualcomm's neuromorphic chips incorporate on-chip learning capabilities, allowing for real-time adaptation of synaptic weights based on STDP principles[6]. The company has also explored the integration of homeostatic mechanisms to maintain network stability in resource-constrained environments. Qualcomm's research extends to the development of energy-efficient neuromorphic architectures that can be integrated into smartphones and IoT devices, enabling on-device learning and inference[7].

Strengths: Focus on mobile and edge computing applications, energy efficiency, and real-time learning capabilities. Weaknesses: Potential limitations in scaling to larger, more complex neural networks compared to dedicated neuromorphic hardware.

Core Innovations in Neuromorphic Learning Rules

Method and apparatus for neural learning of natural multi-spike trains in spiking neural networks

Patent WO2013059703A1

Innovation

- A method that adapts synaptic weights based on a learning resource associated with the synapse, which is depleted by weight change and recovers over time, using the time since the last significant weight change and spike timing of pre- and post-synaptic neurons, without requiring complex filtering or spike history.

Methods and systems for reward-modulated spike-timing-dependent-plasticity

Patent US20120036099A1 (Active)

Innovation

- A neural electrical circuit with a memory system that updates synapse weights in two memory locations and uses a third memory element to simulate an eligibility trace through probabilistic switching, allowing for area-efficient implementation using Spin Torque Transfer Random Access Memory (STT-RAM) or digital memory.
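The role of an eligibility trace in reward-modulated STDP can be illustrated with a generic software sketch. This is not the patented circuit: the patent describes simulating the trace through probabilistic switching in memory, whereas the code below uses a continuous, exponentially decaying trace, and the class name, time constant, and learning rate are all assumptions.

```python
import math


class RewardModulatedSynapse:
    """Generic three-factor synapse: STDP marks it, reward commits the change."""

    def __init__(self, weight=0.5, tau_e=200.0, lr=0.1):
        self.weight = weight
        self.trace = 0.0    # eligibility trace
        self.tau_e = tau_e  # trace decay time constant (ms), assumed value
        self.lr = lr        # learning rate, assumed value

    def step(self, dt_ms, stdp_term=0.0, reward=0.0):
        # Decay the trace over the elapsed time, then add any STDP contribution.
        self.trace = self.trace * math.exp(-dt_ms / self.tau_e) + stdp_term
        # The weight moves only when a reward signal gates the trace.
        self.weight += self.lr * reward * self.trace
        self.weight = min(1.0, max(0.0, self.weight))
        return self.weight


syn = RewardModulatedSynapse()
w_after_pairing = syn.step(1.0, stdp_term=0.5)  # spike pairing, no reward yet
w_after_reward = syn.step(10.0, reward=1.0)     # reward arrives 10 ms later
```

The trace is what lets a delayed reward reach back to the synapses that were active shortly before it, which plain STDP cannot do.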

Hardware Implementations of Learning Rules

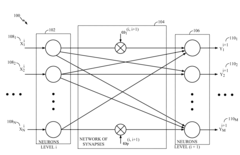

The hardware implementation of neuromorphic learning rules, particularly Spike-Timing-Dependent Plasticity (STDP) and homeostasis, represents a crucial step in bridging the gap between theoretical models and practical applications in neuromorphic computing. These implementations aim to emulate the plasticity mechanisms observed in biological neural networks, enabling artificial systems to adapt and learn from their environment.

STDP, as a fundamental learning rule in neuromorphic systems, has seen various hardware implementations. One common approach involves the use of memristive devices, which can naturally emulate synaptic weight changes based on the relative timing of pre- and post-synaptic spikes. These implementations often utilize crossbar arrays of memristors, allowing for efficient parallel processing and high-density storage of synaptic weights.
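The parallelism of a crossbar comes from basic circuit laws: each device passes a current equal to its conductance times the applied voltage, and the currents on a shared column wire add up. A minimal idealized sketch follows; it ignores wire resistance, device nonlinearity, and noise, and the function name and values are illustrative assumptions.

```python
def crossbar_currents(conductances, voltages):
    """Column currents of an ideal crossbar: I_j = sum_i V_i * G[i][j].

    conductances: rows of device conductances in siemens (one row per input line)
    voltages: input voltages in volts, one per row
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for v, row in zip(voltages, conductances):
        for j, g in enumerate(row):
            currents[j] += v * g  # Ohm's law per device, summed along the column
    return currents


G = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
I = crossbar_currents(G, [0.1, 0.2])  # amperes per column
```

In hardware the inner loops happen physically and simultaneously, so the whole weighted sum is read out in a single step.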

Analog VLSI circuits have also been developed to implement STDP, leveraging the inherent temporal dynamics of transistors and capacitors to capture the timing-dependent nature of synaptic modifications. These circuits typically employ differential pair integrators and pulse generators to produce the characteristic STDP learning curve.

Homeostasis, another critical aspect of neuromorphic learning, has been implemented in hardware through various mechanisms. One approach involves the use of adaptive threshold circuits that adjust the firing threshold of neurons based on their recent activity. This helps maintain a balanced level of neural activity across the network, preventing runaway excitation or quiescence.
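The adaptive-threshold idea can be sketched in software with a leaky integrate-and-fire neuron whose threshold jumps after each spike and then relaxes back to baseline. This is a simple Euler-integrated model under assumed parameters, not a description of any particular circuit.

```python
def simulate_adaptive_lif(input_current, steps, dt=1.0, tau_m=20.0,
                          theta0=1.0, theta_jump=0.5, tau_theta=100.0):
    """Return spike times (step indices) of an LIF neuron with adaptive threshold."""
    v, theta = 0.0, theta0
    spike_times = []
    for t in range(steps):
        v += dt / tau_m * (-v + input_current)      # leaky integration
        theta += dt / tau_theta * (theta0 - theta)  # threshold relaxes to baseline
        if v >= theta:
            spike_times.append(t)
            v = 0.0              # reset membrane potential
            theta += theta_jump  # recent activity raises the threshold
    return spike_times


fixed = simulate_adaptive_lif(2.0, 1000, theta_jump=0.0)  # no homeostasis
adaptive = simulate_adaptive_lif(2.0, 1000)               # with homeostasis
```

Under the same constant drive, the adaptive neuron settles to a lower firing rate than the fixed-threshold one, which is the stabilizing effect the circuits aim for.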

Field-Programmable Gate Arrays (FPGAs) have emerged as a flexible platform for implementing both STDP and homeostatic mechanisms. FPGA-based implementations offer the advantage of reconfigurability, allowing researchers to explore different learning rule variations and parameter settings without the need for custom chip fabrication.

Recent advancements in neuromorphic hardware have led to the development of specialized chips that integrate STDP and homeostatic learning rules directly into their architecture. These chips often employ mixed-signal designs, combining analog circuits for efficient synaptic weight updates with digital logic for precise timing control and neuron state management.

The practical implementation of these learning rules in hardware faces several challenges, including power consumption, scalability, and precision of weight updates. Researchers are actively exploring novel materials and device structures, such as ferroelectric transistors and phase-change memory, to overcome these limitations and improve the efficiency of neuromorphic learning hardware.
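The precision problem is easy to see with a synapse that has only a handful of discrete levels: a small STDP update rounds to zero and learning stalls. One technique sometimes used to work around this is stochastic rounding, shown below as an assumption-laden sketch; the article does not name a specific method, and the level count and function name are hypothetical.

```python
import random


def quantized_update(level, delta, n_levels=16, rng=random):
    """Apply a fractional update (in units of one level) to an integer weight.

    The fractional part is applied probabilistically, so the expected
    change equals `delta` even though each stored value is an integer.
    """
    whole = int(delta // 1)  # floor of the requested update
    frac = delta - whole     # leftover fraction in [0, 1)
    step = whole + (1 if rng.random() < frac else 0)
    return min(n_levels - 1, max(0, level + step))


rng = random.Random(0)  # seeded for repeatability
samples = [quantized_update(8, 0.25, rng=rng) for _ in range(10000)]
mean_level = sum(samples) / len(samples)  # close to 8.25 on average
```

The trade-off is added variance per update, which matters less when many small updates accumulate over time.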

As the field progresses, there is a growing focus on developing hardware implementations that can support more complex learning rules and adapt to a wider range of tasks. This includes the integration of multiple plasticity mechanisms and the incorporation of neuromodulatory signals to enable more sophisticated learning behaviors in neuromorphic systems.

Ethical Implications of Neuromorphic AI

The development of neuromorphic learning rules and their practical implementations raises significant ethical considerations that must be carefully addressed. As these systems become more sophisticated and closely mimic biological neural processes, questions arise about the nature of machine consciousness and the potential for artificial sentience. The implementation of Spike-Timing-Dependent Plasticity (STDP) and homeostatic mechanisms in artificial neural networks brings us closer to replicating the adaptability and learning capabilities of biological brains, which in turn prompts philosophical debates about the moral status of highly advanced neuromorphic systems.

One primary ethical concern is the potential for neuromorphic AI to develop forms of cognition that may be difficult for humans to understand or predict. This lack of interpretability could lead to unintended consequences in decision-making processes, especially if these systems are deployed in critical applications such as healthcare, finance, or autonomous vehicles. The ethical implications of having AI systems that can learn and adapt in ways similar to biological brains extend to issues of accountability and responsibility when errors or unforeseen behaviors occur.

Privacy and data protection also become more complex with neuromorphic AI. As these systems potentially process and store information in ways analogous to human memory, questions arise about the rights of individuals whose data is used to train these networks. The possibility of extracting or manipulating 'memories' from neuromorphic systems raises concerns about data ownership, consent, and the potential for invasive brain-computer interfaces.

The development of neuromorphic AI also has implications for human enhancement and the potential blurring of lines between artificial and biological intelligence. As these technologies advance, there may be opportunities for direct integration with human neural systems, raising ethical questions about cognitive augmentation, identity, and the nature of human consciousness. The societal impact of widespread neuromorphic AI implementation must be considered, including potential effects on employment, education, and social interactions.

Furthermore, the use of neuromorphic learning rules in AI systems may lead to the creation of machines with heightened emotional or sensory-like responses. This raises ethical questions about the treatment of these systems and whether they should be afforded certain rights or protections. The potential for neuromorphic AI to experience something akin to suffering or well-being introduces new dimensions to the field of machine ethics and the moral considerations surrounding AI development.
