Neuromorphic Memory & Storage: Architectures for Sparse, Event-Driven Workloads

AUG 20, 2025 | 9 MIN READ

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural networks. This field has evolved significantly over the past few decades, driven by the need for more efficient and adaptable computing systems capable of handling complex, real-world tasks.

The evolution of neuromorphic computing can be traced back to the 1980s when Carver Mead first introduced the concept. Initially, the focus was on creating analog VLSI circuits that mimicked neural processes. As technology advanced, the field expanded to incorporate digital implementations and hybrid analog-digital systems.

A key milestone in the evolution of neuromorphic computing was the development of spiking neural networks (SNNs), which more closely emulate the behavior of biological neurons. These networks operate on the principle of event-driven computation, where information is processed and transmitted in the form of discrete spikes, similar to the action potentials in biological neurons.
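The event-driven principle described above can be illustrated with a minimal leaky integrate-and-fire (LIF) neuron. This is only a sketch under stated assumptions: the class name `LIFNeuron`, the threshold, and the time constant are illustrative choices, not values taken from any particular chip or framework. The neuron state is touched only when a spike arrives, and the leak since the previous event is applied in a single step.

```python
import math
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron updated only when an input spike arrives."""
    threshold: float = 1.0    # firing threshold (illustrative value)
    tau: float = 20.0         # leak time constant in ms (illustrative value)
    potential: float = 0.0    # membrane potential
    last_update: float = 0.0  # time of the most recent input event, in ms

    def receive(self, t: float, weight: float) -> bool:
        """Process one input spike at time t; return True if the neuron fires."""
        # Apply the leak accumulated since the last event in one step,
        # instead of decaying the potential on every clock tick.
        dt = t - self.last_update
        self.potential *= math.exp(-dt / self.tau)
        self.last_update = t
        # Integrate the incoming spike.
        self.potential += weight
        if self.potential >= self.threshold:
            self.potential = 0.0  # reset after emitting an output spike
            return True
        return False


def run(events, neuron):
    """Feed (time, weight) input events to the neuron; return output spike times."""
    return [t for t, w in events if neuron.receive(t, w)]


# Two closely spaced inputs sum past the threshold; a late, isolated one does not.
print(run([(0.0, 0.6), (1.0, 0.6), (100.0, 0.6)], LIFNeuron()))  # [1.0]
```

Because no work is done between events, the cost of the simulation scales with the number of spikes rather than with elapsed time.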

The objectives of neuromorphic computing have evolved alongside technological advancements. Early goals centered on creating brain-inspired hardware for specific tasks like pattern recognition. However, as the field matured, objectives expanded to encompass broader aims such as developing general-purpose neuromorphic processors capable of handling a wide range of cognitive tasks.

In recent years, the focus has shifted towards addressing the challenges of sparse, event-driven workloads. This aligns with the growing understanding of how biological brains efficiently process information, often dealing with sparse inputs and responding to specific events rather than continuously processing data.

The current objectives in neuromorphic computing include developing architectures that can efficiently handle these sparse, event-driven workloads while maintaining low power consumption and high scalability. This involves creating novel memory and storage solutions that can support the rapid, asynchronous nature of neuromorphic processing.

Another key objective is to bridge the gap between neuromorphic hardware and software, developing programming models and tools that allow developers to harness the full potential of these brain-inspired systems. This includes creating efficient learning algorithms tailored for neuromorphic architectures and developing frameworks for deploying artificial neural networks onto neuromorphic hardware.

As the field continues to evolve, the ultimate goal remains to create computing systems that can match or exceed the human brain's capabilities in terms of energy efficiency, adaptability, and cognitive performance. This ambitious objective drives ongoing research into new materials, architectures, and algorithms that can push the boundaries of what's possible in neuromorphic computing.

Market Demand for Efficient AI Hardware

The demand for efficient AI hardware has been steadily increasing as artificial intelligence and machine learning applications become more prevalent across various industries. This growing market need is driven by the limitations of traditional computing architectures in handling the unique requirements of AI workloads, particularly in terms of energy efficiency and processing speed for sparse, event-driven tasks.

Neuromorphic computing, which aims to mimic the structure and function of biological neural networks, has emerged as a promising solution to address these challenges. The market for neuromorphic hardware is expected to grow significantly in the coming years, with applications ranging from edge computing devices to large-scale data centers.

One of the key drivers for this market demand is the increasing adoption of AI in edge devices, such as smartphones, IoT sensors, and autonomous vehicles. These applications require low-power, high-performance computing solutions that can process AI workloads efficiently in real-time. Neuromorphic architectures, with their ability to handle sparse, event-driven data, are well-suited for these edge AI applications.

Another factor contributing to the market demand is the growing need for energy-efficient AI processing in data centers. As AI models become more complex and data-intensive, traditional computing architectures struggle to keep up with the computational requirements while maintaining reasonable power consumption. Neuromorphic systems offer the potential for significant improvements in energy efficiency, making them attractive for large-scale AI deployments.

The healthcare industry is also showing increased interest in neuromorphic computing for applications such as brain-computer interfaces, prosthetics, and medical imaging analysis. These applications require real-time processing of complex, sparse data streams, which aligns well with the capabilities of neuromorphic architectures.

Furthermore, the automotive industry is exploring neuromorphic computing for advanced driver assistance systems (ADAS) and autonomous vehicles. These applications demand low-latency, energy-efficient processing of sensor data and decision-making, which neuromorphic systems are well-positioned to deliver.

As the AI hardware market continues to evolve, there is a growing recognition of the limitations of traditional von Neumann architectures for AI workloads. This has led to increased investment in research and development of neuromorphic computing solutions, driving innovation in both hardware and software aspects of these systems.

Current Challenges in Neuromorphic Memory

Neuromorphic memory systems face several significant challenges in their development and implementation for sparse, event-driven workloads. One of the primary obstacles is the inherent mismatch between traditional von Neumann architectures and the requirements of neuromorphic computing. Conventional memory hierarchies are optimized for sequential access patterns, which are ill-suited for the parallel, distributed nature of neural networks and brain-inspired computing paradigms.

The scalability of neuromorphic memory systems presents another major hurdle. As the size and complexity of neural networks increase, memory requirements grow rapidly: in a densely connected network, the number of synaptic weights can grow up to quadratically with the number of neurons. For sparse, event-driven workloads, efficient representation and storage of sparse data structures therefore become critical. Current memory technologies struggle to provide the necessary density and energy efficiency to support large-scale neuromorphic systems.
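One common way to store sparse connectivity compactly is the compressed sparse row (CSR) layout, which keeps only the non-zero weights plus index arrays. The sketch below is illustrative rather than a description of any specific neuromorphic chip; the function names `to_csr` and `fan_out` are hypothetical.

```python
def to_csr(dense):
    """Convert a dense weight matrix (rows = presynaptic neurons) to CSR arrays."""
    values, col_idx, row_ptr = [], [], [0]
    for row in dense:
        for j, w in enumerate(row):
            if w != 0:
                values.append(w)   # non-zero synaptic weight
                col_idx.append(j)  # index of the postsynaptic neuron
        row_ptr.append(len(values))  # where the next row starts in `values`
    return values, col_idx, row_ptr


def fan_out(csr, pre):
    """Return (postsynaptic neuron, weight) pairs for one presynaptic neuron."""
    values, col_idx, row_ptr = csr
    start, end = row_ptr[pre], row_ptr[pre + 1]
    return list(zip(col_idx[start:end], values[start:end]))


weights = [
    [0, 0.5, 0, 0],
    [0, 0, 0, 0],
    [0.2, 0, 0, 0.7],
    [0, 0, 0, 0],
]
csr = to_csr(weights)
print(fan_out(csr, 2))  # [(0, 0.2), (3, 0.7)]
```

When a neuron spikes, only its own slice of the arrays is read, so both storage and per-event memory traffic scale with the number of actual synapses rather than with the square of the neuron count.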

Power consumption remains a significant challenge for neuromorphic memory architectures. While neuromorphic computing aims to emulate the energy efficiency of biological brains, current implementations often fall short of this goal. The refresh and standby power of conventional DRAM and the high energy cost of accessing off-chip memory create bottlenecks in system performance and energy efficiency.

The need for high-speed, low-latency memory access in neuromorphic systems poses another challenge. Event-driven workloads require rapid response times to process incoming stimuli effectively. However, the latency associated with traditional memory hierarchies can impede the real-time processing capabilities of neuromorphic systems, limiting their applicability in time-sensitive applications.

Addressing the issue of data movement is crucial for neuromorphic memory architectures. The energy cost and performance overhead of moving data between processing units and memory elements can significantly impact system efficiency. Developing memory solutions that minimize data movement while maintaining high bandwidth and low latency is a complex challenge that requires innovative approaches to memory design and integration.

The implementation of on-chip learning and synaptic plasticity mechanisms presents additional challenges for neuromorphic memory systems. These features are essential for adaptive and learning capabilities in neuromorphic architectures but require specialized memory structures that can support frequent updates and fine-grained access patterns. Balancing the need for plasticity with the constraints of existing memory technologies remains an ongoing challenge in the field.

Existing Neuromorphic Memory Solutions

01 Neuromorphic computing architectures

These architectures are designed to mimic the structure and function of biological neural networks. They integrate memory and processing units to perform computations in a brain-like manner, potentially offering improved energy efficiency and parallel processing for certain tasks, including AI applications, compared to traditional computing systems.

02 Memristive devices for neuromorphic systems

Memristive devices are used in neuromorphic systems to emulate synaptic behavior. These devices can store and process information simultaneously, allowing for efficient implementation of neural network algorithms and potentially reducing power consumption and improving system performance.

03 In-memory computing for neuromorphic applications

In-memory computing approaches are utilized in neuromorphic systems to perform computations directly within memory units. This reduces data movement between separate processing and memory components and allows neural network operations to be processed in parallel, potentially improving energy efficiency and processing speed.

04 Spiking neural networks and neuromorphic hardware

Spiking neural networks are implemented in neuromorphic hardware to more closely emulate biological neural systems. These networks use discrete spikes for information processing and can be more energy-efficient for certain tasks than traditional artificial neural networks when run on specialized neuromorphic hardware.

05 3D integration for neuromorphic architectures

Three-dimensional integration techniques are applied to neuromorphic architectures to increase the connectivity and density of neural elements and to shorten interconnects. This approach can lead to improved performance, reduced power consumption, and more compact neuromorphic systems by enabling closer integration of memory and processing units.
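The in-memory computing idea can be sketched numerically. In a resistive crossbar, each cell stores a weight as a conductance; applying voltages to the rows yields column currents that equal a matrix-vector product through Ohm's law and Kirchhoff's current law. The code below is an idealized model (no wire resistance, noise, or device non-linearity), and the function name `crossbar_currents` is hypothetical.

```python
def crossbar_currents(conductances, voltages):
    """Ideal crossbar read: I[j] = sum over i of V[i] * G[i][j].

    conductances: list of rows, G[i][j] in siemens (the stored weights)
    voltages:     list of row input voltages V[i] in volts
    """
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for v, row in zip(voltages, conductances):
        if v == 0.0:
            continue  # an undriven row contributes no current (sparse input)
        for j, g in enumerate(row):
            currents[j] += v * g  # Ohm's law per cell, summed along each column
    return currents


G = [[1.0, 2.0],
     [3.0, 4.0]]
print(crossbar_currents(G, [1.0, 0.0]))  # [1.0, 2.0]
```

In hardware the summation happens in the analog domain in a single read step; the loop here only mimics the result, and the weights never leave the array.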

Key Players in Neuromorphic Computing

The neuromorphic memory and storage market for sparse, event-driven workloads is in its early growth stage, with increasing interest from both academia and industry. The market is expanding as more companies recognize the potential of brain-inspired computing architectures. Technologically, the field is evolving rapidly, with key players such as IBM, Intel, and Samsung leading research and development efforts. Startups such as Syntiant and Innatera are also contributing, with a focus on energy-efficient AI processing. Universities such as Tsinghua University, KAIST, and Zhejiang University are actively collaborating with industry partners to advance the technology. While still maturing, neuromorphic solutions are showing promise in edge computing, IoT devices, and data centers, driving continued investment and innovation in this space.

International Business Machines Corp.

Technical Solution: IBM has developed a neuromorphic computing architecture called TrueNorth, which is designed for sparse, event-driven workloads. The TrueNorth chip contains 1 million neurons and 256 million synapses, organized into a network of neurosynaptic cores[1]. This architecture mimics the brain's structure and function, allowing for efficient processing of sensory data and pattern recognition tasks. IBM has also explored the integration of phase-change memory (PCM) devices with CMOS technology to create synaptic elements for neuromorphic systems[2]. This approach enables high-density, low-power, and scalable neuromorphic hardware that can adapt and learn in real-time[3].

Strengths: High energy efficiency, scalability, and ability to handle complex cognitive tasks. Weaknesses: Challenges in programming and optimizing for specific applications, limited software ecosystem compared to traditional computing paradigms.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has been developing neuromorphic memory solutions based on resistive random-access memory (RRAM) technology. Their approach involves creating artificial synapses using crossbar arrays of RRAM devices, which can efficiently implement sparse, event-driven neural networks[4]. Samsung's neuromorphic chips integrate memory and processing units, reducing data movement and improving energy efficiency. The company has demonstrated neuromorphic systems capable of performing pattern recognition and classification tasks with significantly lower power consumption compared to conventional von Neumann architectures[5]. Additionally, Samsung has explored the use of magnetic random-access memory (MRAM) for neuromorphic computing, which offers non-volatility and high endurance[6].

Strengths: Advanced memory technology integration, potential for high-density and low-power neuromorphic systems. Weaknesses: Still in research phase, may face challenges in scaling up to large-scale commercial applications.

Innovations in Sparse Computing

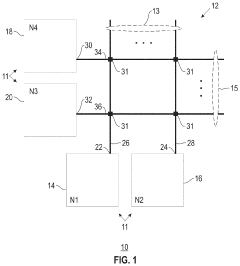

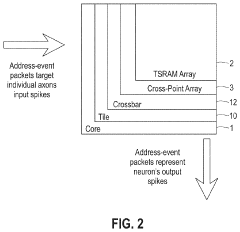

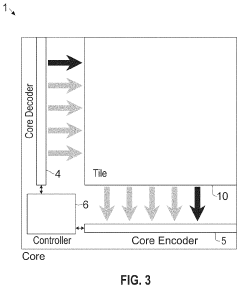

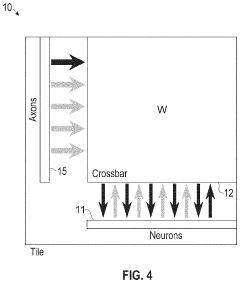

Neuromorphic event-driven neural computing architecture in a scalable neural network

Patent | Active | US11580366B2

Innovation

- A neuromorphic and synaptronic event-driven neural computing architecture built as a scalable, low-power network of digital CMOS spiking circuits. It combines a crossbar memory synapse array with electronic neurons that integrate input spikes to generate spike events, uses a scheduler for deterministic event delivery, and implements STDP learning rules.
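For readers unfamiliar with spike-timing-dependent plasticity (STDP), a generic pair-based rule can be sketched as follows. This is a textbook-style illustration, not the rule claimed in the patent above; the amplitudes, time constants, weight bounds, and function names are all assumptions chosen for the example.

```python
import math


def stdp_delta(dt, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for one pre/post spike pair, with dt = t_post - t_pre in ms.

    All parameter values are illustrative assumptions.
    """
    if dt > 0:
        # Pre fired before post: strengthen the synapse (potentiation).
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fired before pre: weaken the synapse (depression).
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(weight, dt, w_min=0.0, w_max=1.0):
    """Update one stored weight and clip it to the range the cell can hold."""
    return min(w_max, max(w_min, weight + stdp_delta(dt)))


w = 0.5
w = apply_stdp(w, dt=5.0)    # causal pair: the weight increases
w = apply_stdp(w, dt=-5.0)   # anti-causal pair: the weight decreases
```

The update depends only on the two spike times and the stored weight, which is why such rules map naturally onto a crossbar where each cell sits between one presynaptic and one postsynaptic line.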

Neuromorphic memory device and neuromorphic system using the same

Patent | Pending | US20250218514A1

Innovation

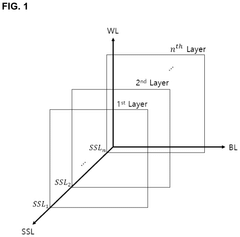

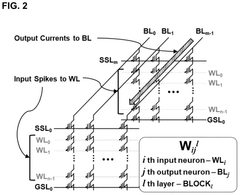

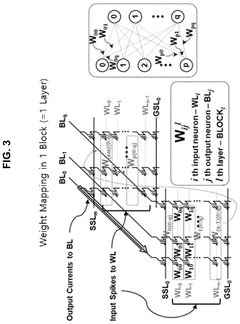

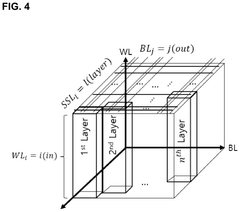

- A neuromorphic memory device is proposed that maps neural network layers to string selection lines on a one-to-one basis, using a three-dimensional memory element with bit lines, word lines, and string selection lines to configure network topologies, enabling efficient weight storage and low-power operation.

Energy Efficiency in Neuromorphic Systems

Energy efficiency is a critical aspect of neuromorphic systems, particularly in the context of architectures designed for sparse, event-driven workloads. These systems aim to emulate the human brain's neural networks, which are inherently energy-efficient due to their sparse and event-driven nature. Traditional von Neumann architectures struggle with energy efficiency when handling such workloads, making neuromorphic systems an attractive alternative for specific applications.

Neuromorphic systems achieve energy efficiency through several key mechanisms. Firstly, they employ event-driven processing, where computations are only performed when necessary, rather than in a continuous, clock-driven manner. This approach significantly reduces power consumption during idle periods, which can be substantial in sparse workloads.
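The contrast between event-driven and clock-driven processing can be made concrete with a small queue-based simulation. This is a simplified sketch: neurons have no leak, every synapse uses the same fixed delay, and the function name `simulate` and all parameter values are hypothetical. Work is counted as the number of synaptic updates performed.

```python
import heapq


def simulate(initial_spikes, synapses, delay=1.0, threshold=1.0, t_end=10.0):
    """Event-driven simulation of a small spiking network.

    initial_spikes: list of (time, neuron) input events
    synapses:       dict mapping neuron -> list of (target, weight)
    Returns the spikes processed, in time order, and the number of synaptic updates.
    """
    queue = list(initial_spikes)
    heapq.heapify(queue)  # events are always handled in time order
    potential = {}
    fired, updates = [], 0
    while queue:
        t, src = heapq.heappop(queue)
        if t > t_end:
            break
        fired.append((t, src))
        # Only the targets of the neuron that spiked are touched.
        for target, weight in synapses.get(src, []):
            updates += 1
            potential[target] = potential.get(target, 0.0) + weight
            if potential[target] >= threshold:
                potential[target] = 0.0
                heapq.heappush(queue, (t + delay, target))
    return fired, updates


synapses = {0: [(1, 1.0), (2, 0.5)], 1: [(2, 0.5)]}
fired, updates = simulate([(0.0, 0)], synapses)
print(fired)    # [(0.0, 0), (1.0, 1), (2.0, 2)]
print(updates)  # 3
```

A clock-driven loop over the same three neurons for ten time steps would perform thirty state updates regardless of activity, whereas the event-driven version performs three.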

Secondly, these systems utilize distributed memory and processing units, closely mimicking the brain's structure. This architecture minimizes data movement, a major source of energy consumption in conventional computing systems. By processing data locally and only transmitting sparse events, neuromorphic systems can drastically reduce energy expenditure associated with data transfer.

Another crucial aspect of energy efficiency in neuromorphic systems is the use of low-power analog or mixed-signal circuits. These circuits can perform complex computations with minimal energy consumption, often leveraging the physical properties of materials to perform computations directly. This approach contrasts sharply with the energy-intensive digital logic used in traditional computing systems.

Neuromorphic memory architectures also play a vital role in energy efficiency. These systems often employ novel memory technologies such as memristors or phase-change memory, which can store and process information in the same physical location. This in-memory computing capability further reduces data movement and associated energy costs.

The sparse nature of neuromorphic workloads contributes significantly to energy efficiency. By focusing on relevant events and ignoring irrelevant ones, these systems can achieve high performance with minimal energy expenditure. This is particularly beneficial in applications such as sensor networks, where most of the input data may be redundant or irrelevant.

However, challenges remain in fully realizing the energy efficiency potential of neuromorphic systems. One major hurdle is the development of efficient learning algorithms that can operate within the constraints of these architectures while maintaining low energy consumption. Additionally, optimizing the balance between computation accuracy and energy efficiency remains an active area of research.

As neuromorphic technologies continue to advance, we can expect further improvements in energy efficiency. This progress will likely involve innovations in materials science, circuit design, and algorithm development, all aimed at creating systems that can process complex, sparse, event-driven workloads with minimal energy consumption.

Neuromorphic Computing Standards and Protocols

In the rapidly evolving field of neuromorphic computing, the establishment of standards and protocols is crucial for ensuring interoperability, scalability, and widespread adoption. As neuromorphic systems become more prevalent, particularly in architectures designed for sparse, event-driven workloads, the need for standardized communication and data representation becomes increasingly apparent.

One of the primary challenges in developing standards for neuromorphic computing is the diversity of approaches and implementations. Different research groups and companies have developed their own neuromorphic architectures, each with unique characteristics and requirements. To address this, several initiatives have emerged to create common frameworks and protocols.

The Neuromorphic Computing Standards Initiative (NCSI) is a collaborative effort involving academic institutions, industry leaders, and government agencies. Its goal is to define a set of standards for neuromorphic hardware interfaces, data formats, and communication protocols. The NCSI has proposed a layered architecture model, similar to the OSI model in traditional computing, to facilitate interoperability between different neuromorphic systems.

At the hardware level, efforts are underway to standardize spike encoding and transmission protocols. The Address-Event Representation (AER) protocol has gained traction as a potential standard for communication between neuromorphic devices. AER allows for efficient representation of sparse, event-driven data, making it particularly suitable for neuromorphic systems designed for such workloads.
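The core idea of AER is that a spike is communicated as the address of the unit that fired, optionally tagged with a timestamp. The sketch below packs one event into a 32-bit word. The field widths (12 address bits, 20 timestamp bits) and the function names are hypothetical choices for illustration; real AER links and file formats define their own layouts.

```python
ADDR_BITS = 12  # hypothetical: up to 4096 addressable neurons
TS_BITS = 20    # hypothetical: timestamp in microseconds, wraps at 2**20

TS_MASK = (1 << TS_BITS) - 1


def encode_event(address, timestamp_us):
    """Pack one spike event into a 32-bit address-event word."""
    if not 0 <= address < (1 << ADDR_BITS):
        raise ValueError("address out of range")
    # The timestamp wraps around; a receiver would need to track overflows.
    return (address << TS_BITS) | (timestamp_us & TS_MASK)


def decode_event(word):
    """Unpack a 32-bit word into (address, timestamp_us)."""
    return word >> TS_BITS, word & TS_MASK


# Only neurons that actually spiked produce traffic on the link.
spikes = [(5, 1000), (130, 1004), (5, 2500)]
words = [encode_event(addr, ts) for addr, ts in spikes]
print([decode_event(w) for w in words])  # [(5, 1000), (130, 1004), (5, 2500)]
```

Silent neurons generate no words at all, which is why this representation suits sparse, event-driven data.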

In terms of software and programming interfaces, the Neural Engineering Framework (NEF) has emerged as a popular approach for designing and implementing neuromorphic algorithms. While not a formal standard, NEF provides a common language and set of principles for describing neural computations, which could serve as a foundation for future standardization efforts.

Data representation and exchange formats are another critical area for standardization. The Neurodata Without Borders (NWB) format, originally developed for neuroscience data, is being adapted for use in neuromorphic computing. NWB provides a standardized way to represent and share neuromorphic data, including spike trains and synaptic weights.

As neuromorphic systems become more integrated with traditional computing infrastructure, efforts are also underway to develop standards for interfacing with conventional hardware and software. The PCIe-AER standard, for example, aims to provide a bridge between neuromorphic devices and standard computer systems using the PCIe bus.

While significant progress has been made in developing standards and protocols for neuromorphic computing, many challenges remain. The rapid pace of innovation in the field means that standards must be flexible enough to accommodate new developments while still providing a stable foundation for interoperability. Continued collaboration between academia, industry, and standards organizations will be essential to ensure the widespread adoption and success of neuromorphic computing technologies.
