How to Quantify the Trade-off Between Accuracy and Energy on SNNs

AUG 20, 2025 | 9 MIN READ

SNN Accuracy-Energy Trade-off Background

Spiking Neural Networks (SNNs) have emerged as a promising paradigm in the field of neuromorphic computing, offering potential advantages in energy efficiency and biological plausibility compared to traditional artificial neural networks. The trade-off between accuracy and energy consumption in SNNs has become a critical area of research, as it directly impacts the practical implementation and performance of these networks in real-world applications.

The trade-off stems from the fundamental nature of SNNs, which communicate through discrete spike events rather than continuous values. Because computation happens only when spikes occur, this event-driven processing can yield significant energy savings, particularly on hardware built to exploit it. However, the binary nature of spikes can also limit representational capacity and reduce the accuracy of the network.

Historically, the development of SNNs has been driven by the desire to create more brain-like computing systems. As our understanding of biological neural networks has advanced, so too has the sophistication of SNN models. Early SNN implementations focused primarily on biological plausibility, often at the expense of computational efficiency and accuracy. However, recent years have seen a shift towards balancing biological inspiration with practical performance considerations.

The accuracy-energy trade-off in SNNs is influenced by several key factors. Spike encoding schemes, which convert input data into spike trains, play a crucial role in determining both the accuracy of information representation and the energy consumption of the network. More complex encoding methods may improve accuracy but often at the cost of increased computational overhead and energy usage.

Network architecture and neuron models also significantly impact this trade-off. More sophisticated neuron models, such as those incorporating adaptive thresholds or complex synaptic dynamics, can potentially improve accuracy but may require more energy to simulate. Similarly, larger network architectures with more neurons and synapses can achieve higher accuracy but at the expense of increased energy consumption.

Training algorithms for SNNs have evolved to address this trade-off explicitly. While early training methods often struggled to achieve high accuracy, newer approaches such as surrogate gradient learning and conversion techniques from ANNs to SNNs have shown promising results in improving accuracy. However, these methods often come with their own energy costs, particularly during the training phase.

The quantification of this trade-off has become increasingly important as SNNs move from theoretical models to practical implementations. Researchers and engineers are now faced with the challenge of developing metrics and methodologies that can accurately capture both the computational accuracy and energy efficiency of SNN systems. This quantification is essential for optimizing SNNs for specific applications and hardware platforms, where the balance between accuracy and energy consumption may vary depending on the use case.

Market Demand Analysis

The market demand for efficient Spiking Neural Networks (SNNs) is growing rapidly, driven by the need for energy-efficient AI in edge computing and IoT devices. As the Internet of Things (IoT) ecosystem expands, with some industry forecasts having projected over 75 billion connected devices by 2025, the demand for low-power, high-performance neural networks is becoming critical.

SNNs offer a promising solution to the energy consumption challenges faced by traditional artificial neural networks. Their event-driven nature and ability to process information with discrete spikes make them inherently more energy-efficient. This aligns well with the market's push towards green AI and sustainable computing solutions, especially in battery-powered devices and resource-constrained environments.

The automotive industry is emerging as a significant market for SNNs, particularly in advanced driver-assistance systems (ADAS) and autonomous vehicles. These applications require real-time processing of sensor data with minimal latency and power consumption, making SNNs an attractive option. The global ADAS market is expected to reach $134.9 billion by 2027, indicating substantial growth potential for SNN technologies.

In the healthcare sector, there's an increasing demand for wearable devices and implantable medical technologies that can perform complex computations with minimal energy usage. SNNs are well-suited for these applications, offering the potential for long-lasting, efficient neural interfaces and diagnostic tools. The global wearable medical devices market is projected to grow at a CAGR of 26.8% from 2021 to 2028, presenting a significant opportunity for SNN-based solutions.

However, the market also demands a balance between energy efficiency and accuracy in SNNs. While energy conservation is crucial, it cannot come at the cost of significantly reduced accuracy, especially in critical applications like healthcare diagnostics or autonomous driving. This creates a need for quantifiable methods to assess and optimize the trade-off between accuracy and energy consumption in SNNs.

The robotics industry is another key market driver for SNNs. As robots become more sophisticated and are deployed in diverse environments, from manufacturing floors to disaster response scenarios, the need for energy-efficient, adaptive neural networks becomes paramount. The global robotics market is expected to reach $260 billion by 2030, with a significant portion requiring advanced, energy-efficient AI capabilities.

In conclusion, the market demand for SNNs is multifaceted, spanning various industries and applications. The ability to quantify and optimize the trade-off between accuracy and energy consumption in SNNs is becoming increasingly important as the technology moves from research labs to real-world applications. This optimization will be crucial in meeting the diverse needs of different market segments and driving the widespread adoption of SNN technology.

Current SNN Challenges

Spiking Neural Networks (SNNs) have emerged as a promising paradigm for energy-efficient neuromorphic computing. However, several challenges currently hinder their widespread adoption and practical implementation. One of the most significant challenges is the trade-off between accuracy and energy consumption.

The accuracy of SNNs depends heavily on the number of time steps used for inference. More time steps generally yield higher accuracy, but each additional step produces more spikes and therefore more synaptic operations, so energy consumption and latency rise with it. This creates a fundamental tension between achieving high performance and maintaining low power usage, which is particularly critical for edge computing and mobile applications.
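The dependence on time steps can be made concrete with a first-order operation count. The sketch below is an illustration under stated assumptions, not a measurement: the per-operation energies (0.9 pJ per accumulate, 4.6 pJ per multiply-accumulate) are figures commonly quoted for 45 nm CMOS, and the layer sizes and firing rates are hypothetical. It ignores memory access and static power, which can dominate on real hardware.

```python
# Assumed per-operation energies (45 nm CMOS, 32-bit floating point),
# as commonly quoted in the SNN literature; not measured on any chip.
E_AC_PJ = 0.9   # one accumulate (spike-driven synaptic operation)
E_MAC_PJ = 4.6  # one multiply-accumulate (dense ANN operation)


def snn_energy_pj(timesteps, layers):
    """Estimate inference energy in picojoules for a feed-forward SNN.

    layers: list of (num_neurons, fan_out, mean_spikes_per_neuron_per_step).
    Each emitted spike triggers `fan_out` accumulate operations downstream.
    """
    synops_per_step = sum(n * fan_out * rate for n, fan_out, rate in layers)
    return timesteps * synops_per_step * E_AC_PJ


def ann_energy_pj(layers):
    """Dense baseline: every connection performs one MAC per inference."""
    return sum(n * fan_out for n, fan_out, _ in layers) * E_MAC_PJ


# Hypothetical two-layer network: (neurons, fan-out, firing rate per step).
LAYERS = [(784, 256, 0.10), (256, 10, 0.05)]

for T in (4, 16, 64):
    ratio = snn_energy_pj(T, LAYERS) / ann_energy_pj(LAYERS)
    print(f"T={T:3d}  SNN/ANN energy ratio = {ratio:.2f}")
```

Under these assumptions the estimate grows linearly with the number of time steps, so a sparse SNN that is cheaper than the dense baseline at a few steps can become more expensive once the step count is large enough.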

Another challenge lies in the training of SNNs. Standard backpropagation is not directly applicable because spike generation is a threshold (step) function whose derivative is zero almost everywhere and undefined at the threshold. This has led to the development of various approximation methods, notably surrogate gradient techniques, which often still fall short of the accuracy of their non-spiking counterparts.
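A minimal NumPy sketch of the surrogate gradient idea follows. It keeps the hard threshold in the forward pass and substitutes a smooth function for its derivative in the backward pass. The fast-sigmoid shape and the `slope` value are illustrative choices, one of several surrogates in use; the function names are hypothetical and not taken from any specific library.

```python
import numpy as np


def spike_forward(v, threshold=1.0):
    """Heaviside step: emit a spike (1.0) where the potential reaches threshold."""
    return (v >= threshold).astype(np.float64)


def spike_surrogate_grad(v, threshold=1.0, slope=10.0):
    """Fast-sigmoid surrogate for d(spike)/dv, used only in the backward pass.

    The true derivative is zero almost everywhere; this smooth stand-in
    peaks at the threshold and decays as |v - threshold| grows.
    """
    return 1.0 / (1.0 + slope * np.abs(v - threshold)) ** 2


v = np.array([0.2, 0.9, 1.0, 1.5])           # membrane potentials
upstream = np.ones_like(v)                   # gradient arriving from the loss
spikes = spike_forward(v)                    # forward pass uses the hard step
grad_v = upstream * spike_surrogate_grad(v)  # backward pass uses the surrogate
```

Neurons whose potential sits near the threshold receive the largest gradient, which is what lets training adjust which neurons fire.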

The choice of neuron model also presents a significant challenge. While more complex neuron models can capture biological neural dynamics more accurately, they often come at the cost of increased computational complexity and energy consumption. Simpler models, on the other hand, may be more energy-efficient but might not be able to represent complex temporal patterns effectively.
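As a point of reference for the simpler end of that spectrum, here is a sketch of a leaky integrate-and-fire (LIF) neuron simulated with forward-Euler steps. The parameter values (`tau`, `v_threshold`, `dt`) are arbitrary illustrative choices, and the function name is hypothetical.

```python
import numpy as np


def simulate_lif(input_current, tau=20.0, v_threshold=1.0, v_reset=0.0, dt=1.0):
    """Simulate one leaky integrate-and-fire neuron with forward-Euler steps.

    input_current: 1-D array, one value per time step.
    Returns (spike train as a 0/1 array, membrane potential trace).
    """
    v = v_reset
    spikes = np.zeros(len(input_current))
    trace = np.zeros(len(input_current))
    for t, i_t in enumerate(input_current):
        v += (dt / tau) * (-v + i_t)   # leak toward 0 and integrate the input
        if v >= v_threshold:
            spikes[t] = 1.0
            v = v_reset                # hard reset after a spike
        trace[t] = v
    return spikes, trace


weak_spikes, _ = simulate_lif(np.full(200, 0.8))    # settles below threshold
strong_spikes, _ = simulate_lif(np.full(200, 2.0))  # crosses threshold repeatedly
print("spike counts:", int(weak_spikes.sum()), int(strong_spikes.sum()))
```

The per-step update costs one multiply and one add, and the spike count it produces is a common proxy for downstream energy. Richer models add state variables and operations to every step.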

Spike encoding is another area of concern. Converting continuous input data into spike trains is not straightforward and can lead to information loss. Different encoding schemes, such as rate coding or temporal coding, have their own trade-offs in terms of accuracy and efficiency, further complicating the design process of SNNs.
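The difference between the two families can be seen in a small sketch. The rate encoder below draws Bernoulli spikes with probability equal to the input intensity, and the latency encoder emits at most one spike per input, earlier for stronger inputs. Both are simplified illustrations with hypothetical function names, and inputs are assumed to be normalized to [0, 1].

```python
import numpy as np


def rate_encode(x, timesteps, rng):
    """Rate coding: at each step an input spikes with probability equal to its intensity."""
    x = np.asarray(x, dtype=np.float64)
    return (rng.random((timesteps,) + x.shape) < x).astype(np.uint8)


def latency_encode(x, timesteps):
    """Latency (time-to-first-spike) coding: stronger inputs spike earlier, at most once."""
    x = np.asarray(x, dtype=np.float64)
    spike_time = np.round((1.0 - x) * (timesteps - 1)).astype(int)
    train = np.zeros((timesteps,) + x.shape, dtype=np.uint8)
    idx = np.nonzero(x > 0)                  # zero-intensity inputs stay silent
    train[(spike_time[idx],) + idx] = 1
    return train


rng = np.random.default_rng(0)
pixels = np.array([0.0, 0.25, 0.5, 1.0])     # intensities normalized to [0, 1]
rate_train = rate_encode(pixels, timesteps=32, rng=rng)
latency_train = latency_encode(pixels, timesteps=32)
print("spikes (rate):   ", int(rate_train.sum()))
print("spikes (latency):", int(latency_train.sum()))
```

Latency coding produces far fewer spikes for the same input, which favors energy, while rate coding averages over many spikes and tends to be more robust to noise, which favors accuracy.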

Hardware implementation of SNNs poses additional challenges. While neuromorphic hardware has shown promise in implementing SNNs efficiently, there is still a lack of standardized hardware platforms that can fully exploit the potential of SNNs. This makes it difficult to compare different SNN architectures and optimization techniques across different hardware implementations.

Lastly, the lack of standardized benchmarks and evaluation metrics specifically designed for SNNs makes it challenging to quantify and compare the performance of different SNN models and architectures. This is particularly true when it comes to evaluating the trade-off between accuracy and energy consumption, as existing metrics may not capture the unique characteristics of spike-based computation.
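In the absence of a standard metric, one hedged way to compare models is to report the set of configurations that are Pareto-optimal in accuracy and energy rather than a single score. The sketch below assumes each configuration has already been measured; the labels and numbers are hypothetical and serve only to exercise the function.

```python
def pareto_front(configs):
    """Return the configs not dominated in (higher accuracy, lower energy).

    configs: list of (name, accuracy, energy) tuples.
    A config is dominated if another is at least as good on both axes
    and strictly better on at least one.
    """
    front = []
    for name, acc, energy in configs:
        dominated = any(
            (a >= acc and e <= energy) and (a > acc or e < energy)
            for _, a, e in configs
        )
        if not dominated:
            front.append((name, acc, energy))
    return sorted(front, key=lambda c: c[2])


# Hypothetical measurements: (label, accuracy, energy per inference in microjoules).
RESULTS = [
    ("T=4", 0.88, 1.0),
    ("T=8", 0.91, 2.0),
    ("T=16", 0.93, 4.0),
    ("T=16, dense encoding", 0.92, 6.0),
    ("T=32", 0.93, 8.0),
]
front = pareto_front(RESULTS)
for name, acc, energy in front:
    print(f"{name:<6} accuracy={acc:.2f} energy={energy:.1f} uJ")
```

Configurations off the front can be discarded regardless of how accuracy and energy are weighted. Choosing among those on the front remains an application-specific decision.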

Existing Trade-off Solutions

01 Improving SNN accuracy through network architecture optimization

Various techniques are employed to enhance the accuracy of Spiking Neural Networks (SNNs) by optimizing their architecture. This includes developing novel neuron models, adjusting synaptic connections, and implementing advanced learning algorithms. These improvements aim to increase the network's ability to process and classify complex spatio-temporal patterns, leading to better performance in tasks such as image recognition and natural language processing.

02 Energy-efficient SNN implementations

Researchers are focusing on developing energy-efficient SNN implementations to address the high power consumption issues in traditional neural networks. This involves designing low-power neuromorphic hardware, optimizing spike encoding schemes, and implementing sparse coding techniques. These approaches significantly reduce energy consumption while maintaining or improving computational performance, making SNNs suitable for edge computing and IoT applications.

03 Hybrid SNN architectures for improved accuracy and efficiency

Hybrid architectures combining SNNs with traditional artificial neural networks or other machine learning techniques are being explored to leverage the strengths of both approaches. These hybrid models aim to achieve higher accuracy while maintaining the energy efficiency of SNNs. Such architectures often involve using SNNs for feature extraction and traditional networks for classification, or incorporating SNN-inspired elements into conventional deep learning frameworks.

04 SNN training algorithms for enhanced accuracy

Novel training algorithms are being developed to improve the accuracy of SNNs while considering their unique temporal dynamics. These include spike-timing-dependent plasticity (STDP) based methods, backpropagation through time for spiking networks, and evolutionary optimization techniques. The goal is to enable SNNs to learn complex representations from data more effectively, closing the performance gap with traditional deep learning models.

05 Hardware acceleration for energy-efficient SNN computation

Specialized hardware accelerators are being designed to enable energy-efficient computation of SNNs. These include neuromorphic chips, FPGA implementations, and custom ASIC designs that leverage the sparse and event-driven nature of spiking neural networks. Such hardware solutions aim to dramatically reduce power consumption while enabling real-time processing of spiking neural data, making SNNs viable for a wide range of applications from mobile devices to large-scale AI systems.

Key SNN Industry Players

The field of quantifying trade-offs between accuracy and energy in Spiking Neural Networks (SNNs) is in an early development stage, with growing market potential as energy-efficient AI becomes more critical. The technology is still maturing, with research institutions like EPFL and companies such as Intel, IBM, and Samsung leading the way. These players are exploring novel architectures and algorithms to optimize the balance between computational accuracy and energy consumption in SNNs. As the field progresses, we can expect increased collaboration between academia and industry to drive innovation and practical applications in areas like edge computing and IoT devices.

Intel Corp.

Technical Solution: Intel's approach to quantifying the trade-off between accuracy and energy in SNNs centers around their neuromorphic research chip, Loihi. The chip implements a novel neural network architecture that closely mimics the brain's basic mechanics, allowing for efficient processing of spikes. Intel has developed a framework that enables researchers to map SNNs onto Loihi, providing tools to measure both accuracy and energy consumption. Their studies have shown that Loihi can solve certain optimization problems up to 1000 times more efficiently than conventional methods[3]. Intel's software toolkit, Lava, allows for the development and benchmarking of neuromorphic algorithms, facilitating the quantification of energy-accuracy trade-offs across different SNN configurations[4].

Strengths: Proprietary neuromorphic hardware (Loihi) and software ecosystem for SNNs. Weaknesses: Limited to their own hardware platform, which may not be as widely accessible as general-purpose GPUs.

Applied Brain Research, Inc.

Technical Solution: Applied Brain Research has developed Nengo, a neural engineering framework that supports the implementation of Spiking Neural Networks (SNNs). Their approach to quantifying the trade-off between accuracy and energy in SNNs involves using neuromorphic hardware and software co-design. They employ adaptive synapses and neurons to optimize energy consumption while maintaining accuracy. The company has demonstrated up to 100x improvements in energy efficiency compared to traditional deep learning approaches[1]. Their SNN models can be deployed on various neuromorphic hardware platforms, including Intel's Loihi chip, which allows for real-time learning and inference with significantly reduced power consumption[2].

Strengths: Specialized in neuromorphic computing and SNNs, with proven energy efficiency gains. Weaknesses: Limited to specific hardware platforms, which may restrict widespread adoption.

Core SNN Quantification Methods

Novel activation function with hardware realization for recurrent neuromorphic networks

Patent WO2022118340A1

Innovation

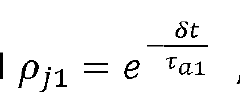

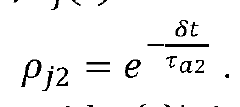

- A double exponential adaptive threshold neuron model with a dynamically varying threshold voltage, implemented using emerging memory devices and a circuit comprising CMOS transistors and resistive memory devices, allowing for reconfigurable decay time constants and dual decay constants.

Design method and recording medium

Patent WO2022249308A1

Innovation

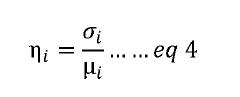

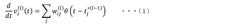

- The design method sets the product of the step size of firing time and weight in the mathematical model equal to the product of the firing threshold voltage and capacitance, allowing for discretization of firing times and weights in the spike generator and synaptic circuit, thereby maintaining high learning accuracy.

SNN Hardware Implementations

The implementation of Spiking Neural Networks (SNNs) in hardware has gained significant attention due to their potential for energy-efficient neuromorphic computing. Various hardware platforms have been developed to realize SNNs, each with its own advantages and challenges in terms of accuracy and energy efficiency.

Neuromorphic chips, such as IBM's TrueNorth and Intel's Loihi, have been designed specifically for SNN implementations. These chips utilize specialized architectures that closely mimic the structure and function of biological neural networks. TrueNorth, for instance, features a modular design with 4096 neurosynaptic cores, each containing 256 neurons and a 256 × 256 synaptic crossbar (65,536 synapses per core), for about one million neurons and 256 million synapses per chip. This architecture allows for efficient parallel processing and low power consumption, making it suitable for large-scale SNN deployments.

Field-Programmable Gate Arrays (FPGAs) have also been widely used for SNN implementations due to their flexibility and reconfigurability. FPGA-based SNN designs can be optimized for specific applications, allowing for a balance between accuracy and energy efficiency. Researchers have demonstrated various FPGA implementations of SNNs, including those that focus on minimizing resource utilization and power consumption while maintaining acceptable accuracy levels.

Application-Specific Integrated Circuits (ASICs) offer another avenue for SNN hardware implementation. ASICs can be tailored to specific SNN architectures and algorithms, potentially achieving higher performance and energy efficiency compared to general-purpose platforms. However, the development of ASICs for SNNs requires significant investment and longer design cycles.

Memristor-based hardware has emerged as a promising technology for SNN implementation. Memristors can emulate synaptic behavior and enable efficient in-memory computing, potentially reducing the energy consumption associated with data movement between memory and processing units. Several research groups have demonstrated memristor-based SNN implementations that show promising results in terms of energy efficiency and scalability.

The trade-off between accuracy and energy efficiency in SNN hardware implementations is a critical consideration. Different hardware platforms offer varying levels of precision and energy consumption, which can significantly impact the overall performance of the SNN. For example, analog implementations may offer higher energy efficiency but can suffer from reduced accuracy due to noise and variability. Digital implementations, on the other hand, may provide higher accuracy but at the cost of increased power consumption.

To quantify this trade-off, researchers have developed various metrics and benchmarking methodologies. These include energy per synaptic operation, throughput per watt, and accuracy-energy product. By evaluating SNN hardware implementations using these metrics, designers can make informed decisions about the most suitable platform for their specific application requirements.
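The metrics named above can be written as small helper functions. This is a sketch under stated assumptions: the units are chosen for illustration, and because the text does not define the accuracy-energy product, the third function uses error rate times energy as one possible reading (lower is better). All input numbers in the usage lines are hypothetical.

```python
def energy_per_synop_pj(total_energy_uj, synaptic_ops):
    """Energy per synaptic operation in picojoules (1 uJ = 1e6 pJ)."""
    return total_energy_uj * 1e6 / synaptic_ops


def throughput_per_watt(inferences_per_second, power_watts):
    """Inferences per second per watt, numerically equal to inferences per joule."""
    return inferences_per_second / power_watts


def error_energy_product(accuracy, energy_per_inference_uj):
    """Assumed combined score: (1 - accuracy) * energy, where lower is better."""
    return (1.0 - accuracy) * energy_per_inference_uj


# Hypothetical run: 50 uJ per inference, 2.5e7 synaptic operations,
# 1000 inferences per second at 0.05 W, 90% accuracy.
print(energy_per_synop_pj(50.0, 2.5e7))    # pJ per synaptic operation
print(throughput_per_watt(1000.0, 0.05))   # inferences per joule
print(error_energy_product(0.90, 50.0))    # error-weighted energy
```

Any single combined score hides how the two quantities are weighted, so it is safer to report it alongside the raw accuracy and energy figures and the hardware on which energy was measured.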

Ethical AI Considerations

The ethical considerations surrounding the trade-off between accuracy and energy consumption in Spiking Neural Networks (SNNs) are multifaceted and require careful examination. As AI systems become more prevalent in society, it is crucial to address the ethical implications of their development and deployment, particularly in the context of SNNs.

One primary ethical concern is the potential for bias in the accuracy-energy trade-off. If energy efficiency is prioritized over accuracy, there is a risk that the resulting SNN models may perform poorly on certain subgroups or underrepresented populations. This could lead to unfair or discriminatory outcomes, especially if these systems are used in critical decision-making processes such as healthcare diagnostics or criminal justice.

Another ethical consideration is the environmental impact of AI systems. While SNNs are generally more energy-efficient than traditional neural networks, the pursuit of higher accuracy may lead to increased energy consumption. This raises questions about the responsibility of AI developers and organizations to balance performance improvements with environmental sustainability.

Transparency and explainability are also crucial ethical concerns in the context of SNNs. As researchers work to quantify the accuracy-energy trade-off, it is essential to ensure that the methods and metrics used are transparent and can be easily understood by stakeholders. This transparency is vital for building trust in AI systems and allowing for meaningful public discourse on their development and deployment.

The ethical implications of data usage in training and evaluating SNNs must also be considered. Researchers must ensure that the data used to quantify the accuracy-energy trade-off is collected and used ethically, with proper consent and privacy protections in place. This is particularly important when dealing with sensitive or personal data.

Furthermore, the potential for misuse or dual-use of SNNs optimized for specific accuracy-energy trade-offs must be addressed. For example, highly efficient SNNs could be used in surveillance systems, raising concerns about privacy and civil liberties. Ethical guidelines and regulations may be necessary to prevent the misuse of this technology.

Lastly, there is an ethical responsibility to consider the long-term societal impacts of advancing SNN technology. As these systems become more efficient and accurate, they may displace human workers in certain industries. It is crucial to consider the ethical implications of such displacement and to develop strategies for mitigating negative impacts on affected communities.
