Neuromorphic versus FPGA: Which Platform for Edge Inference in 2025?

AUG 20, 2025 · 9 MIN READ

Neuromorphic and FPGA Edge Inference Landscape

The edge computing landscape is witnessing a transformative shift as neuromorphic and FPGA technologies compete for dominance in inference tasks. Neuromorphic computing, inspired by the human brain's neural networks, offers a promising approach to efficient and low-power edge inference. These systems utilize artificial neurons and synapses to process information in a manner similar to biological neural networks, potentially offering significant advantages in energy efficiency and real-time processing capabilities.

On the other hand, Field-Programmable Gate Arrays (FPGAs) have long been a staple in edge computing due to their flexibility and reconfigurability. FPGAs allow for custom hardware implementations of inference algorithms, providing a balance between performance and power efficiency. Their ability to be reprogrammed in the field makes them adaptable to evolving AI models and inference requirements.

As we approach 2025, the landscape for edge inference is becoming increasingly complex. Neuromorphic chips are making strides in energy efficiency and are particularly well-suited for tasks that require continuous learning and adaptation. Companies like Intel, with its Loihi chip, and IBM, with its TrueNorth architecture, are pushing the boundaries of neuromorphic computing for edge applications.

FPGA manufacturers are not standing still, however. Xilinx (now part of AMD) and Altera (long Intel's FPGA unit, re-established as a standalone business in 2024) are developing more AI-optimized FPGA architectures. These new FPGAs incorporate dedicated AI acceleration blocks and improved memory architectures to enhance inference performance while maintaining the flexibility that FPGAs are known for.

The choice between neuromorphic and FPGA platforms for edge inference in 2025 will likely depend on specific application requirements. Neuromorphic systems may excel in scenarios requiring ultra-low power consumption and continuous learning, such as in IoT sensors and wearable devices. FPGAs, with their versatility, may remain the preferred choice for applications that demand high throughput and the ability to quickly adapt to new algorithms.

Emerging hybrid solutions that combine elements of both neuromorphic and FPGA technologies are also entering the market. These platforms aim to leverage the strengths of both approaches, offering the energy efficiency of neuromorphic computing with the flexibility of FPGAs. Such hybrid solutions could potentially provide a best-of-both-worlds scenario for edge inference applications.

As the edge computing market continues to grow, driven by the increasing demand for real-time AI processing in autonomous vehicles, smart cities, and industrial IoT, both neuromorphic and FPGA technologies are likely to find their niches. The landscape in 2025 may not see a clear winner, but rather a diversified ecosystem where each technology thrives in its optimal use cases.

Market Demand for Edge AI Solutions

The demand for edge AI solutions is experiencing rapid growth, driven by the increasing need for real-time processing, reduced latency, and enhanced data privacy in various industries. As organizations seek to leverage artificial intelligence capabilities closer to the data source, the market for edge AI hardware platforms, including neuromorphic computing and FPGAs, is expanding significantly.

In the context of edge inference, both neuromorphic computing and FPGAs offer unique advantages that cater to different aspects of market demand. Neuromorphic computing, inspired by the human brain's neural structure, promises energy efficiency and the ability to handle complex, unstructured data. This aligns well with the growing demand for AI solutions in power-constrained environments and applications requiring adaptive learning.

FPGAs, on the other hand, provide flexibility and reconfigurability, allowing for customized hardware acceleration of AI algorithms. This adaptability is particularly attractive in rapidly evolving AI landscapes where algorithms and models are frequently updated. The market demand for FPGAs in edge AI is driven by their ability to balance performance, power efficiency, and adaptability.

The automotive industry is emerging as a significant driver of edge AI demand, with applications ranging from advanced driver assistance systems (ADAS) to autonomous vehicles. Both neuromorphic and FPGA solutions are being explored to meet the stringent requirements of real-time processing and safety-critical operations in automotive environments.

In the industrial sector, edge AI is gaining traction for predictive maintenance, quality control, and process optimization. The demand for robust, low-latency inference capabilities in harsh industrial environments favors both neuromorphic and FPGA platforms, depending on the specific use case and deployment constraints.

The healthcare industry is another key market for edge AI solutions, with applications in medical imaging, patient monitoring, and personalized medicine. The need for privacy-preserving AI inference at the edge is driving interest in both neuromorphic and FPGA-based solutions that can process sensitive medical data locally.

Smart cities and IoT applications represent a burgeoning market for edge AI, encompassing areas such as surveillance, traffic management, and environmental monitoring. The diverse requirements of these applications create opportunities for both neuromorphic and FPGA platforms to demonstrate their respective strengths in energy efficiency and adaptability.

As we approach 2025, the market demand for edge AI solutions is expected to further diversify, with increasing emphasis on specialized hardware that can deliver optimal performance for specific AI workloads. This trend may lead to a more nuanced market landscape where neuromorphic computing and FPGAs coexist, each finding its niche based on the unique requirements of different edge AI applications and industries.

Current State of Neuromorphic and FPGA Technologies

Neuromorphic computing and Field-Programmable Gate Arrays (FPGAs) represent two distinct approaches to edge inference, each with its unique strengths and challenges. As of 2023, both technologies have made significant strides in their development and application.

Neuromorphic computing, inspired by the structure and function of biological neural networks, has seen remarkable advancements. Companies like Intel, IBM, and BrainChip have developed neuromorphic chips that demonstrate impressive energy efficiency and real-time processing capabilities. These chips excel in tasks such as pattern recognition, sensory processing, and adaptive learning, making them particularly suitable for edge AI applications.

The current state of neuromorphic technology showcases its potential for ultra-low power consumption and high-speed parallel processing. For instance, Intel's Loihi 2 chip, released in 2021, features up to 1 million neurons per chip and can be scaled to larger systems. This technology has shown promise in applications ranging from robotics to autonomous vehicles, where real-time decision-making and energy efficiency are crucial.

On the other hand, FPGAs have long been established as versatile and reconfigurable hardware platforms. Leading FPGA manufacturers like Xilinx (now part of AMD) and Intel have been continuously improving their offerings, focusing on enhanced performance, reduced power consumption, and increased integration of AI acceleration features.

Modern FPGAs are equipped with dedicated AI inference engines, high-bandwidth memory, and optimized architectures for deep learning workloads. For example, Xilinx's Versal AI Edge series, introduced in 2021, combines FPGA programmability with built-in AI engines, offering a flexible platform for edge inference tasks.

The current state of FPGA technology for edge inference is characterized by its ability to provide customizable, high-performance computing solutions. FPGAs excel in applications requiring real-time processing, low latency, and adaptability to changing algorithms or standards. They have found widespread use in industries such as telecommunications, automotive, and industrial automation.

Both neuromorphic and FPGA technologies face challenges in their adoption for edge inference. Neuromorphic computing, while promising, is still in a relatively early stage of development and lacks the extensive software ecosystem and developer familiarity that FPGAs enjoy. FPGAs, on the other hand, typically consume more power than neuromorphic chips and may require more complex programming.

As we approach 2025, both technologies are expected to continue evolving, with neuromorphic computing likely to see increased commercialization and FPGAs further optimizing their AI inference capabilities. The choice between the two platforms for edge inference will depend on specific application requirements, power constraints, and the maturity of supporting ecosystems.

Existing Edge Inference Solutions

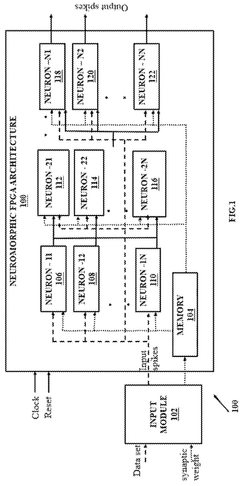

01 Neuromorphic computing architectures on FPGAs

Implementation of neuromorphic computing architectures on FPGAs to improve performance and efficiency. This approach combines the flexibility of FPGAs with the brain-inspired processing of neuromorphic systems, enabling efficient parallel processing and adaptability for various AI applications.

- Neuromorphic computing architectures on FPGAs: Implementation of neuromorphic computing architectures on FPGAs to improve performance and efficiency. This approach combines the flexibility of FPGAs with the brain-inspired processing of neuromorphic systems, enabling parallel processing and adaptive learning capabilities.

- Optimization techniques for FPGA-based neuromorphic systems: Development of optimization techniques specifically tailored for FPGA-based neuromorphic systems. These techniques focus on improving resource utilization, reducing power consumption, and enhancing overall system performance through efficient mapping of neural networks onto FPGA hardware.

- Integration of machine learning algorithms with FPGA-based neuromorphic computing: Incorporation of machine learning algorithms into FPGA-based neuromorphic computing systems to enhance their capabilities. This integration allows for improved adaptability, learning, and decision-making processes in neuromorphic architectures implemented on FPGAs.

- Hardware acceleration for neuromorphic computing using FPGAs: Utilization of FPGAs as hardware accelerators for neuromorphic computing tasks. This approach leverages the reconfigurable nature of FPGAs to create specialized circuits that can significantly speed up neuromorphic computations and improve overall system performance.

- Scalable and reconfigurable neuromorphic architectures on FPGAs: Design and implementation of scalable and reconfigurable neuromorphic architectures on FPGAs. These architectures allow for flexible adaptation to different neural network sizes and structures, enabling efficient processing of various neuromorphic computing tasks on a single FPGA platform.

02 Optimization techniques for FPGA-based neuromorphic systems

Development of optimization techniques specifically tailored for FPGA-based neuromorphic systems. These techniques focus on improving resource utilization, reducing power consumption, and enhancing overall system performance through efficient mapping of neural network algorithms to FPGA architectures.

03 Integration of memristive devices in FPGA-based neuromorphic systems

Incorporation of memristive devices into FPGA-based neuromorphic computing systems to enhance synaptic plasticity and learning capabilities. This integration aims to improve the energy efficiency and computational density of neuromorphic hardware implementations on FPGAs.

04 Real-time learning and adaptation in FPGA-based neuromorphic systems

Development of techniques for real-time learning and adaptation in FPGA-based neuromorphic systems. These approaches enable on-chip learning, allowing the system to continuously update and improve its performance based on incoming data and changing environmental conditions.

05 Scalability and interconnectivity of FPGA-based neuromorphic systems

Addressing scalability and interconnectivity challenges in FPGA-based neuromorphic systems. This includes developing efficient communication protocols and interconnect architectures to enable the creation of large-scale neuromorphic systems composed of multiple FPGAs, improving overall system performance and capabilities.

Key Players in Neuromorphic and FPGA Industries

The competition between neuromorphic and FPGA platforms for edge inference in 2025 is in a dynamic phase, with the market still evolving. While FPGAs are more mature and widely adopted, neuromorphic computing is gaining traction due to its potential for energy efficiency and brain-like processing. Key players like Alibaba, Inspur, and Efinix are advancing FPGA technologies, while companies such as Polyn Technology and Synsense are pushing neuromorphic solutions. Academic institutions, including Tsinghua University and the University of California, are contributing significant research to both fields. The market size is expected to grow substantially, driven by increasing demand for AI at the edge across various industries.

Tsinghua University

Technical Solution: Tsinghua University has developed a neuromorphic computing platform called Tianjic, which integrates both neuromorphic and von Neumann architectures. This hybrid approach allows for flexible and efficient edge inference. The Tianjic chip achieves a peak energy efficiency of 1.28 TOPS/W and can process complex AI tasks with low power consumption[1]. The platform supports various neural network models and can be optimized for specific edge applications, making it suitable for autonomous drones, robots, and other edge devices requiring real-time processing[2].

Strengths: Hybrid architecture combining neuromorphic and traditional computing, high energy efficiency, flexibility for various AI models. Weaknesses: May require specialized programming and optimization for full potential, limited commercial availability compared to established FPGA solutions.
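To make the TOPS/W figure above more concrete, the following minimal sketch converts an efficiency rating into energy per operation and a best-case energy per inference. The 1.28 TOPS/W value comes from the text; the workload size (`HYPOTHETICAL_MODEL_OPS`) is an illustrative assumption, and real workloads rarely sustain peak efficiency.

```python
def joules_per_op(tops_per_watt: float) -> float:
    """Energy per operation in joules for a given TOPS/W rating."""
    # 1 TOPS/W is 1e12 operations per second per watt, i.e. 1e12 ops per joule.
    return 1.0 / (tops_per_watt * 1e12)


def energy_per_inference_mj(ops_per_inference: float, tops_per_watt: float) -> float:
    """Best-case energy per inference in millijoules, assuming peak efficiency."""
    return ops_per_inference * joules_per_op(tops_per_watt) * 1e3


TIANJIC_PEAK_TOPS_PER_W = 1.28  # figure quoted in the text
HYPOTHETICAL_MODEL_OPS = 1e9    # assumption: a model needing 1 GOP per inference

print(f"{joules_per_op(TIANJIC_PEAK_TOPS_PER_W) * 1e12:.2f} pJ per operation")
print(f"{energy_per_inference_mj(HYPOTHETICAL_MODEL_OPS, TIANJIC_PEAK_TOPS_PER_W):.3f} mJ per inference")
```

The same two functions can be applied to any vendor's TOPS/W claim, which makes peak-efficiency figures easier to compare against a device's energy budget.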

Efinix, Inc.

Technical Solution: Efinix specializes in FPGA technology and has developed the Trion and Titanium FPGA families, which are optimized for edge inference applications. Their FPGAs utilize a unique Quantum architecture that provides up to 4x area efficiency compared to traditional FPGAs[5]. For edge inference in 2025, Efinix is focusing on improving their FPGA fabric to support AI acceleration, including the integration of DSP blocks and optimized memory structures. Their roadmap includes developing FPGAs with higher logic density and improved power efficiency, targeting a 2x improvement in performance per watt by 2025[6].

Strengths: Compact and power-efficient FPGA designs, scalable solutions for various edge applications. Weaknesses: Limited market share compared to larger FPGA manufacturers, may face challenges in competing with specialized AI accelerators.

Core Innovations in Neuromorphic and FPGA Technologies

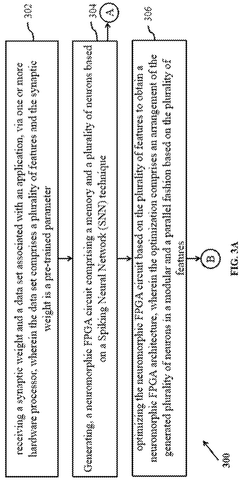

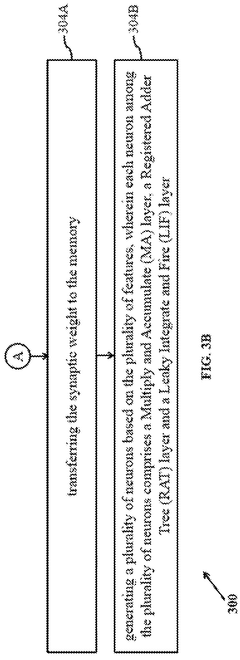

Field programmable gate array (FPGA) based neuromorphic computing architecture

Patent · Active · US12314845B2

Innovation

- The development of an FPGA-based neuromorphic computing architecture that utilizes a Spiking Neural Network (SNN) technique, comprising a memory and a plurality of neurons with MA, RAT, and LIF layers, optimized for modular and parallel processing to enhance energy efficiency and reduce latency.
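As a rough illustration of the LIF (leaky integrate-and-fire) layer named above, here is a minimal discrete-time neuron model. It is only a sketch: the threshold, reset, and leak values are assumptions chosen for readability, and the patent's MA and RAT layers are not described in the text and are not modelled here.

```python
def simulate_lif(input_current, v_threshold=1.0, v_reset=0.0, leak=0.9):
    """Simulate one leaky integrate-and-fire neuron in discrete time.

    Returns a list with one entry per time step: 1 if the neuron spiked, else 0.
    All parameter defaults are illustrative assumptions.
    """
    v = v_reset
    spikes = []
    for current in input_current:
        v = leak * v + current      # membrane potential decays, then integrates input
        if v >= v_threshold:
            spikes.append(1)        # threshold crossed: emit a spike
            v = v_reset             # and reset the membrane potential
        else:
            spikes.append(0)
    return spikes


# A constant sub-threshold input still produces periodic spikes once it accumulates.
print(simulate_lif([0.4] * 10))  # [0, 0, 1, 0, 0, 1, 0, 0, 1, 0]
```

Because the update is a multiply, an add, and a compare per neuron per step, many such neurons map naturally onto parallel FPGA logic, which is the property the patent summary emphasizes.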

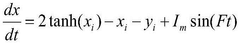

FPGA-technology-based neuron circuit implementation method using multi-segment linear fitting

Patent · WO2025035585A1

Innovation

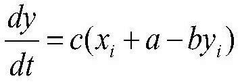

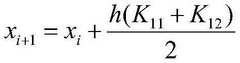

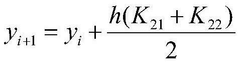

- A multi-segment (piecewise) linear fitting method that exploits the parity (odd symmetry) and bounded value range of the hyperbolic tangent function is used to implement a high-accuracy hyperbolic tangent in FPGA devices with reduced resource consumption. Complex neuronal discharge signals are then generated using the second-order Runge-Kutta calculation method.
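The two ingredients named in this patent summary, multi-segment linear fitting of tanh and second-order Runge-Kutta integration, can be sketched in software as follows. This is not the patent's circuit: the segment count, the input range, the clamp beyond `x_max`, and the example neuron equation are all assumptions made for illustration.

```python
import math


def make_pwl_tanh(num_segments=16, x_max=4.0):
    """Build a piecewise-linear tanh using odd symmetry and saturation.

    Only [0, x_max] is tabulated; negative inputs reuse the table via
    tanh(-x) = -tanh(x), and inputs beyond x_max are clamped to +/-1.
    """
    step = x_max / num_segments
    knots = [math.tanh(k * step) for k in range(num_segments + 1)]

    def pwl_tanh(x):
        ax = abs(x)
        if ax >= x_max:
            y = 1.0                                   # bounded range: saturate
        else:
            k = min(int(ax / step), num_segments - 1) # segment index
            t = (ax - k * step) / step                # position inside the segment
            y = knots[k] + t * (knots[k + 1] - knots[k])
        return -y if x < 0 else y                     # odd symmetry

    return pwl_tanh


def rk2_step(f, v, dt):
    """One midpoint (second-order Runge-Kutta) step for dv/dt = f(v)."""
    k1 = f(v)
    k2 = f(v + 0.5 * dt * k1)
    return v + dt * k2


pwl_tanh = make_pwl_tanh()
worst = max(abs(pwl_tanh(i / 100) - math.tanh(i / 100)) for i in range(-600, 601))
print(f"max abs error over [-6, 6]: {worst:.4f}")

# Hypothetical neuron model (not taken from the patent): dv/dt = -v + tanh(2v + 0.5)
v = 0.0
for _ in range(100):
    v = rk2_step(lambda u: -u + pwl_tanh(2.0 * u + 0.5), v, dt=0.05)
print(f"state after 100 steps: {v:.3f}")
```

Tabulating only the non-negative half halves the lookup storage, and each evaluation needs one multiply and one add, which is the kind of resource saving the summary refers to.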

Energy Efficiency Comparison

Energy efficiency is a critical factor in determining the suitability of hardware platforms for edge inference applications. As we look towards 2025, the comparison between neuromorphic computing and Field-Programmable Gate Arrays (FPGAs) in terms of energy efficiency becomes increasingly relevant.

Neuromorphic computing, inspired by the human brain's neural networks, offers significant potential for energy-efficient computing. These systems utilize specialized hardware architectures that mimic the behavior of biological neurons and synapses. By leveraging event-driven processing and sparse, asynchronous communication, neuromorphic systems can achieve remarkable energy efficiency for certain types of workloads, particularly those involving pattern recognition and sensory processing.
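The efficiency argument for event-driven, sparse processing can be illustrated by counting operations. The sketch below compares a dense layer, which touches every weight, with an event-driven layer that only accumulates weights for inputs that spiked. The layer size, the integer weights, and the 5% activity level are hypothetical values chosen so the arithmetic is easy to follow.

```python
def dense_layer(weights, x):
    """Dense matrix-vector product: every weight is used once."""
    out = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    ops = len(weights) * len(x)
    return out, ops


def event_driven_layer(weights, x):
    """Accumulate only the weight columns whose input spiked (x[j] == 1)."""
    acc = [0] * len(weights)
    ops = 0
    for j, spike in enumerate(x):
        if not spike:
            continue                 # silent input: no work is done
        for i, row in enumerate(weights):
            acc[i] += row[j]         # one accumulate per outgoing synapse
            ops += 1
    return acc, ops


n_in, n_out = 1000, 100
# Hypothetical integer weights and a binary spike vector with 5% of inputs active.
weights = [[(i * 7 + j * 3) % 5 - 2 for j in range(n_in)] for i in range(n_out)]
spikes = [1 if j % 20 == 0 else 0 for j in range(n_in)]

dense_out, dense_ops = dense_layer(weights, spikes)
event_out, event_ops = event_driven_layer(weights, spikes)
print(dense_ops, event_ops, dense_out == event_out)  # 100000 5000 True
```

Both paths produce the same output, but the event-driven one does work proportional to the number of spikes. The saving shrinks as activity rises, which is why the advantage applies to "certain types of workloads" rather than all of them.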

FPGAs, on the other hand, provide a flexible and reconfigurable hardware platform that can be optimized for specific inference tasks. While traditionally not as energy-efficient as Application-Specific Integrated Circuits (ASICs), FPGAs have made substantial progress in recent years. Advanced FPGA architectures, coupled with improved design tools and optimization techniques, have significantly reduced power consumption while maintaining high performance for inference tasks.

When comparing the energy efficiency of neuromorphic systems and FPGAs for edge inference in 2025, several factors come into play. Neuromorphic systems excel in tasks that align well with their brain-inspired architecture, such as real-time sensor processing and anomaly detection. These systems can achieve ultra-low power consumption, often operating in the milliwatt range, making them ideal for battery-powered edge devices with continuous monitoring requirements.
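To see why milliwatt-range operation matters for battery-powered devices, a back-of-the-envelope runtime estimate helps. The following sketch uses purely hypothetical numbers (a 220 mAh, 3.0 V cell and illustrative power draws); none of them are measurements of any specific neuromorphic chip or FPGA.

```python
def battery_life_hours(capacity_mah, voltage_v, avg_power_mw):
    """Idealized runtime: stored energy (mWh) divided by average power (mW)."""
    energy_mwh = capacity_mah * voltage_v
    return energy_mwh / avg_power_mw


def average_power_mw(duty_cycle, active_mw, sleep_mw):
    """Average power of a device that is active for a fraction of the time."""
    return duty_cycle * active_mw + (1.0 - duty_cycle) * sleep_mw


# Hypothetical battery and power figures, for illustration only.
CAPACITY_MAH, VOLTAGE_V = 220, 3.0

always_on_5mw = battery_life_hours(CAPACITY_MAH, VOLTAGE_V, 5.0)
duty_cycled = battery_life_hours(
    CAPACITY_MAH, VOLTAGE_V, average_power_mw(0.1, active_mw=500.0, sleep_mw=1.0)
)
print(f"always-on at 5 mW: {always_on_5mw:.0f} h")
print(f"500 mW device at 10% duty cycle: {duty_cycled:.1f} h")
```

The estimate ignores converter losses and battery derating, but it shows the shape of the trade-off: a device that must monitor continuously benefits most from a low always-on power figure, while a higher-power device depends on aggressive duty cycling.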

FPGAs, while generally consuming more power than neuromorphic systems, offer greater flexibility and adaptability. They can be optimized for a wider range of inference tasks and can be reconfigured in the field to accommodate evolving algorithms. Recent advancements in FPGA technology, such as the integration of hard AI accelerators and the use of lower-power process nodes, have significantly improved their energy efficiency for inference workloads.

As we approach 2025, the choice between neuromorphic computing and FPGAs for edge inference will largely depend on the specific application requirements. Neuromorphic systems are likely to dominate in scenarios where ultra-low power consumption is paramount, and the workload aligns well with their architecture. FPGAs will continue to be preferred in applications requiring flexibility, higher computational throughput, and the ability to adapt to changing algorithms.

It's worth noting that hybrid approaches combining neuromorphic elements with FPGA technology are also emerging, potentially offering the best of both worlds in terms of energy efficiency and flexibility. These hybrid solutions may play an increasingly important role in edge inference applications by 2025, providing optimized performance across a broader range of use cases.

Scalability and Adaptability Analysis

Scalability and adaptability are crucial factors in determining the viability of neuromorphic and FPGA platforms for edge inference in 2025. Both technologies offer unique advantages and challenges in terms of scaling to meet future demands and adapting to evolving computational requirements.

Neuromorphic systems, inspired by the human brain's architecture, demonstrate promising scalability potential. These systems can theoretically scale to billions of neurons and synapses, mirroring the complexity of biological neural networks. This scalability is particularly advantageous for handling large-scale, parallel processing tasks common in edge inference applications. As neuromorphic hardware continues to evolve, we can expect improvements in energy efficiency and processing speed, making it increasingly suitable for edge deployment.

However, the adaptability of neuromorphic systems presents some challenges. While they excel at certain types of computations, particularly those involving pattern recognition and sensory processing, they may struggle with traditional algorithmic tasks. This limitation could potentially restrict their applicability in diverse edge computing scenarios that require a mix of neural network-based and conventional computations.

FPGAs, on the other hand, offer excellent adaptability due to their reconfigurable nature. This flexibility allows FPGAs to be reprogrammed on-the-fly to accommodate different types of algorithms and computational models. In the context of edge inference, this adaptability is particularly valuable as it enables rapid deployment of new AI models and algorithms without hardware changes. FPGAs can also be optimized for specific tasks, potentially offering better performance and energy efficiency for certain applications compared to general-purpose processors.

Scalability in FPGAs is primarily limited by their physical size and power consumption. While FPGA technology continues to advance, with increasing logic density and improved power efficiency, scaling to very large neural networks may still pose challenges. However, for many edge inference tasks, the scalability of current and near-future FPGAs is likely to be sufficient.

Through 2025 and beyond, both neuromorphic and FPGA technologies are expected to advance significantly. Neuromorphic systems may benefit from improved manufacturing processes and novel materials, potentially leading to more compact and efficient designs suitable for edge deployment. FPGAs are likely to gain logic density, power efficiency, and specialized AI acceleration features, further improving their suitability for edge inference.

The choice between neuromorphic and FPGA platforms for edge inference in 2025 will largely depend on the specific application requirements and the pace of technological advancements in both fields. Applications requiring massive parallelism and brain-like processing may favor neuromorphic solutions, while those demanding high flexibility and adaptability to diverse computational tasks may lean towards FPGAs. Hybrid solutions combining elements of both technologies could also emerge, offering a balance of scalability and adaptability for edge inference applications.
