How to Convert Trained ANN Models to SNNs for Deployment on Neuromorphic Hardware

AUG 20, 2025 · 9 MIN READ

ANN to SNN Conversion Background and Objectives

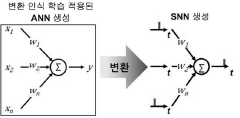

The conversion of Artificial Neural Networks (ANNs) to Spiking Neural Networks (SNNs) for deployment on neuromorphic hardware represents a significant advancement in the field of neural network implementation. This transition aims to bridge the gap between the high performance of ANNs and the energy efficiency of brain-inspired neuromorphic systems.

The development of ANNs has led to remarkable achievements in various domains, including image recognition, natural language processing, and decision-making systems. However, these networks typically require substantial computational resources and energy when deployed on conventional von Neumann architectures. In contrast, SNNs operate on principles more closely aligned with biological neural systems, using discrete spikes to process and transmit information.

The primary objective of ANN to SNN conversion is to leverage the best of both worlds: the well-established training methodologies and performance of ANNs, combined with the energy efficiency and potential for real-time processing offered by SNNs when implemented on neuromorphic hardware. This conversion process seeks to maintain the functional capabilities of the original ANN while adapting it to the spiking paradigm.

Historically, the spiking neuron models that underpin SNNs date back to the mid-20th century, with early biophysical models such as the Hodgkin-Huxley model (1952). However, the practical implementation of large-scale SNNs has only become feasible in recent years with advancements in neuromorphic hardware and conversion techniques.

The evolution of ANN to SNN conversion techniques has seen several key milestones. Early approaches focused on direct weight transfer and threshold adjustment, while more recent methods involve sophisticated rate coding schemes and temporal coding strategies. The development of specialized neuromorphic hardware platforms, such as IBM's TrueNorth and Intel's Loihi, has further accelerated research in this area.

Current technological trends indicate a growing interest in edge computing and low-power AI applications, making ANN to SNN conversion increasingly relevant. The potential for SNNs to perform efficient inference with minimal energy consumption aligns well with the demands of IoT devices, autonomous systems, and other resource-constrained environments.

Looking forward, the field of ANN to SNN conversion faces several challenges and opportunities. These include improving the accuracy of converted models, reducing the latency of spiking networks, and developing more sophisticated training algorithms specifically tailored for SNNs. Additionally, there is a push towards creating hybrid systems that can seamlessly integrate both ANN and SNN components to optimize performance across different tasks and hardware configurations.

Neuromorphic Hardware Market Analysis

The neuromorphic hardware market is experiencing significant growth and transformation, driven by the increasing demand for energy-efficient computing solutions and the rise of artificial intelligence applications. This market segment is poised for substantial expansion in the coming years, with several key factors contributing to its development.

Neuromorphic hardware, designed to mimic the structure and function of biological neural networks, offers unique advantages in terms of power efficiency and parallel processing capabilities. These characteristics make it particularly attractive for edge computing, Internet of Things (IoT) devices, and AI-driven applications where energy consumption and real-time processing are critical factors.

The market for neuromorphic hardware is currently in its nascent stages but is expected to grow rapidly. Major technology companies and research institutions are investing heavily in the development of neuromorphic chips and systems, recognizing their potential to revolutionize computing paradigms. This investment is likely to accelerate market growth and drive innovation in the field.

One of the primary drivers of the neuromorphic hardware market is the increasing adoption of AI and machine learning technologies across various industries. As these technologies become more prevalent, the demand for specialized hardware capable of efficiently running neural network models is expected to surge. Neuromorphic hardware, with its ability to process information in a manner similar to the human brain, is well-positioned to meet this demand.

The automotive industry is emerging as a significant market for neuromorphic hardware, particularly in the development of autonomous vehicles. These systems require real-time processing of vast amounts of sensor data, making neuromorphic chips an attractive solution due to their low latency and energy efficiency. Similarly, the healthcare sector is exploring neuromorphic hardware for applications such as brain-computer interfaces and medical imaging analysis.

Despite the promising outlook, the neuromorphic hardware market faces several challenges. The technology is still in its early stages, and there is a need for standardization and ecosystem development to facilitate wider adoption. Additionally, the complexity of designing and manufacturing neuromorphic chips presents a barrier to entry for smaller companies, potentially limiting market competition.

Looking ahead, the neuromorphic hardware market is expected to see continued growth and innovation. As the technology matures and becomes more accessible, it is likely to find applications in an increasingly diverse range of fields, from robotics to financial modeling. The convergence of neuromorphic hardware with other emerging technologies, such as quantum computing and 5G networks, may also open up new possibilities and market opportunities.

ANN-SNN Conversion Challenges

The conversion of trained Artificial Neural Networks (ANNs) to Spiking Neural Networks (SNNs) for deployment on neuromorphic hardware presents several significant challenges. One of the primary obstacles is the fundamental difference in information encoding between these two types of networks. ANNs typically use continuous-valued activations, while SNNs rely on discrete spike events to transmit information.

This disparity necessitates a careful translation of the ANN's learned representations into a spike-based format, which can lead to a loss of precision and accuracy. The conversion process must ensure that the temporal dynamics of spiking neurons accurately capture the computational logic embedded in the ANN's weights and activations.

Another major challenge lies in maintaining the performance of the converted SNN compared to the original ANN. The discretization of continuous values into spike trains can introduce quantization errors, potentially degrading the network's overall accuracy. This issue is particularly pronounced for deep neural networks, where small errors can propagate and amplify through multiple layers.

The conversion process also faces difficulties in handling non-linear activation functions commonly used in ANNs, such as ReLU or sigmoid. These functions need to be approximated using spike-based mechanisms, which can alter the network's behavior and require careful calibration to preserve the original functionality.
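
As a minimal sketch of how a spike-based mechanism can stand in for ReLU, the example below simulates a single integrate-and-fire neuron with reset by subtraction and compares its firing rate with the ReLU output. The function name `if_neuron_rate`, the threshold of 1.0, and the 100 timesteps are illustrative assumptions, not values from any specific conversion tool.

```python
def if_neuron_rate(input_current, threshold=1.0, timesteps=100):
    """Simulate one integrate-and-fire neuron driven by a constant input.

    Returns the firing rate scaled by the threshold. For a constant input this
    approximates min(max(input_current, 0), threshold), i.e. a clipped ReLU.
    """
    membrane = 0.0
    spikes = 0
    for _ in range(timesteps):
        membrane += input_current          # integrate the input
        if membrane >= threshold:
            spikes += 1                    # emit a spike
            membrane -= threshold          # reset by subtraction keeps the residual
    return spikes / timesteps * threshold


def relu(x):
    return max(x, 0.0)


if __name__ == "__main__":
    for x in (-0.5, 0.0, 0.25, 0.5, 0.9, 2.0):
        print(f"x={x:+.2f}  relu={relu(x):.3f}  snn_rate={if_neuron_rate(x):.3f}")
```

Negative inputs never reach the threshold, so the rate is zero, while inputs above the threshold saturate at one spike per step. That saturation is one reason activations are usually rescaled before conversion.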

Energy efficiency, while a potential advantage of SNNs, presents its own set of challenges during conversion. Optimizing the spike encoding to minimize power consumption while maintaining computational accuracy is a delicate balancing act that requires sophisticated algorithms and fine-tuning.

Temporal dynamics introduce another layer of complexity. SNNs operate in the time domain, and the conversion process must account for the temporal aspects of information processing, which are not explicitly present in standard ANNs. This includes determining appropriate time constants for neurons and synapses, as well as managing the trade-off between processing speed and accuracy.
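
The trade-off between processing speed and accuracy can be illustrated with a small calculation. The sketch below assumes activations normalized to [0, 1] and an idealized rate code with at most one spike per timestep, so that only T + 1 distinct values can be represented in T steps. The helper names are hypothetical.

```python
def rate_quantize(activation, timesteps):
    """Value an idealized rate code can represent for `activation`.

    Assumes activations in [0, 1] and at most one spike per step, so only
    the levels 0/T, 1/T, ..., T/T are available.
    """
    clipped = min(max(activation, 0.0), 1.0)
    return int(clipped * timesteps) / timesteps


def worst_case_error(timesteps, samples=1000):
    """Largest gap between an activation and its rate-coded value on a grid."""
    grid = [i / samples for i in range(samples + 1)]
    return max(abs(a - rate_quantize(a, timesteps)) for a in grid)


if __name__ == "__main__":
    for timesteps in (4, 16, 64, 256):
        print(f"T={timesteps:4d}  worst-case error={worst_case_error(timesteps):.4f}")
```

Under these assumptions the per-neuron error shrinks roughly in proportion to 1/T, so halving the error costs about twice the inference latency.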

Lastly, the hardware constraints of neuromorphic systems pose additional challenges. The limited precision of synaptic weights, restrictions on network connectivity, and specific neuron models supported by the hardware must all be considered during the conversion process. Adapting the ANN architecture to fit within these constraints while preserving its functionality is a non-trivial task that often requires iterative refinement and optimization.
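
To make the limited-precision constraint concrete, here is a hedged sketch of symmetric uniform quantization of one layer's weights to signed integers. The bit width, the example weights, and the function name `quantize_weights` are assumptions for illustration; real platforms define their own weight formats.

```python
def quantize_weights(weights, bits=8):
    """Map float weights to signed integers of the given bit width.

    Returns the integer weights and the scale needed to recover
    approximate float values (weight ~= integer * scale).
    """
    max_int = 2 ** (bits - 1) - 1                      # e.g. 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / max_int if max_abs > 0 else 1.0
    quantized = [max(-max_int, min(max_int, round(w / scale))) for w in weights]
    return quantized, scale


if __name__ == "__main__":
    weights = [0.82, -0.41, 0.05, -1.27, 0.0]
    for bits in (8, 4):
        q, scale = quantize_weights(weights, bits=bits)
        recovered = [qi * scale for qi in q]
        worst = max(abs(w - r) for w, r in zip(weights, recovered))
        print(f"{bits}-bit: {q}  worst error={worst:.4f}")
```

For an integrate-and-fire neuron without leak or bias, dividing the firing threshold by the same `scale` lets the integer weights be used directly, since scaling weights and threshold together leaves the spike times unchanged.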

Current ANN-SNN Conversion Methods

01 Conversion techniques for ANN to SNN

Various methods are employed to convert Artificial Neural Networks (ANNs) to Spiking Neural Networks (SNNs). These techniques often involve mapping ANN parameters to SNN equivalents, adjusting activation functions, and optimizing for spike-based computations. The conversion process aims to maintain the accuracy of the original ANN while leveraging the energy efficiency and biological plausibility of SNNs.

- Conversion techniques for ANN to SNN: Various methods and algorithms are developed to convert Artificial Neural Networks (ANNs) to Spiking Neural Networks (SNNs). These techniques aim to preserve the functionality and accuracy of the original ANN while adapting it to the spiking neuron model. The conversion process often involves mapping ANN activation functions to spike rates or timing, and adjusting synaptic weights to maintain network performance.

- Optimization of SNN conversion for energy efficiency: Researchers focus on optimizing the ANN to SNN conversion process to improve energy efficiency in neuromorphic hardware implementations. This includes developing techniques to reduce the number of spikes required for computation, minimizing power consumption, and maximizing the utilization of neuromorphic architectures.

- Temporal coding in ANN to SNN conversion: Exploration of temporal coding schemes in the conversion process from ANNs to SNNs. This approach aims to leverage the time-based nature of spiking neurons to encode information more efficiently and potentially improve the performance of converted networks in tasks requiring temporal processing.

- Hardware-aware ANN to SNN conversion: Development of conversion techniques that take into account the specific characteristics and constraints of neuromorphic hardware platforms. These methods aim to optimize the converted SNN for implementation on specific neuromorphic chips or systems, considering factors such as limited precision, connectivity constraints, and available neuron models.

- Hybrid ANN-SNN architectures: Exploration of hybrid architectures that combine elements of both ANNs and SNNs. These approaches aim to leverage the strengths of both paradigms, potentially allowing for more efficient conversion processes or improved performance in specific tasks. Hybrid models may incorporate spiking layers within traditional ANN architectures or use ANN-like processing in SNN frameworks.

02 Temporal coding in ANN to SNN conversion

Temporal coding strategies are crucial in the conversion of ANNs to SNNs. These methods focus on encoding information in the timing of spikes rather than just their rate. Techniques may include time-to-first-spike encoding, phase coding, or inter-spike interval coding. Such approaches can help preserve the temporal dynamics of the original ANN and improve the efficiency of the resulting SNN.

03 Hardware implementation of ANN to SNN conversion

The conversion of ANNs to SNNs often considers hardware implementation aspects. This includes optimizing the converted models for neuromorphic hardware, designing specialized circuits for spike processing, and developing efficient memory architectures. The goal is to create SNNs that can be deployed on energy-efficient, brain-inspired computing platforms.

04 Training algorithms for ANN to SNN conversion

Specialized training algorithms are developed to facilitate the conversion of ANNs to SNNs. These algorithms may involve adjusting learning rules, modifying backpropagation techniques for spiking networks, or developing hybrid training approaches. The focus is on preserving or enhancing the performance of the original ANN while adapting it to the spiking domain.

05 Performance optimization in ANN to SNN conversion

Various techniques are employed to optimize the performance of converted SNNs. This includes methods for reducing latency, improving energy efficiency, and maintaining or enhancing accuracy. Approaches may involve pruning unnecessary connections, quantizing weights, or developing novel neuron models that better approximate the behavior of ANNs in the spiking domain.

Key Players in Neuromorphic Computing

The field of converting trained Artificial Neural Networks (ANNs) to Spiking Neural Networks (SNNs) for neuromorphic hardware deployment is in its early growth stage. The market size is expanding as neuromorphic computing gains traction, driven by demand for energy-efficient AI solutions. While the technology is still maturing, several key players are advancing its development. Companies like IBM, Intel, and Qualcomm are investing heavily in neuromorphic hardware, while academic institutions such as Zhejiang University and the University of Hong Kong are contributing significant research. The competition is intensifying as both established tech giants and specialized startups like Mipsology work to overcome challenges in accuracy and efficiency of ANN-to-SNN conversion.

International Business Machines Corp.

Technical Solution: IBM has developed a comprehensive approach for converting trained artificial neural networks (ANNs) to spiking neural networks (SNNs) for neuromorphic hardware deployment. Their method involves several key steps:

1) Analyzing the ANN architecture and adjusting it for SNN compatibility.

2) Converting ANN activation functions to spike-based equivalents.

3) Implementing spike encoding schemes to represent continuous input data.

4) Optimizing synaptic weights and neuron parameters for efficient spiking behavior.

5) Applying temporal coding techniques to improve information density in spike trains.

IBM's approach also includes a novel technique called "spike-timing-dependent backpropagation" to fine-tune the converted SNN, achieving accuracy levels comparable to the original ANN [1][3]. Additionally, they've developed specialized neuromorphic hardware, such as the TrueNorth chip, designed to efficiently run these converted SNNs [2].

Strengths: Comprehensive conversion pipeline, hardware-software co-design approach, and high accuracy retention. Weaknesses: Potential complexity in implementation and optimization for different ANN architectures.

Micron Technology, Inc.

Technical Solution: Micron Technology has developed a unique approach to converting ANNs to SNNs for neuromorphic hardware, focusing on memory-centric computing. Their method leverages in-memory computing capabilities of their advanced memory technologies, such as 3D XPoint and MRAM, to efficiently implement SNN operations. The conversion process involves:

1) Mapping ANN weights to memristive devices within memory arrays.

2) Implementing neuron dynamics using analog circuitry integrated with memory.

3) Developing spike-based learning rules compatible with in-memory computing paradigms.

Micron's approach allows for direct implementation of SNNs on their neuromorphic hardware without the need for extensive off-chip data movement, significantly reducing power consumption and latency [4]. They have demonstrated up to 100x improvement in energy efficiency compared to conventional von Neumann architectures for certain neural network tasks [5].

Strengths: Highly energy-efficient, leverages advanced memory technologies, reduces data movement. Weaknesses: May require specialized hardware, potential limitations in scaling to very large networks.

Core Innovations in Conversion Algorithms

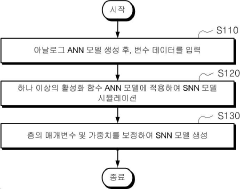

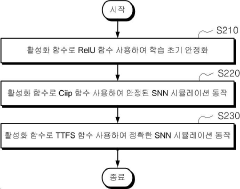

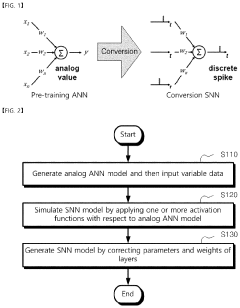

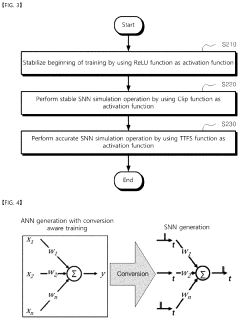

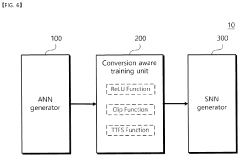

Method and System for Spiking Neural Network Based Conversion Aware Training

Patent (pending): KR1020240041798A

Innovation

- A method and system for conversion-aware training: an ANN model is generated, activation functions such as ReLU, Clip, and Time to First Spike (TTFS) are applied to simulate an SNN model, and layer parameters and weights are adjusted based on the simulation results to minimize data loss.

Method and system of training spiking neural network based conversion aware training

Patent (pending): US20240112024A1

Innovation

- A conversion aware training method and system that generates an SNN model by simulating a spiking neural network using activation functions like ReLU, Clip, and Time to First Spike (TTFS) on an analog ANN model, correcting parameters and weights to minimize data loss and improve accuracy.

Energy Efficiency Considerations

Energy efficiency is a critical consideration when converting trained Artificial Neural Network (ANN) models to Spiking Neural Networks (SNNs) for deployment on neuromorphic hardware. The primary motivation behind this conversion is to leverage the inherent energy efficiency of neuromorphic systems, which mimic the brain's biological neural networks. These systems operate on sparse, event-driven principles, potentially offering significant power savings compared to traditional von Neumann architectures.

When converting ANNs to SNNs, several factors impact energy efficiency. The choice of neuron model is crucial, with simpler models like Integrate-and-Fire (IF) or Leaky Integrate-and-Fire (LIF) neurons generally consuming less energy than more complex models. However, this simplification must be balanced against the need for accurate representation of the original ANN's functionality.

The encoding scheme used to convert continuous ANN activations into discrete spike trains also affects energy consumption. Rate coding, where information is encoded in the frequency of spikes, is straightforward but can be energy-intensive. Temporal coding schemes, which encode information in the precise timing of spikes, may offer improved energy efficiency but can be more challenging to implement accurately.
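
The difference in spike counts between the two families of codes can be seen in a small sketch. It assumes input values normalized to [0, 1], a deterministic rate encoder, and a simple linear time-to-first-spike (TTFS) mapping in which larger values fire earlier. Both encoders are illustrative simplifications, not the scheme of any particular platform.

```python
def rate_encode(value, timesteps):
    """Deterministic rate code: spike whenever the accumulated value reaches 1."""
    value = min(max(value, 0.0), 1.0)
    train, accumulator = [], 0.0
    for _ in range(timesteps):
        accumulator += value
        if accumulator >= 1.0:
            train.append(1)
            accumulator -= 1.0
        else:
            train.append(0)
    return train


def ttfs_encode(value, timesteps):
    """Time-to-first-spike code: larger values spike earlier, zero never spikes."""
    value = min(max(value, 0.0), 1.0)
    train = [0] * timesteps
    if value > 0.0:
        spike_time = round((1.0 - value) * (timesteps - 1))
        train[spike_time] = 1
    return train


if __name__ == "__main__":
    for value in (0.25, 0.75, 1.0):
        rate_spikes = sum(rate_encode(value, 16))
        ttfs_spikes = sum(ttfs_encode(value, 16))
        print(f"value={value:.2f}  rate spikes={rate_spikes}  TTFS spikes={ttfs_spikes}")
```

In this sketch the rate code emits a number of spikes proportional to the value, while TTFS emits at most one spike per input, which is where its potential energy advantage comes from. The cost is that a single mistimed spike changes the decoded value.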

Spike sparsity is another key factor in energy efficiency. SNNs that generate fewer spikes generally consume less energy, as each spike event requires power. Techniques such as threshold adjustment and careful weight scaling during the conversion process can help maintain high accuracy while promoting spike sparsity.
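
One way to realize the weight scaling mentioned above is data-based weight normalization, sketched below for a small bias-free ReLU network. The sketch assumes inputs in [0, 1] and a firing threshold of 1.0 in the converted network. The function names, layer sizes, and calibration samples are hypothetical.

```python
def relu_layer(weights, inputs):
    """Dense ReLU layer without bias; `weights` holds one row per output unit."""
    return [max(0.0, sum(w * x for w, x in zip(row, inputs))) for row in weights]


def normalize_weights(layers, calibration_inputs):
    """Rescale each layer so its largest calibration activation becomes 1.0."""
    normalized = []
    previous_max = 1.0                      # inputs are assumed to lie in [0, 1]
    activations = calibration_inputs
    for weights in layers:
        # Activations are measured on the original (unscaled) network.
        activations = [relu_layer(weights, sample) for sample in activations]
        layer_max = max(max(sample) for sample in activations) or 1.0
        factor = previous_max / layer_max
        normalized.append([[w * factor for w in row] for row in weights])
        previous_max = layer_max
    return normalized


if __name__ == "__main__":
    layers = [[[2.0, 0.0], [1.0, 3.0]], [[1.0, 1.0]]]
    calibration = [[1.0, 0.5], [0.2, 1.0]]
    for index, weights in enumerate(normalize_weights(layers, calibration), start=1):
        print(f"layer {index}: {weights}")
```

After normalization, no calibration activation exceeds the threshold, so a neuron firing at most once per timestep can represent it without saturating. Scaling by a high percentile instead of the maximum is a variation that tends to increase firing rates at the cost of occasional clipping.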

The hardware implementation of SNNs also plays a crucial role in energy efficiency. Neuromorphic chips designed specifically for SNN computation, such as IBM's TrueNorth or Intel's Loihi, can offer significant energy savings compared to running SNNs on traditional GPUs or CPUs. These specialized chips often incorporate features like local memory and event-driven processing, which align well with the sparse, asynchronous nature of SNNs.

Optimizing network topology during the conversion process can further enhance energy efficiency. This may involve pruning unnecessary connections, reducing the precision of weights, or employing techniques like quantization to minimize the computational and memory requirements of the network.
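
As an illustration of pruning, the sketch below applies simple magnitude-based pruning to one weight matrix. The function name `prune_by_magnitude` and the 50% sparsity target are assumptions, and practical pipelines typically fine-tune the network after pruning.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the smallest-magnitude fraction `sparsity` of the weights.

    Weights tied with the cutoff magnitude are pruned as well, so the
    resulting sparsity can be slightly higher than requested.
    """
    magnitudes = sorted(abs(w) for row in weights for w in row)
    n_prune = int(len(magnitudes) * sparsity)
    if n_prune == 0:
        return [row[:] for row in weights]
    cutoff = magnitudes[n_prune - 1]
    return [[0.0 if abs(w) <= cutoff else w for w in row] for row in weights]


if __name__ == "__main__":
    weights = [[0.9, -0.05, 0.3], [0.01, -0.7, 0.2]]
    pruned = prune_by_magnitude(weights, sparsity=0.5)
    remaining = sum(1 for row in pruned for w in row if w != 0.0)
    print(pruned, f"({remaining} synapses remain)")
```

Each removed synapse is one fewer weight to store and one fewer accumulation per incoming spike, which is why pruning addresses both memory and energy.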

Finally, the training methodology used in the conversion process can impact energy efficiency. Techniques such as surrogate gradient learning or direct training of SNNs may lead to more energy-efficient networks compared to straightforward ANN-to-SNN conversion methods. These approaches can potentially result in SNNs that are better optimized for the target neuromorphic hardware, maximizing energy savings while maintaining high performance.
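
The idea behind surrogate gradient learning can be sketched in a few lines: the forward pass keeps the non-differentiable spike function, while the backward pass substitutes a smooth stand-in for its derivative. The fast-sigmoid shape and the `slope` parameter below are one commonly used choice, given here as an assumption rather than as the method of any particular framework.

```python
def spike_forward(membrane, threshold=1.0):
    """Forward pass: a hard threshold, whose true derivative is zero almost everywhere."""
    return 1.0 if membrane >= threshold else 0.0


def spike_surrogate_grad(membrane, threshold=1.0, slope=5.0):
    """Backward pass stand-in: derivative of x / (1 + slope * |x|) at x = membrane - threshold."""
    return 1.0 / (1.0 + slope * abs(membrane - threshold)) ** 2


if __name__ == "__main__":
    for membrane in (0.0, 0.8, 1.0, 1.2, 2.0):
        print(
            f"v={membrane:.1f}  spike={spike_forward(membrane):.0f}  "
            f"surrogate grad={spike_surrogate_grad(membrane):.3f}"
        )
```

The surrogate is largest when the membrane potential sits at the threshold and decays on either side, so neurons close to firing receive the strongest learning signal.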

Neuromorphic Hardware Compatibility

Neuromorphic hardware presents a unique set of challenges and opportunities for deploying Spiking Neural Networks (SNNs) converted from Artificial Neural Networks (ANNs). The compatibility between these converted models and neuromorphic hardware is crucial for efficient and effective implementation.

Neuromorphic hardware is designed to mimic the structure and function of biological neural networks, utilizing spike-based communication and parallel processing. This architecture offers significant advantages in terms of energy efficiency and real-time processing capabilities. However, it also introduces specific constraints that must be considered when converting ANN models to SNNs for deployment.

One of the primary compatibility issues is the representation of neural activations. While ANNs typically use continuous-valued activations, SNNs operate on discrete spike events. This fundamental difference requires careful consideration during the conversion process to ensure that the information encoded in the ANN's activations is accurately translated into spike patterns.

Temporal dynamics play a crucial role in neuromorphic hardware compatibility. SNNs inherently incorporate time as a dimension in their computations, whereas traditional ANNs do not. Converting ANN models to SNNs must account for this temporal aspect, including the integration of incoming spikes over time and the generation of output spikes based on neuron membrane potentials.
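
The integration of incoming spikes over time can be sketched with a discrete-time leaky integrate-and-fire (LIF) neuron. The weight, threshold, time constants, and hard reset below are illustrative assumptions, since supported neuron models differ between platforms.

```python
import math


def lif_spike_train(input_spikes, weight=0.6, tau=10.0, threshold=1.0, dt=1.0):
    """Simulate a leaky integrate-and-fire neuron on a binary input spike train."""
    decay = math.exp(-dt / tau)             # fraction of potential kept per step
    membrane = 0.0
    output = []
    for spike in input_spikes:
        membrane = membrane * decay + weight * spike
        if membrane >= threshold:
            output.append(1)
            membrane = 0.0                  # hard reset after an output spike
        else:
            output.append(0)
    return output


if __name__ == "__main__":
    inputs = [1, 1, 0, 0]
    print("slow leak (tau=10): ", lif_spike_train(inputs, tau=10.0))
    print("fast leak (tau=0.5):", lif_spike_train(inputs, tau=0.5))
```

With the slow leak, two consecutive input spikes accumulate past the threshold and produce an output spike. With the fast leak, the first input has mostly decayed before the second arrives, so the neuron stays silent. This is why time constants must be chosen to match the hardware's timestep and the encoding used.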

Memory constraints are another important factor in neuromorphic hardware compatibility. Neuromorphic chips often have limited on-chip memory, necessitating efficient representation of synaptic weights and neuron states. Conversion techniques must consider these limitations and potentially implement strategies such as weight quantization or sparse connectivity to optimize memory usage.

Power efficiency is a key advantage of neuromorphic hardware, and converted SNN models should be designed to leverage this benefit. This involves minimizing unnecessary spike activity and optimizing neuron activation thresholds to achieve a balance between computational accuracy and energy consumption.

Lastly, the specific architecture and capabilities of the target neuromorphic hardware must be taken into account during the conversion process. Different neuromorphic platforms may have varying neuron models, synaptic plasticity mechanisms, and connectivity patterns. Ensuring compatibility requires tailoring the converted SNN to align with the hardware's specific features and limitations.
