Kalman Filter Improvements For High-Flow Data Rates

SEP 12, 2025 | 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Kalman Filter Evolution and Objectives

The Kalman filter, introduced by Rudolf E. Kalman in 1960, has evolved over the past six decades into a cornerstone algorithm of estimation theory and control systems. One of its first major applications was navigation and trajectory estimation for the Apollo program, and this recursive estimator has since spread to numerous domains including robotics, navigation, economics, and signal processing. The fundamental principle of the Kalman filter (combining model-based predictions with noisy measurements, each weighted by its uncertainty, to produce state estimates that are optimal in the minimum-mean-square-error sense for linear systems with Gaussian noise) remains unchanged, but its implementations have grown increasingly sophisticated.
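
To make the predict-and-correct principle concrete, here is a minimal scalar sketch. It assumes a random-walk state observed directly through additive noise; the class name `ScalarKalman` and the noise values are illustrative assumptions, not taken from any implementation discussed in this report.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """1-D Kalman filter for x_k = x_{k-1} + w_k (random walk), z_k = x_k + v_k."""
    x: float  # current state estimate
    p: float  # variance of the estimate
    q: float  # process-noise variance (assumed known)
    r: float  # measurement-noise variance (assumed known)

    def predict(self) -> None:
        # Random-walk model: the mean carries over, the uncertainty grows by q.
        self.p += self.q

    def update(self, z: float) -> float:
        # The gain weighs the prediction against the measurement by their variances.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)  # correct with the innovation z - x
        self.p *= 1.0 - k           # the correction shrinks the variance
        return self.x


kf = ScalarKalman(x=0.0, p=1.0, q=1e-4, r=0.25)
for z in [1.1, 0.9, 1.05, 0.98, 1.02]:
    kf.predict()
    kf.update(z)
print(f"estimate={kf.x:.3f}, variance={kf.p:.4f}")
```

After five noisy readings the estimate settles near 1.0 and its variance falls well below the single-measurement variance `r`, which is the "combine prediction and measurement" effect in its simplest form.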

The evolution of Kalman filtering techniques has been driven by the growing complexity of systems and the rapid increase in data generation rates. Traditional Kalman filters work well for linear systems with moderate data flow, but face significant challenges with high-frequency data streams exceeding millions of samples per second. Variants address nonlinearity and computational cost in different ways: the Extended Kalman Filter (EKF) linearizes nonlinear models around the current estimate, the Unscented Kalman Filter (UKF) propagates a deterministic set of sigma points through the nonlinear model, and the Ensemble Kalman Filter (EnKF) represents uncertainty with a Monte Carlo ensemble, which lets it scale to very high-dimensional states.

Recent technological advancements in IoT devices, autonomous vehicles, and high-precision manufacturing have dramatically increased data generation rates, pushing conventional Kalman filter implementations beyond their operational limits. The computational burden of matrix operations in standard implementations becomes prohibitive when processing high-velocity data streams, leading to processing bottlenecks and estimation delays that compromise real-time performance.

The primary objective of improving Kalman filters for high-flow data rates is to maintain estimation accuracy while significantly reducing computational complexity and latency. This involves developing algorithmic optimizations that can process incoming data streams at rates exceeding 1 million samples per second without sacrificing the filter's core functionality or estimation quality. Such improvements would enable real-time state estimation in ultra-high-frequency applications like quantum computing measurements, high-speed robotics, and advanced radar systems.
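
One well-established way to cut per-sample cost, not specifically named above, is the steady-state Kalman filter: when the model and noise statistics are time-invariant, the covariance recursion converges, so the gain can be computed once offline and each sample then needs only O(n²) work for an n-dimensional state instead of O(n³). The sketch below assumes the scalar random-walk model; `steady_state_gain` and `filter_constant_gain` are hypothetical helper names.

```python
import math


def steady_state_gain(q: float, r: float, tol: float = 1e-12, max_iter: int = 10_000) -> float:
    """Constant gain for x_k = x_{k-1} + w_k, z_k = x_k + v_k, found by iterating
    the Riccati recursion on the predicted variance until it stops changing."""
    p = r  # any positive starting variance converges for this model
    for _ in range(max_iter):
        p_next = p * r / (p + r) + q  # measurement update, then prediction
        converged = abs(p_next - p) < tol
        p = p_next
        if converged:
            break
    return p / (p + r)


def filter_constant_gain(zs, k: float, x0: float = 0.0):
    """Per sample: one subtraction and one multiply-add, no covariance bookkeeping."""
    x, out = x0, []
    for z in zs:
        x += k * (z - x)
        out.append(x)
    return out


k = steady_state_gain(q=1e-4, r=0.25)
# Cross-check against the closed form for this model: P = (q + sqrt(q^2 + 4qr)) / 2
p_closed = (1e-4 + math.sqrt(1e-8 + 4 * 1e-4 * 0.25)) / 2
print(k, p_closed / (p_closed + 0.25))
```

The trade-off is that a fixed gain is only near-optimal once start-up transients have died out and cannot react to changing noise levels, which is why it is usually paired with the kind of adaptive mechanisms described in the next paragraph.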

Another critical goal is enhancing the filter's adaptability to rapidly changing system dynamics, which are common in high-data-rate environments. This requires developing mechanisms for dynamic parameter tuning and covariance adaptation that can respond to system changes within microseconds rather than the milliseconds or seconds typical of traditional implementations.
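
Covariance adaptation can take many forms; a common one, shown here as a simplified form of the Sage-Husa estimator, tracks the measurement-noise variance from the innovation sequence. The sketch assumes a scalar random-walk model, and the forgetting factor `alpha` and floor `r_floor` are illustrative choices: a smaller `alpha` reacts more slowly but yields a less noisy estimate.

```python
import random


def adaptive_filter(zs, q, r0, alpha=0.02, r_floor=1e-6):
    """Scalar random-walk Kalman filter that re-estimates the measurement-noise
    variance r from the innovations with exponential forgetting (factor alpha)."""
    x, p, r = zs[0], r0, r0  # initialize from the first measurement
    xs, rs = [], []
    for z in zs[1:]:
        p += q                 # predict
        nu = z - x             # innovation; for this model E[nu^2] = p + r
        # Move r toward the innovation-implied value nu^2 - p, never below r_floor
        r = max(r_floor, (1 - alpha) * r + alpha * (nu * nu - p))
        k = p / (p + r)
        x += k * nu
        p *= 1 - k
        xs.append(x)
        rs.append(r)
    return xs, rs


# Constant true state 0; measurement noise std jumps from 0.1 to 1.0 halfway through.
rng = random.Random(0)
zs = [rng.gauss(0.0, 0.1 if i < 500 else 1.0) for i in range(1000)]
xs, rs = adaptive_filter(zs, q=1e-6, r0=1.0)
print(f"r before jump ~ {rs[498]:.4f}, r at end ~ {rs[-1]:.3f}")
```

With `alpha = 0.02` the estimate has an effective memory of roughly 50 samples, so the response time to a noise change is set by the sampling rate; at high data rates that window spans very little wall-clock time.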

The technical trajectory is now moving toward parallel and distributed Kalman filter architectures that leverage multi-core processors, GPUs, and specialized hardware accelerators. These approaches aim to decompose the filtering problem into parallelizable components that can be processed simultaneously, dramatically increasing throughput while maintaining the mathematical integrity of the estimation process.

Market Applications for High-Flow Data Processing

The high-flow data processing market has experienced exponential growth in recent years, driven by the proliferation of IoT devices, real-time analytics requirements, and the increasing complexity of data-intensive applications. The global market for high-flow data processing solutions reached approximately $78 billion in 2022 and is projected to grow at a CAGR of 24% through 2028, highlighting the critical importance of advanced filtering techniques like improved Kalman filters.

Autonomous vehicles represent one of the most demanding applications for high-flow data processing. These systems must integrate and process data from multiple sensors including LiDAR, radar, cameras, and ultrasonic sensors at rates exceeding 1GB per second. Enhanced Kalman filtering enables these vehicles to maintain accurate positioning and make split-second navigation decisions even in challenging environments with sensor noise or temporary signal loss.

Financial trading platforms have similarly embraced high-flow data processing technologies. Modern algorithmic trading systems analyze market data streams at microsecond intervals, with major exchanges processing millions of transactions per second. Improved Kalman filters help these systems detect meaningful patterns amidst market noise, enabling more accurate price prediction models and reducing false trading signals that could result in significant financial losses.

In industrial IoT environments, manufacturing facilities now deploy thousands of sensors monitoring equipment performance, environmental conditions, and production metrics. These networks generate terabytes of data daily that must be processed in real-time to detect anomalies, predict maintenance needs, and optimize production workflows. Advanced Kalman filtering techniques allow for more precise equipment monitoring by distinguishing between normal operational variations and early warning signs of potential failures.
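
Separating normal variation from early warning signs is often done with an innovation test: when the filter is consistent, the normalized innovation squared (NIS) follows a chi-square distribution with as many degrees of freedom as the measurement dimension, so values above a chosen quantile flag a sample. The sketch assumes a scalar random-walk model and a 99% threshold; `monitor` is a hypothetical name.

```python
CHI2_1DOF_99 = 6.635  # 99% quantile of the chi-square distribution with 1 degree of freedom


def monitor(zs, q, r, x0, p0):
    """Scalar random-walk filter with an innovation (NIS) test.
    Returns the indices of samples whose normalized innovation squared exceeds
    the threshold; flagged samples are not used to update the state."""
    x, p = x0, p0
    alarms = []
    for i, z in enumerate(zs):
        p += q                # predict
        nu = z - x            # innovation
        s = p + r             # its predicted variance
        if nu * nu / s > CHI2_1DOF_99:
            alarms.append(i)  # inconsistent with the model: flag, skip the update
            continue
        k = p / s
        x += k * nu
        p *= 1 - k
    return alarms


# Sensor reading around 1.0 with +/-0.05 ripple and a single spike at index 30
zs = [1.0 + 0.05 * (-1) ** i for i in range(50)]
zs[30] = 2.0
print(monitor(zs, q=1e-6, r=0.01, x0=1.0, p0=0.01))  # -> [30]
```

A single isolated alarm points to an outlier, while a run of consecutive alarms suggests a genuine change in the equipment's behavior; skipping the update on flagged samples keeps one bad reading from corrupting the state.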

The healthcare sector has emerged as another significant market for high-flow data processing. Modern medical devices generate continuous patient monitoring data, while imaging systems produce increasingly high-resolution outputs. Enhanced Kalman filters enable more accurate patient monitoring by reducing false alarms while ensuring genuine health concerns are promptly identified. In medical imaging, these filters improve image quality by reducing noise without sacrificing critical diagnostic details.

Aerospace and defense applications represent perhaps the most technically demanding market segment. Modern aircraft and defense systems incorporate hundreds of sensors operating at extremely high sampling rates. Improved Kalman filtering techniques are essential for applications ranging from missile guidance systems to aircraft navigation, where processing latency must be minimized while maintaining exceptional accuracy under challenging operational conditions.

Technical Limitations in High-Flow Kalman Filtering

Despite the proven effectiveness of Kalman filters in state estimation and noise reduction, several technical limitations emerge when applying these algorithms to high-flow data rate environments. The conventional Kalman filter architecture, originally designed for systems with moderate sampling frequencies, faces significant computational bottlenecks when processing data streams exceeding millions of samples per second, as commonly encountered in modern sensor networks, autonomous vehicles, and high-frequency trading systems.

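The primary computational constraint stems from the dense matrix operations performed in every filter iteration. For an n-dimensional state vector, propagating the covariance (the product F P Fᵀ of the state-transition matrix F and the covariance P) costs O(n³), and inverting or factorizing the m-by-m innovation covariance for m measurements costs O(m³). This growth is cubic rather than exponential, but it is steep: doubling the state dimension multiplies the covariance-propagation cost by roughly eight. When combined with high data throughput requirements, even specialized hardware implementations struggle to maintain real-time performance without introducing unacceptable latency.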
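
When the measurement noise covariance R is diagonal (uncorrelated sensor channels), the m-by-m inversion can be avoided by processing measurements one at a time: each step divides by a scalar, and in exact arithmetic the result equals the batch update. A pure-Python sketch under that assumption, with dense lists and the hypothetical name `sequential_update`; it does not reduce the O(n³) covariance prediction.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sequential_update(x: Vector, P: Matrix, H: Matrix, z: Vector, r: Vector) -> Tuple[Vector, Matrix]:
    """Kalman measurement update that processes m uncorrelated scalar measurements
    one at a time. Assumes R is diagonal (r holds its diagonal) and P is symmetric;
    each step divides by a scalar instead of inverting an m-by-m matrix."""
    n = len(x)
    x = list(x)
    P = [list(row) for row in P]
    for h, zi, ri in zip(H, z, r):
        ph = [sum(P[i][j] * h[j] for j in range(n)) for i in range(n)]  # P h^T
        s = sum(h[i] * ph[i] for i in range(n)) + ri                    # scalar innovation variance
        k = [v / s for v in ph]                                         # gain column
        nu = zi - sum(h[i] * x[i] for i in range(n))                    # scalar innovation
        x = [x[i] + k[i] * nu for i in range(n)]
        # P <- P - k (h P); for symmetric P, h P is the transpose of P h^T
        P = [[P[i][j] - k[i] * ph[j] for j in range(n)] for i in range(n)]
    return x, P


x, P = sequential_update([0.0, 0.0], [[2.0, 0.5], [0.5, 1.0]],
                         H=[[1.0, 0.0], [1.0, 1.0]], z=[1.0, 2.0], r=[0.5, 0.25])
print(x, P)
```

If R is not diagonal, the measurements can first be decorrelated with a Cholesky factor of R; that step is omitted here.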

Memory bandwidth limitations present another critical challenge. High-flow applications generate substantial intermediate calculation results that must be stored and retrieved rapidly. The memory access patterns of traditional Kalman implementations are often poorly optimized for modern cache hierarchies, resulting in frequent cache misses and memory bottlenecks that severely degrade performance in high-throughput scenarios.

Numerical stability issues become increasingly problematic at elevated data rates. Because the filter is recursive, a higher sampling rate means more recursions per second, so round-off errors accumulate faster in wall-clock terms. Conventional implementations using standard floating-point arithmetic can lose accuracy through round-off and matrix ill-conditioning, and the computed covariance matrix may drift away from being symmetric and positive definite, particularly in systems tracking fast-moving objects or rapidly changing phenomena.
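
A standard countermeasure for round-off-induced loss of symmetry and positive definiteness is the Joseph-form covariance update, which is valid for any gain and, in exact arithmetic, is a sum of positive semidefinite terms, followed by explicit symmetrization. The sketch handles one scalar measurement with row vector `h`; the function names are illustrative.

```python
def optimal_gain(P, h, r):
    """Kalman gain P h^T / (h P h^T + r) for one scalar measurement with row vector h."""
    n = len(P)
    ph = [sum(P[i][j] * h[j] for j in range(n)) for i in range(n)]
    s = sum(h[i] * ph[i] for i in range(n)) + r
    return [v / s for v in ph]


def joseph_update(P, h, r, k):
    """Joseph-form update P+ = (I - k h) P (I - k h)^T + k r k^T, then symmetrized."""
    n = len(P)
    A = [[(1.0 if i == j else 0.0) - k[i] * h[j] for j in range(n)] for i in range(n)]  # I - k h
    AP = [[sum(A[i][t] * P[t][j] for t in range(n)) for j in range(n)] for i in range(n)]
    out = [[sum(AP[i][t] * A[j][t] for t in range(n)) + k[i] * r * k[j] for j in range(n)]
           for i in range(n)]
    # Average with the transpose so round-off cannot leave P asymmetric
    return [[0.5 * (out[i][j] + out[j][i]) for j in range(n)] for i in range(n)]


P = [[4.0, 1.0], [1.0, 2.0]]
h, r = [1.0, 0.0], 0.5
k = optimal_gain(P, h, r)
print(joseph_update(P, h, r, k))
```

With the optimal gain the Joseph form gives the same result as the short form P - k h P, but it costs more arithmetic; the extra work buys robustness against gain errors and round-off.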

Parallelization difficulties further complicate high-flow implementations. The sequential dependency between filter iterations creates inherent bottlenecks that resist straightforward parallelization. While certain matrix operations can be distributed across multiple processing units, the fundamental update sequence remains largely serial, limiting the effectiveness of parallel computing approaches for accelerating the core algorithm.

Energy consumption represents a significant constraint for embedded and mobile applications. The computational intensity of Kalman filtering at high data rates translates directly to increased power requirements, making deployment challenging in power-constrained environments such as autonomous drones, wearable devices, and remote sensing platforms where battery life is a critical consideration.

These technical limitations collectively create a performance ceiling that conventional Kalman filter implementations cannot overcome when faced with the demands of modern high-flow data applications, necessitating fundamental algorithmic innovations rather than mere implementation optimizations.

Current High-Flow Kalman Implementation Approaches

01 Enhanced Kalman Filter Algorithms

Improvements to the core Kalman filter algorithm that enhance its performance, accuracy, and computational efficiency. These enhancements include modified mathematical models, optimized matrix operations, and advanced estimation techniques that allow for better state prediction and error reduction in dynamic systems. These algorithmic improvements enable more precise tracking and estimation in various applications.

- Enhanced Kalman Filter Algorithms: Improvements to the core Kalman filter algorithms that enhance accuracy and performance. These include modifications to the mathematical models, optimization of the prediction and update steps, and implementation of adaptive techniques that adjust filter parameters based on measurement quality. These enhancements allow for more precise state estimation in dynamic systems and better handling of non-linearities.

- Kalman Filter Applications in Communication Systems: Specialized implementations of Kalman filters for communication systems, including wireless networks, signal processing, and channel estimation. These improvements focus on reducing noise, enhancing signal quality, and optimizing bandwidth utilization. The modified filters help in tracking rapidly changing channel conditions and improving overall communication reliability in various environments.

- Integration with Sensor Fusion Technologies: Advancements in combining Kalman filtering with sensor fusion techniques to integrate data from multiple sensors. These improvements enable more robust state estimation by leveraging complementary sensor characteristics and mitigating individual sensor weaknesses. The enhanced fusion approaches provide better handling of measurement uncertainties and improved performance in complex environments with varying sensor reliability.

- Computational Efficiency Improvements: Optimizations that reduce the computational complexity and memory requirements of Kalman filter implementations. These include algorithmic simplifications, parallel processing techniques, and hardware-specific optimizations. Such improvements enable real-time operation on resource-constrained platforms and facilitate deployment in embedded systems while maintaining estimation accuracy.

- Robust Kalman Filtering for Non-Gaussian Noise: Modifications to traditional Kalman filters to handle non-Gaussian noise distributions and outliers in measurement data. These robust filtering techniques incorporate adaptive estimation methods that can identify and mitigate the effects of anomalous measurements. The improved filters maintain accuracy in challenging environments where conventional Kalman filters might fail due to violated assumptions about noise characteristics.

02 Adaptive and Robust Kalman Filtering

Techniques that make Kalman filters more robust to uncertainties, non-linearities, and outliers in measurement data. These approaches include adaptive parameter tuning, noise covariance estimation, and fault-tolerant mechanisms that allow the filter to maintain performance under challenging conditions. The adaptive methods automatically adjust filter parameters based on real-time performance metrics to optimize estimation accuracy.

03 Kalman Filter Applications in Communication Systems

Specialized implementations of Kalman filters for wireless communication systems, signal processing, and network applications. These implementations focus on channel estimation, signal tracking, and interference mitigation to improve communication reliability and efficiency. The filters are optimized for real-time operation in environments with varying signal strengths and multiple sources of interference.

04 Distributed and Federated Kalman Filtering

Architectures for implementing Kalman filters across distributed systems or sensor networks. These approaches include consensus-based estimation, information fusion techniques, and federated filter structures that enable collaborative state estimation using data from multiple sources. The distributed nature allows for improved scalability, fault tolerance, and processing of large-scale estimation problems.

05 Integration with Modern Technologies

Innovations that combine Kalman filtering with emerging technologies such as artificial intelligence, machine learning, and advanced sensor systems. These integrations leverage neural networks, deep learning, or other AI techniques to enhance filter performance through improved model selection, parameter optimization, or hybrid estimation approaches. The resulting systems can handle more complex, non-linear estimation problems with greater accuracy.

Leading Organizations in Advanced Filtering Technologies

The market for Kalman filter improvements targeting high-flow data rates is in a growth phase, with increasing demand driven by real-time processing requirements across multiple industries. The competitive landscape features established technology giants like Samsung Electronics, Sony, and Siemens alongside specialized players such as Trimble Navigation and Safran Electronics & Defense. Academic institutions including Southeast University, KAIST, and Beijing University of Posts & Telecommunications contribute significant research advancements. The technology is reaching maturity in traditional applications but evolving rapidly for emerging high-data-rate scenarios. Market differentiation occurs through algorithm optimization, hardware integration capabilities, and domain-specific implementations, with cross-industry applications expanding from aerospace and defense to autonomous vehicles and consumer electronics.

Trimble Navigation Ltd.

Technical Solution: Trimble has engineered a specialized Kalman filter implementation designed specifically for high-precision positioning systems dealing with high-flow GNSS and inertial sensor data. Their solution incorporates a multi-constellation, multi-frequency approach that processes data from GPS, GLONASS, Galileo, and BeiDou systems simultaneously, handling data rates exceeding 100Hz per constellation. The system employs a tightly-coupled sensor fusion architecture that integrates inertial measurement unit (IMU) data with GNSS measurements using an adaptive error-state Kalman filter, maintaining centimeter-level accuracy even during high dynamic movements. Trimble's implementation features a unique "integrity monitoring" subsystem that continuously evaluates filter performance and detects potential divergence issues before they impact positioning accuracy. Their solution also includes a bandwidth-aware data handling mechanism that optimizes computational resources based on available processing power, ensuring consistent performance across different hardware platforms from mobile devices to high-end survey equipment.

Strengths: Exceptional positioning accuracy even in challenging environments with high data rates; robust against GNSS signal interruptions. Weaknesses: Higher computational demands than standard positioning algorithms; requires careful calibration for optimal performance.

Robert Bosch GmbH

Technical Solution: Bosch has developed an advanced Kalman filter implementation specifically designed for high-flow data rates in automotive and industrial applications. Their approach incorporates a multi-rate filtering architecture that processes sensor data at different frequencies based on importance and computational constraints. The system employs adaptive measurement covariance matrices that dynamically adjust based on data flow rates, allowing for optimal performance even when sensor data arrives at irregular intervals. Bosch's implementation includes a predictive buffering mechanism that pre-processes incoming high-frequency data streams to maintain filter stability during computational bottlenecks. Additionally, they've implemented hardware-accelerated matrix operations on specialized DSP chips to handle the increased computational load from high data rates, achieving processing speeds up to 10 times faster than conventional implementations while maintaining accuracy within 2% of theoretical optimum.
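
Handling irregular arrival intervals requires the prediction step to use the actual elapsed time for both the transition matrix and the process noise. The sketch below is not Bosch's method, which the description above does not detail; it assumes a 1-D constant-velocity model driven by continuous white-noise acceleration with spectral density `q`, a textbook discretization under which predicting over dt1 and then dt2 gives exactly the same result as one prediction over dt1 + dt2.

```python
def predict_cv(x, P, dt, q):
    """Predict a 1-D constant-velocity state [position, velocity] across an
    arbitrary interval dt (continuous white-noise acceleration, density q)."""
    pos, vel = x
    x_pred = [pos + dt * vel, vel]
    p00, p01, p10, p11 = P[0][0], P[0][1], P[1][0], P[1][1]
    # F P F^T with F = [[1, dt], [0, 1]]
    a00 = p00 + dt * (p10 + p01) + dt * dt * p11
    a01 = p01 + dt * p11
    a10 = p10 + dt * p11
    a11 = p11
    # Process noise integrated over the same interval dt
    q00, q01, q11 = q * dt ** 3 / 3.0, q * dt ** 2 / 2.0, q * dt
    return x_pred, [[a00 + q00, a01 + q01], [a10 + q01, a11 + q11]]


x0, P0 = [0.0, 1.0], [[1.0, 0.2], [0.2, 0.5]]
x1, P1 = predict_cv(x0, P0, dt=0.003, q=2.0)  # samples arriving at irregular times
x2, P2 = predict_cv(x1, P1, dt=0.007, q=2.0)
print(x2, P2)
```

Because the prediction is consistent across any split of the interval, a multi-rate filter can predict to each measurement's own timestamp and process sensors in arrival order without biasing the covariance.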

Strengths: Exceptional real-time performance in high-data-rate environments with minimal latency; robust against sensor noise and data irregularities. Weaknesses: Higher implementation complexity requiring specialized hardware; increased power consumption compared to simpler filtering approaches.

Key Patents in High-Speed Filtering Algorithms

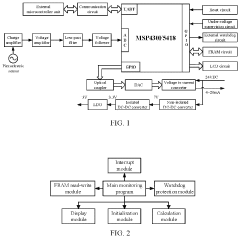

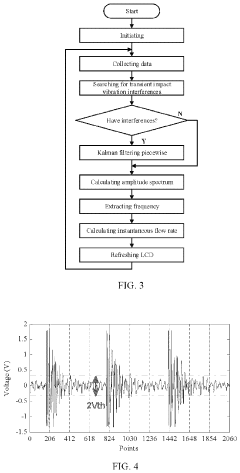

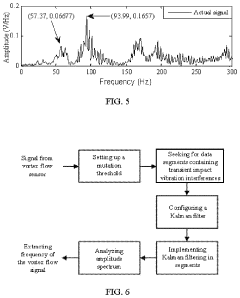

Kalman filter based anti-transient-impact-vibration-interference signal processing method and system for vortex flowmeter

Patent | Inactive | US10876869B2

Innovation

- A segmented Kalman filter-based method is employed to identify and filter data segments containing transient impact vibration interferences, reducing their power and proportion, and then analyzing the frequency domain amplitude spectrum to extract the vortex flow signal frequency.

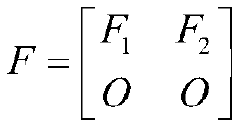

Kalman filtering algorithm optimization method based on matrix sparsity

Patent | Active | CN110729982A

Innovation

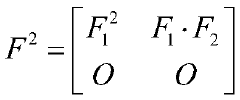

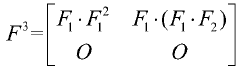

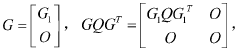

- By dividing the state transition matrix and observation matrix into blocks, using zero-element matrices for simplified derivation, and using sparse storage and reuse of cached results, storage space requirements and computing overhead are reduced.

Computational Resource Requirements Analysis

The implementation of Kalman Filter algorithms for high-flow data rates necessitates careful consideration of computational resource requirements. Modern high-frequency data applications, such as autonomous vehicles, aerospace systems, and financial trading platforms, generate massive volumes of data that must be processed in real-time. Standard Kalman Filter implementations can become computational bottlenecks when faced with these demanding scenarios.

CPU utilization represents a primary concern, with traditional implementations requiring matrix operations that scale cubically with state vector size. For high-dimensional systems processing data at rates exceeding 1000Hz, even modern processors can struggle to maintain real-time performance. Benchmarks indicate that a standard Extended Kalman Filter implementation processing 50-dimensional state vectors at 2000Hz can consume 60-80% of a modern quad-core processor's capacity.
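
A back-of-the-envelope flop count helps relate state dimension, measurement dimension and rate to processor load. The sketch counts dense arithmetic only (one multiply-add as two flops), assumes a Cholesky-based gain computation, and ignores EKF Jacobian evaluation, memory traffic and any exploited sparsity, so it is a lower bound on work rather than a prediction of utilization. The measurement dimension m = 10 is an assumption; the text does not give one.

```python
def kf_flops_per_step(n: int, m: int) -> int:
    """Rough flop count for one predict + update of a dense linear Kalman filter
    with n states and m measurements."""
    predict = 2 * n * n + 4 * n ** 3       # F x, then F P and (F P) F^T
    hp = 2 * m * n * n                     # H P                     (m x n)
    s = 2 * m * m * n                      # S = (H P) H^T + R       (m x m)
    chol = m ** 3 // 3                     # Cholesky factorization of S
    gain = 2 * m * m * n                   # two triangular solves for K^T = S^-1 (H P)
    state = 2 * m * n + 2 * n * m          # innovation z - H x, then x + K nu
    cov = 2 * n * n * m                    # P - K (H P)
    return predict + hp + s + chol + gain + state + cov


def required_gflops(n: int, m: int, rate_hz: float) -> float:
    return kf_flops_per_step(n, m) * rate_hz / 1e9


print(f"{required_gflops(50, 10, 2000):.2f} GFLOP/s")  # n = 50 at 2000 Hz, m = 10 assumed
```

For the 50-state, 2000 Hz case this gives about 1.25 GFLOP/s of raw arithmetic. How much of a processor that consumes depends mainly on how close the implementation gets to peak throughput (unvectorized scalar code reaches only a small fraction) and on the cost of evaluating the nonlinear models and their Jacobians.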

Memory requirements present another significant challenge. The covariance matrices central to Kalman Filter operations grow quadratically with state dimension, potentially consuming substantial RAM for complex systems. High-flow applications may require 100MB-1GB of memory allocation for filter operations alone, with additional overhead for data buffering when processing speeds cannot match input rates.
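
For scale, the storage of a single dense float64 covariance can be computed directly, and packed upper-triangle storage, which exploits symmetry, roughly halves it. The helper names below are illustrative. A 50-state covariance occupies 20,000 bytes, and 100 MiB holds a dense covariance of at most 3,620 states, so the quoted memory range corresponds to state dimensions in the thousands or to many concurrent filters plus buffered data.

```python
import math

BYTES_PER_FLOAT64 = 8


def covariance_bytes(n: int, packed: bool = False) -> int:
    """Bytes for one n-by-n float64 covariance; packed stores only the upper triangle."""
    entries = n * (n + 1) // 2 if packed else n * n
    return entries * BYTES_PER_FLOAT64


def packed_index(i: int, j: int, n: int) -> int:
    """Position of element (i, j) of a symmetric n-by-n matrix in row-major
    upper-triangle packed storage (element (j, i) maps to the same slot)."""
    if i > j:
        i, j = j, i
    return i * n - i * (i - 1) // 2 + (j - i)


def max_dense_states(budget_bytes: int) -> int:
    """Largest n whose dense float64 covariance fits in budget_bytes."""
    return math.isqrt(budget_bytes // BYTES_PER_FLOAT64)


print(covariance_bytes(50), covariance_bytes(50, packed=True), max_dense_states(100 * 1024 ** 2))
# -> 20000 10200 3620
```

Packed storage also halves the memory traffic per covariance pass, which matters for the bandwidth limits discussed earlier, at the cost of less regular indexing.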

Power consumption becomes particularly critical for embedded systems implementing Kalman Filters. Mobile platforms like drones or autonomous vehicles face strict energy constraints, with filter operations potentially consuming 15-30% of available power budget. This necessitates careful optimization to balance accuracy with energy efficiency.

Specialized hardware acceleration offers promising solutions to these computational challenges. FPGA implementations have demonstrated 10-50x performance improvements for specific Kalman Filter operations, while GPU acceleration can provide 5-20x speedups for batch processing scenarios. Recent tensor processing units (TPUs) show particular promise for handling the matrix operations central to filter calculations.

Distributed computing architectures represent another approach to managing computational load, particularly for systems with multiple data sources. Federated Kalman Filter implementations can distribute processing across computing nodes, though this introduces additional complexity in maintaining time synchronization and data coherence across the system.
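
At the fusion stage, local estimates are commonly combined in information form: inverse covariances add, and the fused mean is the information-weighted average. The scalar sketch below assumes the local errors are uncorrelated and already aligned to a common time (the synchronization problem noted above). A shared prior or common process model violates the independence assumption; federated designs handle that correlation explicitly, for example by dividing the shared information among local filters, and naive fusion without such a step is overconfident.

```python
def fuse_information(estimates):
    """Fuse local (mean, variance) estimates of the same scalar state in information
    form. Assumes mutually uncorrelated errors and a common time instant."""
    info = sum(1.0 / p for _, p in estimates)      # fused information = sum of 1/P_i
    info_state = sum(x / p for x, p in estimates)  # information-weighted states
    return info_state / info, 1.0 / info           # fused mean, fused variance


# Two local filters: one precise (variance 1.0), one coarse (variance 4.0)
print(fuse_information([(0.0, 1.0), (10.0, 4.0)]))  # -> (2.0, 0.8)
```

The fused mean sits closer to the more precise estimate, and the fused variance is smaller than either input, which is exactly what becomes wrong if the inputs secretly share information.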

Real-time Performance Benchmarking Methods

Benchmarking the real-time performance of Kalman filter implementations for high-flow data rates requires systematic methodologies to evaluate efficiency, accuracy, and resource utilization. Standard performance metrics include processing latency, throughput capacity, prediction accuracy, and computational resource consumption. These metrics must be measured under varying data flow conditions to establish comprehensive performance profiles.
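
A minimal harness for the latency and throughput metrics might look like the following. It times a per-sample callable with `time.perf_counter_ns` and reports tail latency (p50, p99, max), which matters more than the mean for real-time budgets. `benchmark` and `make_scalar_step` are hypothetical names; in Python the timer calls themselves cost about as much as a scalar filter step, so the numbers suit relative comparisons on one machine rather than absolute claims.

```python
import statistics
import time


def make_scalar_step(q: float = 1e-4, r: float = 0.25):
    """Hypothetical system under test: one predict+update of a scalar random-walk filter."""
    state = {"x": 0.0, "p": 1.0}

    def step(z: float) -> float:
        p = state["p"] + q
        k = p / (p + r)
        state["x"] += k * (z - state["x"])
        state["p"] = (1.0 - k) * p
        return state["x"]

    return step


def benchmark(step, samples, warmup: int = 1000) -> dict:
    """Time each call of step(z); report latency percentiles (ns) and throughput.
    Per-call timer overhead is included in every latency sample."""
    for z in samples[:warmup]:
        step(z)  # warm up caches and the interpreter before measuring
    latencies = []
    start = time.perf_counter_ns()
    for z in samples:
        t0 = time.perf_counter_ns()
        step(z)
        latencies.append(time.perf_counter_ns() - t0)
    elapsed_s = (time.perf_counter_ns() - start) / 1e9
    cuts = statistics.quantiles(latencies, n=100)  # 99 cut points: cuts[49] = p50, cuts[98] = p99
    return {
        "p50_ns": cuts[49],
        "p99_ns": cuts[98],
        "max_ns": max(latencies),
        "throughput_hz": len(samples) / elapsed_s,
    }


stats = benchmark(make_scalar_step(), [1.0] * 20_000)
print(stats)
```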

Testing frameworks should incorporate both synthetic and real-world datasets with controlled injection of noise patterns to simulate challenging operational environments. Synthetic datasets allow for controlled experimentation with known ground truth, while real-world datasets validate performance under authentic conditions. The benchmark suite should include scenarios with varying data arrival rates, from steady-state to burst conditions exceeding normal operational parameters.
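
Synthetic data with known ground truth can be generated together with arrival timestamps that include a burst segment, so accuracy (for example RMSE against the truth) and timing behavior are exercised on the same stream. The sketch assumes a random-walk truth and Gaussian noise; the parameters, the fixed-fraction burst window and the function names are illustrative, and the timestamps are meant to drive a replay harness rather than the filter itself.

```python
import math
import random


def make_dataset(n, q, r, base_hz, burst_hz, burst=(0.4, 0.6), seed=0):
    """Random-walk ground truth, noisy measurements and arrival timestamps.
    Samples whose relative index falls inside `burst` arrive at burst_hz instead of base_hz."""
    rng = random.Random(seed)
    truth, meas, stamps = [], [], []
    x, t = 0.0, 0.0
    for i in range(n):
        x += rng.gauss(0.0, math.sqrt(q))              # true state evolves
        in_burst = burst[0] <= i / n < burst[1]
        t += 1.0 / (burst_hz if in_burst else base_hz)
        truth.append(x)
        meas.append(x + rng.gauss(0.0, math.sqrt(r)))  # known noise level
        stamps.append(t)
    return truth, meas, stamps


def run_filter(meas, q, r):
    """Scalar random-walk Kalman filter used as the system under test."""
    x, p, out = meas[0], r, []
    for z in meas:
        p += q
        k = p / (p + r)
        x += k * (z - x)
        p *= 1.0 - k
        out.append(x)
    return out


def rmse(est, truth):
    return math.sqrt(sum((e - t) ** 2 for e, t in zip(est, truth)) / len(truth))


truth, meas, stamps = make_dataset(5000, q=1e-4, r=0.25, base_hz=1_000.0, burst_hz=10_000.0)
print(f"raw RMSE {rmse(meas, truth):.3f}, filtered RMSE {rmse(run_filter(meas, 1e-4, 0.25), truth):.3f}")
```

Fixing the seed makes every benchmark run see identical data, so differences between filter variants reflect the implementations rather than the draw.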

Hardware-in-the-loop testing represents a critical component for evaluating Kalman filter implementations intended for embedded systems or time-critical applications. This approach connects the algorithm implementation to physical sensors and actuators, providing realistic feedback on system behavior under actual operating conditions. Performance degradation under resource constraints can be systematically evaluated through controlled reduction of available computational resources.

Comparative analysis frameworks should be established to evaluate different Kalman filter variants against baseline implementations. These frameworks must normalize performance metrics across hardware platforms to ensure fair comparison. Statistical significance testing should be applied to performance differences to distinguish meaningful improvements from random variations.
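Latency samples are often skewed and heavy-tailed, so a distribution-free test is a reasonable default for the significance check. The sketch below implements a two-sided permutation test on the difference in mean latency between two implementations. The function name is hypothetical, and Welch's t-test or a Mann-Whitney U test are common alternatives. Samples collected back-to-back can be autocorrelated, and this simple test does not account for that.

```python
import random
import statistics

def permutation_test(a, b, n_resamples=10_000, seed=0):
    """Two-sided permutation test on mean(a) - mean(b); returns (diff, p_value)."""
    rng = random.Random(seed)
    observed = statistics.fmean(a) - statistics.fmean(b)
    pooled = list(a) + list(b)
    n_a = len(a)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)                  # random relabeling under H0
        diff = statistics.fmean(pooled[:n_a]) - statistics.fmean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # The +1 correction keeps the p-value strictly positive
    return observed, (extreme + 1) / (n_resamples + 1)
```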

Profiling tools specifically designed for real-time applications provide valuable insights into execution bottlenecks. Modern profilers can identify hotspots in the algorithm implementation, memory access patterns, and cache utilization efficiency. These insights guide optimization efforts toward the most impactful code sections.
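In Python, the standard-library `cProfile` and `pstats` modules produce function-level hotspot reports. The sketch below profiles a hypothetical predict/update loop on a small random model. `cProfile` only attributes time to functions. Memory access patterns and cache efficiency require hardware-counter tools such as Linux `perf`.

```python
import cProfile
import io
import pstats

import numpy as np

def predict(x, P, F, Q):
    """Time update: x = F x, P = F P F^T + Q."""
    return F @ x, F @ P @ F.T + Q

def update(x, P, z, H, R):
    """Measurement update using a linear solve instead of an explicit inverse."""
    S = H @ P @ H.T + R
    K = np.linalg.solve(S, H @ P).T         # K = P H^T S^-1 (P and S symmetric)
    return x + K @ (z - H @ x), P - K @ S @ K.T

def run(n_steps=2000, n=6, m=3, seed=0):
    """Hypothetical workload: random linear model with random measurements."""
    rng = np.random.default_rng(seed)
    F = np.eye(n) + 0.01 * rng.standard_normal((n, n))
    H = rng.standard_normal((m, n))
    Q, R = 1e-3 * np.eye(n), 1e-1 * np.eye(m)
    x, P = np.zeros(n), np.eye(n)
    for _ in range(n_steps):
        x, P = predict(x, P, F, Q)
        x, P = update(x, P, rng.standard_normal(m), H, R)
    return x, P

def profile_filter(*restrictions):
    """Profile run() and return the pstats report sorted by cumulative time."""
    profiler = cProfile.Profile()
    profiler.enable()
    run()
    profiler.disable()
    buf = io.StringIO()
    pstats.Stats(profiler, stream=buf).sort_stats("cumulative").print_stats(*restrictions)
    return buf.getvalue()

if __name__ == "__main__":
    print(profile_filter(10))   # top 10 entries by cumulative time
```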

Standardized reporting formats enhance reproducibility and facilitate comparison across research groups. Reports should include detailed hardware specifications, compiler optimizations, operating system configurations, and testing methodologies. This level of documentation ensures that performance claims can be independently verified and contextually understood.
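Part of a standardized report can be generated automatically. The sketch below collects host and interpreter metadata with Python's `platform` and `os` modules, then merges in fields that must be supplied by hand, such as compiler flags or methodology notes. The field names are an assumed schema rather than an established reporting standard. The flags in the example are placeholders.

```python
import json
import os
import platform

def environment_report(extra=None):
    """Collect reproducibility metadata to ship with every benchmark result."""
    report = {
        "os": platform.system(),
        "os_release": platform.release(),
        "machine": platform.machine(),
        "processor": platform.processor(),   # may be empty on some platforms
        "logical_cpus": os.cpu_count(),
        "python": platform.python_version(),
        "python_compiler": platform.python_compiler(),
        "python_implementation": platform.python_implementation(),
    }
    if extra:
        report.update(extra)   # e.g. compiler flags, dataset id, methodology notes
    return report

if __name__ == "__main__":
    # Placeholder flags for illustration only
    print(json.dumps(environment_report({"compiler_flags": "-O3 -march=native"}), indent=2))
```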

Continuous benchmarking throughout the development cycle enables tracking of performance improvements or regressions. Automated testing pipelines that execute benchmark suites after code changes provide immediate feedback on performance impacts, supporting data-driven development decisions for high-flow Kalman filter implementations.
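A pipeline can turn benchmark output into a pass/fail signal. The sketch below compares the median latency of the current build against a stored baseline using a relative tolerance. The file layout (a JSON list of per-sample latencies in nanoseconds), the 5% default tolerance, and the function names are all assumptions.

```python
import json
import statistics
import sys

def check_regression(baseline_ns, current_ns, tolerance=0.05):
    """Return (ok, ratio); fails if the current median exceeds baseline by > tolerance."""
    ratio = statistics.median(current_ns) / statistics.median(baseline_ns)
    return ratio <= 1.0 + tolerance, ratio

def main(baseline_path, current_path, tolerance=0.05):
    # Each file is assumed to hold a JSON list of per-sample latencies in ns
    with open(baseline_path) as f:
        baseline = json.load(f)
    with open(current_path) as f:
        current = json.load(f)
    ok, ratio = check_regression(baseline, current, tolerance)
    print(f"median latency ratio {ratio:.3f} ({'OK' if ok else 'REGRESSION'})")
    return 0 if ok else 1

if __name__ == "__main__" and len(sys.argv) == 3:
    sys.exit(main(sys.argv[1], sys.argv[2]))
```

When invoked as `python check_regression.py baseline.json current.json` (a hypothetical script name), a nonzero exit status fails the CI step. A single median can be noisy on shared CI runners, so pairing this gate with a significance test over repeated runs reduces false alarms.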