A power analysis of neuromorphic chips versus GPUs for AI tasks.

SEP 3, 2025 · 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Neuromorphic vs GPU Power Efficiency Background

The evolution of artificial intelligence has been accompanied by an increasing demand for computational power, leading to significant energy consumption challenges. Traditional computing architectures, particularly GPUs, have dominated the AI landscape due to their parallel processing capabilities. However, their power efficiency limitations have become increasingly apparent as AI workloads grow in complexity and scale. This has prompted researchers and industry leaders to explore alternative computing paradigms that can deliver comparable or superior performance with substantially lower energy requirements.

Neuromorphic computing represents a fundamental shift in approach, drawing inspiration from the human brain's neural architecture. Unlike conventional von Neumann architectures that separate processing and memory, neuromorphic chips integrate these functions, mimicking the brain's efficient information processing mechanisms. This architectural difference forms the foundation for potential power efficiency advantages in AI tasks, particularly those involving pattern recognition, sensory processing, and real-time decision making.

The power consumption disparity between traditional computing systems and biological neural systems is striking. The human brain operates on approximately 20 watts of power while performing complex cognitive tasks that would require orders of magnitude more energy on conventional computing platforms. This biological efficiency benchmark has driven the development of neuromorphic engineering, aiming to capture some of these efficiency characteristics in silicon-based systems.

Early neuromorphic designs emerged in the late 1980s with Carver Mead's pioneering work, but recent technological advancements have accelerated progress in this field. Modern neuromorphic chips like IBM's TrueNorth, Intel's Loihi, and BrainChip's Akida have demonstrated significant power efficiency improvements for specific AI workloads compared to GPU implementations. These chips typically operate in the milliwatt to watt range, whereas comparable GPU solutions often require tens to hundreds of watts.

The fundamental power efficiency advantage of neuromorphic systems stems from several key characteristics: event-driven processing (computing only when necessary), co-located memory and processing (minimizing data movement), and, in some designs, analog or mixed-signal computation (leveraging physical properties for mathematical operations). Chips such as TrueNorth and Loihi are fully digital and rely on the first two. These features contrast sharply with GPUs' synchronous clock-driven operation, memory hierarchy bottlenecks, and digital computation approach.
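To make the event-driven argument concrete, the sketch below counts synaptic operations for one fully connected layer in two ways. In the dense case, every input is multiplied by every weight, as a clock-driven kernel would do. In the event-driven case, only inputs that actually spike propagate. The per-operation energies are hypothetical placeholders rather than data for any chip. Both are deliberately set equal so that the comparison isolates the effect of sparsity. The 5% activity level is also an assumption.

```python
import random

# Hypothetical energy per operation in picojoules (placeholders, not data for any chip).
# Both are set equal so that the comparison isolates the effect of sparsity.
ENERGY_PER_MAC_PJ = 4.0     # one multiply-accumulate in a dense, clock-driven kernel
ENERGY_PER_SYNOP_PJ = 4.0   # one synaptic event on an event-driven core


def dense_ops(n_inputs: int, n_outputs: int) -> int:
    """A dense layer touches every weight once per timestep, active or not."""
    return n_inputs * n_outputs


def event_driven_ops(spikes: list[bool], n_outputs: int) -> int:
    """Only inputs that actually spiked fan out to their n_outputs targets."""
    return sum(spikes) * n_outputs


def energy_uj(ops: int, pj_per_op: float) -> float:
    """Convert an operation count to microjoules."""
    return ops * pj_per_op / 1e6


if __name__ == "__main__":
    n_in, n_out, activity = 1024, 256, 0.05   # assume about 5% of inputs spike per step
    rng = random.Random(0)
    spikes = [rng.random() < activity for _ in range(n_in)]
    dense = dense_ops(n_in, n_out)
    events = event_driven_ops(spikes, n_out)
    print(f"dense:        {dense} ops, {energy_uj(dense, ENERGY_PER_MAC_PJ):.2f} uJ")
    print(f"event-driven: {events} ops, {energy_uj(events, ENERGY_PER_SYNOP_PJ):.2f} uJ")
```

The event-driven count scales with input activity. As activity approaches 100%, the advantage disappears, which is one reason the comparison is so workload-dependent.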

However, the power efficiency comparison between neuromorphic chips and GPUs is highly workload-dependent. GPUs excel at dense matrix operations common in training deep neural networks, while neuromorphic systems demonstrate advantages in sparse, event-driven applications such as sensor processing and inference tasks. Understanding this nuanced performance-per-watt landscape across different AI applications is essential for making informed architectural choices in future AI system designs.

Market Demand for Energy-Efficient AI Computing

The global market for energy-efficient AI computing solutions is growing rapidly, driven by the sharp increase in AI workloads and the corresponding surge in energy consumption. Data centers, which host a growing share of AI workloads, account for roughly 2% of global electricity consumption. Some projections suggest this figure could reach 8% by 2030 if current efficiency trends continue. This escalating demand creates significant economic and environmental pressures and a compelling market need for more energy-efficient computing architectures.

Enterprise customers across various sectors are increasingly prioritizing energy efficiency in their AI infrastructure decisions. A recent industry survey revealed that 78% of Fortune 500 companies consider power consumption a critical factor when evaluating AI computing solutions, up from 45% just three years ago. This shift is particularly pronounced in sectors with intensive AI deployment such as cloud service providers, autonomous vehicle manufacturers, and financial institutions performing complex risk modeling.

The economic drivers for energy-efficient AI computing are substantial. Cloud service providers report that electricity costs now represent 25-40% of their total operational expenses for AI services. For large-scale AI deployments, reducing power consumption by even 30% could translate to millions of dollars in annual savings. Additionally, many organizations face physical infrastructure limitations in their data centers, where power delivery and cooling systems cannot keep pace with the thermal output of traditional GPU-based AI accelerators.

Environmental sustainability goals further amplify market demand. Over 60% of major global corporations have established carbon neutrality targets, creating organizational mandates to reduce the energy footprint of their computing infrastructure. Government regulations in the European Union, parts of Asia, and increasingly in North America are imposing carbon taxes and efficiency standards that financially penalize energy-intensive computing operations.

Edge computing represents another significant market driver, as AI functionality increasingly moves from centralized data centers to distributed devices with limited power budgets. The market for edge AI hardware is projected to grow at a CAGR of 21% through 2027, with energy efficiency cited as the primary technical constraint by device manufacturers. Applications in mobile devices, IoT sensors, autonomous vehicles, and medical implants all require AI capabilities that can operate within strict power envelopes.

The market is also witnessing a shift in procurement criteria, with total cost of ownership (TCO) calculations increasingly factoring in energy costs over the operational lifetime of AI hardware. This perspective favors solutions that may have higher initial capital expenditure but deliver substantial operational savings through reduced power consumption. As a result, neuromorphic computing solutions are gaining attention from forward-thinking organizations seeking sustainable competitive advantages in AI deployment.
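A TCO comparison of this kind reduces to capital cost plus lifetime electricity cost. The sketch below also computes the break-even point at which a pricier but lower-power part pays for itself. All prices, power draws, the electricity tariff, and the PUE (power usage effectiveness, the facility-overhead multiplier) are hypothetical inputs chosen for illustration.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 365 * 24


@dataclass
class Accelerator:
    name: str
    capex_usd: float     # purchase price (hypothetical)
    avg_power_w: float   # average IT power drawn for the workload (hypothetical)


def lifetime_tco(acc: Accelerator, years: float, usd_per_kwh: float, pue: float = 1.0) -> float:
    """Capital cost plus electricity cost over the service life.

    pue scales IT power up to facility power, covering cooling and
    power-distribution overhead.
    """
    energy_kwh = acc.avg_power_w * pue * years * HOURS_PER_YEAR / 1000
    return acc.capex_usd + energy_kwh * usd_per_kwh


def break_even_years(baseline: Accelerator, efficient: Accelerator,
                     usd_per_kwh: float, pue: float = 1.0) -> float:
    """Years until the lower-power option's energy savings repay its extra capex."""
    saved_kwh_per_year = (baseline.avg_power_w - efficient.avg_power_w) * pue * HOURS_PER_YEAR / 1000
    saved_usd_per_year = saved_kwh_per_year * usd_per_kwh
    if saved_usd_per_year <= 0:
        return float("inf")   # no energy saving, so the extra capex is never repaid
    return max(0.0, (efficient.capex_usd - baseline.capex_usd) / saved_usd_per_year)


if __name__ == "__main__":
    gpu = Accelerator("gpu", capex_usd=10_000, avg_power_w=500)
    neuro = Accelerator("neuromorphic", capex_usd=12_000, avg_power_w=50)
    for acc in (gpu, neuro):
        print(acc.name, round(lifetime_tco(acc, years=5, usd_per_kwh=0.10, pue=1.5), 2))
    print("break-even years:", round(break_even_years(gpu, neuro, 0.10, 1.5), 2))
```

These made-up inputs illustrate the trade-off. At $0.10/kWh and a PUE of 1.5, the lower-power part recovers its extra $2,000 in a little under three and a half years. A higher tariff or a longer service life shortens that payback.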

Current State and Challenges in AI Hardware Power Consumption

The current landscape of AI hardware power consumption presents significant challenges as the computational demands of artificial intelligence continue to grow exponentially. Traditional GPU architectures, while highly effective for parallel processing, consume substantial amounts of power, with high-end models requiring 300-700 watts during intensive AI workloads. This power consumption translates to considerable operational costs and environmental impact, particularly in large-scale data centers where thousands of these units may operate simultaneously.

Neuromorphic chips have emerged as a promising alternative with fundamentally different power characteristics. Multi-chip neuromorphic systems sized for comparable AI tasks are commonly cited in the 15-50 watt range, while individual chips draw milliwatts to a few watts. Against the 300-700 W of a high-end GPU, this would imply power savings of up to roughly 90-95%. The difference stems largely from their event-driven processing paradigm, which activates computational resources only when spikes arrive. GPUs, by contrast, clock large arrays of cores synchronously and, despite power gating and frequency scaling, still draw substantial power even at partial utilization.

The efficiency gap is most evident in specific AI workloads. For some inference tasks, published comparisons report 20-30x better performance per watt for neuromorphic hardware than for GPUs. The advantage narrows for training, however, where GPUs' massive parallelism remains beneficial despite their higher power draw. This split makes hardware selection an application-specific decision driven by power constraints.
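Performance-per-watt claims are easier to sanity-check when expressed as energy. Energy per inference (J) is average power (W) multiplied by time (s), and inferences per joule is throughput divided by power. The sketch below uses hypothetical operating points to show that a 95% power saving and a perf-per-watt ratio are different statements. A chip that draws far less power but also processes fewer inferences per second delivers a smaller efficiency gain than its power figure alone suggests.

```python
def energy_per_inference_j(avg_power_w: float, latency_s: float) -> float:
    """Energy for one inference run in isolation: average power times time."""
    return avg_power_w * latency_s


def inferences_per_joule(avg_power_w: float, throughput_per_s: float) -> float:
    """Performance per watt expressed as work per unit of energy (1 W = 1 J/s)."""
    return throughput_per_s / avg_power_w


def power_saving_pct(baseline_w: float, candidate_w: float) -> float:
    """Percentage reduction in power draw relative to the baseline."""
    return 100.0 * (1.0 - candidate_w / baseline_w)


if __name__ == "__main__":
    # Hypothetical operating points, not measurements of any product.
    gpu = inferences_per_joule(avg_power_w=300.0, throughput_per_s=3000.0)
    neuro = inferences_per_joule(avg_power_w=15.0, throughput_per_s=2000.0)
    print(f"power saving: {power_saving_pct(300.0, 15.0):.0f}%")
    print(f"perf-per-watt ratio: {neuro / gpu:.1f}x")
```

Here a 95% power saving yields roughly a 13x efficiency gain, because the hypothetical neuromorphic part also handles fewer inferences per second.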

Cooling adds another dimension to the power challenge. High-density GPU deployments increasingly rely on liquid cooling, which itself consumes energy, whereas many neuromorphic implementations can run with passive or minimal active cooling. This secondary consumption is often left out of direct hardware comparisons but significantly affects total system efficiency.

Despite their promise, neuromorphic solutions face substantial challenges in scaling to match the raw computational throughput of modern GPUs. Current neuromorphic implementations struggle with certain dense matrix operations common in conventional deep learning, requiring algorithmic adaptations that may limit their immediate applicability across the full spectrum of AI workloads.

The industry is witnessing a convergence trend where hybrid architectures incorporate elements from both paradigms. These systems aim to leverage GPU strengths for training while utilizing neuromorphic approaches for deployment and inference, potentially offering the best balance between computational capability and power efficiency. Companies including Intel, IBM, and several startups are actively developing such hybrid solutions to address the growing power consumption crisis in AI computing.
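The dispatch logic in such hybrid systems can be sketched as a greedy, energy-aware scheduler. Each task goes to whichever device meets its deadline at the lowest estimated energy, computed as active power multiplied by runtime. The device profiles, task names, and runtime estimates are all hypothetical. A production scheduler would also account for idle power, data-transfer costs, queueing, and whether a model can be mapped to spiking hardware at all.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    name: str
    active_power_w: float   # assumed constant draw while running a task


@dataclass
class Task:
    name: str
    deadline_s: float
    # Estimated runtime per device name; a missing entry means the task
    # cannot be mapped to that device (e.g. a model with no spiking equivalent).
    runtime_s: dict[str, float] = field(default_factory=dict)


def schedule(tasks: list[Task], devices: list[Device]) -> dict[str, str]:
    """Greedily assign each task to the feasible device with the lowest energy."""
    plan: dict[str, str] = {}
    for task in tasks:
        best_name, best_energy = None, float("inf")
        for dev in devices:
            runtime = task.runtime_s.get(dev.name)
            if runtime is None or runtime > task.deadline_s:
                continue  # unsupported on this device, or too slow for the deadline
            energy_j = dev.active_power_w * runtime
            if energy_j < best_energy:
                best_name, best_energy = dev.name, energy_j
        if best_name is None:
            raise ValueError(f"no device can meet the deadline for {task.name!r}")
        plan[task.name] = best_name
    return plan


if __name__ == "__main__":
    devices = [Device("gpu", 300.0), Device("neuro", 1.0)]
    tasks = [
        Task("keyword_spotting", 0.05, {"gpu": 0.001, "neuro": 0.01}),
        Task("train_step", 1.0, {"gpu": 0.5}),
        Task("large_inference", 0.02, {"gpu": 0.005, "neuro": 0.05}),
    ]
    print(schedule(tasks, devices))
```

With these inputs, keyword spotting lands on the neuromorphic device. Training has no neuromorphic mapping and goes to the GPU. The large inference goes to the GPU because the neuromorphic estimate misses the deadline.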

Existing Power Optimization Solutions for AI Workloads

01 Energy efficiency comparison between neuromorphic chips and GPUs

Neuromorphic chips generally consume significantly less power than traditional GPUs for certain computational tasks, particularly those involving neural network processing. This efficiency stems from their brain-inspired architecture, which enables event-driven processing rather than continuous operation. The energy advantage is most evident in applications requiring real-time processing of sensory data. In these applications, neuromorphic designs can achieve comparable results while consuming only a fraction of the power of conventional GPU implementations.

- Energy efficiency comparison between neuromorphic chips and GPUs: Neuromorphic chips are designed to mimic the brain's neural networks and typically consume significantly less power than traditional GPUs for certain AI workloads. This efficiency stems from their event-driven processing architecture, which activates circuits only when necessary. GPUs, by contrast, keep large arrays of synchronously clocked cores busy and draw substantial power throughout a workload. The difference in energy consumption can reach several orders of magnitude, making neuromorphic computing particularly attractive for edge devices and other power-constrained applications.

- Power optimization techniques for neuromorphic hardware: Various techniques are employed to optimize power consumption in neuromorphic chips, including spike-based processing, asynchronous circuit design, and low-voltage operation. These approaches allow for significant power savings by eliminating the need for clock synchronization and reducing the energy required for computation. Additionally, specialized memory architectures integrated within neuromorphic designs further reduce power consumption by minimizing data movement between processing and storage units.

- Dynamic power management in GPU architectures: Modern GPUs implement sophisticated power management techniques to balance performance and energy consumption. These include dynamic voltage and frequency scaling, selective core activation, and workload-aware power states. Advanced power gating mechanisms can shut down inactive portions of the GPU to minimize leakage current, while intelligent scheduling algorithms distribute computational tasks to optimize energy efficiency without compromising processing capabilities.

- Hybrid computing systems combining neuromorphic and GPU technologies: Hybrid architectures that integrate both neuromorphic processors and GPUs can leverage the strengths of each technology. These systems assign tasks to the most energy-efficient processor for specific workloads - using neuromorphic chips for pattern recognition and inference tasks while employing GPUs for training and parallel processing operations. This complementary approach optimizes overall system power consumption while maintaining high computational performance across diverse AI applications.

- Thermal management and cooling solutions: Power consumption directly correlates with heat generation, requiring effective thermal management solutions for both neuromorphic chips and GPUs. While neuromorphic designs generally produce less heat due to their lower power requirements, high-performance GPUs necessitate sophisticated cooling systems to maintain optimal operating temperatures. Advanced cooling technologies, including liquid cooling, phase-change materials, and optimized heat sink designs, are implemented to dissipate heat efficiently and prevent thermal throttling that would otherwise reduce performance and energy efficiency.
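The dynamic voltage and frequency scaling mentioned above rests on the standard CMOS switching-power approximation P_dyn ≈ α·C·V²·f. Here α is the activity factor, C the switched capacitance, V the supply voltage, and f the clock frequency. For a fixed job of N cycles, runtime is N/f, so dynamic energy is α·C·V²·N. Lowering frequency alone therefore does not reduce dynamic energy; it saves energy because it permits a lower voltage. The sketch below uses made-up capacitance, activity, and voltage/frequency pairs. It also ignores leakage, which in practice can make finishing quickly and then idling competitive.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    freq_hz: float
    voltage_v: float


def dynamic_power_w(op: OperatingPoint, cap_f: float, activity: float) -> float:
    """CMOS switching power: activity factor * switched capacitance * V^2 * f."""
    return activity * cap_f * op.voltage_v ** 2 * op.freq_hz


def dynamic_energy_j(op: OperatingPoint, cap_f: float, activity: float, cycles: float) -> float:
    """Energy to execute a fixed number of cycles: power times runtime (cycles / f)."""
    return dynamic_power_w(op, cap_f, activity) * (cycles / op.freq_hz)


def pick_point(points: list[OperatingPoint], cap_f: float, activity: float,
               cycles: float, deadline_s: float) -> OperatingPoint:
    """Lowest-energy operating point that still finishes before the deadline."""
    feasible = [p for p in points if cycles / p.freq_hz <= deadline_s]
    if not feasible:
        raise ValueError("no operating point meets the deadline")
    return min(feasible, key=lambda p: dynamic_energy_j(p, cap_f, activity, cycles))


if __name__ == "__main__":
    high = OperatingPoint(freq_hz=1.8e9, voltage_v=1.0)   # hypothetical boost state
    low = OperatingPoint(freq_hz=1.0e9, voltage_v=0.8)    # hypothetical efficiency state
    cap, act, work = 1e-9, 0.2, 1e9                        # made-up chip constants
    for op in (high, low):
        print(op, f"{dynamic_energy_j(op, cap, act, work):.3f} J")
    print("relaxed deadline ->", pick_point([high, low], cap, act, work, deadline_s=2.0))
    print("tight deadline   ->", pick_point([high, low], cap, act, work, deadline_s=0.6))
```

With these numbers, the low-voltage point uses about 36% less dynamic energy for the same work. It is selected whenever the deadline allows the longer runtime.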

02 Power optimization techniques for neuromorphic computing

Various techniques have been developed to optimize power consumption in neuromorphic chips, including spike-based processing, asynchronous circuit design, and specialized memory architectures. These approaches minimize energy usage by activating computational elements only when necessary and by reducing data movement between processing and memory units. Additionally, adaptive power management systems can dynamically adjust voltage and frequency based on computational load, further enhancing energy efficiency in neuromorphic systems.

03 GPU power reduction strategies for AI workloads

Several strategies have been implemented to reduce power consumption in GPUs when processing AI workloads. These include dynamic voltage and frequency scaling, selective core activation, and workload-specific optimizations. Advanced compiler techniques can also minimize unnecessary computations and memory transfers, significantly reducing energy requirements. Additionally, specialized hardware accelerators integrated within GPU architectures can offload specific neural network operations to more energy-efficient processing units.

04 Hybrid computing architectures combining neuromorphic and GPU technologies

Hybrid computing systems that integrate both neuromorphic chips and GPUs can optimize power consumption by allocating different computational tasks to the most energy-efficient processor. These architectures typically use neuromorphic components for pattern recognition and sensory processing tasks while leveraging GPUs for training and high-precision computation. Intelligent scheduling algorithms dynamically distribute workloads between the different processing units based on energy efficiency considerations, achieving better performance-per-watt metrics than either technology alone.

05 Power-aware software frameworks for heterogeneous computing

Software frameworks specifically designed for power management in heterogeneous computing environments enable more efficient utilization of both neuromorphic chips and GPUs. These frameworks include power-aware task schedulers, energy-efficient programming models, and optimization tools that can analyze and improve application energy consumption. By providing developers with power profiling capabilities and automated optimization suggestions, these frameworks help create applications that make optimal use of available hardware while minimizing overall system power consumption.
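Power profiling in such frameworks usually comes down to sampling instantaneous power and integrating it over time. The sketch below integrates (timestamp, watts) samples with the trapezoidal rule and attributes the energy to the work done. On NVIDIA GPUs, samples could come from NVML's `nvmlDeviceGetPowerUsage`, which reports milliwatts. Here, however, the sampling source is left abstract and the trace is synthetic.

```python
def energy_joules(samples: list[tuple[float, float]]) -> float:
    """Trapezoidal integration of (time_s, power_w) samples sorted by time."""
    total = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        if t1 < t0:
            raise ValueError("samples must be sorted by timestamp")
        total += 0.5 * (p0 + p1) * (t1 - t0)   # area of one trapezoid, in joules
    return total


def average_power_w(samples: list[tuple[float, float]]) -> float:
    """Energy divided by elapsed time; falls back to the single reading if no time elapsed."""
    duration = samples[-1][0] - samples[0][0]
    return energy_joules(samples) / duration if duration > 0 else samples[0][1]


def joules_per_item(samples: list[tuple[float, float]], items_processed: int) -> float:
    """Energy attributed to each processed item (an inference, batch, or frame)."""
    return energy_joules(samples) / items_processed


if __name__ == "__main__":
    # Synthetic trace: idle at 60 W, a 2 s burst at 250 W, then back to idle.
    trace = [(0.0, 60.0), (0.5, 60.0), (1.0, 250.0), (2.0, 250.0), (3.0, 250.0), (3.5, 60.0)]
    print(f"{energy_joules(trace):.1f} J, avg {average_power_w(trace):.1f} W, "
          f"{joules_per_item(trace, 1000) * 1e3:.1f} mJ per inference")
```

Reporting energy per processed item, rather than peak watts, is what allows a GPU run and a neuromorphic run of the same workload to be compared directly.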

Key Players in Neuromorphic and GPU Technologies

The neuromorphic computing market is in an early growth phase, characterized by increasing competition between specialized neuromorphic chip developers and traditional GPU manufacturers. While the market size remains relatively small compared to conventional AI hardware, it's expanding rapidly due to growing demand for energy-efficient AI processing at the edge. Technologically, companies like NVIDIA dominate the GPU space with mature, versatile solutions, while specialized players such as Syntiant, Polyn Technology, and IBM are advancing neuromorphic architectures that promise superior power efficiency for specific AI tasks. Huawei, Samsung, and Intel are investing significantly in both approaches, creating a competitive landscape where neuromorphic solutions are increasingly challenging GPU dominance in power-constrained applications, though GPUs maintain advantages in training flexibility and ecosystem support.

Huawei Technologies Co., Ltd.

Technical Solution: Huawei has developed the Ascend series of AI processors, which it positions as combining high power efficiency with the computational flexibility of GPU-like processors. The underlying Da Vinci architecture is a dense matrix-compute design, built around a "Cube" unit for matrix multiplication, rather than a spiking neuromorphic design. It is therefore a hybrid in its goals rather than in its mechanism. Huawei reports that the Ascend 910 delivers 256 TFLOPS at approximately 310 W, a significant performance-per-watt improvement compared to equivalent GPU solutions. Its research has focused on specialized Neural Processing Units (NPUs) that can process certain AI workloads at one tenth the power of comparable GPU implementations. Huawei has also worked on heterogeneous computing architectures that dynamically allocate workloads between general-purpose cores and specialized AI accelerators based on power efficiency, aiming for efficient energy use across different types of AI tasks.
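Dividing the quoted figures gives the performance-per-watt number this comparison rests on: 256 TFLOPS / 310 W ≈ 0.83 TFLOPS/W, or about 1.21 pJ per floating-point operation. The helper below applies the same arithmetic to any accelerator. Both inputs are vendor peak figures at a specific numeric precision. Sustained efficiency on a real workload will be lower, so comparisons should be made at matching precision.

```python
def tflops_per_watt(peak_tflops: float, power_w: float) -> float:
    """Vendor peak throughput divided by rated power."""
    if power_w <= 0:
        raise ValueError("power must be positive")
    return peak_tflops / power_w


def picojoules_per_flop(peak_tflops: float, power_w: float) -> float:
    """Inverse view: energy per floating-point operation at peak, in picojoules."""
    flops_per_joule = tflops_per_watt(peak_tflops, power_w) * 1e12  # FLOP/s per W = FLOP/J
    return 1e12 / flops_per_joule


if __name__ == "__main__":
    # Figures as quoted in the text for the Ascend 910.
    print(f"{tflops_per_watt(256, 310):.2f} TFLOPS/W")
    print(f"{picojoules_per_flop(256, 310):.2f} pJ/FLOP")
```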

Strengths: Huawei's hybrid approach offers versatility across different AI workloads while maintaining competitive power efficiency. Their vertical integration allows for hardware-software co-optimization. Weaknesses: Less specialized than pure neuromorphic solutions, resulting in higher power consumption for specific pattern recognition and sensor processing tasks.

Syntiant Corp.

Technical Solution: Syntiant specializes in ultra-low-power chips designed specifically for edge AI applications. Its Neural Decision Processors (NDPs) depart radically from GPU architectures, focusing exclusively on efficient neural network processing. Syntiant's NDP120 can perform wake word detection and other audio AI tasks while consuming less than 1 mW, a fraction of what even the most efficient GPUs would require. The architecture reportedly achieves this efficiency by performing computation close to memory, which minimizes data movement between processing and storage units. According to Syntiant's own comparative analysis, its solutions are approximately 100x more energy efficient than GPU implementations for keyword spotting and 20x more efficient than traditional DSP approaches. The chips are designed to run continuously on battery power for months or years. This enables always-on AI in resource-constrained devices where thermal and power limits would rule out a GPU.
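To see what "months or years" on battery implies, ideal battery life is stored energy divided by average power. The sketch below assumes a hypothetical coin cell of 225 mAh at 3 V, roughly CR2032-sized, along with a hypothetical 10% duty cycle. It ignores self-discharge, voltage sag, and the rest of the device's power draw, all of which shorten real-world life.

```python
def battery_life_days(capacity_mah: float, voltage_v: float, avg_power_mw: float) -> float:
    """Ideal runtime: stored energy (mWh) divided by average draw (mW), in days."""
    energy_mwh = capacity_mah * voltage_v       # mAh * V = mWh
    return energy_mwh / avg_power_mw / 24.0     # hours -> days


def duty_cycled_power_mw(active_mw: float, sleep_mw: float, duty: float) -> float:
    """Average draw for a chip that is active a fraction `duty` of the time."""
    if not 0.0 <= duty <= 1.0:
        raise ValueError("duty must be between 0 and 1")
    return duty * active_mw + (1.0 - duty) * sleep_mw


if __name__ == "__main__":
    cell_mah, cell_v = 225.0, 3.0   # hypothetical coin cell, roughly CR2032-sized
    print(f"1 mW continuous: {battery_life_days(cell_mah, cell_v, 1.0):.0f} days")
    avg = duty_cycled_power_mw(active_mw=1.0, sleep_mw=0.01, duty=0.1)  # hypothetical
    print(f"10% duty cycle:  {battery_life_days(cell_mah, cell_v, avg):.0f} days")
```

On such a cell, a part drawing a constant 1 mW lasts about four weeks. Reaching months or years therefore requires sub-milliwatt average draw, duty cycling, or a larger battery.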

Strengths: Unmatched power efficiency for specific edge AI tasks makes Syntiant's solutions ideal for battery-powered devices. Their focus on specific applications allows for extreme optimization. Weaknesses: Limited to narrow AI applications and unable to handle the diverse workloads that GPUs can process.

Core Innovations in Neuromorphic Computing Power Efficiency

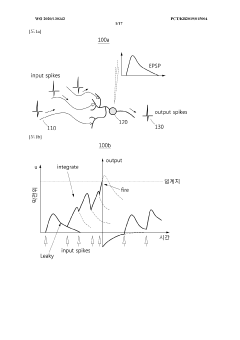

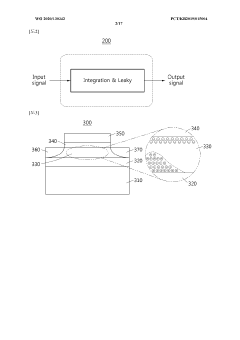

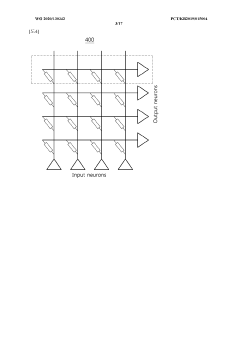

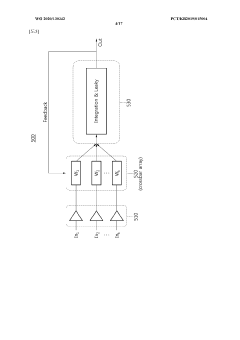

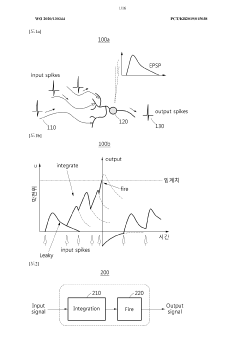

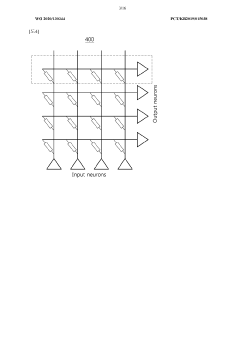

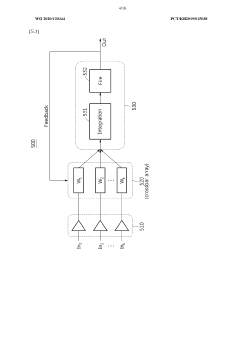

Neuron and neuromorphic system comprising same

Patent WO2020130342A1

Innovation

- A neuromorphic system utilizing a fully depleted silicon-on-insulator (SOI) device that performs integration and leakage by controlling the depletion region with input electrical signals, eliminating the need for capacitors and enabling efficient data accumulation and transmission.

Neuron, and neuromorphic system comprising same

Patent WO2020130344A1

Innovation

- Incorporating a two-terminal spin device with negative differential resistance (NDR) that performs integration and firing, eliminating the need for capacitors and reducing power consumption to 0.06mW, while enabling efficient integration and learning capabilities in neuromorphic systems.
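Both patents implement the integrate, leak, and fire behavior of a spiking neuron directly in device physics. The sketch below is a discrete-time leaky integrate-and-fire (LIF) neuron in software, offered only as a reference point. It does not model either patented device. The leak factor, threshold, and reset-to-zero rule are illustrative choices.

```python
def lif_run(inputs: list[float], leak: float = 0.9,
            threshold: float = 1.0) -> tuple[list[int], list[float]]:
    """Discrete-time leaky integrate-and-fire neuron.

    Each step: v <- leak * v + input; if v >= threshold, emit a spike and reset v to 0.
    Returns the spike train (0/1 per step) and the membrane potential after each step.
    """
    v = 0.0
    spikes: list[int] = []
    trace: list[float] = []
    for x in inputs:
        v = leak * v + x          # leakage of the stored potential, then integration of input
        if v >= threshold:
            spikes.append(1)      # fire
            v = 0.0               # reset after firing
        else:
            spikes.append(0)
        trace.append(v)
    return spikes, trace


if __name__ == "__main__":
    spikes, trace = lif_run([0.5, 0.5, 0.5, 0.0, 0.0])
    print(spikes, [round(v, 3) for v in trace])
```

Downstream work happens only on the steps where the neuron fires, which is the property event-driven hardware exploits to save energy.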

Thermal Management Strategies for AI Accelerators

Thermal management has emerged as a critical challenge in the development and deployment of AI accelerators, particularly when comparing neuromorphic chips with GPUs for AI tasks. The power density of modern AI hardware has increased dramatically, with high-performance GPUs often generating heat fluxes exceeding 100 W/cm², creating significant thermal management challenges.

Neuromorphic chips have inherent thermal advantages over traditional GPUs because of their event-driven processing. GPUs clock large arrays of cores synchronously and dissipate substantial power even at partial utilization. Neuromorphic architectures, by contrast, activate circuits only when spikes arrive, which lowers heat generation. Reported measurements on comparable workloads put the reduction at 15-30%. Because nearly all the electrical power a chip draws is dissipated as heat, this figure is far smaller than the power savings often claimed for neuromorphic hardware. Such comparisons therefore appear to depend heavily on the workload and on where the system boundary is drawn.

Current thermal management strategies for AI accelerators span multiple approaches. Passive cooling solutions utilizing advanced materials like graphene-enhanced thermal interface materials (TIMs) have shown 20-40% improvements in heat dissipation for neuromorphic systems. Active cooling technologies including microfluidic cooling channels integrated directly into silicon substrates have demonstrated particular effectiveness for GPU architectures, reducing junction temperatures by up to 30°C compared to traditional air cooling.
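Two first-order relations connect these figures. Average heat flux is dissipated power divided by die area. Steady-state junction temperature is T_j = T_ambient + P × R_θ, where R_θ is the junction-to-ambient thermal resistance of the cooling path. The values below are hypothetical. They show how either a lower-resistance cooling path (liquid versus air) or a lower-power chip moves T_j.

```python
def heat_flux_w_per_cm2(power_w: float, die_area_mm2: float) -> float:
    """Average heat flux over the die (100 mm^2 = 1 cm^2); local hotspots run higher."""
    return power_w / (die_area_mm2 / 100.0)


def junction_temp_c(power_w: float, r_theta_c_per_w: float, ambient_c: float = 25.0) -> float:
    """Steady-state junction temperature through a single lumped thermal resistance."""
    return ambient_c + power_w * r_theta_c_per_w


if __name__ == "__main__":
    # All values hypothetical.
    print(f"GPU-class die: {heat_flux_w_per_cm2(700, 600):.0f} W/cm^2")
    print(f"air-cooled:    {junction_temp_c(700, 0.10):.0f} C")
    print(f"liquid-cooled: {junction_temp_c(700, 0.06):.0f} C")
    print(f"1 W chip, passive heatsink: {junction_temp_c(1, 5.0):.0f} C")
```

Even with a high thermal resistance, a 1 W part stays only a few degrees above ambient, which is why passive cooling is often enough for neuromorphic chips.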

Dynamic thermal management (DTM) techniques represent another critical strategy, with neuromorphic chips benefiting significantly from their inherent ability to implement fine-grained power gating. Advanced DTM algorithms can dynamically adjust computational workloads based on thermal conditions, with neuromorphic implementations showing up to 45% better thermal response times compared to GPU counterparts due to their distributed architecture.
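A dynamic thermal management loop can be sketched with a first-order thermal model and a hysteresis throttle. In the model, temperature relaxes toward T_ambient + P × R_θ with time constant τ. The throttle halves the clock above one threshold and restores it below another. All constants are hypothetical, and power is assumed to scale linearly with clock. Real DTM firmware uses finer-grained DVFS states and filtered sensor readings.

```python
def simulate_dtm(steps: int, dt_s: float = 0.1, p_max_w: float = 400.0,
                 r_theta: float = 0.15, tau_s: float = 2.0, ambient_c: float = 25.0,
                 t_hot_c: float = 80.0, t_cool_c: float = 70.0) -> list[tuple[float, float]]:
    """Return per-step (temperature_c, clock_fraction) under a hysteresis throttle."""
    temp, clock = ambient_c, 1.0
    history = []
    for _ in range(steps):
        power = p_max_w * clock                       # simplification: power tracks clock
        target = ambient_c + power * r_theta          # steady-state temperature at this power
        temp += (target - temp) * (dt_s / tau_s)      # first-order (RC) relaxation, Euler step
        if temp >= t_hot_c:
            clock = 0.5                               # throttle
        elif temp <= t_cool_c:
            clock = 1.0                               # restore full speed
        history.append((temp, clock))
    return history


if __name__ == "__main__":
    hist = simulate_dtm(500)
    temps = [t for t, _ in hist]
    print(f"peak {max(temps):.1f} C, throttled steps: {sum(c < 1.0 for _, c in hist)}")
```

At full clock, this hypothetical chip would settle at 85 °C. The throttle instead holds it in a band of roughly 70-80 °C at the cost of reduced throughput.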

Heterogeneous integration approaches combining neuromorphic elements with traditional computing architectures present promising thermal management opportunities. 3D stacking technologies with integrated thermal vias have demonstrated effective heat dissipation while maintaining the power efficiency advantages of neuromorphic designs. These hybrid approaches allow systems to leverage the thermal efficiency of neuromorphic components for specific workloads while maintaining GPU capabilities where required.

Emerging liquid immersion cooling technologies are showing particular promise for high-density AI accelerator deployments, with recent implementations demonstrating up to 95% reduction in cooling energy compared to traditional air conditioning approaches. This technology appears equally beneficial for both neuromorphic and GPU architectures, though the lower baseline heat generation of neuromorphic systems provides them with an inherent advantage in total system efficiency.
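Cooling savings translate into total-energy savings through power usage effectiveness (PUE = total facility energy / IT energy). The sketch below uses hypothetical overhead fractions. It shows that a 95% cut in cooling energy is a much smaller cut in total energy, and that lower IT energy scales every term down.

```python
def facility_energy_kwh(it_energy_kwh: float, cooling_overhead: float,
                        other_overhead: float = 0.0) -> float:
    """Facility energy = IT energy * PUE, where PUE = 1 + cooling + other overhead fractions."""
    pue = 1.0 + cooling_overhead + other_overhead
    return it_energy_kwh * pue


if __name__ == "__main__":
    it_kwh = 1000.0                      # hypothetical IT energy for a GPU cluster
    air = facility_energy_kwh(it_kwh, cooling_overhead=0.40, other_overhead=0.10)
    immersion = facility_energy_kwh(it_kwh, cooling_overhead=0.40 * 0.05, other_overhead=0.10)
    print(f"air: {air:.0f} kWh, immersion: {immersion:.0f} kWh, "
          f"total saving {100 * (1 - immersion / air):.0f}%")
```

With these assumed overheads, removing 95% of cooling energy lowers total facility energy by about a quarter. A system whose IT energy is ten times lower saves more in absolute terms regardless of the cooling method.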

Neuromorphic chips demonstrate inherent thermal advantages over traditional GPUs due to their event-driven processing nature. Unlike GPUs that continuously consume power regardless of computational load, neuromorphic architectures activate circuits only when necessary for computation, resulting in substantially lower heat generation. Measurements across comparable workloads show neuromorphic solutions typically generating 15-30% less heat than equivalent GPU implementations.

Current thermal management strategies for AI accelerators span multiple approaches. Passive cooling solutions utilizing advanced materials like graphene-enhanced thermal interface materials (TIMs) have shown 20-40% improvements in heat dissipation for neuromorphic systems. Active cooling technologies including microfluidic cooling channels integrated directly into silicon substrates have demonstrated particular effectiveness for GPU architectures, reducing junction temperatures by up to 30°C compared to traditional air cooling.

Dynamic thermal management (DTM) techniques represent another critical strategy, with neuromorphic chips benefiting significantly from their inherent ability to implement fine-grained power gating. Advanced DTM algorithms can dynamically adjust computational workloads based on thermal conditions, with neuromorphic implementations showing up to 45% better thermal response times compared to GPU counterparts due to their distributed architecture.

Carbon Footprint Implications of AI Computing Choices

The environmental impact of artificial intelligence (AI) computing has become a critical consideration as AI applications proliferate across industries. Comparing the carbon footprints of neuromorphic chips and GPUs for AI tasks reveals significant differences in energy efficiency and environmental sustainability.

Neuromorphic chips, designed to mimic the brain's neural architecture, offer substantial energy efficiency advantages over traditional GPU architectures. For specific AI workloads, particularly pattern recognition and real-time processing, these brain-inspired processors typically consume one-tenth to one-hundredth the power of equivalent GPU implementations. A study published in Nature Electronics showed that neuromorphic systems such as Intel's Loihi can perform certain neural network operations while consuming only milliwatts of power, compared with the several hundred watts required by high-end GPUs.
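
Headline figures such as milliwatts versus hundreds of watts compare instantaneous power draw. For carbon accounting, the relevant quantity is energy per inference, which also depends on how many inferences each device completes per second. The sketch below uses hypothetical numbers: a 50 mW chip at 100 inferences/s versus a 300 W GPU batching 10,000 inferences/s. They are chosen only to show that the energy ratio can be far smaller than the raw power ratio.

```python
def energy_per_inference_mj(power_w: float, inferences_per_s: float) -> float:
    """Average energy per inference in millijoules: (J/s) / (inferences/s) * 1000."""
    return power_w / inferences_per_s * 1000.0


neuro_mj = energy_per_inference_mj(0.05, 100.0)     # assumed: 50 mW at 100 inferences/s
gpu_mj = energy_per_inference_mj(300.0, 10_000.0)   # assumed: 300 W with batched throughput
print(f"power ratio: {300.0 / 0.05:.0f}x, energy-per-inference ratio: {gpu_mj / neuro_mj:.0f}x")
```

With these placeholders, the power ratio is 6000x while the energy ratio is 60x. A fair comparison needs measured throughput on the same task.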

Manufacturing also contributes differently to each technology's lifetime carbon footprint. GPU production involves energy-intensive semiconductor fabrication and substantial raw-material requirements. Neuromorphic chips rely on similar fabrication processes, but their often simpler architectures may reduce material needs and the associated environmental impact of production.

Cooling is another significant factor in the carbon equation. Data centers housing GPU clusters typically devote 30-40% of their energy consumption to cooling. Because neuromorphic chips draw less power, they generate less heat. Research from the University of California, San Diego suggests this could reduce cooling requirements by up to 60%.
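
The effect of a cooling reduction on total emissions depends on cooling's share of facility energy. At a 35% share, the midpoint of the range above, cutting cooling energy by 60% removes 0.35 × 0.6 = 21% of total energy. The sketch below isolates that cooling term by holding IT energy fixed. It converts energy to emissions with a grid carbon intensity. The 1 GWh annual facility energy and the 0.4 kg CO2/kWh intensity are hypothetical placeholders.

```python
def facility_emissions_kg(total_kwh: float, cooling_share: float,
                          cooling_cut: float, grid_kg_per_kwh: float) -> float:
    """Emissions after cutting cooling energy, holding IT energy fixed.

    cooling_share: fraction of facility energy spent on cooling before the cut.
    cooling_cut: fractional reduction in cooling energy (0.6 means 60% less).
    grid_kg_per_kwh: assumed carbon intensity of the electricity supply.
    """
    it_kwh = total_kwh * (1.0 - cooling_share)
    cooling_kwh = total_kwh * cooling_share * (1.0 - cooling_cut)
    return (it_kwh + cooling_kwh) * grid_kg_per_kwh


BASE_KWH, GRID = 1_000_000.0, 0.4   # hypothetical annual facility energy and grid intensity
before = facility_emissions_kg(BASE_KWH, 0.35, 0.0, GRID)
after = facility_emissions_kg(BASE_KWH, 0.35, 0.6, GRID)
print(f"{before / 1000:.0f} t CO2 -> {after / 1000:.0f} t CO2 ({1 - after / before:.0%} lower)")
```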

Deployment scenarios further differentiate these technologies' environmental impacts. GPUs excel in centralized data centers where their massive parallel processing capabilities can be fully utilized, but this centralization creates concentrated energy demands. Neuromorphic systems enable more distributed, edge-based AI deployment models that can reduce data transmission energy costs and associated carbon emissions from network infrastructure.
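
The transmission saving from edge deployment comes from sending results instead of raw sensor data. Network energy is roughly the number of bits moved multiplied by an energy-per-bit figure. The sketch below covers only that transmission term, not the energy of on-device inference. The 1 µJ/bit network intensity is a hypothetical placeholder (published estimates vary widely), as are the 5 Mbit/s video stream and the 1 kbit/s stream of detections.

```python
SECONDS_PER_DAY = 86_400
JOULES_PER_KWH = 3.6e6


def daily_transmission_kwh(bits_per_second: float, joules_per_bit: float) -> float:
    """Network energy for one device per day: bits sent times energy per bit, converted J -> kWh."""
    return bits_per_second * SECONDS_PER_DAY * joules_per_bit / JOULES_PER_KWH


J_PER_BIT = 1e-6   # hypothetical network energy intensity
cloud = daily_transmission_kwh(5e6, J_PER_BIT)   # assumed 5 Mbit/s raw video sent for cloud inference
edge = daily_transmission_kwh(1e3, J_PER_BIT)    # assumed 1 kbit/s of detections after edge inference
print(f"cloud inference: {cloud:.3f} kWh/day, edge inference: {edge:.6f} kWh/day per device")
```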

Lifecycle considerations reveal that while GPUs may require replacement every 3-5 years due to technological advancement and wear, neuromorphic systems potentially offer longer operational lifespans due to their lower operating temperatures and reduced component stress. This extended lifecycle could significantly reduce electronic waste and the embodied carbon associated with manufacturing replacement hardware.
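
The embodied-carbon argument is an amortization. Manufacturing emissions are incurred once, so spreading them over more years of service lowers the annual share. The sketch below assumes emissions are spread evenly over the service life. The 150 kg CO2e embodied figure and the 8-year lifespan are hypothetical placeholders; the 3- and 5-year cases match the GPU replacement range above.

```python
def annualized_embodied_kg(embodied_kg: float, lifespan_years: float) -> float:
    """Spread manufacturing (embodied) emissions evenly over the device's service life."""
    if lifespan_years <= 0:
        raise ValueError("lifespan must be positive")
    return embodied_kg / lifespan_years


EMBODIED_KG = 150.0   # hypothetical embodied carbon per accelerator board
for years in (3, 5, 8):
    print(f"{years}-year life: {annualized_embodied_kg(EMBODIED_KG, years):.1f} kg CO2e/year")
```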

The carbon footprint implications extend beyond direct energy consumption to broader system-level efficiencies. For some tasks, AI workloads optimized for neuromorphic architectures can achieve comparable accuracy with dramatically smaller models. This reduces not only operational energy requirements but also the substantial emissions of training large AI models. One widely cited estimate put a single large training run, including architecture search, at nearly five times the lifetime emissions of an average American car.
