The design of event routing protocols in many-core neuromorphic chips.

SEP 3, 2025 | 9 MIN READ

Neuromorphic Chip Event Routing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the brain's neural networks to create energy-efficient, massively parallel processing systems. The evolution of event routing protocols in many-core neuromorphic chips spans more than three decades, beginning with Carver Mead's pioneering work in the late 1980s, which established the foundational concepts of neuromorphic engineering.

The field progressed substantially with Address Event Representation (AER), introduced in the early 1990s and refined through the late 1990s and early 2000s. Instead of streaming continuous values, AER components transmit only discrete events, each identified by the address of the neuron that produced it. This mirrors the brain's spike-based communication and has become a cornerstone of neuromorphic design.
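To make the idea concrete, here is a minimal sketch of AER-style encoding. It assumes a hypothetical three-level address (chip, core, neuron) with arbitrary field widths; real AER word formats differ between chips and links. Each spike becomes one word naming its source, and neurons that stay silent send nothing.

```python
from dataclasses import dataclass

# Hypothetical field widths for illustration; real AER word formats vary by chip and link.
NEURON_BITS = 8  # up to 256 neurons per core
CORE_BITS = 6    # up to 64 cores per chip
CHIP_BITS = 4    # up to 16 chips


@dataclass(frozen=True)
class AddressEvent:
    """A spike identified only by where it came from (no payload)."""
    chip: int
    core: int
    neuron: int


def encode(ev: AddressEvent) -> int:
    """Pack the source address of a spike into one integer word."""
    for value, bits in ((ev.chip, CHIP_BITS), (ev.core, CORE_BITS), (ev.neuron, NEURON_BITS)):
        if not 0 <= value < (1 << bits):
            raise ValueError(f"field value {value} does not fit in {bits} bits")
    return (ev.chip << (CORE_BITS + NEURON_BITS)) | (ev.core << NEURON_BITS) | ev.neuron


def decode(word: int) -> AddressEvent:
    """Recover the chip, core, and neuron fields from a packed word."""
    neuron = word & ((1 << NEURON_BITS) - 1)
    core = (word >> NEURON_BITS) & ((1 << CORE_BITS) - 1)
    chip = word >> (CORE_BITS + NEURON_BITS)
    return AddressEvent(chip, core, neuron)


# Two spikes produce two words; silent neurons produce no traffic at all.
spikes = [AddressEvent(chip=1, core=3, neuron=17), AddressEvent(chip=0, core=0, neuron=255)]
words = [encode(s) for s in spikes]
print(words)
```

Because the word carries only an address, timing is implicit in when the word appears on the link, or is carried in an explicit timestamp field in designs that add one.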

Recent years have witnessed an acceleration in neuromorphic chip development, driven by the limitations of traditional von Neumann architectures in handling AI workloads. Major research initiatives like IBM's TrueNorth, Intel's Loihi, and SpiNNaker from the University of Manchester have pushed the boundaries of neuromorphic design, each implementing novel approaches to event routing that balance efficiency, scalability, and biological fidelity.

The technical objectives in this domain are multifaceted and challenging. Primary among these is achieving ultra-low power consumption while maintaining computational capability, a goal that requires sophisticated event routing protocols that minimize unnecessary communications. Current designs aim to reduce power requirements to the milliwatt range for complex cognitive tasks, representing orders of magnitude improvement over conventional processors.

Scalability presents another critical objective, as neuromorphic systems must efficiently route events across thousands or millions of cores without creating bottlenecks. This necessitates decentralized routing protocols that can dynamically adapt to changing network conditions and processing demands.

Biological plausibility remains an important consideration, with researchers seeking routing mechanisms that more closely reflect the brain's communication patterns. This includes delivering spikes with the timing precision that on-chip learning rules such as spike-timing-dependent plasticity (STDP) depend on, and taking homeostatic mechanisms, which regulate overall activity and therefore traffic, into account in routing decisions.

Real-time processing capability represents a fourth key objective, as neuromorphic systems are increasingly targeted at applications requiring immediate responses, such as autonomous vehicles, robotics, and advanced prosthetics. Event routing protocols must therefore minimize latency while maintaining deterministic behavior under varying loads.

The convergence of these objectives is driving innovation toward adaptive, hierarchical routing protocols that can efficiently handle the complex, dynamic communication patterns characteristic of brain-inspired computing systems.

Market Analysis for Many-Core Neuromorphic Computing

The neuromorphic computing market is experiencing significant growth, driven by the increasing demand for AI applications that require efficient processing of neural networks. The global neuromorphic computing market was valued at approximately $2.5 billion in 2022 and is projected to reach $8.3 billion by 2028, growing at a CAGR of 22.1% during the forecast period. This growth trajectory underscores the expanding commercial interest in brain-inspired computing architectures.
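As a quick consistency check on these figures, compounding the 2022 estimate at the stated CAGR for six years reproduces the 2028 projection. This verifies only the internal arithmetic, not the underlying market estimates.

```latex
V_{2028} = V_{2022}\,(1 + r)^{6} = 2.5 \times 1.221^{6} \approx 2.5 \times 3.314 \approx 8.28 \approx 8.3 \ \text{billion USD}
```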

Many-core neuromorphic chips represent a specialized segment within this market, characterized by their ability to process information in a manner similar to biological neural systems. These chips are particularly valuable for applications requiring real-time processing of sensory data, pattern recognition, and autonomous decision-making capabilities.

The primary market drivers for many-core neuromorphic computing include the exponential growth in IoT devices, the increasing adoption of AI in edge computing scenarios, and the limitations of traditional von Neumann architectures in handling neural network workloads efficiently. The energy efficiency of neuromorphic systems—often consuming only a fraction of the power required by conventional processors—makes them particularly attractive for mobile and embedded applications.

Key application sectors showing strong demand include autonomous vehicles, where real-time processing of sensor data is critical; healthcare, particularly for neural signal processing and brain-computer interfaces; industrial automation, where pattern recognition and anomaly detection are essential; and consumer electronics, especially in next-generation smart devices requiring on-device AI capabilities.

Geographically, North America currently leads the market with approximately 40% share, followed by Europe and Asia-Pacific. However, the Asia-Pacific region is expected to witness the highest growth rate, driven by substantial investments in AI research and semiconductor manufacturing in countries like China, South Korea, and Japan.

The market landscape is characterized by both established semiconductor giants and specialized neuromorphic computing startups. Major players include Intel with its Loihi chip, IBM with TrueNorth, and Qualcomm with its earlier Zeroth platform. Emerging companies like BrainChip, SynSense, and GrAI Matter Labs are also making significant contributions with innovative neuromorphic architectures.

Customer segments are diversifying beyond research institutions to include commercial entities in automotive, aerospace, defense, and consumer electronics sectors. This broadening customer base indicates the transition of neuromorphic computing from purely research-oriented applications to commercial deployment, signaling market maturation.

Current Challenges in Event Routing Protocols

Despite significant advancements in neuromorphic computing, event routing protocols in many-core neuromorphic chips face several critical challenges that impede optimal performance and scalability. The primary challenge lies in managing the asynchronous nature of spike-based communication, which differs fundamentally from traditional synchronous data transfer in conventional computing systems. This asynchronicity creates unpredictable traffic patterns that can lead to congestion and deadlock scenarios in densely connected networks.
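One widely used way to rule out routing deadlock on a 2D mesh is dimension-order (XY) routing. The sketch below is a minimal illustration under assumed conventions (integer (x, y) core coordinates, ports named E/W/N/S, with N meaning increasing y); it computes the hop sequence for one event.

```python
from typing import List, Tuple

Coord = Tuple[int, int]  # (x, y) position of a core in the mesh


def xy_route(src: Coord, dst: Coord) -> List[str]:
    """Dimension-order (XY) routing: travel fully along X, then along Y.

    A packet never turns from Y back into X, so the channel-dependency graph
    of a mesh without wraparound links has no cycles; that is the standard
    argument for why XY routing cannot deadlock.
    """
    (x, y), (dst_x, dst_y) = src, dst
    hops: List[str] = []
    while x != dst_x:
        step = 1 if dst_x > x else -1
        hops.append("E" if step == 1 else "W")
        x += step
    while y != dst_y:
        step = 1 if dst_y > y else -1
        hops.append("N" if step == 1 else "S")
        y += step
    return hops


print(xy_route((0, 0), (2, 1)))  # ['E', 'E', 'N']
```

The restriction costs flexibility: every event between the same pair of cores takes the same path, so XY routing cannot steer around a congested or failed link.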

Scalability presents another significant hurdle as neuromorphic architectures expand to thousands or millions of cores. Current routing protocols struggle to remain efficient as networks grow, because the number of synapses scales with the number of neurons multiplied by their average fan-out. The routing tables and addressing schemes that describe this connectivity grow disproportionately with system size, creating bottlenecks in both performance and power consumption.
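One established way to curb routing-table growth is to match routing keys against key/mask entries, as SpiNNaker's multicast routers do, so that a single entry covers a whole block of source neurons. The sketch below assumes a hypothetical 16-bit key layout (population id in the upper 8 bits, neuron index in the lower 8) and made-up output names; it is not SpiNNaker's actual table format.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class RouteEntry:
    key: int
    mask: int
    outputs: FrozenSet[str]  # hypothetical output link / core names


def lookup(table: List[RouteEntry], routing_key: int) -> Optional[FrozenSet[str]]:
    """Return the outputs of the first entry whose masked key matches."""
    for entry in table:
        if (routing_key & entry.mask) == entry.key:
            return entry.outputs
    return None  # no match; a real router applies its own fallback policy


POPULATION = 5  # hypothetical population id held in the upper key bits
EAST = frozenset({"E"})

# Flat scheme: one entry per source neuron (256 entries for 256 neurons).
per_neuron = [RouteEntry((POPULATION << 8) | n, 0xFFFF, EAST) for n in range(256)]

# Masked scheme: the low 8 bits are "don't care", so one entry covers them all.
per_population = [RouteEntry(POPULATION << 8, 0xFF00, EAST)]

same_result = all(
    lookup(per_neuron, (POPULATION << 8) | n) == lookup(per_population, (POPULATION << 8) | n)
    for n in range(256)
)
print(len(per_neuron), "entries vs", len(per_population), "entry; same routing:", same_result)
```

The saving depends on placement: it only materializes when neurons that share destinations are also given contiguous keys.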

Power efficiency remains a critical constraint, particularly for edge applications where neuromorphic chips must operate within strict energy budgets. Existing routing protocols often consume excessive power during high-activity periods, negating the inherent energy advantages of neuromorphic computing. The trade-off between routing flexibility and energy consumption has not been adequately resolved in current implementations.

Latency management poses another substantial challenge, especially for real-time applications requiring rapid response to sensory inputs. The variability in routing delays can significantly impact temporal coding schemes that rely on precise spike timing. Current protocols struggle to provide deterministic latency guarantees while maintaining the flexibility needed for dynamic neural network topologies.
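One way to obtain deterministic delivery despite variable routing delay is to decouple arrival from delivery: each event carries the tick on which it should take effect, and the destination buffers it until then. The class below is a hypothetical sketch of that idea; its names, the use of tick units for arrival times, and the count-and-drop treatment of late events are all assumptions.

```python
from collections import defaultdict
from typing import DefaultDict, List


class TickScheduler:
    """Hypothetical per-core delivery buffer that releases spikes on fixed ticks.

    A spike sent on tick `sent_tick` with axonal delay `delay` becomes visible
    to the destination core at tick `sent_tick + delay`. Any arrival before
    that tick begins yields identical timing, so variable hop counts and
    queueing delays are hidden; later arrivals are counted as deadline misses.
    """

    def __init__(self) -> None:
        self.pending: DefaultDict[int, List[int]] = defaultdict(list)
        self.misses = 0

    def arrive(self, axon: int, sent_tick: int, delay: int, arrival_time: float) -> None:
        """Record a spike arriving at `arrival_time` (measured in tick units)."""
        due = sent_tick + delay
        if arrival_time >= due:
            self.misses += 1  # too late: its delivery tick has already started
        else:
            self.pending[due].append(axon)

    def release(self, tick: int) -> List[int]:
        """Return, in arrival order, the axons that fire on this tick."""
        return self.pending.pop(tick, [])


sched = TickScheduler()
sched.arrive(axon=7, sent_tick=10, delay=1, arrival_time=10.2)  # short, uncongested path
sched.arrive(axon=9, sent_tick=10, delay=1, arrival_time=10.9)  # longer path, still on time
sched.arrive(axon=3, sent_tick=9, delay=1, arrival_time=10.1)   # missed tick 10
print(sched.release(11), sched.misses)
```

The price is latency: every event waits for its tick, so the tick period must exceed the worst-case network delay for the guarantee to hold.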

Fault tolerance and reliability concerns are increasingly prominent as neuromorphic systems scale. Hardware failures in individual cores or communication channels can severely disrupt network functionality without robust routing mechanisms that can dynamically reroute traffic. Most current protocols lack sophisticated fault detection and recovery capabilities necessary for long-term deployment in critical applications.
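A minimal sketch of rerouting around failed links, assuming a controller that knows which links are down and recomputes a shortest path on a 2D mesh with breadth-first search. Hardware routers usually react locally instead (SpiNNaker's emergency routing, for example, detours a packet around a blocked link via adjacent links), so treat this as an illustration of the path-finding problem rather than of a specific chip's mechanism.

```python
from collections import deque
from typing import Dict, FrozenSet, List, Optional, Set, Tuple

Coord = Tuple[int, int]
Link = FrozenSet[Coord]  # an undirected link, identified by its two endpoints


def reroute(width: int, height: int, src: Coord, dst: Coord, failed: Set[Link]) -> Optional[List[Coord]]:
    """Shortest path on a width x height mesh that avoids failed links (BFS)."""
    prev: Dict[Coord, Optional[Coord]] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path: List[Coord] = []
            cur: Optional[Coord] = node
            while cur is not None:  # walk predecessors back to the source
                path.append(cur)
                cur = prev[cur]
            return path[::-1]
        x, y = node
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            in_bounds = 0 <= nxt[0] < width and 0 <= nxt[1] < height
            if in_bounds and nxt not in prev and frozenset((node, nxt)) not in failed:
                prev[nxt] = node
                queue.append(nxt)
    return None  # destination unreachable with the current faults


failed = {frozenset({(1, 0), (2, 0)})}  # link between cores (1,0) and (2,0) is down
print(reroute(3, 3, (0, 0), (2, 0), failed))
```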

Implementation complexity presents practical challenges for hardware designers. The intricate balance between hardware-efficient routing and biologically-inspired communication patterns often results in compromises that limit either computational capability or biological fidelity. Current hardware implementations frequently sacrifice one aspect to optimize the other.

Finally, the lack of standardized benchmarks and evaluation metrics makes it difficult to objectively compare different routing approaches. The neuromorphic community has yet to establish consensus on performance indicators that accurately reflect the unique requirements of event-based communication, hampering systematic improvement of routing protocols across different architectural paradigms.

State-of-the-Art Event Routing Architectures

01 Dynamic routing protocols for efficient network traffic management

Dynamic routing protocols adapt to changing network conditions by automatically updating routing tables based on real-time traffic patterns and network topology changes. These protocols improve routing efficiency by selecting optimal paths, reducing latency, and balancing network load. They employ various algorithms to determine the best routes for data transmission while minimizing congestion and maximizing throughput in complex network environments.

- Dynamic routing protocols for network efficiency: Dynamic routing protocols can significantly improve network efficiency by automatically adapting to changing network conditions. These protocols enable routers to exchange information about network topology and make intelligent routing decisions based on current conditions. By implementing dynamic routing, networks can optimize path selection, reduce latency, and improve overall throughput, leading to more efficient event routing across distributed systems.

- Quality of Service (QoS) based routing mechanisms: QoS-based routing mechanisms prioritize network traffic based on specific requirements such as bandwidth, latency, and packet loss. By implementing QoS policies in event routing protocols, networks can ensure that critical events receive appropriate priority and resources. This approach enhances routing efficiency by allocating network resources according to the importance and urgency of different types of events, resulting in improved performance for time-sensitive applications.

- Load balancing techniques for event distribution: Load balancing techniques distribute network traffic across multiple paths or servers to optimize resource utilization and prevent overloading. In event routing protocols, load balancing algorithms can analyze network conditions and event characteristics to determine the most efficient routing paths. These techniques improve routing efficiency by preventing bottlenecks, reducing congestion, and ensuring even distribution of processing load across available resources.

- Adaptive routing based on real-time network analytics: Adaptive routing protocols leverage real-time network analytics to continuously optimize routing decisions. By monitoring network performance metrics and analyzing traffic patterns, these protocols can dynamically adjust routing parameters to maintain optimal efficiency. This approach enables event routing systems to respond quickly to changing conditions, predict potential issues, and proactively optimize routing paths for improved performance and reliability.

- Energy-efficient routing protocols for resource-constrained environments: Energy-efficient routing protocols are designed to minimize power consumption while maintaining acceptable performance levels. These protocols are particularly important in resource-constrained environments such as wireless sensor networks and IoT deployments. By optimizing routing decisions based on energy considerations, these protocols can extend network lifetime, reduce operational costs, and improve the sustainability of event routing infrastructure while maintaining efficient event delivery.
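The adaptive and load-balancing ideas in this list can be sketched as minimal adaptive routing on a 2D mesh: at each router, consider only the output ports that bring the event closer to its destination, and pick the one whose output queue is currently shortest. Port names and the occupancy dictionary are hypothetical.

```python
from typing import Dict, List, Tuple

Coord = Tuple[int, int]


def productive_ports(cur: Coord, dst: Coord) -> List[str]:
    """Output ports that move a packet one hop closer to its destination."""
    (x, y), (dst_x, dst_y) = cur, dst
    ports: List[str] = []
    if dst_x > x:
        ports.append("E")
    if dst_x < x:
        ports.append("W")
    if dst_y > y:
        ports.append("N")
    if dst_y < y:
        ports.append("S")
    return ports


def choose_port(cur: Coord, dst: Coord, occupancy: Dict[str, int]) -> str:
    """Minimal adaptive routing: among productive ports, pick the least congested.

    Ports missing from `occupancy` are treated as empty. Ties go to the first
    port in E, W, N, S order, so the choice is deterministic. Returns "LOCAL"
    once the packet has reached its destination core.
    """
    ports = productive_ports(cur, dst)
    if not ports:
        return "LOCAL"
    return min(ports, key=lambda p: occupancy.get(p, 0))


print(choose_port((0, 0), (2, 2), {"E": 5, "N": 1}))  # N
```

Unlike strict dimension-order routing, fully adaptive minimal routing can deadlock on a mesh unless it is combined with turn restrictions or extra virtual channels.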

02 Event-driven routing optimization techniques

Event-driven routing protocols respond to specific network events such as link failures, congestion, or topology changes to optimize routing decisions. These protocols monitor network conditions and trigger routing updates only when necessary, reducing control overhead while maintaining routing efficiency. By focusing on significant network events rather than periodic updates, these techniques provide faster convergence times and more efficient use of network resources.

03 Hierarchical routing structures for scalable networks

Hierarchical routing protocols organize networks into multiple levels or zones to improve scalability and efficiency. By dividing the network into manageable segments, these protocols reduce routing table size and update frequency. This hierarchical approach enables efficient routing in large-scale networks by localizing traffic within zones when possible and providing optimized paths for inter-zone communication, resulting in reduced processing overhead and improved overall network performance.

04 Quality of Service (QoS) aware routing mechanisms

QoS-aware routing protocols prioritize network traffic based on service requirements such as bandwidth, delay, and reliability. These mechanisms enhance routing efficiency by ensuring critical applications receive appropriate network resources while maintaining overall network performance. By considering application-specific needs when making routing decisions, these protocols optimize resource allocation and improve user experience for diverse services operating simultaneously on the network.

05 Energy-efficient routing for resource-constrained networks

Energy-efficient routing protocols are designed to minimize power consumption in networks with limited energy resources, such as wireless sensor networks or IoT deployments. These protocols optimize routing paths to balance network load, reduce transmission distances, and extend network lifetime. By considering energy constraints in routing decisions, these techniques maintain network connectivity and performance while significantly reducing overall energy consumption.
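One common formulation of energy-aware routing is a shortest-path search whose edge weights are per-packet transmission energy rather than hop count. The Dijkstra sketch below assumes a hypothetical graph in which each link carries an energy cost in arbitrary units; in radio networks that cost typically grows faster than linearly with transmission distance, so several short hops can beat one long one.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Graph = Dict[str, List[Tuple[str, float]]]  # node -> [(neighbor, energy per packet)]


def min_energy_path(graph: Graph, src: str, dst: str) -> Optional[Tuple[float, List[str]]]:
    """Dijkstra over per-link transmission energy instead of hop count."""
    best = {src: 0.0}
    prev: Dict[str, str] = {}
    heap = [(0.0, src)]
    while heap:
        cost, node = heapq.heappop(heap)
        if node == dst:
            path = [dst]
            while path[-1] != src:  # follow predecessors back to the source
                path.append(prev[path[-1]])
            return cost, path[::-1]
        if cost > best.get(node, float("inf")):
            continue  # stale heap entry; a cheaper route was already found
        for nbr, energy in graph.get(node, []):
            new_cost = cost + energy
            if new_cost < best.get(nbr, float("inf")):
                best[nbr] = new_cost
                prev[nbr] = node
                heapq.heappush(heap, (new_cost, nbr))
    return None  # dst unreachable


# Hypothetical costs: the long direct link A-C is more expensive than two short hops.
graph = {"A": [("B", 2.0), ("C", 9.0)], "B": [("C", 3.0)], "C": []}
print(min_energy_path(graph, "A", "C"))  # (5.0, ['A', 'B', 'C'])
```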

Leading Companies in Neuromorphic Hardware

The design of event routing protocols in many-core neuromorphic chips is currently in an early growth phase, with the market expected to expand significantly as neuromorphic computing gains traction in AI applications. The global market remains relatively small but is projected to grow rapidly as these specialized chips demonstrate advantages in energy efficiency and real-time processing. Technologically, the field shows varying maturity levels across competitors. IBM leads with its TrueNorth architecture, while Intel's Loihi platform demonstrates significant advances. Academic institutions like Tsinghua University and University of Zurich are making notable research contributions. Emerging players such as Innatera Nanosystems and Tenstorrent are introducing innovative approaches, while established semiconductor companies like Huawei and GLOBALFOUNDRIES are leveraging their manufacturing expertise to enter this specialized domain.

International Business Machines Corp.

Technical Solution: IBM has developed TrueNorth, a groundbreaking neuromorphic chip architecture that implements a novel event-driven routing protocol. Their approach uses a crossbar architecture with distributed routing tables that enable efficient spike event transmission between neurons across multiple cores. IBM's protocol employs Address-Event Representation (AER) with time-stamping to maintain temporal precision of neural events. The TrueNorth chip contains 4,096 neurosynaptic cores with 1 million programmable neurons and 256 million configurable synapses, all interconnected through a packet-switched network. The event routing protocol implements a two-stage routing mechanism: intra-core routing for local connections and inter-core routing for long-distance communication, with specialized router modules at the boundaries of each core to handle the transition between these domains. This hierarchical approach significantly reduces routing complexity and power consumption.
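The two-stage scheme can be sketched as a packet that carries relative (dx, dy) offsets for the inter-core stage plus a target axon and a delivery tick for the intra-core stage. Field names and bit widths here are assumptions chosen for illustration, not TrueNorth's documented packet format.

```python
from dataclasses import dataclass

# Assumed field widths for illustration only (not a documented TrueNorth format).
DX_BITS = 9    # signed remaining hops in X
DY_BITS = 9    # signed remaining hops in Y
AXON_BITS = 8  # 256 input axons (crossbar rows) per destination core
TICK_BITS = 4  # delivery tick, modulo 16


@dataclass(frozen=True)
class SpikePacket:
    dx: int    # remaining hops east (+) or west (-)
    dy: int    # remaining hops north (+) or south (-)
    axon: int  # target axon in the destination core's crossbar
    tick: int  # tick on which the destination core should deliver the spike


def _field(value: int, bits: int) -> int:
    """Two's-complement encode a value into `bits` bits (out-of-range values wrap)."""
    return value & ((1 << bits) - 1)


def _signed(value: int, bits: int) -> int:
    """Interpret a `bits`-wide field as a two's-complement integer."""
    return value - (1 << bits) if value & (1 << (bits - 1)) else value


def pack(p: SpikePacket) -> int:
    word = _field(p.dx, DX_BITS)
    word = (word << DY_BITS) | _field(p.dy, DY_BITS)
    word = (word << AXON_BITS) | _field(p.axon, AXON_BITS)
    return (word << TICK_BITS) | _field(p.tick, TICK_BITS)


def unpack(word: int) -> SpikePacket:
    tick = word & ((1 << TICK_BITS) - 1)
    word >>= TICK_BITS
    axon = word & ((1 << AXON_BITS) - 1)
    word >>= AXON_BITS
    dy = _signed(word & ((1 << DY_BITS) - 1), DY_BITS)
    word >>= DY_BITS
    dx = _signed(word & ((1 << DX_BITS) - 1), DX_BITS)
    return SpikePacket(dx, dy, axon, tick)


def hop(p: SpikePacket) -> SpikePacket:
    """Inter-core stage: each router consumes one unit of dx, then of dy."""
    if p.dx != 0:
        return SpikePacket(p.dx - (1 if p.dx > 0 else -1), p.dy, p.axon, p.tick)
    if p.dy != 0:
        return SpikePacket(p.dx, p.dy - (1 if p.dy > 0 else -1), p.axon, p.tick)
    return p  # dx == dy == 0: hand off to the local crossbar (intra-core stage)


pkt = SpikePacket(dx=2, dy=-1, axon=200, tick=5)
wire = pack(pkt)
hops = 0
while (pkt.dx, pkt.dy) != (0, 0):
    pkt = hop(pkt)
    hops += 1
print(unpack(wire) == SpikePacket(2, -1, 200, 5), hops)  # True 3
```

Relative offsets mean no router needs a global table for the inter-core stage; each hop only inspects and decrements the packet's own fields.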

Strengths: Highly scalable architecture that maintains real-time performance even with large neural networks; very energy efficient (roughly 70 mW while running 1 million neurons); demonstrated in real-time research applications. Weaknesses: Complex programming model requires specialized knowledge; limited flexibility for implementing certain types of neural networks compared to more general-purpose solutions.

Intel Corp.

Technical Solution: Intel's Loihi neuromorphic research chip implements a sophisticated event routing protocol designed specifically for spiking neural networks. The architecture features a hierarchical, mesh-based Network-on-Chip (NoC) that efficiently routes neural spike events between its 128 neuromorphic cores. Each core contains 1,024 neural units; spike events travel between cores as packets, while the programmable synaptic weights are held in each destination core's synaptic memory. Intel's approach uses a time-multiplexed event delivery system with priority queuing to maintain temporal accuracy of spike events. The protocol incorporates both deterministic and probabilistic routing strategies to balance traffic load across the chip. Loihi's event routing includes an innovative congestion management mechanism that dynamically adjusts routing paths based on network traffic conditions, preventing bottlenecks during high neural activity periods. The chip also implements sparse connectivity optimization, where routing tables are compressed to store only active connections, significantly reducing memory requirements for the routing infrastructure.
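The sparse-connectivity idea can be illustrated with a compressed sparse row (CSR) style fan-out table, a standard sparse-matrix layout that stores only existing connections. This is a hypothetical sketch, not Loihi's actual table format; the destination-axon count is an assumed figure.

```python
from typing import Dict, List, Tuple

Target = Tuple[int, int]  # (destination core, destination axon)


def build_csr(fanout: Dict[int, List[Target]], n_src: int) -> Tuple[List[int], List[Target]]:
    """Compress a sparse source -> targets map into CSR-style arrays.

    targets[offsets[s]:offsets[s + 1]] are the targets of source neuron s, so a
    neuron with no outgoing connections costs only one offset entry.
    """
    offsets = [0]
    targets: List[Target] = []
    for s in range(n_src):
        targets.extend(fanout.get(s, []))
        offsets.append(len(targets))
    return offsets, targets


def targets_of(offsets: List[int], targets: List[Target], s: int) -> List[Target]:
    """Look up the fan-out of source neuron s."""
    return targets[offsets[s]:offsets[s + 1]]


N_SRC = 1024        # source neurons in one core
N_DST_AXONS = 4096  # hypothetical number of addressable destination axons
fanout = {0: [(1, 5), (2, 7)], 10: [(3, 0)]}  # only three active connections
offsets, targets = build_csr(fanout, N_SRC)
print(len(offsets) + len(targets), "stored entries vs", N_SRC * N_DST_AXONS, "dense cells")
```

Storage now scales with the number of connections (plus one offset per source) instead of with the product of source and destination counts.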

Strengths: Highly efficient for sparse, event-driven workloads; programmable routing policies allow application-specific optimizations; Intel has reported energy-efficiency gains of up to roughly 1000x over conventional architectures on certain neural network tasks. Weaknesses: Programming complexity requires specialized tools and expertise; limited ecosystem compared to traditional computing platforms; optimal performance requires careful network design.

Key Patents in Neuromorphic Event Routing

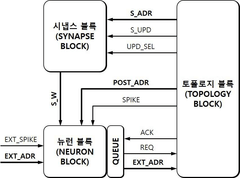

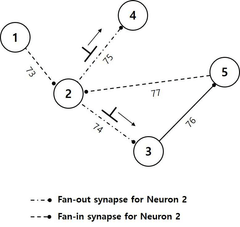

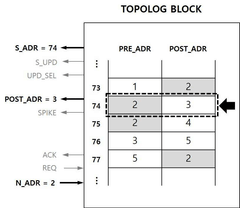

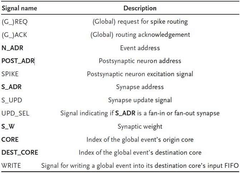

Multi-core neuromodule device and global routing method performed on the same

Patent (Active): KR1020210004348A

Innovation

- A multicore neuromorphic device with a reconfigurable neural network architecture, utilizing a source and destination neuromorphic core with an input FIFO buffer and a global router, along with a global routing method that includes LUTs for address conversion and spike processing, to connect multiple cores in parallel and manage spike routing efficiently.
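A minimal sketch of how such a global router might behave, based only on the patent summary above: a lookup table (LUT) converts a source address into destination addresses, and converted events wait in per-core input FIFOs. Every name, the FIFO depth, and the drop-on-overflow policy are assumptions, not details taken from the patent.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple

Spike = Tuple[int, int]   # (source core, source neuron)
Target = Tuple[int, int]  # (destination core, destination axon)


class GlobalRouter:
    """Hypothetical global router with LUT address conversion and input FIFOs.

    The LUT converts a source (core, neuron) address into the destination
    (core, axon) pairs it connects to; each converted event waits in its
    destination core's input FIFO until that core drains it.
    """

    def __init__(self, num_cores: int, lut: Dict[Spike, List[Target]], fifo_depth: int = 16) -> None:
        self.lut = lut
        self.fifos: List[Deque[int]] = [deque() for _ in range(num_cores)]
        self.fifo_depth = fifo_depth
        self.dropped = 0

    def route(self, spike: Spike) -> None:
        """Convert one spike via the LUT and enqueue it at every destination."""
        for dst_core, dst_axon in self.lut.get(spike, []):
            fifo = self.fifos[dst_core]
            if len(fifo) < self.fifo_depth:
                fifo.append(dst_axon)
            else:
                self.dropped += 1  # assumption: drop on overflow; hardware may apply back-pressure

    def drain(self, core: int) -> List[int]:
        """Hand all queued axon ids to the destination core, oldest first."""
        fifo = self.fifos[core]
        events = list(fifo)
        fifo.clear()
        return events


lut = {(0, 3): [(1, 10), (2, 4)], (1, 0): [(2, 5)]}
router = GlobalRouter(num_cores=3, lut=lut)
router.route((0, 3))  # one spike fans out to cores 1 and 2
router.route((1, 0))
print(router.drain(2), router.drain(1))  # [4, 5] [10]
```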

Patent

Innovation

- Adaptive routing protocols that dynamically adjust packet paths based on network congestion levels in many-core neuromorphic chips, reducing latency and improving overall system performance.

- Hierarchical event routing architecture that separates local and global communication, enabling efficient handling of both sparse and dense neural connectivity patterns.

- Energy-efficient routing mechanisms that selectively activate communication pathways based on neural activity patterns, significantly reducing power consumption in neuromorphic systems.
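The separation of local and global traffic can be sketched as a routing decision based on a two-level address, assuming a hypothetical layout in which consecutive core ids are grouped into fixed-size clusters.

```python
from collections import Counter
from typing import Tuple

CORES_PER_CLUSTER = 4  # hypothetical cluster size


def split(core_id: int) -> Tuple[int, int]:
    """Map a global core id to (cluster id, index within the cluster)."""
    return divmod(core_id, CORES_PER_CLUSTER)


def route_level(src_core: int, dst_core: int) -> str:
    """Decide which level of the hierarchy an event must use."""
    if src_core == dst_core:
        return "core"    # stays inside the source core
    if split(src_core)[0] == split(dst_core)[0]:
        return "local"   # same cluster: local router only
    return "global"      # different clusters: goes through the global network


events = [(0, 0), (0, 3), (0, 5), (6, 7), (9, 1)]  # (source core, destination core)
print(Counter(route_level(s, d) for s, d in events))
```

With a mapping that places strongly connected neurons in the same cluster, most events resolve at the "core" or "local" level, which is what keeps the global network lightly loaded.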

Power Efficiency Considerations in Event Routing

Power efficiency represents a critical constraint in the design of event routing protocols for many-core neuromorphic chips. Because these systems aim to emulate the brain's remarkable computational efficiency, their power consumption must be minimized without sacrificing performance. Many current single-chip neuromorphic designs consume roughly 20-100 mW during operation, far less than traditional computing systems but still more than biological neural networks on a per-neuron basis.

Event-driven communication offers inherent power advantages by activating circuits only when events occur, avoiding much of the dynamic power that clock-driven systems spend switching on idle cycles (static leakage, however, remains). Even so, the routing infrastructure itself can become a major power bottleneck as chip sizes grow and more cores are integrated. Measurements from recent implementations indicate that routing infrastructure can consume up to 35% of the total chip power budget.

Several strategies have emerged to address power efficiency in event routing. Hierarchical routing structures reduce the average path length of events, decreasing the energy required per communication. These architectures organize cores into clusters with local routing capabilities, minimizing long-distance transmission. Data from the SpiNNaker and Loihi platforms demonstrates that hierarchical approaches can reduce routing power by 40-60% compared to flat topologies.
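A simple model shows why shorter paths save energy: if each router hop costs a fixed energy, the energy per event is proportional to the average hop count. The sketch compares uniform traffic over an 8 x 8 mesh with traffic that placement keeps inside 2 x 2 clusters. The per-hop energy is a hypothetical constant and the model ignores inter-cluster traffic, so it illustrates the mechanism without reproducing the 40-60% figure.

```python
from itertools import product
from statistics import mean


def mean_hops(k: int) -> float:
    """Average Manhattan distance between distinct nodes of a k x k mesh."""
    nodes = list(product(range(k), repeat=2))
    return mean(abs(a[0] - b[0]) + abs(a[1] - b[1]) for a in nodes for b in nodes if a != b)


E_HOP_PJ = 10.0  # hypothetical energy per router hop, in picojoules

flat = mean_hops(8)   # traffic spread uniformly over an 8 x 8 mesh
local = mean_hops(2)  # traffic confined to 2 x 2 clusters of that mesh
print(f"flat: {flat:.2f} hops, {flat * E_HOP_PJ:.1f} pJ/event")            # flat: 5.33 hops, 53.3 pJ/event
print(f"clustered: {local:.2f} hops, {local * E_HOP_PJ:.1f} pJ/event")     # clustered: 1.33 hops, 13.3 pJ/event
```

The brute-force average equals 2k/3 for a k x k mesh (excluding self-pairs), so under this model per-event routing energy for uniform traffic grows linearly with mesh width.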

Asynchronous circuit design techniques further enhance power efficiency by eliminating clock distribution networks. These circuits operate on demand, consuming power only when processing events. Recent implementations show that asynchronous routers consume 3-5x less power than their synchronous counterparts when handling sparse event traffic typical in neuromorphic applications.

Event compression and filtering mechanisms also contribute significantly to power savings. By aggregating similar events or filtering redundant information before transmission, these techniques reduce the overall communication volume. Studies indicate that appropriate filtering can decrease network traffic by up to 70% in certain neural network applications, with corresponding power reductions.
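A minimal sketch of one filtering policy, assuming events are (timestamp in ms, source address) pairs: forward at most one event per source within a window measured from that source's last forwarded event. Whether such filtering is acceptable depends on the neural code, since rate- or timing-based encodings may carry information in exactly the events it removes.

```python
from typing import Dict, Iterable, List, Tuple

Event = Tuple[float, int]  # (timestamp in ms, source address)


def filter_events(events: Iterable[Event], window_ms: float) -> List[Event]:
    """Drop an event if the same source had one forwarded less than window_ms earlier.

    Events are processed in timestamp order; bursts from one source collapse
    to the first event of each window, much like a refractory period.
    """
    last_sent: Dict[int, float] = {}
    kept: List[Event] = []
    for t, addr in sorted(events):
        prev = last_sent.get(addr)
        if prev is None or t - prev >= window_ms:
            kept.append((t, addr))
            last_sent[addr] = t
    return kept


raw = [(0.0, 1), (0.2, 1), (0.4, 1), (1.5, 1), (0.1, 2)]
kept = filter_events(raw, window_ms=1.0)
print(f"{len(kept)} of {len(raw)} events forwarded")  # 3 of 5 events forwarded
```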

Adaptive power management represents another promising direction, where routing resources dynamically scale based on computational demands. During periods of low activity, portions of the routing infrastructure can enter low-power states. Implementation data shows that adaptive approaches can achieve additional 15-30% power savings without significant performance penalties.
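The trade-off in adaptive power management can be explored with a toy model of a single router port that drops into a low-power state after a fixed number of idle ticks. All power and wake-up costs are hypothetical, in arbitrary units per tick.

```python
from typing import List


def gated_energy(event_ticks: List[int], horizon: int, idle_timeout: int,
                 p_active: float, p_sleep: float, wake_cost: float) -> float:
    """Energy of one router port that sleeps after more than idle_timeout idle ticks.

    The port starts awake, stays active on ticks with traffic and for up to
    idle_timeout idle ticks afterwards, then sleeps until the next event.
    Each sleep-to-active transition adds wake_cost.
    """
    busy = set(event_ticks)
    energy, idle, asleep = 0.0, 0, False
    for t in range(horizon):
        if t in busy:
            if asleep:
                energy += wake_cost
                asleep = False
            idle = 0
        else:
            idle += 1
            if idle > idle_timeout:
                asleep = True
        energy += p_sleep if asleep else p_active
    return energy


ticks = [0, 1, 50]  # a short burst, then one later event
gated = gated_energy(ticks, horizon=100, idle_timeout=5, p_active=1.0, p_sleep=0.1, wake_cost=2.0)
always_on = gated_energy(ticks, horizon=100, idle_timeout=100, p_active=1.0, p_sleep=0.1, wake_cost=2.0)
print(f"gated {gated:.1f} vs always-on {always_on:.1f} (arbitrary energy units)")
```

Shortening the idle timeout saves more sleep energy but pays the wake-up cost more often, and a real design would also account for the wake-up latency added to the first event after a sleep.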

The trade-off between routing flexibility and power efficiency remains a central challenge. More sophisticated routing algorithms typically offer better network utilization but require more complex hardware with higher power demands. Finding the optimal balance for specific application domains continues to drive research in this field.

Scalability Issues in Many-Core Neuromorphic Systems

As neuromorphic computing systems scale to hundreds or thousands of cores, significant scalability challenges emerge that threaten system performance and efficiency. The number of potential core-to-core communication pairs grows quadratically with core count. This creates communication bottlenecks that can severely limit the practical utility of large-scale neuromorphic architectures. Current many-core designs struggle to keep performance scaling in proportion to the number of cores, and communication overhead becomes the dominant limiting factor.

Network congestion represents a primary scalability concern, as event-based communication patterns in neuromorphic systems tend to create hotspots where multiple cores attempt to route events through the same network paths simultaneously. This congestion leads to increased latency and potential packet loss, compromising the temporal precision that is critical for neuromorphic computing applications.
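Deterministic dimension-order (XY) routing, a common mesh routing scheme, reproduces the hotspot effect directly. The sketch below routes a set of flows X-first, then Y, and accumulates the event rate on each directed link. The traffic pattern in the example is a hypothetical many-to-one worst case: every core of a 4×4 mesh sends to core (0, 0), standing in for a heavily targeted neuron population.

```python
from collections import Counter

Node = tuple[int, int]
Link = tuple[Node, Node]


def xy_route(src: Node, dst: Node) -> list[Link]:
    """Directed links traversed by X-first dimension-order routing on a 2D mesh."""
    x, y = src
    links = []
    while x != dst[0]:
        nx = x + (1 if dst[0] > x else -1)
        links.append(((x, y), (nx, y)))
        x = nx
    while y != dst[1]:
        ny = y + (1 if dst[1] > y else -1)
        links.append(((x, y), (x, ny)))
        y = ny
    return links


def link_loads(flows: list[tuple[Node, Node, float]]) -> Counter:
    """Sum the event rate of every (src, dst, rate) flow onto each link it crosses."""
    loads: Counter = Counter()
    for src, dst, rate in flows:
        for link in xy_route(src, dst):
            loads[link] += rate
    return loads


k = 4
sink = (0, 0)
flows = [((x, y), sink, 1.0) for x in range(k) for y in range(k) if (x, y) != sink]
loads = link_loads(flows)
hottest, peak = loads.most_common(1)[0]
mean_load = sum(loads.values()) / len(loads)
print(f"hottest link {hottest}: {peak} events/unit time vs mean {mean_load:.2f} over used links")
```

Because XY routing is deterministic, all 12 flows from rows 1 to 3 funnel through the single link entering the sink from above. Adaptive routing can spread some of this traffic across alternative paths, but links adjacent to the sink remain a bottleneck, and the extra routing logic costs power.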

Power consumption scales non-linearly with system size, presenting another significant challenge. The energy required for inter-core communication grows disproportionately as the number of cores increases, potentially negating the inherent energy efficiency advantages of neuromorphic computing. In some experimental many-core neuromorphic systems, communication energy has been observed to account for over 60% of total system power consumption at scale.
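A back-of-the-envelope model shows why communication energy grows faster than core count. Under uniform random traffic on a k×k mesh, the mean Manhattan distance between source and destination is 2(k² - 1)/(3k). That is roughly (2/3)√N for N = k² cores, so total communication power scales about as N^1.5, while compute power scales as N. The per-hop energy, event rate, and per-core compute power below are hypothetical. Uniform random traffic is also a pessimistic assumption, since mapping tools try to place connected neurons close together.

```python
from itertools import product


def mean_mesh_hops(k: int) -> float:
    """Mean Manhattan distance between two independent uniformly random nodes of a k x k mesh."""
    return 2 * (k * k - 1) / (3 * k)


def brute_force_mean_hops(k: int) -> float:
    """Same quantity by exhaustive enumeration, as a check on the closed form."""
    nodes = list(product(range(k), repeat=2))
    total = sum(abs(a[0] - b[0]) + abs(a[1] - b[1]) for a in nodes for b in nodes)
    return total / len(nodes) ** 2


def comm_power_share(k: int, rate_hz: float, e_hop_j: float, p_compute_core_w: float) -> float:
    """Fraction of chip power spent moving events, under uniform random traffic."""
    n = k * k
    p_comm = n * rate_hz * mean_mesh_hops(k) * e_hop_j
    p_compute = n * p_compute_core_w
    return p_comm / (p_comm + p_compute)


# Hypothetical parameters: 1e5 events/s per core, 10 pJ per hop, 5 uW compute per core.
for k in (4, 16, 64):
    share = comm_power_share(k, rate_hz=1e5, e_hop_j=10e-12, p_compute_core_w=5e-6)
    print(f"{k * k:5d} cores: mean hops {mean_mesh_hops(k):6.2f}, communication share {share:.0%}")
```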

Memory bandwidth limitations further exacerbate scalability issues. As core counts increase, the shared memory resources become contention points, with multiple cores competing for access. This contention can lead to substantial performance degradation, particularly for neuromorphic applications with high event rates or dense connectivity patterns.

The programming model complexity also increases dramatically with scale. Developers must manage complex event routing topologies and handle synchronization across distributed cores, often with limited debugging capabilities. This complexity barrier can significantly slow development cycles and limit adoption of large-scale neuromorphic systems.

Hardware resource utilization becomes increasingly imbalanced at scale, with some cores experiencing high utilization while others remain underutilized due to workload distribution challenges. This imbalance reduces overall system efficiency and complicates performance optimization efforts.
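The longest-processing-time-first (LPT) greedy heuristic from scheduling is one generic way to reduce load imbalance when mapping work to cores. It sorts neuron populations by estimated event load and assigns each one in turn to the currently least-loaded core. The sketch below is that textbook heuristic, not the mapping algorithm of any particular neuromorphic toolchain. The population names and loads are hypothetical, and a real mapper must also weigh communication locality, which LPT ignores.

```python
import heapq


def lpt_assign(loads: dict[str, float], n_cores: int) -> tuple[dict[str, int], list[float]]:
    """Longest-processing-time-first: heaviest population goes to the least-loaded core."""
    heap = [(0.0, core) for core in range(n_cores)]  # (current load, core id)
    heapq.heapify(heap)
    assignment: dict[str, int] = {}
    core_loads = [0.0] * n_cores
    for name, load in sorted(loads.items(), key=lambda kv: kv[1], reverse=True):
        current, core = heapq.heappop(heap)
        assignment[name] = core
        core_loads[core] = current + load
        heapq.heappush(heap, (core_loads[core], core))
    return assignment, core_loads


def imbalance(core_loads: list[float]) -> float:
    """Peak-to-mean load ratio; 1.0 means perfectly balanced."""
    return max(core_loads) / (sum(core_loads) / len(core_loads))


# Hypothetical per-population event loads (arbitrary units) mapped onto 3 cores.
populations = {"A": 7, "B": 5, "C": 4, "D": 3, "E": 3, "F": 2}
assignment, core_loads = lpt_assign(populations, n_cores=3)
print(assignment, core_loads, f"imbalance {imbalance(core_loads):.3f}")
```

For comparison, assigning the same populations round-robin in the listed order gives core loads of 10, 8 and 6 (imbalance 1.25). LPT gives 9, 8 and 7 (imbalance 1.125).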

Fault tolerance emerges as a critical concern in large-scale systems, as the probability of component failure increases with system size. Current neuromorphic architectures generally lack robust fault detection and recovery mechanisms, making them vulnerable to performance degradation or complete system failure when scaling to many cores.
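Two quick illustrations support the fault-tolerance point. First, if each of N components fails independently with probability p, the chance that at least one has failed is 1 - (1 - p)^N, which approaches 1 as N grows. Second, if the locations of failed routers are known, breadth-first search over the healthy mesh finds a shortest detour. Independent failures, a global fault map, and BFS-computed detours are simplifying assumptions for this sketch. They do not describe how any existing chip recovers from faults.

```python
from collections import deque
from typing import Optional

Node = tuple[int, int]


def p_any_failure(n_components: int, p_fail: float) -> float:
    """Probability that at least one of n independent components has failed."""
    return 1.0 - (1.0 - p_fail) ** n_components


def route_around_faults(k: int, src: Node, dst: Node, faulty: set[Node]) -> Optional[list[Node]]:
    """Shortest path on a k x k mesh avoiding faulty routers (BFS); None if unreachable."""
    if src in faulty or dst in faulty:
        return None
    prev: dict[Node, Optional[Node]] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            # Walk predecessor links back to the source, then reverse.
            path = []
            cur: Optional[Node] = node
            while cur is not None:
                path.append(cur)
                cur = prev[cur]
            return path[::-1]
        x, y = node
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            in_mesh = 0 <= nxt[0] < k and 0 <= nxt[1] < k
            if in_mesh and nxt not in faulty and nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    return None


for n in (100, 1_000, 10_000):
    print(f"{n:6d} cores, p=1e-4 each: P(any failure) = {p_any_failure(n, 1e-4):.3f}")

# Two failed routers block the direct path along row 0 of a 4 x 4 mesh.
detour = route_around_faults(4, (0, 0), (3, 0), faulty={(1, 0), (2, 0)})
print(detour)
```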