Online, one-shot learning capabilities of neuromorphic systems.

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. This field has evolved significantly since Carver Mead coined the term in the late 1980s, initially focusing on mimicking neural structures using analog VLSI circuits. The evolution trajectory has moved from simple silicon neurons to complex systems capable of implementing spiking neural networks (SNNs) with various learning mechanisms.

The early developmental phase (1980s-1990s) concentrated on creating basic neuromorphic elements, while the intermediate phase (2000s-early 2010s) saw the emergence of larger-scale neuromorphic chips and systems. The current advanced phase (mid-2010s-present) has witnessed remarkable breakthroughs with projects like IBM's TrueNorth, Intel's Loihi, and BrainScaleS, demonstrating unprecedented energy efficiency and computational capabilities for specific tasks.

Online, one-shot learning represents a critical frontier in neuromorphic computing development. Unlike conventional deep learning approaches, which require extensive training data and many iterations, biological systems can often learn from a single exposure, a capability that current AI systems struggle to replicate. The goal of implementing online, one-shot learning in neuromorphic systems is to enable real-time adaptation to novel stimuli without extensive retraining or high energy consumption.

This capability would revolutionize applications requiring immediate learning and adaptation, such as autonomous vehicles navigating unfamiliar environments, robotic systems performing novel tasks, or edge devices operating in dynamic conditions with limited power resources. The technical objectives include developing spike-timing-dependent plasticity (STDP) mechanisms that can rapidly form meaningful representations from limited examples while maintaining stability in previously learned patterns.
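Spike-timing-dependent plasticity adjusts a synapse according to the relative timing of pre- and postsynaptic spikes. The sketch below uses the common pair-based form with exponential timing windows. The amplitudes (`a_plus`, `a_minus`), time constants (`tau_plus`, `tau_minus`), and function names are illustrative assumptions, not the parameters of any particular chip. Raising the amplitudes is one crude way to let a single spike pairing produce a large, one-shot weight change, at the cost of stability.

```python
import math


def stdp_delta(dt, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for one spike pair, where dt = t_post - t_pre (ms)."""
    if dt > 0:
        # Pre fires before post (causal pairing): potentiate.
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:
        # Post fires before pre (anti-causal pairing): depress.
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0


def apply_stdp(w, pre_spikes, post_spikes, w_max=1.0, **params):
    """Sum contributions over all pre/post spike pairs, then clip to [0, w_max]."""
    dw = sum(
        stdp_delta(t_post - t_pre, **params)
        for t_pre in pre_spikes
        for t_post in post_spikes
    )
    return min(max(w + dw, 0.0), w_max)


# A single causal pairing (pre at 10 ms, post at 15 ms) strengthens the synapse;
# reversing the order weakens it.
print(apply_stdp(0.5, pre_spikes=[10.0], post_spikes=[15.0]))
print(apply_stdp(0.5, pre_spikes=[15.0], post_spikes=[10.0]))
```

Because the update depends only on spike times available at the synapse, a rule of this shape can run locally and online, without a separate training phase.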

The convergence of neuromorphic hardware with online, one-shot learning algorithms represents a promising direction toward achieving artificial general intelligence (AGI) systems that combine the efficiency of biological neural systems with the precision of digital computing. Current research trends indicate growing interest in hybrid approaches that integrate traditional deep learning techniques with neuromorphic principles to leverage the strengths of both paradigms.

The ultimate objective extends beyond mere technical achievement—it aims to create computing systems that can perceive, learn, and adapt with the efficiency and versatility of biological brains while overcoming their limitations in terms of speed and precision for specific computational tasks. This vision drives current research efforts toward developing neuromorphic systems capable of continuous, online learning from minimal examples across diverse application domains.

Market Analysis for Online Learning Systems

The neuromorphic computing market is experiencing significant growth, driven by the increasing demand for artificial intelligence systems capable of online, one-shot learning. Current market valuations place the global neuromorphic computing sector at approximately $3.2 billion in 2023, with projections indicating a compound annual growth rate of 24.7% through 2030. This remarkable expansion reflects the growing recognition of neuromorphic systems' potential to revolutionize machine learning applications across various industries.
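As a consistency check on these figures, compounding the 2023 estimate at the stated growth rate gives the market size the projection implies for 2030. This is plain arithmetic on the numbers quoted above, assuming a constant annual rate; it is not an independent forecast.

```python
def project(value, cagr, years):
    """Compound a starting value at a constant annual growth rate."""
    return value * (1 + cagr) ** years


# $3.2 billion in 2023 growing at 24.7% per year through 2030 (7 years).
size_2030 = project(3.2, 0.247, 2030 - 2023)
print(f"Implied 2030 market size: ${size_2030:.1f} billion")
```

Under that assumption, the quoted figures imply a market of roughly $15 billion by 2030.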

The market for online learning capabilities in neuromorphic systems is particularly robust in sectors requiring real-time adaptation and decision-making. Healthcare represents the largest market segment, accounting for roughly 28% of current applications, with implementations ranging from patient monitoring systems to adaptive medical imaging analysis. The financial services sector follows closely at 22%, leveraging neuromorphic systems for fraud detection and algorithmic trading that can adapt to market fluctuations in real time.

Autonomous vehicles constitute another rapidly expanding market segment, growing at 31% annually, as manufacturers seek systems capable of learning from new road conditions or obstacles with minimal training examples. This one-shot learning capability significantly reduces the data requirements that have traditionally bottlenecked AI implementation in safety-critical applications.

Geographically, North America leads the market with approximately 42% share, followed by Europe (27%) and Asia-Pacific (24%). However, the Asia-Pacific region is demonstrating the fastest growth rate at 29.3% annually, driven primarily by substantial investments in neuromorphic research and development in China, Japan, and South Korea.

Consumer demand for more intuitive and adaptive personal electronics is creating a new market segment expected to grow by 35% annually over the next five years. This includes smart home devices, wearables, and personal assistants that can learn user preferences from minimal interactions, significantly enhancing user experience while reducing computational overhead.

Enterprise adoption of neuromorphic systems with online learning capabilities is accelerating, with 63% of Fortune 500 companies either implementing or actively exploring these technologies. This represents a 17% increase from just two years ago, indicating rapidly growing market acceptance. The primary drivers cited by enterprise customers are improved adaptability to changing conditions (82%), reduced training time (76%), and lower energy consumption (68%).

Current Limitations in One-Shot Learning Technologies

Despite significant advancements in neuromorphic computing systems, current one-shot learning implementations face several critical limitations. The primary challenge lies in the fundamental trade-off between plasticity and stability. Neuromorphic systems designed for rapid learning from single examples often struggle to maintain previously acquired knowledge, leading to catastrophic forgetting when new information is introduced. This stability-plasticity dilemma remains particularly pronounced in hardware implementations where synaptic weight adjustments must balance immediate adaptation with long-term memory preservation.
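The dilemma shows up even in a deliberately minimal model: a single stored weight vector (a hypothetical prototype) that is moved toward a new example by a learning rate `eta`. The function and pattern names below are illustrative assumptions. A large `eta` captures the new pattern in one shot but overwrites the old one; a small `eta` preserves the old pattern but barely registers the new one.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def one_shot_update(w, x, eta):
    """Move weight vector w toward a single example x by fraction eta (0 < eta <= 1)."""
    return [(1 - eta) * wi + eta * xi for wi, xi in zip(w, x)]


old_pattern = [1.0, 1.0, 0.0, 0.0]  # previously learned pattern (hypothetical)
new_pattern = [0.0, 0.0, 1.0, 1.0]  # novel pattern presented exactly once

for eta in (0.1, 0.5, 0.9):
    w = one_shot_update(old_pattern, new_pattern, eta)
    print(f"eta={eta}: match old={cosine(w, old_pattern):.2f}, "
          f"match new={cosine(w, new_pattern):.2f}")
```

No single value of `eta` serves both goals, which is why one-shot learning rules in hardware must trade plasticity against stability.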

Energy efficiency presents another significant constraint. While neuromorphic architectures generally offer power advantages over traditional computing paradigms, the computational demands of online one-shot learning algorithms can substantially increase energy consumption. Current systems typically require multiple processing cycles and complex weight update mechanisms to effectively encode new information from limited examples, diminishing the energy benefits that neuromorphic computing promises.

Hardware limitations further impede progress in this domain. Existing neuromorphic chips offer limited precision in synaptic weight representation, restricting the fidelity of learned representations. Additionally, the stochastic nature of emerging memory technologies like memristors introduces variability in learning outcomes, compromising reliability in one-shot learning scenarios where each example carries significant informational weight.
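The effect of limited weight precision on one-shot updates is easy to reproduce. The sketch below stores weights in [0, 1] at a hypothetical 4-bit resolution (16 levels, step 1/15). A single update of 0.02, smaller than half a quantization step, is simply rounded away. Stochastic rounding, a common mitigation that is not discussed in the text above, preserves the update on average but makes each individual outcome random, which echoes the reliability concern raised for stochastic memory devices.

```python
import math
import random


def quantize(w, bits):
    """Round w (clipped to [0, 1]) to the nearest of 2**bits evenly spaced levels."""
    levels = 2 ** bits - 1
    return round(min(max(w, 0.0), 1.0) * levels) / levels


def stochastic_quantize(w, bits, rng):
    """Round down or up at random, with P(up) equal to the fractional part."""
    levels = 2 ** bits - 1
    scaled = min(max(w, 0.0), 1.0) * levels
    lower = math.floor(scaled)
    return (lower + (1 if rng.random() < scaled - lower else 0)) / levels


BITS = 4
w = quantize(0.4, BITS)  # stored weight, exactly 6/15
update = 0.02            # one-shot update smaller than half a quantization step

# Deterministic rounding maps w + update back to w, so the update is lost.
print("deterministic:", quantize(w + update, BITS))

rng = random.Random(0)
samples = [stochastic_quantize(w + update, BITS, rng) for _ in range(20000)]
# The average over many trials approaches w + update; any single trial does not.
print("stochastic mean:", sum(samples) / len(samples))
```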

The generalization capability of current one-shot learning implementations remains suboptimal. Systems trained on single examples frequently overfit to specific features of the training instance rather than capturing generalizable concepts. This limitation becomes particularly evident when neuromorphic systems encounter variations in input patterns that deviate from the original training example, resulting in poor recognition performance across diverse real-world conditions.

Scalability issues present additional barriers. As neuromorphic architectures grow in size to accommodate more complex tasks, the coordination of learning across distributed synaptic elements becomes increasingly challenging. Current approaches struggle to maintain coherent learning when scaling to larger networks, often resulting in degraded performance or requiring prohibitively complex control mechanisms.

Temporal dynamics pose unique challenges for online one-shot learning in neuromorphic systems. The precise timing of spike-based information processing is critical for effective learning, yet maintaining temporal precision across varying operational conditions remains difficult. Current implementations often fail to adequately capture temporal relationships in data when learning from single examples, limiting their effectiveness in sequence-dependent applications.

Current Approaches to Online Learning Implementation

01 Neuromorphic architectures for one-shot learning

Neuromorphic systems can be designed with specialized architectures that enable one-shot learning capabilities. These architectures often mimic the brain's neural networks and synaptic plasticity mechanisms to allow for rapid learning from limited examples. By incorporating features such as spike-timing-dependent plasticity and parallel processing units, these systems can recognize patterns and adapt to new information with minimal training data, making them suitable for online learning scenarios where continuous adaptation is required.

- Neuromorphic architectures for one-shot learning: Beyond spike-timing-dependent plasticity, these architectures may incorporate memory-augmented neural networks and attention mechanisms, allowing them to adapt rapidly to new information from minimal training examples in online settings where data availability is limited.

- Online learning algorithms for neuromorphic hardware: Specialized algorithms have been developed to enable online, one-shot learning in neuromorphic systems. These algorithms allow the system to continuously update its knowledge base as new data becomes available, without requiring extensive retraining. They often incorporate techniques such as incremental learning, transfer learning, and meta-learning to facilitate rapid adaptation to new information with minimal computational resources and energy consumption.

- Memory mechanisms for efficient one-shot learning: Neuromorphic systems can implement specialized memory mechanisms that support one-shot learning capabilities. These include episodic memory structures, working memory components, and associative memory networks that can rapidly encode and retrieve information from limited examples. By efficiently storing and accessing relevant information, these memory mechanisms enable the system to learn new concepts or adapt to changing environments with minimal training data.

- Spiking neural networks for online learning: Spiking neural networks (SNNs) offer unique advantages for implementing online, one-shot learning in neuromorphic systems. These networks process information through discrete spikes, similar to biological neurons, allowing for efficient temporal information processing. SNNs can be designed with adaptive synaptic plasticity rules that enable rapid learning from few examples, making them particularly suitable for real-time applications requiring continuous adaptation to new data.

- Applications of neuromorphic one-shot learning systems: Neuromorphic systems with one-shot learning capabilities have diverse applications across multiple domains. These include computer vision tasks such as object recognition and scene understanding, natural language processing, autonomous navigation, robotics, and adaptive control systems. The ability to learn from limited examples makes these systems particularly valuable in environments where data collection is difficult or expensive, or where rapid adaptation to new conditions is required.
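The spike-based processing described in the list above can be illustrated with a leaky integrate-and-fire (LIF) neuron, one of the simplest and most widely used spiking models. The sketch integrates the membrane equation with a forward-Euler step; the time constant, threshold, and input values are illustrative assumptions rather than values from any specific neuromorphic chip.

```python
def simulate_lif(input_current, dt=1.0, tau_m=20.0, v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Return the time-step indices at which a LIF neuron spikes.

    Membrane dynamics: tau_m * dv/dt = -(v - v_rest) + I(t), integrated with forward Euler.
    """
    v = v_rest
    spike_steps = []
    for step, i_in in enumerate(input_current):
        v += (dt / tau_m) * (-(v - v_rest) + i_in)
        if v >= v_thresh:
            # Emit a discrete spike and reset the membrane potential.
            spike_steps.append(step)
            v = v_reset
    return spike_steps


# A constant suprathreshold input produces a regular spike train...
print(simulate_lif([1.5] * 100))
# ...while an input whose steady state (0.9) stays below threshold never fires.
print(simulate_lif([0.9] * 100))
```

Because the neuron produces output only when its threshold is crossed, downstream computation can be triggered by these discrete events rather than by a global clock.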

02 Memory-based approaches for efficient online learning

Memory-based approaches in neuromorphic systems enhance one-shot learning capabilities by efficiently storing and retrieving information. These systems utilize specialized memory structures such as content-addressable memory or associative memory to quickly access relevant information based on partial inputs. By implementing memory mechanisms that support rapid encoding and retrieval of patterns, these neuromorphic systems can perform online learning tasks with minimal examples while maintaining computational efficiency and reducing the need for extensive retraining.

03 Bio-inspired learning algorithms for neuromorphic computing

Bio-inspired learning algorithms enhance the one-shot learning capabilities of neuromorphic systems by mimicking biological learning processes. These algorithms incorporate principles from neuroscience such as Hebbian learning, homeostatic plasticity, and neuromodulation to enable rapid adaptation to new information. By implementing these biologically plausible mechanisms, neuromorphic systems can achieve efficient online learning with minimal examples, allowing them to continuously update their knowledge base and adapt to changing environments in real-time applications.

04 Hardware implementations for accelerated one-shot learning

Specialized hardware implementations can significantly enhance the performance of neuromorphic systems for one-shot learning applications. These implementations include custom analog/digital circuits, memristive devices, and specialized processors designed to efficiently execute neural network operations. By optimizing hardware for neuromorphic computing, these systems can achieve the low-power, high-speed processing necessary for online learning scenarios, enabling real-time adaptation and inference with minimal training examples while maintaining energy efficiency.

05 Integration of neuromorphic systems with traditional computing for enhanced learning

Hybrid approaches that integrate neuromorphic systems with traditional computing architectures can enhance one-shot learning capabilities. These systems combine the strengths of neuromorphic computing (parallel processing, energy efficiency, and adaptive learning) with conventional computing methods (precise calculations and established algorithms). By leveraging this integration, these hybrid systems can perform complex online learning tasks more efficiently, enabling applications such as real-time pattern recognition, adaptive control systems, and intelligent sensors that can learn from limited examples while maintaining computational efficiency.
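The associative-memory and Hebbian ideas from the memory-based and bio-inspired approaches above can be illustrated together with a classic Hopfield-style network, chosen here as a textbook example rather than as the method of any system surveyed. Each pattern is stored in a single Hebbian outer-product pass (one shot, no iterative training), and a partial cue, with unknown entries set to 0, is completed by repeated threshold updates. The patterns and function names are hypothetical.

```python
def store(patterns):
    """Hebbian one-shot storage: W_ij = (1/n) * sum_p p_i * p_j, with zero diagonal."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w, cue, max_steps=10):
    """Synchronously update s <- sign(W s) until the state stops changing."""
    s = list(cue)
    for _ in range(max_steps):
        h = [sum(w_ij * s_j for w_ij, s_j in zip(row, s)) for row in w]
        new_s = [1 if h_i >= 0 else -1 for h_i in h]
        if new_s == s:
            break
        s = new_s
    return s


# Two orthogonal bipolar patterns, each stored after a single presentation.
pattern_a = [1, 1, 1, 1, -1, -1, -1, -1]
pattern_b = [1, -1, 1, -1, 1, -1, 1, -1]
weights = store([pattern_a, pattern_b])

# Cue with only the first half of pattern_a known (0 = unknown).
print(recall(weights, [1, 1, 1, 1, 0, 0, 0, 0]))
```

The classic Hebbian rule stores only about 0.14 n random patterns before recall degrades, a concrete instance of the capacity and scalability limits discussed earlier.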

Leading Organizations in Neuromorphic Computing

The neuromorphic systems market for online, one-shot learning is in its early growth phase, characterized by increasing research activity but limited commercial deployment. The global market is projected to expand significantly as neuromorphic computing gains traction in edge AI applications. Technologically, the field shows varying maturity levels across key players: IBM leads with its TrueNorth and subsequent neuromorphic architectures, while academic institutions like Tsinghua University and Zhejiang University contribute fundamental research. Samsung and Intel are advancing hardware implementations, with Google focusing on algorithmic approaches. Companies like Syntiant and HRL Laboratories are developing specialized neuromorphic chips for edge applications. The ecosystem reflects a collaborative environment between established technology corporations, research universities, and specialized startups working to overcome the challenges of efficient one-shot learning in resource-constrained environments.

Key Innovations in One-Shot Learning Algorithms

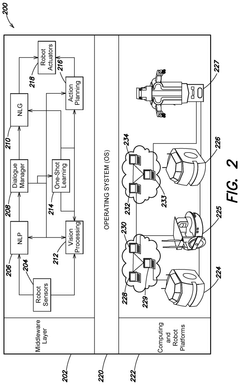

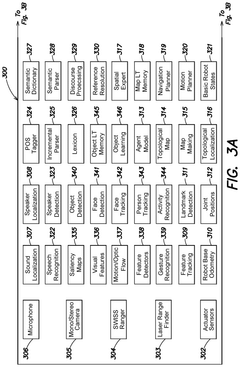

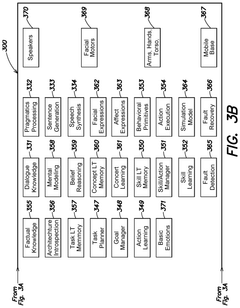

- Intelligent systems can learn new tasks through one-shot instruction from a human, utilizing natural language input to acquire knowledge immediately and apply it without prior training, leveraging components like Natural Language Processing, Task Learning, and Knowledge Sharing subsystems to generate executable scripts.

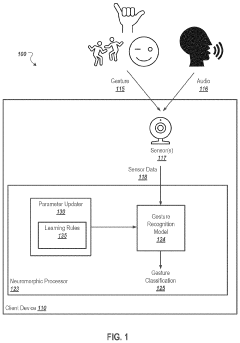

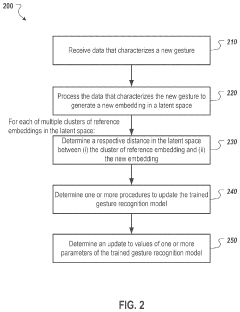

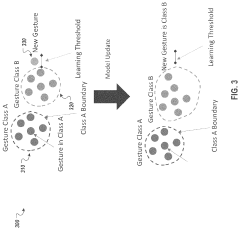

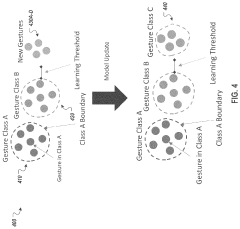

- A self-learning gesture recognition system employing a neuromorphic processor that continuously updates a gesture recognition model using learning rules, allowing for real-time adaptation and accurate recognition of gestures through a spiking neural network (SNN) and event-based data processing, enabling efficient online learning on edge devices.

Energy Efficiency Considerations in Neuromorphic Computing

Neuromorphic computing systems, inspired by the brain's architecture, offer significant energy efficiency advantages over traditional von Neumann architectures, particularly for online, one-shot learning applications. The fundamental energy efficiency of these systems stems from their event-driven processing paradigm, where computation occurs only when necessary, eliminating the constant power draw characteristic of clock-driven conventional systems. This approach can reduce energy consumption by orders of magnitude for sparse temporal data processing.
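The saving from event-driven processing can be estimated by counting synaptic operations. In the idealized sketch below, with hypothetical layer sizes, a clocked dense implementation evaluates every synapse at every time step, whereas an event-driven one does work only when an input spikes, so its operation count scales with activity. The per-operation energy of 23.6 pJ is the Loihi figure quoted in this section, applied to both cases purely for comparison; real clocked hardware has different per-operation costs.

```python
def synaptic_ops(spike_trains, fan_out):
    """Return (dense_ops, event_ops) for a layer driven by binary spike trains.

    dense_ops: every input synapse is evaluated at every time step.
    event_ops: synapses are evaluated only when their input neuron spikes.
    """
    n_inputs = len(spike_trains)
    n_steps = len(spike_trains[0])
    dense_ops = n_inputs * n_steps * fan_out
    event_ops = sum(sum(train) for train in spike_trains) * fan_out
    return dense_ops, event_ops


# Hypothetical layer: 100 inputs, 256 targets each, 1000 time steps,
# each input spiking once every 50 steps (2% activity).
trains = [[1 if t % 50 == 0 else 0 for t in range(1000)] for _ in range(100)]
dense_ops, event_ops = synaptic_ops(trains, fan_out=256)

ENERGY_PER_OP_J = 23.6e-12  # assumed, applied to both cases for comparison only
print(f"dense: {dense_ops} ops, {dense_ops * ENERGY_PER_OP_J * 1e6:.1f} uJ")
print(f"event-driven: {event_ops} ops, {event_ops * ENERGY_PER_OP_J * 1e6:.1f} uJ")
print(f"reduction: {dense_ops / event_ops:.0f}x")
```

In this model the reduction equals the inverse of the activity level (here 1/0.02 = 50x), which is why the largest gains appear on sparse temporal data.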

The implementation of local learning rules, such as Spike-Timing-Dependent Plasticity (STDP), further enhances energy efficiency because weight updates occur directly at the synapse using only locally available spike-timing information, rather than requiring weights to be fetched from and written back to a separate memory. This significantly reduces data movement, a major source of energy consumption in computing systems.

Recent neuromorphic hardware has reported notable energy-efficiency figures. IBM's TrueNorth was reported at roughly 26 pJ per synaptic event, and Intel's Loihi at around 23.6 pJ per synaptic spike operation. Such figures are often presented as improvements of several orders of magnitude over GPU implementations of neural networks for similar tasks, although the size of the gain depends heavily on workload sparsity and on how operations are counted.

For online, one-shot learning scenarios, neuromorphic systems exhibit particularly favorable energy profiles. The ability to learn from single examples without extensive retraining cycles dramatically reduces the computational burden and associated energy costs. Reported results suggest that neuromorphic implementations can achieve up to 1000x energy reduction for online learning tasks compared with conventional deep learning approaches that require multiple training iterations.

Material innovations are further driving energy efficiency improvements. The development of novel memristive devices enables non-volatile memory with extremely low switching energies (femtojoules per switch), allowing for persistent weight storage without static power consumption. These devices, when integrated into neuromorphic architectures, create synapse-like structures that maintain learned patterns with minimal energy expenditure during idle periods.

Despite these advantages, challenges remain in optimizing the energy-accuracy tradeoff. Current neuromorphic systems often sacrifice some computational precision to achieve higher energy efficiency, which can impact learning performance in complex tasks. Research efforts are increasingly focused on developing adaptive precision mechanisms that dynamically allocate energy resources based on task requirements, potentially offering optimal energy utilization without compromising learning capabilities.

Hardware-Software Co-Design Challenges

The effective implementation of online, one-shot learning in neuromorphic systems requires seamless integration between hardware architectures and software frameworks. This integration presents significant challenges due to the fundamental differences between traditional computing paradigms and brain-inspired neuromorphic approaches. The hardware components must be specifically designed to support the rapid weight updates and adaptive mechanisms required for one-shot learning, while software frameworks need to efficiently utilize these specialized hardware capabilities.

One primary challenge lies in developing hardware that can support the temporal dynamics necessary for spike-timing-dependent plasticity (STDP) and other biologically plausible learning rules. Current neuromorphic chips often struggle with implementing the precise timing mechanisms and local memory access patterns required for online learning without significant power consumption or latency issues. The memory-compute co-location paradigm, essential for neuromorphic efficiency, further complicates the design process as it requires rethinking traditional von Neumann architectures.
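One widely studied way to co-locate memory and computation is a resistive crossbar. Synaptic weights are stored as device conductances (for example in memristors), and a vector-matrix product is computed in place: by Ohm's law each device passes current V_i·G_ij, and by Kirchhoff's current law each column sums those currents. The idealized sketch below ignores wire resistance, device nonlinearity, and variability, and it uses a differential pair of conductances to represent signed weights. The function names and the conductance scale `g_max` are illustrative assumptions.

```python
def crossbar_currents(conductances, voltages):
    """Column currents I_j = sum_i V_i * G_ij (Ohm's law per device, Kirchhoff's law per column)."""
    n_cols = len(conductances[0])
    return [sum(v * row[j] for v, row in zip(voltages, conductances)) for j in range(n_cols)]


def signed_crossbar_vmm(weights, inputs, g_max=1e-6):
    """Compute inputs @ weights for weights in [-1, 1] using two non-negative crossbars.

    Positive parts go to a G+ array and negative parts to a G- array;
    the output is the difference of the column currents, rescaled by g_max.
    """
    g_plus = [[g_max * max(w, 0.0) for w in row] for row in weights]
    g_minus = [[g_max * max(-w, 0.0) for w in row] for row in weights]
    i_plus = crossbar_currents(g_plus, inputs)
    i_minus = crossbar_currents(g_minus, inputs)
    return [(ip - im) / g_max for ip, im in zip(i_plus, i_minus)]


# Rows = inputs (word lines), columns = outputs (bit lines); inputs are voltages in volts.
weights = [[0.5, -1.0],
           [-0.25, 0.75]]
print(signed_crossbar_vmm(weights, [0.2, 0.4]))
```

The weights never leave the array during the multiply, which is the property that makes crossbars attractive for in-place learning and also why device-level effects ignored here become the main design concern.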

Software frameworks face equally challenging obstacles in efficiently mapping neural algorithms to specialized neuromorphic hardware. The abstraction layers between high-level learning algorithms and low-level hardware implementations often introduce performance bottlenecks or limit the exploitation of hardware-specific optimizations. Programming models for neuromorphic systems remain immature compared to those for conventional computing platforms, creating a significant barrier for algorithm developers without specialized hardware knowledge.

The heterogeneity of neuromorphic hardware implementations further exacerbates these challenges. Different neuromorphic platforms employ varying approaches to neuron models, synaptic plasticity, and network connectivity, making it difficult to develop portable software solutions. This fragmentation in the ecosystem necessitates either platform-specific optimizations or compromises in performance and functionality when aiming for cross-platform compatibility.

Energy efficiency considerations add another dimension to the co-design challenge. While neuromorphic hardware promises significant energy advantages over conventional computing for neural processing, implementing online learning capabilities can substantially increase power requirements. Balancing the energy budget between inference and learning operations requires careful hardware-software co-optimization strategies that consider both algorithmic efficiency and circuit-level power management techniques.

Finally, validation and debugging tools for neuromorphic systems remain underdeveloped compared to traditional computing environments. The complex, parallel, and event-driven nature of neuromorphic computation makes it difficult to trace execution paths and identify performance bottlenecks or functional errors, significantly complicating the co-design process and extending development cycles for new learning capabilities.