How can neuromorphic systems learn continuously without catastrophic forgetting?

SEP 3, 2025 · 9 MIN READ

Neuromorphic Computing Evolution and Learning Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. This field has evolved significantly since its conceptual inception in the late 1980s by Carver Mead, who first proposed using very-large-scale integration (VLSI) systems to mimic neurobiological architectures. The evolution trajectory has moved from simple analog circuit implementations to sophisticated hybrid systems incorporating both analog and digital components that more accurately emulate neural processing.

The fundamental objective of neuromorphic computing is to develop computational systems that process information in a manner analogous to biological neural networks, characterized by massive parallelism, event-driven processing, and co-located memory and computation. These systems aim to overcome the von Neumann bottleneck inherent in traditional computing architectures, where the separation of memory and processing units creates inefficiencies in data-intensive applications.

A critical learning objective in neuromorphic computing research is continuous learning without catastrophic forgetting. Biological neural systems demonstrate remarkable plasticity, acquiring new knowledge while preserving previously learned information. In contrast, artificial neural networks typically suffer from catastrophic forgetting: training on a new task overwrites weights that earlier tasks depend on, sharply degrading performance on those tasks.

Recent advancements in neuromorphic hardware platforms such as IBM's TrueNorth, Intel's Loihi, and BrainChip's Akida have established important milestones in the field. These platforms implement various forms of spike-based computing and on-chip learning mechanisms that more closely approximate biological neural processing. The progression from purely academic research to commercial applications signals the growing maturity of neuromorphic technologies.

The technical evolution in this domain has been marked by several key developments: the implementation of spike-timing-dependent plasticity (STDP), the development of efficient spiking neural network (SNN) algorithms, and the creation of specialized hardware that supports sparse, event-driven computation. These advances have collectively pushed the field toward more biologically plausible and computationally efficient systems.

Looking forward, the primary learning objectives for neuromorphic systems include developing more sophisticated on-chip learning algorithms that can support continual learning, improving energy efficiency to enable deployment in edge computing scenarios, and creating more seamless interfaces between neuromorphic hardware and conventional computing systems to facilitate broader adoption across various application domains.

Market Analysis for Continuous Learning AI Systems

The continuous learning AI systems market is experiencing rapid growth, driven by the increasing demand for neuromorphic computing solutions that can adapt and learn without suffering from catastrophic forgetting. Current market estimates value this segment at approximately $2.3 billion in 2023, with projections indicating a compound annual growth rate of 27% through 2030, potentially reaching $12.5 billion by the end of the decade.
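
The projection above can be sanity-checked with compound-growth arithmetic. The sketch below uses only the figures quoted in the paragraph ($2.3 billion in 2023, 27% annual growth, $12.5 billion in 2030); the function names are illustrative and not taken from any market model.

```python
def project(value, rate, years):
    """Compound a starting value forward at a fixed annual growth rate."""
    return value * (1.0 + rate) ** years


def implied_cagr(start, end, years):
    """Annual growth rate that takes `start` to `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


start_2023 = 2.3     # USD billions, from the estimate above
target_2030 = 12.5   # USD billions, from the projection above
years = 2030 - 2023  # seven compounding periods

projected_2030 = project(start_2023, 0.27, years)
rate_needed = implied_cagr(start_2023, target_2030, years)

print(f"2030 value at 27% CAGR: ${projected_2030:.1f}B")
print(f"CAGR implied by $12.5B: {rate_needed:.1%}")
```

Compounding at 27% for seven years gives roughly $12.3 billion, and reaching $12.5 billion implies a rate of about 27.4%, so the two quoted figures are consistent to within rounding.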

Healthcare represents the largest vertical market for continuous learning systems, accounting for roughly 31% of current implementations. These systems are particularly valuable for analyzing patient data over time, adapting to new medical research, and personalizing treatment protocols without losing previously acquired knowledge. The ability to incrementally learn from new medical cases while maintaining expertise on rare conditions addresses a critical need in clinical decision support systems.

Manufacturing and industrial automation constitute the second-largest market segment at 24%, where continuous learning systems enable predictive maintenance that evolves with changing equipment conditions and production environments. These systems can detect anomalies while continuously updating their understanding of "normal" operations across seasonal variations and equipment aging.

Financial services (19%) and autonomous vehicles (15%) represent the next significant market segments. In finance, continuous learning systems adapt to evolving market conditions and fraud patterns without forgetting established risk models. For autonomous vehicles, these systems must incorporate new driving scenarios while maintaining safety protocols learned from millions of previous driving miles.

Market research indicates that enterprise adoption faces three primary barriers: implementation complexity (cited by 68% of potential customers), integration with legacy systems (54%), and concerns about reliability when systems continuously update (47%). These challenges have created a growing market for middleware solutions that facilitate the deployment of continuous learning systems within existing IT infrastructures.

Geographically, North America leads adoption with 42% market share, followed by Europe (27%), Asia-Pacific (23%), and rest of world (8%). However, the Asia-Pacific region shows the fastest growth rate at 34% annually, driven by significant investments in AI infrastructure in China, Japan, and South Korea.

Customer surveys reveal that organizations implementing continuous learning systems report average operational efficiency improvements of 23% compared to traditional AI systems that require periodic complete retraining. This efficiency gain represents a compelling return on investment that is accelerating market adoption despite the technical challenges involved in implementing neuromorphic computing solutions.

Current Challenges in Neuromorphic Continuous Learning

Neuromorphic computing systems face significant challenges in achieving continuous learning capabilities without catastrophic forgetting. The primary obstacle lies in the stability-plasticity dilemma, where systems must balance the ability to acquire new knowledge while preserving previously learned information. Traditional artificial neural networks typically overwrite existing knowledge when learning new tasks, resulting in catastrophic forgetting that severely limits their practical applications in dynamic environments.

Current neuromorphic architectures lack memory consolidation mechanisms comparable to those of biological systems. While the human brain transfers information from short-term to long-term memory through complex synaptic processes, artificial systems have no equivalent means of selective memory retention and gradual knowledge integration. This limitation makes it difficult for neuromorphic systems to adapt to new information while maintaining performance on previously learned tasks.

Resource constraints present another significant challenge. Neuromorphic systems designed for edge computing applications face severe limitations in power consumption, memory capacity, and computational resources. These constraints make implementing sophisticated continual learning algorithms particularly difficult, as many current approaches require substantial memory overhead to store previous examples or model parameters, contradicting the efficiency goals of neuromorphic computing.

The absence of standardized benchmarks and evaluation metrics specifically designed for continuous learning in neuromorphic systems further complicates progress. Researchers employ diverse and often incomparable methodologies to assess performance, making it challenging to objectively evaluate and compare different approaches. This lack of standardization hinders collaborative advancement and slows the overall pace of innovation in the field.

Current synaptic plasticity models implemented in hardware neuromorphic systems often fail to capture the complexity and adaptability of biological synapses. While biological systems utilize multiple timescales of plasticity and neuromodulation to regulate learning, hardware implementations typically rely on simplified models like Spike-Timing-Dependent Plasticity (STDP) that lack the sophistication needed for effective continuous learning without forgetting.
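
To make the "simplified model" concrete, here is a minimal sketch of pair-based STDP with exponential timing windows. The amplitudes, time constants, and weight bounds are illustrative assumptions, not values from any particular chip. Note that the rule has a single timescale and no importance or neuromodulatory term, so nothing in it protects a weight that an earlier task relies on.

```python
import math


def stdp_delta(dt_ms, a_plus=0.01, a_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Weight change for one spike pair, with dt_ms = t_post - t_pre.

    Pre-before-post (dt_ms > 0) potentiates; post-before-pre depresses.
    All parameter values are assumed for illustration.
    """
    if dt_ms > 0:
        return a_plus * math.exp(-dt_ms / tau_plus)
    if dt_ms < 0:
        return -a_minus * math.exp(dt_ms / tau_minus)
    return 0.0


def apply_stdp(weight, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Sum the pairwise updates over all spike pairs, then clip the weight."""
    for t_pre in pre_spikes:
        for t_post in post_spikes:
            weight += stdp_delta(t_post - t_pre)
    return min(w_max, max(w_min, weight))


# Causal pairing (pre at 10 ms, post at 15 ms) strengthens the synapse.
potentiated = apply_stdp(0.5, pre_spikes=[10.0], post_spikes=[15.0])
# Reversed ordering weakens it.
depressed = apply_stdp(0.5, pre_spikes=[15.0], post_spikes=[10.0])
print(potentiated, depressed)
```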

The integration of local learning rules with global optimization objectives represents another significant challenge. Neuromorphic systems generally employ local learning rules for efficiency and biological plausibility, but these rules often struggle to optimize toward global objectives necessary for complex task learning. This disconnect between local adaptation and global optimization creates fundamental limitations in system capabilities.

Finally, current neuromorphic architectures lack effective attention and memory recall mechanisms that could help mitigate catastrophic forgetting. Biological systems utilize complex attention processes to selectively encode important information and recall relevant past experiences when facing new situations, capabilities that remain largely underdeveloped in existing neuromorphic implementations.

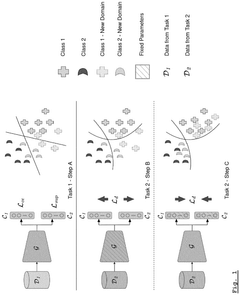

Existing Approaches to Mitigate Catastrophic Forgetting

01 Synaptic plasticity mechanisms for continuous learning

Neuromorphic systems implement biologically-inspired synaptic plasticity mechanisms to enable continuous learning without catastrophic forgetting. These systems mimic the brain's ability to adjust connection strengths between neurons based on activity patterns. By incorporating mechanisms like spike-timing-dependent plasticity (STDP) and homeostatic plasticity, these systems can learn new information while preserving previously acquired knowledge, effectively addressing the catastrophic forgetting problem in neural networks.

- Synaptic plasticity mechanisms for continuous learning: Neuromorphic systems implement synaptic plasticity mechanisms that mimic biological neural networks to enable continuous learning without catastrophic forgetting. These systems use adaptive synaptic weights and specialized memory structures that can be modified based on new inputs while preserving previously learned information. By incorporating both short-term and long-term plasticity mechanisms, these systems can balance stability and plasticity, allowing for incremental learning while maintaining performance on previously learned tasks.

- Dual-memory architectures to prevent forgetting: Dual-memory architectures separate recent and consolidated memories to prevent catastrophic forgetting in neuromorphic systems. These architectures typically include a fast-learning component for acquiring new information and a slow-changing component for preserving established knowledge. The system gradually transfers information from the fast to the slow memory through controlled consolidation processes, allowing continuous learning while maintaining stability of previously acquired knowledge. This approach mimics the complementary learning systems found in mammalian brains.

- Elastic weight consolidation techniques: Elastic weight consolidation techniques protect important parameters in neuromorphic networks during continuous learning. These methods identify and selectively preserve weights that are critical for previously learned tasks while allowing less important weights to be modified for new learning. By assigning importance metrics to different network parameters and applying constraints proportional to these metrics during training, the system can accommodate new information without disrupting existing knowledge. This approach enables efficient parameter sharing across multiple learning tasks.

- Replay and pseudo-rehearsal mechanisms: Replay and pseudo-rehearsal mechanisms periodically reintroduce previously learned patterns during new learning phases to maintain representation of old knowledge. These techniques generate synthetic examples that capture the essence of previously learned data, allowing the network to refresh its memory of past tasks without requiring storage of the original training data. By interleaving new learning with replayed experiences, neuromorphic systems can maintain performance across multiple sequential tasks and prevent catastrophic interference between old and new information.

- Sparse distributed representations and structural plasticity: Neuromorphic systems employ sparse distributed representations and structural plasticity to enable continuous learning. These approaches maintain network capacity by dynamically allocating resources for new information while preserving existing knowledge. Sparse coding ensures that only a small subset of neurons is active for any given input, reducing interference between representations of different concepts. Structural plasticity allows the network to grow new connections or prune unnecessary ones, creating separate pathways for different tasks while maintaining overall network efficiency.
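
The elastic weight consolidation item in the list above can be sketched as a quadratic penalty that anchors each weight to its old-task value in proportion to an importance score. This is a toy illustration: the importance values (standing in for a diagonal Fisher estimate), the quadratic new-task loss, and all function names are assumptions made for the example.

```python
def ewc_penalty(theta, theta_star, importance, lam):
    """0.5 * lam * sum_i importance_i * (theta_i - theta_star_i)^2."""
    return 0.5 * lam * sum(
        f * (t - s) ** 2 for t, s, f in zip(theta, theta_star, importance)
    )


def ewc_grad(theta, theta_star, importance, lam):
    """Gradient of the penalty with respect to each weight."""
    return [lam * f * (t - s) for t, s, f in zip(theta, theta_star, importance)]


def train_new_task(theta_star, target, importance, lam, lr=0.1, steps=200):
    """Gradient descent on a toy new-task loss 0.5 * ||theta - target||^2
    plus the EWC penalty, starting from the old-task weights."""
    theta = list(theta_star)
    for _ in range(steps):
        pen = ewc_grad(theta, theta_star, importance, lam)
        theta = [t - lr * ((t - g) + p) for t, g, p in zip(theta, target, pen)]
    return theta


old_weights = [1.0, 1.0]   # solution found for the earlier task
new_target = [0.0, 0.0]    # where the new task alone would pull the weights
importance = [10.0, 0.0]   # hypothetical: weight 0 matters to the old task, weight 1 does not

theta = train_new_task(old_weights, new_target, importance, lam=1.0)
print(theta)
```

The important weight settles near its old value (about 0.91) while the unimportant one moves freely to the new-task optimum, which is the selective protection the list item describes.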

02 Dual-memory architectures for knowledge preservation

Neuromorphic systems employ dual-memory architectures that separate short-term and long-term memory processes to prevent catastrophic forgetting. These architectures typically consist of a fast-learning component for acquiring new information and a slow-changing component for preserving existing knowledge. This approach allows the system to integrate new information while maintaining stability in previously learned representations, enabling continuous learning without disrupting established neural pathways.

03 Sparse coding and selective activation techniques

Neuromorphic systems utilize sparse coding and selective neuronal activation to mitigate catastrophic forgetting during continuous learning. By ensuring that only a small subset of neurons is active for any given input, these systems reduce interference between different learning tasks. Sparse representations help maintain separation between memory traces, allowing new information to be encoded without overwriting existing knowledge, thus enabling continuous learning while preserving previously acquired information.

04 Adaptive learning rates and regularization methods

Neuromorphic systems implement adaptive learning rates and regularization techniques to balance stability and plasticity during continuous learning. These methods dynamically adjust how quickly the system adapts to new information based on factors such as input novelty and network confidence. By incorporating techniques like elastic weight consolidation and synaptic intelligence, these systems can learn continuously while protecting important parameters associated with previously acquired knowledge, effectively preventing catastrophic forgetting.

05 Replay and generative mechanisms for memory consolidation

Neuromorphic systems employ memory replay and generative mechanisms to consolidate knowledge and prevent catastrophic forgetting. These approaches periodically reactivate patterns representing previously learned information, reinforcing important neural connections. By interleaving the learning of new information with the replay of existing knowledge, these systems can maintain performance on earlier tasks while acquiring new capabilities, enabling truly continuous learning without the typical degradation associated with catastrophic forgetting.
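
The interleaving idea can be sketched with a small fixed-size buffer. This example covers only the stored-example form of replay (not the generative variant), uses reservoir sampling so every past example has an equal chance of being retained, and treats the class name, capacity, and task labels as hypothetical.

```python
import random


class ReplayBuffer:
    """Fixed-capacity store of past examples, filled by reservoir sampling."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, example):
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(example)
        else:
            # Keep the new example with probability capacity / seen.
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = example

    def sample(self, k):
        return self.rng.sample(self.items, min(k, len(self.items)))


def interleaved_batch(new_examples, buffer, replay_k):
    """Mix fresh examples with replayed ones so old patterns are rehearsed."""
    batch = list(new_examples) + buffer.sample(replay_k)
    buffer.rng.shuffle(batch)
    return batch


buffer = ReplayBuffer(capacity=4)
for i in range(100):
    buffer.add(("task_a", i))  # stream of earlier-task examples

new_examples = [("task_b", i) for i in range(4)]
batch = interleaved_batch(new_examples, buffer, replay_k=2)
print(batch)
```

The buffer size is the memory overhead that replay trades for retention, which matters on resource-constrained neuromorphic hardware.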

Leading Organizations in Neuromorphic Computing Research

Neuromorphic computing is currently in an early growth phase, with the market expected to expand significantly as continuous learning capabilities advance. The global market size is projected to reach $8-10 billion by 2028, driven by applications in edge computing and AI. Technologically, solutions for catastrophic forgetting remain at varying maturity levels. Leading players include academic institutions like Tsinghua University and Northwestern Polytechnical University conducting foundational research, while companies such as Huawei, Samsung, and DeepMind are developing practical implementations. Industry leaders are exploring complementary approaches including sparse representations, memory replay mechanisms, and elastic weight consolidation to enable neuromorphic systems that can learn continuously while preserving previous knowledge.

Tsinghua University

Technical Solution: Tsinghua University has developed an innovative neuromorphic architecture called "DualNet" specifically designed to address catastrophic forgetting in continuous learning scenarios. Their approach implements a biologically-inspired dual-memory system that mimics the complementary learning systems in the human brain. The architecture features a fast-learning component that rapidly acquires new information and a more stable component that preserves long-term knowledge. Tsinghua's researchers have implemented novel synaptic plasticity mechanisms that dynamically adjust learning rates based on the importance of connections for previously learned tasks. Their system incorporates sparse coding techniques that reduce representational overlap between different tasks, minimizing interference during sequential learning. Additionally, they've developed a selective memory consolidation process that identifies and strengthens critical neural pathways associated with fundamental knowledge while allowing adaptation to new information. The architecture employs context-dependent gating mechanisms that activate different subnetworks based on task requirements, effectively compartmentalizing knowledge while maintaining transfer learning capabilities.

Strengths: Tsinghua's approach demonstrates excellent performance on diverse sequential learning benchmarks with minimal forgetting of earlier tasks. Their architecture shows strong biological plausibility while maintaining computational efficiency. Weaknesses: The system requires careful initialization and parameter tuning to achieve optimal performance across varied task domains, and the dual-memory approach introduces additional architectural complexity.
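
As a generic illustration of the fast/slow dual-memory idea (not Tsinghua's actual implementation, whose details are not given here), the sketch below keeps two weight vectors: a fast store that tracks new patterns quickly and a slow store that drifts toward the fast one at a much lower rate. The class name, learning rates, and patterns are all assumptions.

```python
class DualMemory:
    """Toy fast/slow memory pair with gradual consolidation."""

    def __init__(self, size, fast_lr=0.5, consolidation_rate=0.05):
        self.fast = [0.0] * size
        self.slow = [0.0] * size
        self.fast_lr = fast_lr
        self.consolidation_rate = consolidation_rate

    def learn(self, pattern):
        """Fast store moves a large step toward the current pattern."""
        self.fast = [f + self.fast_lr * (p - f) for f, p in zip(self.fast, pattern)]

    def consolidate(self):
        """Slow store moves a small step toward the fast store."""
        self.slow = [
            s + self.consolidation_rate * (f - s)
            for s, f in zip(self.slow, self.fast)
        ]


memory = DualMemory(size=2)
pattern_a, pattern_b = [1.0, 0.0], [0.0, 1.0]

for _ in range(60):   # long exposure to the earlier pattern
    memory.learn(pattern_a)
    memory.consolidate()

for _ in range(3):    # brief exposure to a new pattern
    memory.learn(pattern_b)
    memory.consolidate()

print("fast:", memory.fast)
print("slow:", memory.slow)
```

After the brief exposure, the fast store has largely switched to the new pattern while the slow store still mostly encodes the old one, which is the separation the dual-memory description relies on.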

Huawei Technologies Co., Ltd.

Technical Solution: Huawei has developed a neuromorphic computing architecture called "Da Vinci" that addresses continuous learning without catastrophic forgetting through a multi-tiered memory system. This system implements a biologically-inspired approach combining short-term and long-term memory components with selective consolidation mechanisms. Their solution employs synaptic plasticity models with metaplasticity features that allow synapses to adjust their learning rates based on importance. Huawei's architecture incorporates experience replay buffers that strategically retain and reintroduce critical past experiences during new learning phases, maintaining representation of previously learned tasks. Additionally, they've implemented sparse distributed representations that reduce interference between different memory traces, allowing for better preservation of existing knowledge while accommodating new information.

Strengths: Huawei's solution offers excellent scalability for large-scale AI applications and integrates well with their existing hardware ecosystem. Their approach demonstrates strong performance in edge computing scenarios with limited power consumption. Weaknesses: The system requires significant computational resources for experience replay mechanisms and may face challenges with extremely large continuous learning tasks requiring extensive memory resources.

Key Innovations in Synaptic Plasticity Mechanisms

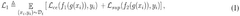

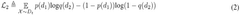

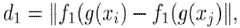

A computer-implemented method for domain incremental continual learning in an artificial neural network using maximum discrepancy loss to counteract representation drift

Patent (pending): EP4432164A1

Innovation

- The method employs a computer-implemented approach using maximum discrepancy loss and metric learning to regulate the encoder's representation clusters, ensuring that new tasks' representations are adapted according to previously learned tasks, thereby mitigating catastrophic forgetting by maintaining stable and plastic representations across tasks.

Computer-implemented method for domain incremental continual learning in an artificial neural network using maximum discrepancy loss to counteract representation drift

Patent (pending): US20240330673A1

Innovation

- The method employs a computer-implemented approach using maximum discrepancy loss between two classifiers in an artificial neural network architecture, which processes image data from autonomous vehicles, incorporating a memory buffer and an encoder to adapt representation clusters from new tasks according to previously learned tasks, thereby regulating the encoder to maintain stable representations across tasks.
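
The summary does not give the exact form of the discrepancy loss. One common choice in the classifier-discrepancy literature is the mean absolute difference between the two classifiers' softmax outputs, sketched below as an assumption rather than the patented formulation; the logits are made up for the example.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def discrepancy(logits_a, logits_b):
    """Mean absolute difference between two classifiers' class probabilities."""
    p, q = softmax(logits_a), softmax(logits_b)
    return sum(abs(x - y) for x, y in zip(p, q)) / len(p)


# Two classifier heads reading the same encoder features (hypothetical logits).
agree = discrepancy([5.0, 0.0, 0.0], [5.0, 0.0, 0.0])
disagree = discrepancy([5.0, 0.0, 0.0], [0.0, 5.0, 0.0])
print(agree, disagree)
```

A large value flags inputs on which the two heads disagree, which is the signal such a method can use to detect and counteract representation drift in the shared encoder.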

Hardware-Software Co-design for Neuromorphic Systems

Addressing catastrophic forgetting in neuromorphic systems requires an integrated approach where hardware and software components are designed in tandem. The co-design methodology enables the creation of systems that can learn continuously while preserving previously acquired knowledge. This approach leverages the unique characteristics of neuromorphic hardware, such as spike-based computation and local learning rules, to implement more efficient continual learning algorithms.

Hardware considerations in this co-design approach include the development of specialized memory structures that support synaptic consolidation. These structures can dynamically adjust synaptic plasticity based on the importance of connections for previously learned tasks. For example, memristive devices with multiple stable states can implement physical representations of synaptic importance, allowing for hardware-level protection of critical knowledge.

On the software side, algorithms must be adapted to work within the constraints of neuromorphic hardware while exploiting its advantages. This includes developing sparse coding techniques that reduce interference between representations of different tasks, and implementing local learning rules that can operate without global optimization procedures. Spike-timing-dependent plasticity (STDP) variants can be modified to incorporate task-specific consolidation signals.
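
One simple way to obtain sparse codes that reduce interference is k-winners-take-all, combined with updating only the weights of active units. The sketch below is a minimal illustration; the activation values, the choice of k, and the function names are assumptions.

```python
def k_winners(activations, k):
    """Indices of the k most active units (the sparse code)."""
    ranked = sorted(
        range(len(activations)), key=lambda i: activations[i], reverse=True
    )
    return set(ranked[:k])


def shared_fraction(code_a, code_b):
    """Overlap between two codes as intersection over union."""
    union = code_a | code_b
    return len(code_a & code_b) / len(union) if union else 0.0


def masked_update(weights, active, delta):
    """Local update applied only to units in the active set."""
    return [w + delta if i in active else w for i, w in enumerate(weights)]


task_a = [0.9, 0.8, 0.1, 0.2, 0.3, 0.1]  # hypothetical unit activations
task_b = [0.2, 0.1, 0.7, 0.9, 0.3, 0.2]

dense_overlap = shared_fraction(k_winners(task_a, 6), k_winners(task_b, 6))
sparse_overlap = shared_fraction(k_winners(task_a, 2), k_winners(task_b, 2))

weights = [0.0] * 6
weights = masked_update(weights, k_winners(task_a, 2), 0.5)
after_a = list(weights)
weights = masked_update(weights, k_winners(task_b, 2), 0.5)

print(dense_overlap, sparse_overlap)
print(after_a, weights)
```

With every unit active the two tasks share all units, whereas with two winners per task they share none, so the second task's updates leave the first task's weights untouched.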

The communication interface between hardware and software layers represents a critical component of the co-design approach. Real-time feedback mechanisms allow software algorithms to monitor the state of hardware synapses and adjust learning parameters accordingly. This bidirectional flow of information enables adaptive plasticity control, where learning rates are modulated based on detected interference patterns.

Energy efficiency emerges as a significant advantage of this co-design methodology. By implementing continual learning mechanisms directly in hardware, the system can avoid the high energy costs associated with frequent memory transfers between processing and storage units in conventional architectures. This is particularly important for edge computing applications where power constraints are strict.

Testing frameworks for hardware-software co-designed systems must evaluate both computational performance and learning stability across sequential tasks. Benchmarks should include metrics for forward transfer (how much learning earlier tasks improves performance on later, related tasks) and backward transfer (how learning new tasks changes performance on earlier ones, with negative values indicating forgetting), alongside traditional measures of accuracy and energy consumption.
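
Forward and backward transfer are often computed from an accuracy matrix in which entry `acc[i][j]` is the accuracy on task `j` after training through task `i`. The sketch below follows that common convention; the matrix values and the untrained-baseline accuracies are invented for illustration.

```python
def backward_transfer(acc):
    """Mean change in accuracy on each earlier task between the moment it
    was learned and the end of training. Negative means forgetting."""
    last = len(acc) - 1
    return sum(acc[last][j] - acc[j][j] for j in range(last)) / last


def forward_transfer(acc, baseline):
    """Mean gain on each task just before it is trained, relative to an
    untrained baseline model."""
    count = len(acc) - 1
    return sum(acc[j - 1][j] - baseline[j] for j in range(1, len(acc))) / count


# Rows: after training task 0, 1, 2. Columns: accuracy on task 0, 1, 2.
acc = [
    [0.90, 0.15, 0.12],
    [0.80, 0.88, 0.20],
    [0.70, 0.82, 0.91],
]
baseline = [0.10, 0.10, 0.10]  # hypothetical chance-level accuracy per task

bwt = backward_transfer(acc)
fwt = forward_transfer(acc, baseline)
print(f"backward transfer: {bwt:+.3f}")
print(f"forward transfer:  {fwt:+.3f}")
```

For this matrix the backward transfer is -0.13 (the system forgets) and the forward transfer is +0.075 (earlier learning helps slightly on unseen tasks).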

Hardware considerations in this co-design approach include the development of specialized memory structures that support synaptic consolidation. These structures can dynamically adjust synaptic plasticity based on the importance of connections for previously learned tasks. For example, memristive devices with multiple stable states can implement physical representations of synaptic importance, allowing for hardware-level protection of critical knowledge.

On the software side, algorithms must be adapted to work within the constraints of neuromorphic hardware while exploiting its advantages. This includes developing sparse coding techniques that reduce interference between representations of different tasks, and implementing local learning rules that can operate without global optimization procedures. Spike-timing-dependent plasticity (STDP) variants can be modified to incorporate task-specific consolidation signals.

Benchmarking Methodologies for Continuous Learning Performance

Evaluating the performance of continuous learning systems requires robust and standardized benchmarking methodologies that can accurately measure a system's ability to acquire new knowledge while retaining previously learned information. Current benchmarking approaches for neuromorphic systems often fail to adequately capture the nuances of catastrophic forgetting, necessitating more sophisticated evaluation frameworks.

A common starting point for evaluating continuous learning is the retention-plasticity trade-off, which quantifies how well a system maintains previous knowledge (retention) while adapting to new information (plasticity). It is typically measured by calculating accuracy on both old and new tasks after each learning phase, with particular attention to accuracy degradation on previously learned tasks.
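The per-phase measurement described above can be sketched with an accuracy matrix in which `acc[i][j]` is the accuracy on task `j` after learning phase `i`. The matrix layout, the function name, and the numbers are assumptions chosen for illustration; the values are made up and are not measured results.

```python
import numpy as np

def retention_plasticity(acc):
    """Return (phase, retention, plasticity) for each learning phase.

    plasticity: accuracy on the task just learned, acc[i, i].
    retention:  mean accuracy on all earlier tasks, or None for the first phase.
    """
    acc = np.asarray(acc, dtype=float)
    rows = []
    for i in range(acc.shape[0]):
        plasticity = float(acc[i, i])
        retention = float(acc[i, :i].mean()) if i > 0 else None
        rows.append((i, retention, plasticity))
    return rows

# Illustrative accuracies only: row = phase, column = task.
example_acc = [
    [0.90, 0.10, 0.10],
    [0.80, 0.85, 0.12],
    [0.70, 0.75, 0.88],
]
phases = retention_plasticity(example_acc)
```

In this toy matrix, retention drops from phase to phase while plasticity stays high, which is the signature of forgetting that the trade-off is meant to expose.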

Task-independent metrics have also emerged as critical evaluation tools, including Forward Transfer (FT), which measures how learning a task impacts performance on future tasks, and Backward Transfer (BT), which assesses how learning new tasks affects performance on previously learned ones. Negative BT corresponds to forgetting, while positive BT means that later learning improved earlier tasks. A stability-plasticity index (SPI) can combine these measures into a single value, offering a summary view of continuous learning capability, although the exact weighting of the components varies between studies.
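One common formulation of these two metrics averages differences in an accuracy matrix where `acc[i][j]` is the accuracy on task `j` after training on task `i`. The sketch below follows that formulation; the `baseline` argument (accuracy of an untrained model on each task) and the example numbers are illustrative assumptions, and no formula is given for SPI because the source does not define one.

```python
import numpy as np

def backward_transfer(acc):
    """Mean of (final accuracy on task j) - (accuracy right after learning j)."""
    acc = np.asarray(acc, dtype=float)
    n_tasks = acc.shape[0]
    if n_tasks < 2:
        raise ValueError("need at least two tasks")
    diffs = [acc[n_tasks - 1, j] - acc[j, j] for j in range(n_tasks - 1)]
    return float(np.mean(diffs))

def forward_transfer(acc, baseline):
    """Mean of (accuracy on task j before learning it) - (untrained baseline)."""
    acc = np.asarray(acc, dtype=float)
    n_tasks = acc.shape[0]
    if n_tasks < 2:
        raise ValueError("need at least two tasks")
    diffs = [acc[j - 1, j] - baseline[j] for j in range(1, n_tasks)]
    return float(np.mean(diffs))

# Made-up accuracies for three sequential tasks.
toy_acc = [
    [0.90, 0.10, 0.10],
    [0.80, 0.85, 0.12],
    [0.70, 0.75, 0.88],
]
bt = backward_transfer(toy_acc)
ft = forward_transfer(toy_acc, baseline=[0.10, 0.10, 0.10])
```

For this toy matrix BT is negative, reflecting the accuracy lost on the first two tasks by the end of training, while FT is close to zero.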

Benchmark datasets for continuous learning have evolved significantly, moving beyond traditional static datasets like MNIST and CIFAR. Specialized datasets such as CORe50, which features 50 objects across multiple sessions with varying conditions, and Stream-51, which provides natural temporal correlations in data streams, better simulate real-world continuous learning scenarios. These datasets enable more realistic evaluation of catastrophic forgetting in neuromorphic systems.

Evaluation protocols have also become more sophisticated, with three primary paradigms emerging: task-incremental learning (where task identifiers are provided during inference), domain-incremental learning (where data distributions change but the task remains the same), and class-incremental learning (where new classes are continuously added without task identifiers). Each paradigm presents unique challenges for neuromorphic systems and requires specific evaluation approaches.
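The practical difference between the three paradigms shows up at inference time, in which outputs the system is allowed to choose from. The sketch below makes that explicit for a single vector of output scores; the function name, the scenario labels, and the example scores are hypothetical.

```python
import numpy as np

def predict(logits, scenario, task_classes=None, seen_classes=None):
    """Pick a class index under one of three evaluation scenarios.

    "task":   task identity is given, so only that task's classes compete.
    "class":  no task identity, so all classes seen so far compete.
    "domain": the output space is fixed across domains, so all outputs compete.
    """
    logits = np.asarray(logits, dtype=float)
    if scenario == "task":
        if task_classes is None:
            raise ValueError("task scenario needs task_classes")
        candidates = list(task_classes)
    elif scenario == "class":
        if seen_classes is None:
            raise ValueError("class scenario needs seen_classes")
        candidates = list(seen_classes)
    elif scenario == "domain":
        candidates = list(range(len(logits)))
    else:
        raise ValueError(f"unknown scenario: {scenario}")
    return candidates[int(np.argmax(logits[candidates]))]

scores = [0.1, 0.9, 0.3, 0.8]  # classes 0-1 belong to task A, 2-3 to task B
task_pred = predict(scores, "task", task_classes=[2, 3])
class_pred = predict(scores, "class", seen_classes=[0, 1, 2, 3])
```

With the same scores, the task-incremental prediction stays within task B, whereas the class-incremental prediction can be pulled toward a class from task A, which is one reason class-incremental learning is generally the harder setting.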

Time-based performance metrics are particularly relevant for neuromorphic computing, measuring not just accuracy but also learning speed, adaptation time, and energy efficiency during continuous operation. These metrics acknowledge the real-time processing advantages that neuromorphic systems potentially offer over traditional computing architectures in continuous learning scenarios.
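Two of these time-based quantities can be computed from simple logs, as in the sketch below. It assumes an accuracy value recorded after each training step and a total energy reading for a batch of processed samples; both function names and the threshold-based definition of adaptation time are illustrative choices, not standardized definitions.

```python
def adaptation_steps(accuracy_curve, threshold):
    """Number of training steps until accuracy first reaches the threshold.

    Returns None if the threshold is never reached.
    """
    for step, accuracy in enumerate(accuracy_curve, start=1):
        if accuracy >= threshold:
            return step
    return None

def energy_per_sample(total_energy_joules, n_samples):
    """Average energy spent per processed sample, in joules."""
    if n_samples <= 0:
        raise ValueError("n_samples must be positive")
    return total_energy_joules / n_samples
```

Reporting both alongside accuracy makes it possible to compare systems that reach similar final accuracy but differ in how quickly or how cheaply they adapt.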
