Neuromorphic computing for smart sensor fusion at the edge.

SEP 3, 2025 · 10 MIN READ

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. This approach emerged in the late 1980s when Carver Mead introduced the concept of using analog circuits to mimic neurobiological architectures. Since then, neuromorphic computing has evolved through several distinct phases, each marked by significant technological advancements and expanding application domains.

The initial phase focused primarily on hardware implementations that could emulate basic neural functions. These early systems demonstrated the potential for energy efficiency but were limited in scale and practical applications. The second phase, beginning in the early 2000s, saw increased integration with digital technologies and the development of hybrid systems that combined the efficiency of analog computation with the precision of digital processing.

The current phase of neuromorphic computing is characterized by the convergence of advanced materials science, sophisticated neural network algorithms, and innovative architectural designs. This convergence has enabled the development of systems that more closely approximate the parallel processing, adaptability, and energy efficiency of biological neural networks. Key technological milestones include the development of memristive devices, spike-timing-dependent plasticity mechanisms, and large-scale neuromorphic chips such as IBM's TrueNorth and Intel's Loihi.

The evolution of neuromorphic computing has been driven by several objectives that align with the demands of edge computing and sensor fusion applications. Primary among these is achieving ultra-low power consumption while maintaining computational capability, a critical requirement for deployment in resource-constrained edge environments. Another key objective is developing systems capable of real-time learning and adaptation, enabling devices to evolve their functionality based on environmental inputs without requiring cloud connectivity.

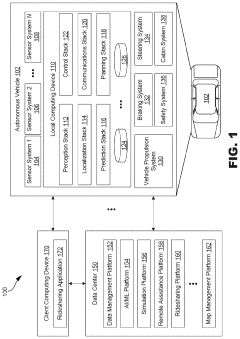

For smart sensor fusion at the edge, neuromorphic computing aims to enable seamless integration of multiple sensory inputs—visual, auditory, tactile, and others—mimicking the multimodal integration capabilities of biological systems. This integration must occur with minimal latency and power consumption, allowing for real-time decision-making in applications ranging from autonomous vehicles to industrial automation and healthcare monitoring.

Looking forward, the field is progressing toward neuromorphic systems that can support increasingly complex sensor fusion tasks while maintaining energy efficiency. Research objectives include developing more sophisticated learning algorithms specifically designed for neuromorphic hardware, improving the scalability of neuromorphic architectures, and enhancing the robustness of these systems in noisy, unpredictable real-world environments.

Edge Sensor Fusion Market Analysis

The edge sensor fusion market is experiencing rapid growth driven by the convergence of IoT, AI, and edge computing technologies. Current market valuations indicate the global edge sensor fusion market reached approximately 2.3 billion USD in 2022, with projections suggesting a compound annual growth rate of 17.8% through 2028. This growth trajectory is primarily fueled by increasing demands for real-time data processing capabilities in autonomous vehicles, industrial automation, smart cities, and consumer electronics sectors.
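
The growth figures above can be turned into a year-by-year projection with simple compounding. The sketch below assumes the 17.8% rate compounds annually from the 2022 base of 2.3 billion USD over six years; the source does not state the compounding convention, and the function name is illustrative only.

```python
def project_market_size(base_value: float, cagr: float, years: int) -> float:
    """Compound a base value forward by `years` at a constant annual growth rate."""
    return base_value * (1.0 + cagr) ** years


# Figures quoted in the text; annual compounding from 2022 is an assumption.
BASE_2022_BILLION_USD = 2.3
CAGR = 0.178

for year in range(2022, 2029):
    size = project_market_size(BASE_2022_BILLION_USD, CAGR, year - 2022)
    print(f"{year}: {size:.2f} billion USD")
```

Under these assumptions the projection reaches roughly 6.1 billion USD in 2028.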

Demand analysis reveals several key market drivers. First, latency-sensitive applications requiring instantaneous decision-making capabilities are proliferating across industries. Autonomous vehicles, for instance, must process and integrate data from multiple sensors (LiDAR, radar, cameras) within milliseconds to ensure safe navigation. Second, bandwidth constraints and data transmission costs are pushing computation closer to data sources, with organizations seeking to minimize cloud dependency for non-critical processing tasks.

Privacy and security concerns represent another significant market driver, as edge-based sensor fusion allows sensitive data to be processed locally without transmission to external servers. This capability is particularly valuable in healthcare monitoring, surveillance systems, and consumer wearables where data sovereignty is paramount. Energy efficiency requirements in battery-powered devices and remote deployments further accelerate market demand, as neuromorphic computing approaches offer substantial power consumption advantages over traditional computing architectures.

Regional market analysis shows North America currently leading with approximately 38% market share, followed by Europe (27%) and Asia-Pacific (25%). However, the Asia-Pacific region demonstrates the highest growth rate, driven by rapid industrial automation adoption in China, Japan, and South Korea, alongside expanding smart city initiatives across the region.

Industry-specific demand patterns show the automotive and transportation sector as the largest consumer of edge sensor fusion technologies (31% market share), followed by industrial automation (24%), consumer electronics (18%), and healthcare (12%). The remaining 15% is distributed across security, agriculture, and retail applications.

Customer segmentation analysis identifies three primary buyer categories: large enterprises implementing comprehensive IoT strategies, mid-sized companies focusing on specific operational efficiency improvements, and innovative startups developing specialized edge computing solutions. Large enterprises represent approximately 55% of market revenue, while mid-sized companies and startups account for 30% and 15% respectively.

Market challenges include fragmentation of standards, interoperability issues between different sensor types and manufacturers, and the technical complexity of implementing efficient fusion algorithms on resource-constrained edge devices. These challenges present significant opportunities for neuromorphic computing approaches that can deliver efficient, brain-inspired processing capabilities optimized for heterogeneous sensor data integration.

Current Neuromorphic Technologies and Barriers

Current neuromorphic implementations fall into two broad categories: analog (or mixed-signal) systems and digital systems, each with distinct advantages and limitations.

Analog and mixed-signal neuromorphic systems, such as Heidelberg University's BrainScaleS and Stanford's Neurogrid, use specialized circuits to mimic neural behavior through physical processes. These systems excel in power efficiency and can achieve orders-of-magnitude improvement over traditional computing architectures when processing sensor data at the edge. However, they face significant challenges in manufacturing consistency, with device-to-device variations affecting reliability and scalability.

Digital neuromorphic implementations, which include IBM's TrueNorth and Intel's Loihi, are more consistent in performance but sacrifice some of the power efficiency that makes neuromorphic computing attractive for edge applications. They use ASIC or FPGA designs that model neural behavior with digital circuits, offering better programmability but typically at higher energy cost than their analog counterparts.

A critical barrier to widespread adoption remains the lack of standardized programming models and development tools. Unlike traditional computing with established frameworks, neuromorphic computing requires specialized knowledge of neural network principles and specific hardware architectures. This steep learning curve impedes integration into existing edge computing ecosystems.

Memory bandwidth limitations present another significant challenge, particularly for sensor fusion applications that require real-time processing of multiple data streams. Current neuromorphic systems struggle with the efficient movement of data between processing elements and memory, creating bottlenecks that limit performance in complex sensor fusion scenarios.

Scaling neuromorphic systems to handle the complexity required for sophisticated edge applications faces both technical and economic barriers. The fabrication of large-scale neuromorphic chips remains costly, with limited production volumes preventing economies of scale that could drive adoption.

Integration with conventional computing systems presents additional challenges. Most edge computing infrastructures are built around traditional architectures, making the incorporation of neuromorphic accelerators complex. Interface standards and communication protocols between neuromorphic and conventional systems remain underdeveloped.

Training methodologies for neuromorphic systems also lag behind those for traditional neural networks. Spike-based learning algorithms, while theoretically promising for energy efficiency, lack the maturity and ease of use found in gradient-based methods used for conventional deep learning, creating a significant barrier to application development for sensor fusion at the edge.
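
To make "spike-based learning" concrete, here is a minimal sketch of pair-based spike-timing-dependent plasticity (STDP), one common local learning rule. It is an illustration under stated assumptions, not the rule used by any particular chip: the amplitudes, time constants, all-to-all spike pairing, and weight bounds are hypothetical values chosen for readability.

```python
import math


def stdp_delta_w(dt_ms, a_plus=0.01, a_minus=0.012,
                 tau_plus_ms=20.0, tau_minus_ms=20.0):
    """Weight change for one pre/post spike pair, with dt_ms = t_post - t_pre.

    All parameter values are hypothetical defaults for illustration.
    """
    if dt_ms > 0:   # pre fired before post: strengthen the synapse
        return a_plus * math.exp(-dt_ms / tau_plus_ms)
    if dt_ms < 0:   # post fired before pre: weaken the synapse
        return -a_minus * math.exp(dt_ms / tau_minus_ms)
    return 0.0      # simultaneous spikes: no change in this sketch


def apply_stdp(weight, pre_spikes_ms, post_spikes_ms, w_min=0.0, w_max=1.0):
    """Accumulate STDP updates over all spike pairs, then clamp the result."""
    for t_pre in pre_spikes_ms:
        for t_post in post_spikes_ms:
            weight += stdp_delta_w(t_post - t_pre)
    return min(w_max, max(w_min, weight))


# A presynaptic spike at 10 ms followed by a postsynaptic spike at 15 ms
# increases the weight; the reverse ordering decreases it.
print(apply_stdp(0.5, [10.0], [15.0]))
print(apply_stdp(0.5, [15.0], [10.0]))
```

The update depends only on the timing of the two neurons a synapse connects, which is why rules of this kind map naturally onto local, on-chip learning. The same locality is part of what makes them harder to tune than global gradient-based training.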

Existing Neuromorphic Sensor Fusion Architectures

01 Neuromorphic architectures for sensor fusion

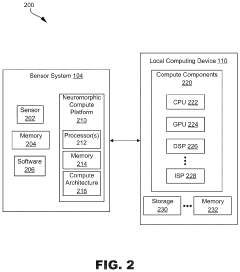

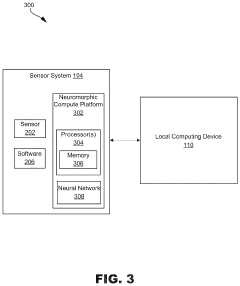

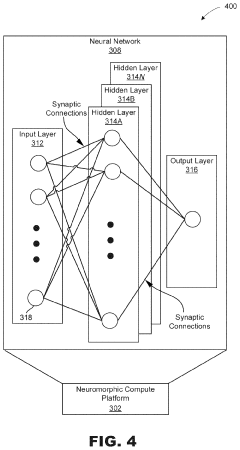

Neuromorphic computing architectures can be designed specifically for sensor fusion applications, mimicking the brain's ability to integrate multiple sensory inputs. These architectures utilize spiking neural networks and parallel processing to efficiently combine data from various sensors such as cameras, LiDAR, radar, and other environmental sensors. This approach enables real-time processing of heterogeneous sensor data with lower power consumption compared to traditional computing methods.

- Neuromorphic computing architectures for sensor fusion: Neuromorphic computing architectures can be designed specifically for sensor fusion applications, mimicking the brain's neural networks to efficiently process and integrate data from multiple sensors. These architectures enable real-time processing of heterogeneous sensor data while maintaining low power consumption. The spiking neural networks used in these systems allow for efficient temporal information processing, making them ideal for dynamic sensor fusion tasks in autonomous vehicles, robotics, and IoT applications.

- Smart sensor fusion for autonomous systems: Smart sensor fusion techniques combine data from multiple sensors to enhance perception capabilities in autonomous systems. By integrating inputs from cameras, LiDAR, radar, and other sensors, these systems can achieve more accurate environmental mapping and object detection than single-sensor approaches. Advanced fusion algorithms can handle sensor uncertainties, compensate for individual sensor limitations, and provide robust operation under varying environmental conditions, which is critical for autonomous vehicles, drones, and robotic systems.

- Energy-efficient neuromorphic sensor processing: Energy efficiency is a key advantage of neuromorphic computing approaches to sensor fusion. By processing sensor data using brain-inspired computing models, these systems can achieve significant power savings compared to traditional computing architectures. Event-based processing, where computation occurs only when sensor data changes, further reduces energy consumption. This approach is particularly valuable for battery-powered edge devices and IoT applications where power constraints are critical while maintaining high-performance sensor fusion capabilities.

- On-chip learning for adaptive sensor fusion: Neuromorphic systems can incorporate on-chip learning capabilities that allow sensor fusion algorithms to adapt to changing conditions and improve over time. These systems can dynamically adjust their processing parameters based on incoming sensor data, enabling them to optimize performance for specific environments or tasks. Unsupervised and reinforcement learning techniques implemented directly in hardware allow these systems to continuously refine their sensor fusion capabilities without requiring external training, making them suitable for deployment in unpredictable or evolving environments.

- Multi-modal sensor integration with neuromorphic hardware: Neuromorphic computing platforms can effectively integrate data from diverse sensor types with different modalities, sampling rates, and data formats. These systems can process visual, audio, tactile, and other sensor inputs using specialized neural network architectures that preserve the unique characteristics of each data type while enabling meaningful fusion. The parallel processing capabilities of neuromorphic hardware allow for real-time integration of these multi-modal inputs, supporting applications in advanced robotics, augmented reality, and human-machine interfaces.
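
As a rough illustration of how a spiking network can combine sensor streams, the sketch below drives a single discrete-time leaky integrate-and-fire (LIF) neuron with spike trains from two sensors. The weights, leak factor, threshold, and the `camera` and `radar` spike trains are hypothetical values chosen so the neuron fires only when both sensors spike in the same time step; real fusion networks use many neurons and learned weights.

```python
def lif_fusion(spike_trains, weights, threshold=1.0, leak=0.5, v_reset=0.0):
    """Discrete-time leaky integrate-and-fire neuron fed by several channels.

    spike_trains: equal-length lists of 0/1 values, one list per sensor.
    weights: one synaptic weight per sensor (hypothetical values here).
    Returns the output spike train as a list of 0/1 values.
    """
    n_steps = len(spike_trains[0])
    v = v_reset
    out = []
    for t in range(n_steps):
        # Weighted sum of the spikes arriving at this time step.
        drive = sum(w * train[t] for w, train in zip(weights, spike_trains))
        # The membrane potential decays, then integrates the new input.
        v = leak * v + drive
        if v >= threshold:
            out.append(1)
            v = v_reset  # reset after an output spike
        else:
            out.append(0)
    return out


# Hypothetical spike trains from two sensors observing the same scene.
camera = [1, 0, 0, 1, 0, 0, 1, 0]
radar = [0, 0, 0, 1, 0, 0, 0, 0]

fused = lif_fusion([camera, radar], weights=[0.6, 0.6])
print(fused)  # [0, 0, 0, 1, 0, 0, 0, 0]
```

With these values a single sensor cannot push the neuron over threshold on its own, so the output spike marks the one step where both modalities agree. Note that no work is triggered by the silent time steps, which is the property event-driven hardware exploits.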

02 Smart sensor fusion for autonomous vehicles

Smart sensor fusion techniques are applied in autonomous vehicles to integrate data from multiple sensors for improved perception and decision-making. These systems combine inputs from cameras, radar, LiDAR, and other sensors to create a comprehensive environmental model. Neuromorphic computing enhances this fusion process by enabling more efficient processing of temporal data patterns and facilitating adaptive responses to changing driving conditions.

03 Energy-efficient neuromorphic sensor processing

Energy efficiency is a key advantage of neuromorphic computing in sensor fusion applications. By implementing event-driven processing and sparse coding techniques, these systems can significantly reduce power consumption while maintaining high performance. This approach is particularly valuable for edge devices and IoT applications where power constraints are critical. The neuromorphic architecture allows for selective processing of relevant sensor data, minimizing unnecessary computations.

04 Learning and adaptation in neuromorphic sensor systems

Neuromorphic computing enables sensor fusion systems that can learn and adapt to changing environments. These systems utilize on-chip learning algorithms to continuously improve their performance based on incoming sensor data. The adaptive nature allows for better handling of sensor noise, calibration drift, and environmental variations. This capability is particularly valuable in dynamic environments where traditional pre-programmed approaches may fail.

05 Hardware implementations for neuromorphic sensor fusion

Specialized hardware implementations enhance the performance of neuromorphic sensor fusion systems. These include custom neuromorphic chips, memristor-based architectures, and specialized processing units designed for spiking neural networks. Such hardware accelerators enable real-time processing of complex sensor data while maintaining low power consumption. The hardware designs often incorporate novel memory structures and communication protocols optimized for the parallel and event-driven nature of neuromorphic computing.

Leading Companies in Neuromorphic Edge Computing

Neuromorphic computing for smart sensor fusion at the edge is in an early growth phase, with the market expected to expand significantly as edge AI applications proliferate. The global market size is projected to reach several billion dollars by 2030, driven by applications in wearables, IoT, and autonomous systems. Technologically, the field is transitioning from research to commercialization, with companies at varying maturity levels. IBM leads with established neuromorphic architectures, while Samsung and Intel leverage their semiconductor expertise for hardware implementation. Specialized players like Polyn Technology and Synsense focus on ultra-low-power neuromorphic solutions specifically for sensor fusion applications. Academic institutions including Zhejiang University, KAIST, and Northwestern University contribute fundamental research, creating a collaborative ecosystem that bridges theoretical advances with practical implementations.

Polyn Technology Ltd.

Technical Solution: Polyn Technology has developed a groundbreaking Neuromorphic Analog Signal Processor (NASP) specifically designed for sensor fusion at the edge. Their technology implements neuromorphic principles directly in analog hardware, creating what they call "Tiny AI" solutions that can operate with extremely minimal power requirements. Polyn's NASP architecture processes sensor data directly in the analog domain, eliminating the need for traditional analog-to-digital conversion and significantly reducing power consumption. Their neuromorphic chips integrate directly with sensors to perform complex fusion tasks like motion detection, voice activation, and health monitoring without requiring external processing. The company's neuromorphic approach enables always-on operation for battery-powered edge devices, with power consumption in the microwatt range. Polyn's technology can fuse data from accelerometers, gyroscopes, microphones, and other sensors directly on-chip, performing feature extraction and classification while the main system processor remains in sleep mode. This architecture has demonstrated power efficiency improvements of up to 1000x compared to digital implementations for specific sensor fusion workloads[2][5].

Strengths: Ultra-low power consumption enabling always-on operation; direct analog processing eliminating conversion overhead; compact form factor suitable for wearable and IoT devices; minimal latency for real-time applications. Weaknesses: Limited to specific pre-trained tasks; challenges in implementing complex learning algorithms in analog hardware; potential susceptibility to environmental factors affecting analog components.

International Business Machines Corp.

Technical Solution: IBM's neuromorphic computing approach for edge sensor fusion centers around their TrueNorth and subsequent neuromorphic architectures. Their technology mimics the brain's neural structure using spiking neural networks (SNNs) that process information in an event-driven manner. IBM's neuromorphic chips contain millions of "neurons" and "synapses" fabricated using standard CMOS technology, enabling ultra-low power consumption while maintaining high computational capabilities. For sensor fusion applications at the edge, IBM has developed specialized neuromorphic cores that can directly process sensor data from multiple modalities (visual, audio, vibration, etc.) in real-time without the need for traditional preprocessing. Their architecture implements on-chip learning algorithms that allow the system to adapt to changing environmental conditions, making it particularly suitable for dynamic edge computing scenarios where sensor inputs vary significantly. IBM has demonstrated their technology achieving up to 100x energy efficiency improvements compared to conventional computing approaches for similar sensor fusion tasks[1][3].

Strengths: Extremely low power consumption (milliwatt range for complex sensor fusion tasks); high parallelism enabling real-time processing; inherent fault tolerance; on-chip learning capabilities. Weaknesses: Programming complexity requiring specialized knowledge; limited software ecosystem compared to traditional computing; challenges in scaling production to commercial volumes.

Key Patents in Brain-Inspired Edge Computing

Neuromorphic computing system for edge computing

Patent (pending): US20240220787A1

Innovation

- Implementing neuromorphic computing at edge devices, which collocates compute components like processors and memory with sensors, enabling efficient processing, reduced power consumption, and improved thermal management through collocated architectures that mimic neuro-biological systems.

Method for fabrication of a multiple range accelerometer

Patent: WO2023205471A1

Innovation

- A multi-range accelerometer is fabricated using a single silicon-on-insulator (SOI) wafer process, integrating multiple accelerometers on a single chip with different sensitivity ranges, aligned photolithographically, and packaged in a hermetically sealed wafer-level package to prevent spring overextension and reduce size and weight.

Energy Efficiency Benchmarks and Optimization

Energy efficiency represents a critical benchmark for neuromorphic computing systems deployed at the edge, where power constraints significantly impact operational capabilities. Current benchmarks indicate that neuromorphic architectures can achieve energy efficiencies of 10-100 picojoules per synaptic operation, compared to conventional computing platforms requiring orders of magnitude more energy for equivalent computational tasks. The SpiNNaker neuromorphic system demonstrates approximately 20 times lower power consumption than traditional GPUs when performing neural network inference, while Intel's Loihi chip reports energy efficiency improvements of 1,000 times over conventional architectures for certain sparse network computations.
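
To put the per-operation figures in context, the following sketch converts energy per synaptic operation into energy per inference and average power. The workload (one million synaptic operations per inference, 100 inferences per second) is a hypothetical example, and the estimate covers only synaptic-operation energy; static power, sensor interfaces, and I/O are not modeled.

```python
PICOJOULE = 1e-12  # joules


def inference_energy_joules(synaptic_ops, energy_per_op_pj):
    """Energy for one inference, counting synaptic operations only."""
    return synaptic_ops * energy_per_op_pj * PICOJOULE


def average_power_watts(energy_per_inference_j, inferences_per_second):
    """Average dynamic power at a fixed inference rate."""
    return energy_per_inference_j * inferences_per_second


# Hypothetical workload; the 10 and 100 pJ bounds come from the text above.
SYNAPTIC_OPS_PER_INFERENCE = 1_000_000
INFERENCES_PER_SECOND = 100

for energy_pj in (10, 100):
    energy_j = inference_energy_joules(SYNAPTIC_OPS_PER_INFERENCE, energy_pj)
    power_w = average_power_watts(energy_j, INFERENCES_PER_SECOND)
    print(f"{energy_pj} pJ/op: {energy_j * 1e6:.0f} uJ per inference, "
          f"{power_w * 1e3:.0f} mW average")
```

For this assumed workload the range works out to about 10 to 100 microjoules per inference, or roughly 1 to 10 milliwatts of dynamic power.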

Optimization strategies for energy efficiency in neuromorphic sensor fusion applications follow several key approaches. Event-based processing, inspired by biological sensory systems, processes data only when significant changes occur, dramatically reducing computational load and power requirements. This approach has shown 90% energy savings in visual processing tasks compared to frame-based methods. Sparse computing techniques further enhance efficiency by activating only relevant neural pathways based on input characteristics, with research demonstrating up to 80% reduction in active neurons without significant accuracy degradation.
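
A simple way to see why event-based processing reduces work is a send-on-delta encoder, which emits an event only when a signal has moved by at least a threshold since the last emitted value. This is a minimal sketch of that idea, not the pixel circuit of any specific event camera; the sample values and the 0.5 threshold are hypothetical.

```python
def send_on_delta(samples, threshold):
    """Return (index, value) events for samples that differ from the last
    emitted value by at least `threshold`. The first sample is always emitted."""
    events = []
    last_sent = None
    for i, x in enumerate(samples):
        if last_sent is None or abs(x - last_sent) >= threshold:
            events.append((i, x))
            last_sent = x
    return events


# Hypothetical slowly varying sensor readings with two abrupt changes.
samples = [20.0, 20.1, 20.1, 20.2, 21.0, 21.1, 21.0, 23.5, 23.5, 23.4]
events = send_on_delta(samples, threshold=0.5)

print(events)  # [(0, 20.0), (4, 21.0), (7, 23.5)]
print(f"{len(events)} events from {len(samples)} samples")
```

Here only 3 of 10 samples produce downstream work. The fraction of samples suppressed is not the same as the fraction of energy saved, since that depends on the hardware's idle power and per-event cost.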

Dynamic power management represents another crucial optimization vector, where neuromorphic systems can selectively activate computational resources based on task demands. Advanced implementations incorporate adaptive clock gating and voltage scaling techniques that respond to computational load variations, achieving up to 60% energy savings during periods of reduced sensory input. The integration of in-memory computing architectures further reduces the energy-intensive data movement between memory and processing units that dominates power consumption in von Neumann architectures.
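
The effect of selectively activating resources can be approximated with a two-state duty-cycle model: average power is the time-weighted mix of active and idle power. The sketch below uses hypothetical power levels and an assumed active fraction; it ignores wake-up transitions and is not derived from measurements of any specific chip.

```python
def average_power_mw(active_mw, idle_mw, active_fraction):
    """Time-weighted average power for a two-state (active/idle) device."""
    if not 0.0 <= active_fraction <= 1.0:
        raise ValueError("active_fraction must be between 0 and 1")
    return active_fraction * active_mw + (1.0 - active_fraction) * idle_mw


def savings_fraction(baseline_mw, managed_mw):
    """Fractional energy saving relative to an always-active baseline."""
    return 1.0 - managed_mw / baseline_mw


# Hypothetical figures: 10 mW when active, 1 mW when gated, active 30% of the time.
managed = average_power_mw(active_mw=10.0, idle_mw=1.0, active_fraction=0.3)
saving = savings_fraction(baseline_mw=10.0, managed_mw=managed)
print(f"average power: {managed:.1f} mW, saving: {saving:.0%}")
```

With these assumed numbers the average drops to 3.7 mW, a saving of about 63%. The model also shows that idle power sets a floor on what gating can achieve.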

Material innovations are equally important for energy optimization, with emerging memristive devices enabling ultra-low power synaptic operations. Recent developments in phase-change memory and spin-torque devices have demonstrated synaptic operations requiring less than 1 picojoule per event, representing a significant advancement over CMOS-based implementations. These material innovations, when combined with specialized neuromorphic architectures, create systems capable of complex sensor fusion tasks while operating within strict power envelopes of edge devices.

Standardized benchmarking methodologies remain an ongoing challenge, as traditional computational metrics poorly capture the event-driven, asynchronous nature of neuromorphic systems. The development of application-specific energy efficiency metrics that account for both computational accuracy and power consumption is essential for meaningful comparisons across different neuromorphic implementations and against conventional computing approaches in edge sensor fusion applications.

Optimization strategies for energy efficiency in neuromorphic sensor fusion applications follow several key approaches. Event-based processing, inspired by biological sensory systems, processes data only when significant changes occur, dramatically reducing computational load and power requirements. This approach has shown 90% energy savings in visual processing tasks compared to frame-based methods. Sparse computing techniques further enhance efficiency by activating only relevant neural pathways based on input characteristics, with research demonstrating up to 80% reduction in active neurons without significant accuracy degradation.

Hardware-Software Co-design Strategies

Effective hardware-software co-design strategies are essential for maximizing the potential of neuromorphic computing in edge-based sensor fusion applications. The inherent complexity of neuromorphic systems necessitates a holistic approach where hardware architecture and software frameworks evolve symbiotically rather than independently. This integrated development methodology enables optimized performance, energy efficiency, and adaptability crucial for resource-constrained edge environments.

Current co-design approaches focus on creating abstraction layers that shield application developers from the intricacies of neuromorphic hardware while still exposing its unique capabilities. Programming environments such as IBM's Corelet framework for TrueNorth, Intel's NxSDK and Lava for Loihi, and the sPyNNaker stack for SpiNNaker provide domain-specific languages and APIs for expressing algorithms as spiking neural networks that the hardware can execute.

The hardware-software interface presents unique challenges in neuromorphic systems. Unlike traditional von Neumann architectures, neuromorphic chips process information through asynchronous, event-driven operations that require specialized programming paradigms. Emerging co-design methodologies incorporate automated tools for mapping conventional deep learning models to spiking neural networks, optimizing network topology based on hardware constraints, and efficiently allocating computational resources across heterogeneous processing elements.
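One common idea behind mapping conventional networks to spiking ones is rate coding: an integrate-and-fire neuron driven by a constant input fires at a rate that approximates a ReLU activation. The sketch below shows this for a single neuron. It is a simplified illustration with assumed parameters, not the conversion procedure of any particular toolchain.

```python
def if_neuron_rate(activation: float, steps: int = 100, threshold: float = 1.0) -> float:
    """Simulate an integrate-and-fire neuron and return its firing rate.

    The membrane potential integrates a constant input each step and is
    reduced by `threshold` after a spike (subtractive reset). At most one
    spike is emitted per step, so the rate saturates at 1.0.
    """
    potential = 0.0
    spikes = 0
    for _ in range(steps):
        potential += activation
        if potential >= threshold:
            spikes += 1
            potential -= threshold
    return spikes / steps


if __name__ == "__main__":
    for a in (-0.3, 0.25, 0.5, 2.0):
        print(a, if_neuron_rate(a))
```

Negative inputs never reach the threshold, so the rate is zero, while inputs between 0 and 1 yield a rate close to the input itself. Longer simulation windows reduce the quantization error at the cost of latency and more spikes, which is one of the trade-offs that mapping tools have to manage.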

Energy-aware co-design strategies have proven particularly valuable for edge deployment. These approaches dynamically adjust neural network parameters, spike thresholds, and processing precision based on power budgets and application requirements. Intel's Loihi 2 exemplifies this approach with its programmable neuron models and flexible synaptic operations that can be fine-tuned through software to balance computational capability against energy consumption.
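A minimal sketch of such a software-side adjustment is shown below: a proportional controller that raises the spike threshold when observed activity exceeds a spike budget (used here as a rough proxy for energy) and lowers it when activity falls below. The function, its parameters, and the use of spike count as an energy proxy are assumptions for illustration and do not represent the Loihi 2 programming interface.

```python
def adapt_threshold(
    threshold: float,
    spikes_observed: int,
    spike_budget: int,
    gain: float = 0.1,
    min_threshold: float = 0.1,
    max_threshold: float = 10.0,
) -> float:
    """Return an updated spike threshold based on activity versus budget.

    A higher threshold makes neurons fire less often, trading sensitivity
    for lower activity; the result is clamped to a safe range.
    """
    if spike_budget <= 0:
        raise ValueError("spike_budget must be positive")
    relative_error = (spikes_observed - spike_budget) / spike_budget
    updated = threshold * (1.0 + gain * relative_error)
    return min(max(updated, min_threshold), max_threshold)


if __name__ == "__main__":
    th = 1.0
    # Hypothetical spike counts measured over successive time windows.
    for observed in (200, 180, 120, 90):
        th = adapt_threshold(th, observed, spike_budget=100)
        print(observed, round(th, 4))
```

In practice the controller would need to be tuned against task accuracy, since raising thresholds too aggressively can suppress the events the application depends on.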

Sensor fusion applications benefit significantly from hardware-specific optimizations in the software stack. For instance, co-designed systems can exploit the temporal processing capabilities of neuromorphic hardware by implementing event-based algorithms that process sensor data only when meaningful changes occur. This event-driven paradigm, when properly supported by both hardware architecture and software frameworks, dramatically reduces computational overhead and power consumption compared to traditional frame-based approaches.
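For sensor fusion specifically, one way to picture the event-driven approach is to merge timestamped event streams from several sensors and react only when events from different sensors fall close together in time. The sketch below does this with a simple coincidence window. The event tuple layout, sensor names, and window size are hypothetical.

```python
import heapq


def merge_streams(*streams):
    """Merge time-sorted streams of (timestamp_us, sensor, value) events."""
    return list(heapq.merge(*streams, key=lambda event: event[0]))


def coincidences(merged, window_us):
    """Find pairs of events from different sensors within `window_us`.

    Only the most recent event per sensor is remembered, so the work done
    scales with the number of events rather than with a fixed frame rate.
    """
    matches = []
    last_seen = {}  # sensor name -> timestamp of its latest event
    for timestamp, sensor, _value in merged:
        for other, other_time in last_seen.items():
            if other != sensor and timestamp - other_time <= window_us:
                matches.append((other_time, other, timestamp, sensor))
        last_seen[sensor] = timestamp
    return matches


if __name__ == "__main__":
    camera = [(100, "cam", 1), (900, "cam", 1)]
    imu = [(150, "imu", 0.2), (2000, "imu", 0.1)]
    merged = merge_streams(camera, imu)
    print(coincidences(merged, window_us=100))
```

Here only the camera event at 100 µs and the IMU event at 150 µs are fused; the remaining events are too far apart in time to be treated as one physical occurrence.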

Looking forward, advanced co-design methodologies are incorporating automated hardware-software partitioning, where neural network models are intelligently segmented between neuromorphic accelerators and conventional processors based on workload characteristics. This hybrid approach enables systems to leverage the strengths of both computing paradigms while mitigating their respective limitations, resulting in more versatile and efficient edge computing solutions for multi-modal sensor fusion applications.
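The partitioning idea can be sketched as a simple rule that assigns each layer to a target based on workload characteristics. In the example below, layers that are event-driven and highly sparse go to the neuromorphic accelerator, and the rest stay on a conventional processor. The `Layer` fields, the sparsity cutoff, and the layer names are assumptions; a real partitioner would also weigh memory limits, communication cost, and latency.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    name: str
    activation_sparsity: float  # fraction of inactive units, between 0 and 1
    event_driven: bool  # whether the layer consumes event (spike) input


def partition(layers, sparsity_cutoff: float = 0.7):
    """Map each layer name to "neuromorphic" or "conventional"."""
    plan = {}
    for layer in layers:
        suits_neuromorphic = (
            layer.event_driven and layer.activation_sparsity >= sparsity_cutoff
        )
        plan[layer.name] = "neuromorphic" if suits_neuromorphic else "conventional"
    return plan


if __name__ == "__main__":
    model = [
        Layer("event_camera_frontend", 0.95, True),
        Layer("radar_dense_fft", 0.10, False),
        Layer("fusion_head", 0.75, True),
    ]
    for name, target in partition(model).items():
        print(name, "->", target)
```

A static rule like this is only a starting point; the automated approaches described above would presumably revisit the assignment as workload characteristics change.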
