Hardware-Software Co-design for Sparse Neuromorphic Workloads

SEP 8, 2025 · 9 MIN READ

Neuromorphic Computing Evolution and Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. This field has evolved significantly since its conceptual inception in the late 1980s when Carver Mead first proposed the idea of using analog circuits to mimic neurobiological architectures. The evolution of neuromorphic computing has been characterized by progressive advancements in both hardware implementations and theoretical frameworks, moving from simple analog circuits to sophisticated systems-on-chip that incorporate spiking neural networks.

From the mid-2000s through the 2010s, researchers increasingly developed dedicated hardware platforms designed specifically for neural computation. Projects such as IBM's TrueNorth, Intel's Loihi, and BrainScaleS emerged as pioneering efforts to create scalable neuromorphic architectures. These developments coincided with growing recognition of the limitations of traditional von Neumann architectures, particularly in energy efficiency and parallel processing for certain classes of problems.

The current technological landscape has witnessed an acceleration in neuromorphic research, driven by the convergence of advances in materials science, integrated circuit design, and computational neuroscience. This convergence has enabled the development of more sophisticated neuromorphic systems that can better emulate the brain's efficiency in processing sensory information and pattern recognition tasks while consuming minimal power.

Sparse neuromorphic workloads represent a particularly promising direction in this field. Unlike dense computational models that utilize all available resources regardless of input characteristics, sparse computation activates only the necessary components based on input features, similar to how biological neural networks operate. This approach offers significant advantages in terms of energy efficiency and computational speed for specific applications.
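The dense-versus-sparse contrast can be made concrete with a small example. The minimal Python sketch below assumes NumPy, binary input spikes, and a weight matrix indexed as `weights[input, output]`. Both function names are hypothetical. It compares a dense update, which touches every synapse, with an event-driven update that only accumulates the fan-out of the inputs that actually spiked.

```python
import numpy as np


def dense_update(weights, spikes):
    """Dense evaluation: every synapse is multiplied, whether or not its input fired."""
    currents = weights.T @ spikes.astype(weights.dtype)
    ops = weights.size  # one multiply-accumulate per synapse
    return currents, ops


def event_driven_update(weights, spikes):
    """Event-driven evaluation: only the outgoing rows of inputs that spiked are added."""
    currents = np.zeros(weights.shape[1], dtype=weights.dtype)
    active = np.flatnonzero(spikes)  # indices of inputs that emitted a spike
    for i in active:
        currents += weights[i]  # a binary spike turns multiply-accumulate into a plain add
    ops = active.size * weights.shape[1]
    return currents, ops


rng = np.random.default_rng(0)
w = rng.normal(size=(1000, 100))
s = rng.random(1000) < 0.05  # roughly 5% of inputs spike in this time step
dense_currents, dense_ops = dense_update(w, s)
sparse_currents, sparse_ops = event_driven_update(w, s)
print(dense_ops, sparse_ops)
```

Both paths produce the same currents. In the event-driven path, however, the work scales with the number of spikes rather than the number of synapses.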

The primary objectives of hardware-software co-design for sparse neuromorphic workloads include developing architectures that can efficiently handle the irregular and event-driven nature of neuromorphic computation, creating programming models that abstract the complexity of the underlying hardware while preserving its efficiency benefits, and establishing benchmarking methodologies that accurately reflect the performance characteristics of these novel systems.

Looking forward, the field aims to bridge the gap between theoretical neuroscience models and practical computing systems, with the ultimate goal of creating computing platforms that can approach the energy efficiency and adaptability of biological neural systems while offering practical advantages for real-world applications such as edge computing, autonomous systems, and advanced pattern recognition tasks.

Market Analysis for Sparse Neural Network Applications

The sparse neural network applications market is experiencing significant growth driven by the increasing demand for efficient AI solutions across various industries. The global market for neuromorphic computing, which encompasses sparse neural network technologies, is projected to reach $8.3 billion by 2028, growing at a CAGR of 23.7% from 2023. This growth is primarily fueled by the need for energy-efficient computing solutions that can process complex AI workloads with minimal power consumption.

Healthcare represents one of the largest application segments, with sparse neural networks being deployed for medical imaging analysis, patient monitoring systems, and drug discovery processes. The precision and efficiency of these networks make them particularly valuable for analyzing large medical datasets while maintaining high accuracy levels.

The automotive industry is another significant market, where sparse neural networks are increasingly used in advanced driver-assistance systems (ADAS) and autonomous driving technologies. These applications require real-time processing under strict power constraints, which makes sparse neuromorphic systems a strong fit. Market research indicates that automotive AI applications will grow at a CAGR of 27.5% through 2027.

Edge computing devices constitute a rapidly expanding market segment for sparse neural networks. As IoT deployments continue to proliferate across industrial, consumer, and smart city applications, the demand for on-device AI processing capabilities that minimize data transmission and power consumption is surging. Industry analysts predict that by 2025, over 75% of enterprise-generated data will be processed at the edge, creating substantial opportunities for sparse neuromorphic solutions.

The telecommunications sector is increasingly adopting sparse neural network technologies for network optimization, predictive maintenance, and security applications. With the ongoing deployment of 5G infrastructure and the anticipated transition to 6G, telecommunications companies are seeking more efficient computational approaches to manage the exponential growth in data traffic.

Geographically, North America currently leads the market for sparse neural network applications, accounting for approximately 42% of global market share. However, the Asia-Pacific region is expected to witness the fastest growth rate, driven by substantial investments in AI technologies by countries like China, Japan, and South Korea.

Market challenges include the current high implementation costs, limited standardization across platforms, and the need for specialized expertise in hardware-software co-design approaches. Despite these challenges, the compelling value proposition of reduced power consumption, decreased latency, and improved performance continues to drive market expansion for sparse neuromorphic workloads across diverse industry verticals.

Current Challenges in Hardware-Software Co-design

Despite significant advancements in neuromorphic computing, hardware-software co-design for sparse neuromorphic workloads faces several critical challenges. The fundamental issue lies in the inherent mismatch between traditional computing architectures and the unique requirements of brain-inspired computing models. Conventional von Neumann architectures, with their separate processing and memory units, create bottlenecks when handling the highly parallel and event-driven nature of neuromorphic workloads.

The sparsity characteristic of neuromorphic computing presents a particular challenge. While sparsity offers theoretical efficiency advantages, current hardware designs struggle to effectively exploit this property. Most existing accelerators are optimized for dense matrix operations, resulting in significant inefficiencies when processing sparse neural networks where many weights and activations are zero.
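Sparse formats are one common way to avoid wasted work on zeros. The minimal sketch below implements compressed sparse row (CSR) storage and a CSR matrix-vector product in plain NumPy. The helper names are hypothetical, and this is not the kernel of any particular accelerator. It performs multiply-accumulates only for stored non-zero weights. It also shows the data-dependent gather of `x` that makes sparse kernels awkward for memory hierarchies tuned to dense strides.

```python
import numpy as np


def to_csr(dense):
    """Convert a dense matrix to CSR arrays: non-zero values, their column indices, row pointers."""
    values, col_idx, row_ptr = [], [], [0]
    for row in dense:
        nz = np.flatnonzero(row)
        values.extend(row[nz])
        col_idx.extend(nz)
        row_ptr.append(len(values))
    return (np.asarray(values, dtype=dense.dtype),
            np.asarray(col_idx, dtype=np.int64),
            np.asarray(row_ptr, dtype=np.int64))


def csr_matvec(values, col_idx, row_ptr, x):
    """Compute A @ x from CSR arrays; returns the result and the number of MACs performed."""
    n_rows = len(row_ptr) - 1
    y = np.zeros(n_rows, dtype=np.result_type(values, x))
    for r in range(n_rows):
        start, end = row_ptr[r], row_ptr[r + 1]
        # x is gathered at data-dependent column positions: this irregular access
        # pattern is what cache hierarchies built for dense strides handle poorly.
        y[r] = values[start:end] @ x[col_idx[start:end]]
    return y, int(row_ptr[-1])


rng = np.random.default_rng(1)
A = rng.normal(size=(64, 64))
A[rng.random(A.shape) < 0.9] = 0.0  # zero out roughly 90% of the weights
x = rng.normal(size=64)
y, macs = csr_matvec(*to_csr(A), x)
print(macs, A.size)
```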

Memory access patterns represent another major hurdle. Neuromorphic algorithms often require irregular memory access patterns that poorly align with conventional memory hierarchies designed for spatial and temporal locality. This misalignment leads to cache thrashing and memory bandwidth limitations that severely impact performance and energy efficiency.

Power consumption remains a critical constraint, especially for edge applications where neuromorphic computing holds particular promise. Current hardware solutions often fail to achieve the ultra-low power operation necessary for continuous, real-time processing in resource-constrained environments like IoT devices or wearable technology.

From the software perspective, programming models for neuromorphic systems lack standardization and maturity. Developers face a steep learning curve when transitioning from traditional programming paradigms to event-based, spike-driven computational models. The absence of unified programming abstractions and development tools creates significant barriers to adoption and innovation.
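The shift in programming model is easiest to see in the basic unit of most spiking models, the leaky integrate-and-fire (LIF) neuron. Below is a minimal Python sketch of an LIF neuron integrated with forward Euler. The parameter values and the function name are illustrative assumptions, not the neuron model of any particular chip or framework. The point to notice is that the output is a sparse list of spike times rather than a dense activation value per step.

```python
import numpy as np


def simulate_lif(input_current, dt=1e-3, tau=20e-3, v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Leaky integrate-and-fire neuron integrated with forward Euler.

    Solves tau * dv/dt = -(v - v_rest) + I(t) (unit membrane resistance) and returns
    the spike times in seconds, i.e. a list of events rather than a dense trace.
    """
    v = v_rest
    spike_times = []
    for step, i_in in enumerate(input_current):
        v += (dt / tau) * (-(v - v_rest) + i_in)  # leak toward rest, integrate input
        if v >= v_thresh:
            spike_times.append(step * dt)
            v = v_reset  # reset after emitting a spike
    return spike_times


steps = 1000  # 1 s of simulated time at dt = 1 ms
print(len(simulate_lif(np.full(steps, 1.5))))  # supra-threshold drive: regular spiking
print(len(simulate_lif(np.full(steps, 0.5))))  # sub-threshold drive: v settles at 0.5, prints 0
```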

Simulation and debugging tools for neuromorphic systems remain primitive compared to those available for conventional computing. This deficiency makes it difficult to accurately predict performance, optimize designs, and identify bottlenecks in hardware-software interactions before physical implementation.

The co-design process itself suffers from a lack of integrated development environments that can simultaneously model hardware constraints and software requirements. This separation forces designers to work in isolated domains, often leading to suboptimal solutions that fail to leverage the full potential of neuromorphic principles.

Cross-layer optimization techniques that span from algorithms to circuits are still in their infancy. Without effective methods to propagate design decisions across abstraction layers, opportunities for holistic optimization are frequently missed, resulting in implementations that achieve only a fraction of the theoretical efficiency gains promised by neuromorphic computing.

Existing Co-design Approaches for Sparse Workloads

01 Hardware-software co-simulation for neuromorphic systems

Co-simulation techniques enable the integrated development of hardware and software components for neuromorphic computing systems. These methods allow designers to validate system behavior before physical implementation, optimizing performance for sparse neural network workloads. The co-simulation approach helps identify bottlenecks and optimize resource allocation between hardware and software components, which is particularly important for the irregular computation patterns found in sparse neuromorphic workloads.

- Hardware-software co-simulation for neuromorphic systems: Co-simulation techniques enable the integrated testing of hardware and software components for neuromorphic computing systems. These methods allow developers to validate the performance of sparse neural network implementations before physical deployment. By simulating both hardware constraints and software algorithms together, designers can optimize system performance, identify bottlenecks, and ensure compatibility between the hardware architecture and neuromorphic workloads.

- Optimization techniques for sparse neural networks: Various optimization techniques can be applied to sparse neural networks to improve their efficiency on neuromorphic hardware. These include pruning unnecessary connections, quantization of weights, and specialized data structures for sparse matrix operations. By reducing computational complexity and memory requirements, these optimizations enable more efficient implementation of neuromorphic workloads on hardware platforms with limited resources.

- Hardware architecture design for neuromorphic computing: Specialized hardware architectures can be designed to efficiently execute sparse neuromorphic workloads. These architectures may include custom processing elements, memory hierarchies optimized for sparse data access patterns, and interconnect networks tailored for neural computation. By co-designing hardware specifically for neuromorphic algorithms, significant improvements in performance and energy efficiency can be achieved compared to general-purpose computing platforms.

- Software frameworks for neuromorphic application development: Software frameworks provide programming models and tools for developing applications targeting neuromorphic hardware. These frameworks abstract hardware details while exposing key capabilities needed for sparse neural network implementation. They may include libraries for common neuromorphic operations, compilers that map high-level neural network descriptions to hardware-specific code, and runtime systems that manage execution on the target platform.

- Performance analysis and verification methods: Specialized methods for analyzing and verifying the performance of hardware-software co-designed neuromorphic systems are essential for ensuring correctness and efficiency. These include techniques for measuring power consumption, latency, and throughput of sparse neural network operations. Verification approaches may combine formal methods with empirical testing to validate that the implemented system meets its specifications across various neuromorphic workloads.
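Two of the optimization techniques listed above, pruning and weight quantization, compose naturally. The minimal Python sketch below shows magnitude pruning followed by symmetric per-tensor int8 quantization. The 80% sparsity target and the function names are hypothetical choices for illustration. Because symmetric quantization maps zero exactly to zero, the sparsity created by pruning survives quantization.

```python
import numpy as np


def magnitude_prune(w, sparsity):
    """Zero the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(round(sparsity * w.size))
    pruned = w.copy()
    if k == 0:
        return pruned
    threshold = np.partition(np.abs(w).ravel(), k - 1)[k - 1]  # k-th smallest magnitude
    pruned[np.abs(w) <= threshold] = 0.0
    return pruned


def quantize_int8(w):
    """Symmetric per-tensor int8 quantization; zeros map exactly to zero."""
    max_abs = np.max(np.abs(w))
    if max_abs == 0:
        return np.zeros(w.shape, dtype=np.int8), 1.0
    scale = max_abs / 127.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale


rng = np.random.default_rng(2)
w = rng.normal(size=(32, 32))
w_sparse = magnitude_prune(w, sparsity=0.8)
q, scale = quantize_int8(w_sparse)
print(np.mean(w_sparse == 0), np.max(np.abs(q * scale - w_sparse)))
```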

02 Sparse neural network acceleration architectures

Specialized hardware architectures designed specifically for sparse neural network computations can significantly improve performance and energy efficiency. These architectures exploit the inherent sparsity in neuromorphic workloads by implementing hardware-level optimizations such as sparse matrix operations, efficient memory access patterns, and dedicated processing elements. The co-design approach ensures that both hardware and software components are optimized together to handle the unique characteristics of sparse neural networks.

03 Memory hierarchy optimization for sparse workloads

Efficient memory management is crucial for sparse neuromorphic workloads due to their irregular access patterns. Hardware-software co-design approaches focus on optimizing the memory hierarchy to reduce data movement and improve locality. This includes specialized caching mechanisms, data compression techniques, and memory access scheduling algorithms that are aware of the sparsity patterns in neuromorphic computations. These optimizations help minimize the memory bottleneck that often limits performance in neuromorphic systems.

04 Energy-efficient scheduling for neuromorphic computing

Energy efficiency is a primary concern in neuromorphic computing systems. Hardware-software co-design approaches include developing specialized scheduling algorithms that dynamically allocate computational resources based on workload characteristics. These techniques leverage the sparsity in neuromorphic workloads to power down unused components and optimize execution paths. The co-design methodology ensures that energy management policies are implemented across both hardware and software layers for maximum efficiency.

05 Verification and validation frameworks for neuromorphic systems

Comprehensive verification and validation frameworks are essential for ensuring the correctness and reliability of hardware-software co-designed neuromorphic systems. These frameworks include specialized testing methodologies, performance analysis tools, and debugging capabilities tailored to sparse neural network workloads. The co-design approach enables end-to-end validation across the hardware-software boundary, ensuring that optimizations for sparse workloads do not compromise system functionality or accuracy.

Leading Organizations in Neuromorphic Computing

The market for hardware-software co-design of sparse neuromorphic workloads is currently in a growth phase, characterized by increasing research activity and early commercial applications. Some estimates put the global neuromorphic computing market at approximately $8-10 billion by 2025, with sparse computing architectures gaining significant traction. Intel is a leading player with its Loihi neuromorphic research chip, while IBM has made substantial contributions through its TrueNorth architecture. Emerging players such as Syntiant and Cambricon are developing specialized edge AI solutions. Academic institutions including Tsinghua University, the University of Michigan, and KAIST are advancing fundamental research. The technology is at a mid level of maturity, with companies such as Samsung, Huawei, and Xilinx investing in hardware acceleration for sparse neural networks. The result is a competitive landscape in which established semiconductor giants compete with specialized neuromorphic startups.

Intel Corp.

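Technical Solution: For hardware-software co-design of sparse neuromorphic workloads, Intel developed the Loihi neuromorphic research chip, a processor designed specifically for spiking neural networks (SNNs). Loihi contains 128 neuromorphic cores. Each core implements up to 1,024 neural compartments backed by 128 KB of synaptic memory, giving a total of more than 130,000 neurons and 130 million synapses. Intel's design uses a fully asynchronous, event-driven architecture that computes only when neurons spike, which makes it naturally suited to sparse neuromorphic workloads. On the software side, Intel provides the NxSDK toolchain and the open-source Lava software framework. The Nengo neural modeling framework (from Applied Brain Research) also offers a Loihi backend. Together these provide high-level APIs and compilation tools that let researchers map neural algorithms onto Loihi hardware. Intel also implemented on-chip learning mechanisms that allow networks to learn and adapt in real time on the hardware without conventional backpropagation training. The newer Loihi 2 chip further improves processing efficiency, supports more flexible neuron models and synaptic connectivity patterns, and strengthens hardware acceleration for sparse computation.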

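Strengths: Intel Loihi delivers excellent energy efficiency. On specific neuromorphic tasks it has been reported to be more than 1,000 times more energy efficient than conventional processors. Its event-driven architecture is naturally suited to sparse data and continual-learning tasks, which gives it distinctive advantages in real-time response and adaptive learning. Weaknesses: Loihi is aimed mainly at the research community and has not been widely commercialized. Its integration with mainstream deep learning frameworks is still developing, and its developer ecosystem is relatively limited.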

International Business Machines Corp.

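Technical Solution: In neuromorphic computing, IBM developed the TrueNorth chip, hardware designed for sparse neural network workloads. TrueNorth follows a hardware-software co-design approach and contains 1 million digital neurons and 256 million synapses while consuming only about 70 mW. The chip was developed under the DARPA SyNAPSE program, and IBM created the Corelet programming language to program it, with both aimed at sparse neuromorphic workloads. IBM's more recent research includes techniques for converting conventional deep learning models into sparse representations suited to neuromorphic hardware. It has also built a dedicated compiler toolchain that automatically maps neural network algorithms onto neuromorphic hardware while preserving sparsity to improve energy efficiency. IBM's co-design approach further includes hardware-aware training algorithms that account for hardware constraints during training, yielding better performance at deployment.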

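Strengths: IBM has a mature neuromorphic computing technology stack and extensive research experience. Its TrueNorth chip is highly energy efficient, delivering far more neural network operations per watt than conventional GPUs. Weaknesses: its neuromorphic systems have limited compatibility with mainstream deep learning frameworks and require specialized programming models and tools, which raises the learning cost for developers.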

Critical Patents in Neuromorphic Hardware-Software Integration

Hardware-software co-design for efficient transformer training and inference

Patent Pending · US20250037028A1

Innovation

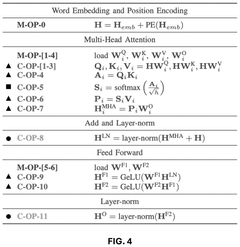

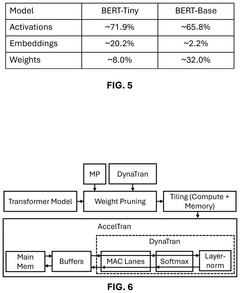

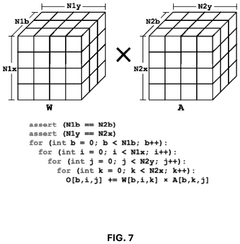

- A hardware-software co-design method that generates a computational graph and transformer model using a transformer embedding, simulates training and inference tasks with an accelerator embedding, and optimizes hardware performance and model accuracy using a co-design optimizer to produce a transformer-accelerator or transformer-edge-device pair.

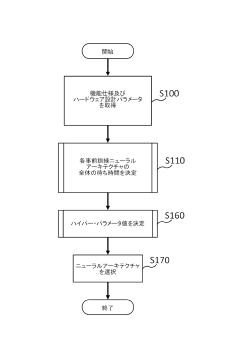

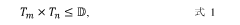

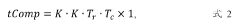

Hardware and neural architecture co-search

Patent Active · JP2022017993A

Innovation

- A computer program product that performs joint hardware and neural architecture exploration by utilizing pre-trained neural architectures, determining latency and accuracy, and selecting optimal architectures based on overall latency and accuracy, incorporating techniques like pattern pruning, channel cutting, and quantization to reduce latency.
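The selection step described above can be illustrated by treating latency and accuracy as two objectives. The minimal Python sketch below keeps the Pareto-optimal candidates and then picks the most accurate one within a latency budget. The Pareto-plus-budget rule, the candidate names, and the latency and accuracy values are all hypothetical illustrations. They are not the patent's actual selection procedure or measured data.

```python
from typing import NamedTuple, Optional


class Candidate(NamedTuple):
    name: str
    latency_ms: float
    accuracy: float


def dominates(a, b):
    """a dominates b if it is no slower, no less accurate, and strictly better in one."""
    return (a.latency_ms <= b.latency_ms and a.accuracy >= b.accuracy
            and (a.latency_ms < b.latency_ms or a.accuracy > b.accuracy))


def pareto_front(candidates):
    """Candidates that no other candidate dominates."""
    return [c for c in candidates if not any(dominates(o, c) for o in candidates)]


def select(candidates, latency_budget_ms) -> Optional[Candidate]:
    """Most accurate Pareto-optimal candidate that fits the latency budget."""
    feasible = [c for c in pareto_front(candidates) if c.latency_ms <= latency_budget_ms]
    return max(feasible, key=lambda c: c.accuracy, default=None)


# Hypothetical measurements for four (architecture, hardware) pairs.
cands = [Candidate("A", 4.0, 0.90), Candidate("B", 6.0, 0.93),
         Candidate("C", 6.5, 0.92), Candidate("D", 9.0, 0.95)]
print([c.name for c in pareto_front(cands)])  # ['A', 'B', 'D']
print(select(cands, latency_budget_ms=7.0).name)  # B
```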

Energy Efficiency Benchmarks and Optimization

Energy efficiency benchmarks for neuromorphic systems reveal significant advantages over traditional computing architectures when processing sparse workloads. Recent comparative analyses demonstrate that neuromorphic hardware can achieve 10-100x better energy efficiency for sparse neural network operations compared to conventional GPUs and CPUs. The SNN-based TrueNorth architecture, for instance, operates at approximately 70 milliwatts while delivering performance equivalent to much higher-powered traditional systems for sparse pattern recognition tasks.

Benchmark methodologies specifically designed for sparse neuromorphic workloads have emerged, including SparseMark and NeurobenchX, which evaluate performance across varying degrees of activation sparsity (10-99%). These benchmarks measure both static power consumption and dynamic energy scaling with computational load, providing more accurate efficiency metrics for event-driven computing paradigms.
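The reason such benchmarks separate static power from dynamic energy can be shown with a simple first-order model. Dynamic energy scales with the number of active synaptic events, while static (leakage) energy scales only with time. The minimal Python sketch below assumes 20 pJ per synaptic operation, 1 mW of static power, a 10 ms inference window, and one million synapses. These are hypothetical, illustrative parameters, not measurements from any chip or from SparseMark or NeurobenchX.

```python
def energy_per_inference_uj(sparsity, n_synapses=1_000_000, e_synop_pj=20.0,
                            p_static_mw=1.0, t_inference_ms=10.0):
    """Split the energy of one inference (microjoules) into static and dynamic parts.

    All default parameter values are hypothetical placeholders.
    """
    active_events = (1.0 - sparsity) * n_synapses
    dynamic_uj = active_events * e_synop_pj * 1e-6  # pJ -> uJ
    static_uj = p_static_mw * t_inference_ms        # mW * ms = uJ
    return static_uj, dynamic_uj


for s in (0.10, 0.50, 0.90, 0.99):
    static, dynamic = energy_per_inference_uj(s)
    share = static / (static + dynamic)
    print(f"sparsity {s:.0%}: dynamic {dynamic:.2f} uJ, static share {share:.0%}")
```

Under these assumptions the dynamic term shrinks with sparsity, but the static floor does not. At high sparsity, leakage therefore dominates, which a dynamic-only metric would hide.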

Optimization techniques for energy efficiency in neuromorphic systems focus on exploiting workload sparsity through hardware-software co-design approaches. At the hardware level, clock-gating and power-gating techniques selectively deactivate unused neural processing elements, while event-driven computation ensures energy consumption correlates directly with neural activity. Novel memory architectures utilizing in-memory computing reduce the energy-intensive data movement between processing and storage units.

Software-level optimizations include sparse encoding schemes that efficiently represent neural activations with minimal bits, and pruning algorithms specifically designed to create hardware-friendly sparse network topologies. Adaptive threshold mechanisms dynamically adjust neuron firing thresholds based on input activity, further reducing unnecessary computations and associated energy costs.
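One simple way to see the trade-off in sparse activation encoding is to compare bit costs. A bitmap costs one bit per neuron, while an address list costs about log2(N) bits per neuron that fired. The minimal Python sketch below picks whichever is cheaper for a given spike vector. The two formats and the function names are illustrative assumptions, not the encoding of any specific system.

```python
import math

import numpy as np


def bitmap_bits(n_neurons):
    """Bitmap: one bit per neuron, regardless of how many fired."""
    return n_neurons


def address_list_bits(n_active, n_neurons):
    """Address list: one ceil(log2 N)-bit index per neuron that fired."""
    return n_active * math.ceil(math.log2(n_neurons))


def encode_spikes(spikes):
    """Return whichever encoding of a boolean spike vector needs fewer bits."""
    n, active = spikes.size, np.flatnonzero(spikes)
    if address_list_bits(active.size, n) < bitmap_bits(n):
        return "addresses", active
    return "bitmap", np.packbits(spikes)


print(address_list_bits(50, 1024), bitmap_bits(1024))   # 500 1024
print(address_list_bits(200, 1024), bitmap_bits(1024))  # 2000 1024
```

For 1,024 neurons the address list wins only while fewer than about 10% of neurons fire. This is one reason encoding choices are tied to expected activity levels.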

Co-design strategies that simultaneously optimize both hardware and software components have demonstrated the most promising results. For example, the SpiNNaker-2 system implements specialized instruction sets for sparse operations while its software framework automatically maps networks to minimize communication overhead. Similarly, Intel's Loihi architecture pairs its neuromorphic cores with programming frameworks that optimize spike encoding for energy efficiency.
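The idea of mapping networks to minimize communication overhead can be illustrated with a toy placement problem. Every synapse whose two neurons sit on different cores becomes inter-core traffic. The minimal Python sketch below compares a naive round-robin placement with a hypothetical greedy heuristic that puts each neuron on the non-full core already hosting most of its neighbours. It is an illustration only, not the actual mapper used by SpiNNaker-2 or Loihi.

```python
from itertools import combinations


def cut_synapses(edges, placement):
    """Count synapses whose endpoints sit on different cores (each one is inter-core traffic)."""
    return sum(1 for pre, post in edges if placement[pre] != placement[post])


def greedy_place(n_neurons, edges, core_capacity):
    """Hypothetical greedy mapper: put each neuron on the non-full core that already
    hosts most of its neighbours (ties go to the lowest core index)."""
    neighbours = {n: set() for n in range(n_neurons)}
    for pre, post in edges:
        neighbours[pre].add(post)
        neighbours[post].add(pre)
    n_cores = -(-n_neurons // core_capacity)  # ceiling division
    load = [0] * n_cores
    placement = {}
    for n in range(n_neurons):
        score = [0] * n_cores
        for m in neighbours[n]:
            if m in placement:
                score[placement[m]] += 1
        open_cores = [c for c in range(n_cores) if load[c] < core_capacity]
        best = max(open_cores, key=lambda c: score[c])
        placement[n] = best
        load[best] += 1
    return placement


# Four fully connected clusters of four neurons, chained by one synapse each.
edges = [e for k in range(4) for e in combinations(range(4 * k, 4 * k + 4), 2)]
edges += [(3, 4), (7, 8), (11, 12)]
round_robin = {n: n % 4 for n in range(16)}
greedy = greedy_place(16, edges, core_capacity=4)
print(cut_synapses(edges, round_robin), cut_synapses(edges, greedy))  # 27 3
```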

Recent research indicates that optimal energy efficiency requires balancing sparsity levels with computational accuracy. While extreme sparsity (>95%) offers theoretical energy advantages, maintaining functional accuracy often requires hybrid approaches that combine dense and sparse processing elements, strategically deployed based on workload characteristics.
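A hybrid approach can be as simple as dispatching each layer to a sparse or a dense path depending on how many inputs are active. The minimal Python sketch below assumes NumPy and a weight matrix of shape `(n_out, n_in)`. The 0.3 density cut-over and the function name are hypothetical tuning choices, not values reported in the research mentioned above.

```python
import numpy as np


def hybrid_matvec(w, x, density_threshold=0.3):
    """Route a layer to a sparse or dense path based on input activation density.

    w has shape (n_out, n_in); the 0.3 cut-over is a hypothetical tuning value.
    """
    active = np.flatnonzero(x)
    density = active.size / x.size
    if density <= density_threshold:
        # Sparse path: read only the weight columns of non-zero inputs.
        return w[:, active] @ x[active], "sparse"
    # Dense path: regular, fully vectorized access, cheaper when most inputs are active.
    return w @ x, "dense"


rng = np.random.default_rng(3)
w = rng.normal(size=(16, 100))
x_sparse = np.where(rng.random(100) < 0.05, 1.0, 0.0)
x_dense = rng.normal(size=100)
y1, path1 = hybrid_matvec(w, x_sparse)
y2, path2 = hybrid_matvec(w, x_dense)
print(path1, path2)
```

In practice the cut-over point would be measured on the target hardware, since it depends on how much each path pays for irregular memory access.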

Standardization Efforts in Neuromorphic Interfaces

The standardization of neuromorphic interfaces represents a critical development area for the advancement of hardware-software co-design approaches for sparse neuromorphic workloads. Currently, the field faces significant fragmentation with various research groups and companies developing proprietary interfaces and protocols, creating barriers to interoperability and widespread adoption.

Several key standardization initiatives have emerged in recent years to address these challenges. The Neuro-Inspired Computational Elements (NICE) workshop series has been instrumental in bringing together researchers and industry professionals to discuss common frameworks for neuromorphic systems. Their efforts have led to preliminary proposals for standardized communication protocols between neuromorphic hardware and software components.

The IEEE Neuromorphic Computing Standards Working Group, established in 2019, focuses specifically on developing standards for neuromorphic interface specifications. Their work encompasses signal encoding schemes, data formats for spike-based communication, and API definitions for neuromorphic programming models. The working group has published draft specifications that are currently under industry review.

On the software side, PyNN has emerged as a potential standard for neuromorphic programming, offering a common interface for various neuromorphic platforms. This Python-based framework allows developers to write code once and deploy it across different neuromorphic hardware systems, significantly reducing development overhead for sparse workload optimization.

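The Neuromorphic Engineering Community (NEC) consortium has been working on standardizing event-based data formats, particularly for the requirements of sparse neuromorphic workloads. Address-Event Representation (AER), a protocol that originated in early neuromorphic VLSI research, has gained traction as a candidate standard for spike-based communication between neuromorphic components. Instead of transmitting a value for every neuron, AER sends each spike as the address of the neuron that fired, so communication traffic scales with the number of spikes rather than the number of neurons.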
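To make the address-event idea concrete, here is a minimal Python sketch. In its basic form, AER transmits only the address of the neuron that spiked, and timing is implicit in when the event appears on the bus. Many systems attach an explicit timestamp when events are logged or sent over packet networks. The 8-byte layout used here (`EVENT_FORMAT`: a 32-bit microsecond timestamp followed by a 32-bit neuron address) is a hypothetical choice for illustration. It is not a published AER or NEC format. The helper names are likewise illustrative.

```python
import struct
from typing import Iterable, List, Tuple

# Hypothetical wire layout (not a published standard): each event is 8 bytes,
# little-endian, uint32 timestamp in microseconds followed by uint32 address.
EVENT_FORMAT = "<II"
EVENT_SIZE = struct.calcsize(EVENT_FORMAT)  # 8 bytes per event

Event = Tuple[int, int]  # (timestamp_us, neuron_address)


def spikes_to_events(spike_raster: List[List[int]], dt_us: int) -> List[Event]:
    """Convert a dense raster (time steps x neurons, 0/1) into address-events.

    Silent neurons produce no events, which is where the sparsity saving comes from.
    """
    return [
        (step * dt_us, addr)
        for step, row in enumerate(spike_raster)
        for addr, spiked in enumerate(row)
        if spiked
    ]


def encode_events(events: Iterable[Event]) -> bytes:
    """Serialize (timestamp, address) pairs into a contiguous byte stream."""
    return b"".join(struct.pack(EVENT_FORMAT, t, addr) for t, addr in events)


def decode_events(buf: bytes) -> List[Event]:
    """Parse a byte stream produced by encode_events back into events."""
    if len(buf) % EVENT_SIZE:
        raise ValueError("buffer length is not a multiple of the event size")
    return list(struct.iter_unpack(EVENT_FORMAT, buf))
```

For a 3-step, 4-neuron raster with three spikes, the stream carries three 8-byte events (24 bytes) instead of one entry per neuron per step. The savings grow as activity becomes sparser.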

Industry leaders including IBM, Intel, and Qualcomm have recently formed the Neuromorphic Computing Industry Alliance (NCIA), committing to developing open standards for hardware-software interfaces. Their roadmap includes specifications for memory access patterns optimized for sparse operations, event-driven programming models, and power management interfaces specific to neuromorphic architectures.
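The roadmap items on sparse memory access and event-driven programming can be illustrated with a generic sketch. It is not taken from any NCIA specification. It assumes outgoing synapses are stored in a compressed sparse row (CSR) layout, so each incoming spike reads one contiguous slice of targets and weights. It also assumes simple leaky integrate-and-fire neurons with hypothetical `threshold` and `leak` parameters. The class and function names are illustrative only.

```python
from typing import Iterator, List, Tuple


class SparseSynapses:
    """Outgoing synapses in CSR layout: row_ptr[src]..row_ptr[src + 1] indexes
    into targets/weights, so one spike reads one contiguous slice of memory."""

    def __init__(self, n_src: int, edges: List[Tuple[int, int, float]]):
        edges = sorted(edges)  # group edges by source neuron
        self.row_ptr = [0] * (n_src + 1)
        for src, _, _ in edges:
            self.row_ptr[src + 1] += 1  # count fan-out per source
        for i in range(n_src):
            self.row_ptr[i + 1] += self.row_ptr[i]  # prefix sum -> row offsets
        self.targets = [dst for _, dst, _ in edges]
        self.weights = [w for _, _, w in edges]

    def fanout(self, src: int) -> Iterator[Tuple[int, float]]:
        lo, hi = self.row_ptr[src], self.row_ptr[src + 1]
        return zip(self.targets[lo:hi], self.weights[lo:hi])


def step(v: List[float], spikes_in: List[int], syn: SparseSynapses,
         threshold: float = 1.0, leak: float = 0.9) -> List[int]:
    """One time step for simple leaky integrate-and-fire neurons.

    Synaptic work scales with (incoming spikes x fan-out), not with the full
    weight matrix. The leak is applied to every neuron here for simplicity;
    a fully event-driven design could defer it until a neuron is touched.
    """
    for i in range(len(v)):
        v[i] *= leak
    for src in spikes_in:
        for dst, w in syn.fanout(src):
            v[dst] += w
    fired = [i for i, vi in enumerate(v) if vi >= threshold]
    for i in fired:
        v[i] = 0.0  # reset membrane potential after a spike
    return fired
```

For example, with synapses `(0→1, 0.6)`, `(0→2, 1.2)`, and `(2→1, 0.5)`, a spike from neuron 0 makes neuron 2 fire. A later spike from neuron 2 pushes neuron 1 over threshold. Neuron 1 has no outgoing synapses, so it never costs any synaptic memory reads.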

Academic institutions have contributed significantly through the Open Neuromorphic Exchange (ONE) initiative, which provides reference implementations of interface standards and testing frameworks. This collaborative effort has accelerated the validation and refinement of proposed standards across diverse neuromorphic platforms.

The convergence toward standardized interfaces represents a crucial enabler for hardware-software co-design approaches targeting sparse neuromorphic workloads, potentially accelerating innovation and commercial adoption in this rapidly evolving field.