On-Chip Learning with Hebbian-based Rules in Neuromorphic Hardware.

SEP 2, 2025 · 9 MIN READ

Patsnap Eureka helps you evaluate technical feasibility & market potential.

Neuromorphic Hardware Evolution and Learning Objectives

Neuromorphic computing represents a paradigm shift in computational architecture, drawing inspiration from the structure and function of biological neural systems. Neuromorphic hardware has evolved through several distinct phases since Carver Mead introduced the concept in the late 1980s. Early systems focused primarily on mimicking basic neural functions with analog VLSI circuits and offered limited learning capabilities.

The first generation of neuromorphic hardware (1990s-early 2000s) consisted primarily of custom analog circuits that modeled neuron behavior but lacked sophisticated on-chip learning mechanisms. These systems required external programming and parameter tuning, limiting their adaptability and practical applications.

The second generation (mid-2000s to early 2010s) saw the integration of digital components alongside analog circuits, creating hybrid systems with improved programmability. During this period, researchers began exploring simple forms of on-chip plasticity, though learning capabilities remained relatively primitive and often required offline training.

The current generation of neuromorphic hardware (2010s-present) has made significant strides in incorporating on-chip learning mechanisms, particularly those inspired by Hebbian learning principles. This biological learning rule, often summarized as "neurons that fire together, wire together," forms the foundation for implementing adaptive synaptic plasticity directly in hardware.
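In its simplest rate-based form, the rule increments each weight in proportion to the product of pre- and post-synaptic activity, Δw_ij = η · y_i · x_j. The minimal sketch below illustrates that update in plain Python. The function name `hebbian_update`, the learning rate, and the list-of-lists weight layout are illustrative assumptions, not the circuit of any particular chip.

```python
from typing import List

def hebbian_update(weights: List[List[float]],
                   pre: List[float],
                   post: List[float],
                   lr: float = 0.1) -> List[List[float]]:
    """Return new weights after one plain Hebbian step.

    weights[i][j] is the synapse from pre-synaptic neuron j to
    post-synaptic neuron i; its change is lr * post[i] * pre[j].
    """
    return [
        [w_ij + lr * post[i] * pre[j] for j, w_ij in enumerate(row)]
        for i, row in enumerate(weights)
    ]

# Two inputs, two outputs: only the synapse whose pre- and
# post-synaptic neurons are both active is strengthened.
w = [[0.0, 0.0], [0.0, 0.0]]
w = hebbian_update(w, pre=[1.0, 0.0], post=[0.0, 1.0])
print(w)  # [[0.0, 0.0], [0.1, 0.0]]
```

Each weight change depends only on the two neurons that the synapse connects, which is the locality that makes the rule attractive for in-memory hardware. With non-negative activities the weights can only grow, a limitation that motivates the regulatory mechanisms discussed later in this article.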

Key learning objectives in modern neuromorphic systems include achieving real-time unsupervised learning, implementing spike-timing-dependent plasticity (STDP), and developing energy-efficient learning algorithms that can operate within the constraints of neuromorphic architectures. These objectives align with the broader goals of creating autonomous, adaptive systems capable of continuous learning in dynamic environments.

Recent technological advancements have enabled more sophisticated implementations of Hebbian-based learning rules in hardware, including variations such as triplet-STDP and reward-modulated STDP. These mechanisms allow neuromorphic systems to perform complex pattern recognition, classification, and adaptation tasks without requiring external training infrastructure.
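Reward-modulated STDP is often described as a three-factor rule: spike pairings do not change the weight immediately but are accumulated in a decaying per-synapse eligibility trace e, and the weight moves only when a reward or neuromodulatory signal r arrives, Δw = η · r · e. The single-synapse sketch below illustrates that idea. The class name, amplitudes, and time constants are hypothetical values chosen for illustration, not parameters of any hardware platform.

```python
import math

class RewardModulatedSynapse:
    """Single synapse with a hypothetical reward-modulated STDP rule.

    STDP pairings feed an eligibility trace that decays with time
    constant tau_e; the weight only moves when reward is delivered.
    """

    def __init__(self, w=0.5, a_plus=0.01, a_minus=0.012,
                 tau_stdp=20.0, tau_e=200.0, lr=1.0):
        self.w = w
        self.a_plus, self.a_minus = a_plus, a_minus
        self.tau_stdp, self.tau_e, self.lr = tau_stdp, tau_e, lr
        self.eligibility = 0.0

    def stdp(self, dt):
        """Pair-based STDP kernel; dt = t_post - t_pre in ms."""
        if dt > 0:   # pre before post: potentiating tag
            return self.a_plus * math.exp(-dt / self.tau_stdp)
        if dt < 0:   # post before pre: depressing tag
            return -self.a_minus * math.exp(dt / self.tau_stdp)
        return 0.0

    def on_spike_pair(self, dt):
        # Tag the synapse instead of changing the weight directly.
        self.eligibility += self.stdp(dt)

    def decay(self, elapsed_ms):
        # Exponential decay of the tag while waiting for reward.
        self.eligibility *= math.exp(-elapsed_ms / self.tau_e)

    def on_reward(self, reward):
        # Third factor: weight change = lr * reward * eligibility.
        self.w += self.lr * reward * self.eligibility


syn = RewardModulatedSynapse()
syn.on_spike_pair(dt=5.0)    # causal pairing sets a positive tag
w_before = syn.w
syn.decay(elapsed_ms=100.0)  # reward arrives 100 ms later
syn.on_reward(reward=1.0)
print(syn.w > w_before)      # True
```

Calling `on_reward` with `reward=0.0` would leave the weight unchanged despite the tagged pairing, which is how this kind of rule lets a delayed outcome decide whether a recent correlation is kept.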

The learning objectives for next-generation neuromorphic hardware focus on bridging the gap between biological and artificial neural systems, particularly in terms of energy efficiency, adaptability, and cognitive capabilities. Researchers aim to develop systems that can learn from sparse data, transfer knowledge between tasks, and maintain stability while continuously acquiring new information—challenges that remain difficult for conventional deep learning approaches.

As neuromorphic hardware continues to evolve, the integration of increasingly sophisticated on-chip learning mechanisms represents a critical frontier, potentially enabling new applications in edge computing, autonomous systems, and brain-computer interfaces where adaptive learning in resource-constrained environments is essential.

Market Analysis for On-Chip Learning Solutions

The market for on-chip learning solutions with Hebbian-based rules in neuromorphic hardware is experiencing significant growth, driven by increasing demand for edge computing capabilities and energy-efficient AI processing. Market forecasts project that the neuromorphic computing sector will reach $8.9 billion by 2025, a compound annual growth rate (CAGR) of approximately 23% from 2020.
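The forecast's two figures are linked by the compound-growth formula V_end = V_start · (1 + g)^n. Assuming the 23% rate compounds annually over the five years from 2020 to 2025 (an assumption, since the forecast's baseline value is not stated here), the implied 2020 market size can be backed out:

```python
def implied_start_value(end_value: float, cagr: float, years: int) -> float:
    """Invert V_end = V_start * (1 + cagr) ** years."""
    return end_value / (1.0 + cagr) ** years

# $8.9B in 2025 at ~23% CAGR from 2020 (five compounding periods)
start_2020 = implied_start_value(8.9, 0.23, 2025 - 2020)
print(f"implied 2020 market: ${start_2020:.2f}B")  # implied 2020 market: $3.16B
```

On these figures the sector would grow by a factor of about 2.8 (1.23^5 ≈ 2.82) over the five years.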

The primary market segments for on-chip learning solutions include autonomous vehicles, industrial automation, healthcare monitoring devices, and consumer electronics. Each segment presents unique requirements and growth trajectories. Autonomous vehicles represent the fastest-growing segment, with manufacturers seeking real-time learning capabilities that can adapt to changing road conditions without cloud connectivity.

Industrial automation applications are increasingly adopting neuromorphic solutions for predictive maintenance and process optimization, where Hebbian learning provides advantages in pattern recognition and anomaly detection with minimal power consumption. This segment values the ability to learn continuously from sensor data without requiring frequent model updates from centralized systems.

Healthcare applications represent another substantial market opportunity, particularly for implantable and wearable devices that must operate under strict power constraints while processing complex biological signals. The ability of Hebbian-based systems to learn from physiological patterns makes them particularly valuable in this domain.

Market analysis reveals several key customer requirements driving adoption: energy efficiency (typically measured as operations per second per watt, or as energy per operation), on-device learning speed, integration capabilities with existing systems, and resilience to noisy data environments. Hebbian learning approaches address these requirements more effectively than traditional deep learning implementations for certain applications, particularly where power constraints are significant.

Regional market distribution shows North America leading in research and development investments, while Asia-Pacific demonstrates the fastest adoption rate in manufacturing applications. Europe shows particular strength in automotive and healthcare implementations of neuromorphic technology.

Customer feedback indicates growing interest in hybrid solutions that combine traditional computing architectures with neuromorphic components, allowing gradual integration rather than wholesale replacement of existing systems. This presents an opportunity for modular neuromorphic solutions with standardized interfaces.

Market barriers include concerns about reliability in mission-critical applications, lack of standardized development tools, and limited understanding of Hebbian learning principles among traditional software developers. These challenges represent opportunities for companies that can provide comprehensive development ecosystems alongside their hardware offerings.

Hebbian Learning Implementation Challenges

Implementing Hebbian learning mechanisms in neuromorphic hardware presents several significant challenges that researchers and engineers must overcome. The fundamental principle of "neurons that fire together, wire together" requires precise timing and weight adjustment capabilities that are difficult to realize in physical circuits.

One of the primary challenges is the accurate implementation of synaptic plasticity mechanisms. While Hebbian learning conceptually appears straightforward, translating the biological processes of long-term potentiation (LTP) and long-term depression (LTD) into silicon requires sophisticated circuit designs. Current hardware implementations struggle to capture the temporal dynamics and non-linear characteristics of biological synapses, often resulting in simplified approximations that limit learning capabilities.
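A common way to approximate LTP and LTD in hardware is the pair-based exponential STDP window: with Δt = t_post - t_pre, a pair with Δt > 0 potentiates by A+ · exp(-Δt/τ+) and a pair with Δt < 0 depresses by A- · exp(Δt/τ-). The sketch below uses hypothetical amplitudes, time constants, and hard weight bounds; hardware designs often evaluate the same window with decaying spike-trace circuits or lookup tables rather than explicit exponentials.

```python
import math

# Hypothetical pair-based STDP parameters (not from any specific chip).
A_PLUS, A_MINUS = 0.010, 0.012     # LTP / LTD amplitudes
TAU_PLUS, TAU_MINUS = 20.0, 20.0   # time constants in ms
W_MIN, W_MAX = 0.0, 1.0            # hard weight bounds

def stdp_delta(t_pre: float, t_post: float) -> float:
    """Weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:    # pre fires before post: LTP
        return A_PLUS * math.exp(-dt / TAU_PLUS)
    if dt < 0:    # post fires before pre: LTD
        return -A_MINUS * math.exp(dt / TAU_MINUS)
    return 0.0    # simultaneous spikes: no change in this sketch

def apply_pairs(w: float, pairs) -> float:
    """Apply (t_pre, t_post) pairs in order, clipping to [W_MIN, W_MAX]."""
    for t_pre, t_post in pairs:
        w = min(W_MAX, max(W_MIN, w + stdp_delta(t_pre, t_post)))
    return w

print(stdp_delta(10.0, 15.0) > 0)   # True: causal pair potentiates
print(stdp_delta(15.0, 10.0) < 0)   # True: anti-causal pair depresses
```

Setting A_MINUS slightly larger than A_PLUS, as here, is a choice often seen in STDP models because it biases uncorrelated activity toward depression; the hard clip stands in for the bounded range of a physical synapse.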

Power consumption poses another critical challenge. Neuromorphic systems aim to achieve brain-like efficiency, yet implementing on-chip learning mechanisms significantly increases power requirements. The continuous weight updates during learning phases consume substantial energy, particularly when implementing spike-timing-dependent plasticity (STDP) variants of Hebbian learning that require precise timing circuits and complex weight update mechanisms.

Scalability issues further complicate implementation efforts. As network size increases, the hardware resources required for implementing learning rules grow quickly: in a fully connected layer, the number of plastic synapses, and with it the weight storage and update work, scales with the product of the pre- and post-synaptic neuron counts. Current architectures face limitations in terms of the number of plastic synapses they can support while maintaining real-time operation and reasonable power budgets. This creates a fundamental trade-off between network complexity and learning capability.

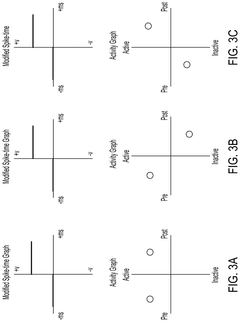

The stability-plasticity dilemma represents a fundamental challenge in Hebbian-based learning implementations. Hardware systems must balance the ability to learn new patterns (plasticity) while preserving previously acquired knowledge (stability). Without additional regulatory mechanisms, pure Hebbian learning tends to lead to runaway dynamics where synaptic weights saturate at their maximum or minimum values, resulting in unstable network behavior.
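The runaway effect, and one classic software-level remedy, fit in a few lines. For a linear neuron y = w · x, the plain Hebbian update Δw = η · y · x can never shrink ||w||: its squared norm grows by 2η·y² + η²·y²·||x||² on every step. Oja's rule, Δw = η · y · (x - y · w), adds a decay term that drives ||w|| toward 1. Oja's rule is used here only as a named example of weight normalization; the input distribution, learning rate, and step count are assumptions.

```python
import math
import random

def norm(v):
    """Euclidean length of a vector given as a list."""
    return math.sqrt(sum(x * x for x in v))

def train(rule: str, steps: int = 2000, lr: float = 0.01, seed: int = 0):
    """Train one linear neuron y = w . x on zero-mean 2-D Gaussian inputs."""
    rng = random.Random(seed)
    w = [0.5, 0.5]
    for _ in range(steps):
        x = [rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]
        y = sum(wi * xi for wi, xi in zip(w, x))
        if rule == "hebb":      # plain Hebb: dw = lr * y * x
            w = [wi + lr * y * xi for wi, xi in zip(w, x)]
        elif rule == "oja":     # Oja: dw = lr * y * (x - y * w)
            w = [wi + lr * y * (xi - y * wi) for wi, xi in zip(w, x)]
        else:
            raise ValueError(f"unknown rule: {rule}")
    return w

print(f"plain Hebb |w| = {norm(train('hebb')):.3g}")  # never decreases; huge after 2000 steps
print(f"Oja        |w| = {norm(train('oja')):.3f}")   # settles close to 1
```

In hardware the same idea usually appears in simpler forms, such as weight bounds, homeostatic scaling, or competing depression terms, but the contrast shows why some regulatory term is needed at all.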

Precision and variability in manufacturing processes introduce additional complications. Analog implementations of synaptic weights are particularly susceptible to device mismatch and parameter variations, which can significantly impact learning performance. Digital implementations offer better precision but come at the cost of increased area and power consumption.

Finally, there is a significant gap between theoretical models of Hebbian learning and practical hardware implementations. Many theoretical models assume idealized conditions that cannot be realized in physical systems, requiring substantial modifications and compromises when translating to hardware designs. This theory-implementation gap necessitates novel approaches that can preserve the essential computational properties while accommodating hardware constraints.

Current On-Chip Hebbian Learning Architectures

01 Implementation of Hebbian learning in neuromorphic hardware

Neuromorphic hardware systems can implement Hebbian learning rules to enable on-chip learning capabilities. These systems use specialized circuits that mimic the biological processes of synaptic plasticity, allowing for weight adjustments based on the correlation between pre-synaptic and post-synaptic neuron activities. This approach enables efficient, low-power learning directly on the hardware without requiring external processing.

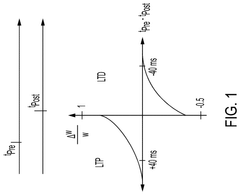

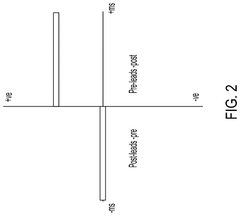

- Spike-timing-dependent plasticity (STDP) for on-chip learning: Spike-timing-dependent plasticity (STDP) is a specific form of Hebbian learning implemented in neuromorphic hardware for on-chip learning. STDP adjusts synaptic weights based on the precise timing between pre-synaptic and post-synaptic spikes, strengthening connections when pre-synaptic spikes precede post-synaptic ones and weakening them otherwise. This temporal learning mechanism enables efficient pattern recognition and classification directly on neuromorphic chips.

- Memristive devices for Hebbian learning implementation: Memristive devices are used to implement Hebbian learning rules in neuromorphic hardware. These devices change their resistance based on the history of current flow, making them well suited to representing synaptic weights. When integrated into neuromorphic circuits, memristors enable efficient on-chip learning by physically embodying the weight changes required by Hebbian learning rules, resulting in low power consumption and high-density integration for neural networks.

- Hardware-efficient learning algorithms for neuromorphic systems: Hardware-efficient adaptations of Hebbian learning algorithms are designed specifically for neuromorphic systems. These algorithms simplify traditional Hebbian rules to make them more amenable to hardware implementation while preserving their essential learning capabilities. Techniques include quantized weight updates, simplified activation functions, and reduced precision computations that maintain learning performance while significantly reducing hardware complexity and power consumption.

- Integrated learning and inference in neuromorphic processors: Neuromorphic processors integrate both learning and inference capabilities on the same chip using Hebbian-based rules. This integration allows for continuous learning during operation, enabling the system to adapt to new data without requiring offline training. The architecture typically includes specialized circuits for weight updates alongside neural processing elements, creating a complete system that can learn from and respond to its environment in real-time.
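The quantized weight updates mentioned in the list above raise a practical problem: a Hebbian increment smaller than one quantization step is lost under round-to-nearest. Stochastic rounding is one widely used workaround, named here as an illustrative choice rather than the method of any particular chip: the weight moves up one level with probability equal to the fractional part, so the expected update matches the exact one. The function name, 4-bit grid, weight range, and update size below are assumptions.

```python
import random

def quantize_stochastic(w: float, delta: float, bits: int = 4,
                        w_max: float = 1.0, rng=random) -> float:
    """Apply delta to a weight stored on a uniform grid of 2**bits levels
    spanning [0, w_max], rounding stochastically to a neighbouring level."""
    levels = 2 ** bits - 1          # index of the top level (15 for 4 bits)
    step = w_max / levels           # spacing between adjacent levels
    target = min(w_max, max(0.0, w + delta)) / step  # ideal position, in steps
    lower = int(target)             # floor, since target >= 0
    frac = target - lower           # distance above the lower level
    level = lower + (1 if rng.random() < frac else 0)
    return min(levels, level) * step

rng = random.Random(42)
trials = 20000
w0 = 7 / 15  # a weight lying exactly on the 4-bit grid (step about 0.067)
mean = sum(quantize_stochastic(w0, 0.01, rng=rng) for _ in range(trials)) / trials
print(f"average applied update: {mean - w0:.4f}")  # close to 0.0100
print(round((w0 + 0.01) * 15) / 15 == w0)          # True: nearest rounding drops it
```

The trade-off is added noise on each individual update in exchange for an unbiased average, which is one reason low-precision learning schemes sometimes use stochastic rounding or probabilistic updates.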

02 Spike-timing-dependent plasticity (STDP) for on-chip learning

Spike-timing-dependent plasticity (STDP) is a specific form of Hebbian learning implemented in neuromorphic hardware. STDP adjusts synaptic weights based on the precise timing between pre-synaptic and post-synaptic spikes, strengthening connections when pre-synaptic activity precedes post-synaptic firing and weakening them otherwise. This temporal learning mechanism enables efficient pattern recognition and classification directly on neuromorphic chips.

03 Memristive devices for implementing Hebbian learning

Memristive devices are used to implement Hebbian-based learning rules in neuromorphic hardware. These devices can change their resistance based on the history of applied voltage or current, making them ideal for modeling synaptic plasticity. By integrating memristors into neuromorphic circuits, systems can achieve efficient on-chip learning with low power consumption and high density, enabling the implementation of complex neural networks directly in hardware.

04 Hardware-software co-design for neuromorphic learning

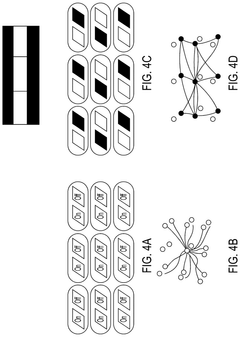

Hardware-software co-design approaches optimize the implementation of Hebbian learning in neuromorphic systems. These methods involve developing specialized hardware architectures alongside tailored algorithms that efficiently implement learning rules while managing constraints like power consumption and chip area. This integrated approach enables more effective on-chip learning by balancing computational capabilities with hardware limitations.

05 Scalable architectures for on-chip Hebbian learning

Scalable neuromorphic architectures enable the implementation of Hebbian learning across large networks. These designs incorporate modular components, efficient communication protocols, and distributed learning mechanisms to maintain performance as the network size increases. Such architectures address challenges related to connectivity, weight storage, and update mechanisms, allowing for the deployment of complex learning systems that can adapt to various applications while maintaining energy efficiency.

Leading Organizations in Neuromorphic Hardware Development

On-Chip Learning with Hebbian-based Rules in Neuromorphic Hardware is currently in an early growth phase, with the market expected to expand significantly as AI hardware acceleration demands increase. The global neuromorphic computing market is projected to reach $8-10 billion by 2028, driven by edge AI applications requiring energy-efficient learning capabilities. Technology maturity varies across players: IBM, Samsung, and HP are advancing commercial neuromorphic platforms, while Syntiant and SilicoSapien are developing specialized Hebbian-based chips for edge applications. Academic institutions like Tsinghua University and KAUST are contributing fundamental research, while Toyota and Boeing explore industrial applications. The ecosystem is characterized by a mix of established semiconductor companies and specialized startups collaborating with research institutions to overcome implementation challenges in hardware-based learning systems.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed neuromorphic processing units (NPUs) that incorporate on-chip Hebbian learning capabilities using their advanced semiconductor manufacturing technologies. Their approach combines traditional digital processing elements with analog computing circuits specifically designed for implementing synaptic plasticity rules. Samsung's neuromorphic chips feature a hierarchical memory architecture that enables efficient weight updates based on temporal correlations between neural activations. The company has implemented various Hebbian-based learning rules, including STDP and BCM (Bienenstock-Cooper-Munro) rules, directly in hardware using their proprietary resistive RAM (RRAM) technology. Samsung's implementation achieves power efficiency improvements of up to 50x compared to conventional deep learning accelerators while maintaining comparable accuracy for pattern recognition tasks[4]. Their architecture includes specialized timing circuits that detect coincident neural activations to trigger appropriate weight modifications according to Hebbian principles. Samsung has demonstrated successful on-chip learning for image classification and speech recognition applications using their neuromorphic hardware platform.

Strengths: Integration with advanced semiconductor manufacturing capabilities; balanced performance between accuracy and energy efficiency; potential for mass production. Weaknesses: Less specialized than pure neuromorphic players; higher power consumption than some dedicated neuromorphic solutions; still in research phase for many applications.
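The BCM rule named in Samsung's description differs from plain Hebbian learning in one key way: with Δw = η · x · y · (y - θ), post-synaptic activity below a threshold θ depresses a synapse and activity above it potentiates, while θ itself slides toward the recent average of y². The discrete-time sketch below illustrates that textbook form for a single linear neuron. It is not Samsung's implementation; the function name, learning rate, threshold time constant, and non-negative weight clamp are assumptions.

```python
def bcm_step(w, x, theta, lr=0.01, tau_theta=20.0):
    """One discrete-time BCM update for a single linear neuron.

    w, x: lists of weights and presynaptic rates; theta: sliding threshold.
    Returns (new_w, new_theta, y).
    """
    y = sum(wi * xi for wi, xi in zip(w, x))
    phi = y * (y - theta)          # for y > 0: negative below theta, positive above
    # Clamp at zero: an assumption (e.g. excitatory-only synapses).
    new_w = [max(0.0, wi + lr * xi * phi) for wi, xi in zip(w, x)]
    new_theta = theta + (y * y - theta) / tau_theta   # low-pass filter of y**2
    return new_w, new_theta, y

# Present the same input pattern repeatedly; the second input stays silent.
w, theta = [0.5, 0.5], 0.0
for _ in range(5000):
    w, theta, y = bcm_step(w, [1.0, 0.0], theta)
print(round(y, 2), round(theta, 2))
```

For a single repeated pattern this textbook form settles at y = θ = 1: below that point potentiation wins, and above it the rising threshold causes depression. That self-limiting behavior is what distinguishes BCM from the unbounded growth of plain Hebbian updates.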

International Business Machines Corp.

Technical Solution: IBM developed the TrueNorth neuromorphic chip architecture. TrueNorth itself performs inference with synaptic weights trained off-chip, so IBM's work on on-chip Hebbian learning has come from separate research hardware that integrates spike-timing-dependent plasticity (STDP) mechanisms directly into the chip, allowing real-time synaptic weight adjustments. This work uses crossbar array architectures with non-volatile memory elements that can implement Hebbian learning rules at low power. IBM's implementations include specialized circuits for detecting temporal correlations between pre- and post-synaptic neurons, enabling unsupervised learning directly on the chip. Their recent advancements have demonstrated on-chip training for pattern recognition tasks with energy efficiency improvements of up to 1000x compared to conventional computing approaches[1]. IBM has also developed a programming framework that allows researchers to implement various Hebbian-based learning rules tailored to specific applications, making their neuromorphic hardware adaptable to different cognitive computing tasks.

Strengths: Industry-leading energy efficiency for on-chip learning; mature development ecosystem; proven scalability to complex applications. Weaknesses: Higher manufacturing costs compared to conventional chips; requires specialized programming knowledge; limited compatibility with existing AI frameworks.

Breakthrough Patents in Synaptic Plasticity Implementation

Neuromorphic artificial neural network architecture based on distributed binary (all-or-non) neural representation

Patent Pending: US20240403619A1

Innovation

- An artificial neuromorphic neural network architecture that associates qualitative elements of sensory inputs through distributed qualitative neural representations and replicates neural selectivity, using a Hebbian-based learning method with modified spike-timing-dependent synaptic plasticity to form and strengthen connections.

Information processing device, information processing method, and program

Patent: WO2023210816A1

Innovation

- A one-layer neural network comprising a feedforward circuit applying a spatio-temporal learning rule and a feedback recursive circuit using Hebbian learning, which balances cooperation ratio and learning speed parameters to enhance pattern separation and completion, mimicking the human brain's information processing.

Energy Efficiency Considerations for On-Chip Learning

Energy efficiency represents a critical consideration in the development and implementation of on-chip learning systems utilizing Hebbian-based rules in neuromorphic hardware. The power consumption profile of these systems directly impacts their viability for deployment in resource-constrained environments such as edge computing devices, IoT nodes, and mobile platforms.

Traditional von Neumann architectures face significant energy inefficiencies when implementing neural network operations due to the constant data movement between processing and memory units, commonly referred to as the "memory wall" problem. Neuromorphic hardware with on-chip learning capabilities offers a promising solution by collocating computation and memory, substantially reducing energy costs associated with data transfer.

Hebbian-based learning rules present particular advantages from an energy perspective. Their local nature—where weight updates depend only on pre- and post-synaptic neuron activities—eliminates the need for global information propagation across the network. This locality principle translates to reduced communication overhead and lower power consumption compared to backpropagation-based approaches that require extensive gradient calculations and distribution.

Recent implementations have demonstrated remarkable energy efficiency metrics. For instance, IBM's TrueNorth architecture achieves approximately 26 pJ per synaptic operation, while Intel's Loihi chip operates at around 23.6 pJ per synaptic event. These figures represent orders of magnitude improvement over conventional GPU implementations of neural networks.
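Per-event energies convert directly into a power figure, P ≈ E_event × event rate. The short calculation below applies the two numbers quoted above to a hypothetical workload of 10^9 synaptic events per second; the workload is an assumption, and the estimate ignores any power not captured by the per-event figures.

```python
# Per-synaptic-event energies quoted in the text (picojoules).
ENERGY_PJ = {"TrueNorth": 26.0, "Loihi": 23.6}

def dynamic_power_mw(energy_pj: float, events_per_s: float) -> float:
    """Power in milliwatts for a given per-event energy and event rate."""
    return energy_pj * 1e-12 * events_per_s * 1e3

# Hypothetical workload: one billion synaptic events per second.
for chip, e in ENERGY_PJ.items():
    print(chip, round(dynamic_power_mw(e, 1e9), 1), "mW")
# TrueNorth 26.0 mW
# Loihi 23.6 mW
```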

The energy efficiency of on-chip Hebbian learning systems can be further optimized through several approaches. Sparse activation patterns, where only a small subset of neurons fire at any given time, significantly reduce dynamic power consumption. Additionally, implementing variable precision computation allows the system to adapt its energy expenditure based on task requirements—using lower precision for less critical operations while maintaining higher precision where necessary.

Emerging non-volatile memory technologies, such as resistive RAM (RRAM), phase-change memory (PCM), and magnetic RAM (MRAM), offer promising pathways for further energy optimization. These technologies enable persistent storage of synaptic weights without static power consumption and can directly implement Hebbian plasticity mechanisms through their inherent physical properties, potentially reducing energy requirements for weight updates by 10-100× compared to CMOS-based implementations.
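A common phenomenological description of analog resistive synapses is a nonlinear, state-dependent conductance update: each potentiating pulse raises the conductance G by an amount that shrinks as G approaches its maximum, and each depressing pulse lowers it by an amount that shrinks near its minimum. The sketch below uses a simple soft-bound model of this kind; the model form, names, and values are illustrative assumptions, not measured RRAM, PCM, or MRAM characteristics.

```python
# Illustrative soft-bound model of an analog resistive synapse.
# All constants are assumptions, not measured device parameters.
G_MIN, G_MAX = 1.0, 10.0   # conductance range in microsiemens (assumed)
ALPHA = 0.1                # fraction of remaining headroom per pulse (assumed)

def apply_pulse(g: float, potentiate: bool) -> float:
    """Return the conductance after one programming pulse."""
    if potentiate:
        return g + ALPHA * (G_MAX - g)   # step shrinks as g nears G_MAX
    return g - ALPHA * (g - G_MIN)       # step shrinks as g nears G_MIN

g = G_MIN
trace = []
for _ in range(20):                      # 20 identical potentiating pulses
    g = apply_pulse(g, potentiate=True)
    trace.append(g)

print([round(v, 2) for v in trace[:3]], round(trace[-1], 2))
# [1.9, 2.71, 3.44] 8.91
```

Because identical pulses produce shrinking steps, the same Hebbian "increment" has a different effect depending on the current weight, one concrete source of the theory-implementation gap described earlier.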

Event-driven computation represents another significant energy-saving strategy in neuromorphic systems. By processing information only when relevant changes occur rather than at fixed clock cycles, these systems can remain in low-power states during periods of inactivity, dramatically reducing overall energy consumption in real-world applications where input data often arrives sporadically.
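Sparse activation and event-driven processing both make the work proportional to activity rather than to network size. The sketch below counts synaptic operations for a hypothetical fully connected layer (layer sizes, spike probability, and tick count are assumptions): a clock-driven design touches every synapse on every tick, while an event-driven one only processes the fan-out of inputs that actually spiked.

```python
import random

def count_ops(n_pre=1000, n_post=100, steps=1000, p_spike=0.02, seed=1):
    """Count synaptic operations for clock-driven vs event-driven updates.

    Each of n_pre inputs spikes independently with probability p_spike per
    tick and fans out to all n_post neurons (a fully connected layer).
    """
    rng = random.Random(seed)
    clocked = n_pre * n_post * steps          # every synapse, every tick
    event_driven = 0
    for _ in range(steps):
        n_spikes = sum(1 for _ in range(n_pre) if rng.random() < p_spike)
        event_driven += n_spikes * n_post     # only fan-out of active inputs
    return clocked, event_driven

clocked, event = count_ops()
print(f"event-driven work: {event / clocked:.1%} of clock-driven")  # roughly p_spike
```

Operation count is only a proxy for energy, since idle circuits still draw static power, but it shows why sparse, sporadic inputs favor event-driven designs.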

Traditional von Neumann architectures face significant energy inefficiencies when implementing neural network operations due to the constant data movement between processing and memory units, commonly referred to as the "memory wall" problem. Neuromorphic hardware with on-chip learning capabilities offers a promising solution by collocating computation and memory, substantially reducing energy costs associated with data transfer.

Hebbian-based learning rules present particular advantages from an energy perspective. Their local nature—where weight updates depend only on pre- and post-synaptic neuron activities—eliminates the need for global information propagation across the network. This locality principle translates to reduced communication overhead and lower power consumption compared to backpropagation-based approaches that require extensive gradient calculations and distribution.

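Published figures for existing chips illustrate this efficiency. IBM's TrueNorth architecture achieves approximately 26 pJ per synaptic event, while Intel's Loihi chip reports around 23.6 pJ per synaptic spike operation. These figures describe synaptic operations during inference rather than learning updates: TrueNorth has no on-chip learning, whereas Loihi supports on-chip synaptic plasticity. They are often cited as orders of magnitude lower than the energy per operation of conventional GPU implementations of neural networks, although direct comparisons depend on the workload and on how operations are counted.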
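Per-event energies translate into workload energy by simple multiplication. The sketch below applies the two figures quoted above to a hypothetical workload of one billion synaptic events; it ignores static power, neuron updates, learning updates, and I/O, so it gives a lower bound on synaptic energy rather than a full system estimate.

```python
def energy_joules(synaptic_events, pj_per_event):
    """Total synaptic energy for a workload at a fixed per-event cost.

    1 pJ = 1e-12 J. Static power and non-synaptic costs are not included.
    """
    return synaptic_events * pj_per_event * 1e-12


if __name__ == "__main__":
    events = 1e9  # hypothetical workload size
    print(energy_joules(events, 26.0))   # TrueNorth figure: about 0.026 J
    print(energy_joules(events, 23.6))   # Loihi figure: about 0.0236 J
```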

Benchmarking Frameworks for Neuromorphic Systems

Benchmarking frameworks play a crucial role in evaluating and comparing the performance of neuromorphic systems implementing on-chip learning with Hebbian-based rules. These frameworks provide standardized methodologies, metrics, and datasets that enable researchers and developers to assess the efficiency, accuracy, and scalability of their neuromorphic hardware implementations.

The neuromorphic community has developed several specialized tools tailored to the unique characteristics of brain-inspired computing systems. snnTorch and Norse are PyTorch-based Python libraries for simulating and training spiking neural networks. Although they are simulators rather than dedicated benchmarking suites, they provide neuron models and network building blocks that researchers use to prototype and evaluate learning rules, including Hebbian-type plasticity, in software before moving to neuromorphic hardware.

For hardware-oriented evaluation, toolchains such as SNN-TB (the Spiking Neural Network Toolbox) can deploy the same network to several simulator and hardware backends, allowing parameters such as energy consumption, processing speed, and accuracy to be compared across neuromorphic platforms. Because SNN-TB is primarily an ANN-to-SNN conversion tool, however, it evaluates converted networks rather than on-chip Hebbian learning itself, so learning-specific measures such as convergence rate require additional experiments on each platform.

The Neuromorphic Benchmarks Initiative (NBI) has established standardized datasets and evaluation protocols specifically targeting on-chip learning capabilities. These include pattern recognition tasks, temporal sequence learning, and unsupervised feature extraction challenges that effectively test the Hebbian learning mechanisms implemented in hardware. The initiative promotes reproducibility and fair comparison across different neuromorphic systems.

Performance metrics in these frameworks typically include energy efficiency (measured in operations per joule), learning speed (iterations to convergence), memory footprint, and task-specific accuracy. More sophisticated benchmarks also evaluate the system's resilience to noise, ability to adapt to changing input distributions, and scalability of the learning rules as network size increases.
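Two of these metrics can be computed directly from a logged run. In the sketch below, `ops_per_joule` follows the definition in the text, while the convergence criterion in `iterations_to_convergence` (accuracy at or above a target for `patience` consecutive iterations) is an assumed rule for illustration; individual benchmark suites define convergence in their own ways.

```python
def ops_per_joule(synaptic_ops, energy_j):
    """Energy efficiency as synaptic operations per joule."""
    return synaptic_ops / energy_j


def iterations_to_convergence(accuracy_per_iter, target, patience=3):
    """Return the 0-based iteration at which accuracy first reaches `target`
    and then stays there for `patience` consecutive iterations.

    Returns None if the criterion is never met. The patience rule is an
    assumed definition of convergence, not a standard one.
    """
    run = 0
    for i, acc in enumerate(accuracy_per_iter):
        run = run + 1 if acc >= target else 0
        if run == patience:
            return i - patience + 1
    return None


if __name__ == "__main__":
    accs = [0.50, 0.80, 0.91, 0.89, 0.92, 0.93, 0.95]
    print(iterations_to_convergence(accs, target=0.90))  # 4 (the 0.91 at index 2 does not persist)
    print(ops_per_joule(2e9, 0.5))                        # 4e9 operations per joule
```

Requiring the target to hold for several iterations keeps a single noisy spike in accuracy from being reported as convergence.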

Recent advancements in benchmarking frameworks have incorporated neuromorphic-specific metrics such as spike efficiency, temporal encoding capacity, and synaptic plasticity dynamics. These metrics provide deeper insights into how effectively Hebbian-based learning rules are implemented in hardware and how they compare to theoretical models and biological systems.

Cross-platform benchmarking tools like NeuroTools and BrainScaleS Benchmarking Suite enable direct comparison between different neuromorphic hardware implementations, from custom ASIC designs to FPGA-based systems, highlighting the strengths and limitations of various approaches to implementing Hebbian learning on silicon.
