Principles of Spiking Neural Networks (SNNs) for Neuromorphic Systems.

SEP 2, 2025 · 9 MIN READ

SNN Fundamentals and Research Objectives

Spiking Neural Networks (SNNs) represent a biologically inspired computing paradigm that mimics the functioning of the brain more closely than traditional artificial neural networks. Unlike conventional neural networks that process continuous values, SNNs communicate using discrete spikes (action potentials), as biological neurons do. This fundamental difference allows SNNs to process temporal information efficiently and, on event-driven hardware, with substantially lower power consumption, making them particularly suitable for neuromorphic computing systems.

The modeling foundations of SNNs date back more than a century. Lapicque's integrate-and-fire model (1907) treated the neuron as a capacitor that fires once its voltage reaches a threshold, and Hodgkin and Huxley's 1952 model provided the first quantitative, biophysically detailed account of action potential generation. Simpler models such as the Leaky Integrate-and-Fire (LIF) neuron and the Izhikevich model (2003) trade biophysical detail for computational efficiency while retaining key spiking behavior. Over the past decade, SNNs have gained substantial attention due to advances in neuromorphic hardware and the growing demand for energy-efficient computing on edge devices.
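The LIF model reduces a neuron to a single membrane equation, tau_m dV/dt = -(V - V_rest) + R_m I(t), plus a spike-and-reset rule whenever V crosses a threshold. The following minimal sketch integrates it with forward Euler for a constant input current; the parameter values (20 ms time constant, unit threshold, 0.1 ms step) are illustrative assumptions rather than values taken from this article.

```python
def simulate_lif(current, t_max=0.2, dt=1e-4, tau_m=0.02,
                 r_m=1.0, v_rest=0.0, v_reset=0.0, v_th=1.0):
    """Simulate a leaky integrate-and-fire neuron driven by a constant current.

    Integrates tau_m * dV/dt = -(V - v_rest) + r_m * current with forward Euler
    and returns the list of spike times in seconds.
    """
    v = v_rest
    spike_times = []
    n_steps = int(round(t_max / dt))
    for step in range(n_steps):
        # Leak toward the resting potential plus the input drive.
        v += (dt / tau_m) * (-(v - v_rest) + r_m * current)
        if v >= v_th:
            # Threshold crossing: record a spike and reset the membrane.
            spike_times.append(step * dt)
            v = v_reset
    return spike_times


if __name__ == "__main__":
    for i_in in (0.9, 1.5, 3.0):
        spikes = simulate_lif(i_in)
        print(f"I = {i_in}: {len(spikes)} spikes in 200 ms")
```

With R_m I below threshold (0.9 here) the membrane settles at 0.9 and never fires; above it, the neuron fires regularly with an interspike interval close to tau_m ln(R_m I / (R_m I - V_th)), about 22 ms for I = 1.5, and stronger currents fire faster.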

Current research in SNNs focuses on several key objectives. First, developing more efficient training algorithms remains crucial, as traditional backpropagation methods are not directly applicable to spiking networks due to the non-differentiable nature of spike events. Approaches such as surrogate gradient methods, spike-timing-dependent plasticity (STDP), and ANN-to-SNN conversion techniques are being actively explored to address this challenge.
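Surrogate gradient methods keep the hard threshold in the forward pass but substitute a smooth pseudo-derivative in the backward pass. The sketch below, in plain Python, uses the derivative of a fast sigmoid (one common choice; the steepness beta = 10 is an assumption) and trains a single hypothetical weight on a toy one-step problem. Real frameworks apply the same idea inside automatic differentiation across many neurons and time steps.

```python
def spike_fn(v, v_th=1.0):
    """Forward pass: Heaviside step. Its true derivative is zero almost everywhere."""
    return 1.0 if v >= v_th else 0.0


def surrogate_grad(v, v_th=1.0, beta=10.0):
    """Backward pass: fast-sigmoid derivative 1 / (1 + beta * |v - v_th|)^2."""
    return 1.0 / (1.0 + beta * abs(v - v_th)) ** 2


def train_single_weight(x=1.0, w=0.2, target=1.0, lr=1.0, max_steps=100):
    """Toy problem: learn w so that the membrane v = w * x crosses threshold.

    Loss L = (spike_fn(v) - target)^2. The chain rule uses surrogate_grad in
    place of the Heaviside derivative, so the gradient is non-zero even while
    the neuron is still silent.
    """
    for step in range(max_steps):
        v = w * x
        s = spike_fn(v)
        if s == target:
            return w, step
        dloss_ds = 2.0 * (s - target)
        grad_w = dloss_ds * surrogate_grad(v) * x
        w -= lr * grad_w
    return w, max_steps


if __name__ == "__main__":
    w, steps = train_single_weight()
    print(f"learned w = {w:.3f} after {steps} updates")
```

With the exact Heaviside derivative the weight would never move from 0.2; the surrogate supplies a gradient that grows as the membrane approaches threshold.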

Second, researchers aim to enhance the computational capabilities of SNNs to match or exceed those of conventional deep learning models while maintaining their energy efficiency advantages. This includes improving their performance on complex tasks such as image classification, speech recognition, and reinforcement learning problems.

Third, bridging the gap between neuromorphic hardware implementations and SNN algorithms represents another critical research direction. This involves developing architectures and algorithms that can fully leverage the unique characteristics of neuromorphic chips like IBM's TrueNorth, Intel's Loihi, and BrainChip's Akida.

The long-term vision for SNN research extends beyond mere computational efficiency. These networks offer the potential to advance our understanding of brain function and cognition, potentially leading to more robust AI systems capable of unsupervised learning, adaptation, and reasoning. Additionally, SNNs may enable new applications in brain-machine interfaces, neuroprosthetics, and neuromorphic sensors that can process information in real-time with minimal power requirements.

As we move forward, integrating SNNs with other emerging technologies such as quantum computing and novel materials for neuromorphic devices could unlock unprecedented computational capabilities while maintaining the energy efficiency that makes these networks so promising for future computing paradigms.

Market Applications for Neuromorphic Computing

Neuromorphic computing represents a revolutionary approach to information processing that mimics the structure and function of the human brain. The market for this technology is expanding rapidly across multiple sectors, driven by increasing demands for energy-efficient computing solutions capable of handling complex AI workloads.

The healthcare industry presents one of the most promising application areas for neuromorphic systems. These technologies enable real-time processing of biosignals, facilitating advanced prosthetics that respond naturally to neural impulses. Brain-computer interfaces powered by SNNs offer unprecedented capabilities for patients with mobility impairments. Additionally, neuromorphic systems excel at pattern recognition in medical imaging, potentially revolutionizing early disease detection through more efficient processing of complex diagnostic data.

Autonomous vehicles constitute another significant market opportunity. Neuromorphic processors can process visual and sensor data with high energy efficiency, supporting the real-time decision-making that navigation and obstacle avoidance require. The event-driven nature of SNNs is well suited to the rapidly changing environments autonomous vehicles must navigate, potentially offering advantages in both performance and power consumption over traditional computing architectures.

The Internet of Things (IoT) ecosystem represents a vast potential market for neuromorphic computing. Edge devices with severe power constraints can benefit tremendously from the energy efficiency of SNN-based processors. Applications range from smart home systems to industrial sensors that require continuous operation with minimal power consumption. Market analysts project substantial growth in this segment as manufacturers seek solutions to extend battery life while enhancing computational capabilities.

In robotics, neuromorphic systems enable more sophisticated sensorimotor integration and adaptive learning. Robots equipped with SNN-based processors can perform better in unstructured environments by learning from experience rather than relying solely on pre-programmed responses. This capability opens new possibilities for collaborative robots in manufacturing, service robots in commercial settings, and exploration robots in hazardous environments.

Defense and security applications represent another significant market segment. Neuromorphic systems excel at anomaly detection and pattern recognition tasks critical for surveillance and threat identification. Their low power requirements make them ideal for deployment in remote or mobile security systems where energy availability is limited.

The consumer electronics sector is beginning to explore neuromorphic computing for next-generation smart devices. Applications include more natural voice and facial recognition systems, context-aware personal assistants, and augmented reality devices that can process environmental data with minimal latency and power consumption.

SNN Technical Barriers and Global Development Status

Spiking Neural Networks (SNNs) face several significant technical barriers that currently limit their widespread adoption in neuromorphic computing systems. The primary challenge lies in the development of efficient training algorithms. Unlike traditional artificial neural networks that use backpropagation, SNNs operate with discrete spike events, making gradient-based optimization difficult. Current approaches such as SpikeProp and surrogate gradient methods show promise but still struggle with deep architectures and temporal dynamics.

Hardware implementation presents another substantial barrier. Neuromorphic chips designed for SNNs require specialized circuits to model biological neurons accurately while maintaining energy efficiency. Current designs like IBM's TrueNorth and Intel's Loihi make trade-offs between biological fidelity and computational efficiency, limiting their application scope. Additionally, the lack of standardized hardware platforms hampers software development and algorithm testing.

Energy efficiency, while theoretically superior in SNNs, remains challenging to fully realize in practical implementations. The event-driven nature of spike-based computation should reduce power consumption, but current hardware solutions still consume significant energy during idle periods and spike transmission. Optimizing these aspects requires innovations in both circuit design and system architecture.

Globally, SNN development shows distinct regional characteristics. The United States leads in neuromorphic hardware development through companies like Intel and IBM, alongside DARPA-funded research programs. The European Union has established strong research networks through initiatives like the Human Brain Project, focusing on biologically accurate neural models. In Asia, China has rapidly increased investments in neuromorphic computing, while Japan maintains expertise in specialized hardware through companies like NEC.

Academic research in SNNs is concentrated in institutions such as ETH Zurich, University of Manchester, and Stanford University. These centers focus on different aspects ranging from theoretical foundations to practical applications. Industry participation has grown significantly in the past five years, with technology companies establishing dedicated neuromorphic research teams.

The scalability of SNNs remains problematic: most current implementations are limited to thousands or millions of neurons, far from the roughly 86 billion neurons of the human brain. This limitation stems from both hardware constraints and algorithmic inefficiencies. Additionally, the lack of standardized benchmarks designed specifically for spike-based computation makes comparative evaluation difficult, hindering progress in the field.

Despite these challenges, recent advances in neuromorphic materials, event-based sensors, and hybrid computing approaches suggest promising pathways for overcoming current technical barriers in SNN implementation.

Current SNN Implementation Approaches

01 SNN Architecture and Implementation

Spiking Neural Networks (SNNs) are implemented with specific architectures that mimic biological neural systems. These implementations include hardware designs for neuromorphic computing, specialized circuits for spike processing, and architectural frameworks that enable efficient execution of spike-based computations. The architecture typically involves neurons that communicate through discrete spikes rather than continuous values, allowing for more biologically realistic neural processing and potentially more energy-efficient computation.

- Fundamental architecture and operation of SNNs: Spiking Neural Networks (SNNs) are biologically inspired neural networks that mimic the behavior of biological neurons by using discrete spikes for information processing. Unlike traditional artificial neural networks, SNNs can use temporal coding, in which information is encoded in the timing of spikes. This architecture allows for more efficient processing of temporal data and potentially lower power consumption, making SNNs suitable for neuromorphic computing applications.

- Learning algorithms and training methods for SNNs: Various learning algorithms have been developed specifically for training Spiking Neural Networks. These include spike-timing-dependent plasticity (STDP), backpropagation-based methods adapted for spiking neurons, and reinforcement learning approaches. These training methods address the challenges of the non-differentiable nature of spike events and enable SNNs to learn from temporal patterns in data, allowing them to perform complex recognition and classification tasks.

- Hardware implementations of SNNs: Specialized hardware architectures have been developed to efficiently implement Spiking Neural Networks. These neuromorphic computing systems are designed to leverage the event-driven nature of SNNs, resulting in significant improvements in energy efficiency compared to traditional computing architectures. These implementations include dedicated neuromorphic chips, FPGA-based designs, and specialized circuits that can process spike-based information in parallel with minimal power consumption.

- Applications of SNNs in pattern recognition and signal processing: Spiking Neural Networks have shown promising results in various pattern recognition and signal processing applications. Their ability to process temporal information makes them particularly suitable for tasks such as speech recognition, image classification, and time-series analysis. SNNs can efficiently encode and process sensory data, enabling more natural and efficient solutions for problems involving temporal patterns and real-time processing requirements.

- Integration of SNNs with other AI techniques: Recent advancements involve integrating Spiking Neural Networks with other artificial intelligence techniques to create hybrid systems that leverage the strengths of different approaches. These hybrid architectures combine SNNs with traditional deep learning, reinforcement learning, or evolutionary algorithms to enhance performance, efficiency, and adaptability. Such integrations enable more robust AI systems that can handle complex, real-world problems while maintaining energy efficiency.
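The STDP rule listed above adjusts a synapse according to the relative timing of pre- and postsynaptic spikes. A minimal pair-based sketch with exponential timing windows follows; the amplitudes and 20 ms time constants are illustrative assumptions, and the all-to-all pairing scheme used here is only one of several variants found in practice.

```python
import math


def stdp_dw(delta_t, a_plus=0.01, a_minus=0.012, tau_plus=0.020, tau_minus=0.020):
    """Weight change for one spike pair, with delta_t = t_post - t_pre in seconds.

    Pre-before-post (delta_t > 0) potentiates; post-before-pre depresses.
    """
    if delta_t > 0:
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0


def apply_stdp(w, pre_times, post_times, w_min=0.0, w_max=1.0, **window):
    """All-to-all pair-based STDP: sum over every pre/post pair, then clip."""
    for t_pre in pre_times:
        for t_post in post_times:
            w += stdp_dw(t_post - t_pre, **window)
    return min(max(w, w_min), w_max)


if __name__ == "__main__":
    print(apply_stdp(0.5, pre_times=[0.010], post_times=[0.015]))  # causal pair
    print(apply_stdp(0.5, pre_times=[0.015], post_times=[0.010]))  # anti-causal pair
```

Because the update depends only on locally available spike times, rules of this form are what on-chip learning engines typically implement in hardware.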

02 Learning and Training Methods for SNNs

Various learning algorithms and training methods have been developed specifically for Spiking Neural Networks. These include spike-timing-dependent plasticity (STDP), backpropagation adaptations for spiking neurons, reinforcement learning approaches, and unsupervised learning techniques. These methods enable SNNs to learn from temporal patterns in data and adapt their synaptic weights based on the timing of spikes, allowing for efficient processing of temporal information and event-based data.

03 Applications of SNNs in Pattern Recognition and Signal Processing

Spiking Neural Networks are particularly effective for pattern recognition tasks and signal processing applications. They excel at processing temporal data, recognizing patterns in time-series information, and handling event-based sensory inputs. Applications include image and speech recognition, anomaly detection, sensor data processing, and real-time signal analysis. The spike-based processing approach allows for efficient handling of sparse, temporal data patterns that are common in real-world sensing applications.

04 Energy-Efficient Computing with SNNs

One of the key advantages of Spiking Neural Networks is their potential for energy-efficient computing. By communicating through discrete spikes rather than continuous values, SNNs can significantly reduce power consumption compared to traditional artificial neural networks. This makes them particularly suitable for edge computing, IoT devices, and other applications where power efficiency is critical. Various hardware and software optimizations have been developed to further enhance the energy efficiency of SNN implementations.

05 Integration of SNNs with Conventional Neural Networks

Research has focused on integrating Spiking Neural Networks with conventional artificial neural networks to leverage the advantages of both approaches. These hybrid systems combine the energy efficiency and temporal processing capabilities of SNNs with the well-established training methods and performance of conventional networks. Techniques include conversion methods to transform trained conventional networks into spiking equivalents, hybrid architectures that incorporate both types of neurons, and specialized interfaces between spiking and non-spiking components.

Leading Organizations in SNN Research and Development

Spiking Neural Networks (SNNs) for neuromorphic systems are currently in an early growth phase, and the market is expected to expand significantly as demand for AI hardware increases. Some market analyses have projected the global neuromorphic computing market to reach $8-10 billion by 2025, growing at over 20% CAGR. Technologically, SNNs are advancing rapidly but remain less mature than conventional neural networks. Leading players include Intel, IBM, and Huawei; Intel and IBM have developed specialized neuromorphic chips such as Loihi and TrueNorth, respectively. Academic institutions such as Zhejiang University and Nanjing University collaborate with industry partners to bridge theoretical research and practical applications. Specialized startups such as Innatera Nanosystems and BrainChip are emerging with innovative SNN implementations, while established semiconductor companies including Micron and STMicroelectronics are integrating neuromorphic elements into their product roadmaps.

Intel Corp.

Technical Solution: Intel's Loihi neuromorphic research chip implements a programmable SNN architecture designed specifically for neuromorphic computing. Loihi features 128 neuromorphic cores, each supporting up to 1,024 spiking neurons (131,072 neurons in total), and 130 million synapses. The chip incorporates a programmable learning engine that supports various spike-timing-dependent plasticity (STDP) rules, enabling on-chip learning. Intel's implementation uses an asynchronous digital design in which any neuron can send spikes to any other neuron on the chip through a network-on-chip. The neurons follow a programmable leaky integrate-and-fire model with configurable parameters, including thresholds, refractory periods, and synaptic decay rates. Loihi 2, released in 2021, further advances these capabilities, with Intel reporting up to 10x faster processing and greater energy efficiency[3]. Intel has demonstrated Loihi solving complex optimization problems, performing gesture recognition, and learning new patterns in real time while consuming only tens of milliwatts of power. Intel has also reported that its neuromorphic systems can process information up to 1,000 times faster and 10,000 times more efficiently than conventional processors for certain specialized workloads[4].

Strengths: Highly programmable architecture allowing for flexible neuron models; on-chip learning capabilities through programmable learning rules; excellent energy efficiency for sparse, event-driven workloads. Weaknesses: Still primarily a research platform rather than a commercial product; requires specialized programming approaches different from mainstream AI frameworks; limited ecosystem of development tools compared to traditional computing platforms.
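The programmable leaky integrate-and-fire behavior described for Loihi can be approximated in discrete time with two decaying state variables, a synaptic current u and a membrane voltage v, plus a refractory counter. The sketch below is loosely modeled on published descriptions of Loihi's current-based LIF dynamics; the parameter names (weight, du, dv, refractory) and values are assumptions for illustration, and this is neither Intel's Lava API nor the chip's fixed-point implementation.

```python
def cuba_lif(input_spikes, weight=0.5, du=0.25, dv=0.1, v_th=1.0, refractory=2):
    """Discrete-time current-based LIF neuron (illustrative, hypothetical parameters).

    input_spikes: sequence of 0/1 presynaptic spikes, one per time step.
    du, dv: per-step decay fractions of the synaptic current and membrane voltage.
    refractory: number of steps the membrane is held at 0 after a spike.
    Returns the output spike train as a list of 0/1 values.
    """
    u = 0.0      # synaptic current
    v = 0.0      # membrane voltage
    ref = 0      # remaining refractory steps
    out = []
    for s in input_spikes:
        u = u * (1.0 - du) + weight * s        # synaptic decay plus weighted input
        if ref > 0:
            ref -= 1
            v = 0.0                            # membrane clamped while refractory
            out.append(0)
            continue
        v = v * (1.0 - dv) + u                 # membrane leak plus synaptic current
        if v >= v_th:
            out.append(1)
            v = 0.0
            ref = refractory
        else:
            out.append(0)
    return out


if __name__ == "__main__":
    print(cuba_lif([1] * 15))
```

The two time constants let the same neuron act as a fast coincidence detector (large du) or a slow integrator (small du and dv), which is the kind of per-neuron configurability the Loihi description refers to.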

Micron Technology, Inc.

Technical Solution: Micron's neuromorphic approach centers on its Automata Processor (AP) technology, a non-von Neumann architecture that shares several principles with SNNs rather than implementing spiking neurons directly. Instead of integrate-and-fire neurons, the AP relies on in-memory computing, with memory arrays serving as both storage and computational elements. The architecture is a fabric of configurable state-transition elements arranged into automata; like spiking neurons, these elements activate only in response to matching input events, which makes the design efficient for the sparse, event-driven computations characteristic of SNNs. The AP can evaluate each incoming symbol against thousands of patterns stored in memory simultaneously, making it particularly efficient for pattern recognition tasks[7]. Micron has demonstrated applications in genomic sequence analysis, network security, and image recognition. The technology achieves parallelism by having memory elements participate directly in computation rather than shuttling data between separate memory and processing units, which reduces the energy cost of data movement in traditional architectures. Micron has reported performance improvements of 100-3000x over conventional CPU implementations for certain pattern-matching workloads, with significantly lower power consumption[8].

Strengths: Highly parallel architecture well-suited for pattern recognition; eliminates memory-processor bottleneck through in-memory computing; excellent performance for certain classes of problems like regular expression matching. Weaknesses: Less flexible than general-purpose neuromorphic chips; more specialized application focus than other neuromorphic solutions; requires specific programming approaches different from mainstream neural network frameworks.
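The automata-style computation described above can be sketched in a few lines: each state-transition element (STE) matches a set of symbols, activates only when it is enabled and a matching symbol arrives, and enables its successors for the next symbol. The sketch below is a hypothetical software model of that idea (the class and function names are illustrative); it is not Micron's SDK, and on the hardware all elements are evaluated in parallel rather than in a Python loop.

```python
class STE:
    """State-transition element: activates when enabled and a matching symbol arrives."""

    def __init__(self, symbols, start=False, report=None):
        self.symbols = set(symbols)
        self.start = start        # start elements are enabled on every input symbol
        self.report = report      # label emitted when this element activates
        self.successors = []


def chain(pattern, report):
    """Build a linear chain of STEs that recognizes `pattern` anywhere in a stream."""
    stes = [STE(ch, start=(i == 0)) for i, ch in enumerate(pattern)]
    for a, b in zip(stes, stes[1:]):
        a.successors.append(b)
    stes[-1].report = report
    return stes


def run(stes, text):
    """Stream `text` one symbol at a time; return sorted (report, position) matches."""
    starts = [s for s in stes if s.start]
    enabled = set()
    matches = []
    for pos, ch in enumerate(text):
        # Only enabled or start elements whose symbol set contains ch become active.
        active = [s for s in enabled.union(starts) if ch in s.symbols]
        enabled = set()
        for s in active:
            if s.report is not None:
                matches.append((s.report, pos))
            enabled.update(s.successors)
    return sorted(matches, key=lambda m: (m[1], m[0]))


if __name__ == "__main__":
    network = chain("GAT", "GAT") + chain("ATT", "ATT")
    print(run(network, "GATTACA"))
```

Run on "GATTACA", this prints [('GAT', 2), ('ATT', 3)]: both patterns are tracked concurrently over a single pass, and work is done only by the elements that the current symbol activates.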

Critical SNN Algorithms and Learning Methods

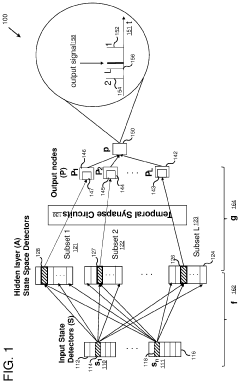

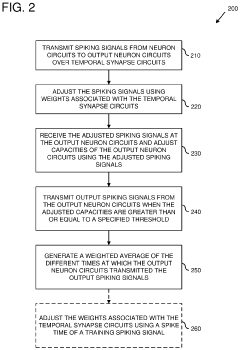

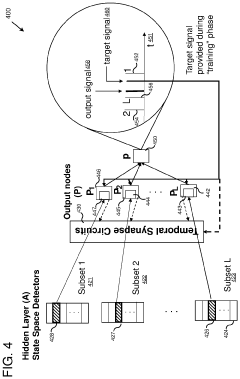

Spatio-temporal spiking neural networks in neuromorphic hardware systems

Patent (Active): US10671912B2

Innovation

- Implementing temporal and spatio-temporal SNNs using neuron circuits and temporal synapse circuits that encode information through spiking signals, allowing for dynamic weight adjustments and efficient information processing, which enables faster learning and energy-efficient operations.

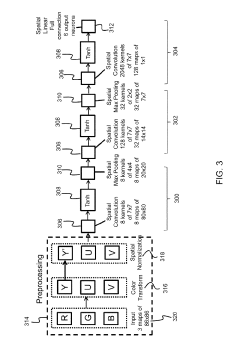

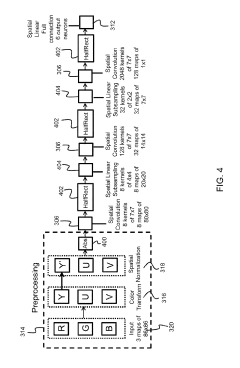

Method for neuromorphic implementation of convolutional neural networks

Patent (Active): US10387774B1

Innovation

- The system adapts a CNN architecture by making output values positive, removing biases, replacing sigmoid functions with half-wave rectification (HalfRect) functions, and using spatial linear subsampling instead of max-pooling, so that the converted SNN can be implemented on neuromorphic hardware with minimal performance loss.
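The intuition behind such adaptations is that, once biases are removed and activations are non-negative, a half-wave rectified unit can be replaced by a non-leaky integrate-and-fire neuron whose firing rate approximates the activation. The sketch below illustrates this under simplifying assumptions (constant input drive, reset by subtraction, unit threshold, activations already scaled into [0, 1]); the helper names are hypothetical, and it is not the method claimed in the patent.

```python
def half_rect(x):
    """HalfRect (ReLU): the activation used by the adapted CNN."""
    return max(0.0, x)


def if_rate(drive, v_th=1.0, n_steps=1000):
    """Firing rate (spikes per step) of a non-leaky IF neuron with constant input."""
    v = 0.0
    count = 0
    for _ in range(n_steps):
        v += drive
        if v >= v_th:
            v -= v_th        # reset by subtraction keeps the residual charge
            count += 1
    return count / n_steps


def layer(inputs, weights, nonlinearity):
    """Bias-free fully connected layer: out_j = f(sum_i w_ji * x_i)."""
    return [nonlinearity(sum(w * x for w, x in zip(row, inputs))) for row in weights]


def linear_subsample(values):
    """Spatial linear subsampling (average pooling) in place of max-pooling."""
    return sum(values) / len(values)


if __name__ == "__main__":
    x = [0.2, 0.5, 0.1]
    w = [[0.5, 0.8, -0.3], [-1.0, 0.2, 0.4], [0.3, 0.3, 0.3]]
    ann = layer(x, w, half_rect)      # analog activations
    snn = layer(x, w, if_rate)        # spike rates of the converted neurons
    print(ann, snn)
    print(linear_subsample(ann), linear_subsample(snn))
```

Negative drives never reach threshold, so the rate reproduces the rectification for free, while rates saturate at one spike per step (if_rate(2.0) returns 1.0). This saturation is one reason converted networks generally rescale weights or activations before deployment, and average pooling is used because it is a linear operation that rate-coded neurons can compute directly.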

Hardware-Software Co-design for SNNs

Effective implementation of Spiking Neural Networks (SNNs) in neuromorphic computing systems requires sophisticated hardware-software co-design approaches that optimize both computational efficiency and biological fidelity. This integration presents unique challenges due to the temporal dynamics and event-driven nature of SNNs, which differ fundamentally from traditional artificial neural networks.

Hardware platforms for SNNs must efficiently handle sparse, asynchronous spike events while minimizing power consumption. Current neuromorphic architectures like Intel's Loihi, IBM's TrueNorth, and SpiNNaker employ specialized circuits that mimic biological neurons and synapses, featuring distributed memory and parallel processing capabilities. These designs prioritize energy efficiency through event-driven computation, activating circuits only when spikes occur.
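The event-driven principle can be illustrated in software: synaptic work is done only for neurons that actually spiked, by walking each one's fan-out list. The sketch below counts those synaptic operations and compares them with a dense matrix-vector update; the network, weights, and function names are hypothetical, and neuromorphic chips implement this routing in hardware rather than in a loop.

```python
def event_driven_step(spiking, fanout, n_post):
    """Accumulate synaptic input only for presynaptic neurons that spiked.

    spiking: indices of presynaptic neurons that fired this time step.
    fanout:  dict mapping presynaptic index -> list of (postsynaptic index, weight).
    Returns the input current per postsynaptic neuron and the number of
    synaptic operations performed.
    """
    currents = [0.0] * n_post
    ops = 0
    for pre in spiking:
        for post, weight in fanout.get(pre, []):
            currents[post] += weight
            ops += 1
    return currents, ops


def dense_ops(n_pre, n_post):
    """A dense update touches every synapse regardless of activity."""
    return n_pre * n_post


if __name__ == "__main__":
    fanout = {0: [(1, 0.5), (2, 0.25)], 1: [(2, 1.0)], 2: []}
    currents, ops = event_driven_step([0], fanout, n_post=3)
    print(currents, ops, dense_ops(3, 3))
```

Here one spike costs 2 synaptic operations instead of the 9 a dense update would perform, and the saving grows with network size and sparsity of activity.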

Software frameworks must complement these hardware innovations through specialized programming models and simulation environments. TensorFlow-based SNN extensions, PyNN, and Brian provide abstraction layers that shield developers from hardware complexities while enabling efficient spike-based computation. The co-design challenge lies in balancing high-level programming accessibility with low-level hardware optimization.

Memory management represents a critical co-design consideration, as SNNs require efficient storage and retrieval of synaptic weights and neuronal states. On-chip memory hierarchies must be carefully designed to minimize data movement, while software must implement intelligent memory allocation strategies that exploit locality in neural connectivity patterns.

Spike encoding schemes form another essential co-design element, with rate coding, temporal coding, and population coding offering different trade-offs between information density and implementation complexity. The hardware must support the chosen encoding method through appropriate timing circuits and event-handling mechanisms, while software provides the algorithmic framework for encoding and decoding information.
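Rate and temporal coding can be compared with a minimal sketch: rate coding emits a spike at each time step with probability equal to the input intensity (a Bernoulli approximation of Poisson firing), while latency coding emits a single spike whose timing encodes the value, with stronger inputs firing earlier. Intensities are assumed to be normalized to [0, 1], and the linear intensity-to-latency mapping is one illustrative choice among several.

```python
import random


def rate_encode(intensity, n_steps, rng=None):
    """Rate coding: spike with probability `intensity` at every time step."""
    if rng is None:
        rng = random.Random(0)  # fixed seed for reproducibility
    p = min(max(intensity, 0.0), 1.0)
    return [1 if rng.random() < p else 0 for _ in range(n_steps)]


def latency_encode(intensity, n_steps):
    """Latency (time-to-first-spike) coding: one spike, earlier for larger inputs."""
    x = min(max(intensity, 0.0), 1.0)
    train = [0] * n_steps
    if x > 0.0:
        t_spike = int(round((1.0 - x) * (n_steps - 1)))
        train[t_spike] = 1
    return train


if __name__ == "__main__":
    for value in (0.1, 0.5, 0.9):
        rate_train = rate_encode(value, 1000)
        latency_train = latency_encode(value, 20)
        print(value, sum(rate_train), latency_train.index(1))
```

The trade-off mentioned above shows up directly: rate coding needs many spikes over many steps to represent a value precisely but tolerates lost spikes, whereas latency coding spends a single spike per input and instead depends on precise timing.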

Training methodologies for SNNs present unique co-design challenges due to the non-differentiable nature of spike events. Surrogate gradient training, spike-timing-dependent plasticity (STDP), and ANN-to-SNN conversion each require specific hardware support for efficient implementation, such as on-chip learning circuits or specialized gradient approximation units.

Successful hardware-software co-design for SNNs ultimately requires cross-disciplinary collaboration between neuromorphic hardware engineers, computational neuroscientists, and machine learning specialists. This integrated approach ensures that algorithmic innovations can be efficiently mapped to hardware constraints, while hardware designs evolve to better support emerging SNN algorithms and applications.

Hardware platforms for SNNs must efficiently handle sparse, asynchronous spike events while minimizing power consumption. Current neuromorphic architectures like Intel's Loihi, IBM's TrueNorth, and SpiNNaker employ specialized circuits that mimic biological neurons and synapses, featuring distributed memory and parallel processing capabilities. These designs prioritize energy efficiency through event-driven computation, activating circuits only when spikes occur.

Software frameworks must complement these hardware innovations through specialized programming models and simulation environments. TensorFlow-based SNN extensions, PyNN, and Brian provide abstraction layers that shield developers from hardware complexities while enabling efficient spike-based computation. The co-design challenge lies in balancing high-level programming accessibility with low-level hardware optimization.

Memory management represents a critical co-design consideration, as SNNs require efficient storage and retrieval of synaptic weights and neuronal states. On-chip memory hierarchies must be carefully designed to minimize data movement, while software must implement intelligent memory allocation strategies that exploit locality in neural connectivity patterns.
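One widely used way to exploit sparse connectivity is to store synapses in compressed sparse row (CSR) form, grouped by presynaptic neuron. Delivering a spike then reads one contiguous block of memory. The sketch below assumes integer neuron indices and is illustrative only. Actual neuromorphic chips use their own synapse memory layouts.

```python
def build_csr(n_pre, edges):
    """Pack (pre, post, weight) edges into CSR arrays grouped by presynaptic neuron."""
    row_ptr = [0] * (n_pre + 1)
    for pre, _, _ in edges:
        row_ptr[pre + 1] += 1          # count outgoing synapses per neuron
    for i in range(n_pre):
        row_ptr[i + 1] += row_ptr[i]   # prefix sum gives each row's start offset
    col_idx = [0] * len(edges)
    weights = [0.0] * len(edges)
    fill = list(row_ptr[:-1])          # next free slot in each row
    for pre, post, w in edges:
        k = fill[pre]
        col_idx[k] = post
        weights[k] = w
        fill[pre] += 1
    return row_ptr, col_idx, weights

def deliver_spikes(spiking, row_ptr, col_idx, weights, n_post):
    """Accumulate input current only for targets of neurons that spiked."""
    current = [0.0] * n_post
    for pre in spiking:
        # Each spiking neuron touches one contiguous slice of the synapse arrays.
        for k in range(row_ptr[pre], row_ptr[pre + 1]):
            current[col_idx[k]] += weights[k]
    return current
```

Silent neurons cost nothing at delivery time. This sparsity is what on-chip memory hierarchies and allocation strategies try to preserve.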

Energy Efficiency Benchmarks for Neuromorphic Systems

Neuromorphic computing systems have demonstrated substantial energy efficiency advantages over traditional computing architectures when running Spiking Neural Networks (SNNs). Reported figures for neuromorphic hardware range from roughly 1 to 100 picojoules per synaptic operation. Figures of 10-100 nanojoules per equivalent operation are often quoted for conventional GPU implementations. Such comparisons should be read with care, because they depend heavily on the workload, the level of spiking activity, and how an "equivalent operation" is defined.

The IBM TrueNorth neuromorphic chip is a prominent benchmark, with a reported energy cost of approximately 26 pJ per synaptic event while running large SNN workloads. This efficiency stems from the event-driven nature of SNNs. Computation occurs only when neurons fire, which largely avoids the continuous, clock-driven power draw of conventional processors.
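A per-event figure such as TrueNorth's reported 26 pJ becomes a power estimate once a workload's synaptic event rate is known. The sketch below assumes a hypothetical network of one million neurons, each firing at 10 Hz with a fan-out of 256 synapses. It counts only dynamic synaptic energy and ignores static leakage, routing overhead, and off-chip I/O. The result is therefore a rough estimate of one component of total power, not a chip-level measurement.

```python
def synaptic_power_w(n_neurons, rate_hz, fan_out, energy_per_event_j):
    """Estimate dynamic synaptic power in watts.

    Synaptic events per second = neurons * mean firing rate * fan-out;
    power = events per second * energy per event.
    """
    events_per_s = n_neurons * rate_hz * fan_out
    return events_per_s * energy_per_event_j

# Hypothetical workload; 26 pJ is the per-event figure cited above for TrueNorth.
power = synaptic_power_w(n_neurons=1_000_000, rate_hz=10, fan_out=256,
                         energy_per_event_j=26e-12)
print(f"{power * 1e3:.1f} mW")
```

Under these assumptions, synaptic activity accounts for about 67 mW. The estimate is linear in firing rate, so halving the average activity halves this component. This is the activity-dependent scaling that event-driven designs rely on.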

Intel's Loihi neuromorphic research chip provides another significant benchmark. On certain optimization problems, such as LASSO sparse coding, it has been reported to achieve more than three orders of magnitude (about 1,000x) better energy-delay product than a conventional CPU-based solver. The chip's sparse, activity-dependent processing contributes substantially to these gains, and its power consumption scales with the level of neural activity.

SpiNNaker systems, developed at the University of Manchester, offer a useful point of comparison. Because they run neuron models in software on general-purpose ARM cores, their reported energy per synaptic event is considerably higher than that of dedicated chips such as TrueNorth, typically in the nanojoule rather than the picojoule range. In exchange, they offer flexibility in the neuron and plasticity models they can simulate in real time. This makes them well suited to large-scale brain modeling and to real-time processing of sensory data streams.

Benchmark comparisons across different neuromorphic implementations reveal that analog implementations generally achieve higher energy efficiency (0.1-10 pJ per synaptic operation) compared to digital implementations (10-100 pJ). However, this efficiency advantage comes with trade-offs in precision and reliability that must be considered for specific applications.

Application-specific benchmarks suggest that SNNs on neuromorphic hardware achieve particularly large efficiency gains in pattern recognition tasks. For instance, gesture recognition implementations on neuromorphic hardware have been reported to use 100-1000x less energy than conventional deep learning approaches on standard processors. The size of the gain depends on the baseline hardware and the workload.

The energy efficiency advantages of neuromorphic systems become even more pronounced in edge computing, where power budgets are tight. Benchmarks for always-on sensory processing show that SNN implementations on neuromorphic hardware can run continuously for months on small batteries. Conventional processors running comparable workloads find this difficult to match.
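Battery-life claims follow from simple arithmetic: usable stored energy divided by average power. The sketch below assumes a nominal 225 mAh, 3 V coin cell and two hypothetical average power budgets. It ignores self-discharge, temperature effects, and peak-current limits, all of which shorten real-world lifetimes.

```python
SECONDS_PER_DAY = 86_400

def battery_life_days(capacity_mah, voltage_v, avg_power_w):
    """Ideal battery life: stored energy (J) divided by average power (W), in days."""
    energy_j = capacity_mah / 1000.0 * 3600.0 * voltage_v  # mAh -> Ah -> coulombs -> joules
    return energy_j / avg_power_w / SECONDS_PER_DAY

# Nominal coin cell (assumed 225 mAh at 3 V) under two hypothetical power budgets.
for power_w in (1e-3, 100e-6):
    print(f"{power_w * 1e6:.0f} uW -> {battery_life_days(225, 3.0, power_w):.1f} days")
```

Under these assumptions, a 1 mW average draw lasts about 28 days and a 100 µW draw lasts roughly 281 days. Months of continuous operation on such a cell therefore require an average power well below a milliwatt.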
