Cross-paradigm comparison: Neuromorphic vs. In-Memory Computing.

SEP 8, 2025 · 9 MIN READ

Neuromorphic and In-Memory Computing Evolution and Objectives

The evolution of computing paradigms has witnessed significant transformations over the decades, with traditional von Neumann architectures facing increasing limitations in energy efficiency and performance scaling. Neuromorphic computing and In-Memory Computing (IMC) have emerged as two promising alternative paradigms addressing these challenges, albeit through fundamentally different approaches.

Neuromorphic computing traces its conceptual origins to the 1980s when Carver Mead proposed hardware systems that mimic the structure and function of biological neural networks. The field has evolved from simple analog circuit implementations to sophisticated spike-based processing systems. The primary objective of neuromorphic computing is to replicate the brain's remarkable energy efficiency and parallel processing capabilities, enabling cognitive tasks with significantly reduced power consumption compared to conventional computing systems.

In contrast, In-Memory Computing has developed as a response to the memory wall problem—the growing disparity between processor and memory speeds. By integrating computation directly within memory arrays, IMC aims to eliminate the costly data movement between separate processing and storage units. This paradigm has progressed from early proposals in the 1990s to recent implementations using various memory technologies including SRAM, DRAM, and emerging non-volatile memories.

Both paradigms share the overarching goal of overcoming von Neumann bottlenecks, but their technical objectives diverge in important ways. Neuromorphic systems primarily target cognitive applications requiring adaptive learning and pattern recognition, with an emphasis on brain-inspired information processing. IMC, meanwhile, focuses on accelerating a broader range of computational workloads by minimizing data movement, with particular strength in matrix operations common in modern AI applications.

The technological trajectory indicates convergence in certain application domains, particularly artificial intelligence and edge computing, where both paradigms offer significant advantages over conventional architectures. Recent research suggests that hybrid approaches combining elements of both paradigms may be the best fit for specific workloads.

Looking forward, the evolution of these technologies is expected to continue along several dimensions: device-level innovations in materials and fabrication, architecture-level advancements in scalable designs, and algorithm-level developments that can effectively leverage the unique characteristics of each paradigm. The ultimate objective for both approaches remains achieving orders-of-magnitude improvements in computational efficiency while maintaining or enhancing performance for next-generation computing applications.

Market Demand Analysis for Energy-Efficient Computing Solutions

The global market for energy-efficient computing solutions is experiencing unprecedented growth, driven by the exponential increase in data processing demands and the limitations of traditional computing architectures. Current projections indicate that data center energy consumption alone could reach 8% of global electricity by 2030, creating an urgent need for alternative computing paradigms that can deliver higher performance per watt.

Both neuromorphic and in-memory computing represent revolutionary approaches to address this market demand. The energy efficiency gap between conventional von Neumann architectures and these emerging paradigms is substantial, with potential energy savings of up to 1000x for specific workloads. This efficiency differential is particularly critical as AI and machine learning applications proliferate across industries.

Market research reveals that the combined neuromorphic and in-memory computing market is expected to grow at a CAGR of 25% through 2028, reflecting strong industry recognition of their potential. Edge computing applications represent a particularly promising segment, with forecasts suggesting 75 billion connected IoT devices by 2025, many requiring real-time processing with strict power constraints.

The financial services sector has emerged as an early adopter, leveraging these technologies for high-frequency trading and risk analysis where microsecond advantages translate to significant competitive edges. Healthcare applications follow closely, with neuromorphic systems showing particular promise for processing complex biological signals and medical imaging data while maintaining patient privacy through localized processing.

Enterprise customers increasingly cite energy costs as a primary concern in their computing infrastructure decisions, with 68% of Fortune 500 companies now including energy efficiency metrics in their technology procurement criteria. This shift represents a fundamental change from previous decades when raw performance was the dominant consideration.

The automotive and aerospace industries are driving demand for embedded neuromorphic solutions that can enable advanced sensing and decision-making capabilities within strict power envelopes. These sectors value the fault tolerance and graceful degradation characteristics inherent to neuromorphic designs, which align well with safety-critical applications.

Government and defense sectors are also significant market drivers, with substantial research funding directed toward developing sovereign capabilities in these technologies. This investment reflects recognition of their strategic importance for applications ranging from autonomous systems to signal intelligence.

The market demand analysis clearly indicates that while general-purpose computing will continue to dominate in the near term, specialized neuromorphic and in-memory computing solutions addressing specific high-value applications will establish initial market footholds, gradually expanding as the technologies mature and manufacturing scales.

Current Technological Landscape and Implementation Challenges

The current technological landscape of neuromorphic and in-memory computing reveals two distinct yet complementary paradigms addressing the von Neumann bottleneck. Neuromorphic computing has seen significant advancements with IBM's TrueNorth, Intel's Loihi, and BrainChip's Akida neuromorphic processors demonstrating practical implementations. These systems have achieved remarkable energy efficiency, with power consumption reductions of up to 1000x compared to traditional computing architectures for specific neural network tasks.

In-memory computing has similarly progressed with commercial offerings from companies like Mythic and Syntiant, which have developed analog matrix processors that perform computations directly within memory arrays. Samsung and Micron have also demonstrated MRAM and ReRAM-based in-memory computing solutions that achieve 10-100x improvements in energy efficiency for machine learning inference tasks.

Despite these advances, both paradigms face substantial implementation challenges. For neuromorphic systems, the development of efficient learning algorithms remains problematic. While spiking neural networks (SNNs) offer theoretical advantages, training these networks effectively continues to be more difficult than conventional deep learning approaches. The lack of standardized programming models and development tools further impedes widespread adoption.
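One commonly cited reason for the training difficulty is that a spiking neuron's output is a step function of its membrane potential, so its derivative is zero almost everywhere and ordinary backpropagation receives no signal. The sketch below illustrates this, together with a workaround often called a surrogate gradient. That technique is not described in this article and is shown here only as an assumption about how the problem is typically approached; the function names, the threshold of 1.0, and the slope of 5.0 are all hypothetical.

```python
def spike(v, threshold=1.0):
    """Hard threshold: the neuron emits 1.0 if the potential reaches threshold."""
    return 1.0 if v >= threshold else 0.0


def numerical_grad(f, v, eps=1e-3):
    """Central-difference estimate of df/dv."""
    return (f(v + eps) - f(v - eps)) / (2 * eps)


def surrogate_grad(v, threshold=1.0, slope=5.0):
    """Smooth stand-in for the step's derivative, shaped like the
    derivative of a fast sigmoid: 1 / (1 + slope * |v - threshold|)^2.
    The slope value is an illustrative assumption."""
    return 1.0 / (1.0 + slope * abs(v - threshold)) ** 2


# Away from the threshold the true gradient vanishes ...
print(numerical_grad(spike, 0.5))   # 0.0
# ... while the surrogate still provides a usable learning signal.
print(surrogate_grad(0.5))
print(surrogate_grad(1.0))          # 1.0
```

In this scheme the forward pass still uses the hard threshold, and only the backward pass substitutes the smooth function.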

In-memory computing confronts material science challenges, particularly regarding the reliability and endurance of resistive memory elements. Current ReRAM and PCM technologies typically demonstrate endurance of 10^6 to 10^9 cycles, which falls short of requirements for intensive computing applications. Additionally, analog computing precision is limited by device-to-device variations and temporal drift in resistance values.
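To put the endurance range in perspective, the following back-of-the-envelope sketch computes how long a single cell lasts under a constant write rate. The rate of 1,000 writes per second per cell is an assumption chosen for illustration (for example, a workload that rewrites weights continuously); inference-only workloads that rarely reprogram weights would stress the cells far less.

```python
SECONDS_PER_DAY = 86_400


def lifetime_seconds(endurance_cycles: float, writes_per_second: float) -> float:
    """Time until one cell exhausts its write endurance at a constant write rate."""
    if writes_per_second <= 0:
        raise ValueError("writes_per_second must be positive")
    return endurance_cycles / writes_per_second


# Assumed (hypothetical) update rate: each cell is rewritten 1,000 times per second.
WRITE_RATE_HZ = 1_000.0

for endurance in (1e6, 1e9):  # endurance range quoted in the text
    days = lifetime_seconds(endurance, WRITE_RATE_HZ) / SECONDS_PER_DAY
    print(f"endurance {endurance:.0e} cycles -> {days:.3f} days")
```

Under this assumed rate, a cell at the low end of the range wears out in about 17 minutes and one at the high end in roughly 11.6 days, which is why write-heavy workloads are the problematic case.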

Both paradigms also struggle with integration into existing computing ecosystems. The hardware-software co-design necessary for optimal performance requires significant expertise across multiple domains. This integration challenge is compounded by the lack of standardized benchmarks for fair comparison between these novel architectures and conventional systems.

Scaling presents another critical challenge. While small-scale demonstrations have shown promise, manufacturing large-scale neuromorphic and in-memory computing systems with billions of components while maintaining yield, reliability, and performance consistency remains technically demanding. Current fabrication processes optimized for conventional CMOS technology require adaptation for these novel architectures.

Market adoption faces the "chicken-and-egg" problem: software developers hesitate to target platforms with limited deployment, while hardware manufacturers struggle to justify large investments without established software ecosystems. This creates a significant barrier to commercialization despite the technical merits of both approaches.

Comparative Analysis of Current Implementation Approaches

01 Neuromorphic computing architectures

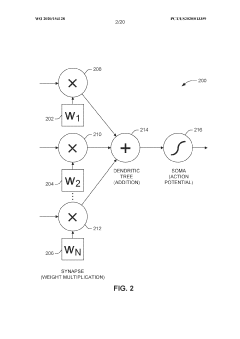

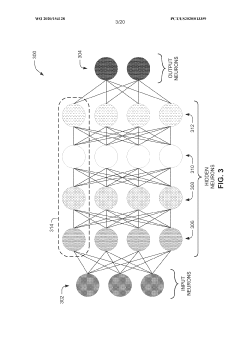

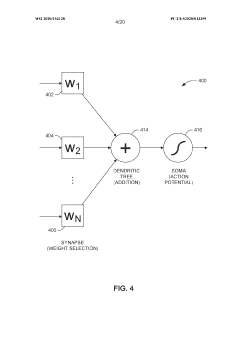

Neuromorphic computing architectures mimic the structure and function of the human brain to achieve higher computational efficiency. These architectures utilize artificial neural networks implemented in hardware, enabling parallel processing and reduced power consumption. By integrating memory and processing units, neuromorphic systems can perform complex cognitive tasks with significantly lower energy requirements compared to traditional computing paradigms.

- Neuromorphic hardware architectures: Neuromorphic computing architectures mimic the structure and function of the human brain to achieve higher computational efficiency. These architectures typically employ specialized hardware designs that integrate processing and memory elements to reduce data movement, which is a major source of energy consumption in conventional computing systems. By implementing brain-inspired circuits and components, these systems can perform parallel processing of information with significantly lower power consumption compared to traditional von Neumann architectures.

- In-memory computing techniques: In-memory computing performs computational operations directly within memory arrays, eliminating the need to transfer data between separate processing and storage units. This approach significantly reduces energy consumption and latency associated with data movement in conventional computing architectures. Various memory technologies, including resistive RAM, phase-change memory, and magnetic RAM, can be utilized to implement in-memory computing, enabling efficient matrix operations and vector processing that are essential for many computational tasks.

- Energy efficiency optimization methods: Various techniques can be employed to optimize the energy efficiency of neuromorphic and in-memory computing systems. These include implementing sparse neural networks, utilizing approximate computing techniques, optimizing memory access patterns, and developing specialized training algorithms that account for hardware constraints. Additionally, dynamic voltage and frequency scaling, power gating, and event-driven processing can further reduce power consumption while maintaining computational performance.

- Novel memory device technologies: Advanced memory technologies are being developed specifically for neuromorphic and in-memory computing applications. These include memristive devices, spintronic elements, phase-change materials, and other emerging non-volatile memory technologies that can efficiently represent synaptic weights and perform computational operations. These devices offer advantages such as non-volatility, high density, analog computation capability, and low power consumption, making them ideal for implementing neural networks in hardware.

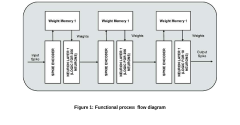

- System-level integration and applications: System-level integration approaches combine neuromorphic and in-memory computing techniques with conventional computing systems to create hybrid architectures that leverage the strengths of each paradigm. These integrated systems can be applied to various domains including image and speech recognition, natural language processing, autonomous vehicles, robotics, and edge computing applications. The integration often involves specialized compilers, programming models, and software frameworks that enable efficient mapping of algorithms to the underlying hardware.

02 In-memory computing techniques

In-memory computing techniques perform computational operations directly within memory units, eliminating the need for data transfer between separate processing and memory components. This approach addresses the von Neumann bottleneck by reducing data movement, which is a major source of energy consumption in conventional computing systems. These techniques enable significant improvements in computational efficiency, especially for data-intensive applications like machine learning and big data analytics.

03 Resistive memory-based computing

Resistive memory technologies, such as Resistive Random Access Memory (ReRAM) and memristors, are utilized to implement efficient neuromorphic and in-memory computing systems. These non-volatile memory elements can store information as resistance states and perform computational operations simultaneously. The analog nature of resistive memory devices allows them to efficiently implement neural network operations like matrix multiplication, significantly improving energy efficiency for AI workloads.

04 Hardware-software co-design for computing efficiency

Hardware-software co-design approaches optimize the entire computing stack for neuromorphic and in-memory computing systems. This involves developing specialized algorithms that leverage the unique characteristics of the hardware while designing hardware architectures that efficiently execute these algorithms. Such holistic optimization enables significant improvements in energy efficiency, processing speed, and overall system performance for AI and machine learning applications.

05 Novel materials and fabrication techniques

Advanced materials and fabrication techniques are being developed to enhance the performance of neuromorphic and in-memory computing devices. These include emerging nanomaterials, 3D integration technologies, and novel semiconductor processes that enable higher density, lower power consumption, and improved reliability. Such innovations address fundamental physical limitations of conventional CMOS technology and pave the way for more efficient computing architectures.

Leading Organizations and Research Institutions in Computing Paradigms

The neuromorphic and in-memory computing landscape is currently in a transitional phase from research to early commercialization, with the market expected to grow significantly as these technologies mature. The competitive field features established tech giants like IBM, Samsung, and Intel developing comprehensive solutions, alongside specialized players such as Syntiant focusing on edge AI applications. Academic institutions, particularly in China (Tsinghua, Peking, Zhejiang Universities) and South Korea (KAIST, KIST), are driving fundamental research advancements. The technology maturity varies, with in-memory computing closer to commercial viability while neuromorphic systems remain more experimental. Cross-sector collaborations between industry leaders and research institutions are accelerating development, with applications emerging in edge computing, data centers, and AI acceleration.

International Business Machines Corp.

Technical Solution: IBM has pioneered significant advancements in both neuromorphic and in-memory computing paradigms. For neuromorphic computing, IBM developed TrueNorth, a neurosynaptic chip with 1 million programmable neurons and 256 million synapses organized into 4,096 neurosynaptic cores[1]. This architecture mimics the brain's structure, enabling efficient pattern recognition and sensory processing while consuming only 70mW of power. More recently, IBM introduced analog AI hardware that performs computation within memory arrays using phase-change memory (PCM) technology[2]. Their in-memory computing approach utilizes non-volatile memory devices arranged in crossbar arrays to perform matrix operations directly within memory, achieving over 100x improvement in energy efficiency compared to conventional von Neumann architectures[3]. IBM's research demonstrates how these technologies can be complementary: neuromorphic systems excel at event-driven, sparse data processing, while in-memory computing provides efficient dense matrix operations for deep learning workloads.
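The TrueNorth figures above can be cross-checked with simple arithmetic. The sketch below assumes the commonly cited per-core organization of 256 neurons fed by a 256 x 256 synaptic crossbar; that per-core breakdown is not stated in this article and is labeled as an assumption in the code. The core count and the 70mW power figure are taken from the text.

```python
CORES = 4_096             # from the text
NEURONS_PER_CORE = 256    # assumption: commonly cited per-core organization
AXONS_PER_CORE = 256      # assumption: inputs feeding each core's crossbar
CHIP_POWER_W = 0.070      # "70mW" from the text

total_neurons = CORES * NEURONS_PER_CORE
total_synapses = CORES * NEURONS_PER_CORE * AXONS_PER_CORE
power_per_neuron_nw = CHIP_POWER_W / total_neurons * 1e9

print(total_neurons)                    # 1048576, i.e. about 1 million
print(total_synapses)                   # 268435456, i.e. 256 x 2^20
print(round(power_per_neuron_nw, 1))    # 66.8 (nanowatts per neuron)
```

Under these assumptions the totals match the rounded "1 million neurons" and "256 million synapses", and the chip-level power budget works out to well under 100 nW per neuron.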

Strengths: IBM possesses extensive intellectual property in both domains with mature hardware implementations and software ecosystems. Their solutions demonstrate exceptional energy efficiency and have been validated in real-world applications. Weaknesses: IBM's neuromorphic systems require specialized programming models that differ significantly from conventional computing paradigms, creating adoption barriers. Their in-memory computing solutions face challenges with device variability and limited precision that can affect computational accuracy.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed comprehensive solutions in both neuromorphic and in-memory computing domains. Their neuromorphic approach focuses on brain-inspired hardware that mimics neural networks using specialized CMOS circuits and emerging memory technologies. Samsung's neuromorphic processors implement spike-based computing with time-domain signal processing, achieving significant power efficiency for pattern recognition tasks[1]. In parallel, Samsung has pioneered Processing-In-Memory (PIM) technology, integrating DRAM with computing elements to overcome the memory wall. Their HBM-PIM (High Bandwidth Memory with Processing-In-Memory) architecture embeds computing capabilities directly into memory banks, enabling data processing where it's stored[2]. This approach reduces data movement between memory and processors, decreasing energy consumption by approximately 70% while improving performance by 2x for AI workloads[3]. Samsung has also developed MRAM-based in-memory computing solutions that leverage the inherent physics of magnetic devices to perform matrix multiplication operations directly within memory arrays, further enhancing computational efficiency for neural network inference.

Strengths: Samsung possesses manufacturing expertise in both memory technologies and semiconductor fabrication, allowing vertical integration of their solutions. Their in-memory computing implementations are compatible with existing memory standards, facilitating adoption. Weaknesses: Samsung's neuromorphic solutions remain primarily research-focused with limited commercial deployment compared to their conventional semiconductor products. Their in-memory computing approaches face challenges with scaling precision for complex AI workloads requiring high numerical accuracy.

Breakthrough Technologies and Fundamental Research Advancements

Neuromorphic computing: brain-inspired hardware for efficient AI processing

Patent (Pending): IN202411005149A

Innovation

- Neuromorphic computing systems mimic the brain's neural networks and synapses to enable parallel and adaptive processing, leveraging advances in neuroscience and hardware to create energy-efficient AI systems that can learn and adapt in real-time.

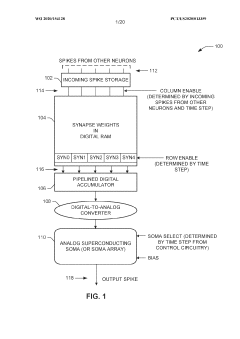

Superconducting neuromorphic core

Patent: WO2020154128A1

Innovation

- A superconducting neuromorphic core is developed, incorporating a digital memory array for synapse weight storage, a digital accumulator, and analog soma circuitry to simulate multiple neurons, enabling efficient and scalable neural network operations with improved biological fidelity.

Hardware-Software Co-Design Considerations

Effective hardware-software co-design represents a critical success factor when implementing either neuromorphic or in-memory computing architectures. These paradigms fundamentally challenge traditional von Neumann computing models, necessitating novel approaches to system integration that optimize the unique capabilities of each technology.

For neuromorphic systems, hardware-software co-design must address the event-driven, asynchronous nature of spiking neural networks. Platforms such as IBM's TrueNorth and Intel's Loihi require specialized programming models that differ significantly from conventional computing paradigms. These systems demand software abstractions capable of mapping biological neural principles to hardware implementations while preserving their energy efficiency advantages.
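The event-driven style of computation can be illustrated with a minimal leaky integrate-and-fire neuron whose state is updated only when an input spike arrives, rather than on every clock tick. This is a simplified sketch, not the programming model of TrueNorth or Loihi; the function name and the weight, leak, and threshold values are hypothetical.

```python
def run_event_driven(event_times, weight=0.6, leak=0.9, threshold=1.0):
    """Leaky integrate-and-fire neuron driven by input spike times.

    State is touched only when an event arrives: the decay accumulated
    since the previous event is applied in one step, then the input is added.
    Returns the times at which the neuron itself fires.
    """
    v = 0.0          # membrane potential
    last_t = 0       # time of the previous input event
    fired = []
    for t in sorted(event_times):
        v *= leak ** (t - last_t)   # catch up on decay since the last event
        v += weight                 # integrate the incoming spike
        last_t = t
        if v >= threshold:
            fired.append(t)
            v = 0.0                 # reset after firing
    return fired


# Two closely spaced inputs push the neuron over threshold; an isolated one does not.
print(run_event_driven([1, 2, 6]))   # [2]
print(run_event_driven([1, 6]))      # []
```

The work done scales with the number of input events rather than with elapsed time, which is the property that lets sparse, spike-based workloads save energy.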

In-memory computing presents different co-design challenges, primarily focused on optimizing data movement between processing and storage elements. Software frameworks must efficiently manage the parallel nature of computational memory arrays while addressing precision limitations inherent in analog computing approaches. Companies like Mythic and Syntiant have developed specialized compiler toolchains that translate traditional neural network models into optimized representations for their in-memory computing hardware.
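A rough idea of what such a mapping involves, and of why analog precision matters, can be sketched as follows. Signed weights are quantized to a small number of conductance levels, stored as differential pairs, and multiplied by input voltages with optional device noise. This is an illustrative sketch only and does not represent the Mythic or Syntiant toolchains; the conductance range, the number of levels, the noise model, and all function names are assumptions.

```python
import random

G_MIN, G_MAX = 1e-6, 1e-4   # assumed conductance range in siemens (hypothetical)


def weights_to_conductances(weights, levels=16):
    """Map signed weights to quantized (G_plus, G_minus) differential pairs."""
    w_max = max(abs(w) for row in weights for w in row) or 1.0
    step = (G_MAX - G_MIN) / (levels - 1)

    def quantize(magnitude):
        k = round(magnitude / w_max * (levels - 1))   # nearest discrete level
        return G_MIN + k * step

    pairs = [[(quantize(max(w, 0.0)), quantize(max(-w, 0.0))) for w in row]
             for row in weights]
    scale = w_max / (G_MAX - G_MIN)   # converts summed current back to weight units
    return pairs, scale


def crossbar_mvm(pairs, scale, voltages, sigma=0.0, rng=None):
    """Compute y_i = scale * sum_j (G+_ij - G-_ij) * V_j.

    sigma is the relative standard deviation of multiplicative conductance
    noise, a simple stand-in for device-to-device variation.
    """
    rng = rng or random.Random(0)
    outputs = []
    for row in pairs:
        current = 0.0
        for (g_plus, g_minus), v in zip(row, voltages):
            g_plus *= 1.0 + rng.gauss(0.0, sigma)
            g_minus *= 1.0 + rng.gauss(0.0, sigma)
            current += (g_plus - g_minus) * v
        outputs.append(current * scale)
    return outputs


weights = [[1.0, -0.5], [0.0, 0.25]]
pairs, scale = weights_to_conductances(weights, levels=5)
print(crossbar_mvm(pairs, scale, [1.0, 2.0]))               # close to [0.0, 0.5]
print(crossbar_mvm(pairs, scale, [1.0, 2.0], sigma=0.05))   # perturbed by noise
```

With five levels these particular weights are represented exactly, so the noiseless result matches the ideal product up to floating-point rounding. Using weights that do not fall on a level, or raising `sigma`, shows how quantization and device variation each degrade the result.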

Both paradigms share common co-design considerations around managing the memory hierarchy. Effective software must intelligently distribute computation across different memory levels, balancing performance against energy consumption. This requires sophisticated scheduling algorithms and memory management techniques that understand the underlying hardware architecture's unique characteristics.

Programming models represent another critical co-design element. Neuromorphic systems typically employ spike-based programming approaches, while in-memory computing may utilize more conventional matrix operation abstractions. In both cases, abstraction layers must shield application developers from hardware complexities while enabling performance optimization.

Simulation environments play a vital role in the co-design process, allowing software development to proceed in parallel with hardware implementation. Tools like NEST for neuromorphic systems and specialized simulators for in-memory architectures enable algorithm development and testing before physical hardware becomes available.

The co-design process must also address system integration challenges, particularly when incorporating these novel computing paradigms into existing technology stacks. Software interfaces that enable seamless integration with conventional computing resources are essential for practical deployment, requiring careful consideration of data formats, communication protocols, and synchronization mechanisms.

For neuromorphic systems, hardware-software co-design must address the event-driven, asynchronous nature of spiking neural networks. Development frameworks like IBM's TrueNorth Neurosynaptic System and Intel's Loihi require specialized programming models that differ significantly from conventional computing paradigms. These systems demand software abstractions capable of mapping biological neural principles to hardware implementations while maintaining energy efficiency advantages.

In-memory computing presents different co-design challenges, primarily focused on optimizing data movement between processing and storage elements. Software frameworks must efficiently manage the parallel nature of computational memory arrays while addressing precision limitations inherent in analog computing approaches. Companies like Mythic and Syntiant have developed specialized compiler toolchains that translate traditional neural network models into optimized representations for their in-memory computing hardware.

Both paradigms share common co-design considerations around managing the memory hierarchy. Effective software must intelligently distribute computation across different memory levels, balancing performance against energy consumption. This requires sophisticated scheduling algorithms and memory management techniques that understand the underlying hardware architecture's unique characteristics.

Programming models represent another critical co-design element. Neuromorphic systems typically employ spike-based programming approaches, while in-memory computing may utilize more conventional matrix operation abstractions. In both cases, abstraction layers must shield application developers from hardware complexities while enabling performance optimization.

Standardization and Benchmarking Frameworks

The standardization and benchmarking landscape for neuromorphic and in-memory computing technologies remains fragmented, which makes cross-paradigm comparisons difficult. No universally accepted framework currently exists for evaluating these distinct computing approaches, so researchers and industry stakeholders lack a common basis for objective performance assessment.

For neuromorphic computing, several benchmarking initiatives have emerged, including the Neuromorphic Intelligence Benchmark (NIB) and SNN-specific performance metrics that evaluate spike timing, energy efficiency, and computational accuracy. These frameworks typically focus on bio-inspired tasks such as pattern recognition, sensory processing, and temporal sequence learning. However, they often fail to provide meaningful comparisons with traditional computing paradigms or in-memory computing approaches.

In-memory computing benchmarks, conversely, tend to emphasize metrics like computational density, memory access latency, and power efficiency. The Processing-in-Memory Benchmark Suite (PIMBS) has gained traction, as has Compute Express Link (CXL), which is an interconnect specification relevant to memory-centric systems rather than a benchmark in its own right. These efforts primarily address conventional computational tasks rather than neuromorphic applications.

The IEEE Neuromorphic Computing Standards Working Group has begun addressing this gap by developing standardized evaluation methodologies that span both paradigms. Their framework proposes common metrics including energy per operation, computational density, learning capability, and fault tolerance. Similarly, the In-Memory Computing Benchmarking Coordination (IMCBC) consortium is working to establish cross-platform evaluation criteria.

Recent collaborative efforts between academic institutions and industry leaders have produced promising hybrid benchmarking approaches. The Heterogeneous Computing Performance Index (HCPI) attempts to normalize performance across neuromorphic and in-memory architectures using workload-specific efficiency metrics rather than raw computational power. This enables more meaningful comparisons between fundamentally different architectural approaches.
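The text does not give a formula for such an index, so the following is only a sketch of one common way to normalize efficiency across dissimilar platforms: compute energy per operation for each workload, take the ratio against a baseline, and aggregate with a geometric mean. The function names and the choice of geometric mean are assumptions for illustration and should not be read as the definition of HCPI or of any published metric.

```python
import math


def energy_per_op(energy_joules, operations):
    """Energy per operation in joules; both inputs must be positive."""
    if energy_joules <= 0 or operations <= 0:
        raise ValueError("energy and operation count must be positive")
    return energy_joules / operations


def normalized_score(platform, baseline):
    """Geometric mean of per-workload efficiency ratios (baseline / platform).

    Both arguments map a workload name to energy per operation in joules.
    A score above 1.0 means the platform spends less energy per operation
    than the baseline, averaged over the workloads both have results for.
    """
    shared = sorted(set(platform) & set(baseline))
    if not shared:
        raise ValueError("no shared workloads to compare")
    log_sum = sum(math.log(baseline[w] / platform[w]) for w in shared)
    return math.exp(log_sum / len(shared))


if __name__ == "__main__":
    # Made-up measurements, for illustration only.
    baseline = {"keyword_spotting": energy_per_op(4e-6, 1e6),
                "matrix_multiply": energy_per_op(4e-6, 1e6)}
    candidate = {"keyword_spotting": energy_per_op(1e-6, 1e6),
                 "matrix_multiply": energy_per_op(4e-6, 1e6)}
    print(normalized_score(candidate, baseline))
```

A geometric mean is used here because it treats a 2x gain on one workload and a 2x loss on another as cancelling out, which keeps a single favorable workload from dominating the aggregate. What counts as one "operation" (a synaptic event, a multiply-accumulate) still has to be agreed per workload before the ratios are meaningful.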

For effective standardization to advance, several critical challenges must be addressed. First, the development of representative workloads that can meaningfully exercise both computing paradigms remains essential. Second, metrics must evolve beyond traditional performance indicators to capture the unique advantages of each approach. Finally, hardware-software co-design considerations must be incorporated into benchmarking methodologies, as both neuromorphic and in-memory computing performance is heavily influenced by algorithm implementation and optimization.

The establishment of comprehensive standardization and benchmarking frameworks represents a crucial step toward enabling fair comparisons between these emerging computing paradigms, ultimately accelerating their adoption in commercial applications and guiding future research directions.
