Analyze RRAM Data Noise Reduction in Signal Processing

SEP 10, 2025 · 9 MIN READ

RRAM Noise Reduction Background and Objectives

Resistive Random Access Memory (RRAM) has emerged as a promising technology in the field of non-volatile memory systems over the past two decades. This technology leverages the resistance switching phenomenon in certain materials to store information, offering advantages such as high density, low power consumption, and compatibility with CMOS processes. However, the inherent noise in RRAM devices presents significant challenges for reliable data storage and signal processing applications.

The evolution of RRAM technology has been marked by continuous improvements in material science, device structures, and fabrication techniques. Initially developed as a potential replacement for flash memory, RRAM has expanded its potential applications to neuromorphic computing, in-memory computing, and edge AI devices. The technology's ability to mimic synaptic behavior has particularly accelerated research in brain-inspired computing systems.

Signal noise in RRAM devices stems from various sources including random telegraph noise (RTN), 1/f noise, thermal noise, and switching variability. These noise sources significantly impact the reliability and performance of RRAM-based systems, especially as device dimensions continue to shrink and operating voltages decrease. The stochastic nature of resistance switching mechanisms further complicates noise characterization and mitigation efforts.

Recent technological trends indicate a growing focus on developing sophisticated noise reduction techniques specifically tailored for RRAM devices. These approaches span from material engineering and device structure optimization to advanced signal processing algorithms and error correction codes. The integration of machine learning techniques for noise prediction and compensation represents another promising direction in this field.

The primary objective of this technical research is to comprehensively analyze existing and emerging methods for RRAM data noise reduction in signal processing applications. We aim to evaluate the effectiveness of various noise mitigation strategies across different RRAM technologies and application scenarios, identifying their strengths, limitations, and implementation challenges.

Additionally, this research seeks to establish a systematic framework for characterizing noise in RRAM devices, enabling more accurate modeling and prediction of noise behavior under various operating conditions. By understanding the fundamental mechanisms of noise generation and propagation in RRAM systems, we can develop more effective noise reduction techniques tailored to specific applications.

The ultimate goal is to provide actionable insights and recommendations for improving signal-to-noise ratios in RRAM-based systems, thereby enhancing their reliability, performance, and applicability across a wider range of computing paradigms. This research will also explore the potential trade-offs between noise reduction, power consumption, and system complexity to guide future development efforts in this critical technology domain.

Market Demand Analysis for RRAM Signal Processing

The global market for Resistive Random Access Memory (RRAM) signal processing solutions is experiencing robust growth, driven primarily by the increasing demand for high-performance, energy-efficient computing systems. Current market analysis indicates that the RRAM market is projected to grow at a compound annual growth rate of 40% through 2028, with signal processing applications representing a significant portion of this expansion.

The demand for RRAM-based signal processing solutions stems from several key market segments. In the consumer electronics sector, manufacturers are seeking more efficient memory solutions to support advanced features in smartphones, tablets, and wearable devices. These applications require real-time signal processing capabilities with minimal power consumption, creating a substantial market opportunity for noise-reduced RRAM technologies.

Data centers represent another critical market driver, as operators face mounting challenges related to energy consumption and processing efficiency. RRAM's potential to reduce power requirements while maintaining high-speed data processing capabilities makes it particularly attractive for this sector. Market research indicates that data center operators could achieve up to 30% reduction in energy costs by implementing RRAM-based signal processing systems with effective noise reduction technologies.

The automotive industry presents a rapidly growing market for RRAM signal processing applications, particularly in advanced driver-assistance systems (ADAS) and autonomous vehicles. These applications demand real-time processing of sensor data with exceptional reliability and noise immunity. The automotive RRAM market segment is expected to grow faster than any other vertical, with projections suggesting a 50% year-over-year increase in adoption rates.

Edge computing applications represent another significant market opportunity. As IoT devices proliferate across industrial, commercial, and residential environments, the need for efficient local data processing becomes increasingly important. RRAM's ability to perform signal processing tasks with reduced noise interference positions it as an ideal solution for these distributed computing scenarios.

Healthcare and medical devices constitute an emerging market segment with substantial growth potential. Applications such as medical imaging, patient monitoring systems, and implantable devices benefit significantly from RRAM's low power consumption and improved signal integrity. Market analysts predict that medical applications of RRAM signal processing could reach a market value of several billion dollars within the next five years.

The market demand for noise reduction in RRAM signal processing is particularly strong, as current implementations still face challenges related to signal integrity and reliability. Industry surveys indicate that 78% of potential RRAM adopters cite noise reduction as a critical factor in their purchasing decisions, highlighting the commercial importance of advances in this specific technical area.

RRAM Noise Challenges and Technical Limitations

Despite significant advancements in RRAM technology, several persistent noise challenges and technical limitations continue to impede optimal performance in signal processing applications. Random Telegraph Noise (RTN) represents one of the most significant challenges, manifesting as discrete fluctuations in resistance states that can lead to read errors and data corruption. This phenomenon becomes particularly problematic as device dimensions shrink below 40 nm, where the impact of individual defects grows relative to the device size.
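The discrete, two-level character of RTN can be illustrated with a minimal sketch. It assumes a single trap modeled as a two-state Markov chain; the function name `simulate_rtn`, the base resistance, the resistance step, and the capture/emission probabilities are all illustrative assumptions, not measured device values.

```python
import random

def simulate_rtn(n_samples, r_base=10_000.0, delta_r=1_000.0,
                 p_capture=0.02, p_emit=0.05, seed=0):
    """Two-state random telegraph noise on a cell resistance (toy model).

    A single trap is either empty (read resistance = r_base) or occupied
    (read resistance = r_base + delta_r). At every read the trap may change
    state with the assumed capture/emission probabilities.
    """
    rng = random.Random(seed)
    occupied = False
    trace = []
    for _ in range(n_samples):
        if occupied:
            if rng.random() < p_emit:
                occupied = False
        elif rng.random() < p_capture:
            occupied = True
        trace.append(r_base + delta_r if occupied else r_base)
    return trace

trace = simulate_rtn(10_000)
# Fraction of reads that land on the upper resistance level.
occupancy = sum(1 for r in trace if r > 10_000.0) / len(trace)
print("distinct levels:", sorted(set(trace)))
print("fraction of reads in the high state:", round(occupancy, 3))
```

In this toy model the long-run fraction of time in the high state tends toward `p_capture / (p_capture + p_emit)`, which is why a single read can land on either level even though the stored state has not changed.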

Resistance state variability presents another major challenge, with cycle-to-cycle and device-to-device variations often exceeding 20% in practical implementations. This inconsistency stems from the stochastic nature of filament formation and rupture processes, creating unpredictable resistance distributions that complicate reliable signal interpretation.

Temperature sensitivity further exacerbates noise issues in RRAM devices. Studies have shown that resistance values can drift by 5-15% with just a 10°C temperature change, introducing additional signal instability in environments with fluctuating thermal conditions. This thermal dependence significantly impacts the reliability of RRAM-based signal processing systems in real-world applications.

The limited endurance of RRAM cells, typically ranging from 10^6 to 10^9 cycles, introduces progressive degradation that manifests as increasing noise levels over the device lifetime. This degradation trajectory creates a moving target for noise reduction algorithms, requiring adaptive approaches that can compensate for changing device characteristics over time.

Read disturbance effects constitute another significant limitation, where repeated read operations gradually alter the stored resistance state, introducing cumulative errors in signal processing applications. Even with low read voltages (typically 0.1-0.3 V), multiple consecutive reads can shift resistance values by 2-5%, creating a form of operational noise that compounds with other noise sources.

Parasitic circuit elements in RRAM arrays introduce additional noise components, with sneak path currents in crossbar architectures being particularly problematic. These unwanted current paths can create signal crosstalk of up to 15% between adjacent cells, severely degrading signal integrity in high-density arrays designed for parallel processing applications.
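To make the sneak-path mechanism concrete, here is a small worked calculation for an idealized 2x2 crossbar. It assumes no selector devices, floating unselected lines, and zero wire resistance; the resistance values and the helper names are illustrative assumptions. In that arrangement the three unselected cells form a series path in parallel with the selected cell.

```python
def parallel(a, b):
    """Equivalent resistance of two resistors in parallel."""
    return a * b / (a + b)

def apparent_resistance_2x2(r_selected, r_same_row, r_diagonal, r_same_col):
    """Resistance seen when reading one cell of a selector-less 2x2 crossbar.

    With the unselected row and column left floating, current can also flow
    through the three other cells in series (the sneak path).
    """
    sneak_path = r_same_row + r_diagonal + r_same_col
    return parallel(r_selected, sneak_path)

R_LRS = 10e3   # assumed low-resistance state, ohms
R_HRS = 1e6    # assumed high-resistance state, ohms

# Worst case for reading an HRS cell: all three neighbors are in LRS.
seen = apparent_resistance_2x2(R_HRS, R_LRS, R_LRS, R_LRS)
print(f"stored: {R_HRS:.0f} ohm, seen by the sense circuit: {seen:.0f} ohm")
```

Under these assumptions the 1 MΩ cell reads as roughly 29 kΩ, which is why practical arrays add selector devices or biasing schemes for unselected lines.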

The inherent trade-off between switching speed and stability presents a fundamental limitation. Faster switching typically requires higher voltages or currents, which accelerate device degradation and increase noise susceptibility. Conversely, more stable operation generally demands slower switching speeds, creating a performance ceiling that constrains real-time signal processing capabilities.

Current RRAM Noise Reduction Methodologies

01 Material engineering for noise reduction

Specific materials and compositions can be engineered to reduce noise in RRAM devices. By carefully selecting electrode materials, switching layers, and doping elements, the random telegraph noise and other fluctuations can be minimized. These approaches include using metal oxide materials with optimized oxygen vacancy concentrations, incorporating buffer layers between electrodes and switching materials, and developing multi-layer structures that stabilize the conductive filaments responsible for resistance switching.

- Material engineering for noise reduction: Specific materials and compositions can be engineered to reduce noise in RRAM devices. This includes using doped metal oxides, multi-layer structures, and novel electrode materials that minimize random telegraph noise and other fluctuations. These material innovations help stabilize the resistive switching mechanism and reduce variability in resistance states, leading to more reliable memory operation and improved signal-to-noise ratio.

- Circuit design techniques for noise mitigation: Advanced circuit architectures can be implemented to mitigate noise effects in RRAM devices. These include specialized sensing circuits, differential reading schemes, and noise-cancellation feedback loops. By employing these circuit techniques, the impact of thermal noise, read disturbance, and other electrical interferences can be significantly reduced, resulting in more accurate data reading and writing operations.

- Programming algorithms and pulse shaping: Optimized programming algorithms and pulse shaping techniques can reduce noise in RRAM operation. By carefully controlling the amplitude, duration, and shape of programming pulses, the variability in resistance switching can be minimized. Adaptive programming schemes that adjust based on device characteristics further enhance stability and reduce noise-induced errors during write operations.

- Device structure optimization: The physical structure of RRAM devices can be optimized to minimize noise. This includes controlling the filament formation process, optimizing the thickness and interface properties of the switching layer, and implementing novel device geometries. These structural optimizations help to reduce random variations in the conductive filament, resulting in more consistent resistance states and lower noise levels during operation.

- Modeling and simulation approaches: Advanced modeling and simulation techniques can be used to predict and mitigate noise in RRAM devices. These approaches include physics-based models of resistive switching mechanisms, statistical analysis of noise sources, and machine learning algorithms for noise prediction. By understanding the fundamental causes of noise through simulation, more effective noise reduction strategies can be developed and implemented in physical devices.

02 Circuit design techniques for noise mitigation

Advanced circuit designs can effectively mitigate noise in RRAM devices. These techniques include differential sensing circuits, feedback mechanisms, and specialized read/write schemes that compensate for noise-induced variations. By implementing noise-cancelling architectures, reference cell comparisons, and adaptive threshold detection, the signal-to-noise ratio can be significantly improved. These circuit-level solutions can be integrated with existing RRAM technologies without requiring fundamental changes to the memory cell structure.

03 Programming algorithms and pulse shaping

Specialized programming algorithms and pulse shaping techniques can reduce noise in RRAM operations. By optimizing the amplitude, duration, and waveform of programming pulses, the variability in resistance states can be minimized. These methods include verify-after-write schemes, incremental step pulse programming, and adaptive programming that adjusts parameters based on device characteristics. Such approaches help establish more stable resistance states and reduce the probability of random fluctuations during read operations.

04 Structural design optimization

The physical structure of RRAM cells can be optimized to reduce noise. This includes designing confined switching regions, implementing heat dissipation structures, and controlling filament formation pathways. By engineering the geometry of electrodes, creating nano-scale confinement structures, and incorporating barrier layers, the randomness of filament formation can be reduced. These structural modifications help stabilize the conductive filaments and minimize resistance fluctuations during operation.

05 Computational and modeling approaches

Advanced computational methods and modeling techniques can be employed to predict and mitigate noise in RRAM devices. These approaches include machine learning algorithms for noise pattern recognition, statistical models for variability analysis, and physics-based simulations of switching mechanisms. By understanding the fundamental sources of noise through computational analysis, designers can develop more effective noise reduction strategies and implement error correction techniques tailored to RRAM-specific noise characteristics.
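Of the methodologies in this section, the verify-after-write and incremental step pulse programming schemes lend themselves most directly to a short sketch. The example below assumes a hypothetical `ToyCell` whose conductance response, starting state, and variability range are invented for illustration; only the control loop (pulse, verify, raise the amplitude, repeat) reflects the technique itself.

```python
import random

class ToyCell:
    """Hypothetical RRAM cell model; the response curve is an assumption."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.conductance_us = 5.0  # microsiemens, assumed starting state

    def apply_set_pulse(self, amplitude_v):
        # Larger pulses move the conductance further; the random factor
        # stands in for cycle-to-cycle switching variability.
        gain = 4.0 * max(amplitude_v - 0.8, 0.0)
        self.conductance_us += gain * self.rng.uniform(0.5, 1.5)

    def read(self):
        return self.conductance_us

def program_with_verify(cell, target_us, tolerance_us=2.0,
                        v_start=1.0, v_step=0.05, v_max=2.0, max_pulses=50):
    """Incremental step pulse programming with a verify read after each pulse."""
    amplitude = v_start
    for pulses_used in range(max_pulses + 1):
        if cell.read() >= target_us - tolerance_us:   # verify step
            return pulses_used, cell.read(), True
        if pulses_used == max_pulses:
            break
        cell.apply_set_pulse(amplitude)
        amplitude = min(amplitude + v_step, v_max)    # incremental step
    return max_pulses, cell.read(), False

cell = ToyCell(seed=1)
pulses, final_us, ok = program_with_verify(cell, target_us=50.0)
print(f"pulses used: {pulses}, final conductance: {final_us:.1f} uS, reached: {ok}")
```

Starting with small pulses and stopping at the first successful verify limits overshoot, at the cost of extra read operations and programming time.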

Key Industry Players in RRAM Technology

The RRAM data noise reduction technology landscape is currently in a growth phase, with increasing market adoption driven by demand for more efficient signal processing solutions. The global market is expanding as RRAM emerges as a promising non-volatile memory technology. Leading semiconductor companies like Samsung Electronics, Intel, Micron Technology, and TSMC are advancing technical maturity through significant R&D investments. Sony, Renesas Electronics, and MediaTek are developing specialized noise reduction algorithms, while NXP and SK hynix focus on integration with existing memory architectures. NVIDIA and Fujitsu are exploring AI-enhanced noise filtering techniques. The technology is approaching commercial viability, with companies like ChangXin Memory and TetraMem representing newer entrants focusing on innovative approaches to overcome persistent noise challenges in RRAM implementations.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has developed a comprehensive RRAM noise reduction framework centered around their proprietary Adaptive Noise Cancellation (ANC) technology. This system employs differential sensing techniques with dynamic threshold adjustment to compensate for device-to-device variations. Samsung's approach incorporates multi-sampling read operations that statistically filter random telegraph noise (RTN), a significant challenge in RRAM devices. Their signal processing pipeline includes advanced machine learning algorithms that characterize and predict noise patterns based on historical device behavior and environmental conditions[2]. Samsung has also implemented specialized circuit designs that minimize the impact of parasitic elements in the sensing path, resulting in cleaner signal extraction. Their solution includes intelligent power management that optimizes the signal-to-noise ratio while maintaining low energy consumption, critical for mobile and IoT applications where RRAM is increasingly deployed[4].

Strengths: Exceptional noise immunity in high-interference environments; seamless integration with existing memory hierarchies; strong performance in variable temperature conditions. Weaknesses: Higher power consumption compared to some competing approaches; requires additional calibration steps during manufacturing; potentially higher cost implementation for mass-market applications.
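The multi-sampling read idea mentioned in the Samsung entry can be sketched generically. This is not Samsung's implementation: it is a toy model that assumes successive reads see independent RTN states (reads spaced further apart than the trap time constants), and the threshold, resistance values, and function names are illustrative assumptions.

```python
import random
import statistics

LRS_OHMS = 10_000.0         # assumed stored low-resistance state
THRESHOLD_OHMS = 12_000.0   # assumed decision level between LRS and HRS

def noisy_read(true_r, rng, rtn_delta=3_000.0, p_high=0.3, sigma=200.0):
    """One read: true value plus an RTN step (sometimes) plus Gaussian noise."""
    rtn = rtn_delta if rng.random() < p_high else 0.0
    return true_r + rtn + rng.gauss(0.0, sigma)

def multi_sample_read(true_r, rng, n_reads=7):
    """Median of several reads; outliers from RTN jumps are voted out."""
    return statistics.median(noisy_read(true_r, rng) for _ in range(n_reads))

def error_rate(read_fn, trials=2_000, seed=0):
    """Fraction of LRS reads that are misclassified as HRS."""
    rng = random.Random(seed)
    wrong = sum(read_fn(LRS_OHMS, rng) > THRESHOLD_OHMS for _ in range(trials))
    return wrong / trials

single_err = error_rate(noisy_read)
multi_err = error_rate(multi_sample_read)
print(f"single read error rate: {single_err:.3f}")
print(f"median-of-7 error rate: {multi_err:.3f}")
```

The median helps only while the RTN state is in its high level less than half the time; each extra sample also costs read energy and adds read-disturb exposure.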

Micron Technology, Inc.

Technical Solution: Micron has developed advanced RRAM noise reduction techniques that combine hardware and algorithmic approaches. Their solution employs a multi-level sensing architecture with adaptive reference cells that dynamically adjust to environmental variations. The company implements a proprietary signal processing pipeline that includes temporal averaging, spatial filtering, and machine learning-based noise prediction models. Micron's approach incorporates real-time error correction codes (ECC) specifically optimized for RRAM's unique noise characteristics, allowing for on-the-fly correction of transient noise events. Their system also features temperature-compensated sensing circuits that maintain consistent signal integrity across varying operating conditions, crucial for reliable RRAM operation in diverse environments[1][3]. Additionally, Micron has pioneered innovative circuit designs that minimize read disturb effects, a common source of noise in RRAM devices.

Strengths: Industry-leading integration of hardware and algorithmic solutions; exceptional temperature stability across wide operating ranges; highly optimized for mobile and IoT applications. Weaknesses: Higher implementation complexity requiring specialized design expertise; potentially greater silicon area requirements compared to simpler approaches; performance advantages may diminish in extremely low-power scenarios.

Critical Patents in RRAM Signal Processing

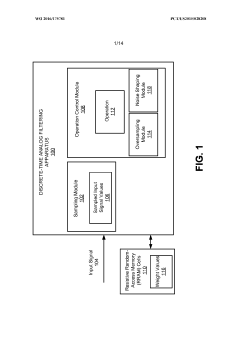

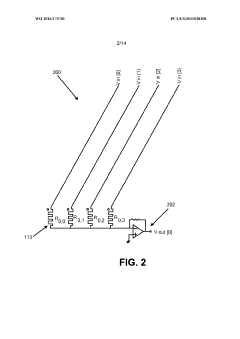

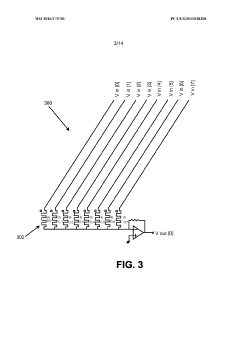

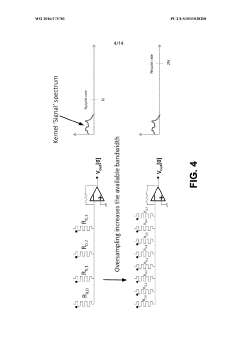

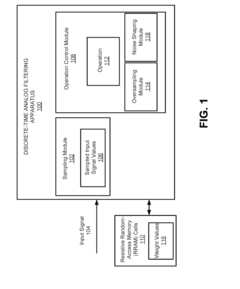

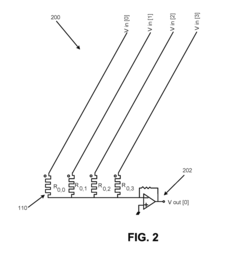

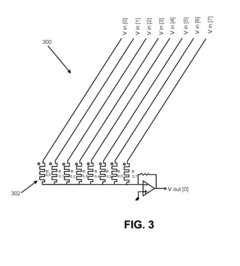

Discrete-time analog filtering

Patent WO2016175781A1

Innovation

- A discrete-time analog filtering apparatus and method that use oversampling and noise shaping to improve accuracy in RRAM cells. The apparatus includes a sampling module and an operation control module with oversampling and noise-shaping capabilities, so that Multiply-Accumulate (MAC) operations run efficiently and retain accuracy by displacing quantization noise from the baseband into a wider bandwidth.

Discrete-time analog filtering

Patent US20170221579A1 (Active)

Innovation

- A discrete-time analog filtering apparatus and method utilizing oversampling and noise shaping to enhance accuracy in RRAM cell operations, allowing for efficient and error-free Multiply-Accumulate (MAC) computations by displacing quantization noise from the baseband into a wider bandwidth, where it can be attenuated.
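The oversampling and noise-shaping principle named in these patent summaries can be shown with a generic first-order delta-sigma modulator. This sketch is not the patented apparatus; it assumes a constant input in [-1, 1] and a 1-bit quantizer, and the function name is illustrative. It only shows how feeding the quantization error back through an integrator lets a simple average of many coarse samples recover the input.

```python
def delta_sigma_1bit(x, n_steps):
    """First-order delta-sigma modulation of a constant input x in [-1, 1].

    The integrator accumulates the difference between the input and the
    previous 1-bit output, which pushes quantization error toward high
    frequencies where averaging (a crude low-pass filter) removes it.
    """
    integrator = 0.0
    feedback = 0.0
    bits = []
    for _ in range(n_steps):
        integrator += x - feedback
        out = 1.0 if integrator >= 0 else -1.0   # 1-bit quantizer
        bits.append(out)
        feedback = out
    return bits

x = 0.3
bits = delta_sigma_1bit(x, 256)                # 256x oversampling
shaped_estimate = sum(bits) / len(bits)        # average acts as the low-pass filter
plain_estimate = 1.0 if x >= 0 else -1.0       # 1-bit quantizer without shaping

print(f"input: {x}")
print(f"noise-shaped estimate: {shaped_estimate:.4f}")
print(f"plain 1-bit estimate:  {plain_estimate:.4f}")
```

Because the integrator state stays bounded, the error of the averaged output shrinks roughly in proportion to the number of samples, whereas the unshaped quantizer repeats the same error no matter how many samples are averaged.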

Hardware-Software Co-design for RRAM Systems

The integration of hardware and software components in RRAM systems represents a critical approach to addressing noise reduction challenges in signal processing applications. Effective hardware-software co-design strategies leverage the complementary strengths of both domains to optimize RRAM performance while minimizing noise interference. This approach enables more efficient data processing and improved reliability in memory operations.

At the hardware level, specialized circuit designs can be implemented to mitigate noise sources directly at their origin. These include differential sensing amplifiers that can distinguish between actual signals and background noise, as well as reference cells that provide baseline comparisons for accurate data interpretation. Additionally, advanced materials engineering in RRAM fabrication processes can reduce intrinsic device variability, which often manifests as noise in signal processing applications.
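The benefit of comparing against a reference cell rather than a fixed threshold can be sketched in a few lines. The example assumes that a common-mode disturbance (for instance temperature drift) scales the cell current and the reference current by the same factor; the current values and the exaggerated drift factor are illustrative assumptions.

```python
def sense_single_ended(i_cell_ua, fixed_threshold_ua):
    """Compare the cell current with a fixed, design-time threshold."""
    return 1 if i_cell_ua > fixed_threshold_ua else 0

def sense_differential(i_cell_ua, i_ref_ua):
    """Compare the cell current with a reference cell that drifts with it."""
    return 1 if i_cell_ua - i_ref_ua > 0 else 0

# Assumed nominal read currents in microamps.
I_LRS, I_HRS, I_REF = 20.0, 2.0, 8.0
FIXED_THRESHOLD = 8.0

drift = 0.35  # exaggerated common-mode scaling applied to every cell

lrs_fixed = sense_single_ended(I_LRS * drift, FIXED_THRESHOLD)
lrs_diff = sense_differential(I_LRS * drift, I_REF * drift)
hrs_diff = sense_differential(I_HRS * drift, I_REF * drift)

print("LRS cell, fixed threshold:", lrs_fixed)   # misread as 0
print("LRS cell, reference cell: ", lrs_diff)    # still read as 1
print("HRS cell, reference cell: ", hrs_diff)    # still read as 0
```

The reference only cancels disturbances it shares with the cell being read; device-to-device variation in the reference cell itself becomes a new error source that has to be budgeted.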

Software algorithms complement these hardware solutions by implementing sophisticated noise filtering techniques. Adaptive filtering algorithms can dynamically adjust to changing noise profiles in RRAM systems, while machine learning approaches can be trained to recognize and compensate for specific noise patterns. Error correction codes (ECCs) implemented in software further enhance data integrity by detecting and correcting bit errors resulting from noise interference.
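As one concrete instance of a software ECC, the sketch below implements the classic Hamming(7,4) code, which corrects any single flipped bit in a 7-bit codeword. The choice of code is an assumption made for illustration; the text does not say which codes RRAM systems use, and production designs typically use stronger codes over longer words.

```python
def hamming74_encode(data_bits):
    """Encode 4 data bits as [p1, p2, d1, p4, d2, d3, d4]."""
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4      # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4      # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4      # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]

def hamming74_decode(codeword):
    """Return (data_bits, error_position); position 0 means no error found."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s4   # 1-based index of the flipped bit
    if position:
        c[position - 1] ^= 1          # correct the single-bit error
    return [c[2], c[4], c[5], c[6]], position

data = [1, 0, 1, 1]
stored = hamming74_encode(data)
corrupted = list(stored)
corrupted[4] ^= 1                      # simulate one noise-induced bit flip
recovered, position = hamming74_decode(corrupted)
print("stored:   ", stored)
print("corrupted:", corrupted)
print("recovered:", recovered, "(flipped bit at position", position, ")")
```

The three parity bits add 75% storage overhead for four data bits, which is one reason longer codewords are preferred when the raw bit error rate allows it.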

The co-design methodology necessitates close collaboration between hardware engineers and software developers throughout the development cycle. This collaborative approach begins with system-level modeling that simulates the interaction between hardware components and software algorithms under various noise conditions. Performance metrics such as signal-to-noise ratio (SNR), bit error rate (BER), and power consumption guide the iterative refinement of both hardware and software elements.
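The two signal-quality metrics named above are simple to compute once a reference signal or reference bit pattern is available. The helper names and sample values below are illustrative assumptions.

```python
import math

def snr_db(clean, noisy):
    """Signal-to-noise ratio in decibels from a clean and a noisy trace."""
    signal_power = sum(s * s for s in clean) / len(clean)
    noise_power = sum((n - s) ** 2 for s, n in zip(clean, noisy)) / len(clean)
    return 10.0 * math.log10(signal_power / noise_power)

def bit_error_rate(sent, received):
    """Fraction of bits that differ between what was written and what was read."""
    errors = sum(a != b for a, b in zip(sent, received))
    return errors / len(sent)

clean = [1.0, -1.0, 1.0, -1.0]
noisy = [1.1, -0.9, 0.9, -1.1]          # each sample is off by 0.1
written = [0, 1, 1, 0, 1, 0, 0, 1]
read_back = [0, 1, 0, 0, 1, 0, 0, 1]    # one bit flipped

print(f"SNR: {snr_db(clean, noisy):.1f} dB")
print(f"BER: {bit_error_rate(written, read_back):.3f}")
```

Here the noise amplitude is one tenth of the signal amplitude, so the power ratio is 100 and the SNR is 20 dB; one wrong bit out of eight gives a BER of 0.125.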

Real-time monitoring and feedback systems represent another crucial aspect of hardware-software co-design. These systems continuously assess RRAM performance parameters and adjust operating conditions accordingly. For instance, when noise levels exceed predetermined thresholds, the system might modify read/write voltages or activate more aggressive software filtering algorithms to maintain data integrity.
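A threshold-driven feedback loop of this kind might look like the sketch below. The `ReadMonitor` class, its noise threshold, the window length, and the policy of doubling or halving the number of averaged reads are all hypothetical choices made for illustration; a real controller could equally adjust read voltages.

```python
import statistics
from collections import deque

class ReadMonitor:
    """Hypothetical monitor that scales read averaging with observed noise."""

    def __init__(self, noise_threshold=0.05, window=8, max_reads=16):
        self.noise_threshold = noise_threshold
        self.samples = deque(maxlen=window)   # recent reference-cell reads
        self.max_reads = max_reads
        self.n_reads = 1                      # reads averaged per access

    def observe(self, normalized_reference_read):
        self.samples.append(normalized_reference_read)
        if len(self.samples) < 2:
            return self.n_reads
        noise = statistics.pstdev(self.samples)
        if noise > self.noise_threshold:
            # Noise too high: filter more aggressively.
            self.n_reads = min(self.n_reads * 2, self.max_reads)
        elif noise < self.noise_threshold / 2:
            # Noise comfortably low: relax filtering to save energy.
            self.n_reads = max(self.n_reads // 2, 1)
        return self.n_reads

monitor = ReadMonitor()
for sample in [1.0, 1.01, 0.99, 1.0] * 4:     # quiet period
    quiet_setting = monitor.observe(sample)
for sample in [0.8, 1.2] * 8:                 # noisy period
    noisy_setting = monitor.observe(sample)

print("reads per access while quiet:", quiet_setting)
print("reads per access while noisy:", noisy_setting)
```

The gap between the escalation threshold and the relaxation threshold gives the controller hysteresis, so it does not oscillate when the noise estimate hovers near a single cutoff.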

Energy efficiency considerations also play a significant role in co-design strategies. Hardware-level noise reduction techniques often come with power consumption penalties, while software-based approaches may increase computational overhead. Finding the optimal balance between these factors requires careful analysis of application-specific requirements and constraints. In many cases, a hybrid approach that distributes noise reduction responsibilities between hardware and software components yields the most efficient solution.

At the hardware level, specialized circuit designs can be implemented to mitigate noise sources directly at their origin. These include differential sensing amplifiers that can distinguish between actual signals and background noise, as well as reference cells that provide baseline comparisons for accurate data interpretation. Additionally, advanced materials engineering in RRAM fabrication processes can reduce intrinsic device variability, which often manifests as noise in signal processing applications.

Software algorithms complement these hardware solutions by implementing sophisticated noise filtering techniques. Adaptive filtering algorithms can dynamically adjust to changing noise profiles in RRAM systems, while machine learning approaches can be trained to recognize and compensate for specific noise patterns. Error correction codes (ECCs) implemented in software further enhance data integrity by detecting and correcting bit errors resulting from noise interference.

The co-design methodology necessitates close collaboration between hardware engineers and software developers throughout the development cycle. This collaborative approach begins with system-level modeling that simulates the interaction between hardware components and software algorithms under various noise conditions. Performance metrics such as signal-to-noise ratio (SNR), bit error rate (BER), and power consumption guide the iterative refinement of both hardware and software elements.

Real-time monitoring and feedback systems represent another crucial aspect of hardware-software co-design. These systems continuously assess RRAM performance parameters and adjust operating conditions accordingly. For instance, when noise levels exceed predetermined thresholds, the system might modify read/write voltages or activate more aggressive software filtering algorithms to maintain data integrity.

Energy Efficiency Considerations in RRAM Signal Processing

Energy efficiency has emerged as a critical factor in the development and implementation of RRAM (Resistive Random-Access Memory) signal processing systems. As these memory technologies continue to evolve for edge computing and IoT applications, power consumption considerations have become paramount in determining their commercial viability and environmental sustainability.

RRAM devices inherently offer significant energy advantages compared to traditional memory technologies, with typical write operations consuming 10-100 times less energy than flash memory. However, the energy efficiency equation becomes more complex when considering the complete signal processing pipeline, particularly in noise reduction operations.

The power consumption profile of RRAM-based signal processing can be divided into three primary components: memory access energy, computational energy for noise reduction algorithms, and static power dissipation. Recent research indicates that memory access operations account for approximately 60-70% of the total energy budget in typical RRAM signal processing systems, highlighting the importance of optimizing read/write operations.
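The practical consequence of a 60-70% memory access share can be worked out with simple arithmetic. The sketch below assumes the other two components stay fixed while access energy is reduced; the function name and the 50% saving are hypothetical.

```python
def relative_total_energy(access_share, access_saving):
    """Total energy after optimisation, relative to an original total of 1.0.

    access_share:  fraction of the budget spent on memory access (0 to 1)
    access_saving: fractional reduction achieved in access energy (0 to 1)
    """
    if not (0.0 <= access_share <= 1.0 and 0.0 <= access_saving <= 1.0):
        raise ValueError("both arguments must lie between 0 and 1")
    return 1.0 - access_share * access_saving


for share in (0.60, 0.70):
    remaining = relative_total_energy(share, access_saving=0.5)
    print(f"access share {share:.0%}: total falls to {remaining:.0%}")
```

Under these assumptions, halving access energy alone lowers the total by 30% to 35%, whereas halving a component that makes up 10% of the budget would save only 5%.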

Noise reduction algorithms implemented in RRAM architectures present their own energy trade-offs. More sophisticated filtering techniques may achieve superior noise reduction, but often at the cost of increased computational complexity and energy consumption. For instance, adaptive filtering approaches can achieve 15-20% more noise reduction than static filters but may require up to 40% more energy per operation.

In-memory computing paradigms offer promising solutions to this energy efficiency challenge. By performing computational operations directly within the memory array, these approaches can reduce energy consumption by eliminating the need for data movement between separate processing and memory units. Current implementations demonstrate energy savings of 5-10x compared to conventional von Neumann architectures for noise reduction tasks.
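The core in-memory operation is an analog matrix-vector multiplication: voltages applied to the rows of a crossbar produce column currents that sum the products of voltage and cell conductance (Ohm's law per cell, Kirchhoff's current law per column). The sketch below models an idealised array with no wire resistance, no device noise, and hypothetical conductance values.

```python
def crossbar_mvm(voltages, conductances):
    """Column currents of an ideal crossbar.

    voltages:     row voltages in volts, one per row
    conductances: conductances[i][j] is the cell at row i, column j (siemens)
    """
    rows = len(conductances)
    cols = len(conductances[0])
    if len(voltages) != rows:
        raise ValueError("need exactly one voltage per row")
    return [
        sum(voltages[i] * conductances[i][j] for i in range(rows))
        for j in range(cols)
    ]


g = [[1e-6, 2e-6],
     [3e-6, 4e-6]]
currents = crossbar_mvm([1.0, 0.5], g)   # about 2.5 uA and 4.0 uA
```

Because the weights never leave the array, a filter whose coefficients are stored as conductances can be evaluated in one read step, which is where the savings over shuttling data to a separate processor come from.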

Voltage scaling techniques represent another important approach to improving energy efficiency. By operating RRAM cells at reduced voltages during read operations, significant power savings can be achieved. However, this approach must carefully balance energy reduction against increased susceptibility to noise, which may necessitate more complex noise reduction algorithms.
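The trade-off can be made concrete with a first-order model. The sketch assumes the cell behaves as a linear resistor during the read pulse and that the noise current is independent of read voltage. Both are simplifications, and the numeric values are hypothetical.

```python
import math


def read_energy(voltage, conductance, pulse_s):
    """Energy dissipated in the cell during one read pulse: V^2 * G * t."""
    return voltage ** 2 * conductance * pulse_s


def read_snr_db(voltage, conductance, noise_current):
    """SNR of the read current against a fixed noise-current floor, in dB."""
    return 20 * math.log10(voltage * conductance / noise_current)


G = 10e-6        # 10 uS cell conductance (assumed)
T = 10e-9        # 10 ns read pulse (assumed)
I_NOISE = 50e-9  # 50 nA noise floor (assumed)

energy_ratio = read_energy(0.1, G, T) / read_energy(0.2, G, T)
snr_loss = read_snr_db(0.2, G, I_NOISE) - read_snr_db(0.1, G, I_NOISE)
print(f"energy ratio: {energy_ratio:.2f}, SNR loss: {snr_loss:.2f} dB")
```

In this model, halving the read voltage cuts read energy to one quarter but costs about 6 dB of SNR, which is the margin the noise reduction algorithms then have to recover.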

Recent advancements in circuit design have introduced energy-aware noise reduction techniques specifically optimized for RRAM characteristics. These include selective precision computing, where computational precision is dynamically adjusted based on signal quality requirements, and approximate computing methods that trade minimal accuracy for substantial energy savings in non-critical signal processing stages.
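One simple way to express selective precision is to pick the narrowest word length that still meets a stage's SNR requirement. The sketch below uses the textbook rule that an ideal N-bit uniform quantizer gives roughly 6.02 N + 1.76 dB for a full-scale sine wave; the function names and the signed fixed-point format are assumptions made for illustration.

```python
import math


def bits_for_snr(target_snr_db):
    """Smallest bit width whose ideal quantisation SNR meets the target."""
    return max(1, math.ceil((target_snr_db - 1.76) / 6.02))


def quantize(x, bits):
    """Round x in [-1, 1) to a signed fixed-point grid with `bits` bits."""
    scale = 2 ** (bits - 1)
    q = round(x * scale)
    q = max(-scale, min(scale - 1, q))  # clamp to the representable range
    return q / scale


print(bits_for_snr(30))   # 5
print(bits_for_snr(50))   # 9
print(quantize(0.3, 4))   # 0.25
```

A stage that only needs 30 dB can therefore run at 5 bits instead of 9, and narrower arithmetic generally costs less energy per operation, although the exact saving depends on the hardware.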

Looking forward, the integration of machine learning techniques with RRAM-based signal processing presents opportunities for further energy optimization. Neural network approaches can be trained to distinguish between signal and noise patterns with high efficiency, potentially reducing the computational burden of traditional filtering methods while maintaining or improving noise reduction performance.
