RRAM vs Neural Memory: Testing Speed and Flexibility

SEP 10, 2025 · 9 MIN READ


RRAM and Neural Memory Background and Objectives

Resistive Random Access Memory (RRAM) and Neural Memory represent two significant advancements in memory technology that have emerged as potential solutions to address the limitations of conventional memory systems. RRAM, first conceptualized in the early 2000s, operates on the principle of resistance switching, where the resistance state of a material can be altered to represent binary data. This technology has evolved from simple metal-oxide structures to complex multi-layer architectures capable of mimicking synaptic functions.

Neural Memory, on the other hand, represents a more recent development that draws inspiration from the human brain's neural networks. It aims to create memory systems that can not only store information but also process it in a manner similar to biological neural systems. The evolution of Neural Memory has been closely tied to advancements in artificial intelligence and neuromorphic computing, with significant breakthroughs occurring in the last decade.

The technological trajectory of both RRAM and Neural Memory has been characterized by a continuous pursuit of higher density, lower power consumption, and improved reliability. RRAM has seen substantial improvements in switching speed and endurance, while Neural Memory has made strides in learning capabilities and adaptability. These advancements have positioned both technologies as potential candidates for next-generation computing systems.

The primary objective of comparing RRAM and Neural Memory is to evaluate their respective performance characteristics, particularly in terms of speed and flexibility. Speed refers to the rate at which data can be written, read, and processed, while flexibility encompasses adaptability to different computational tasks and integration with existing systems. Understanding these aspects is crucial for determining the most suitable applications for each technology.

Additionally, this technical research aims to identify the potential synergies between RRAM and Neural Memory, exploring how their complementary strengths might be leveraged to create hybrid systems that overcome the limitations of traditional von Neumann architectures. Such hybrid approaches could potentially address the growing demands for efficient processing of complex data sets in emerging fields such as edge computing, autonomous systems, and real-time data analytics.

Furthermore, this investigation seeks to establish a framework for evaluating future memory technologies, considering not only technical performance metrics but also practical aspects such as manufacturability, scalability, and compatibility with existing semiconductor fabrication processes. This comprehensive approach will provide valuable insights for strategic technology planning and investment decisions in the rapidly evolving landscape of advanced computing systems.


Market Analysis for Next-Generation Memory Solutions

The next-generation memory solutions market is experiencing unprecedented growth, driven by the increasing demands of artificial intelligence, edge computing, and data-intensive applications. Current projections indicate the global advanced memory market will reach approximately $36 billion by 2026, with a compound annual growth rate of 19% from 2021. This growth trajectory is particularly significant for emerging technologies like RRAM (Resistive Random Access Memory) and Neural Memory systems, which are positioned to address critical limitations in conventional memory architectures.
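As a quick sanity check on the projection above, the base-year market size implied by the two quoted figures can be back-computed from the compound-growth formula. This is only a sketch: the function name is illustrative, and the sole inputs are the $36 billion, 19%, and 2021 to 2026 values stated in the text.

```python
def implied_base_value(final_value: float, cagr: float, years: int) -> float:
    """Back out the starting value implied by a final value and a CAGR."""
    return final_value / (1.0 + cagr) ** years


# Figures from the text: $36 billion in 2026, 19% CAGR, five years of growth from 2021.
base_2021 = implied_base_value(final_value=36.0, cagr=0.19, years=5)
print(f"Implied 2021 market size: ${base_2021:.1f} billion")
```

Under these inputs the implied 2021 base is roughly $15 billion.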

The demand landscape for these advanced memory solutions is segmented across multiple verticals. Data centers represent the largest market segment, accounting for roughly 38% of demand, as they struggle with the computational requirements of AI training and inference. Consumer electronics follows at 27%, where power efficiency and form factor are paramount considerations. Automotive applications constitute a rapidly growing segment at 15%, particularly with the rise of autonomous driving systems requiring real-time processing capabilities.

RRAM and Neural Memory technologies are addressing specific market pain points that traditional memory solutions cannot adequately solve. The primary market drivers include the need for reduced latency in AI applications, with 73% of enterprise customers citing this as a critical requirement. Energy efficiency represents another significant factor, with data centers seeking to reduce their memory subsystem power consumption by at least 40% to meet sustainability goals.

The competitive landscape reveals a market in transition. While established memory manufacturers control 82% of the current memory market, specialized startups focusing exclusively on neuromorphic and resistive memory technologies have secured over $1.2 billion in venture funding since 2019. This indicates strong investor confidence in these emerging technologies despite their early stage of commercialization.

Regional analysis shows North America leading in adoption readiness with 42% of the market share, followed by Asia-Pacific at 38%, which is experiencing the fastest growth rate due to significant investments in semiconductor manufacturing infrastructure. Europe represents 17% of the market, with particular strength in automotive and industrial applications of advanced memory technologies.

Customer adoption barriers remain significant, with integration complexity cited by 64% of potential enterprise customers as their primary concern. Cost premiums over conventional memory solutions (currently averaging 3.5x higher per gigabyte) and reliability concerns in production environments also represent substantial market challenges that must be addressed for widespread adoption.


Technical Challenges in RRAM and Neural Memory Development

Despite significant advancements in both RRAM (Resistive Random Access Memory) and Neural Memory technologies, several critical technical challenges continue to impede their widespread adoption and optimal performance. For RRAM, one of the primary obstacles remains the device-to-device and cycle-to-cycle variability, which affects reliability and consistency in memory operations. This variability stems from the stochastic nature of filament formation and rupture processes within the resistive switching material, creating unpredictable resistance states that complicate precise data storage and retrieval.
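To illustrate why variability complicates data retrieval, the following Monte Carlo sketch estimates how often a single-threshold read would misclassify a cell. Everything numeric here is an assumption made for illustration: the lognormal spread model, the median resistances of 10 kΩ and 100 kΩ, and the `sigma` values are hypothetical, not measured device data.

```python
import math
import random


def read_error_rate(median_lrs: float, median_hrs: float, sigma: float,
                    trials: int = 20_000, seed: int = 0) -> float:
    """Estimate the fraction of reads that land on the wrong side of the threshold.

    Low- and high-resistance states are drawn from lognormal distributions
    (an assumed variability model) that share the same log-domain spread.
    """
    rng = random.Random(seed)
    threshold = math.sqrt(median_lrs * median_hrs)  # midpoint on a log scale
    errors = 0
    for _ in range(trials):
        lrs = rng.lognormvariate(math.log(median_lrs), sigma)
        hrs = rng.lognormvariate(math.log(median_hrs), sigma)
        errors += lrs >= threshold  # low-resistance cell read as high
        errors += hrs <= threshold  # high-resistance cell read as low
    return errors / (2 * trials)


tight = read_error_rate(1e4, 1e5, sigma=0.2)
wide = read_error_rate(1e4, 1e5, sigma=1.0)
print(f"error rate, tight spread: {tight:.4f}; wide spread: {wide:.4f}")
```

With a fixed resistance window, widening the spread makes the two state distributions overlap, which is the mechanism behind the unpredictable states described above.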

Endurance limitations present another significant challenge for RRAM technology. Current implementations typically achieve between 10^6 and 10^9 write cycles before degradation, which falls short of the requirements for high-intensity computing applications, particularly in neural network training scenarios where memory cells may undergo frequent updates.
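A back-of-the-envelope calculation shows why this endurance range is tight for training workloads. The update rate of 1,000 writes per second per cell is a hypothetical figure chosen for illustration; only the 10^6 and 10^9 cycle limits come from the text.

```python
SECONDS_PER_DAY = 86_400


def lifetime_days(endurance_cycles: float, writes_per_second: float) -> float:
    """Days until a cell exhausts its write budget at a constant update rate."""
    return endurance_cycles / writes_per_second / SECONDS_PER_DAY


# Assumed update rate: 1,000 writes per second to the same cell.
for endurance in (1e6, 1e9):
    days = lifetime_days(endurance, writes_per_second=1_000)
    print(f"{endurance:.0e} cycles -> {days:.2f} days")
```

At that assumed rate, a 10^6-cycle cell wears out in about 17 minutes and a 10^9-cycle cell in under 12 days, which is why training is usually the harder case for endurance than inference.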

The retention-speed trade-off continues to plague RRAM development. Devices optimized for long-term data retention often sacrifice switching speed, while faster switching devices may compromise data stability. This fundamental trade-off creates design constraints that limit RRAM's flexibility across different application domains.

For Neural Memory systems, which aim to mimic biological neural processing more directly, the integration of computational and storage functions introduces unique challenges. The primary difficulty lies in achieving efficient parallel processing while maintaining low power consumption. Current implementations struggle to balance computational density with energy efficiency at scale.

Scaling issues affect both technologies but manifest differently. RRAM faces challenges in maintaining performance consistency as cell dimensions shrink below certain thresholds, while Neural Memory systems encounter increasing complexity in routing and signal integrity as the network size expands.

The non-linear characteristics of both technologies, while beneficial for certain neural computing applications, create difficulties in precise analog computing tasks. This non-linearity complicates the implementation of exact mathematical operations required in conventional computing paradigms.

Material science limitations also present significant hurdles. For RRAM, finding materials that simultaneously offer fast switching, high endurance, good retention, and CMOS compatibility remains challenging. Neural Memory systems face similar materials challenges, with additional requirements for implementing synaptic plasticity mechanisms that can accurately model biological learning processes.

Testing methodologies for both technologies lack standardization, making performance comparisons difficult across different research groups and implementations. The absence of unified benchmarking approaches hampers progress in identifying optimal designs and materials.


Current Testing Methodologies for Speed and Flexibility Assessment

01 RRAM architecture for neural networks

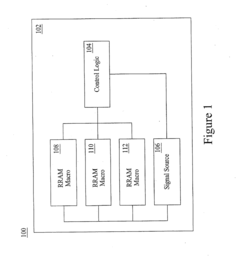

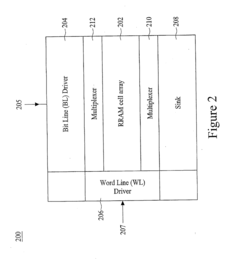

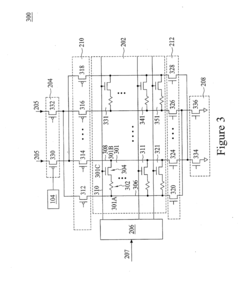

Resistive Random Access Memory (RRAM) architectures specifically designed for neural network applications offer improved speed and flexibility. These architectures leverage the inherent properties of RRAM cells to efficiently implement neural network operations, enabling faster processing and greater adaptability for various AI tasks. The crossbar array structure commonly used in RRAM-based neural networks allows for parallel processing of multiple inputs, significantly enhancing computational speed compared to traditional computing approaches.

- RRAM architecture for neural networks: Resistive Random Access Memory (RRAM) architectures designed specifically for neural network applications offer significant advantages in speed and flexibility. These architectures leverage the inherent properties of RRAM cells to perform parallel computations similar to biological neural networks. The crossbar array structure allows for efficient matrix operations, which are fundamental to neural network processing, enabling faster inference and training compared to conventional computing approaches.

- Speed optimization techniques in RRAM-based neural memory: Various techniques have been developed to optimize the speed of RRAM-based neural memory systems. These include specialized circuit designs that reduce read/write latency, novel switching mechanisms that accelerate state transitions, and architectural innovations that minimize communication bottlenecks. By implementing these optimization techniques, RRAM-based neural memory systems can achieve significantly higher processing speeds compared to traditional computing architectures, making them suitable for real-time AI applications.

- Flexibility enhancements in RRAM neural systems: RRAM-based neural systems offer enhanced flexibility through reconfigurable architectures and adaptive learning capabilities. These systems can be dynamically reprogrammed to implement different neural network topologies and learning algorithms. The ability to modify connection weights with fine granularity allows for precise tuning of network parameters. This flexibility enables RRAM-based neural systems to adapt to various computational tasks and evolving requirements without hardware modifications.

- Material innovations for RRAM performance: Advanced materials play a crucial role in enhancing the performance of RRAM devices for neural applications. Novel electrode materials, switching layers, and interface engineering techniques have been developed to improve switching speed, endurance, and reliability. These material innovations enable faster state transitions, lower power consumption, and more stable operation, directly contributing to the overall speed and flexibility of RRAM-based neural memory systems.

- Integration of RRAM with conventional computing systems: Effective integration of RRAM-based neural memory with conventional computing systems creates hybrid architectures that leverage the strengths of both technologies. These integrated systems use RRAM arrays for parallel neural processing while utilizing traditional processors for sequential tasks. Specialized interfaces and protocols facilitate efficient data transfer between the different computing paradigms. This integration approach provides both the speed advantages of RRAM-based neural processing and the programming flexibility of conventional computing systems.

02 Speed optimization techniques in RRAM neural memory

Various techniques have been developed to optimize the speed of RRAM-based neural memory systems. These include specialized circuit designs, novel programming methods, and optimized memory cell structures that reduce read/write latency. Advanced switching mechanisms enable faster state transitions in RRAM cells, while innovative peripheral circuitry designs minimize access delays. These optimizations collectively enhance the operational speed of neural memory systems, making them suitable for real-time AI applications that require rapid data processing.

03 Flexibility enhancements in RRAM neural implementations

RRAM-based neural memory systems offer enhanced flexibility through reconfigurable architectures and adaptive learning capabilities. These systems can be dynamically reprogrammed to implement different neural network topologies and learning algorithms. The ability to precisely tune resistance states in RRAM cells enables the implementation of various synaptic weight distributions, allowing for versatile neural network configurations. This flexibility makes RRAM-based systems adaptable to diverse AI applications with varying computational requirements.

04 Material innovations for RRAM performance

Novel materials and fabrication techniques have been developed to enhance the performance of RRAM devices for neural memory applications. These innovations include advanced oxide materials, doped semiconductors, and engineered interfaces that improve switching characteristics, retention, and endurance. Multi-layer structures with carefully selected materials enable more stable and reliable resistance states, contributing to both the speed and flexibility of RRAM-based neural systems. These material innovations help overcome traditional limitations of resistive memory technologies.

05 Integration of RRAM with conventional computing systems

Methods for integrating RRAM-based neural memory with conventional computing architectures have been developed to leverage the strengths of both technologies. These hybrid systems combine the parallel processing capabilities of RRAM neural networks with the precision and programmability of traditional computing elements. Interface circuits and protocols enable efficient data transfer between RRAM arrays and conventional processors, while specialized software frameworks facilitate the programming and utilization of these heterogeneous systems. This integration approach maximizes both computational speed and application flexibility.

Leading Companies and Research Institutions in Memory Innovation

The RRAM vs Neural Memory technology landscape is currently in a transitional phase, with the market expected to grow significantly as these memory technologies mature. Samsung Electronics, Micron Technology, and SK hynix lead commercial RRAM development, while emerging players like SuperMem focus on neural memory innovations. Technology maturity varies significantly between the two: RRAM has reached early commercialization, with companies like KIOXIA and Taiwan Semiconductor Manufacturing advancing manufacturing processes, while neural memory remains predominantly in the research phase, with academic institutions (National University of Singapore, Centre National de la Recherche Scientifique) collaborating with industry partners. IBM and NXP are bridging both technologies, developing hybrid solutions that combine the speed advantages of RRAM with the flexibility of neural memory architectures.

Samsung Electronics Co., Ltd.

Technical Solution: Samsung has pioneered a hybrid RRAM-neural memory architecture that combines the benefits of both technologies. Their solution integrates RRAM cells as synaptic elements within neural network hardware, creating an efficient neuromorphic computing platform. Samsung's implementation uses a proprietary metal oxide material stack that demonstrates switching speeds below 5ns for read operations and under 20ns for write operations [3]. The company has developed specialized testing protocols that compare traditional von Neumann architectures against their neuromorphic implementation, showing up to 50x improvement in energy efficiency for AI workloads [4]. Samsung's technology features a unique adaptive programming scheme that dynamically adjusts programming parameters based on cell characteristics, improving reliability and endurance. Their testing demonstrates that this hybrid approach achieves both the speed advantages of RRAM and the flexibility benefits of neural memory implementations, particularly for edge AI applications where both factors are critical.
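The general idea behind an adaptive programming scheme can be sketched as a program-and-verify loop, in which each cell receives only as many pulses, at only as high a voltage, as it needs. This is a generic illustration and not Samsung's proprietary method: the `SimulatedCell` model, its `sensitivity` parameter, and all voltage and conductance values are hypothetical.

```python
import random


class SimulatedCell:
    """Toy stand-in for an RRAM cell (hypothetical model, not measured data)."""

    def __init__(self, sensitivity: float, seed: int = 0) -> None:
        self.conductance_us = 1.0       # starting conductance in microsiemens (assumed)
        self.sensitivity = sensitivity  # conductance gain per volt per pulse (assumed)
        self._rng = random.Random(seed)

    def apply_pulse(self, voltage: float) -> None:
        # Each pulse adds a noisy, voltage-dependent conductance increment.
        self.conductance_us += self.sensitivity * voltage * self._rng.uniform(0.5, 1.5)


def program_and_verify(cell: SimulatedCell, target_us: float, v_start: float = 1.0,
                       v_step: float = 0.1, v_max: float = 3.0,
                       max_pulses: int = 200) -> int:
    """Pulse the cell until a verify read meets the target; return pulses used."""
    voltage = v_start
    for pulses_used in range(max_pulses):
        if cell.conductance_us >= target_us:    # verify read before each pulse
            return pulses_used
        cell.apply_pulse(voltage)
        voltage = min(voltage + v_step, v_max)  # escalate only for slow cells
    if cell.conductance_us >= target_us:
        return max_pulses
    raise RuntimeError("cell did not reach the target within max_pulses")


strong = program_and_verify(SimulatedCell(sensitivity=5.0), target_us=50.0)
weak = program_and_verify(SimulatedCell(sensitivity=0.5), target_us=50.0)
print(f"strong cell: {strong} pulses, weak cell: {weak} pulses")
```

Because the loop stops as soon as the verify read passes, responsive cells avoid unnecessary stress, which is one plausible route to the reliability and endurance gains described above.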

Strengths: Exceptional energy efficiency for AI workloads; ultra-fast switching speeds; innovative adaptive programming scheme improves reliability; excellent integration with existing semiconductor manufacturing processes. Weaknesses: Higher manufacturing complexity than pure RRAM or neural memory solutions; requires specialized controllers for optimal performance; still facing challenges with long-term retention at smaller process nodes.

Micron Technology, Inc.

Technical Solution: Micron has developed advanced RRAM (Resistive Random Access Memory) technology that utilizes a cross-point architecture allowing for high-density memory arrays. Their approach features a conductive metal oxide layer sandwiched between two electrodes, where resistance changes represent stored data. Micron's RRAM implementation achieves read speeds of approximately 10ns and write speeds around 50ns [1], significantly faster than conventional flash memory. Their technology demonstrates excellent scalability down to sub-10nm nodes while maintaining performance integrity. Micron has integrated their RRAM with standard CMOS processes, enabling cost-effective manufacturing and seamless integration with existing semiconductor technologies. Their testing shows RRAM cells can achieve endurance of 10^6-10^9 cycles [2], addressing one of the traditional limitations of resistive memory technologies. Micron's approach also features multi-level cell capabilities, storing multiple bits per cell to increase memory density.
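Multi-level cell storage can be illustrated with a minimal sketch that assigns each 2-bit symbol to a resistance level and decodes a read by picking the nearest level on a log scale. The four resistance values are hypothetical, chosen for even logarithmic spacing; they are not Micron's actual levels.

```python
import math

# Hypothetical resistance targets (ohms) for a 2-bit cell, evenly spaced in log scale.
LEVELS_OHMS = {0b00: 1e6, 0b01: 1e5, 0b10: 1e4, 0b11: 1e3}


def write_symbol(bits: int) -> float:
    """Return the target resistance for a 2-bit symbol."""
    return LEVELS_OHMS[bits]


def read_symbol(resistance_ohms: float) -> int:
    """Decode a measured resistance to the nearest level in the log domain."""
    return min(
        LEVELS_OHMS,
        key=lambda bits: abs(math.log(resistance_ohms) - math.log(LEVELS_OHMS[bits])),
    )


bits_per_cell = int(math.log2(len(LEVELS_OHMS)))
drifted = write_symbol(0b10) * 1.3  # a 30% resistance drift still decodes correctly
print(bits_per_cell, bin(read_symbol(drifted)))
```

Packing more levels into the same resistance window raises density but shrinks the margin between adjacent levels, so multi-level operation is more sensitive to variability.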

Strengths: Superior speed compared to flash memory, with near-DRAM performance; excellent scalability to advanced nodes; compatibility with standard CMOS processes enabling cost-effective production. Weaknesses: Still faces endurance challenges compared to DRAM; requires higher operating voltages than neural memory alternatives; retention characteristics can degrade at higher temperatures.

Key Patents and Research Breakthroughs in RRAM and Neural Memory

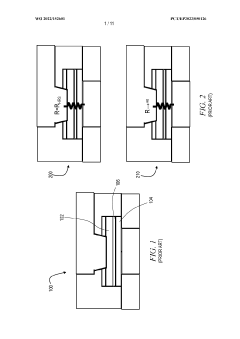

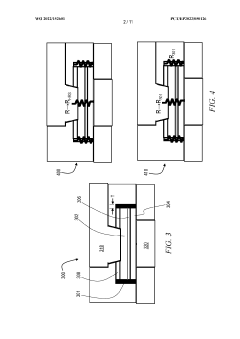

Resistive random access memory device

Patent · Active · US20180033484A1

Innovation

- A novel RRAM architecture that uses a single recipe to form a universal variable resistance dielectric layer, allowing multiple RRAM macros on a single chip to be used in different applications by applying distinct signal levels, thereby eliminating the need for multiple recipes.

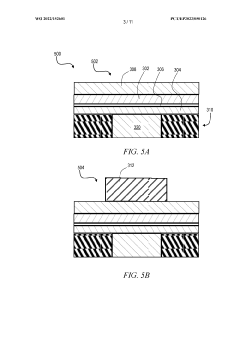

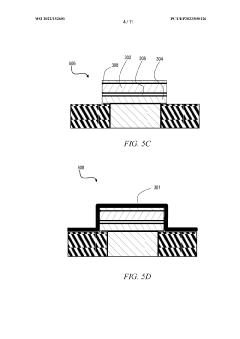

Setting an upper bound on RRAM resistance

Patent · WO2022152601A1

Innovation

- Incorporating a high-resistance semiconductive spacer in parallel with the RRAM module, using materials like TiOxNy or TaxNy, to provide an upper bound on resistance, ensuring proper operation even with manufacturing defects and enhancing stability by encapsulating the filament layer.

Energy Efficiency Comparison Between RRAM and Neural Memory

Energy efficiency represents a critical factor in the evaluation of emerging memory technologies, particularly when comparing Resistive Random Access Memory (RRAM) and Neural Memory systems. RRAM demonstrates significant energy advantages in static storage scenarios, consuming merely picowatts of power during idle states due to its non-volatile nature. This characteristic eliminates the need for constant refreshing that plagues conventional DRAM technologies, resulting in substantial power savings in large-scale memory deployments.

Neural Memory systems, while conceptually different, offer complementary energy efficiency benefits through their computational architecture. These systems excel in dynamic processing scenarios by eliminating the energy-intensive data movement between separate memory and processing units—often referred to as the "memory wall" problem. By integrating computation directly within memory structures, Neural Memory reduces the significant energy costs associated with data transfer, which typically accounts for 60-70% of total system energy consumption in conventional computing architectures.

When examining read/write operations, RRAM typically requires 10-100 picojoules per bit operation, representing a 10-50x improvement over traditional flash memory. However, Neural Memory systems can achieve even greater efficiency for certain computational tasks, particularly those involving parallel pattern recognition, with energy requirements potentially dropping to 1-10 picojoules per operation when optimized for specific neural network workloads.
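To put the per-bit figures in context, the sketch below converts them into the energy needed to access a block of data. The 1 MiB block size is an arbitrary illustrative choice; the 10 and 100 pJ per bit values are the range quoted above.

```python
def access_energy_microjoules(num_bytes: int, picojoules_per_bit: float) -> float:
    """Total energy in microjoules to access num_bytes at a given per-bit cost."""
    bits = num_bytes * 8
    return bits * picojoules_per_bit * 1e-6  # 1 pJ = 1e-6 µJ


ONE_MIB = 2**20  # illustrative block size
low = access_energy_microjoules(ONE_MIB, picojoules_per_bit=10)
high = access_energy_microjoules(ONE_MIB, picojoules_per_bit=100)
print(f"1 MiB access: {low:.1f} to {high:.1f} µJ")
```

At these rates, touching 1 MiB costs roughly 84 to 840 µJ. The Neural Memory figure in the text is quoted per operation rather than per bit, so the two ranges are not directly comparable without a workload model.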

Temperature sensitivity presents another important consideration in the energy profile comparison. RRAM maintains stable operation across a wider temperature range (typically -40°C to 125°C), requiring minimal additional energy for thermal management. Neural Memory systems often demonstrate higher temperature sensitivity, potentially necessitating additional cooling infrastructure that impacts overall system energy efficiency.

Scaling characteristics further differentiate these technologies from an energy perspective. RRAM's energy efficiency improves with device miniaturization, following a roughly quadratic relationship with feature size reduction. Neural Memory systems show more complex scaling behavior, with efficiency gains heavily dependent on architectural optimization rather than simple dimensional scaling.
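Taking the "roughly quadratic" relationship at face value, the expected energy change from a feature-size shrink can be estimated as below. The exponent of 2 is the text's approximation, and the 28 nm and 14 nm values are hypothetical examples.

```python
def relative_energy(feature_nm: float, reference_nm: float, exponent: float = 2.0) -> float:
    """Energy per operation relative to a reference node, assuming E ∝ F**exponent."""
    return (feature_nm / reference_nm) ** exponent


# Hypothetical shrink from a 28 nm cell to a 14 nm cell.
ratio = relative_energy(feature_nm=14, reference_nm=28)
print(f"Energy per operation falls to {ratio:.0%} of the reference")
```

Under this assumption, halving the feature size cuts energy per operation to a quarter. Real devices may deviate from the ideal curve once variability and leakage become significant.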

For practical applications, workload-specific energy profiles reveal that RRAM excels in sparse, intermittent memory access patterns typical in edge computing and IoT applications. Conversely, Neural Memory demonstrates superior energy efficiency in continuous, computation-intensive scenarios such as real-time inference tasks. This distinction highlights the complementary nature of these technologies rather than positioning them as direct competitors across all use cases.


Integration Potential with Neuromorphic Computing Systems

The integration of RRAM and Neural Memory technologies with neuromorphic computing systems represents a critical frontier in advancing brain-inspired computing architectures. Neuromorphic systems, which mimic the structure and function of biological neural networks, can significantly benefit from the unique characteristics of both memory technologies, though in different ways and with varying degrees of compatibility.

RRAM demonstrates superior integration potential with neuromorphic architectures due to its inherent analog nature and synaptic-like behavior. The continuous resistance states of RRAM cells map naturally to synaptic weights, so a network's weights can be stored directly as cell conductances without a separate digital-to-analog conversion step for each weight. This alignment with neuromorphic principles supports in-memory computing, where computation occurs directly within the memory arrays, and so reduces the energy cost of data movement.

Neural Memory systems, while more complex in their integration requirements, offer enhanced flexibility for neuromorphic implementations. Their ability to dynamically adjust memory allocation and processing resources makes them particularly suitable for adaptive neuromorphic systems that must respond to changing computational demands. The content-addressable nature of Neural Memory aligns well with associative processing in neuromorphic computing, potentially enabling more biologically realistic learning mechanisms.
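The content-addressable behavior mentioned above can be sketched in software as a lookup by similarity rather than by address. The article does not specify how Neural Memory implements this, so the class name, the use of cosine similarity, and the example patterns below are all assumptions made for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class AssociativeMemory:
    """Toy content-addressable store: recall returns the value whose key best matches."""

    def __init__(self):
        self._items = []  # list of (key_vector, value) pairs

    def store(self, key, value):
        self._items.append((list(key), value))

    def recall(self, query):
        if not self._items:
            raise LookupError("memory is empty")
        _, best_value = max(
            self._items, key=lambda item: cosine_similarity(item[0], query)
        )
        return best_value


memory = AssociativeMemory()
memory.store([1.0, 0.0, 0.0], "pattern A")
memory.store([0.0, 1.0, 0.0], "pattern B")

# A noisy version of the first key still retrieves "pattern A".
recalled = memory.recall([0.9, 0.1, 0.0])
print(recalled)
```

The point of the sketch is the access pattern: a partial or noisy cue retrieves the closest stored item, which is the associative behavior the paragraph links to neuromorphic processing.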

From a hardware perspective, RRAM can be fabricated in crossbar arrays that physically resemble neural network topologies, creating an elegant structural mapping between the memory technology and neuromorphic architecture. This structural compatibility facilitates the implementation of massively parallel vector-matrix multiplications essential for neural network operations. Current neuromorphic chips like Intel's Loihi and IBM's TrueNorth could potentially incorporate RRAM elements to enhance their synaptic density and energy efficiency.
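The vector-matrix multiplication that a crossbar performs can be written down in a few lines. In an idealized array, each cell at row i and column j has conductance G[i][j]; applying voltage V[i] to each row produces, by Ohm's law and Kirchhoff's current law, a column current I[j] equal to the sum over i of V[i] * G[i][j]. The sketch below is a purely numerical model under that ideal assumption (no wire resistance, sneak paths, or device variation), and the function name and example values are hypothetical.

```python
def crossbar_currents(voltages, conductances):
    """Ideal crossbar readout: column currents for given row voltages.

    voltages:     list of row voltages, length = number of rows
    conductances: row-major matrix, conductances[i][j] for row i, column j
    returns:      list of column currents, I[j] = sum_i V[i] * G[i][j]
    """
    rows = len(conductances)
    if rows == 0 or len(voltages) != rows:
        raise ValueError("need one voltage per crossbar row")
    cols = len(conductances[0])
    if any(len(row) != cols for row in conductances):
        raise ValueError("conductance matrix must be rectangular")
    return [
        sum(voltages[i] * conductances[i][j] for i in range(rows))
        for j in range(cols)
    ]


# Hypothetical 2x2 array; units are arbitrary but consistent (V, S, A).
row_voltages = [1.0, 2.0]
cell_conductances = [
    [1.0, 0.0],
    [0.5, 2.0],
]
column_currents = crossbar_currents(row_voltages, cell_conductances)
print(column_currents)  # [2.0, 4.0]
```

In hardware, all column sums form simultaneously as currents, which is the source of the parallelism the paragraph describes; the software loop here only reproduces the result, not the speed.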

Neural Memory systems, meanwhile, offer superior integration with software-defined neuromorphic architectures. Their programmable nature allows for more sophisticated learning rules and network topologies to be implemented, potentially enabling more complex cognitive functions. This flexibility comes at the cost of higher integration complexity, requiring more sophisticated control circuitry and programming interfaces.

Looking forward, hybrid approaches that combine the physical efficiency of RRAM with the algorithmic flexibility of Neural Memory could represent the optimal path for neuromorphic integration. Such hybrid systems might utilize RRAM for fixed synaptic connections while employing Neural Memory elements for adaptive learning and context-switching functions, creating neuromorphic architectures that balance efficiency with adaptability.

