GC-MS Analysis of Fatty Acids: Sensitivity Parameters

SEP 22, 2025 · 9 MIN READ

GC-MS Fatty Acid Analysis Background and Objectives

Gas Chromatography-Mass Spectrometry (GC-MS) has evolved as a cornerstone analytical technique for fatty acid analysis since its inception in the 1950s. The technology has progressed from rudimentary separation methods to sophisticated hyphenated systems capable of detecting trace amounts of fatty acids in complex matrices. This evolution has been driven by increasing demands for higher sensitivity, improved resolution, and more accurate quantification in various fields including nutrition, clinical diagnostics, and environmental monitoring.

The trajectory of GC-MS technology for fatty acid analysis has seen significant advancements in column technology, from packed columns to high-efficiency capillary columns with specialized stationary phases optimized for fatty acid methyl esters (FAMEs). Mass analyzer technology has similarly advanced beyond the single quadrupole workhorse to triple quadrupole and time-of-flight systems, substantially improving sensitivity and selectivity.

Current trends in the field focus on miniaturization, automation, and integration with artificial intelligence for data processing. The push toward portable GC-MS systems represents a paradigm shift from laboratory-confined analysis to point-of-need applications, particularly relevant for clinical diagnostics and field research in lipid biochemistry.

The primary technical objective of optimizing sensitivity parameters in GC-MS fatty acid analysis is to achieve lower detection limits while maintaining analytical precision. This involves systematic evaluation and optimization of multiple instrumental parameters including injection techniques, temperature programming, ionization conditions, and mass analyzer settings. Additionally, sample preparation protocols significantly impact sensitivity and require standardization.

Secondary objectives include enhancing throughput capabilities through faster analysis times without compromising separation efficiency, developing robust methods capable of handling diverse sample matrices, and establishing standardized protocols for quantitative analysis that ensure inter-laboratory reproducibility.

Long-term goals in this technical domain include the development of comprehensive databases for automated fatty acid identification, integration with other -omics platforms for systems biology approaches, and creation of predictive models that correlate fatty acid profiles with biological outcomes. These advancements would significantly expand the utility of GC-MS in precision medicine, nutritional science, and environmental monitoring.

The optimization of sensitivity parameters represents not merely an incremental improvement in analytical capability but a fundamental enhancement that could unlock new applications in metabolomics, lipidomics, and biomarker discovery, where trace-level detection of fatty acids is critical for meaningful biological insight.

Market Demand for High-Sensitivity Fatty Acid Analysis

The global market for high-sensitivity fatty acid analysis has experienced substantial growth over the past decade, primarily driven by increasing applications in clinical diagnostics, pharmaceutical research, and food safety testing. The demand for precise fatty acid profiling has become critical in various sectors, with healthcare leading the expansion due to the growing recognition of fatty acids as biomarkers for numerous diseases including cardiovascular disorders, metabolic syndromes, and neurological conditions.

In the clinical diagnostics segment, the market value for fatty acid analysis instruments and reagents reached approximately $2.3 billion in 2022, with a projected annual growth rate of 7.8% through 2028. This growth is largely attributed to the rising prevalence of lifestyle-related diseases and the increasing adoption of personalized medicine approaches that rely on detailed metabolic profiling.
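As a rough consistency check on these figures, the sketch below compounds the 2022 clinical-diagnostics value at the stated 7.8% rate. It assumes a constant annual rate compounded over six years (2022 to 2028); the source does not say how the projection was built, so the result (roughly $3.6 billion) is only the value implied by that assumption.

```python
def project_value(base_value: float, annual_rate: float, years: int) -> float:
    """Value after compounding base_value at a constant annual_rate for `years` years."""
    return base_value * (1.0 + annual_rate) ** years


# Figures taken from the text: about $2.3 billion in 2022, 7.8% per year through 2028.
implied_2028 = project_value(2.3, 0.078, 2028 - 2022)
print(f"Implied 2028 clinical-diagnostics value: ${implied_2028:.1f} billion")
```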

The pharmaceutical industry represents another significant market segment, where high-sensitivity fatty acid analysis is essential for drug development, particularly in the areas of lipid-based drug delivery systems and the evaluation of drug effects on lipid metabolism. Market research indicates that pharmaceutical companies invested over $1.7 billion in advanced analytical technologies for lipid research in 2022 alone.

Food and beverage industry applications constitute the third largest market segment, valued at approximately $1.2 billion, with particular emphasis on quality control, authenticity verification, and nutritional labeling. The increasing consumer awareness regarding healthy fats and oils has propelled manufacturers to implement more rigorous testing protocols, thereby driving demand for high-sensitivity analytical methods.

Geographically, North America dominates the market with a 38% share, followed by Europe (29%) and Asia-Pacific (24%). The Asia-Pacific region, however, is experiencing the fastest growth rate at 9.2% annually, primarily due to expanding healthcare infrastructure, increasing research activities, and growing regulatory requirements for food safety in countries like China, Japan, and India.

Market analysis reveals that end users increasingly demand analytical systems with lower detection limits, higher reproducibility, and faster processing times. In a survey of laboratory managers, 76% rated sensitivity improvements the most critical factor when upgrading their GC-MS systems for fatty acid analysis, followed by automation capabilities (58%) and data processing software (52%).

The consumables segment, including derivatization reagents, standards, and columns specifically designed for fatty acid analysis, represents a significant recurring revenue stream, estimated at $850 million annually with steady growth projections.

Current Challenges in GC-MS Fatty Acid Detection

Despite significant advancements in GC-MS technology, several persistent challenges continue to limit the sensitivity and reliability of fatty acid detection. The complexity of biological matrices is a primary obstacle, as fatty acids often occur in sample environments containing numerous interfering compounds. These matrix components can mask signals, co-elute with target analytes, and cause matrix-induced signal suppression or enhancement, ultimately compromising detection limits and quantification accuracy.

Sample preparation remains a critical bottleneck in the analytical workflow. Current extraction and derivatization procedures for fatty acids are time-consuming, labor-intensive, and prone to variability. The efficiency of derivatization reactions, particularly for polyunsaturated fatty acids (PUFAs), can be inconsistent, leading to incomplete conversion and subsequent underestimation of certain fatty acid species. Additionally, oxidative degradation during sample handling introduces artifacts that confound accurate analysis.

Instrumental limitations further compound these challenges. The thermal lability of certain fatty acids, especially long-chain polyunsaturated varieties, can lead to degradation during GC separation. Current column technologies struggle to provide adequate resolution for structurally similar fatty acid isomers, particularly cis/trans configurations and compounds differing only in double bond positions. This results in overlapping peaks and ambiguous identification.

Detection sensitivity represents another significant hurdle, particularly for low-abundance fatty acids in complex samples. Conventional electron ionization (EI) at 70 eV, while providing reproducible fragmentation patterns, often results in extensive fragmentation that diminishes molecular ion abundance, thereby reducing detection sensitivity. Alternative soft ionization techniques show promise but lack standardized protocols and comprehensive spectral libraries.
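Because the molecular ion of many FAMEs is weak under 70 eV EI, selected ion monitoring (SIM) methods commonly pair it with more abundant diagnostic fragments, such as m/z 74, the McLafferty rearrangement ion that dominates saturated FAME spectra. The sketch below derives the nominal molecular ion from chain length and double-bond count and attaches qualifier ions by saturation class. The qualifier choices (m/z 74/87, 55/74, 67/79) are commonly used values included here as an assumption; the class and function names are illustrative, and the ions should be confirmed against reference spectra acquired on your own instrument.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FameTarget:
    name: str
    carbons: int       # carbon atoms in the parent fatty acid
    double_bonds: int  # number of C=C double bonds


def fame_molecular_ion(carbons: int, double_bonds: int) -> int:
    """Nominal m/z of the FAME molecular ion.

    Acid CnH(2n-2d)O2 -> methyl ester C(n+1)H(2n+2-2d)O2,
    nominal mass 12(n+1) + (2n+2-2d) + 32 = 14n + 46 - 2d.
    """
    return 14 * carbons + 46 - 2 * double_bonds


def sim_ions(target: FameTarget) -> list[int]:
    """Molecular ion followed by class-specific qualifier ions (assumed values)."""
    if target.double_bonds == 0:
        qualifiers = [74, 87]  # McLafferty ion and m/z 87, saturated FAMEs
    elif target.double_bonds == 1:
        qualifiers = [55, 74]  # monounsaturated FAMEs
    else:
        qualifiers = [67, 79]  # hydrocarbon ions common in PUFA FAME spectra
    return [fame_molecular_ion(target.carbons, target.double_bonds)] + qualifiers


for t in (FameTarget("C16:0", 16, 0), FameTarget("C18:1", 18, 1), FameTarget("C22:6", 22, 6)):
    print(t.name, sim_ions(t))
```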

Quantification accuracy is compromised by matrix effects and the variable response factors of different fatty acid species. Current calibration approaches often fail to account for these variations, leading to systematic biases in quantitative results. The limited dynamic range of many GC-MS systems further restricts simultaneous analysis of high- and low-abundance fatty acids within the same sample.
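One common way to correct for species-specific response is internal-standard calibration with relative response factors (RRFs): each fatty acid's response is measured against an internal standard in a calibration mix, and that ratio is then applied to samples. The sketch below assumes a single-point RRF and uses hypothetical peak areas and concentrations; a multi-level calibration curve is preferable when the response is not proportional across the working range.

```python
def relative_response_factor(area_analyte: float, conc_analyte: float,
                             area_is: float, conc_is: float) -> float:
    """RRF from a calibration standard: analyte response per unit concentration
    divided by internal-standard response per unit concentration."""
    return (area_analyte / conc_analyte) / (area_is / conc_is)


def quantify(area_analyte: float, area_is: float, conc_is: float, rrf: float) -> float:
    """Analyte concentration in a sample spiked with internal standard at conc_is."""
    return (area_analyte / area_is) * conc_is / rrf


# Hypothetical calibration: analyte at 10 ug/mL gives area 4.0e5,
# internal standard at 10 ug/mL gives area 5.0e5, so RRF = 0.8.
rrf = relative_response_factor(4.0e5, 10.0, 5.0e5, 10.0)
# Hypothetical sample: analyte area 2.0e5, internal standard area 5.0e5.
print(f"RRF = {rrf:.2f}, concentration = {quantify(2.0e5, 5.0e5, 10.0, rrf):.2f} ug/mL")
```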

Data processing presents additional challenges, as current automated peak integration algorithms struggle with complex chromatograms containing partially resolved peaks, baseline drift, and matrix interferences. Manual intervention remains necessary in many cases, introducing subjectivity and reducing throughput. Furthermore, the lack of standardized data reporting formats hampers inter-laboratory comparisons and meta-analyses of fatty acid profiles across different studies.
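To make the integration problem concrete, the sketch below applies one of the simplest rules for partially resolved peaks: a straight baseline drawn between the integration boundaries (a crude drift correction), followed by a perpendicular drop at the valley between the two tallest apexes. The synthetic chromatogram (two Gaussian peaks on a linearly drifting baseline) and the function names are hypothetical. Commercial software offers alternatives such as tangent skimming and deconvolution, and perpendicular drop is known to misallocate area when adjacent peaks differ strongly in size.

```python
import math


def trapezoid(xs: list[float], ys: list[float]) -> float:
    """Trapezoidal-rule area under ys sampled at xs."""
    return sum((xs[i + 1] - xs[i]) * (ys[i] + ys[i + 1]) / 2.0 for i in range(len(xs) - 1))


def perpendicular_drop(times: list[float], signal: list[float],
                       start: int, end: int) -> tuple[float, float]:
    """Areas of two partially resolved peaks between indices start and end."""
    t0, t1 = times[start], times[end]
    y0, y1 = signal[start], signal[end]
    xs = times[start:end + 1]
    # Subtract a straight baseline joining the two integration boundaries.
    ys = [signal[i] - (y0 + (y1 - y0) * (times[i] - t0) / (t1 - t0))
          for i in range(start, end + 1)]
    # Local maxima of the corrected trace; keep the two tallest, in time order.
    apexes = [i for i in range(1, len(ys) - 1) if ys[i - 1] < ys[i] >= ys[i + 1]]
    if len(apexes) < 2:
        raise ValueError("need two apexes to split")
    first, second = sorted(sorted(apexes, key=lambda i: ys[i], reverse=True)[:2])
    # Drop a vertical line at the lowest point between the two apexes.
    valley = min(range(first, second + 1), key=lambda i: ys[i])
    return trapezoid(xs[:valley + 1], ys[:valley + 1]), trapezoid(xs[valley:], ys[valley:])


def gaussian(t: float, center: float, sigma: float, height: float) -> float:
    return height * math.exp(-((t - center) ** 2) / (2.0 * sigma ** 2))


# Synthetic data: two overlapping peaks on a drifting baseline (5 + 2t).
times = [i * 0.01 for i in range(601)]
signal = [gaussian(t, 2.5, 0.2, 100.0) + gaussian(t, 3.3, 0.2, 100.0) + 5.0 + 2.0 * t
          for t in times]
area1, area2 = perpendicular_drop(times, signal, 100, 480)
print(f"peak 1: {area1:.1f}, peak 2: {area2:.1f}")
```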

Current Methodologies for Optimizing GC-MS Sensitivity

01 Sample preparation techniques for enhanced GC-MS sensitivity

Various sample preparation methods can significantly improve GC-MS sensitivity. These include extraction techniques, concentration steps, and derivatization processes that enhance the detection of target analytes. Proper sample preparation reduces matrix interference and increases the signal-to-noise ratio, allowing for detection of compounds at lower concentrations. These methods are particularly important when analyzing complex samples or when targeting trace-level compounds.

- Enhancing GC-MS sensitivity through sample preparation techniques: Various sample preparation techniques can significantly enhance the sensitivity of GC-MS analysis. These include extraction methods, concentration procedures, and derivatization approaches that improve the volatility and stability of analytes. Proper sample preparation can reduce matrix effects, minimize interference, and increase the signal-to-noise ratio, thereby improving detection limits and overall sensitivity of the GC-MS system.

- Instrumental modifications for improved GC-MS sensitivity: Modifications to GC-MS instrumentation can substantially enhance sensitivity. These include improvements in ion source design, detector technology, and column technology. Advanced ion sources increase ionization efficiency, while high-performance detectors with lower noise levels improve signal detection. Specialized columns with optimized stationary phases and dimensions can also enhance separation efficiency and reduce peak broadening, leading to better sensitivity.

- Software and data processing approaches for sensitivity enhancement: Advanced software algorithms and data processing techniques can significantly improve the effective sensitivity of GC-MS analysis. These include signal averaging, noise reduction algorithms, and deconvolution methods, often combined with targeted acquisition modes such as selected ion monitoring (SIM). By filtering out background noise and improving signal recognition, these approaches can detect compounds at lower concentrations and improve the overall sensitivity of the analysis.

- Tandem MS and hybrid techniques for enhanced sensitivity: Tandem mass spectrometry (MS/MS) and hybrid techniques combine multiple analytical approaches to achieve higher sensitivity. These methods involve multiple stages of mass analysis, which can significantly reduce chemical noise and increase selectivity. By focusing on specific ion transitions, these techniques can detect trace amounts of analytes in complex matrices, offering substantial improvements in sensitivity compared to conventional GC-MS.

- Calibration and optimization strategies for maximizing sensitivity: Proper calibration and optimization of GC-MS parameters are crucial for achieving maximum sensitivity. This includes optimizing temperature programs, carrier gas flow rates, injection techniques, and MS parameters such as ionization energy and detector voltage. Regular instrument maintenance, tuning, and validation using standard reference materials ensure consistent performance and optimal sensitivity. Strategic method development tailored to specific analytes can further enhance detection capabilities.
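The signal-averaging item in the list above rests on a simple statistical fact: averaging N scans whose noise is independent leaves the signal unchanged while reducing the noise standard deviation by a factor of √N. The sketch below checks this numerically on simulated Gaussian white noise. That noise model is an assumption: chemical noise and column bleed are not random from scan to scan and do not average away.

```python
import random
import statistics


def averaged_noise_sd(n_scans: int, n_points: int = 2000,
                      sigma: float = 1.0, seed: int = 0) -> float:
    """SD of a simulated noise-only baseline after averaging n_scans independent scans."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    averaged = [statistics.fmean(rng.gauss(0.0, sigma) for _ in range(n_scans))
                for _ in range(n_points)]
    return statistics.pstdev(averaged)


for n in (1, 4, 16):
    print(f"{n:>2} scans: noise SD {averaged_noise_sd(n):.3f} (expected {1 / n ** 0.5:.3f})")
```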

02 Ionization and detection system improvements

Enhancements to ionization sources and detection systems can dramatically improve GC-MS sensitivity. Advanced ionization approaches such as chemical ionization, optimized electron ionization (EI) conditions, and specialized ion sources generate ions more efficiently. Coupled with improved detector designs featuring higher electron multiplier gain, enhanced signal processing, and reduced electronic noise, these innovations enable detection of analytes at much lower concentrations than conventional systems.

03 Column technology and chromatographic separation optimization

Specialized column technologies and optimized chromatographic conditions significantly impact GC-MS sensitivity. Advanced stationary phases, reduced column internal diameters, and optimized film thicknesses improve separation efficiency and reduce band broadening. Temperature programming, carrier gas optimization, and flow rate control further enhance peak resolution and detection limits. These improvements collectively result in sharper peaks with higher signal intensity, enabling detection of trace compounds.

04 Data processing and analytical software solutions

Sophisticated data processing algorithms and analytical software significantly enhance GC-MS sensitivity. Advanced signal processing techniques such as noise filtering, baseline correction, and peak deconvolution improve the extraction of meaningful signals from background noise. Machine learning approaches for spectral analysis, automated calibration procedures, and specialized quantification methods further improve detection capabilities, particularly for complex samples with multiple analytes at varying concentrations.

05 Integrated system designs and multi-dimensional approaches

Integrated system designs and multi-dimensional analytical approaches provide comprehensive solutions for enhancing GC-MS sensitivity. These include tandem mass spectrometry (GC-MS/MS), comprehensive two-dimensional gas chromatography (GC×GC-MS), and hybrid systems combining multiple separation or detection techniques. Such integrated approaches offer improved selectivity, reduced chemical noise, and enhanced separation capabilities, resulting in significantly lower detection limits for complex analytical challenges.
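Several of the approaches above, such as narrow-bore columns, optimized film thickness, and the refocusing that modulation provides in GC×GC, raise sensitivity largely by compressing the same amount of analyte into a narrower peak. For a Gaussian peak of area A and standard deviation σ, the apex height is h = A / (σ√(2π)), so at constant baseline noise, halving the peak width doubles the signal-to-noise ratio. The peak widths below are hypothetical.

```python
import math


def fwhm_to_sigma(fwhm: float) -> float:
    """Gaussian standard deviation from full width at half maximum (FWHM = 2*sqrt(2 ln 2)*sigma)."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def peak_height(area: float, fwhm: float) -> float:
    """Apex height of a Gaussian peak with the given area and FWHM."""
    return area / (fwhm_to_sigma(fwhm) * math.sqrt(2.0 * math.pi))


area = 1.0e5  # same injected amount in every case (arbitrary units)
reference = peak_height(area, 3.0)  # hypothetical 3.0 s wide peak
for fwhm_s in (3.0, 1.5, 0.3):
    gain = peak_height(area, fwhm_s) / reference
    print(f"FWHM {fwhm_s:>4} s -> height x{gain:.1f}")
```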

Leading Manufacturers and Research Groups in GC-MS Technology

The market for GC-MS analysis of fatty acids is in a growth phase, driven by increasing applications in the pharmaceutical, food, and environmental sectors. The global market is expanding due to rising demand for precise analytical techniques in research and quality control. Technologically, the field shows moderate maturity, with ongoing innovation in sensitivity parameters. Leading players include established analytical instrument manufacturers such as Shimadzu Corp. and Waters Technology Corp., alongside pharmaceutical companies such as Pfizer and Fresenius Kabi. Research institutions such as TNO and Helmholtz Zentrum München contribute significantly to advancing methodologies. Companies such as MARA Renewables and BASF Plant Science are leveraging GC-MS technology for sustainable product development, indicating cross-industry adoption and future growth potential.

China Natl Tobacco Corp. Zhengzhou Tobacco Research Inst

Technical Solution: The Zhengzhou Tobacco Research Institute has developed specialized GC-MS methodologies for analyzing fatty acids in tobacco products with enhanced sensitivity parameters. Their approach incorporates a modified derivatization protocol using trimethylsilyl (TMS) reagents that improves detection of low-abundance fatty acids in complex tobacco matrices[5]. The institute has optimized split/splitless injection parameters specifically for tobacco-derived fatty acids, achieving detection limits in the low picogram range. Their method incorporates specialized temperature programming with slow ramps during elution of critical fatty acid regions to enhance chromatographic resolution of isomeric species. The institute has also developed a unique internal standard mixture containing deuterated fatty acids across different chain lengths to ensure accurate quantification across the analytical range. Their data processing algorithms incorporate automatic adjustment of integration parameters based on fatty acid structural characteristics, improving quantitative accuracy for minor components in complex tobacco samples[6].

Strengths: Highly specialized methods for complex tobacco matrices; excellent detection limits for trace fatty acids; robust quantification using custom internal standard approaches. Weaknesses: Methods highly specialized for tobacco applications with limited transferability; requires specialized expertise in tobacco chemistry; optimization primarily focused on tobacco-specific fatty acid profiles.
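The "slow ramp through the critical region" idea described above can be expressed as a multi-step oven program. The sketch below computes the oven temperature at any time and the total run time for such a program. The program itself (100 °C held 2 min, 20 °C/min to 180 °C, 2 °C/min to 220 °C, then 20 °C/min to 250 °C held 5 min) and the class and function names are hypothetical; the source does not give the institute's actual program.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ramp:
    rate_c_per_min: float  # heating rate in °C/min
    target_c: float        # temperature reached at the end of the ramp
    hold_min: float = 0.0  # isothermal hold after reaching the target


def oven_temperature(initial_c: float, initial_hold_min: float,
                     ramps: list[Ramp], t_min: float) -> float:
    """Oven temperature (°C) at time t_min for a multi-ramp program."""
    temp, clock = initial_c, initial_hold_min
    if t_min <= clock:
        return temp
    for ramp in ramps:
        ramp_time = (ramp.target_c - temp) / ramp.rate_c_per_min
        if t_min <= clock + ramp_time:
            return temp + ramp.rate_c_per_min * (t_min - clock)
        clock += ramp_time
        temp = ramp.target_c
        if t_min <= clock + ramp.hold_min:
            return temp
        clock += ramp.hold_min
    return temp  # past the end of the program: stays at the final temperature


def run_time(initial_c: float, initial_hold_min: float, ramps: list[Ramp]) -> float:
    """Total program time in minutes."""
    temp, clock = initial_c, initial_hold_min
    for ramp in ramps:
        clock += (ramp.target_c - temp) / ramp.rate_c_per_min + ramp.hold_min
        temp = ramp.target_c
    return clock


# Hypothetical program with a slow 2 °C/min segment across the critical elution window.
program = [Ramp(20.0, 180.0), Ramp(2.0, 220.0), Ramp(20.0, 250.0, hold_min=5.0)]
print(run_time(100.0, 2.0, program))                # 32.5
print(oven_temperature(100.0, 2.0, program, 10.0))  # 188.0
```

The slow segment accounts for 20 of the 32.5 minutes here, which illustrates the usual trade-off: resolution gained in the critical window is paid for in run time.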

Waters Technology Corp.

Technical Solution: Waters has pioneered innovative GC-MS solutions for fatty acid analysis with their Xevo series, featuring StepWave ion-guide technology that significantly enhances sensitivity parameters for fatty acid detection. Their systems incorporate Atmospheric Pressure Gas Chromatography (APGC) ionization, which provides softer ionization compared to traditional electron ionization, preserving molecular ions of fatty acids and improving detection of unsaturated species[3]. Waters' QuanOptimize technology automatically determines optimal sensitivity parameters for each fatty acid compound, adjusting dwell times, cone voltages, and collision energies. Their UNIFI software platform integrates with their GC-MS systems to provide automated method development workflows specifically for fatty acid analysis, with built-in libraries containing retention time and mass spectral information for hundreds of fatty acid species. Waters has also developed specialized sample preparation protocols that minimize degradation of polyunsaturated fatty acids, maintaining analytical integrity throughout the workflow[4].

Strengths: Superior soft ionization technology preserving molecular structure; comprehensive software integration; automated parameter optimization for different fatty acid classes. Weaknesses: Higher cost compared to conventional GC-MS systems; more complex operation requiring specialized training; some methods require additional specialized consumables.

Key Technical Innovations in Fatty Acid Detection

Improved method for the detection of polyunsaturated fatty acids

Patent WO2011087367A1

Innovation

- Derivatization of fatty acids and bile acids with glycidyltrimethylammonium chloride (GTMAC) allows for direct analysis by ultra-high pressure liquid chromatography coupled with mass spectrometry (UHPLC-MS) without prior fractionation, under aqueous conditions, and at mild temperatures, enabling the detection of both free and bound fatty acids, including positional isomers, in a single analysis.

Validation and Standardization Protocols

Validation and standardization protocols are essential for ensuring the reliability and reproducibility of GC-MS analysis of fatty acids. These protocols must address multiple aspects of the analytical process to maintain consistent sensitivity parameters across different laboratories and experimental conditions.

The development of robust validation protocols begins with the establishment of system suitability tests that should be performed prior to each analytical run. These tests typically include the evaluation of resolution, peak symmetry, and retention time reproducibility using standard reference materials. For fatty acid analysis, certified reference materials containing known concentrations of saturated, monounsaturated, and polyunsaturated fatty acids should be analyzed regularly to verify system performance.
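These system suitability checks reduce to a few standard calculations. The sketch below computes resolution from half-height peak widths (Rs = 1.18 (t2 - t1) / (wh,1 + wh,2)), the USP tailing factor (T = W0.05 / 2f), and the percent RSD of replicate retention times, then applies acceptance limits. The limits (Rs ≥ 1.5, T ≤ 2.0, RT RSD ≤ 1%), the function names, and the example values for a critical FAME pair are hypothetical placeholders; each laboratory sets its own criteria.

```python
import statistics


def resolution_half_height(t1: float, t2: float, w_half1: float, w_half2: float) -> float:
    """Resolution from retention times and peak widths at half height."""
    return 1.18 * (t2 - t1) / (w_half1 + w_half2)


def tailing_factor(width_at_5pct: float, front_half_width_at_5pct: float) -> float:
    """USP tailing factor T = W0.05 / (2 f), widths measured at 5% of peak height."""
    return width_at_5pct / (2.0 * front_half_width_at_5pct)


def percent_rsd(values: list[float]) -> float:
    """Relative standard deviation (sample SD) in percent."""
    return 100.0 * statistics.stdev(values) / statistics.fmean(values)


def system_suitable(rs: float, tailing: float, rt_rsd: float,
                    min_rs: float = 1.5, max_tailing: float = 2.0,
                    max_rt_rsd: float = 1.0) -> bool:
    """Apply acceptance limits (hypothetical defaults; set per laboratory SOP)."""
    return rs >= min_rs and tailing <= max_tailing and rt_rsd <= max_rt_rsd


# Hypothetical values for a critical FAME pair (retention times and widths in minutes).
rs = resolution_half_height(20.10, 20.25, 0.05, 0.05)
t = tailing_factor(0.12, 0.055)
rt_rsd = percent_rsd([20.10, 20.11, 20.09, 20.10, 20.10])
print(f"Rs={rs:.2f}  T={t:.2f}  RT RSD={rt_rsd:.3f}%  pass={system_suitable(rs, t, rt_rsd)}")
```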

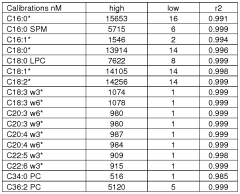

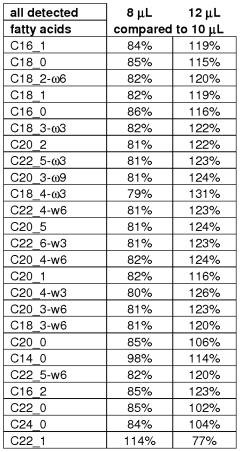

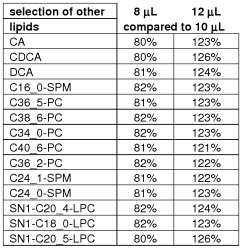

Method validation parameters must be comprehensively assessed according to recognized guidelines such as ICH Q2, FDA bioanalytical method validation guidance, or ISO/IEC 17025 requirements. These parameters include linearity (typically covering at least three orders of magnitude, with ICH Q2 recommending a minimum of five concentration levels), precision (intra-day and inter-day), accuracy (recovery studies at multiple concentration levels), limit of detection (LOD), limit of quantification (LOQ), specificity, and robustness. For fatty acid analysis, special attention should be paid to validating the derivatization procedure, because incomplete or variable conversion directly affects sensitivity.

Standardization of sample preparation techniques is particularly critical for fatty acid analysis. This includes standardized protocols for lipid extraction, hydrolysis conditions, and derivatization procedures. The choice of derivatization reagent must be consistent, for example BF3-methanol or methanolic HCl for fatty acid methyl esters (FAMEs), BSTFA or MSTFA for trimethylsilyl (TMS) esters, or MTBSTFA for tert-butyldimethylsilyl (TBDMS) esters, with clearly defined reaction conditions (temperature, time, and reagent concentration) to ensure complete conversion of fatty acids to their respective derivatives.
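Because the reagent determines which group replaces the acidic hydrogen, it also fixes the masses to monitor. The sketch below computes nominal molecular masses of a straight-chain fatty acid and its methyl, TMS, and TBDMS derivatives from chain length and double-bond count, plus the [M-57]+ ion (loss of a tert-butyl group) that is typically prominent in TBDMS ester spectra. Nominal integer masses are used, and the dictionary and function names are illustrative.

```python
# Nominal mass added when each group replaces the carboxylic hydrogen.
DERIVATIVE_SHIFT = {
    "methyl ester (FAME)": 14,  # CH3 (15) - H (1)
    "TMS ester": 72,            # Si(CH3)3 (73) - H (1)
    "TBDMS ester": 114,         # Si(CH3)2C(CH3)3 (115) - H (1)
}


def free_acid_mass(carbons: int, double_bonds: int) -> int:
    """Nominal mass of a straight-chain fatty acid CnH(2n-2d)O2."""
    return 12 * carbons + (2 * carbons - 2 * double_bonds) + 32


def derivative_masses(carbons: int, double_bonds: int) -> dict[str, int]:
    """Nominal molecular masses of common derivatives, plus the TBDMS [M-57]+ ion."""
    acid = free_acid_mass(carbons, double_bonds)
    masses = {name: acid + shift for name, shift in DERIVATIVE_SHIFT.items()}
    masses["TBDMS [M-57]+"] = masses["TBDMS ester"] - 57  # loss of tert-butyl
    return masses


print(derivative_masses(16, 0))  # palmitic acid, C16:0
```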

Instrument parameter standardization is equally important, encompassing injection conditions (split or splitless mode, split ratio, inlet temperature), column specifications (stationary phase, dimensions, film thickness), temperature programming, carrier gas flow rates, and MS detection parameters (ionization mode, selected ions for monitoring, dwell times). These parameters should be optimized specifically for fatty acid analysis to maximize sensitivity while maintaining chromatographic resolution.

Quality control measures must be integrated into routine analysis, including the use of internal standards (ideally isotopically labeled analogs of target fatty acids), system suitability checks, and regular participation in proficiency testing programs. Laboratories should establish acceptance criteria for quality control samples and implement corrective action procedures when these criteria are not met.
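Acceptance criteria like these can be encoded so they are applied identically on every run. The sketch below uses a ±15% bias limit and a two-thirds pass rule, patterned on the convention common in bioanalytical method validation guidance; treat both thresholds, the `QcResult` class, and the example values as assumptions to be replaced by the laboratory's own SOP limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QcResult:
    analyte: str
    nominal: float   # assigned concentration of the QC material
    measured: float  # concentration reported in this run


def bias_pct(result: QcResult) -> float:
    """Relative bias of a QC measurement in percent."""
    return 100.0 * (result.measured - result.nominal) / result.nominal


def run_accepted(results: list[QcResult], max_bias_pct: float = 15.0,
                 min_pass_fraction: float = 2 / 3) -> bool:
    """Accept the run if enough QC results fall within the bias limit."""
    passed = sum(abs(bias_pct(r)) <= max_bias_pct for r in results)
    return passed / len(results) >= min_pass_fraction


qcs = [QcResult("C16:0", 100.0, 104.0), QcResult("C18:2", 50.0, 58.5),
       QcResult("C22:6", 20.0, 21.0)]
for qc in qcs:
    print(qc.analyte, f"{bias_pct(qc):+.1f}%")
print("run accepted:", run_accepted(qcs))
```

Failing results (here the C18:2 QC at +17%) would still trigger the corrective-action procedure even when the run as a whole is accepted.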

Documentation and training standardization completes the validation framework, ensuring that all analysts follow identical procedures and can interpret results consistently. Standard operating procedures (SOPs) should be developed, validated, and regularly updated to reflect technological advancements and evolving best practices in GC-MS analysis of fatty acids.

The development of robust validation protocols begins with the establishment of system suitability tests that should be performed prior to each analytical run. These tests typically include the evaluation of resolution, peak symmetry, and retention time reproducibility using standard reference materials. For fatty acid analysis, certified reference materials containing known concentrations of saturated, monounsaturated, and polyunsaturated fatty acids should be analyzed regularly to verify system performance.

Method validation parameters must be comprehensively assessed according to international guidelines such as ICH, FDA, or ISO standards. These parameters include linearity (typically covering at least three orders of magnitude), precision (intra-day and inter-day), accuracy (recovery studies at multiple concentration levels), limit of detection (LOD), limit of quantification (LOQ), specificity, and robustness. For fatty acid analysis, special attention should be paid to the validation of derivatization procedures, as these can significantly impact sensitivity parameters.

Standardization of sample preparation techniques is particularly critical for fatty acid analysis. This includes standardized protocols for lipid extraction, hydrolysis conditions, and derivatization procedures. The choice of derivatization reagent (e.g., BSTFA, MSTFA, or MTBSTFA for trimethylsilyl derivatives) must be consistent, with clearly defined reaction conditions including temperature, time, and reagent concentrations to ensure complete conversion of fatty acids to their respective derivatives.

Instrument parameter standardization is equally important, encompassing injection techniques (split/splitless ratios, injection temperature), column specifications (stationary phase, dimensions, film thickness), temperature programming, carrier gas flow rates, and MS detection parameters (ionization mode, selected ions for monitoring, dwell times). These parameters should be optimized specifically for fatty acid analysis to maximize sensitivity while maintaining chromatographic resolution.

Quality control measures must be integrated into routine analysis, including the use of internal standards (ideally isotopically labeled analogs of target fatty acids), system suitability checks, and regular participation in proficiency testing programs. Laboratories should establish acceptance criteria for quality control samples and implement corrective action procedures when these criteria are not met.

Standardized documentation and training complete the validation framework, ensuring that all analysts follow identical procedures and interpret results consistently. Standard operating procedures (SOPs) should be developed, validated, and regularly updated to reflect technological advances and evolving best practices in GC-MS analysis of fatty acids.

Environmental and Sample Matrix Considerations

The environmental conditions and sample matrix composition significantly impact the sensitivity and accuracy of GC-MS analysis of fatty acids. Temperature fluctuations in the laboratory can compromise sample stability, promoting oxidative degradation of unsaturated fatty acids. Humidity levels above 60% may introduce moisture into samples, reducing derivatization efficiency (silylating reagents are readily hydrolyzed by water) and affecting column performance. Controlled environmental conditions are therefore essential for reproducible fatty acid analysis.

Sample matrix complexity presents considerable challenges for fatty acid detection. Biological matrices such as plasma, tissue homogenates, and food samples contain numerous interfering compounds that can co-elute with target fatty acids. Phospholipids, sterols, and other lipid classes frequently mask signals from less abundant fatty acids, necessitating effective sample preparation strategies. Proteins in biological samples (serum albumin, for example, binds free fatty acids) can also retain fatty acids, reducing extraction efficiency and analytical sensitivity.

Matrix effects alter analyte signal intensity and compromise quantitative accuracy. In GC-MS these effects arise largely in the inlet, where co-injected matrix components mask active sites and enhance analyte transfer to the column, and they can also arise in the ion source when co-eluting matrix components alter ionization efficiency. Studies have reported that matrix-matched calibration can improve quantitative accuracy by up to 30% compared with solvent-based standards when fatty acids are determined in complex biological samples.
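
A common way to quantify this matrix effect is to compare the slope of a matrix-matched calibration curve with that of a solvent-based curve: ME% = (slope_matrix / slope_solvent - 1) × 100, where positive values indicate signal enhancement and negative values suppression. The sketch below implements that comparison with ordinary least-squares slopes; the calibration data are hypothetical.

```python
def ols_slope(x: list[float], y: list[float]) -> float:
    """Ordinary least-squares slope of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def matrix_effect_pct(conc: list[float], solvent_resp: list[float],
                      matrix_resp: list[float]) -> float:
    """ME% = (slope_matrix / slope_solvent - 1) * 100.
    Positive values indicate signal enhancement, negative values suppression."""
    return (ols_slope(conc, matrix_resp) / ols_slope(conc, solvent_resp) - 1.0) * 100.0


# Hypothetical data: the same concentrations prepared in solvent and in a matrix extract.
conc = [1.0, 2.0, 4.0, 8.0]
solvent = [1000.0, 2000.0, 4000.0, 8000.0]
matrix = [1200.0, 2400.0, 4800.0, 9600.0]
print(f"matrix effect = {matrix_effect_pct(conc, solvent, matrix):+.1f}%")
```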

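The pH of the sample matrix also affects the derivatization reactions commonly employed in fatty acid analysis. Methylation is usually performed with acid-catalyzed reagents (e.g., BF3-methanol or methanolic HCl) or base-catalyzed reagents (e.g., methanolic KOH or sodium methoxide), so alkaline residues carried over from the sample can neutralize an acid catalyst, and acidic residues a basic one, leading to incomplete conversion and underestimation of fatty acid concentrations. Buffer selection and any neutralization steps during sample preparation must account for these pH dependencies to optimize derivatization yield.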

Salt concentration in the sample matrix influences the extraction efficiency and chromatographic behavior of fatty acids. High salt content (>100 mM) can alter partition behavior and phase separation during liquid-liquid extraction, changing recovery rates, particularly for the more water-soluble medium-chain fatty acids (C8-C12). Additionally, nonvolatile salt residues may accumulate in the GC inlet and create active sites that promote degradation of thermally labile polyunsaturated fatty acids.
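
Extraction recovery is usually checked by spiking a known amount of analyte into the sample matrix: Recovery (%) = (C_spiked - C_unspiked) / C_added × 100. The sketch below applies this formula to replicate spikes; the C8:0 values are hypothetical, and no acceptance range is implied.

```python
import statistics


def spike_recovery_pct(found_spiked: float, found_unspiked: float, added: float) -> float:
    """Recovery (%) = (C_spiked - C_unspiked) / C_added * 100, all in the same units."""
    return (found_spiked - found_unspiked) / added * 100.0


def mean_recovery_pct(replicates: list[tuple[float, float, float]]) -> float:
    """Mean recovery over (found_spiked, found_unspiked, added) replicates."""
    return statistics.mean(spike_recovery_pct(*r) for r in replicates)


# Hypothetical C8:0 results (ug/mL) for three spiked aliquots of one sample.
c8_replicates = [(19.2, 12.0, 8.0), (19.6, 12.0, 8.0), (18.8, 12.0, 8.0)]
print(f"mean C8:0 recovery = {mean_recovery_pct(c8_replicates):.1f}%")
```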

Antioxidant addition strategies must be tailored to specific sample matrices. Lipid-rich samples require higher concentrations of butylated hydroxytoluene (BHT) or other antioxidants (typically 0.05-0.1% w/v) to prevent oxidative degradation during storage and analysis. The effectiveness of antioxidants varies with matrix composition, with greater protection needed for samples containing high levels of transition metals that catalyze oxidation reactions.
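
Because % w/v means grams per 100 mL, the 0.05-0.1% w/v range corresponds to 0.5-1.0 mg of BHT per mL. The small helper below converts a target % w/v into the BHT mass to weigh out, assuming the percentage refers to the extraction or storage solvent; the 20 mL volume is an arbitrary example.

```python
def bht_mass_mg(percent_wv: float, solvent_volume_ml: float) -> float:
    """BHT mass (mg) for a % w/v solution: 1% w/v = 1 g/100 mL = 10 mg/mL."""
    return percent_wv * 10.0 * solvent_volume_ml


# Range quoted in the text (0.05-0.1% w/v) for a hypothetical 20 mL of solvent.
for pct in (0.05, 0.1):
    print(f"{pct}% w/v in 20 mL -> {bht_mass_mg(pct, 20.0):.1f} mg BHT")
```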
