GC-MS Systematic Error Minimization: Lab Practices

SEP 22, 2025 | 9 MIN READ

GC-MS Technology Evolution and Precision Goals

Gas Chromatography-Mass Spectrometry (GC-MS) has evolved significantly since its inception in the 1950s, transforming from a specialized analytical technique to an essential tool in modern laboratories. The initial systems featured rudimentary gas chromatographs coupled with basic mass spectrometers, offering limited resolution and sensitivity. By the 1970s, computerized data systems began to enhance analytical capabilities, marking a pivotal advancement in data processing and interpretation.

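The 1980s and 1990s broadened both ionization and mass-analysis options. Electron ionization (EI, historically called electron impact), the standard GC-MS ionization mode from the outset, was increasingly complemented by chemical ionization (CI), a softer technique introduced in the 1960s that preserves molecular-ion information for compounds that fragment extensively under EI. On the analyzer side, benchtop quadrupole and ion trap instruments made GC-MS far more accessible, while time-of-flight (TOF) analyzers added faster spectral acquisition and, in high-resolution designs, improved mass accuracy and resolution.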

Recent decades have brought considerable progress in miniaturization, automation, and integration. Modern GC-MS systems combine precise oven temperature programming, high-efficiency capillary columns, and more sensitive detectors, which together shorten analysis times and lower detection limits. Machine learning algorithms are increasingly applied to data analysis, where they can support compound identification and quantification.

The precision goals for GC-MS technology have become increasingly demanding as applications expand across pharmaceutical, environmental, forensic, and clinical fields. Current objectives focus on achieving sub-parts-per-billion detection limits, reducing systematic errors to below 1%, and enhancing reproducibility across different laboratories and instruments. These goals are driven by regulatory requirements and the need for reliable data in critical decision-making processes.

Systematic error minimization has emerged as a central focus in GC-MS development, with particular emphasis on reducing variability in sample preparation, improving injection precision, and enhancing calibration methodologies. The industry is moving toward standardized protocols that incorporate internal standards, quality control samples, and rigorous validation procedures to ensure data integrity and comparability.

Future development is likely to move toward fully automated systems with self-calibration, real-time error detection and correction, and comprehensive quality assurance features. Digital twin technology and predictive maintenance algorithms may further reduce systematic errors by anticipating and mitigating instrument failures before they affect analytical results.

Market Demand for High-Accuracy Analytical Chemistry

The analytical chemistry market has witnessed substantial growth in recent years, driven primarily by increasing demands for high-accuracy measurements across various industries. The global analytical instrumentation market, valued at approximately $58 billion in 2022, is projected to reach $81 billion by 2027, with GC-MS systems representing a significant segment of this market. This growth trajectory underscores the critical importance of precision analytical techniques in modern scientific and industrial applications.

Pharmaceutical and biotechnology sectors constitute the largest market segments demanding high-accuracy analytical chemistry solutions. These industries require precise quantification of compounds for drug development, quality control, and regulatory compliance. The implementation of stringent regulatory frameworks, such as FDA's Process Analytical Technology (PAT) initiative and ICH guidelines, has further intensified the need for minimizing systematic errors in analytical methodologies.

Environmental monitoring represents another substantial market driver, with governmental agencies worldwide setting increasingly stringent limits for contaminants in air, water, and soil samples. Detecting trace compounds at parts-per-billion or parts-per-trillion levels requires analytical systems with minimal systematic error. Market research indicates that environmental testing laboratories are willing to pay a 15-20% premium for instrumentation that demonstrates superior accuracy and reproducibility.

The food and beverage industry has emerged as a rapidly growing segment for high-accuracy analytical chemistry applications. Consumer demand for food safety, authenticity verification, and nutritional content analysis has created a market estimated at $6.2 billion annually for advanced analytical testing. GC-MS systems with minimized systematic errors are particularly valuable for detecting adulterants, pesticide residues, and flavor compounds at trace levels.

Clinical diagnostics represents an expanding application area, with metabolomics and clinical toxicology increasingly relying on GC-MS for biomarker discovery and therapeutic drug monitoring. The precision medicine paradigm has amplified the importance of accurate quantification, as treatment decisions increasingly depend on precise measurement of biomarkers and drug metabolites.

Market surveys indicate that laboratories are prioritizing investments in error minimization strategies, with 78% of laboratory managers citing measurement accuracy as a "critical" or "very important" factor in purchasing decisions. This trend is particularly pronounced in contract research organizations (CROs) and testing laboratories, where analytical precision directly impacts business reputation and client retention.

The market demand for systematic error minimization in GC-MS extends beyond hardware to include software solutions, reference standards, and specialized training programs. This ecosystem approach to accuracy improvement represents a significant market opportunity, estimated at $1.8 billion annually and growing at 9.7% CAGR.

Current Challenges in GC-MS Systematic Error Control

Despite significant advancements in GC-MS technology, laboratories continue to face persistent challenges in controlling systematic errors that compromise analytical accuracy and reliability. One of the primary obstacles is instrument drift, where detector sensitivity and chromatographic retention times gradually change during extended analytical sequences. This phenomenon introduces variability that becomes particularly problematic during large-scale studies or when comparing results across different time periods.
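One common way to quantify and correct this kind of drift is to inject a pooled QC sample at regular intervals and normalize each sample's response to the QC trend. The sketch below assumes a single analyte, QC injections at known positions in the sequence, and simple linear interpolation between bracketing QC injections; the function name `qc_drift_correct` and the data layout are hypothetical, not taken from any particular software. Published metabolomics workflows often fit smoother curves (for example LOESS) instead of straight segments.

```python
from bisect import bisect_left
from statistics import median


# Illustrative sketch; the function name and data are hypothetical.
def qc_drift_correct(samples, qcs):
    """Correct peak areas for signal drift using bracketing pooled-QC injections.

    samples: list of (injection_order, peak_area) for study samples
    qcs:     list of (injection_order, peak_area) for the pooled QC, sorted by
             injection order, with at least two QC injections
    Returns drift-corrected areas scaled to the median QC response.
    """
    qc_orders = [order for order, _ in qcs]
    reference = median(area for _, area in qcs)

    corrected = []
    for order, area in samples:
        # Index of the first QC injected at or after this sample; clamped so that
        # samples before the first or after the last QC use the end segment.
        i = min(max(bisect_left(qc_orders, order), 1), len(qcs) - 1)
        (o0, a0), (o1, a1) = qcs[i - 1], qcs[i]
        # Expected QC response at this position by linear interpolation.
        expected = a0 + (a1 - a0) * (order - o0) / (o1 - o0)
        corrected.append(area * reference / expected)
    return corrected


# Hypothetical run: QC response falls from 1000 to 800 over 20 injections.
qcs = [(1, 1000.0), (11, 900.0), (21, 800.0)]
samples = [(6, 950.0), (16, 850.0)]
print(qc_drift_correct(samples, qcs))  # [900.0, 900.0]
```

Without correction, the two samples above would appear to differ by about 10% purely because of drift.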

Sample preparation inconsistencies are another major source of systematic error. The multi-step nature of extraction, derivatization, and concentration creates many opportunities to introduce variability. Even minor deviations in solvent volumes, extraction times, or temperatures can propagate through the analytical workflow and produce significant quantitative discrepancies that may not be apparent during data analysis.
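Because a quantitative result is usually a product and quotient of several measured or assumed factors (instrument response, extract volume, sample volume or mass, recovery), small relative biases in each factor compound multiplicatively. The hypothetical helper `combined_relative_bias` below illustrates this, assuming each factor's bias is known and the result is a simple product/quotient; it is a teaching sketch, not a full uncertainty budget.

```python
from math import prod


# Illustrative sketch; the helper name and example biases are hypothetical.
def combined_relative_bias(multiplied, divided):
    """Net relative bias of a result computed as prod(multiplied) / prod(divided).

    Each entry is the relative error of the value used in the calculation
    compared with its true value (e.g. 0.02 for a value recorded 2% too high).
    """
    numerator = prod(1.0 + b for b in multiplied)
    denominator = prod(1.0 + b for b in divided)
    return numerator / denominator - 1.0


# Hypothetical case: instrument response 2% high, final extract volume recorded
# 1% high, and a recovery factor (a divisor) assumed 3% lower than its true value.
bias = combined_relative_bias([0.02, 0.01], [-0.03])
print(f"{bias:.2%}")  # 6.21%, versus 6.00% from simply adding the three biases
```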

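Matrix effects continue to challenge even experienced laboratories. Co-extracted components of complex biological or environmental samples can enhance or suppress analyte signals. In GC-MS a common mechanism is matrix-induced response enhancement, in which matrix components mask active sites in the inlet and liner that would otherwise adsorb or degrade analytes; co-eluting compounds can also affect ion source behavior. These effects vary between samples and are difficult to model, so solvent-based external calibration rarely compensates for them fully, and matrix-matched calibration or isotopically labeled internal standards are typically needed. The situation becomes more complex when diverse sample types are run in the same analytical batch.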
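A widely used way to quantify a matrix effect is to compare the slope of a matrix-matched calibration with that of a solvent-only calibration over the same concentrations. The sketch below assumes both curves were acquired under identical conditions; the function names are hypothetical. A positive value indicates signal enhancement (typical of inlet effects in GC), a negative value suppression; thresholds around ±20% are often used in residue analysis to decide whether matrix-matched calibration is required.

```python
# Illustrative sketch; function names and data are hypothetical.
def ols_slope(x, y):
    """Ordinary least-squares slope of y regressed on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx


def matrix_effect_percent(conc, solvent_response, matrix_response):
    """Matrix effect as the percent difference between matrix-matched and solvent slopes."""
    return (ols_slope(conc, matrix_response) / ols_slope(conc, solvent_response) - 1.0) * 100.0


# Hypothetical data: the matrix-matched curve reads 20% higher at every level.
conc = [1.0, 2.0, 4.0, 8.0]
solvent = [100.0 * c for c in conc]
matrix = [120.0 * c for c in conc]
print(round(matrix_effect_percent(conc, solvent, matrix), 1))  # 20.0
```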

Calibration strategy limitations further exacerbate systematic error issues. Traditional external calibration methods often fail to account for recovery losses during sample preparation, while internal standardization may be compromised by differential behavior between analytes and their designated internal standards. Multi-point calibrations can introduce their own errors through inappropriate mathematical models or weighting schemes that don't reflect the true instrument response characteristics.
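The effect of the weighting scheme shows up clearly in back-calculated calibration standards. In the hypothetical data below, the three lowest standards lie exactly on y = x and only the top standard reads 1% high. An unweighted fit lets that high point pull the intercept, while 1/x² weighting (one common choice when response variance grows with concentration) keeps the low end accurate. The helper names are hypothetical.

```python
# Illustrative sketch; helper names and data are hypothetical.
def weighted_linear_fit(x, y, w):
    """Weighted least-squares fit of y = a + b*x; returns (a, b)."""
    sw = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, x)) / sw
    my = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - mx) * (yi - my) for wi, xi, yi in zip(w, x, y))
    b = sxy / sxx
    return my - b * mx, b


def back_calc_errors(x, y, w):
    """Percent error of each standard back-calculated from the fitted line."""
    a, b = weighted_linear_fit(x, y, w)
    return [100.0 * ((yi - a) / b - xi) / xi for xi, yi in zip(x, y)]


conc = [1.0, 10.0, 100.0, 1000.0]
resp = [1.0, 10.0, 100.0, 1010.0]
unweighted = back_calc_errors(conc, resp, [1.0] * len(conc))
inv_x2 = back_calc_errors(conc, resp, [1.0 / c**2 for c in conc])
print(f"lowest standard: unweighted {unweighted[0]:+.1f}%, 1/x^2 {inv_x2[0]:+.2f}%")
```

With these numbers the unweighted fit back-calculates the lowest standard more than 30% high, while the 1/x² fit keeps it within a fraction of a percent, at the cost of a slightly larger error at the top standard.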

Data processing workflows introduce additional layers of systematic error through peak integration algorithms that may handle baseline noise, peak overlap, or tailing inconsistently. The subjective nature of some integration decisions, particularly for complex chromatograms, creates operator-dependent variability that undermines reproducibility across different analysts or laboratories.
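How strongly peak areas depend on integration decisions can be shown with a simple trapezoidal integrator that draws a straight baseline between the chosen start and end points. The function and the synthetic chromatogram (a triangular peak on a rising baseline) are hypothetical; commercial integrators use more elaborate baseline and peak-detection logic.

```python
# Illustrative sketch; the function name and chromatogram are hypothetical.
def peak_area(times, signal, start, end):
    """Trapezoidal area above a straight baseline joining points start and end."""
    t0, t1 = times[start], times[end]
    s0, s1 = signal[start], signal[end]

    def height(i):
        # Signal minus the interpolated baseline at scan i.
        return signal[i] - (s0 + (s1 - s0) * (times[i] - t0) / (t1 - t0))

    area = 0.0
    for i in range(start, end):
        area += 0.5 * (height(i) + height(i + 1)) * (times[i + 1] - times[i])
    return area


times = [0, 1, 2, 3, 4, 5, 6]
signal = [10, 12, 24, 36, 28, 20, 22]  # peak of height 20 on a baseline of 10 + 2*t
print(round(peak_area(times, signal, 1, 5), 6))  # 40.0 with appropriate limits
print(round(peak_area(times, signal, 2, 5), 6))  # 20.0 when the start is set one scan late
```

A one-scan shift in the start point halves the reported area here, which is why documented, consistently applied integration parameters matter.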

Instrument contamination represents a persistent challenge, with carryover effects and gradual column degradation introducing systematic biases that evolve over an instrument's operational lifetime. These effects are particularly insidious as they may manifest gradually, making them difficult to detect through routine quality control procedures until they reach critical levels that significantly impact analytical results.
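Carryover is usually checked by injecting a solvent blank immediately after the highest calibration standard. The sketch below scans a hypothetical injection sequence for that pattern, reports carryover as a percentage of the preceding high standard, and tests the blank against a limit expressed as a fraction of the lower-limit-of-quantitation (LLOQ) response. The 20% default mirrors criteria used in bioanalytical validation guidance such as ICH M10, but the appropriate limit is method-specific, and the function name and sequence labels are hypothetical.

```python
# Illustrative sketch; the function name, labels, and areas are hypothetical.
def flag_carryover(sequence, lloq_response, limit_fraction=0.20):
    """Check blanks that directly follow a high standard.

    sequence: list of (injection_type, peak_area) in run order, where
              injection_type is a label such as "high", "blank" or "sample"
    Returns a list of (carryover_percent_of_high, passes_limit) tuples.
    """
    results = []
    for (prev_type, prev_area), (cur_type, cur_area) in zip(sequence, sequence[1:]):
        if prev_type == "high" and cur_type == "blank":
            percent = 100.0 * cur_area / prev_area
            passes = cur_area <= limit_fraction * lloq_response
            results.append((percent, passes))
    return results


run = [("high", 100000.0), ("blank", 150.0), ("sample", 5200.0),
       ("high", 100000.0), ("blank", 400.0)]
print(flag_carryover(run, lloq_response=1000.0))  # [(0.15, True), (0.4, False)]
```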

Environmental factors including laboratory temperature fluctuations, humidity variations, and power supply instabilities further contribute to systematic error profiles that may not be adequately captured by standard quality control measures, creating subtle but significant shifts in analytical performance that compromise data comparability across different operating conditions.

Best Laboratory Practices for GC-MS Error Minimization

01 Calibration methods for reducing systematic errors in GC-MS

Various calibration techniques can be employed to minimize systematic errors in GC-MS analysis. These include the use of internal standards, multi-point calibration curves, and reference materials to compensate for instrumental drift and response variations. Advanced calibration algorithms can adjust for matrix effects and detector non-linearity, significantly improving the accuracy and reliability of quantitative measurements in complex samples.
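Internal-standard calibration regresses the analyte/internal-standard (IS) response ratio, rather than the raw analyte response, against concentration, so injection-to-injection variation that affects both peaks equally cancels out. A minimal sketch with hypothetical peak areas, assuming a linear, unweighted response-ratio model; the function names are hypothetical.

```python
# Illustrative sketch; function names and peak areas are hypothetical.
def fit_line(x, y):
    """Unweighted least-squares fit of y = intercept + slope * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


def calibrate_with_internal_standard(std_conc, analyte_areas, is_areas):
    """Fit the analyte/IS area ratio against standard concentration."""
    ratios = [a / i for a, i in zip(analyte_areas, is_areas)]
    return fit_line(std_conc, ratios)


def quantify(analyte_area, is_area, intercept, slope):
    """Back-calculate concentration from a sample's analyte/IS area ratio."""
    return (analyte_area / is_area - intercept) / slope


# Hypothetical standards: IS areas vary by injection, but the ratio tracks concentration.
std_conc = [1.0, 2.0, 5.0, 10.0]
is_areas = [1000.0, 950.0, 1050.0, 980.0]
analyte_areas = [500.0, 950.0, 2625.0, 4900.0]
intercept, slope = calibrate_with_internal_standard(std_conc, analyte_areas, is_areas)
print(quantify(1800.0, 900.0, intercept, slope))  # 4.0
```

The correction only works if the internal standard behaves like the analyte, which is why isotopically labeled analogues are preferred where available.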

02 Hardware modifications to minimize systematic errors

Specialized hardware components and modifications can reduce systematic errors in GC-MS systems. These include improved ion source designs, enhanced vacuum systems, and temperature-controlled sample introduction mechanisms. Advanced detector technologies with better linearity and stability characteristics help minimize drift and response variations across different concentration ranges and sample types.

03 Data processing algorithms for error correction

Sophisticated data processing algorithms can identify and correct systematic errors in GC-MS analysis. These include baseline correction methods, peak deconvolution techniques, and automated noise reduction algorithms. Machine learning approaches can recognize patterns in systematic errors and apply appropriate corrections, improving both qualitative identification and quantitative measurement precision across diverse sample types.

04 Sample preparation techniques to reduce matrix-related errors

Optimized sample preparation methods can significantly reduce matrix-related systematic errors in GC-MS analysis. These include selective extraction procedures, derivatization techniques to improve compound volatility, and clean-up protocols to remove interfering substances. Standardized sample handling procedures help minimize variability introduced before instrumental analysis, ensuring more consistent and accurate results.

05 Quality control and validation procedures

Comprehensive quality control and validation procedures are essential for identifying and managing systematic errors in GC-MS analysis. These include system suitability tests, regular performance verification using certified reference materials, and statistical process control methods. Interlaboratory comparison studies and proficiency testing help identify instrument-specific biases and establish correction factors to enhance method transferability and result comparability.
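Spike-recovery checks are one routine way to verify that sample preparation is not introducing a systematic loss or gain. The sketch below computes per-replicate recovery, mean recovery, and relative standard deviation for hypothetical spiked and unspiked results, and checks the mean against an acceptance window. The 70-120% default is an example of the kind of range used in pesticide-residue validation guidance, not a universal criterion; the function name is hypothetical.

```python
from statistics import mean, stdev


# Illustrative sketch; the function name, window, and data are hypothetical.
def recovery_summary(spiked, unspiked, added, window=(70.0, 120.0)):
    """Mean spike recovery (%), its RSD (%), and whether the mean is within window."""
    recoveries = [100.0 * (s - u) / added for s, u in zip(spiked, unspiked)]
    mean_rec = mean(recoveries)
    rsd = 100.0 * stdev(recoveries) / mean_rec
    return mean_rec, rsd, window[0] <= mean_rec <= window[1]


# Three replicate samples spiked with 10.0 units; native level measured at 1.0.
print(recovery_summary([9.0, 9.5, 8.5], [1.0, 1.0, 1.0], 10.0))  # (80.0, 6.25, True)
```

A mean recovery that is consistently below 100% is a systematic error, not random scatter, and should be traced to the extraction or clean-up step rather than averaged away.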

Leading Manufacturers and Research Institutions in GC-MS

Systematic error minimization in GC-MS laboratory practice is in a growth phase, with the market expanding as demand for analytical chemistry increases across the pharmaceutical, environmental, and research sectors. The global market for GC-MS technologies is estimated at $4-5 billion, growing at 5-7% annually. Technologically, the field is maturing: protocols are well established, but error-reduction algorithms and calibration methods continue to evolve. Leading academic institutions such as Tsinghua University, Fudan University, and Zhejiang University are advancing fundamental research, while companies including FUJIFILM, Abbott Laboratories, and Qualcomm are developing commercial applications with improved accuracy. Texas Instruments and Samsung Electronics contribute hardware innovations that enhance instrument precision, producing a competitive landscape balanced between academic research and industrial implementation.

Tsinghua University

Technical Solution: Tsinghua University has developed a comprehensive GC-MS systematic error minimization framework that combines advanced statistical methods with rigorous laboratory protocols. Their approach begins with a systematic characterization of error sources through designed experiments that quantify contributions from sample preparation, instrument variability, and data processing. Tsinghua's methodology incorporates multivariate statistical process control (MSPC) to monitor instrument performance across multiple parameters simultaneously, enabling early detection of subtle shifts in system behavior before they affect analytical results[1]. Their laboratory practices include standardized sample preparation workflows with gravimetric verification steps and temperature-controlled storage conditions to minimize pre-analytical variability. Tsinghua has pioneered advanced internal standardization techniques using multiple internal standards selected based on chemical similarity to target analytes rather than relying on a single compound[2]. Their data processing pipeline incorporates machine learning algorithms that can identify and correct for systematic biases in peak integration and quantitation, particularly for complex environmental and biological samples with challenging matrix effects[3]. The university has also developed specialized training programs for laboratory personnel that emphasize understanding sources of systematic error and implementing appropriate corrective actions.
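The source does not describe Tsinghua's implementation, but the core statistic behind most MSPC charts is Hotelling's T², the squared Mahalanobis distance of a new observation from the mean of an in-control reference set. A minimal pure-Python sketch follows, assuming a hypothetical reference set of two standardized per-run QC parameters (for example a retention-time deviation and a normalized internal-standard area). The control limit, normally derived from an F or chi-square distribution, is not computed here, and the function names are hypothetical.

```python
from statistics import mean


# Illustrative sketch; function names and reference data are hypothetical.
def covariance_matrix(rows):
    """Column means and sample covariance matrix (n - 1 denominator)."""
    n, p = len(rows), len(rows[0])
    means = [mean(r[j] for r in rows) for j in range(p)]
    cov = [[sum((r[i] - means[i]) * (r[j] - means[j]) for r in rows) / (n - 1)
            for j in range(p)] for i in range(p)]
    return means, cov


def solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= f * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def hotelling_t2(reference, x):
    """T^2 of observation x relative to the in-control reference rows."""
    means, cov = covariance_matrix(reference)
    d = [xi - mi for xi, mi in zip(x, means)]
    return sum(di * si for di, si in zip(d, solve(cov, d)))


# Reference runs in which the two parameters are strongly positively correlated.
reference = [(-1.0, -1.0), (1.0, 1.0), (-1.0, -0.8), (1.0, 0.8), (0.0, 0.1), (0.0, -0.1)]
print(round(hotelling_t2(reference, (1.0, 1.0)), 1))   # about 2.1: follows the usual pattern
print(round(hotelling_t2(reference, (1.0, -1.0)), 1))  # about 302: breaks the correlation
```

The second observation lies within the reference range of each parameter taken separately, so univariate charts would not flag it; the multivariate statistic does.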

Strengths: Their multivariate statistical process control approach provides exceptional sensitivity for detecting subtle instrument drift, reportedly identifying potential issues 2-3 days before conventional quality control methods would detect problems. The machine learning-based data processing significantly improves quantitation accuracy for complex samples. Weaknesses: Implementation requires substantial statistical expertise and computational resources that may not be available in routine analytical laboratories.

Brigham Young University

Technical Solution: Brigham Young University has developed an innovative GC-MS systematic error minimization approach focused on trace analysis and environmental applications. Their methodology emphasizes fundamental understanding of error propagation throughout the analytical workflow and implements targeted interventions at critical points. BYU's system incorporates a comprehensive initial validation protocol that characterizes instrument-specific response factors and detection limits for target compounds under various operating conditions[1]. Their laboratory practices include detailed temperature mapping of sample storage areas and preparation spaces to minimize environmentally-induced variability. BYU has pioneered advanced sample preparation techniques that minimize matrix effects through selective extraction and clean-up procedures tailored to specific sample types[2]. Their approach includes regular performance verification using certified reference materials with statistical evaluation of recovery rates and precision metrics. BYU's data processing methodology incorporates advanced deconvolution algorithms that can resolve closely eluting peaks and correct for spectral interferences, particularly valuable for complex environmental samples[3]. The university has also developed specialized training modules for analysts that emphasize understanding the theoretical basis of systematic errors and implementing appropriate quality control measures.

Strengths: Their selective sample preparation techniques have demonstrated significant improvements in method detection limits, with reported enhancements of 2-5 fold for challenging environmental contaminants. Their comprehensive validation approach provides robust performance across diverse sample types. Weaknesses: The intensive initial validation and ongoing quality control requirements demand significant time investment, potentially limiting sample throughput in high-volume laboratories.

Critical Innovations in Calibration and Reference Standards

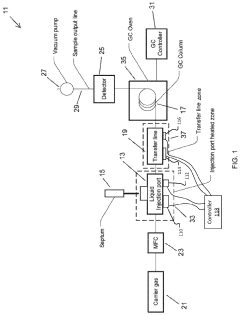

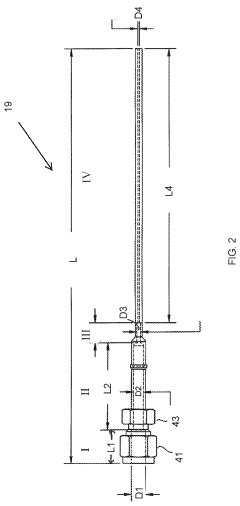

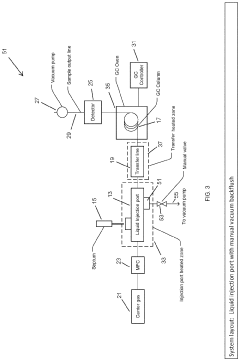

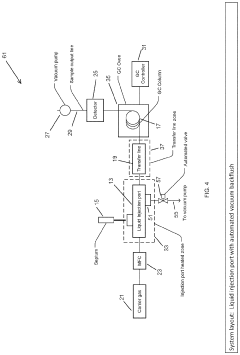

Large Volume Gas Chromatography Injection Port

Patent (Active): US20220082538A1

Innovation

- A method and system that condense solvent vapors before entering a temporally-resolving separator, such as a GC column, allowing larger sample volumes to be injected without splitting, thereby maintaining analytes in the vapor phase and enhancing detection sensitivity.

Phospholipid containing garlic, curry leaves and turmeric extracts for treatment of adipogenesis

Patent (Pending): IN202141048482A

Innovation

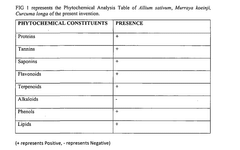

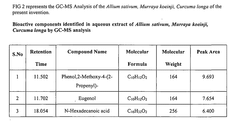

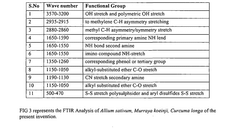

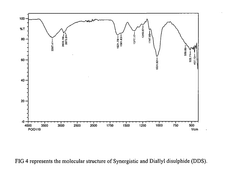

- A synergistic extract derived from Allium sativum, Murraya koenigii, and Curcuma longa, combined with phospholipid as a Phytosome complex, is developed for enhanced bioavailability and therapeutic potential. The method covers extraction, purification, and characterization using GC-MS, FTIR, and SEM, demonstrating the presence of bioactive compounds and antioxidant activity.

Quality Assurance Protocols for Analytical Laboratories

Quality assurance protocols form the backbone of reliable analytical operations in laboratories utilizing GC-MS technology. These protocols must be systematically implemented to minimize systematic errors that can compromise data integrity. A comprehensive quality assurance framework begins with the establishment of standard operating procedures (SOPs) that detail every aspect of sample handling, instrument operation, and data processing. These SOPs should be regularly reviewed and updated to incorporate technological advancements and regulatory changes.

Daily instrument performance verification represents a critical component of quality assurance. This includes running calibration standards at the beginning of each analytical session, monitoring retention time shifts, mass accuracy, and detector response. Implementing control charts to track these parameters over time enables early detection of instrumental drift before it affects analytical results. Statistical process control methods can be applied to establish warning and action limits for key performance indicators.
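Warning and action limits are conventionally set at ±2 and ±3 standard deviations around the mean of an in-control baseline (a Shewhart chart). A minimal sketch, assuming hypothetical daily values of one QC parameter (here an internal-standard response expressed as a percentage of its reference value); additional run rules, such as Westgard rules, are commonly layered on top and are not shown. Function names are hypothetical.

```python
from statistics import mean, stdev


# Illustrative sketch; function names and baseline data are hypothetical.
def control_limits(baseline):
    """Limits from in-control baseline data: mean ± 2s (warning), mean ± 3s (action)."""
    m, s = mean(baseline), stdev(baseline)
    return {"center": m,
            "warning": (m - 2 * s, m + 2 * s),
            "action": (m - 3 * s, m + 3 * s)}


def classify(value, limits):
    """Label a new QC value as 'in control', 'warning', or 'action'."""
    low_a, high_a = limits["action"]
    low_w, high_w = limits["warning"]
    if value < low_a or value > high_a:
        return "action"
    if value < low_w or value > high_w:
        return "warning"
    return "in control"


limits = control_limits([100.0, 102.0, 98.0, 101.0, 99.0])
for value in (101.0, 104.0, 106.0):
    print(value, classify(value, limits))
# 101.0 in control / 104.0 warning / 106.0 action
```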

Reference materials and certified standards must be incorporated into analytical sequences at defined intervals. These materials, traceable to national or international standards, provide objective verification of method accuracy. For GC-MS specifically, isotopically labeled internal standards compensate for matrix effects and extraction variability, significantly reducing systematic bias in quantitative analyses.
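To decide whether a CRM result indicates significant bias, one common approach (described, for example, in ERM Application Note 1) compares the difference between the mean measured value and the certified value with the combined uncertainty of both, expanded with a coverage factor (typically k = 2). The sketch below assumes replicate results on a single CRM, approximates the measurement uncertainty by the standard error of the mean (a simplification), and expects the certificate's standard uncertainty, i.e. its expanded uncertainty divided by the stated coverage factor. The function name and data are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


# Illustrative sketch; the function name and data are hypothetical.
def crm_bias_check(results, certified, u_certified, k=2.0):
    """Compare replicate CRM results with the certified value.

    results:      replicate measurements of the CRM (same units as certified)
    certified:    certified value
    u_certified:  standard uncertainty of the certified value
    Returns (absolute difference, expanded uncertainty of the difference,
             True if the difference is within that uncertainty).
    """
    c_mean = mean(results)
    u_mean = stdev(results) / sqrt(len(results))  # standard error of the mean
    delta = abs(c_mean - certified)
    expanded = k * sqrt(u_mean**2 + u_certified**2)
    return delta, expanded, delta <= expanded


delta, expanded, ok = crm_bias_check([10.2, 10.4, 10.3, 10.1, 10.5], certified=10.0, u_certified=0.1)
print(f"difference {delta:.3f}, expanded uncertainty {expanded:.3f}, no significant bias: {ok}")
# difference 0.300, expanded uncertainty 0.245, no significant bias: False
```

Here a 3% positive bias exceeds what the combined uncertainty can explain, so it would warrant investigation rather than being attributed to chance.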

Method validation protocols should be rigorously followed, encompassing assessments of linearity, precision, accuracy, limits of detection/quantification, and robustness. Validation studies must be performed under conditions that reflect actual sample analysis scenarios, including potential matrix interferences. Revalidation should be conducted following any significant changes to instrumentation, methodology, or when analyzing novel sample types.
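Among the options described in ICH Q2, detection and quantitation limits can be estimated from the calibration curve as LOD = 3.3σ/S and LOQ = 10σ/S, where S is the slope and σ can be taken as the residual standard deviation of the regression. A sketch with hypothetical low-level calibration data; the function name is hypothetical, and limits estimated this way should still be confirmed experimentally.

```python
from math import sqrt


# Illustrative sketch; the function name and data are hypothetical.
def lod_loq(conc, resp):
    """LOD and LOQ from slope S and residual standard deviation sigma of a linear fit."""
    n = len(conc)
    mx, my = sum(conc) / n, sum(resp) / n
    sxx = sum((x - mx) ** 2 for x in conc)
    sxy = sum((x - mx) * (y - my) for x, y in zip(conc, resp))
    slope = sxy / sxx
    intercept = my - slope * mx
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(conc, resp))
    sigma = sqrt(ss_res / (n - 2))  # n - 2 degrees of freedom for a two-parameter fit
    return 3.3 * sigma / slope, 10.0 * sigma / slope


lod, loq = lod_loq([0.0, 1.0, 2.0, 3.0, 4.0], [0.1, 1.9, 4.1, 5.9, 8.1])
print(f"LOD = {lod:.3f}, LOQ = {loq:.3f}")  # LOD = 0.209, LOQ = 0.632
```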

Proficiency testing participation provides external validation of laboratory performance. By analyzing blind samples and comparing results with peer laboratories, systematic biases can be identified that might otherwise remain undetected through internal quality controls. Regular participation in such programs should be scheduled and results thoroughly documented and reviewed.
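Proficiency-testing results are usually reported as z-scores, z = (x - x_pt) / σ_pt, where x_pt is the assigned value and σ_pt the standard deviation for proficiency assessment. Under the conventions of ISO 13528 and ISO/IEC 17043, |z| ≤ 2 is satisfactory, 2 < |z| < 3 questionable, and |z| ≥ 3 unsatisfactory. The sketch below also flags a run of same-sign scores across rounds, a simple heuristic (not a standard rule) for spotting a persistent systematic bias even when every individual score is acceptable. Function names and data are hypothetical.

```python
# Illustrative sketch; function names and data are hypothetical.
def z_score(result, assigned, sigma_pt):
    """Proficiency-testing z-score."""
    return (result - assigned) / sigma_pt


def classify_z(z):
    """Conventional interpretation of a z-score."""
    if abs(z) <= 2.0:
        return "satisfactory"
    if abs(z) < 3.0:
        return "questionable"
    return "unsatisfactory"


def persistent_bias(z_scores, run_length=4):
    """Heuristic: True if the last run_length scores all share the same sign."""
    recent = z_scores[-run_length:]
    return len(recent) == run_length and (
        all(z > 0 for z in recent) or all(z < 0 for z in recent))


# Four rounds with the same assigned value (10.0) and sigma_pt (0.25).
history = [z_score(x, 10.0, 0.25) for x in (10.2, 10.3, 10.1, 10.4)]
print([classify_z(z) for z in history], persistent_bias(history))
# ['satisfactory', 'satisfactory', 'satisfactory', 'satisfactory'] True
```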

Documentation systems must capture all aspects of quality assurance activities, from instrument maintenance logs to analyst training records. Electronic laboratory information management systems (LIMS) facilitate comprehensive data tracking and enable trend analysis across multiple parameters. These systems should incorporate audit trails and electronic signatures to ensure data integrity and regulatory compliance.

Staff training programs represent another essential element of quality assurance. Analysts must receive thorough instruction on GC-MS principles, instrument operation, and error recognition. Competency assessments should be conducted periodically, with refresher training provided as needed to maintain analytical excellence and minimize operator-induced systematic errors.

Daily instrument performance verification represents a critical component of quality assurance. This includes running calibration standards at the beginning of each analytical session, monitoring retention time shifts, mass accuracy, and detector response. Implementing control charts to track these parameters over time enables early detection of instrumental drift before it affects analytical results. Statistical process control methods can be applied to establish warning and action limits for key performance indicators.

Reference materials and certified standards must be incorporated into analytical sequences at defined intervals. These materials, traceable to national or international standards, provide objective verification of method accuracy. For GC-MS specifically, isotopically labeled internal standards compensate for matrix effects and extraction variability, significantly reducing systematic bias in quantitative analyses.

Method validation protocols should be rigorously followed, covering linearity, precision, accuracy, limits of detection and quantification, and robustness. Validation studies must be performed under conditions that reflect actual sample analysis, including potential matrix interferences. Revalidation should follow any significant change to instrumentation or methodology, and should also be carried out before novel sample types are analyzed.


Environmental Factors Affecting GC-MS Measurement Stability

Environmental stability is a critical factor in achieving reliable and reproducible results in Gas Chromatography-Mass Spectrometry (GC-MS) analyses. Laboratory temperature fluctuations can significantly impact retention times and peak areas, with studies showing that even a 1°C change can lead to retention time shifts of up to 0.1 minutes. Temperature control systems maintaining ±0.5°C stability are therefore essential for high-precision analyses, particularly in metabolomics and environmental monitoring applications.

Humidity is another significant environmental challenge, affecting both electronic components and chromatographic performance. Excessive humidity (above 60% relative humidity) can cause electronic malfunctions and baseline instability, and may also alter the chemical properties of certain stationary phases. Laboratories therefore commonly control relative humidity within 40-55% to mitigate these effects.

Electromagnetic interference (EMI) from nearby equipment can introduce noise in the detector signal, particularly affecting low-abundance ion detection. Research has demonstrated that proximity to high-power instruments like NMR spectrometers or centrifuges can increase baseline noise by 15-20%. Proper laboratory layout with dedicated power circuits and electromagnetic shielding has become standard practice in high-sensitivity applications.
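The effect of EMI on the noise floor can be measured directly from blank runs recorded with nearby equipment off and then on. The sketch below uses the pharmacopoeial definition S/N = 2H/h, where H is the peak height above the baseline and h is the peak-to-peak noise in a peak-free window, as in USP <621> and Ph. Eur. 2.2.46. The choice of noise window, the function names, and the data are assumptions.

```python
def peak_to_peak_noise(baseline: list[float]) -> float:
    """h: range of the detector signal in a peak-free baseline window."""
    return max(baseline) - min(baseline)


def signal_to_noise(peak_height: float, baseline: list[float]) -> float:
    """S/N = 2H / h."""
    return 2.0 * peak_height / peak_to_peak_noise(baseline)


def noise_increase_pct(quiet: list[float], noisy: list[float]) -> float:
    """Percent rise in peak-to-peak noise between two baseline windows."""
    hq, hn = peak_to_peak_noise(quiet), peak_to_peak_noise(noisy)
    return 100.0 * (hn - hq) / hq


if __name__ == "__main__":
    # Hypothetical baseline samples (counts) from blank runs.
    quiet = [100, 104, 98, 102, 101, 99]  # neighbouring equipment switched off
    noisy = [100, 106, 97, 103, 99, 104]  # neighbouring equipment running
    print(f"S/N quiet: {signal_to_noise(300, quiet):.1f}")
    print(f"S/N noisy: {signal_to_noise(300, noisy):.1f}")
    print(f"noise increase: {noise_increase_pct(quiet, noisy):.0f}%")
```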

Vibration disturbances represent a subtle but impactful environmental factor affecting GC-MS performance. Mechanical vibrations from HVAC systems, nearby construction, or even foot traffic can cause peak broadening and reduced chromatographic resolution. Specialized anti-vibration tables and instrument platforms can reduce these effects by up to 90%, as demonstrated in precision forensic toxicology applications.
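Peak broadening reduces chromatographic resolution because resolution is the separation of two peaks divided by their widths. For Gaussian peaks measured at half height, the standard form is Rs = 1.18 (t2 − t1) / (w½,1 + w½,2), and Rs ≥ 1.5 is the usual criterion for baseline separation. The sketch below applies this formula to hypothetical retention times and widths to show how a 20% broadening erodes Rs.

```python
def resolution_half_height(t1: float, t2: float, w1_half: float, w2_half: float) -> float:
    """Rs = 1.18 (t2 - t1) / (w1_half + w2_half); assumes Gaussian peaks and t2 > t1."""
    return 1.18 * (t2 - t1) / (w1_half + w2_half)


if __name__ == "__main__":
    # Hypothetical adjacent peaks: retention times and half-height widths in minutes.
    t1, t2 = 10.00, 10.10
    sharp = resolution_half_height(t1, t2, 0.040, 0.040)
    broadened = resolution_half_height(t1, t2, 0.048, 0.048)  # both peaks 20% wider
    print(f"Rs before: {sharp:.2f}, after 20% broadening: {broadened:.2f}")
```

Because Rs scales inversely with peak width, a critical pair that sits just below baseline separation is the first to be affected by any source of broadening, including vibration.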

Airborne contaminants present a persistent challenge to measurement stability. Volatile organic compounds from cleaning agents, construction materials, or even personal care products can introduce ghost peaks and elevated baselines. HEPA filtration systems and positive pressure environments have been shown to reduce background contamination by 70-85% in ultra-trace analysis settings.

Barometric pressure variations, though often overlooked, can affect split/splitless injection systems and mass spectrometer vacuum stability. Studies indicate that rapid weather changes can alter injection reproducibility by 3-5%. Advanced systems now incorporate pressure-compensating algorithms and real-time monitoring to adjust critical parameters during atmospheric pressure fluctuations.
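To check whether weather affects injection reproducibility on a given system, one option is to log the barometric-pressure change over each sequence of replicate injections. The %RSD of peak areas can then be compared between stable and rapidly changing conditions. The sketch below assumes such a log exists. The 3 hPa split between "stable" and "changing", the function names, and the data are hypothetical.

```python
from statistics import mean, stdev


def rsd_percent(areas: list[float]) -> float:
    """Relative standard deviation (%) of replicate peak areas."""
    return 100.0 * stdev(areas) / mean(areas)


def compare_by_pressure(sequences, threshold_hpa: float = 3.0):
    """Mean %RSD for sequences with small vs large barometric-pressure change.

    sequences: iterable of (pressure change over the sequence in hPa, replicate areas).
    Returns (stable_mean, changing_mean); NaN for a group with no sequences.
    """
    stable, changing = [], []
    for delta_hpa, areas in sequences:
        if abs(delta_hpa) >= threshold_hpa:
            changing.append(rsd_percent(areas))
        else:
            stable.append(rsd_percent(areas))
    nan = float("nan")
    return (mean(stable) if stable else nan, mean(changing) if changing else nan)


if __name__ == "__main__":
    # Hypothetical sequences: (pressure change in hPa, replicate peak areas).
    log = [
        (0.4, [98.0, 100.0, 102.0]),
        (1.1, [99.0, 100.0, 101.0]),
        (5.2, [95.0, 100.0, 105.0]),
        (6.0, [96.0, 100.0, 104.0]),
    ]
    stable, changing = compare_by_pressure(log)
    print(f"mean %RSD stable: {stable:.2f}, changing: {changing:.2f}")
```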

